1. Introduction

The data a process uses are stored in three types of memory space: stack, heap, and data. The stack is a memory area allocated and deallocated by the system, and it stores the local variables and parameters used by a function or within a block. Variables allocated on the stack are deallocated when the function returns or when execution leaves the block. The heap is the memory area in which dynamic memory is allocated and deallocated by the user (or developer) via function calls, such as malloc()/free() in C/C++. The data area, like the stack area, is allocated and deallocated by the system. It stores global and static variables, which are allocated when a program starts and deallocated when the program terminates.
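The three areas can be illustrated with a minimal C sketch. The names `g_counter`, `s_calls`, and `sum_three_areas` are hypothetical and chosen only for this illustration.

```c
#include <assert.h>
#include <stdlib.h>

int g_counter = 10;                 /* data area: global, lives for the whole run */

int sum_three_areas(void)
{
    static int s_calls = 0;         /* data area: static, keeps its value across calls */
    int local = 1;                  /* stack: released when the function returns */
    int *dyn = malloc(sizeof *dyn); /* heap: released only by an explicit free() */

    if (dyn == NULL) {
        return -1;                  /* allocation failed; nothing to free */
    }
    *dyn = 100;
    s_calls++;

    int total = g_counter + s_calls + local + *dyn;
    free(dyn);                      /* the developer is responsible for this call */
    return total;
}
```

Only `s_calls` and `g_counter` survive between calls, so the returned value grows by one on each call, whereas `local` and `*dyn` are created anew every time.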

Memory corruption occurs when static or dynamic memory undergoes an unintentional change while the process is running [1]. Memory corruption can occur when the process attempts to use or release invalid memory, such as unallocated or uninitialized memory, or tries to access memory beyond the allocated size. In addition to dynamic memory, memory corruption can occur because of static memory usage, as in the case of global and stack buffer overflows. Memory corruption can induce a program malfunction or system crash and therefore must be resolved. However, the resolution process is difficult for multiple reasons, detailed hereafter.

First, the location of the memory corruption source is often different from that of the actual failure. For example, if memory allocation fails, but the memory pointer is used without checking whether it is NULL, a crash occurs where the memory use function was called. In this case, the root cause of the crash is an allocation failure, but most memory defect-detection methods provide information only about the location where the crash occurred, so it is difficult to identify the cause of the defect.

Second, the causes and symptoms of memory corruption are nondeterministic. For example, memory allocation might fail because one of its parameters was incorrectly entered. However, memory allocation can also fail if the system has insufficient memory available. Thus, the cause of the memory corruption cannot be determined from the failure alone. Buffer overflow is another example in which nondeterministic symptoms arise after memory corruption. If the overflowed memory area of a variable or pointer has previously been allocated to another variable, a crash does not occur. However, the program could still malfunction, either because the value that should have been stored in the original memory area is truncated, or because the value of the variable that was overwritten changes. Conversely, if an overflow occurs in an unallocated memory area, a crash occurs. The problem is that one cannot determine in advance whether the overflowed area has been allocated when a buffer overflow occurs. Therefore, different results can be generated when the same code is executed. Because the causes and symptoms of memory corruption vary according to the system status, faults are not consistently reproduced, making these defects difficult to resolve.

Memory corruption can occur when either dynamic or static memory is used. A typical example is stack buffer overflow, which occurs when memory is read or written beyond the array size. Moreover, even if the memory is static, memory corruption can still occur in the same manner as with dynamic memory if the address of a variable is taken with the address-of operator “&” and used as a memory pointer, or if an array allocated as a global variable is used as a memory pointer. Therefore, an effective memory defect-detection method must cover both the static and dynamic memory areas in order to detect and resolve memory corruption.

Memory corruption-detection methods have been consistently studied for over 20 years. However, previous studies have not monitored the entire memory area of a process; thus, undetectable forms of memory corruption persist. Furthermore, even if defect-detection is performed across the entire memory area, memory corruption due to external factors, including external libraries employed by the process, cannot be detected [2]. Therefore, despite many years of research, memory corruption remains a challenging defect that requires resolution [3]. This is because the location of the memory corruption source is often different from that of the actual failure (result), creating difficulties in correlating the cause and effect of memory corruption. Moreover, the symptoms, such as memory corruption-induced crashes, are nondeterministic [4]; thus, it becomes difficult to reproduce them. This is another reason for the difficulty in overcoming memory corruption defects.

In our earlier study [5], static memory information (address and size) obtained via a pre-analysis of executable binary files and dynamic memory usage information obtained using the memory function hooking technique were stored as information tags. In addition, the earlier method was capable of detecting memory corruption caused by static/dynamic memory usage before a system crash, using the information tags for real-time memory defect detection.

Our method exhibits high defect detection accuracy because it surveys both the dynamic and static memory usages to perform memory corruption detection, while having minimal overhead. Additionally, even if a system crash occurs, memory corruption can still be detected because the data required for defect-detection can be collected. Furthermore, information is provided for memory corruption debugging when the defect source and actual failure locations differ. We implemented the proposed method as a Linux-based automation tool, called a memory corruption detection tool (MCDT). Its remarkable performance has been demonstrated through an experiment, in which our method was compared with other well-known memory defect-detection tools.

This paper is organized as follows: Section 2 introduces existing studies related to memory corruption detection methods; Section 3 presents our proposed memory corruption detection method and the associated automation tool; Section 4 reports the validation experiment and results; finally, Section 5 presents the conclusion and future research.

2. Related Work

Many studies have been conducted to detect memory corruption. The existing studies can be classified into those that detect memory corruption through static analysis and those that detect it dynamically, depending on whether the target program is executed.

Memory corruption detection that uses static analysis is performed by studying the source code or executable binary files. Previously, Xie et al. [4] proposed a memory corruption detection method in which the size of the variable or memory was determined through symbolic analysis, whereas Dor et al. [6] proposed a memory error detection method in which the pointer operation or string manipulation function was analyzed. However, the above-mentioned methods could not detect memory corruption in dynamic memory. Furthermore, because these methods were not executed in an actual runtime environment, the defect detection accuracy was low.

The instrumentation technique has been used to detect dynamic memory corruption in many studies [7]. This technique adds tracking code to the memory-related functions during compilation or at runtime, such that the information relevant to defect-detection can be collected when a memory-related function is called during execution. The memory corruption detection accuracy of these studies was high because they were based on the actual execution of programs.

Tools including AddressSanitizer [2], Mudflap [8], and Frama-C/EACSL [9] add tracking code at compilation time for memory defect-detection. The AddressSanitizer tool detects memory corruption by checking the validity of all the memory areas via shadow memory. The process memory usage increases with the shadow memory size, as the defect-detection covers all memory areas. However, the execution overhead is not significant, because the algorithm that maps the actual memory to the shadow memory is optimized. Mudflap is a method for preventing defects, such as referencing a NULL pointer, buffer overflow, and memory leaks. It works by adding protection code to the statements related to pointer usage in C/C++. The Frama-C/EACSL tool determines whether the source code satisfies the coding rules, and it can detect memory errors in the global variable, stack, and heap areas. However, Mudflap and the Frama-C/EACSL tool detect defects using the compile-time instrumentation technique; therefore, one must recompile the program to add the code for defect-detection. Consequently, the executable binary file is changed and becomes different from the one that runs on the actual target. Furthermore, the binary footprint increases.

Purify [10], Memcheck [11], and the method of Vorobyov et al. [12] implement executable tracking code for performing defect-detection during runtime. Purify can detect memory corruption in the dynamic memory, because the code that monitors memory validity is instrumented when the object code is created. However, the overhead is high because Purify monitors all memory accesses. Memcheck is a memory corruption detection tool that runs in Valgrind, which is a virtual execution environment, rather than the actual execution environment. The process memory is managed via two stages of shadow memory, which are used for memory corruption detection. Memcheck can detect memory corruption when accessing or deallocating uninitialized memory; however, similar to Purify, its execution overhead is high. Vorobyov et al. proposed a method that used shadow memory for the detection of transient memory errors. This method works by tracking the memory object and the pointer value while the program runs. However, this method only detects defects in dynamically allocated memory.

The shadow memory technique used by Memcheck, AddressSanitizer, Frama-C/EACSL, and Vorobyov et al. stores information from the memory areas used by a certain process in a separate area called shadow memory [12]. Whenever an operation, such as memory allocation, deallocation, or access, is performed, the related memory operation results are stored in the shadow memory and are used for memory defect-detection. Typically, the shadow memory stores information regarding the access validity and memory initialization, or the absence thereof. This information can be used to detect memory corruption, such as the accessing of invalid or uninitialized memory by the process. Such methods are advantageous because they can accurately evaluate the memory space’s status. However, the usage of shadow memory demands considerable additional memory and incurs significant performance overhead. Notably, the overhead corresponds to the location of the memory used by the process.
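The general idea of shadow memory can be sketched in C as follows. This is a simplified illustration, not the layout used by any of the cited tools: it assumes a small fixed arena, one shadow byte per application byte, and hypothetical names (`shadow_on_alloc`, `checked_read`, and so on).

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define ARENA_SIZE 64

enum { SHADOW_VALID = 1, SHADOW_INIT = 2 };   /* per-byte state bits */

unsigned char arena[ARENA_SIZE];    /* stands in for the process memory */
unsigned char shadow[ARENA_SIZE];   /* one shadow byte per arena byte */

/* Mark a range as allocated (accessible but not yet initialized). */
void shadow_on_alloc(size_t off, size_t len)
{
    assert(off <= ARENA_SIZE && len <= ARENA_SIZE - off);
    memset(shadow + off, SHADOW_VALID, len);
}

/* Mark a range as deallocated (no longer accessible). */
void shadow_on_free(size_t off, size_t len)
{
    assert(off <= ARENA_SIZE && len <= ARENA_SIZE - off);
    memset(shadow + off, 0, len);
}

/* Returns 0 on success, -1 if the byte is not accessible. */
int checked_write(size_t off, unsigned char value)
{
    if (off >= ARENA_SIZE || !(shadow[off] & SHADOW_VALID)) {
        return -1;
    }
    arena[off] = value;
    shadow[off] |= SHADOW_INIT;     /* the byte now holds a defined value */
    return 0;
}

/* Returns 0 on success, -1 if not accessible, -2 if uninitialized. */
int checked_read(size_t off, unsigned char *out)
{
    if (off >= ARENA_SIZE || !(shadow[off] & SHADOW_VALID)) {
        return -1;
    }
    if (!(shadow[off] & SHADOW_INIT)) {
        return -2;
    }
    *out = arena[off];
    return 0;
}
```

Because every application byte has a shadow byte in this sketch, the additional memory equals the size of the monitored area, which illustrates the memory cost discussed above.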

Our earlier study [5] involved a hybrid method that combines static analysis with dynamic defect-detection. The global variables and their sizes are obtained by analyzing the binary file of the memory defect-detection target. Then, the defects are detected via the dynamic monitoring of memory-related functions and the use of global variables. Since the shadow memory stores only statically analyzed global variables and dynamically allocated and used memory, its memory overhead can be reduced compared to employing shadow memory for the entirety of the process’ memory.

3. Memory Corruption Detection

We propose a method of detecting memory corruption due to the usage of static/dynamic memory. The proposed method saves information regarding the usage of global and static variables stored in the data area and memory that is dynamically allocated to the heap area. The method then uses the saved information to perform real-time defect-detection.

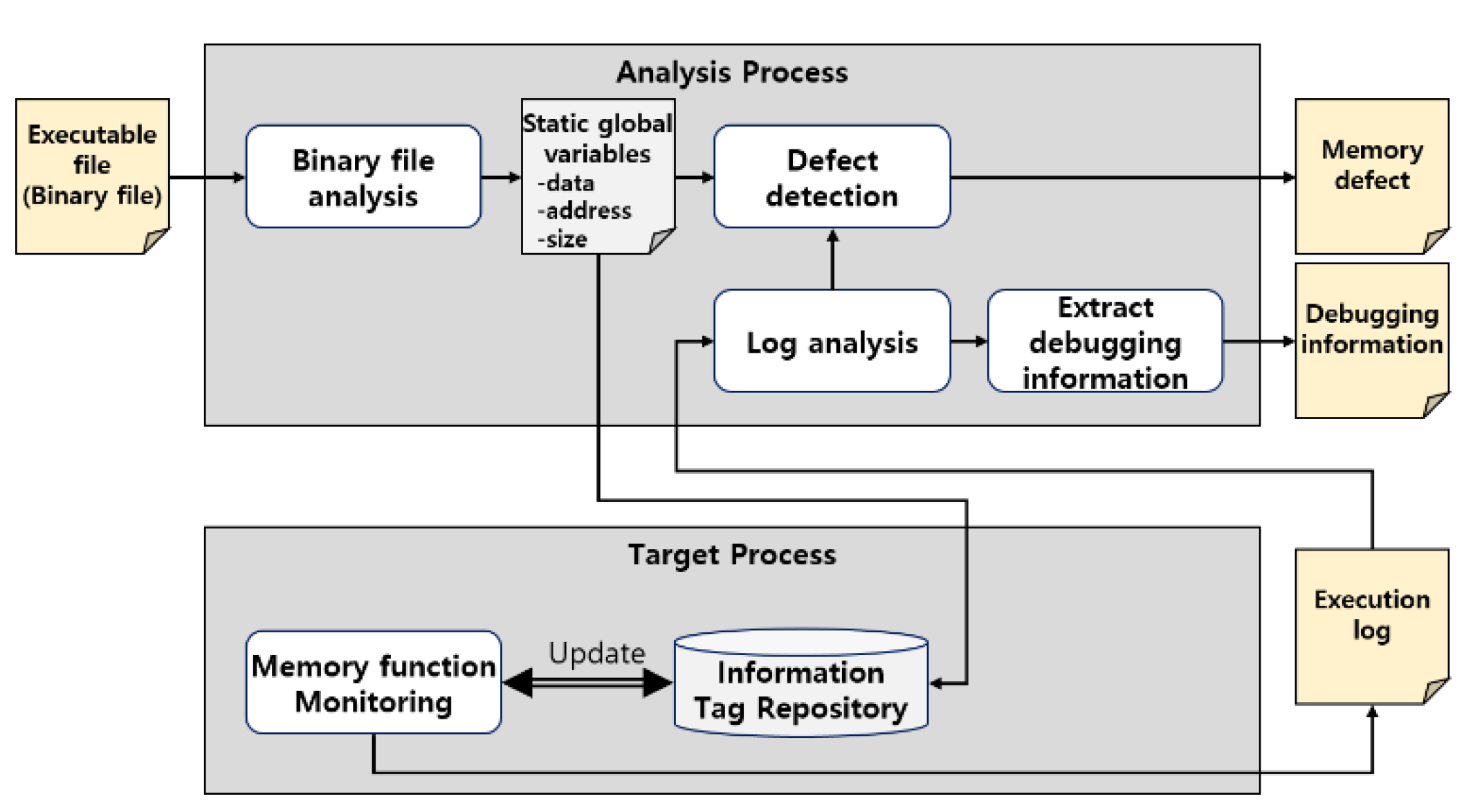

As shown in Figure 1, before detecting memory corruption, our method analyzes the executable file (binary file) in the analysis process and stores the address and size of the static and global variables in the information tag repository. Furthermore, while the target process of memory corruption detection is running, the execution of memory-related functions, such as malloc/memset/free, is monitored, and the allocated/used/deallocated memory is dynamically updated in the information tag repository. Memory corruption is then detected through the information tags and the execution log of the memory-related functions.

In the case of local variables stored in the stack area, memory allocation and deallocation occur frequently and are managed by the system; thus, the memory usage information is not saved separately. However, the stack register can be used to check whether the memory being accessed or deallocated is in the stack area. Hence, the proposed method can be used to detect defects, such as access outside the stack area. As detailed in Table 1, we classified the memory defects into various categories based on the common weakness enumeration specification [13] and the existing memory defect classification [14]. The defects listed in Table 1 can cause memory corruption either directly or indirectly.

Although the result may vary depending on the C/C++ implementation, the allocation of zero-size memory (M02) may return NULL, and the free() function does nothing when freeing a NULL pointer (M04). Thus, these defects do not directly result in memory corruption. However, when NULL is returned because of an M02 defect, memory corruption can occur if the memory is accessed without checking whether the value is NULL. The location of the corruption is the function that accesses the memory, whereas its cause is the memory-allocation function being called with a zero-size parameter. Hence, M02 is categorized as a defect.

Moreover, M04 is neither a defect nor the cause of a defect if the compiler adheres to the C language standard. However, if the compiler fails to adhere to the standard, memory corruption can occur depending on how the free() function has been implemented; hence, this case is categorized as a defect. Furthermore, in the case of dynamically allocated memory, the deallocation operation could result in memory corruption even if the compiler adheres to the standard, as one cannot determine in advance whether the pointer has a NULL value. For example, in the case of the Linux GNU compiler collection (GCC), the free(NULL) statement is not included in the executable binary file. Thus, the statement does nothing. However, if it cannot be determined at compile time whether the value being deallocated is NULL, as depicted in Figure 2, the deallocation operation is included in the executable binary file and can result in memory corruption.

In this study, information tags, which represent a type of shadow memory, are used to collect information to detect memory defects. While a process is running, the information tags of the memory accessed upon calling memory-related functions are used in real-time memory defect-detection. Therefore, memory corruption detection becomes possible in cases of both static and dynamic memory.

3.1. Information Tagging

To detect memory defects in real time, information that can be used to determine the validity of the memory to be used must be available. Therefore, the shadow memory technique is used. The “information tag” data structure, which is depicted in Figure 3, is stored in the shadow memory. Information, including the memory address, allocation size, static/dynamic allocation flag, allocation function type, and deallocation flag, is saved in the information tags for real-time defect-detection. Furthermore, information regarding the function called to allocate or deallocate memory is stored in the information tags to analyze the cases in which the location of the defect's cause is different from that of the actual failure.

Once a function that dynamically allocates memory (memory allocation function) is called, the location at which the tag is to be stored in the information tag repository is computed based on the address of the allocated memory, as shown in Figure 4. Subsequently, the allocated memory address, the allocated size, the allocation function type, and the address that called the memory allocation function are stored at that information tag location. When a memory access function is called, the information tag location is computed using the address of the accessed memory, and the value of the information tag at that location is checked. If no information tag is stored at the applicable location, the address and size of the adjacent information tag are used to determine whether the access falls inside a dynamically allocated block or targets an incorrect address. When the memory deallocation function is called, the deallocation flag of the information tag of the memory address is set. Thereafter, the deallocation flag can be used to check whether the memory being accessed has been deallocated.
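The tag fields and the adjacent-tag check described above can be sketched as follows. The field names, the fixed-size repository, and the linear search are assumptions made for illustration; in particular, the linear search stands in for the address-based location computation, which this sketch does not attempt to reproduce.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef enum { ALLOC_STATIC, ALLOC_MALLOC, ALLOC_NEW } alloc_kind;

typedef struct {
    uintptr_t  addr;    /* start address of the memory block */
    size_t     size;    /* allocation size in bytes */
    alloc_kind kind;    /* static variable or allocation function type */
    int        freed;   /* deallocation flag */
    uintptr_t  caller;  /* address that called the allocation function */
} info_tag;

typedef enum {
    ADDR_BLOCK_START,   /* a tag exists exactly at this address */
    ADDR_INSIDE_BLOCK,  /* inside a tagged block (addr + n) */
    ADDR_FREED,         /* inside a block whose deallocation flag is set */
    ADDR_UNKNOWN        /* not covered by any tag */
} addr_class;

#define MAX_TAGS 128
info_tag tags[MAX_TAGS];
size_t tag_count;

int tag_add(uintptr_t addr, size_t size, alloc_kind kind, uintptr_t caller)
{
    if (tag_count == MAX_TAGS) {
        return -1;
    }
    tags[tag_count++] = (info_tag){ addr, size, kind, 0, caller };
    return 0;
}

/* Nearest tag whose start address is at or below addr, or NULL. */
info_tag *tag_nearest(uintptr_t addr)
{
    info_tag *best = NULL;
    for (size_t i = 0; i < tag_count; i++) {
        if (tags[i].addr <= addr &&
            (best == NULL || tags[i].addr > best->addr)) {
            best = &tags[i];
        }
    }
    return best;
}

addr_class tag_classify(uintptr_t addr)
{
    info_tag *t = tag_nearest(addr);
    if (t == NULL || addr - t->addr >= t->size) {
        return ADDR_UNKNOWN;            /* outside every tagged block */
    }
    if (t->freed) {
        return ADDR_FREED;
    }
    return addr == t->addr ? ADDR_BLOCK_START : ADDR_INSIDE_BLOCK;
}

/* Set the deallocation flag of the tag that starts at addr, if any. */
void tag_mark_freed(uintptr_t addr)
{
    info_tag *t = tag_nearest(addr);
    if (t != NULL && t->addr == addr) {
        t->freed = 1;
    }
}
```

Keeping a freed tag in the repository, rather than deleting it, is what allows a later access to be reported as a use of deallocated memory instead of an unknown address.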

To reduce the memory overhead due to the shadow memory, the entire virtual memory space of the process is not saved. Instead, only the static and global variables that were analyzed using the executable binary file and dynamically allocated information on the heap memory area are managed as information tags.

To further reduce the performance overhead, the following operation formula is used to map the information tags that correspond to the memory for performing detection:

The performance overhead is minimized by exploring the locations of the information tags of the current memory using only operations that use the number of static and global variables and the initial heap-memory address.

3.2. Real-Time Memory Defect-Detection

To monitor the memory usage of a process, the hooking of memory-related functions is used. As illustrated in Figure 5, hooking is a technique that intercepts the execution path during runtime, and it is useful for monitoring memory-related operations [15].

Once a memory-related function has been hooked, the hooked function is called each time a memory-related function is called. For example, after hooking Function 2, Hook_Func2 is run each time Function 2 is called. The hooked function uses information tags to detect defects in real time.

When allocating memory dynamically, the validity of the parameter of the memory allocation function is first verified, as depicted in Figure 6. If the parameter is valid, the original memory allocation function is called. Subsequently, the validity of the result is verified. The execution result of the memory allocation function is the address of the allocated memory. If this memory address value is valid, then the address and size of the allocated memory, the dynamic allocation flag, the allocation function type, and the address of the function that called the memory allocation function are saved in the information tag.
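The allocation-time sequence can be sketched in C as a wrapper around malloc(). This is a simplified illustration under several assumptions: the wrapper is called directly rather than installed through a hooking mechanism, the caller address is passed in as a parameter, a single `last_tag` variable stands in for the information tag repository, and all names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

typedef enum { ALLOC_OK, ALLOC_ZERO_SIZE, ALLOC_FAILED } alloc_status;

typedef struct {
    void       *addr;      /* address of the allocated memory */
    size_t      size;      /* allocated size */
    int         dynamic;   /* dynamic allocation flag */
    const char *alloc_fn;  /* allocation function type */
    void       *caller;    /* address that called the allocation function */
} alloc_tag;

alloc_tag last_tag;        /* stands in for the information tag repository */

void *hook_malloc(size_t size, void *caller, alloc_status *status)
{
    /* Step 1: verify the parameter before calling the original function. */
    if (size == 0) {
        *status = ALLOC_ZERO_SIZE;      /* zero-size allocation (M02) */
        return NULL;
    }

    /* Step 2: call the original memory allocation function. */
    void *p = malloc(size);

    /* Step 3: verify the result. */
    if (p == NULL) {
        *status = ALLOC_FAILED;
        return NULL;
    }

    /* Step 4: save the information tag for later checks. */
    last_tag = (alloc_tag){ p, size, 1, "malloc", caller };
    *status = ALLOC_OK;
    return p;
}
```

Recording the status at the allocation site means that a later crash in a function that uses the pointer can be traced back to the allocation call that caused it.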

When deallocating memory, the address of the target memory is first compared with the stack-top address to determine whether the memory being deallocated lies in the stack area. To reduce the overhead, neither local variables nor parameters stored in the stack area are saved as information tags. Hence, the memory address is compared with the stack-top address, instead of performing a validity check based on the tags. If the target memory is not a part of the stack area, then the parameter value of the memory deallocation function is verified first, as depicted in Figure 7. If the parameter value is valid, then the information tags are used to determine whether the parameter is a static variable. If the parameter is not a static variable and is the address of dynamically allocated memory, it is then determined whether the allocation and deallocation function pair matches either malloc()/free() or new/delete. If a match is found, the original memory deallocation function is called, and the released memory is updated in the information tag.

When accessing memory, similarly to the case of deallocating memory, the target memory address must be checked to verify whether it is part of the stack area. Subsequently, the process in Figure 8 is followed to determine whether a defect exists, and if it does, to identify the defect type. After verifying the validity of the parameter of the memory access function, the information tag of the target memory address is explored to determine whether this memory is accessible. If the middle part of a memory block is accessed, such as addr + n, then the address and size of the nearest information tag are used to verify the validity of the memory address. Even if the address can be accessed, an access that extends beyond the allocated size is considered an access defect that has deviated from the approved range.

To explain this concept using a specific example, Figure 9 presents a case in which a pointer, bufP, that was dynamically allocated via the malloc() function should have been released before the function exited. However, a global variable, buf, was incorrectly passed to the free() function. Assuming that the information about buf was previously saved in the information tag via binary analysis (the address is 0x00010070, and the size is 0x64), the real-time defect detection process verifies whether a defect exists by following the process depicted in Figure 6 when malloc() is first called. On line 4 in Figure 9, no problem is encountered with the parameter value of the memory allocation. Therefore, the original malloc() function is called. If the result is not a NULL value, the data are saved in the information tags, as listed in Table 2.

When the free() function is called on line 6, the target memory address for deallocation is passed as 0x10070, which is the address of buf. By exploring the information tags, the existence of a tag for this address is first verified. The allocation flag is then checked because the address of buf was previously saved in the information tags. The value of the allocation flag for 0x10070 is 0x1, representing static allocation. Therefore, the defect in this case is determined to be the freeing of unallocated memory (M06).
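The deallocation checks and the buf example can be replayed with a small C sketch. It is an illustration under stated assumptions: the stack is modeled as an explicit address range (`stack_top` to `stack_base`, growing downward), the tags are kept in a plain array, and the type and function names are hypothetical. Only the defect codes M04, M05, and M06 are taken from the text; the other verdicts are left uncoded.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef enum { FN_NONE, FN_MALLOC, FN_NEW } alloc_fn;   /* FN_NONE: static */
typedef enum { FN_FREE, FN_DELETE } dealloc_fn;

typedef enum {
    FREE_OK,
    FREE_STACK,         /* address lies in the stack area */
    FREE_NULL,          /* freeing a NULL pointer (M04) */
    FREE_UNALLOCATED,   /* static variable or untagged address (M06) */
    FREE_DOUBLE,        /* previously deallocated memory (M05) */
    FREE_MISMATCH       /* e.g. malloc() paired with delete */
} free_verdict;

typedef struct {
    uintptr_t addr;
    size_t    size;
    int       is_static;  /* allocation flag: 1 = static allocation */
    alloc_fn  fn;
    int       freed;      /* deallocation flag */
} mem_tag;

free_verdict check_free(mem_tag *tags, size_t n, uintptr_t addr, dealloc_fn how,
                        uintptr_t stack_top, uintptr_t stack_base)
{
    /* 1. Stack check against the stack-top address; no tag lookup needed. */
    if (addr >= stack_top && addr < stack_base) {
        return FREE_STACK;
    }
    /* 2. Parameter check. */
    if (addr == 0) {
        return FREE_NULL;
    }
    /* 3. Tag lookup: static flag, deallocation flag, function pair. */
    for (size_t i = 0; i < n; i++) {
        if (tags[i].addr != addr) {
            continue;
        }
        if (tags[i].is_static) {
            return FREE_UNALLOCATED;
        }
        if (tags[i].freed) {
            return FREE_DOUBLE;
        }
        int pair_ok = (tags[i].fn == FN_MALLOC && how == FN_FREE) ||
                      (tags[i].fn == FN_NEW && how == FN_DELETE);
        if (!pair_ok) {
            return FREE_MISMATCH;
        }
        tags[i].freed = 1;   /* the original deallocation would be called here */
        return FREE_OK;
    }
    return FREE_UNALLOCATED;
}
```

With a static tag for buf at 0x00010070 (size 0x64), calling `check_free` with that address yields `FREE_UNALLOCATED`, matching the M06 verdict in the example, and the original free() is never reached.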

3.3. Signal Hooking

Memory defects, including accessing unallocated memory (M10) or freeing previously deallocated memory (M05), can result in a system crash. The information required to investigate defects, such as defect types, defect locations, function parameters, return values, and call stacks, may be lost if no action is performed at the time of the crash. Furthermore, when the location of the crash is different from that of the cause of the defect, the function-call relationship must be analyzed via the location of the crash and the previously collected defect information concerning the address of the accessed memory. Hence, the system status and call stack at the time of the crash must be collected. To detect defects without losing information when a system crashes, the handler of the signal raised during the crash is hooked.

When a system crashes, most operating systems raise a signal linked to the cause, and the corresponding signal handler then runs at the point at which the signal originated. Therefore, when the signal handler is hooked, the defect detection information is collected from the hooked signal handler; thus, the information is not lost. In the Linux system, the signals related to memory defects include SIGABRT, SIGBUS, and SIGSEGV, as listed in Table 3.
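A minimal sketch of installing a handler for these three signals with the POSIX sigaction() call is given below. The names `install_crash_hooks`, `crash_handler`, and `last_signal` are hypothetical, and the handler only records the signal number; what a full tool would collect at this point (call stack, defect information) is outside the scope of the sketch.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <signal.h>
#include <stddef.h>
#include <string.h>

/* Written from the handler, so it must be volatile sig_atomic_t. */
volatile sig_atomic_t last_signal = 0;

static void crash_handler(int signo)
{
    /* Only async-signal-safe work is allowed here; this sketch just
     * records which signal arrived. */
    last_signal = signo;
}

/* Install crash_handler for the memory-related signals.
 * Returns 0 on success, -1 if any installation fails. */
int install_crash_hooks(void)
{
    static const int signals[] = { SIGABRT, SIGBUS, SIGSEGV };
    struct sigaction sa;

    memset(&sa, 0, sizeof sa);
    sa.sa_handler = crash_handler;
    sigemptyset(&sa.sa_mask);

    for (size_t i = 0; i < sizeof signals / sizeof signals[0]; i++) {
        if (sigaction(signals[i], &sa, NULL) != 0) {
            return -1;
        }
    }
    return 0;
}
```

For a signal caused by a real invalid access, returning from the handler re-executes the faulting instruction, so a practical handler would typically restore the default action and re-raise the signal after saving its data.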

3.4. Automated Tool

We implemented the proposed method as an MCDT that runs on ARM-based Linux systems. The current implementation targets Linux kernel v4.14.37.

When a user inputs an executable file, the executable binary file is automatically analyzed, and the executable file is run along with the automation tool (Figure 10a). While the tool is running, it detects memory defects in real time and collects information. When the tool ends its operation (either normally or abnormally), it analyzes and reports the detected defects (Figure 10b).

In Figure 10b, the defect detected by the automation tool is an access to deallocated memory. The tool not only detects this defect but also shows information on the process, the thread, and the function that accessed the deallocated memory, thereby providing information useful for resolving the defect.

As shown in Figure 11, the automation tool comprises four modules that are loaded into the Linux system. The role of each module is listed below.

5. Conclusions

Memory corruption defects remain difficult to detect and debug because, if only part of the memory used by a process is monitored when memory corruption occurs, it becomes difficult to detect the defect or analyze its cause. Therefore, to detect memory corruption, the entire memory space used by the process must be monitored. However, this increases the performance overhead; thus, it is challenging to apply this approach in a real environment. Additionally, the location of the memory corruption and that of its cause often differ, which is another reason why memory corruption issues are challenging to resolve.

We proposed an automated method of detecting memory corruption by analyzing static variables and dynamic memory usage. The proposed method was implemented as an automation tool called the MCDT, and it was experimentally compared with other tools. The results demonstrate the accuracy of the MCDT in detecting defects and analyzing their causes, as well as its performance. To detect defects, the proposed method uses the “information tag” data structure to manage the global and static variables in the data space and the dynamic memory in the heap space, while excluding the stack space from the memory space used by the process. The performance overhead was reduced by not monitoring the entire memory space of the process, whereas both the dynamically and statically allocated memory were monitored to increase the accuracy of defect-detection and cause-analysis. Additionally, the signal-hooking technique was applied, so that defect information could be collected without data loss, even if a crash occurred.

We plan to conduct further studies to expand the static memory used for defect-detection to the entire static memory area, and to study static variables.