DeepD2V: A Novel Deep Learning-Based Framework for Predicting Transcription Factor Binding Sites from Combined DNA Sequence

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Source

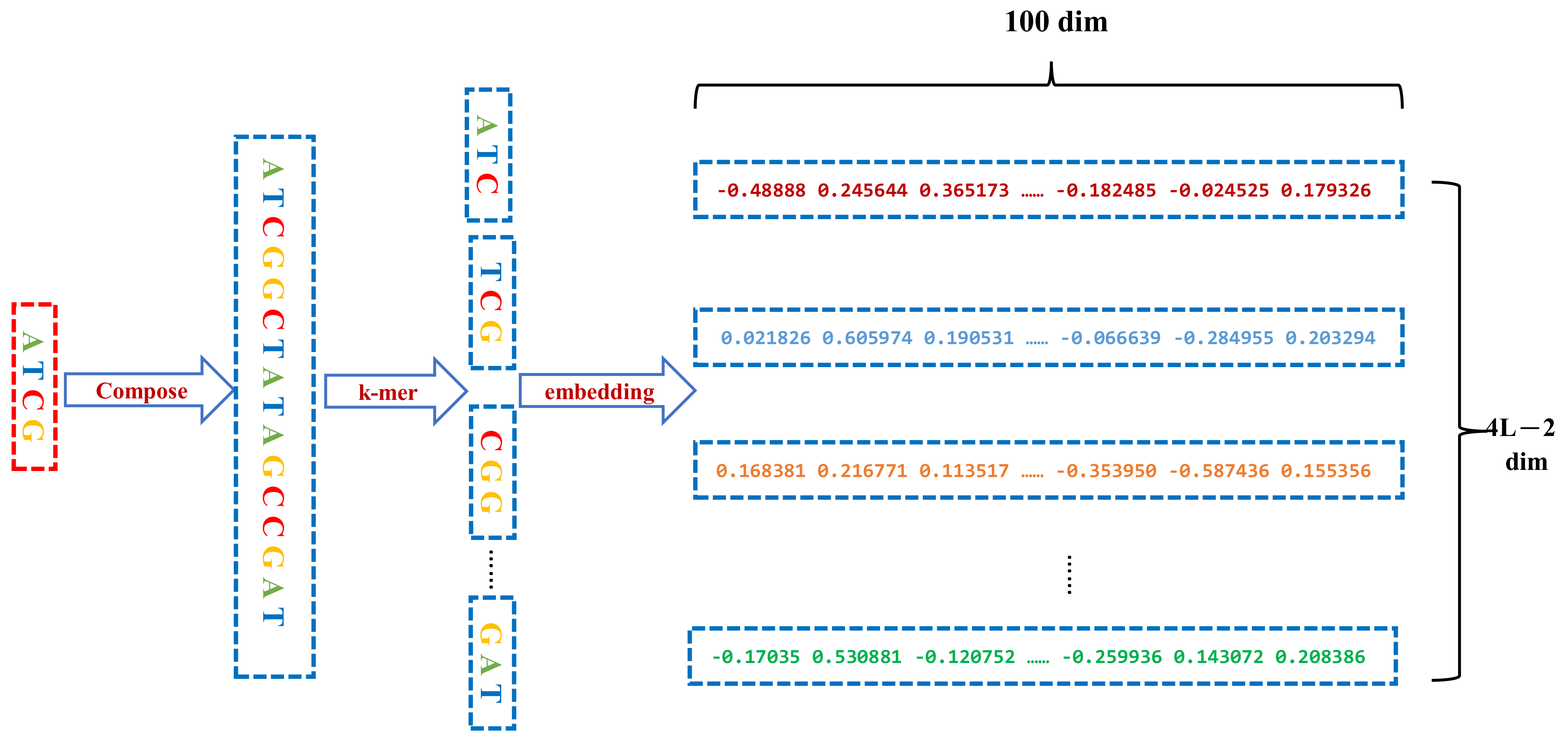

2.2. Sequence Conversion and Representation

2.3. Model Architecture

2.4. Implementation and Hyperparameter Optimization

3. Results

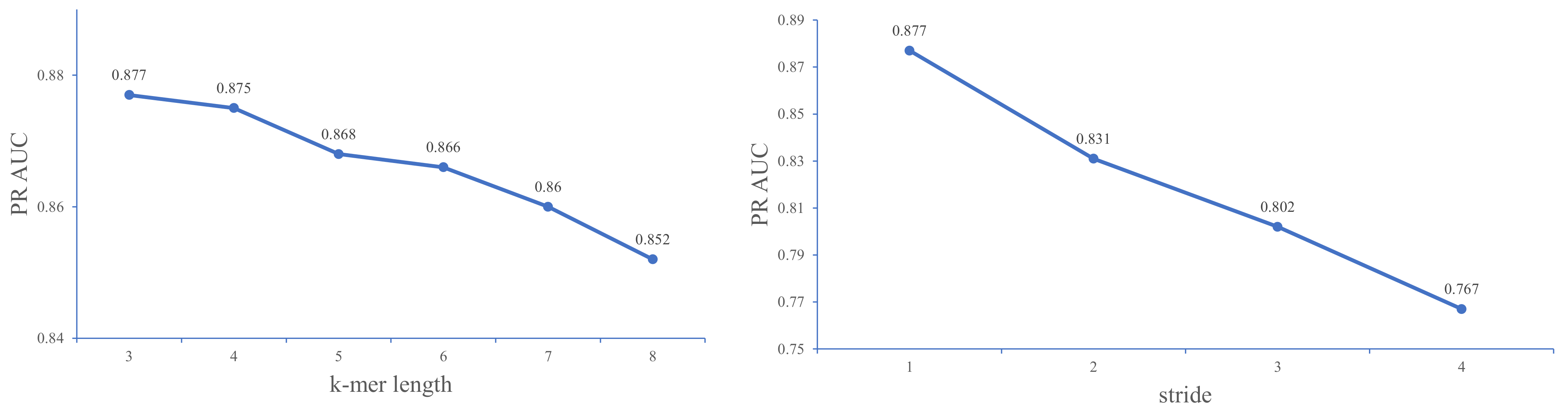

3.1. Parameter Optimization for k-Mers and Stride

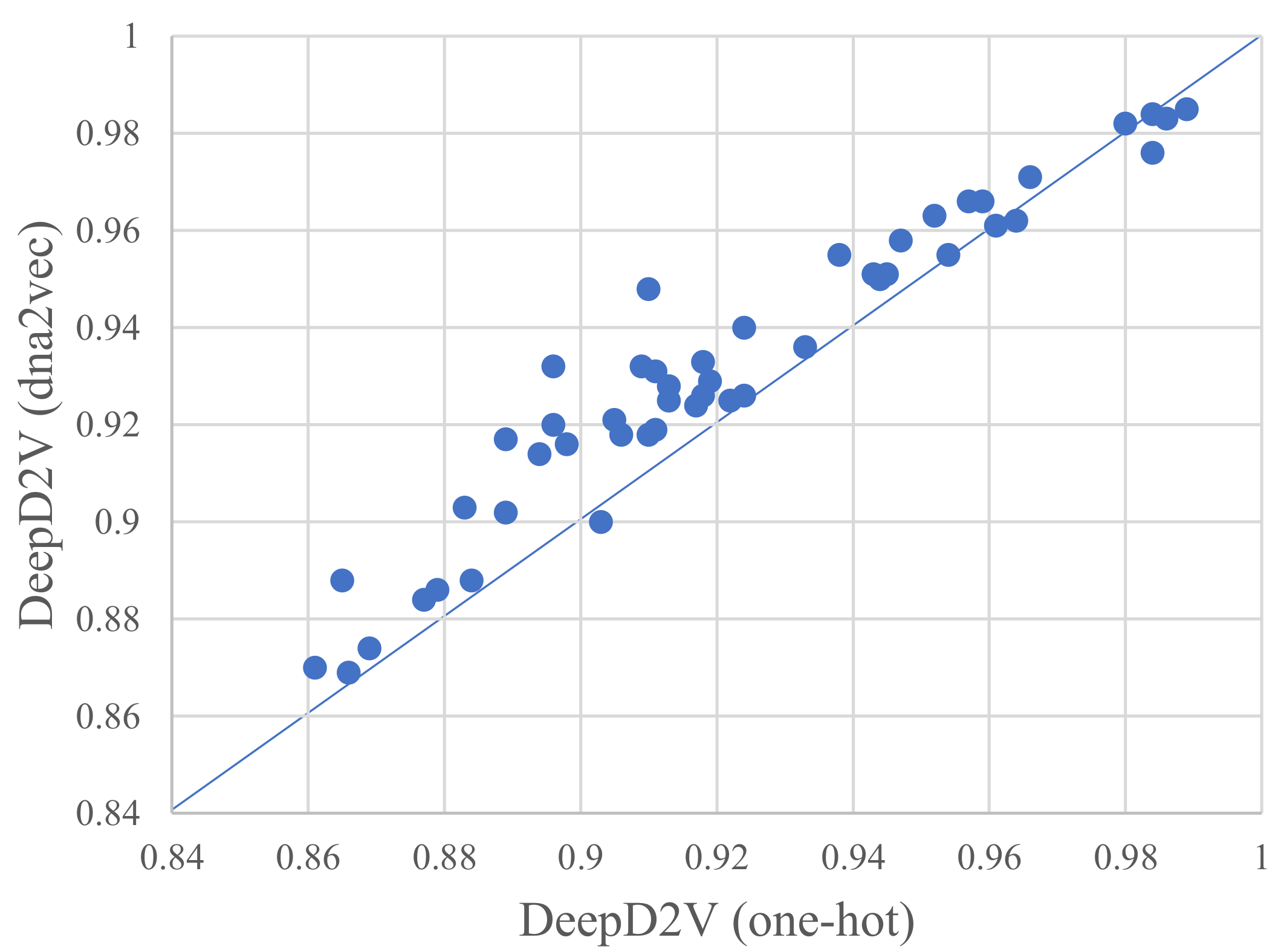

3.2. Distributed Representation Significantly Improves the Prediction Performance

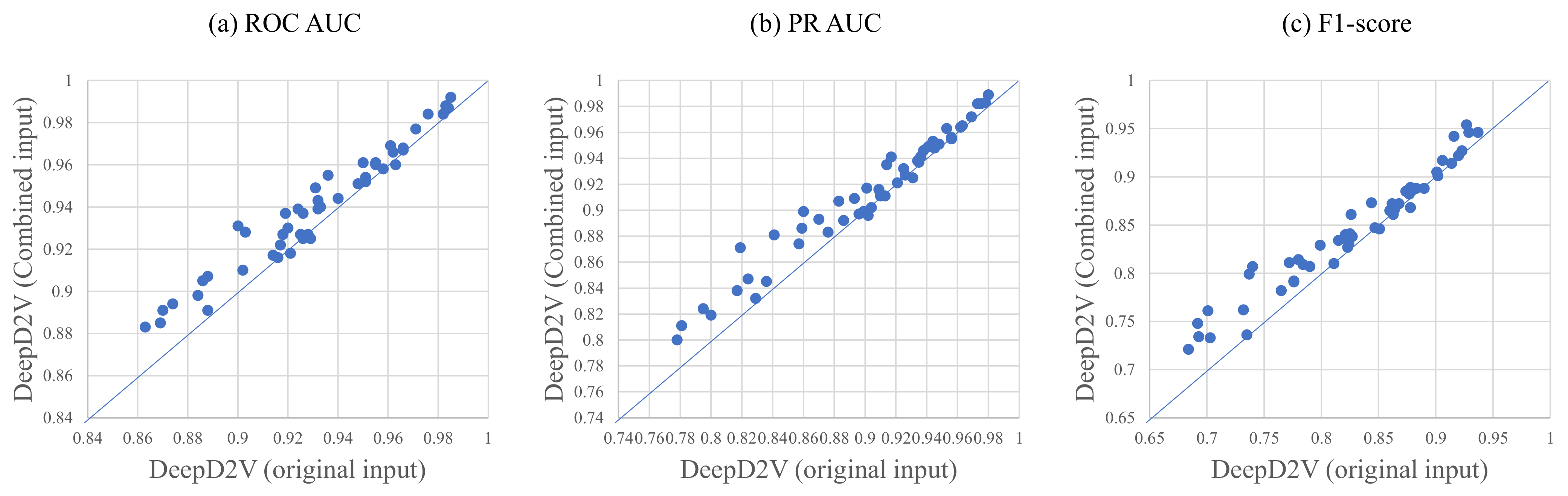

3.3. Combined Sequence Outperforms Original DNA Sequence

3.4. Performance Comparison between CNN, RNN, bi-LSTM, and DeepD2V

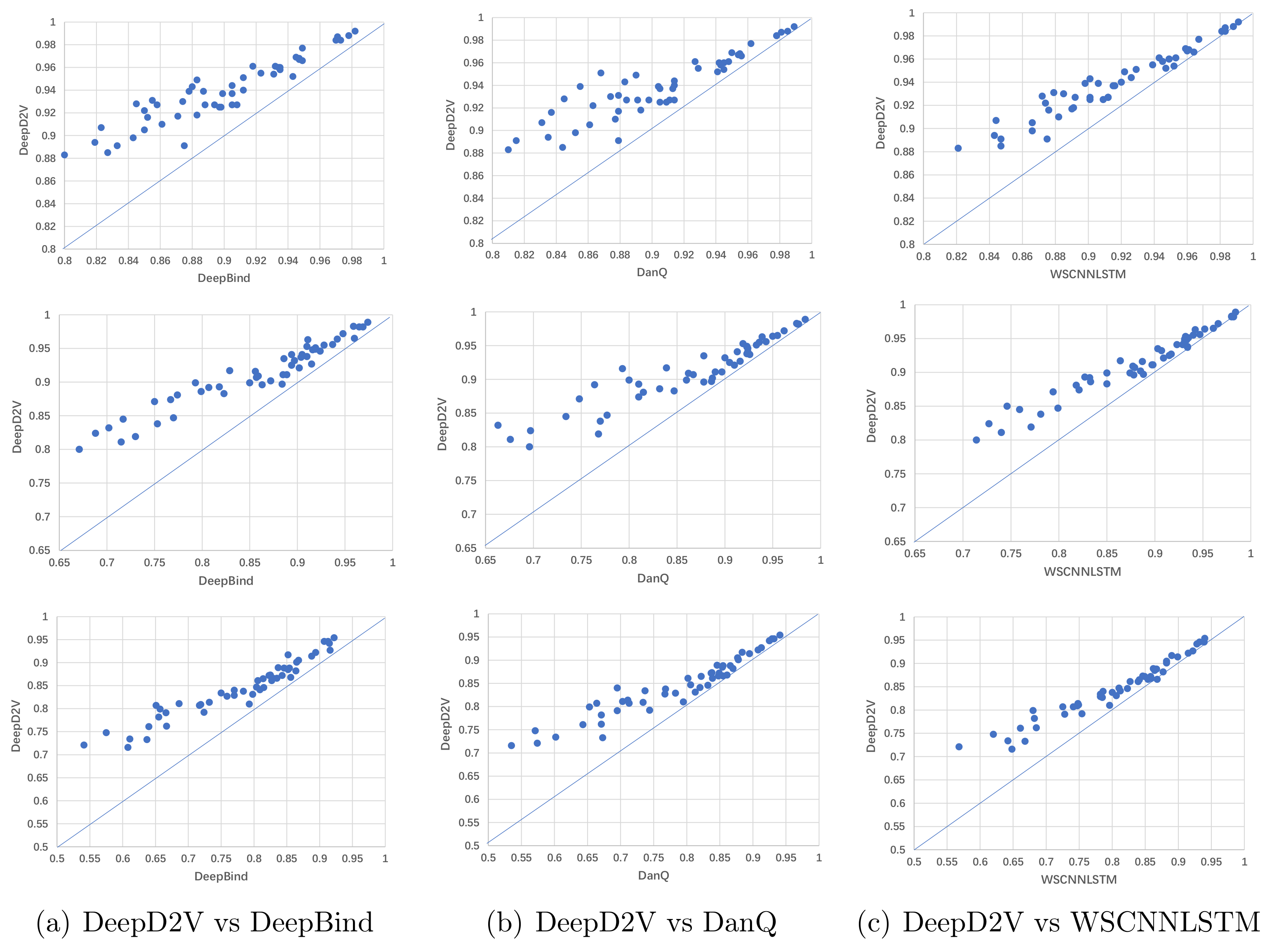

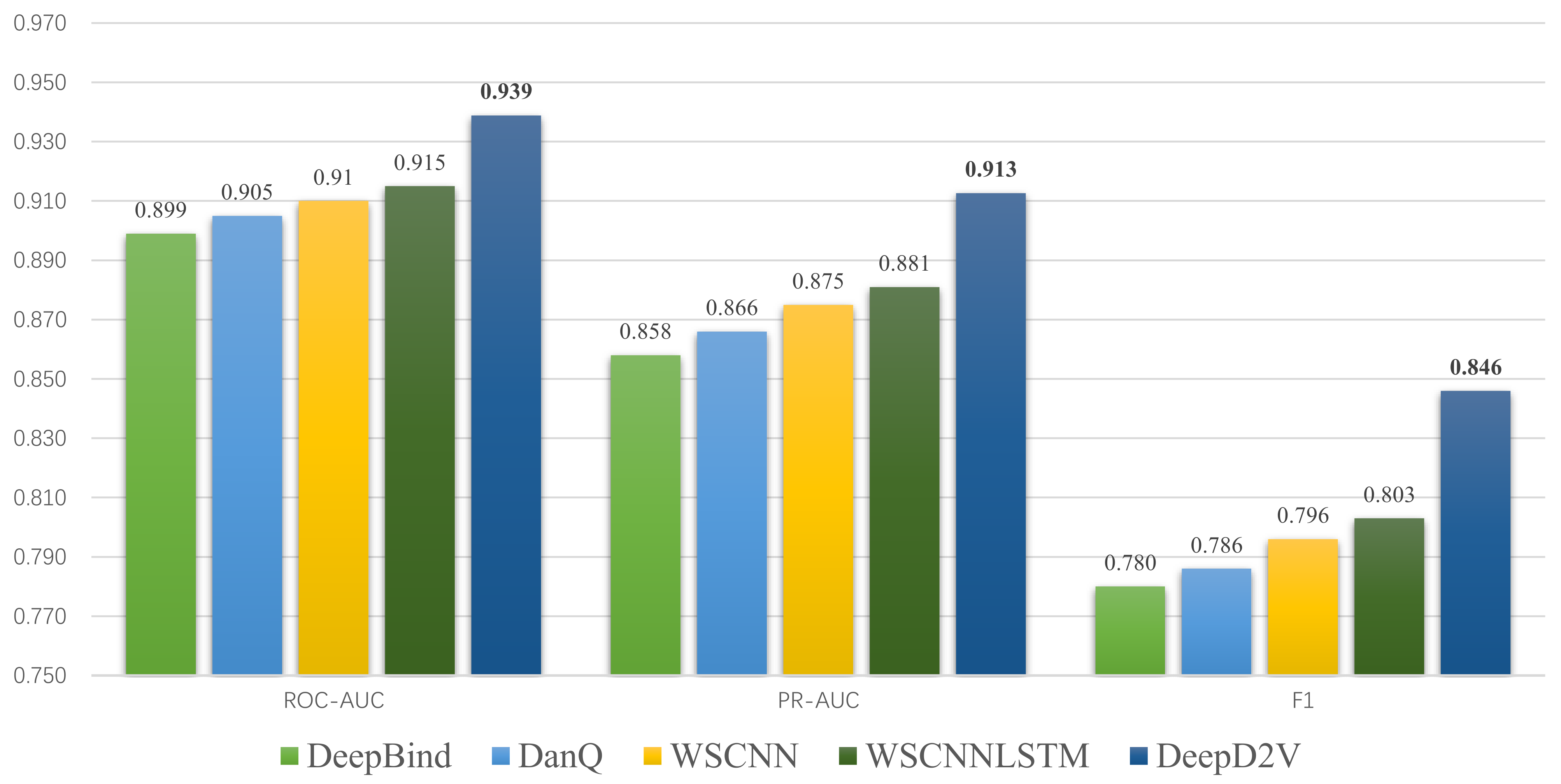

3.5. Performance Comparison with Competing Methods

4. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional neural networks |
| RNN | Recurrent neural networks |
| LSTM | Long short-term memory networks |
| ROC | Receiver operating characteristic |
| PR | Precision-recall |
| AUC | Area under the curve |


| Calibration Hyper-Parameters | Search Space | Recommendation |
|---|---|---|
| Convolutional layer number | {1, 3, 5, 7} | 3 |
| Learning rate | {, , , } | |
| Batch Size | {1, 32, 64, 128, 256, 512} | 64 |
| Loss Function | / | Binary cross entropy |
| Optimizer | {Adam, AdaDelta} | Adam |
| Convolutional neurons number | {8, 16, 32, 64, 128} | 16 |
| Convolutional kernel size | {3, 9, 16, 24} | 3 |
| Max Pooling window size | {2, 4, 8} | 2 |
| Number of bi-LSTM neurons | {8, 16, 32, 64} | 16 |
| Dropout ratio | {0.1, 0.2, 0.5} | 0.1 |
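The table above can also be written down as a small configuration object. The following is only a sketch of one way to record it, not the authors' code: the names `SEARCH_SPACE`, `Recommended`, `out_of_space`, and `grid_size` are hypothetical, and the learning rate is omitted because its values are missing from the table as given here.

```python
from dataclasses import asdict, dataclass

# Search space copied from the table. The learning-rate entries are missing
# in the source, so that hyper-parameter is deliberately left out.
SEARCH_SPACE = {
    "conv_layers": [1, 3, 5, 7],
    "batch_size": [1, 32, 64, 128, 256, 512],
    "optimizer": ["Adam", "AdaDelta"],
    "conv_filters": [8, 16, 32, 64, 128],
    "conv_kernel_size": [3, 9, 16, 24],
    "max_pool_size": [2, 4, 8],
    "bilstm_units": [8, 16, 32, 64],
    "dropout": [0.1, 0.2, 0.5],
}


@dataclass(frozen=True)
class Recommended:
    """Recommended values from the table (field names are illustrative)."""
    conv_layers: int = 3
    batch_size: int = 64
    optimizer: str = "Adam"
    conv_filters: int = 16
    conv_kernel_size: int = 3
    max_pool_size: int = 2
    bilstm_units: int = 16
    dropout: float = 0.1
    loss: str = "binary_crossentropy"  # fixed in the table, not searched


def out_of_space(cfg: Recommended) -> list:
    """Return the names of settings whose value is not in the search space."""
    values = asdict(cfg)
    return [name for name, space in SEARCH_SPACE.items()
            if values[name] not in space]


def grid_size() -> int:
    """Number of combinations if the listed space were enumerated in full."""
    total = 1
    for space in SEARCH_SPACE.values():
        total *= len(space)
    return total


if __name__ == "__main__":
    print(out_of_space(Recommended()))  # empty list if the table is consistent
    print(grid_size())
```

Whether the search was exhaustive is not stated in the table; `grid_size` only indicates how large a full enumeration of the listed values would be.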

| Cell Line | TF | DeepBind | DanQ | WSCNNLSTM | DeepD2V | Cell Line | TF | DeepBind | DanQ | WSCNNLSTM | DeepD2V |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Gm12878 | Batf | 0.887 | 0.904 | 0.906 | 0.939 | H1hesc | Rad | 0.973 | 0.978 | 0.981 | 0.984 |
| Gm12878 | Bcl1 | 0.823 | 0.831 | 0.844 | 0.907 | H1hesc | Sin3 | 0.894 | 0.898 | 0.912 | 0.927 |
| Gm12878 | Bcl3 | 0.858 | 0.884 | 0.892 | 0.927 | H1hesc | Sp1 | 0.874 | 0.874 | 0.885 | 0.930 |
| Gm12878 | Bclaf | 0.843 | 0.852 | 0.866 | 0.898 | H1hesc | Srf | 0.949 | 0.956 | 0.964 | 0.966 |
| Gm12878 | Ebf | 0.861 | 0.877 | 0.882 | 0.910 | H1hesc | Taf1 | 0.908 | 0.911 | 0.912 | 0.927 |
| Gm12878 | Egr1 | 0.934 | 0.942 | 0.949 | 0.960 | H1hesc | Tcf12 | 0.850 | 0.863 | 0.874 | 0.922 |
| Gm12878 | Elf1 | 0.905 | 0.914 | 0.912 | 0.927 | H1hesc | Usf1 | 0.973 | 0.978 | 0.981 | 0.984 |
| Gm12878 | Ets1 | 0.912 | 0.868 | 0.929 | 0.951 | H1hesc | Yy1 | 0.923 | 0.929 | 0.939 | 0.955 |
| Gm12878 | Irf4 | 0.833 | 0.815 | 0.847 | 0.891 | K562 | Atf3 | 0.931 | 0.945 | 0.952 | 0.954 |
| Gm12878 | Mef2a | 0.852 | 0.837 | 0.876 | 0.927 | K562 | E2f6 | 0.935 | 0.943 | 0.945 | 0.958 |
| Gm12878 | Nrsf | 0.899 | 0.905 | 0.915 | 0.937 | K562 | Egr1 | 0.947 | 0.954 | 0.960 | 0.967 |
| Gm12878 | Pax5c20 | 0.850 | 0.861 | 0.866 | 0.905 | K562 | Elf1 | 0.943 | 0.941 | 0.947 | 0.952 |
| Gm12878 | Pax5n19 | 0.845 | 0.845 | 0.872 | 0.928 | K562 | Ets1 | 0.883 | 0.893 | 0.891 | 0.918 |
| Gm12878 | Pbx3 | 0.855 | 0.879 | 0.879 | 0.931 | K562 | Fosl1 | 0.935 | 0.945 | 0.949 | 0.960 |
| Gm12878 | Pou2 | 0.819 | 0.835 | 0.843 | 0.894 | K562 | Gabp | 0.932 | 0.927 | 0.943 | 0.961 |
| Gm12878 | Pu1 | 0.949 | 0.962 | 0.967 | 0.977 | K562 | Gata2 | 0.827 | 0.844 | 0.847 | 0.885 |
| Gm12878 | Rad21 | 0.978 | 0.985 | 0.988 | 0.988 | K562 | Hey1 | 0.875 | 0.879 | 0.875 | 0.891 |
| Gm12878 | Sp1 | 0.800 | 0.810 | 0.821 | 0.883 | K562 | Max | 0.905 | 0.914 | 0.926 | 0.944 |
| Gm12878 | Srf | 0.883 | 0.890 | 0.922 | 0.949 | K562 | Nrsf | 0.880 | 0.883 | 0.901 | 0.943 |
| Gm12878 | Taf1 | 0.887 | 0.904 | 0.906 | 0.939 | K562 | Pu1 | 0.971 | 0.981 | 0.983 | 0.987 |
| Gm12878 | Tcf12 | 0.871 | 0.879 | 0.890 | 0.917 | K562 | Rad21 | 0.982 | 0.989 | 0.991 | 0.992 |
| Gm12878 | Usf1 | 0.918 | 0.948 | 0.953 | 0.961 | K562 | Srf | 0.878 | 0.855 | 0.898 | 0.939 |
| Gm12878 | Yy1 | 0.888 | 0.891 | 0.901 | 0.927 | K562 | Taf1 | 0.898 | 0.909 | 0.909 | 0.925 |
| H1hesc | Gabp | 0.905 | 0.913 | 0.916 | 0.937 | K562 | Usf1 | 0.973 | 0.978 | 0.981 | 0.984 |
| H1hesc | Nrsf | 0.945 | 0.950 | 0.959 | 0.969 | K562 | Yy1 | 0.912 | 0.914 | 0.920 | 0.940 |
| Average ROC AUC | | 0.899 | 0.905 | 0.915 | 0.939 | | | | | | |
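For readers unfamiliar with the metric in the table above, the sketch below shows how a ROC AUC value and a per-method average could be computed. It uses the pairwise (Mann-Whitney) definition of ROC AUC, which is a standard formulation and not necessarily the evaluation code used for the table. The function names and the toy labels and scores are made up for illustration; only the three DeepD2V values in `subset` are taken from the first three Gm12878 rows.

```python
def roc_auc(labels, scores):
    """ROC AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a correct pair.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    correct = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                correct += 1.0
            elif p == n:
                correct += 0.5
    return correct / (len(pos) * len(neg))


def mean(values):
    """Arithmetic mean, as used for an 'Average ROC AUC' style summary row."""
    return sum(values) / len(values)


if __name__ == "__main__":
    # Toy example: 1 = bound sequence, 0 = unbound sequence (invented data).
    toy_labels = [1, 1, 0, 1, 0]
    toy_scores = [0.9, 0.8, 0.7, 0.3, 0.2]
    print(roc_auc(toy_labels, toy_scores))

    # DeepD2V values for Gm12878 Batf, Bcl1, and Bcl3 from the table;
    # a three-row subset only, so this is not the table's overall average.
    subset = [0.939, 0.907, 0.927]
    print(round(mean(subset), 3))
```

The pairwise form is quadratic in the number of examples, which is fine for illustration; a rank-based implementation would be preferable for ChIP-seq-sized datasets.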

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Deng, L.; Wu, H.; Liu, X.; Liu, H. DeepD2V: A Novel Deep Learning-Based Framework for Predicting Transcription Factor Binding Sites from Combined DNA Sequence. Int. J. Mol. Sci. 2021, 22, 5521. https://doi.org/10.3390/ijms22115521
