Convergence of Nanotechnology and Machine Learning: The State of the Art, Challenges, and Perspectives

Abstract

1. Introduction

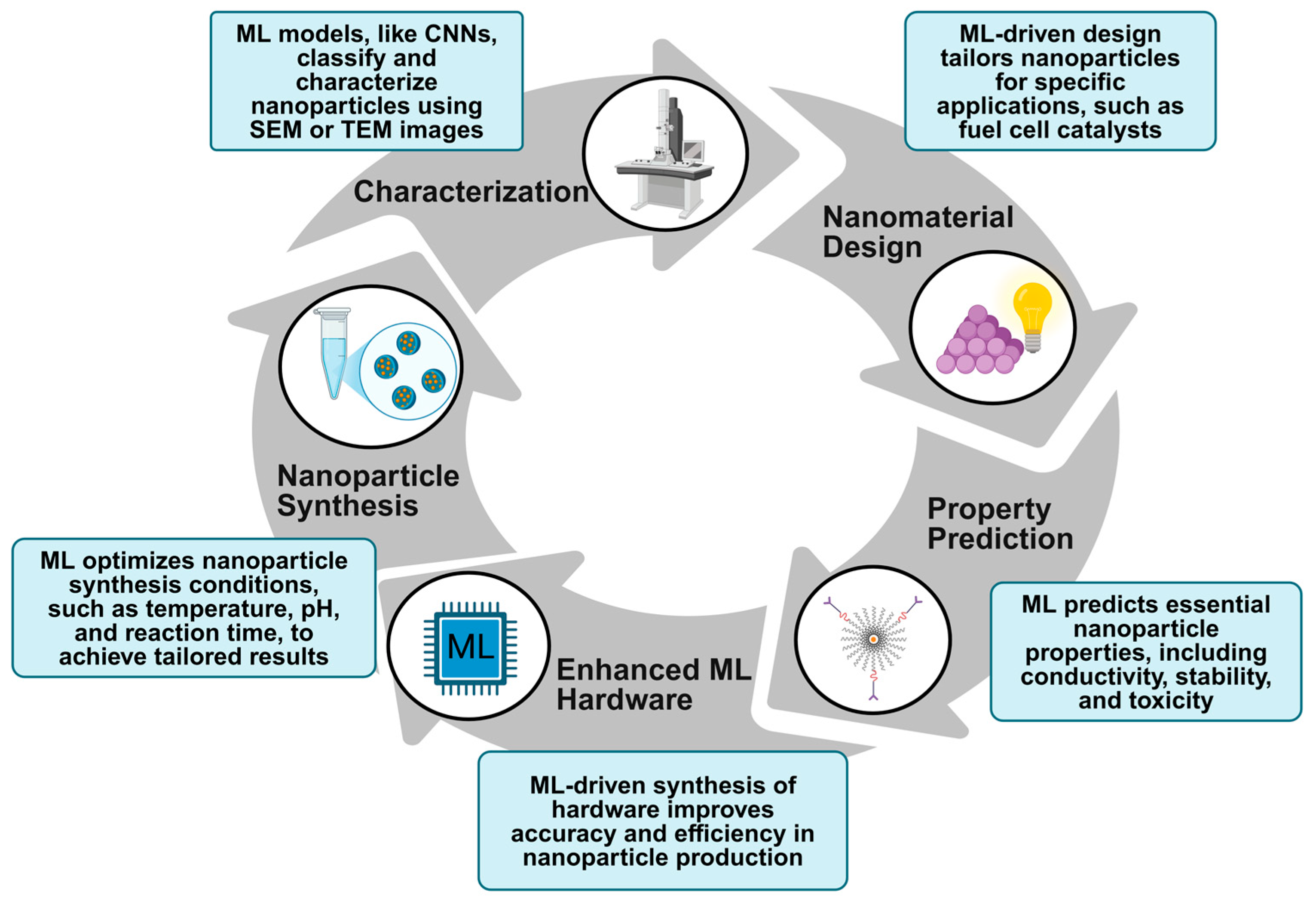

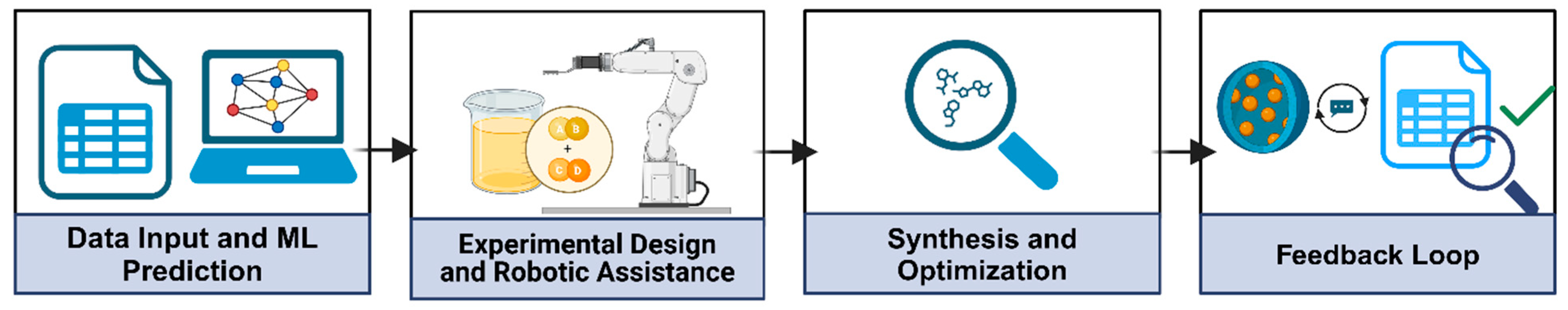

1.1. Synthesis of Nanoparticles

1.2. Nanoscale Characterization

1.3. Predicting Nanomaterial Properties

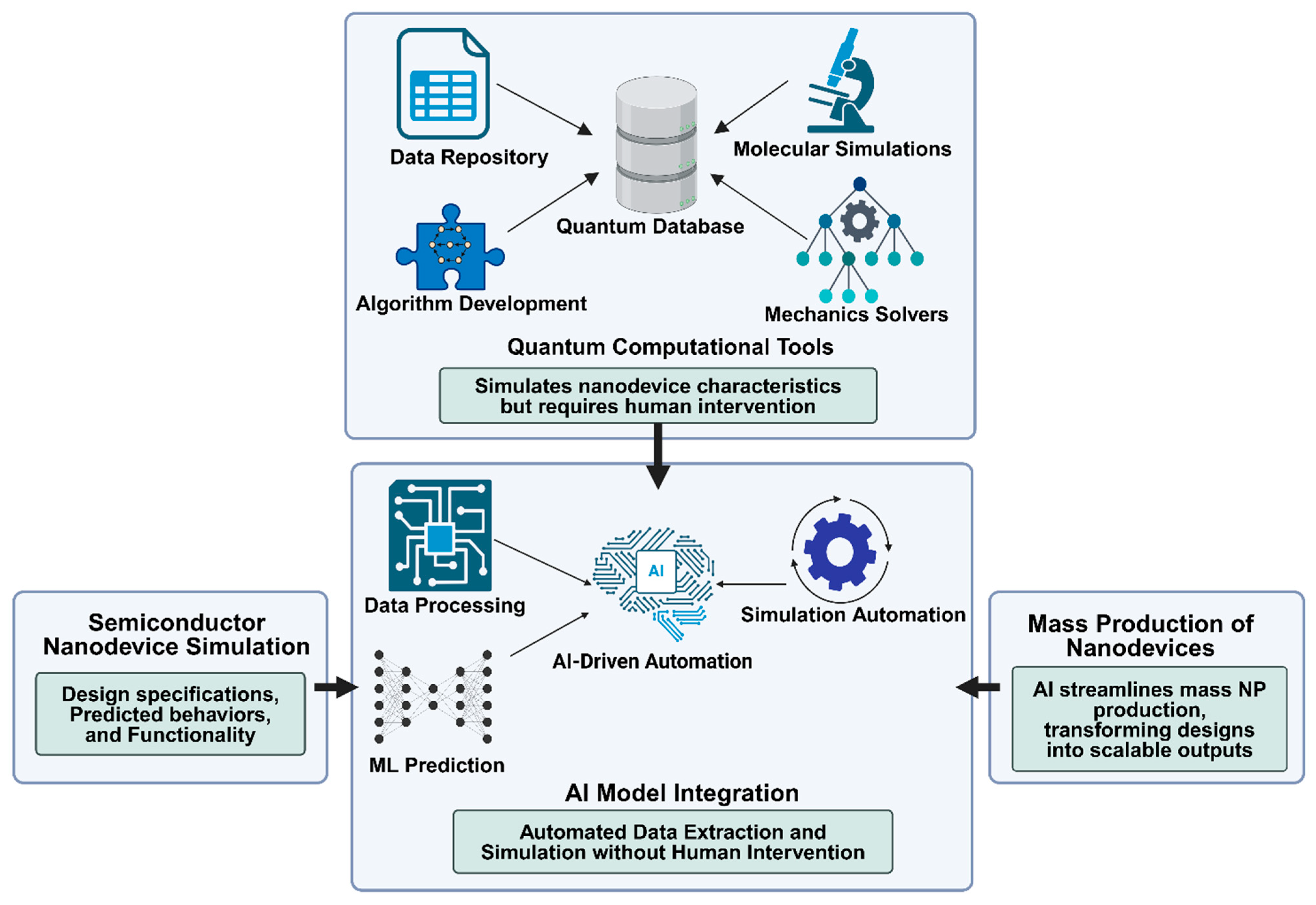

1.4. Nanodevice Design

2. Impact of Nanotechnology on ML

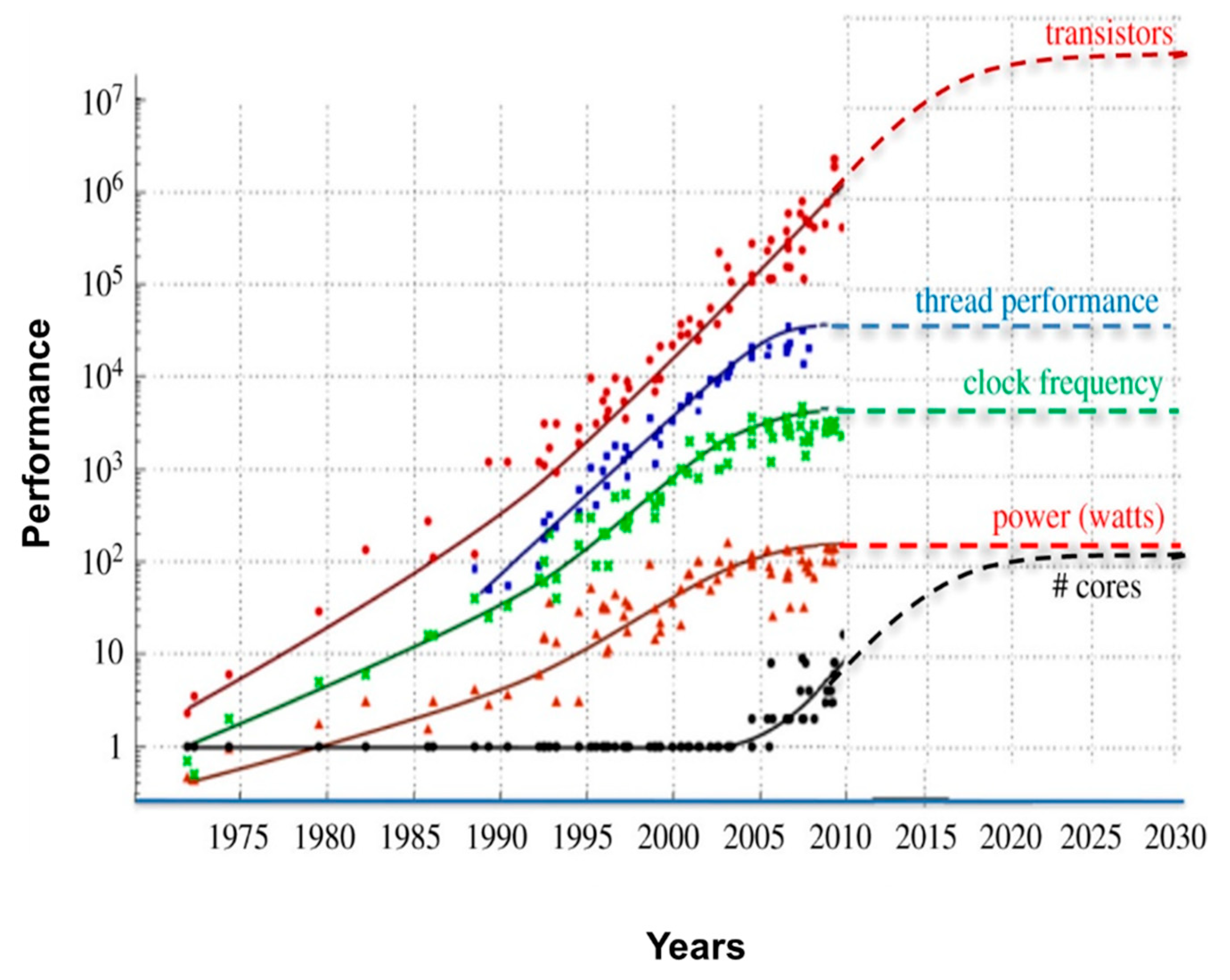

2.1. Hardware Acceleration

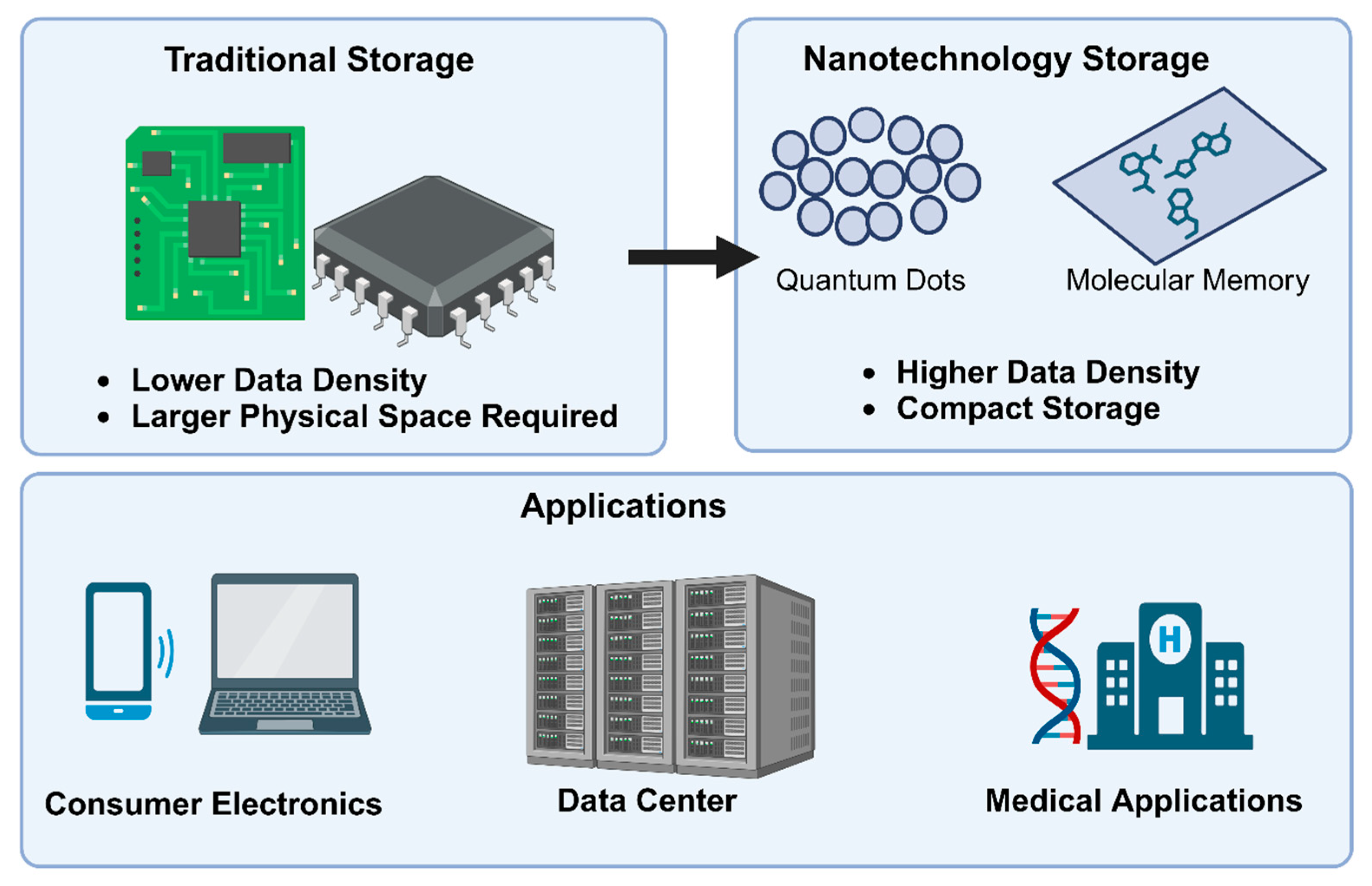

2.2. Data Storage

2.3. Nanomaterials for Data Preprocessing

2.4. Energy Efficiency

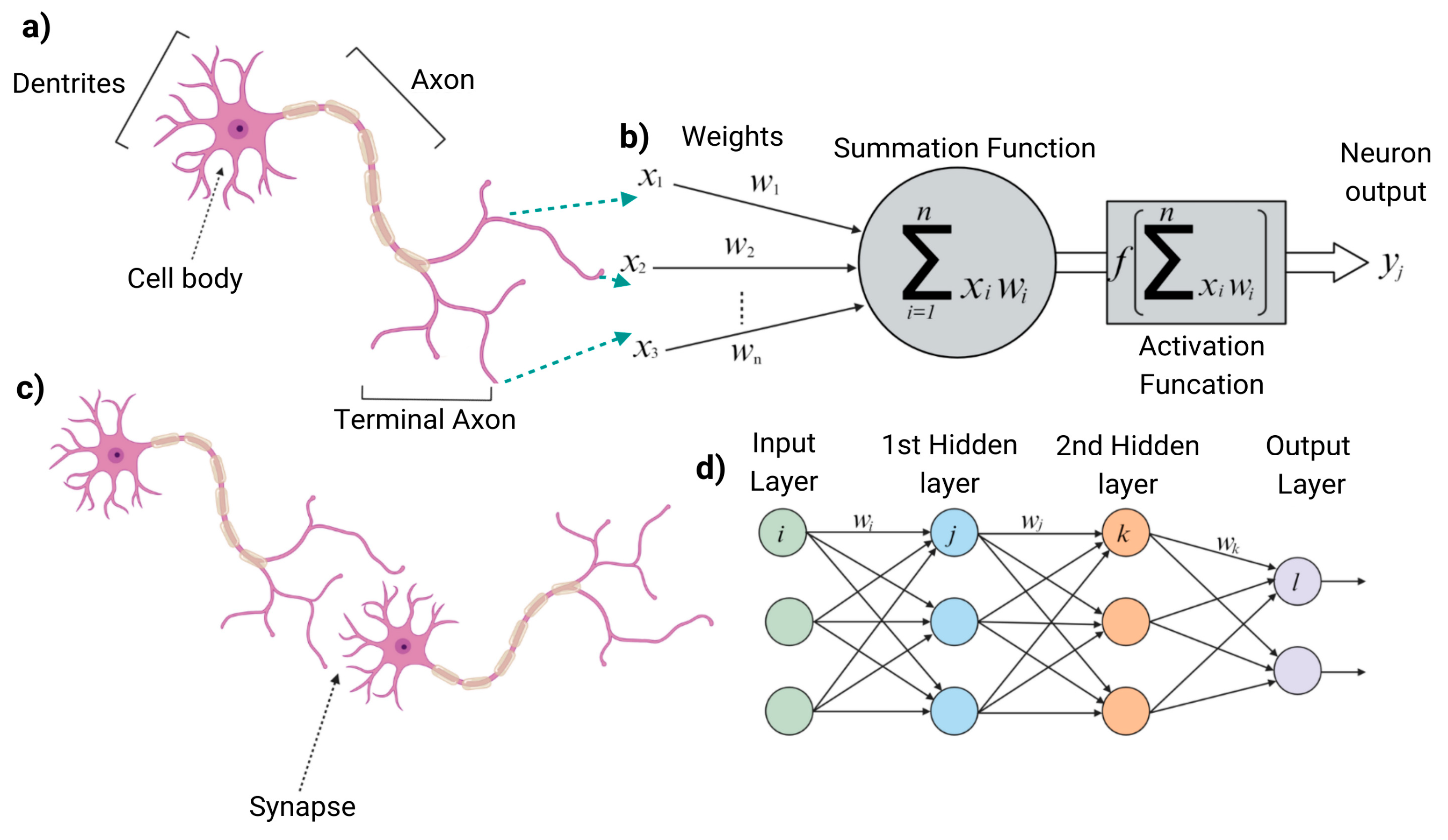

2.5. Bio-Inspired Computing

3. Limitations and Future Directions

3.1. Current Issues with Nanotechnology and ML

3.2. Future Directions

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ML | Machine Learning |
| AI | Artificial Intelligence |
| WANDA | Workstation for Automated Nanomaterials Discovery and Analysis |
| AMP | Antimicrobial Peptide |
| CVD | Chemical Vapor Deposition |
| CNT | Carbon Nanotube |
| SEM | Scanning Electron Microscope |
| SVM | Support Vector Machine |
| RF | Random Forest |
| RDF | Radial Distribution Function |
| PNC | Polymer Nanocomposite |
| MRI | Magnetic Resonance Imaging |
| DNN | Deep Neural Network |
| LED | Light-Emitting Diode |
| GNN | Graph Neural Network |
| CMOS | Complementary Metal-Oxide-Semiconductor |
| CNN | Convolutional Neural Network |
| SNN | Spiking Neural Network |
| DSF | Damage-Sensitive Features |
| EHS | Environment, Health, and Safety |

References

- Sarker, I.H. Machine Learning: Algorithms, Real-World Applications and Research Directions. SN Comput. Sci. 2021, 2, 160. [Google Scholar] [CrossRef] [PubMed]

- Foote, K.D. A Brief History of Machine Learning. Dataversity Webpage, March 2019, p. 26.

- Zhou, L.; Yao, A.M.; Wu, Y.; Hu, Z.; Huang, Y.; Hong, Z. Machine learning assisted prediction of cathode materials for Zn-ion batteries. Adv. Theory Simul. 2021, 4, 2100196. [Google Scholar] [CrossRef]

- Wang, X.; Lim, E.G.; Hoettges, K.; Song, P. A Review of Carbon Nanotubes, Graphene and Nanodiamond Based Strain Sensor in Harsh Environments. C 2023, 9, 108. [Google Scholar] [CrossRef]

- Qiu, X.; Parcollet, T.; Fernandez-Marques, J.; Gusmao, P.P.B.; Gao, Y.; Beutel, D.J.; Topal, T.; Mathur, A.; Lane, N.D. A first look into the carbon footprint of federated learning. J. Mach. Learn. Res. 2023, 24, 1–23. [Google Scholar]

- Yao, L.; Chen, Q. Machine learning in nanomaterial electron microscopy data analysis. In Intelligent Nanotechnology; Elsevier: Amsterdam, The Netherlands, 2023; pp. 279–305. [Google Scholar]

- Tulevski, G.S.; Franklin, A.D.; Frank, D.; Lobez, J.M.; Cao, Q.; Park, H.; Afzali, A.; Han, S.-J.; Hannon, J.B.; Haensch, W. Toward high-performance digital logic technology with carbon nanotubes. ACS Nano 2014, 8, 8730–8745. [Google Scholar] [CrossRef]

- Verhelst, M.; Murmann, B. Machine learning at the edge. In NANO-CHIPS 2030: On-Chip AI for an Efficient Data-Driven World; Springer: Berlin/Heidelberg, Germany, 2020; pp. 293–322. [Google Scholar]

- Jiang, Y.; Salley, D.; Sharma, A.; Keenan, G.; Mullin, M.; Cronin, L. An artificial intelligence enabled chemical synthesis robot for exploration and optimization of nanomaterials. Sci. Adv. 2022, 8, eabo2626. [Google Scholar] [CrossRef]

- Zhu, X. AI and Robotic Technology in Materials and Chemistry Research; John Wiley & Sons: Hoboken, NJ, USA, 2024. [Google Scholar]

- Park, J.; Kim, Y.M.; Hong, S.; Han, B.; Nam, K.T.; Jung, Y. Closed-loop optimization of nanoparticle synthesis enabled by robotics and machine learning. Matter 2023, 6, 677–690. [Google Scholar] [CrossRef]

- Chan, E.M.; Xu, C.; Mao, A.W.; Han, G.; Owen, J.S.; Cohen, B.E.; Milliron, D.J. Reproducible, high-throughput synthesis of colloidal nanocrystals for optimization in multidimensional parameter space. Nano Lett. 2010, 10, 1874–1885. [Google Scholar] [CrossRef]

- Sowers, A.; Wang, G.; Xing, M.; Li, B. Advances in antimicrobial peptide discovery via machine learning and delivery via nanotechnology. Microorganisms 2023, 11, 1129. [Google Scholar] [CrossRef]

- Cotta, M.A. Quantum Dots and Their Applications: What Lies Ahead? ACS Appl. Nano Mater. 2020, 3, 4920–4924. [Google Scholar] [CrossRef]

- Gakis, G.P.; Termine, S.; Trompeta, A.-F.A.; Aviziotis, I.G.; Charitidis, C.A. Unraveling the mechanisms of carbon nanotube growth by chemical vapor deposition. Chem. Eng. J. 2022, 445, 136807. [Google Scholar] [CrossRef]

- Jia, Y.; Hou, X.; Wang, Z.; Hu, X. Machine learning boosts the design and discovery of nanomaterials. ACS Sustain. Chem. Eng. 2021, 9, 6130–6147. [Google Scholar] [CrossRef]

- Modarres, M.H.; Aversa, R.; Cozzini, S.; Ciancio, R.; Leto, A.; Brandino, G.P. Neural network for nanoscience scanning electron microscope image recognition. Sci. Rep. 2017, 7, 13282. [Google Scholar] [CrossRef] [PubMed]

- Anagun, Y.; Isik, S.; Olgun, M.; Sezer, O.; Basciftci, Z.B.; Arpacioglu, N.G.A. The classification of wheat species based on deep convolutional neural networks using scanning electron microscope (SEM) imaging. Eur. Food Res. Technol. 2023, 249, 1023–1034. [Google Scholar] [CrossRef]

- Wang, X.; Li, J.; Ha, H.D.; Dahl, J.C.; Ondry, J.C.; Moreno-Hernandez, I.; Head-Gordon, T.; Alivisatos, A.P. AutoDetect-mNP: An unsupervised machine learning algorithm for automated analysis of transmission electron microscope images of metal nanoparticles. JACS Au 2021, 1, 316–327. [Google Scholar] [CrossRef]

- Zelenka, C.; Kamp, M.; Strohm, K.; Kadoura, A.; Johny, J.; Koch, R.; Kienle, L. Automated classification of nanoparticles with various ultrastructures and sizes via deep learning. Ultramicroscopy 2023, 246, 113685. [Google Scholar] [CrossRef]

- Brown, K.A.; Brittman, S.; Maccaferri, N.; Jariwala, D.; Celano, U. Machine learning in nanoscience: Big data at small scales. Nano Lett. 2019, 20, 2–10. [Google Scholar] [CrossRef]

- Timoshenko, J.; Duan, Z.; Henkelman, G.; Crooks, R.; Frenkel, A. Solving the structure and dynamics of metal nanoparticles by combining X-ray absorption fine structure spectroscopy and atomistic structure simulations. Annu. Rev. Anal. Chem. 2019, 12, 501–522. [Google Scholar] [CrossRef]

- Bao, H.; Min, L.; Bu, F.; Wang, S.; Meng, J. Recent advances of liquid biopsy: Interdisciplinary strategies toward clinical decision-making. Interdiscip. Med. 2023, 1, e20230021. [Google Scholar] [CrossRef]

- Sagar, M.S.I. Intelligent Devices for IoT Applications; Washington State University: Pullman, WA, USA, 2023. [Google Scholar]

- Darwish, M.A.; Abd-Elaziem, W.; Elsheikh, A.; Zayed, A.A. Advancements in Nanomaterials for Nanosensors: A Comprehensive Review. Nanoscale Adv. 2024, 6, 4015–4046. [Google Scholar] [CrossRef]

- Singh, R.; Tipu, R.K.; Mir, A.A.; Patel, M. Predictive Modelling of Flexural Strength in Recycled Aggregate-Based Concrete: A Comprehensive Approach with Machine Learning and Global Sensitivity Analysis. Iran. J. Sci. Technol. Trans. Civ. Eng. 2024, 1–26. [Google Scholar] [CrossRef]

- Champa-Bujaico, E.; García-Díaz, P.; Díez-Pascual, A.M. Machine learning for property prediction and optimization of polymeric nanocomposites: A state-of-the-art. Int. J. Mol. Sci. 2022, 23, 10712. [Google Scholar] [CrossRef]

- Khan, Z.; Gul, A.; Perperoglou, A.; Miftahuddin, M.; Mahmoud, O.; Adler, W.; Lausen, B. Ensemble of optimal trees, random forest and random projection ensemble classification. Adv. Data Anal. Classif. 2020, 14, 97–116. [Google Scholar] [CrossRef]

- Sang, L.; Wang, Y.; Zong, C.; Wang, P.; Zhang, H.; Guo, D.; Yuan, B.; Pan, Y. Machine learning for evaluating the cytotoxicity of mixtures of nano-TiO2 and heavy metals: qSAR model apply random forest algorithm after clustering analysis. Molecules 2022, 27, 6125. [Google Scholar] [CrossRef] [PubMed]

- Li, J.; Jin, X.; Jiao, Z.; Gao, L.; Dai, X.; Cheng, L.; Wang, Y.; Yan, L.-T. Designing Antibacterial Materials through Simulation and Theory. J. Mater. Chem. B 2024, 12, 9155–9172. [Google Scholar] [CrossRef]

- Mak, K.-K.; Wong, Y.-H.; Pichika, M.R. Artificial intelligence in drug discovery and development. In Drug Discovery and Evaluation: Safety and Pharmacokinetic Assays; Springer: Berlin/Heidelberg, Germany, 2023; pp. 1–38. [Google Scholar]

- Yan, X.; Zhang, J.; Russo, D.P.; Zhu, H.; Yan, B. Prediction of Nano–Bio Interactions through Convolutional Neural Network Analysis of Nanostructure Images. ACS Sustain. Chem. Eng. 2020, 8, 19096–19104. [Google Scholar] [CrossRef]

- Hart, G.L.; Mueller, T.; Toher, C.; Curtarolo, S. Machine learning for alloys. Nat. Rev. Mater. 2021, 6, 730–755. [Google Scholar] [CrossRef]

- He, T.; Huo, H.; Bartel, C.J.; Wang, Z.; Cruse, K.; Ceder, G. Precursor recommendation for inorganic synthesis by machine learning materials similarity from scientific literature. Sci. Adv. 2023, 9, eadg8180. [Google Scholar] [CrossRef]

- Guntuboina, C.; Das, A.; Mollaei, P.; Kim, S.; Farimani, A.B. Peptidebert: A language model based on transformers for peptide property prediction. J. Phys. Chem. Lett. 2023, 14, 10427–10434. [Google Scholar] [CrossRef]

- Adir, O.; Poley, M.; Chen, G.; Froim, S.; Krinsky, N.; Shklover, J.; Shainsky-Roitman, J.; Lammers, T.; Schroeder, A. Integrating artificial intelligence and nanotechnology for precision cancer medicine. Adv. Mater. 2020, 32, 1901989. [Google Scholar] [CrossRef]

- Ismail, M.; Rasheed, M.; Mahata, C.; Kang, M.; Kim, S. Nano-crystalline ZnO memristor for neuromorphic computing: Resistive switching and conductance modulation. J. Alloys Compd. 2023, 960, 170846. [Google Scholar] [CrossRef]

- Ayush, K.; Seth, A.; Patra, T.K. nanoNET: Machine learning platform for predicting nanoparticles distribution in a polymer matrix. Soft Matter 2023, 19, 5502–5512. [Google Scholar] [CrossRef] [PubMed]

- Naik, G.G.; Jagtap, V.A. Two Heads Are Better Than One: Unravelling the potential Impact of Artificial Intelligence in Nanotechnology. Nano TransMed. 2024, 3, 100041. [Google Scholar] [CrossRef]

- Sandbhor, P.; Palkar, P.; Bhat, S.; John, G.; Goda, J.S. Nanomedicine as a multimodal therapeutic paradigm against cancer: On the way forward in advancing precision therapy. Nanoscale 2024, 16, 6330–6364. [Google Scholar] [CrossRef] [PubMed]

- Singh, A.V.; Varma, M.; Laux, P.; Choudhary, S.; Datusalia, A.K.; Gupta, N.; Luch, A.; Gandhi, A.; Kulkarni, P.; Nath, B. Artificial intelligence and machine learning disciplines with the potential to improve the nanotoxicology and nanomedicine fields: A comprehensive review. Arch. Toxicol. 2023, 97, 963–979. [Google Scholar] [CrossRef]

- Hamilton, S.; Kingston, B.R. Applying artificial intelligence and computational modeling to nanomedicine. Curr. Opin. Biotechnol. 2024, 85, 103043. [Google Scholar] [CrossRef]

- Uusitalo, M.A.; Peltonen, J.; Ryhänen, T. Machine learning: How it can help nanocomputing. J. Comput. Theor. Nanosci. 2011, 8, 1347–1363. [Google Scholar] [CrossRef]

- Higgins, K.; Kingston, B.R. High-throughput study of antisolvents on the stability of multicomponent metal halide perovskites through robotics-based synthesis and machine learning approaches. J. Am. Chem. Soc. 2021, 143, 19945–19955. [Google Scholar] [CrossRef]

- Nandipati, M.; Fatoki, O.; Desai, S. Bridging Nanomanufacturing and Artificial Intelligence—A Comprehensive Review. Materials 2024, 17, 1621. [Google Scholar] [CrossRef]

- Badini, S.; Regondi, S.; Pugliese, R. Unleashing the power of artificial intelligence in materials design. Materials 2023, 16, 5927. [Google Scholar] [CrossRef]

- Karapiperis, K.; Kochmann, D.M. Prediction and control of fracture paths in disordered architected materials using graph neural networks. Commun. Eng. 2023, 2, 32. [Google Scholar] [CrossRef]

- Belay, T.; Worku, L.A.; Bachheti, R.K.; Bachheti, A.; Husen, A. Nanomaterials: Introduction, synthesis, characterization, and applications. In Advances in Smart Nanomaterials and Their Applications; Elsevier: Amsterdam, The Netherlands, 2023; pp. 1–21. [Google Scholar]

- Swanson, B. Moore’s Law at 50; American Enterprise Institute: Washington, DC, USA, 2015. [Google Scholar]

- Shalf, J. The future of computing beyond Moore’s Law. Philos. Trans. R. Soc. A 2020, 378, 20190061. [Google Scholar] [CrossRef] [PubMed]

- Taha, T.B.; Barzinjy, A.A.; Hussain, F.H.S.; Nurtayeva, T. Nanotechnology and computer science: Trends and advances. Mem.-Mater. Devices Circuits Syst. 2022, 2, 100011. [Google Scholar] [CrossRef]

- Rakheja, S.; Kumar, V.; Naeemi, A. Evaluation of the potential performance of graphene nanoribbons as on-chip interconnects. Proc. IEEE 2013, 101, 1740–1765. [Google Scholar] [CrossRef]

- Cai, Q.; Ye, J.; Jahannia, B.; Wang, H.; Patil, C.; Redoy, R.A.F.; Sidam, A.; Sameer, S.; Aljohani, S.; Umer, M.; et al. Comprehensive Study and Design of Graphene Transistor. Micromachines 2024, 15, 406. [Google Scholar] [CrossRef]

- Cooper, K. Scalable nanomanufacturing—A review. Micromachines 2017, 8, 20. [Google Scholar] [CrossRef]

- Tian, C.; Wei, L.; Li, Y.; Jiang, J. Recent progress on two-dimensional neuromorphic devices and artificial neural network. Curr. Appl. Phys. 2021, 31, 182–198. [Google Scholar] [CrossRef]

- Sun, B.; Chen, Y.; Zhou, G.; Cao, Z.; Yang, C.; Du, J.; Chen, X.; Shao, J. Memristor-based artificial chips. ACS Nano 2023, 18, 14–27. [Google Scholar] [CrossRef]

- Schuman, C.D.; Kulkarni, S.R.; Parsa, M.; Mitchell, J.P.; Date, P.; Kay, B. Opportunities for neuromorphic computing algorithms and applications. Nat. Comput. Sci. 2022, 2, 10–19. [Google Scholar] [CrossRef]

- Aimone, J.B. A roadmap for reaching the potential of brain-derived computing. Adv. Intell. Syst. 2021, 3, 2000191. [Google Scholar] [CrossRef]

- Malik, S.; Muhammad, K.; Waheed, Y. Nanotechnology: A Revolution in Modern Industry. Molecules 2023, 28, 661. [Google Scholar] [CrossRef] [PubMed]

- Berggren, K.; Xia, Q.; Likharev, K.K.; Strukov, D.B.; Jiang, H.; Mikolajick, T.; Querlioz, D.; Salinga, M.; Erickson, J.R.; Pi, S.; et al. Roadmap on emerging hardware and technology for machine learning. Nanotechnology 2020, 32, 012002. [Google Scholar] [CrossRef] [PubMed]

- Wang, S.; Mao, X.; Wang, F.; Zuo, X.; Fan, C. Data Storage Using DNA. Adv. Mater. 2024, 36, 2307499. [Google Scholar] [CrossRef] [PubMed]

- Zhirnov, V.V.; Cavin, R.; Hutchby, J.; Bourianoff, G. Limits to binary logic switch scaling-a gedanken model. Proc. IEEE 2003, 91, 1934–1939. [Google Scholar] [CrossRef]

- Molas, G.; Nowak, E. Advances in Emerging Memory Technologies: From Data Storage to Artificial Intelligence. Appl. Sci. 2021, 11, 11254. [Google Scholar] [CrossRef]

- González-Manzano, L.; Brost, G.; Aumueller, M. An architecture for trusted PaaS cloud computing for personal data. In Trusted Cloud Computing; Springer: Berlin/Heidelberg, Germany, 2014; pp. 239–258. [Google Scholar]

- Dananjaya, V.; Marimuthu, S.; Yang, R.; Grace, A.N.; Abeykoon, C. Synthesis, properties, applications, 3D printing and machine learning of graphene quantum dots in polymer nanocomposites. Prog. Mater. Sci. 2024, 144, 101282. [Google Scholar] [CrossRef]

- Pfaendler, S.M.-L.; Konson, K.; Greinert, F. Advancements in Quantum Computing—Viewpoint: Building Adoption and Competency in Industry. Datenbank-Spektrum 2024, 24, 5–20. [Google Scholar] [CrossRef]

- Dias, C. Resistive Switching in MgO and Si/Ag Metal-Insulator-Metal Structures. Ph.D. Thesis, Universidade do Porto, Porto, Portugal, 2019. [Google Scholar]

- Lee, M.; Seung, H.; Kwon, J.I.; Choi, M.K.; Kim, D.-H.; Choi, C. Nanomaterial-based synaptic optoelectronic devices for in-sensor preprocessing of image data. ACS Omega 2023, 8, 5209–5224. [Google Scholar] [CrossRef]

- Wan, W.; Kubendran, R.; Schaefer, C.; Eryilmaz, S.B.; Zhang, W.; Wu, D.; Deiss, S.; Raina, P.; Qian, H.; Gao, B.; et al. A compute-in-memory chip based on resistive random-access memory. Nature 2022, 608, 504–512. [Google Scholar] [CrossRef]

- Mullani, N.B.; Kumbhar, D.D.; Lee, D.; Kwon, M.J.; Cho, S.; Oh, N.; Kim, E.; Dongale, T.D.; Nam, S.Y.; Park, J.H. Surface Modification of a Titanium Carbide MXene Memristor to Enhance Memory Window and Low-Power Operation. Adv. Funct. Mater. 2023, 33, 2300343. [Google Scholar] [CrossRef]

- Matsukatova, A.N.; Vdovichenko, A.Y.; Patsaev, T.D.; Forsh, P.A.; Kashkarov, P.K.; Demin, V.A.; Emelyanov, A.V. Scalable nanocomposite parylene-based memristors: Multifilamentary resistive switching and neuromorphic applications. Nano Res. 2023, 16, 3207–3214. [Google Scholar] [CrossRef]

- Liu, C.; Cohen, I.; Vishinkin, R.; Haick, H. Nanomaterial-Based Sensor Array Signal Processing and Tuberculosis Classification Using Machine Learning. J. Low Power Electron. Appl. 2023, 13, 39. [Google Scholar] [CrossRef]

- Marković, D.; Mizrahi, A.; Querlioz, D.; Grollier, J. Physics for neuromorphic computing. Nat. Rev. Phys. 2020, 2, 499–510. [Google Scholar] [CrossRef]

- Jiang, S.; Nie, S.; He, Y.; Liu, R.; Chen, C.; Wan, Q. Emerging synaptic devices: From two-terminal memristors to multiterminal neuromorphic transistors. Mater. Today Nano 2019, 8, 100059. [Google Scholar] [CrossRef]

- Subin, P.; Midhun, P.S.; Antony, A.; Saji, K.J.; Jayaraj, M.K. Optoelectronic synaptic plasticity mimicked in ZnO-based artificial synapse for neuromorphic image sensing application. Mater. Today Commun. 2022, 33, 104232. [Google Scholar] [CrossRef]

- Dhanabalan, S.C.; Dhanabalan, B.; Ponraj, J.S.; Bao, Q.; Zhang, H. 2D-Materials-Based Quantum Dots: Gateway Towards Next-Generation Optical Devices. Adv. Opt. Mater. 2017, 5, 1700257. [Google Scholar] [CrossRef]

- Hao, K. The computing power needed to train AI is now rising seven times faster than ever before. In MIT Technology Review; MIT: Cambridge, MA, USA, 2019. [Google Scholar]

- Hills, G.; Lau, C.; Wright, A.; Fuller, S.; Bishop, M.D.; Srimani, T.; Kanhaiya, P.; Ho, R.; Amer, A.; Stein, Y.; et al. Modern microprocessor built from complementary carbon nanotube transistors. Nature 2019, 572, 595–602. [Google Scholar] [CrossRef]

- Elzein, B. Nano Revolution: "Tiny tech, big impact: How nanotechnology is driving SDGs progress". Heliyon 2024, 10, e31393. [Google Scholar] [CrossRef]

- Rodrigues, J.F., Jr.; Paulovich, F.V.; De Oliveira, M.C.; de Oliveira, O.N., Jr. On the convergence of nanotechnology and Big Data analysis for computer-aided diagnosis. Nanomedicine 2016, 11, 959–982. [Google Scholar] [CrossRef]

- Prakash, P.; Sundaram, K.M.; Bennet, M.A. A review on carbon nanotube field effect transistors (CNTFETs) for ultra-low power applications. Renew. Sustain. Energy Rev. 2018, 89, 194–203. [Google Scholar] [CrossRef]

- Magno, M.; Wang, X.; Eggimann, M.; Cavigelli, L.; Benini, L. InfiniWolf: Energy efficient smart bracelet for edge computing with dual source energy harvesting. In Proceedings of the 2020 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 9–13 March 2020. [Google Scholar]

- Wang, M.; Mi, G.; Shi, D.; Bassous, N.; Hickey, D.; Webster, T.J. Nanotechnology and nanomaterials for improving neural interfaces. Adv. Funct. Mater. 2018, 28, 1700905. [Google Scholar] [CrossRef]

- Zhang, H.; Rong, G.; Bian, S.; Sawan, M. Lab-on-chip microsystems for ex vivo network of neurons studies: A review. Front. Bioeng. Biotechnol. 2022, 10, 841389. [Google Scholar] [CrossRef] [PubMed]

- Nwadiugwu, M.C. Neural networks, artificial intelligence and the computational brain. arXiv 2020, arXiv:2101.08635. [Google Scholar]

- Liu, S.E. Synthesis, Fabrication, and Characterization of Two-Dimensional Neuromorphic Electronic Nanomaterials. Ph.D. Thesis, Northwestern University, Evanston, IL, USA, 2024. [Google Scholar]

- Anthony, S. IBM Cracks Open a New Era of Computing with Brain-Like Chip: 4096 Cores, 1 Million Neurons, 5.4 Billion Transistors. 2014. Available online: https://www.extremetech.com/extreme/187612-ibm-cracks-open-a-new-era-of-computing-with-brain-like-chip-4096-cores-1-million-neurons-5-4-billion-transistors (accessed on 27 August 2024).

- Xu, X.; Ran, B.; Jiang, N.; Xu, L.; Huan, P.; Zhang, X.; Li, Z. A systematic review of ultrasonic techniques for defects detection in construction and building materials. Measurement 2024, 226, 114181. [Google Scholar] [CrossRef]

- Datta, G.; Kundu, S.; Jaiswal, A.R.; Beerel, P.A. ACE-SNN: Algorithm-hardware co-design of energy-efficient & low-latency deep spiking neural networks for 3d image recognition. Front. Neurosci. 2022, 16, 815258. [Google Scholar]

- Vasilache, A.; Nitzsche, S.; Floegel, D.; Schuermann, T.; von Dosky, S.; Bierweiler, T.; Mußler, M.; Kälber, F.; Hohmann, S.; Becker, J. Low-Power Vibration-Based Predictive Maintenance for Industry 4.0 using Neural Networks: A Survey. arXiv 2024, arXiv:2408.00516. [Google Scholar]

- Buckley, T.; Ghosh, B.; Pakrashi, V. A feature extraction & selection benchmark for structural health monitoring. Struct. Health Monit. 2023, 22, 2082–2127. [Google Scholar]

- Hu, Y.; Dong, J.; Zhang, G.; Wu, Y.; Rong, H.; Zhu, M. Cancer gene selection with adaptive optimization spiking neural p systems and hybrid classifiers. J. Membr. Comput. 2023, 5, 238–251. [Google Scholar] [CrossRef]

- Wu, X.; Dang, B.; Zhang, T.; Wu, X.; Yang, Y. Spatiotemporal audio feature extraction with dynamic memristor-based time-surface neurons. Sci. Adv. 2024, 10, eadl2767. [Google Scholar] [CrossRef]

- Lin, S. Representation Learning on Brain Data; University of California: Santa Barbara, CA, USA, 2022. [Google Scholar]

- Song, Y.; Guo, L.; Man, M.; Wu, Y. The spiking neural network based on fMRI for speech recognition. Pattern Recognit. 2024, 155, 110672. [Google Scholar] [CrossRef]

- Wang, J. Training multi-layer spiking neural networks with plastic synaptic weights and delays. Front. Neurosci. 2024, 17, 1253830. [Google Scholar] [CrossRef] [PubMed]

- Herdiana, Y.; Wathoni, N.; Shamsuddin, S.; Muchtaridi, M. Scale-up polymeric-based nanoparticles drug delivery systems: Development and challenges. OpenNano 2022, 7, 100048. [Google Scholar] [CrossRef]

- Tao, H.; Wu, T.; Aldeghi, M.; Wu, T.C.; Aspuru-Guzik, A.; Kumacheva, E. Nanoparticle synthesis assisted by machine learning. Nat. Rev. Mater. 2021, 6, 701–716. [Google Scholar] [CrossRef]

- Roco, M.C.; Grainger, D.; Alvarez, P.J.; Badesha, S.; Castranova, V.; Ferrari, M.; Godwin, H.; Grodzinski, P.; Morris, J.; Savage, N.; et al. Nanotechnology environmental, health, and safety issues. In Nanotechnology Research Directions for Societal Needs in 2020: Retrospective and Outlook; Springer: Berlin/Heidelberg, Germany, 2011; pp. 159–220. [Google Scholar]

- Masson, J.-F.; Biggins, J.S.; Ringe, E. Machine learning for nanoplasmonics. Nat. Nanotechnol. 2023, 18, 111–123. [Google Scholar] [CrossRef]

- Shah, V. Towards Efficient Software Engineering in the Era of AI and ML: Best Practices and Challenges. Int. J. Comput. Sci. Technol. 2019, 3, 63–78. [Google Scholar]

- Ur Rehman, I.; Ullah, I.; Khan, H.; Guellil, M.S.; Koo, J.; Min, J.; Habib, S.; Islam, M.; Lee, M.Y. A comprehensive systematic literature review of ML in nanotechnology for sustainable development. Nanotechnol. Rev. 2024, 13, 20240069. [Google Scholar] [CrossRef]

- Su, Z.; Tang, G.; Huang, R.; Qiao, Y.; Zhang, Z.; Dai, X. Based on Medicine, The Now and Future of Large Language Models. Cell. Mol. Bioeng. 2024, 17, 263–277. [Google Scholar] [CrossRef]

- Ferrara, E. Fairness and Bias in Artificial Intelligence: A Brief Survey of Sources, Impacts, and Mitigation Strategies. Sci 2024, 6, 3. [Google Scholar] [CrossRef]

- Islam, M.R.; Ahmed, M.U.; Barua, S.; Begum, S. A systematic review of explainable artificial intelligence in terms of different application domains and tasks. Appl. Sci. 2022, 12, 1353. [Google Scholar] [CrossRef]

- Tovar-Lopez, F.J. Recent progress in micro- and nanotechnology-enabled sensors for biomedical and environmental challenges. Sensors 2023, 23, 5406. [Google Scholar] [CrossRef]

- Amarasinghe, S.; Campbell, D.; Carlson, W.; Chien, A.; Dally, W.; Elnozahy, E.; Hall, M.; Harrison, R.; Harrod, W.; Hill, K.; et al. Exascale Software Study: Software Challenges in Extreme Scale Systems. DARPA IPTO, Air Force Research Labs, Tech. Rep. 2009, pp. 1–153. Available online: https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=9be173d1c4b4cf091c4ed027d6e396780c7c8f8f (accessed on 27 August 2024).

- Lu, J.-C.; Jeng, S.-L.; Wang, K. A review of statistical methods for quality improvement and control in nanotechnology. J. Qual. Technol. 2009, 41, 148–164. [Google Scholar] [CrossRef]

- Li, R.; Gong, Y.; Huang, H.; Zhou, Y.; Mao, S.; Wei, Z.; Zhang, Z. New advancements, challenges and opportunities of nanophotonics for neuromorphic computing: A state-of-the-art review. arXiv 2023, arXiv:2311.09767. [Google Scholar]

- Boulogeorgos, A.A.A.; Trevlakis, S.E.; Tegos, S.A.; Papanikolaou, V.K.; Karagiannidis, G.K. Machine learning in nano-scale biomedical engineering. IEEE Trans. Mol. Biol. Multi-Scale Commun. 2020, 7, 10–39. [Google Scholar] [CrossRef]

- Kardani, S.L. Nanocarrier-based formulations: Regulatory Challenges, Ethical and Safety Considerations in Pharmaceuticals. Asian J. Pharm. (AJP) 2024, 18. [Google Scholar] [CrossRef]

- Amutha, C.; Gopan, A.; Pushbalatatha, I.; Ragavi, M.; Reneese, J.A. Nanotechnology and Governance: Regulatory Framework for Responsible Innovation. In Nanotechnology in Societal Development; Springer: Berlin/Heidelberg, Germany, 2024; pp. 481–503. [Google Scholar]

- Gutierrez, R., Jr. Guiding the Next Technological Revolution: Principles for Responsible AI and Nanotech Progress. In Artificial Intelligence in the Age of Nanotechnology; IGI Global: Hershey, PA, USA, 2024; pp. 210–232. [Google Scholar]

- Chen, G.; Tang, D.-M. Machine Learning as a “Catalyst” for Advancements in Carbon Nanotube Research. Nanomaterials 2024, 14, 1688. [Google Scholar] [CrossRef]

- Goyal, S.; Mondal, S.; Mohanty, S.; Katari, V.; Sharma, H.; Sahu, K.K. AI- and ML-based Models for Predicting Remaining Useful Life (RUL) of Nanocomposites and Reinforced Laminated Structures. In Fracture Behavior of Nanocomposites and Reinforced Laminate Structures; Springer: Berlin/Heidelberg, Germany, 2024; pp. 385–425. [Google Scholar]

- Colón-Rodríguez, C.J. Shedding Light on Healthcare Algorithmic and Artificial Intelligence Bias; US Department of Health and Human Services Office of Minority Health: Rockville, MD, USA, 2023. [Google Scholar]

- Bayda, S.; Adeel, M.; Tuccinardi, T.; Cordani, M.; Rizzolio, F. The history of nanoscience and nanotechnology: From chemical–physical applications to nanomedicine. Molecules 2019, 25, 112. [Google Scholar] [CrossRef]

- Hussain, M. Sustainable Machine Vision for Industry 4.0: A Comprehensive Review of Convolutional Neural Networks and Hardware Accelerators in Computer Vision. AI 2024, 5, 1324–1356. [Google Scholar] [CrossRef]

- Mousavizadegan, M.; Firoozbakhtian, A.; Hosseini, M.; Ju, H. Machine learning in analytical chemistry: From synthesis of nanostructures to their applications in luminescence sensing. TrAC Trends Anal. Chem. 2023, 167, 117216. [Google Scholar] [CrossRef]

- Yadav, A.; Yadav, K.; Ahmad, R.; Abd-Elsalam, K.A. Emerging Frontiers in Nanotechnology for Precision Agriculture: Advancements, Hurdles and Prospects. Agrochemicals 2023, 2, 220–256. [Google Scholar] [CrossRef]

| Parameters | Nanomaterial/Technology | ML Benefits | Applications |
|---|---|---|---|
| Hardware Acceleration | Graphene-based transistors, quantum dots | Can operate at higher speeds and with greater efficiency than conventional silicon-based transistors, reducing power consumption and increasing data-transfer speed for ML applications. | High-speed ML applications, especially deep learning models and real-time image processing. |
| Data Storage | Nanowire-based memristors, molecular memory | Enable high-density storage in a small footprint, supporting the large datasets required for ML with reduced power requirements. | Compact data storage for ML models, large-scale data centers, neuromorphic computing hardware. |
| Neuromorphic Computing | Memristors (e.g., nanocrystalline ZnO, TiO₂) | Mimic synaptic functions, providing faster data processing and enabling ML algorithms to learn in a manner inspired by biological neurons. | Pattern recognition, autonomous navigation, sensor data processing for ML and AI applications. |
| Data Processing | Spintronics, nanosensors | High-speed data access, reduced latency, and energy-efficient processing; spintronic devices exploit electron spin for faster data retrieval. | ML-based edge computing, real-time environmental monitoring, and health diagnostics. |
| Energy Efficiency | Graphene supercapacitors, thermoelectric materials | Provide rapid energy discharge and reduce overall power consumption, supporting sustainable and efficient ML operations. | Edge computing devices, energy-limited applications, and high-performance ML hardware. |
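The data-storage and neuromorphic rows above rest on memristor arrays that compute where they store data. The usual way to see why is the idealized crossbar: each cell's conductance encodes a weight, Ohm's law gives each cell's current, and Kirchhoff's current law sums the currents along each column, so a single read performs a matrix-vector product. The Python sketch below simulates only that idealized behavior. The conductance limits `G_MIN`/`G_MAX`, the differential-pair mapping of signed weights, and all function names are illustrative assumptions, not details of the cited devices; real arrays also suffer wire resistance, device-to-device variability, and a limited number of programmable conductance levels, none of which are modeled here.

```python
from typing import List, Tuple

Matrix = List[List[float]]

# Illustrative device limits in siemens (assumed values, not taken from the cited works).
G_MIN = 1e-6
G_MAX = 1e-4


def weights_to_conductances(weights: Matrix, w_max: float) -> Tuple[Matrix, Matrix]:
    """Store each signed weight w as a differential pair, so that G_plus - G_minus = scale * w."""
    scale = (G_MAX - G_MIN) / w_max  # siemens per unit weight
    g_plus: Matrix = []
    g_minus: Matrix = []
    for row in weights:
        if any(abs(w) > w_max for w in row):
            raise ValueError("weight magnitude exceeds w_max; it cannot be programmed")
        # Positive part goes to the "plus" device, negative part to the "minus" device.
        g_plus.append([G_MIN + scale * max(w, 0.0) for w in row])
        g_minus.append([G_MIN + scale * max(-w, 0.0) for w in row])
    return g_plus, g_minus


def crossbar_currents(g: Matrix, v: List[float]) -> List[float]:
    """Ideal crossbar read: row i is driven at voltage v[i] and column j collects
    I_j = sum_i g[i][j] * v[i] (Ohm's law per cell, Kirchhoff's current law per column)."""
    return [sum(g[i][j] * v[i] for i in range(len(v))) for j in range(len(g[0]))]


def analog_matvec(weights: Matrix, x: List[float], w_max: float) -> List[float]:
    """Compute y_j = sum_i x[i] * weights[i][j] by subtracting the currents of two crossbars."""
    g_plus, g_minus = weights_to_conductances(weights, w_max)
    i_plus = crossbar_currents(g_plus, x)
    i_minus = crossbar_currents(g_minus, x)
    scale = (G_MAX - G_MIN) / w_max
    # The G_MIN offsets cancel in the subtraction; dividing by scale returns weight units.
    return [(ip - im) / scale for ip, im in zip(i_plus, i_minus)]


if __name__ == "__main__":
    W = [[0.5, -1.0],
         [2.0, 0.25],
         [-0.5, 1.5]]
    x = [1.0, 0.5, -2.0]  # input vector applied as read voltages
    print(analog_matvec(W, x, w_max=2.0))  # approximately [2.5, -3.875]
```

The differential pair is one common way to represent negative weights with devices whose conductance is always positive; in hardware the subtraction and rescaling would be done by peripheral circuitry (for example, current sensing followed by an analog-to-digital converter) rather than in software.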

| Goal | Innovation | Advantages | Applications | Storage Density | Power Consumption | Reference |
|---|---|---|---|---|---|---|
| To create memory devices with high storage density and low power consumption for neuromorphic computing | Develop novel memristors using an MXene composite with nanocrystals to emulate synaptic properties and enhance data density | High-density data storage; low power consumption; tunable gate properties; integration with existing electronics | Neuromorphic computing systems | High (e.g., 10 Tb/in²) | Very low (<1 mW) | [70] |
| To build scalable parylene-based memristors with improved memory stability for ML models | Optimize an Ag nanocomposite in a parylene-based memristor structure for enhanced stability and reduced data loss | Reduced internal stochasticity; improved memory stability for ML applications; simplified architecture for neural networks | ML hardware, data storage | Moderate (e.g., 5 Tb/in²) | Moderate (5 mW) | [71] |
| To create nanocrystalline ZnO-based memristors for compact, high-density storage in AI hardware | Implement ZnO-based memristors with multilayer nanostructures for improved storage capacity and reliability | Enhanced endurance and data retention; large high-to-low resistance ratio; suitability for compact AI devices; potential for replicating short-term synaptic plasticity | AI hardware, consumer electronics | High (e.g., 8 Tb/in²) | Low (2 mW) | [37] |
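To put the areal densities in the table into nanoscale terms, they can be converted to the average footprint per bit and, for a square array, to a cell pitch. The short Python sketch below does this arithmetic. It assumes decimal terabits (1 Tb = 10¹² bits), a square grid of identical cells, and no area spent on peripheral circuitry, so the computed pitch is an upper bound on what a real array would need; the function names are hypothetical.

```python
import math

# 1 inch = 25.4 mm = 2.54e7 nm (exact by definition).
NM_PER_INCH = 2.54e7


def area_per_bit_nm2(tbit_per_in2: float) -> float:
    """Average footprint of one bit in nm^2 for an areal density given in Tb/in^2.

    Assumes decimal terabits (1 Tb = 1e12 bits).
    """
    if tbit_per_in2 <= 0:
        raise ValueError("density must be positive")
    bits_per_in2 = tbit_per_in2 * 1e12
    return NM_PER_INCH ** 2 / bits_per_in2


def cell_pitch_nm(tbit_per_in2: float, bits_per_cell: int = 1) -> float:
    """Pitch of a square array whose cells each store `bits_per_cell` bits.

    Peripheral circuitry is ignored, so a real array would need an even smaller pitch.
    """
    return math.sqrt(area_per_bit_nm2(tbit_per_in2) * bits_per_cell)


if __name__ == "__main__":
    for ref, density in [("[70]", 10.0), ("[71]", 5.0), ("[37]", 8.0)]:
        print(ref, f"{area_per_bit_nm2(density):.1f} nm^2/bit",
              f"pitch {cell_pitch_nm(density):.1f} nm")
```

At 10 Tb/in², for example, each bit occupies about 65 nm², which corresponds to a pitch of roughly 8 nm for single-bit cells; multilevel cells (the `bits_per_cell` parameter) relax that requirement by the square root of the number of bits stored per cell.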

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tripathy, A.; Patne, A.Y.; Mohapatra, S.; Mohapatra, S.S. Convergence of Nanotechnology and Machine Learning: The State of the Art, Challenges, and Perspectives. Int. J. Mol. Sci. 2024, 25, 12368. https://doi.org/10.3390/ijms252212368

Tripathy A, Patne AY, Mohapatra S, Mohapatra SS. Convergence of Nanotechnology and Machine Learning: The State of the Art, Challenges, and Perspectives. International Journal of Molecular Sciences. 2024; 25(22):12368. https://doi.org/10.3390/ijms252212368

Chicago/Turabian Style: Tripathy, Arnav, Akshata Y. Patne, Subhra Mohapatra, and Shyam S. Mohapatra. 2024. "Convergence of Nanotechnology and Machine Learning: The State of the Art, Challenges, and Perspectives" International Journal of Molecular Sciences 25, no. 22: 12368. https://doi.org/10.3390/ijms252212368

APA Style: Tripathy, A., Patne, A. Y., Mohapatra, S., & Mohapatra, S. S. (2024). Convergence of Nanotechnology and Machine Learning: The State of the Art, Challenges, and Perspectives. International Journal of Molecular Sciences, 25(22), 12368. https://doi.org/10.3390/ijms252212368