A Neural Network Approach for Building An Obstacle Detection Model by Fusion of Proximity Sensors Data

Abstract

1. Introduction

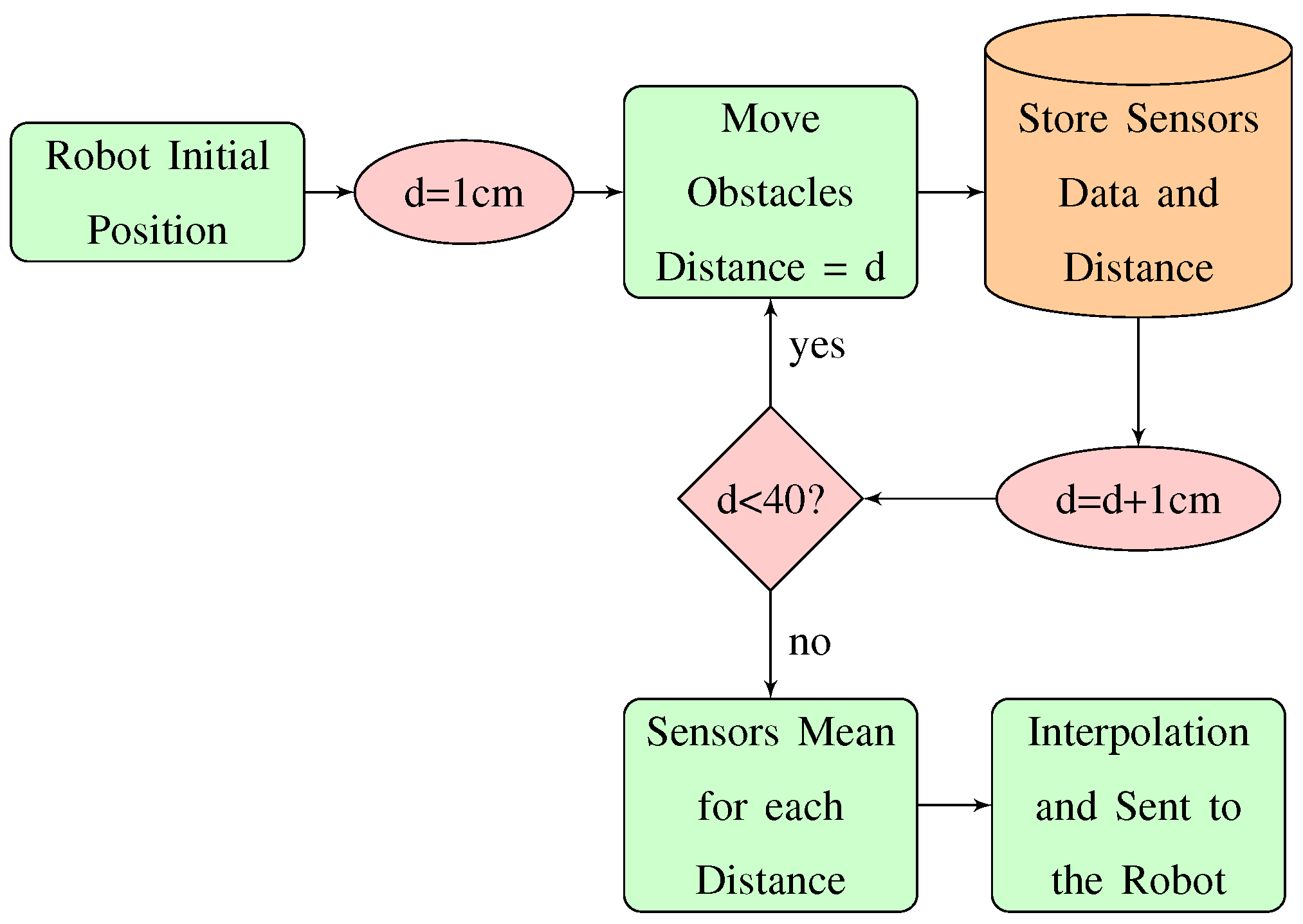

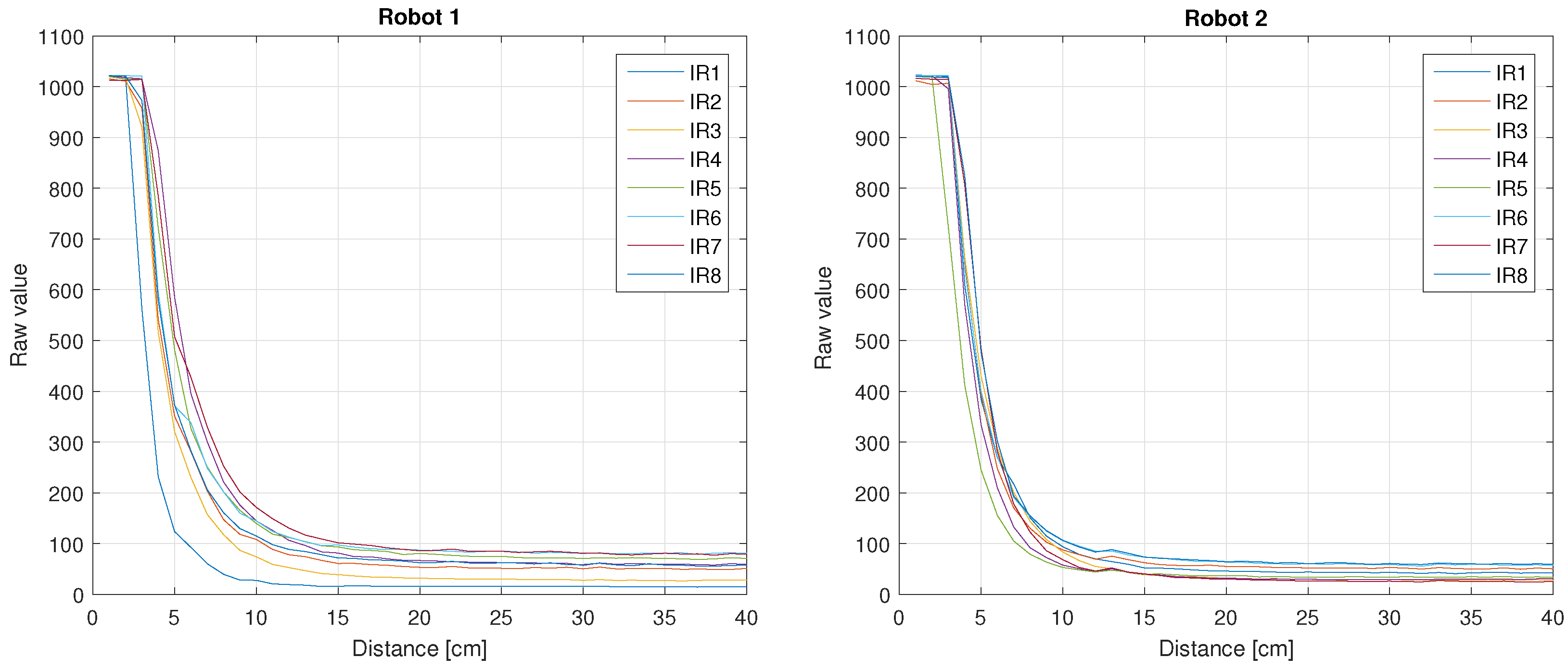

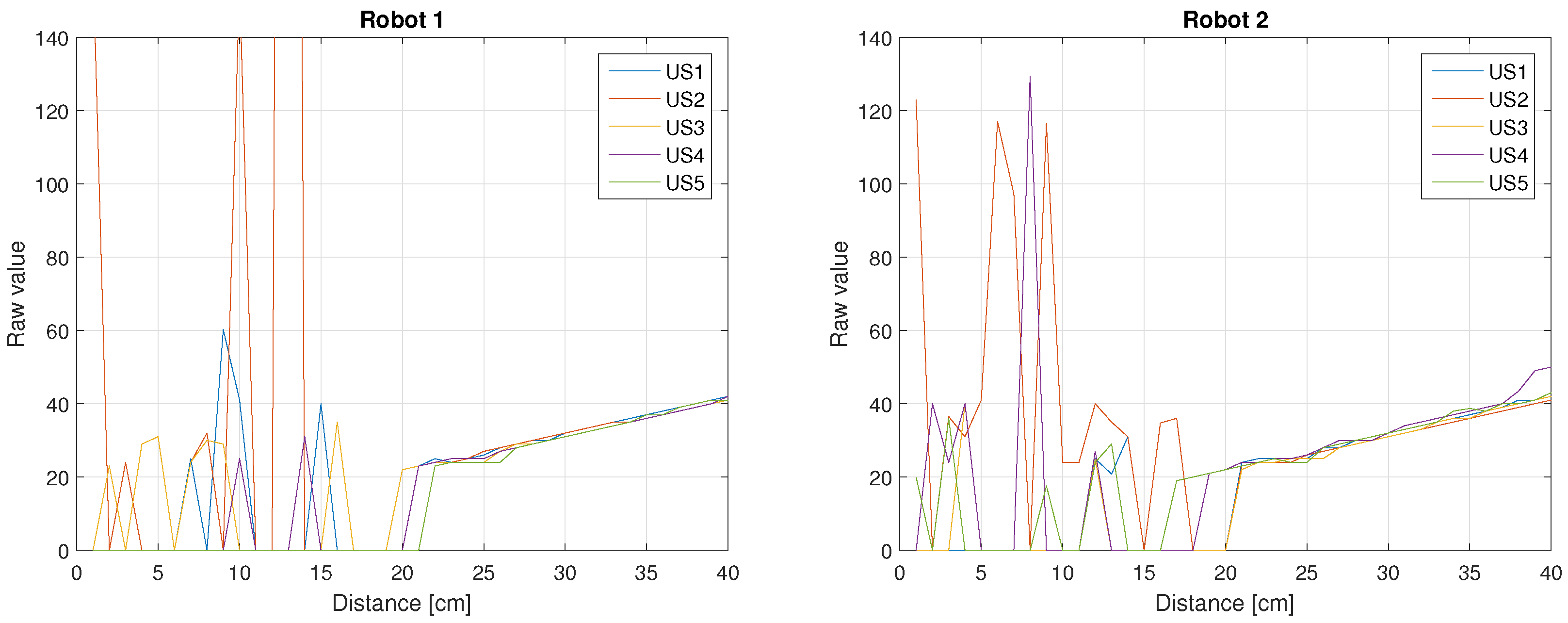

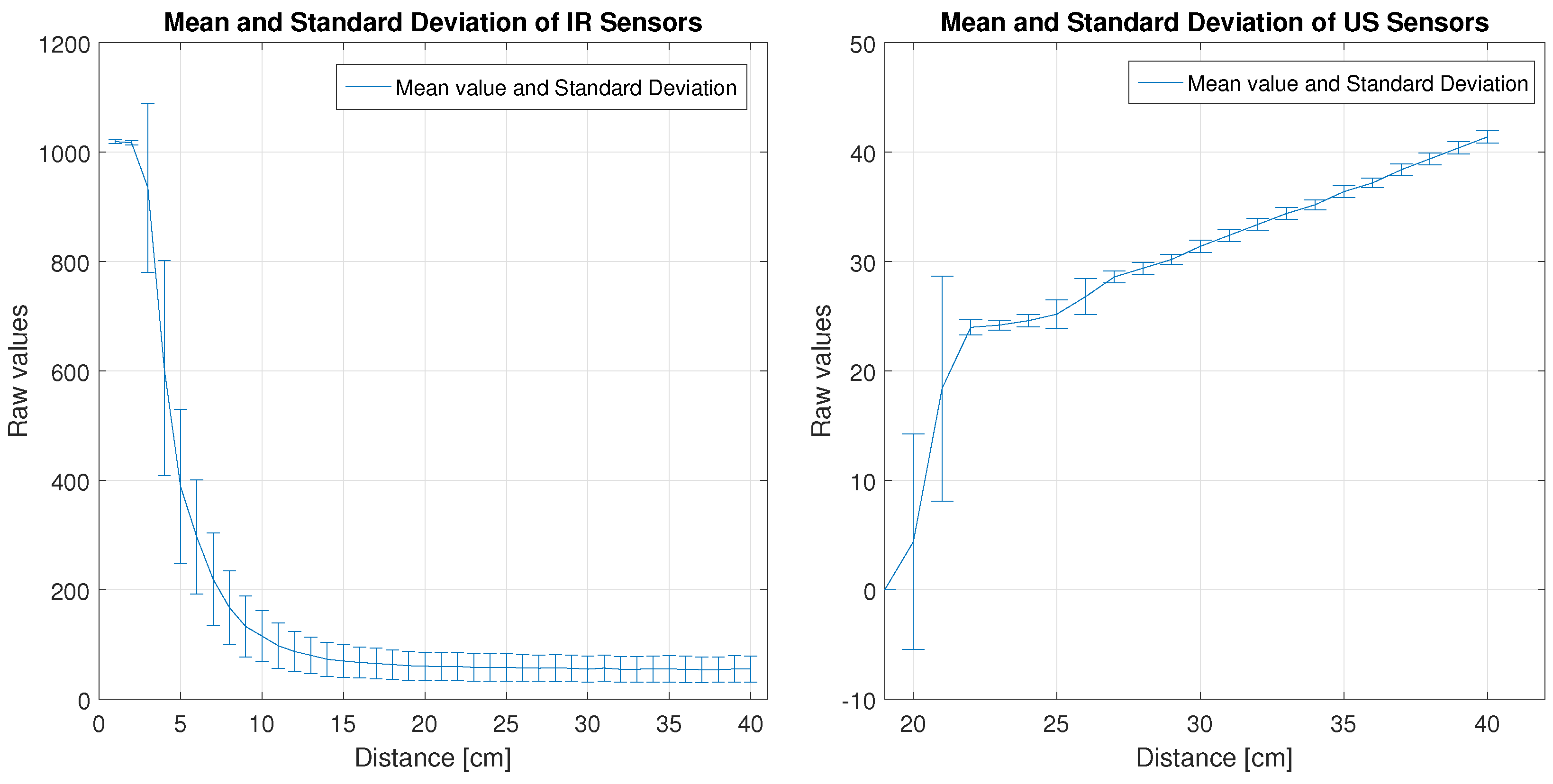

2. Traditional Calibration of Proximity Sensors

3. Automatic Smart Calibration Method

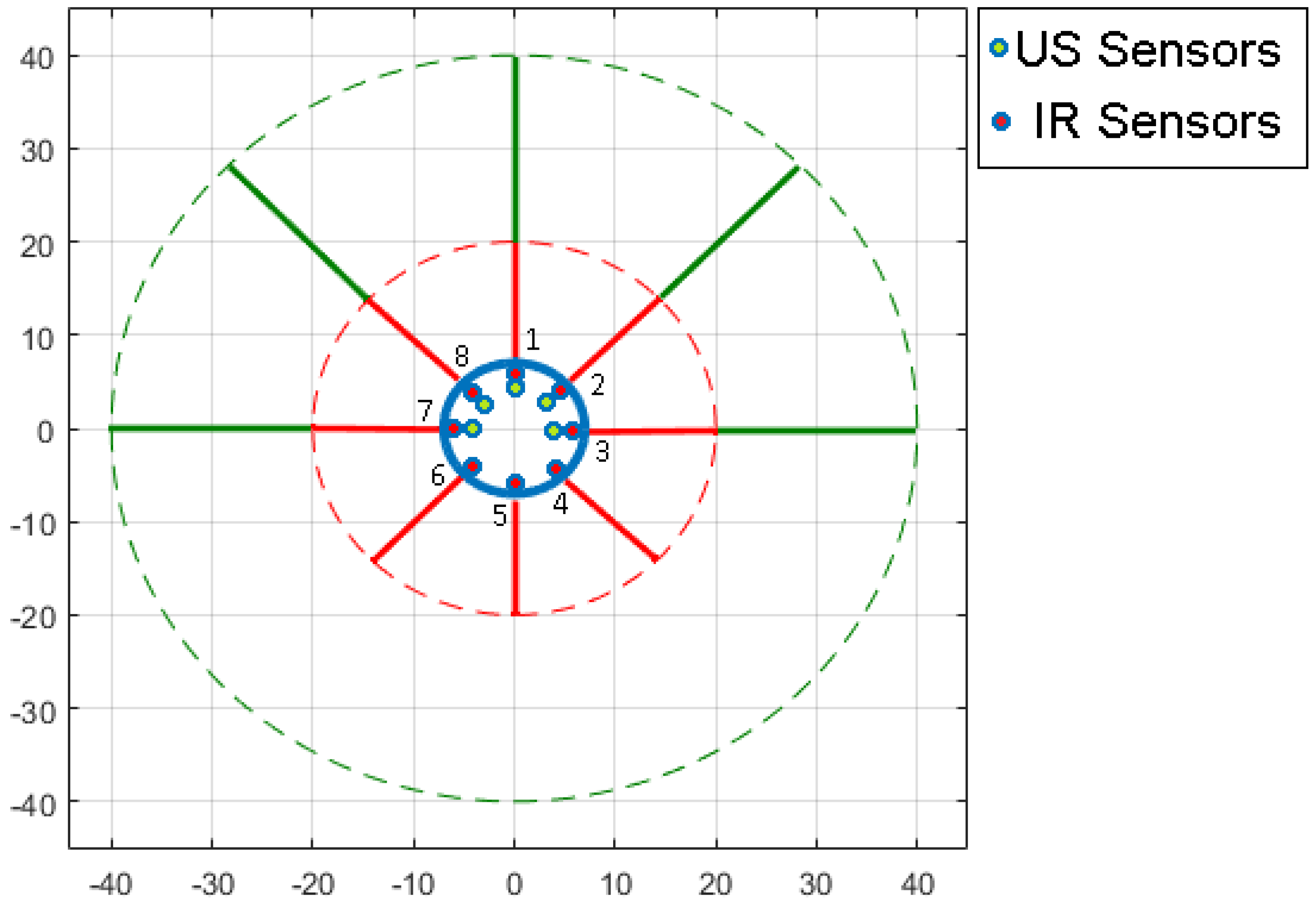

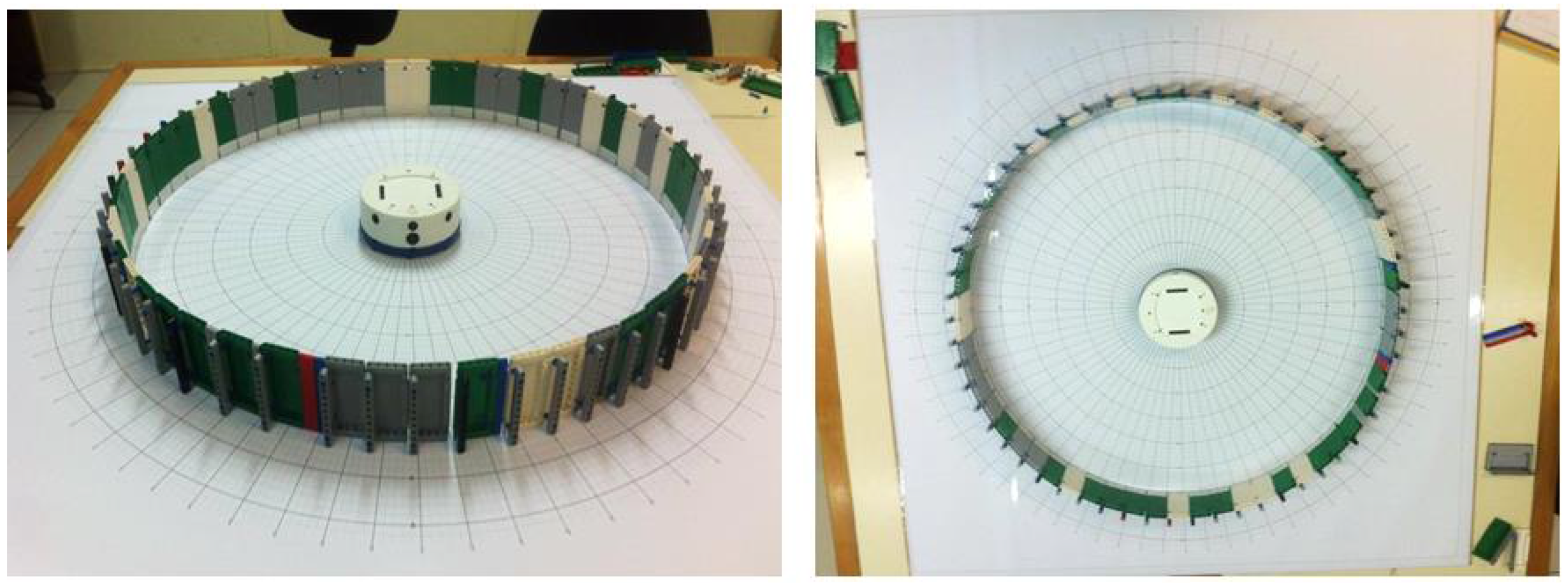

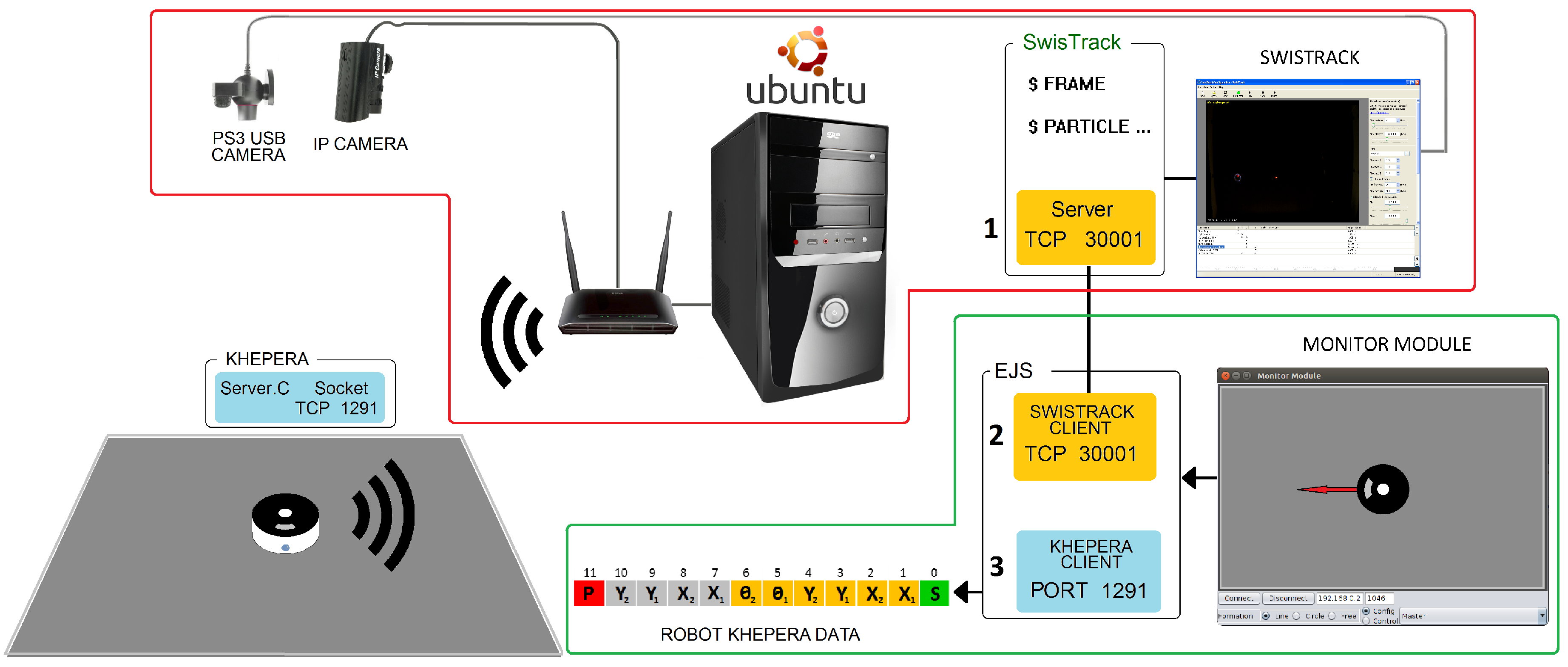

3.1. Platform Used in the Laboratory

3.2. Automatic Smart Calibration Method

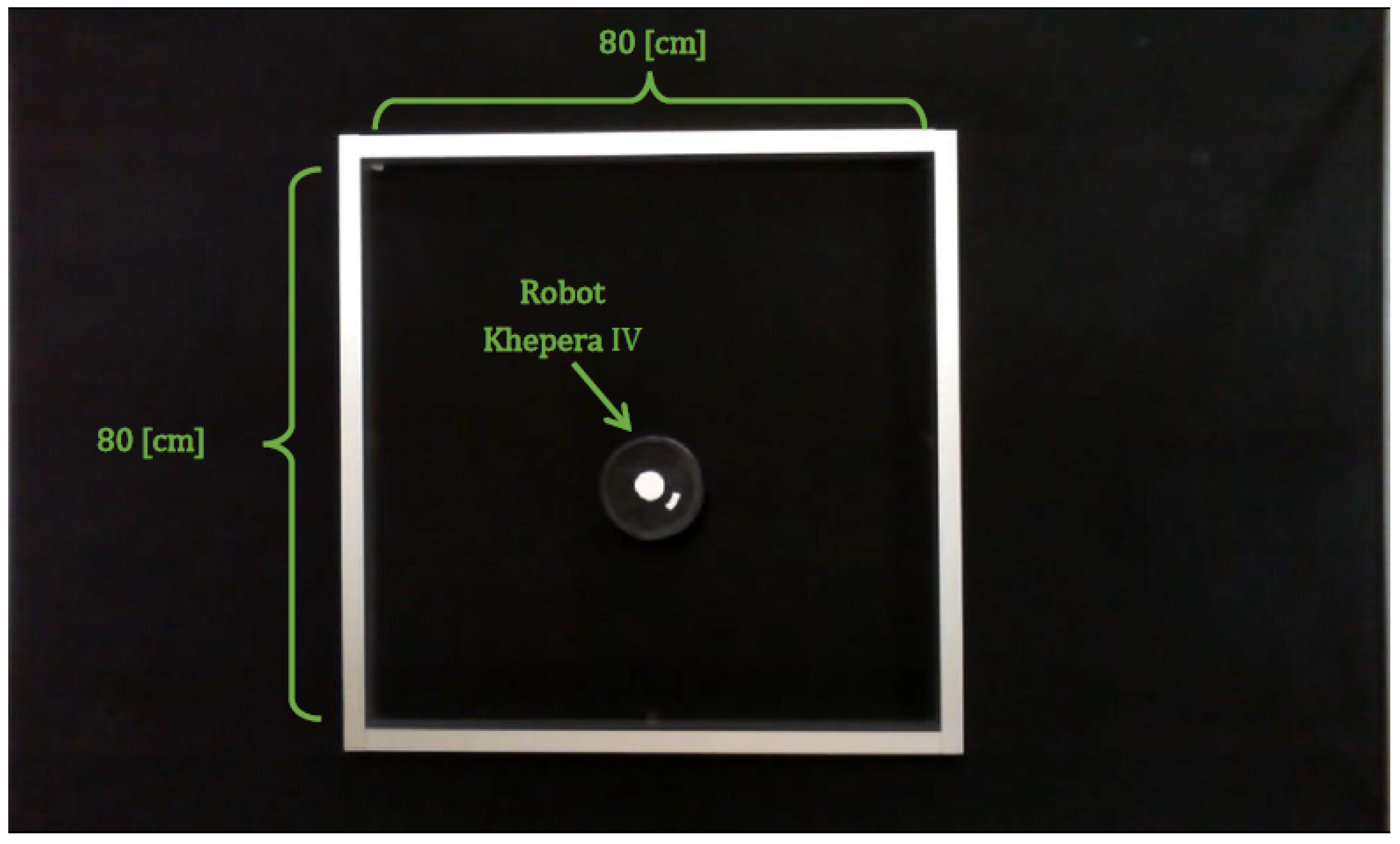

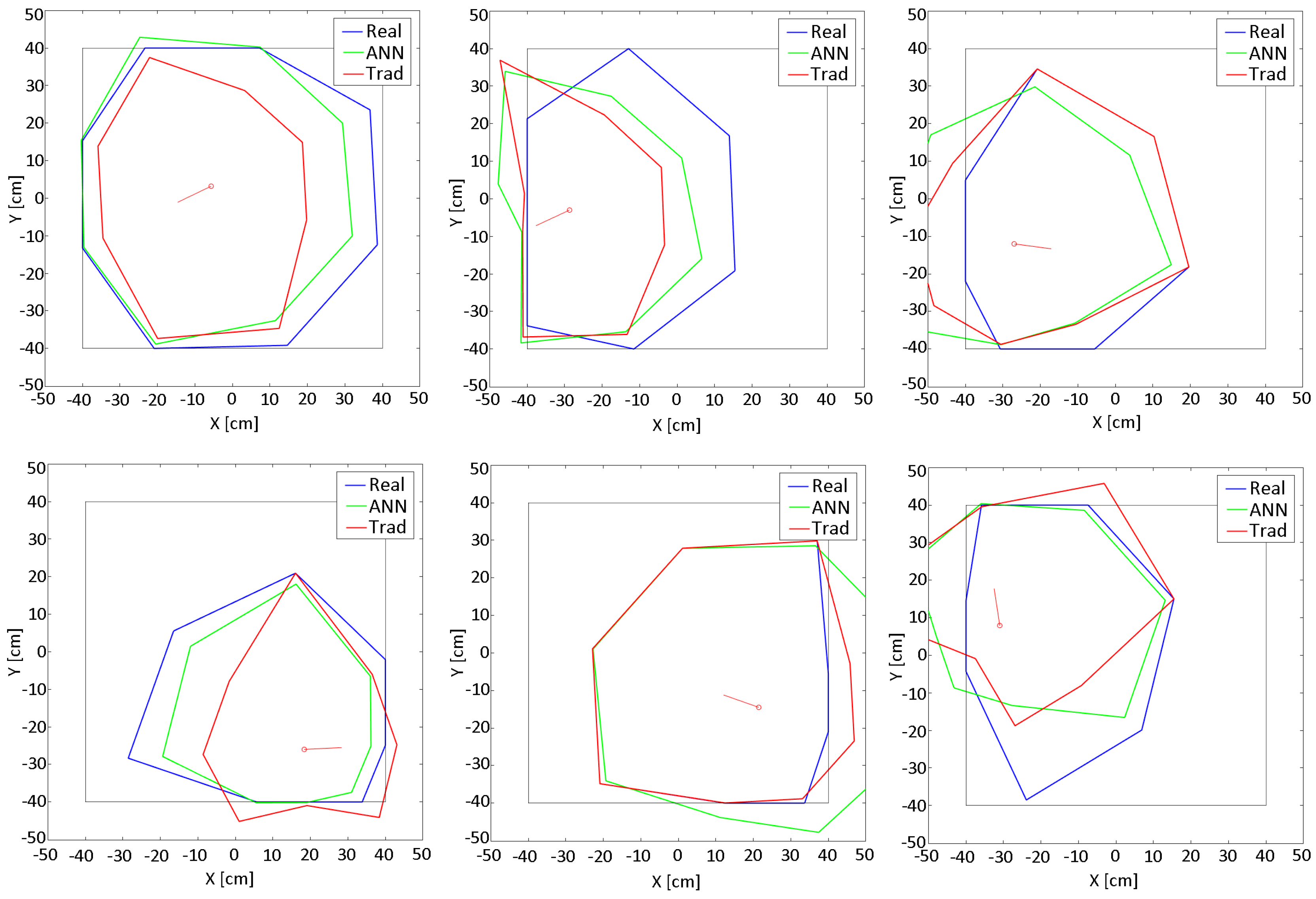

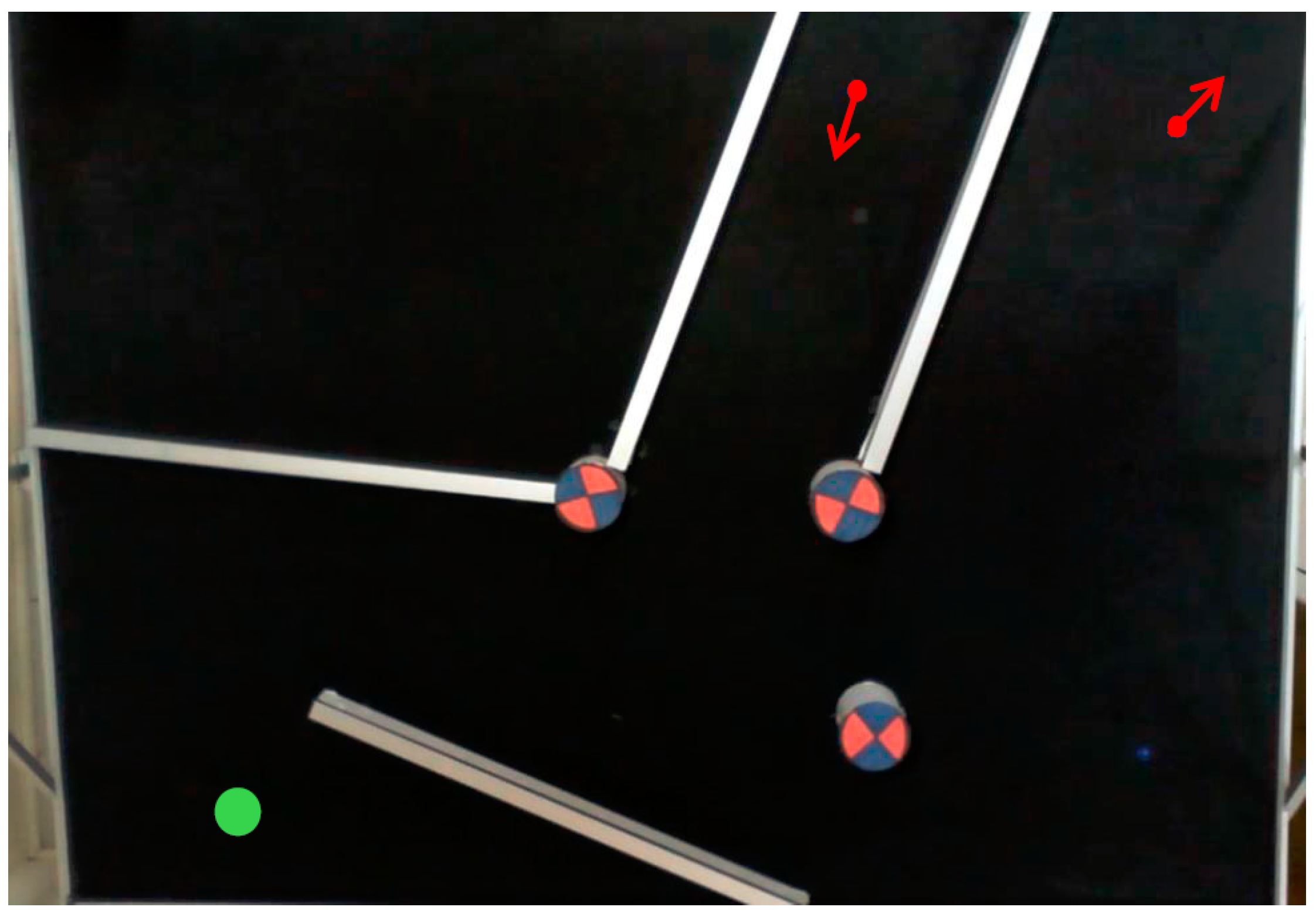

- Set-up of the workspace: A square enclosure of walls (80 cm per side) is placed in the Arena, and the robot is placed at the center of the square with a known orientation. Note that the model is only valid for distances up to 40 cm. The robot then starts to move, using the Braitenberg algorithm [28] to avoid the obstacles. Figure 8 shows an aerial view of the set-up.
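The Braitenberg algorithm [28] couples the proximity readings directly to the wheel speeds, so that an obstacle on one side speeds up the wheel on that side and slows down the other, turning the robot away. The following Python sketch illustrates that idea for a differential-drive robot. The sensor layout (8 readings every 45°, index 0 at the front, counter-clockwise), the normalisation of readings to [0, 1], `BASE_SPEED` and the weight values are all hypothetical; they are not taken from the paper or from the Khepera IV firmware.

```python
import numpy as np

# Hypothetical layout: 8 proximity readings every 45 degrees,
# index 0 = front, increasing counter-clockwise (index 2 = left, 6 = right).
# Readings are normalised to [0, 1], where 1 means "obstacle very close".
BASE_SPEED = 0.10  # hypothetical cruising speed of each wheel (m/s)

# Hypothetical Braitenberg weights. For left-side sensors the left wheel gets a
# positive weight and the right wheel a negative one, so an obstacle on the
# left makes the robot turn right (away from it), and vice versa.
# The front sensor arbitrarily breaks the tie by turning right.
W_LEFT = np.array([0.10, 0.08, 0.05, 0.0, 0.0, 0.0, -0.05, -0.08])
W_RIGHT = -W_LEFT


def braitenberg_speeds(readings):
    """Map 8 normalised proximity readings to (left, right) wheel speeds."""
    p = np.clip(np.asarray(readings, dtype=float), 0.0, 1.0)
    if p.shape != (8,):
        raise ValueError("expected 8 proximity readings")
    v_left = BASE_SPEED + W_LEFT @ p
    v_right = BASE_SPEED + W_RIGHT @ p
    return float(v_left), float(v_right)
```

With no obstacle both wheels turn at `BASE_SPEED`; a reading of 1 on the left sensor (index 2) gives 0.15 m/s on the left wheel and 0.05 m/s on the right one, so the robot turns right, away from the obstacle.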

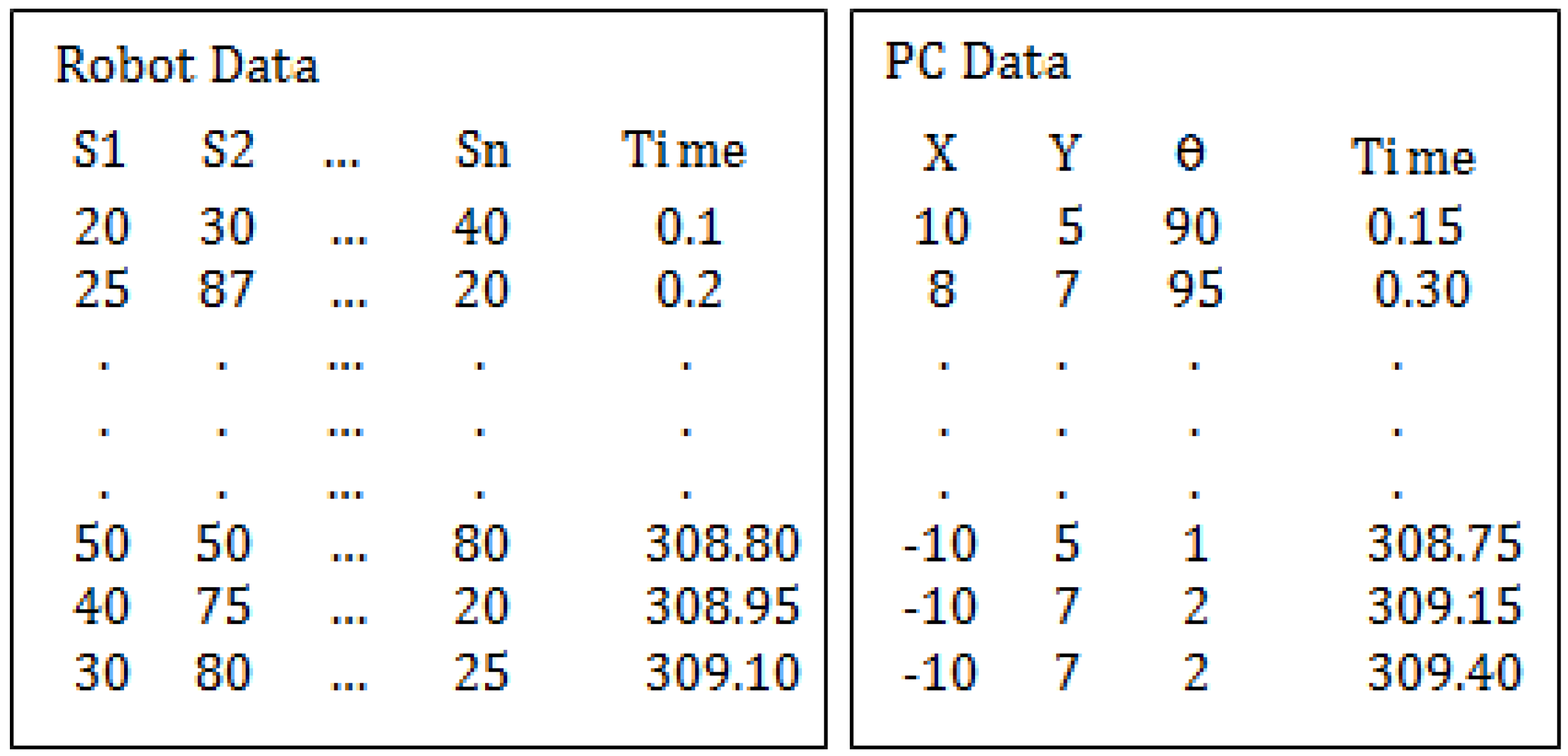

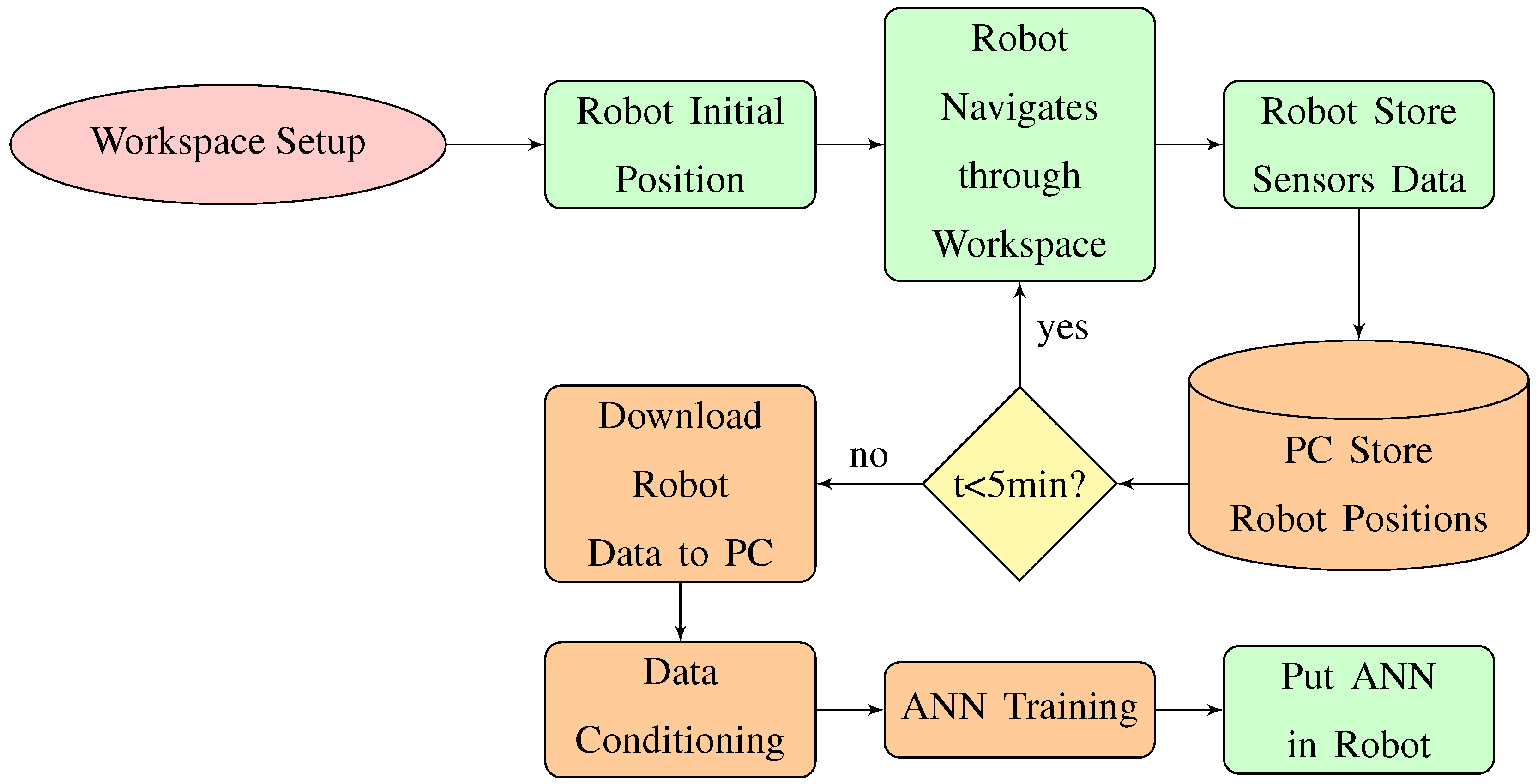

- Data acquisition: During its movement, the robot stores the sensor readings (S1, S2, ..., S13) together with the time. Meanwhile, the PC stores the pose of the robot (coordinates and orientation), also together with the time of the experiment. At the beginning of the process, the clocks of the PC and of the robot both start at 0, but their sampling times differ because they run independently, so the acquired data must be synchronized in time by interpolation. After the experiment finishes, the robot's data is copied to the PC, where a program developed in MATLAB adjusts and synchronizes the two data sets. Figure 9 shows an example of the data stored in the robot and in the PC.

- Data conditioning and synchronization: The data is adjusted by linear interpolation in time, using Equation (1):

  $$
  \begin{aligned}
  x(t) &= x_0 + \frac{x_1 - x_0}{t_1 - t_0}\,(t - t_0),\\
  y(t) &= y_0 + \frac{y_1 - y_0}{t_1 - t_0}\,(t - t_0),\\
  \theta(t) &= \theta_0 + \frac{\theta_1 - \theta_0}{t_1 - t_0}\,(t - t_0),
  \end{aligned}
  \tag{1}
  $$

  where $t_0$ and $t_1$ are the initial and final times of the position data between which the interpolation is made, and $(x_0, y_0, \theta_0)$ and $(x_1, y_1, \theta_1)$ are the poses recorded at those times; $t$ is the evaluated time, which corresponds to a sample of the sensor data; and $x(t)$, $y(t)$ and $\theta(t)$ are the pose of the robot at time $t$. Figure 10 shows the result of this process, in which the robot sensor data and the robot positions are merged.

  Once the position of the robot is obtained, the positions of all the sensors must be computed for each robot position. Since the positions of the sensors depend only on the pose of the robot, they can be calculated with Equation (2).

  Figure 11 represents the distances from the sensors to the walls (in yellow). The black solid lines are the walls, the blue circle is the robot, the small rectangles are the sensors, and the blue arrow represents the orientation of the robot. The dashed lines represent the detection directions of the sensors, and the dashed circle (40 cm radius) represents the maximum detection range of the sensors.

  The distance from each sensor to the walls is obtained from the intersection points (in red) between the detection direction lines and the walls. Equation (3) shows how the coordinates of the intersection point P are calculated for sensors 1, 2 and 3. The process is the same for sensors 7 and 8, only changing the axis, because in this case the intersection is with the wall x = 40. From these intersection points, the distances and the angles are easily calculated with Equation (4). Finally, the obtained data is filtered.
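The synchronization and geometry steps above can be sketched in Python as follows. `np.interp` performs the piecewise-linear interpolation of Equation (1) between the two pose samples that bracket each sensor timestamp; unwrapping the heading first is an addition of this sketch, not something the text describes. The geometry part is only one possible reading of Equations (2) to (4), which are not reproduced in the text. It assumes, hypothetically, that the origin is at the centre of the 80 cm square (walls at x = ±40 cm and y = ±40 cm), that the sensors sit on a circle of radius `SENSOR_RADIUS` around the robot centre every 45° starting at the heading, and that each sensor looks radially outwards. The value of `SENSOR_RADIUS`, the 0 to 7 sensor indexing and all function names are illustrative, not values from the paper. The intersection point P of each sensor is its position plus the returned distance times (cos φ, sin φ).

```python
import numpy as np

HALF_SIDE = 40.0     # assumed origin at the centre: walls at x, y = +/-40 cm
MAX_RANGE = 40.0     # maximum detection range stated in the text (cm)
SENSOR_RADIUS = 7.0  # hypothetical distance from robot centre to each sensor (cm)
SENSOR_ANGLES = np.deg2rad(np.arange(8) * 45.0)  # hypothetical: every 45 deg from heading


def synchronize(t_sensor, t_pose, x, y, theta):
    """Resample the PC pose log at the robot's sensor timestamps.

    Both time vectors must be increasing. np.interp interpolates linearly
    between the two bracketing pose samples and clamps times outside the
    pose log to its first/last value.
    """
    xs = np.interp(t_sensor, t_pose, x)
    ys = np.interp(t_sensor, t_pose, y)
    # Unwrap the heading so interpolation never crosses the +/-pi seam the
    # long way round, then wrap the result back into [-pi, pi].
    th = np.interp(t_sensor, t_pose, np.unwrap(theta))
    return xs, ys, np.arctan2(np.sin(th), np.cos(th))


def sensor_poses(x, y, theta):
    """Position and pointing direction of the 8 sensors for one robot pose."""
    phi = theta + SENSOR_ANGLES
    return x + SENSOR_RADIUS * np.cos(phi), y + SENSOR_RADIUS * np.sin(phi), phi


def ray_to_wall(px, py, phi):
    """Distance along direction phi from a point inside the square to its walls."""
    dx, dy = np.cos(phi), np.sin(phi)
    hits = []
    if abs(dx) > 1e-12:  # heading right hits x = +40, heading left hits x = -40
        hits.append((np.copysign(HALF_SIDE, dx) - px) / dx)
    if abs(dy) > 1e-12:  # heading up hits y = +40, heading down hits y = -40
        hits.append((np.copysign(HALF_SIDE, dy) - py) / dy)
    return min(hits)  # the ray leaves the square through the nearest wall


def true_distances(x, y, theta):
    """Reference distances for the 8 sensors, clamped to the detection range."""
    sx, sy, phi = sensor_poses(x, y, theta)
    d = np.array([ray_to_wall(a, b, c) for a, b, c in zip(sx, sy, phi)])
    return np.minimum(d, MAX_RANGE)
```

With the robot at the centre facing +x, the four axis-aligned sensors are 33 cm from their walls (40 cm minus the assumed 7 cm offset), while the diagonal ones exceed the range and are clamped to 40 cm.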

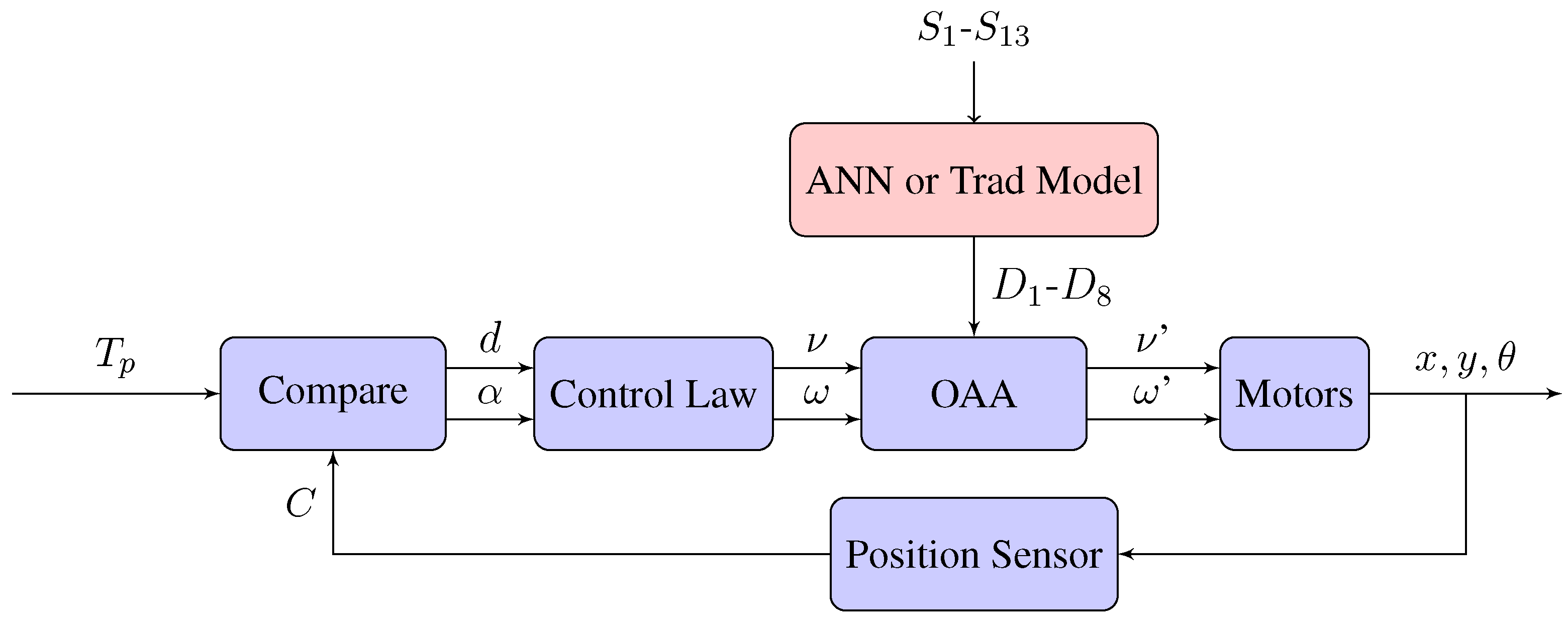

- Artificial Neural Network (ANN): With the calculated distances and the raw sensor data, an ANN is built with the Neural Network Toolbox 9.0 of MATLAB [29] to obtain the model. Figure 12 shows a representation of the implemented ANN, which is trained with the Levenberg-Marquardt method [30]. The input layer receives the 13 raw sensor values (S1–S13: 8 IR + 5 US). The hidden layer has 20 neurons (H1–H20) with the hyperbolic tangent as activation function, and the output layer has 8 neurons with the identity as activation function. The outputs (D1–D8) are the distances to the obstacles at the positions of the sensors (every 45° around the robot). 70% of the acquired data was used for training, 15% for validation and the remaining 15% for testing. Note that the parameters of the ANN (number of neurons in the hidden layer and activation functions) were determined empirically, by trial and error over multiple tests.
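As an illustration of the 13-20-8 architecture described above, the following NumPy sketch implements only the forward pass (tanh hidden layer, identity output layer) and a random 70/15/15 split of the samples. It does not reproduce the MATLAB toolbox, the Levenberg-Marquardt training or any input preprocessing the toolbox may apply; the weights are random placeholders and the function names are hypothetical.

```python
import numpy as np

# Layer sizes from the text: 13 raw inputs (8 IR + 5 US), 20 hidden neurons,
# 8 output distances (one every 45 degrees around the robot).
N_IN, N_HIDDEN, N_OUT = 13, 20, 8


def init_params(rng):
    """Random placeholder weights (the paper trains with Levenberg-Marquardt)."""
    return {
        "W1": rng.normal(0.0, 0.1, size=(N_HIDDEN, N_IN)),
        "b1": np.zeros(N_HIDDEN),
        "W2": rng.normal(0.0, 0.1, size=(N_OUT, N_HIDDEN)),
        "b2": np.zeros(N_OUT),
    }


def forward(params, s):
    """Map raw readings s of shape (n, 13) to estimated distances (n, 8)."""
    h = np.tanh(s @ params["W1"].T + params["b1"])  # hidden layer: tanh
    return h @ params["W2"].T + params["b2"]         # output layer: identity


def split_70_15_15(n_samples, rng):
    """Random train/validation/test index split in a 70/15/15 proportion."""
    idx = rng.permutation(n_samples)
    n_train = int(round(0.70 * n_samples))
    n_val = int(round(0.15 * n_samples))
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]
```

For instance, `split_70_15_15(100, np.random.default_rng(0))` returns index arrays of 70, 15 and 15 samples. The identity output layer lets the network produce unbounded distance values, while the tanh hidden layer supplies the non-linearity needed to fuse the IR and US responses.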

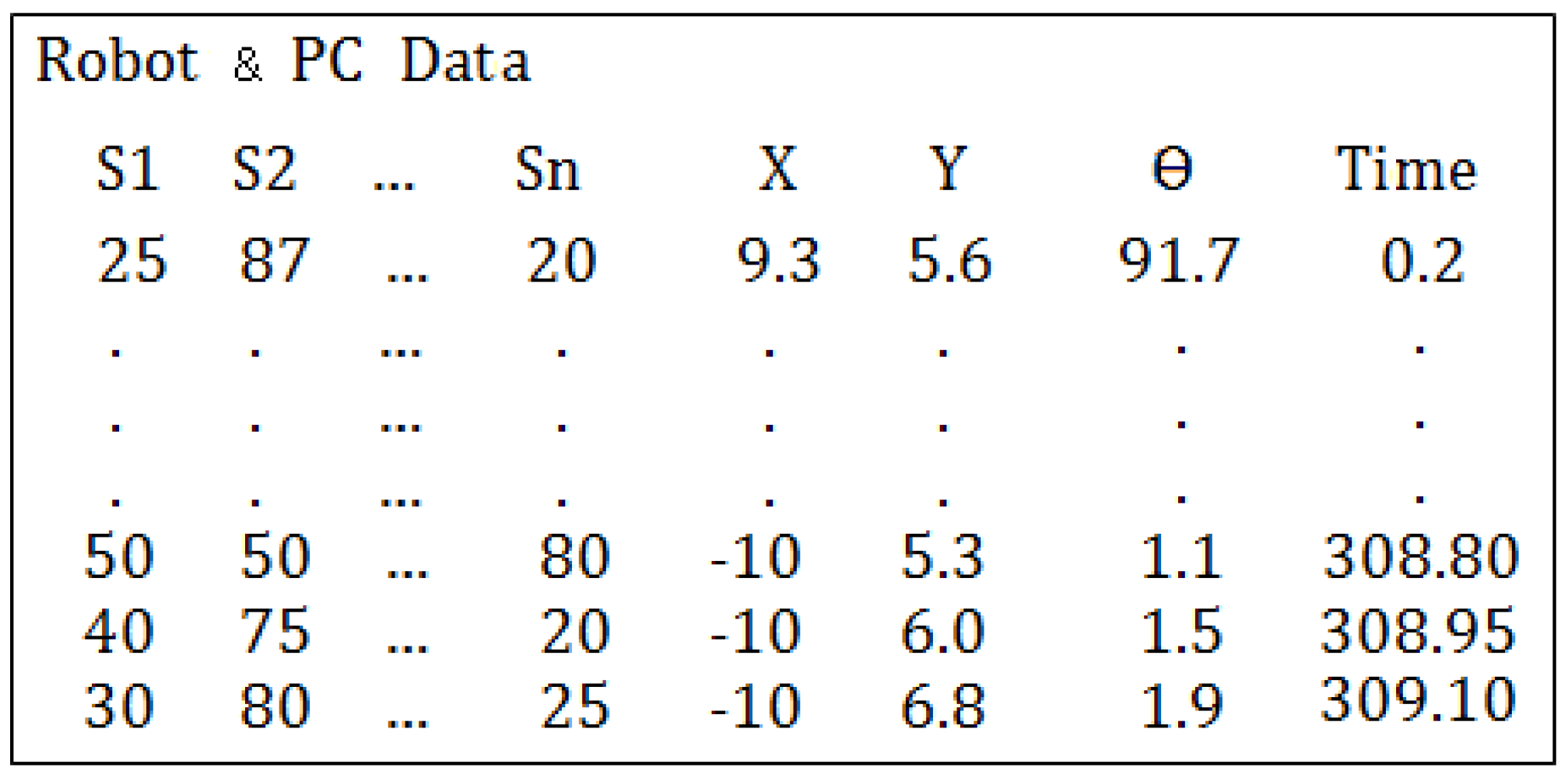

- Testing the resulting model: Figure 13 shows a test carried out in the same workspace in which the model was obtained. The sequence of images shows the displacement of the robot through the scenario. The black lines are the walls of the square (80 cm by 80 cm), and the small red line represents the position and orientation of the robot. The outputs of the models are drawn around the robot: (a) blue lines are the real distances to the obstacles (less than 40 cm, calculated with the intersection process described above); (b) green lines are the results of the ANN model, which takes and fuses the raw sensor inputs to provide the 8 distances; and (c) red lines are the distances to the obstacles obtained with the traditional calibration method.

  As can be seen, the models behave differently in different situations. In general, the best results are obtained for distances below 30 cm. However, there are appreciable differences between the results of the front half of the robot and those of the rear half, because the rear half of the robot has no US sensors. Even so, considering the maximum velocity of this robot and its steering characteristics, these results are more than acceptable compared with the majority of existing obstacle avoidance methods.

  Table 1 shows the Mean Absolute Percentage Error (MAPE) of the previous tests with respect to the real distances. The rows represent the distances (D1–D8) to the obstacles from the positions of the sensors, and the columns give the results of both models (Artificial Neural Network (ANN) and Traditional (Trad)) for Robots 1 and 2.
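The MAPE used in Table 1 can be computed per output distance as $\mathrm{MAPE} = \frac{100}{n}\sum_{k=1}^{n}\left|\frac{d_k - \hat{d}_k}{d_k}\right|$, where $d_k$ are the real distances and $\hat{d}_k$ the model estimates over the $n$ test samples. A minimal NumPy sketch, assuming the real and estimated distances are stored as arrays with one column per output (D1–D8) and that no real distance is zero; how the authors treated samples beyond the 40 cm range is not stated, so no masking is applied here.

```python
import numpy as np


def mape_per_output(real, estimated):
    """Mean Absolute Percentage Error (%) of each column (one column per D_i)."""
    real = np.asarray(real, dtype=float)
    estimated = np.asarray(estimated, dtype=float)
    if np.any(real == 0):
        raise ValueError("MAPE is undefined when a real distance is zero")
    # Relative error of every sample, averaged down each column.
    return 100.0 * np.mean(np.abs((real - estimated) / real), axis=0)
```

For example, real distances `[[10, 20], [20, 40]]` and estimates `[[11, 20], [18, 30]]` give a MAPE of 10% for the first output and 12.5% for the second.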

4. Experiment of Position Control with Obstacles Avoidance

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Zhang, W.; Zelinsky, G.; Samaras, D. Real-time Accurate Object Detection using Multiple Resolutions. In Proceedings of the 11th IEEE International Conference on Computer Vision (ICCV), Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8.

- Ren, C.L.; Chun, C.L. Multisensor Fusion-Based Concurrent Environment Mapping and Moving Object Detection for Intelligent Service Robotics. IEEE Trans. Ind. Electr. 2014, 61, 4043–4051.

- Kaijen, H.; Nangeroni, P.; Huber, M.; Saxena, A.; Ng, A.Y. Reactive grasping using optical proximity sensors. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Kobe, Japan, 12–17 May 2009; pp. 2098–2105.

- Almasri, M.; Alajlan, A.; Elleithy, K. Trajectory Planning and Collision Avoidance Algorithm for Mobile Robotics System. IEEE Sens. J. 2016, 12, 5021–5028.

- Alajlan, M.; Almasri, M.; Elleithy, K. Multi-sensor based collision avoidance algorithm for mobile robot. In Proceedings of the IEEE Long Island Systems, Applications and Technology Conference (LISAT), New York, NY, USA, 1 May 2015; pp. 1–6.

- Almasri, M.; Elleithy, K.; Alajlan, A. Sensor Fusion Based Model for Collision Free Mobile Robot Navigation. Sensors 2016, 16, 24.

- Webster, J.G.; Halit, E. Measurement, Instrumentation, and Sensors Handbook: Spatial, Mechanical, Thermal, and Radiation Measurement, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2017.

- Mustapha, B.; Zayegh, A.; Begg, R. Ultrasonic and infrared sensors performance in a wireless obstacle detection system. In Proceedings of the 1st International Conference on Artificial Intelligence, Modelling and Simulation (AIMS), Kota Kinabalu, Malaysia, 3–5 December 2013; pp. 487–492.

- Adarsh, S.; Kaleemuddin, S.M.; Bose, D.; Ramachandran, K.I. Performance comparison of Infrared and Ultrasonic sensors for obstacles of different materials in vehicle/robot navigation applications. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Bangalore, India, 14–16 July 2016; pp. 1–7.

- Shrivastava, A.K.; Verma, A.; Singh, S.P. Distance Measurement of an Object or Obstacle by Ultrasound Sensors using P89C51RD2. Int. J. Comput. Theory Eng. 2010, 64, 64–68.

- Mohd Rusdy, Y.; Anuar, M.K.; Syahrim, N.; Anwar, N. Effect of glittering and reflective objects of different colours to the output voltage-distance characteristics of sharp GP2D120 IR. Int. J. Electr. Power Eng. 2012, 2, 6–10.

- Benet, G.; Blanes, F.; Simó, J.E.; Pérez, P. Using infrared sensors for distance measurement in mobile robots. Robot. Auton. Syst. 2002, 40, 255–266.

- Paunović, I.; Todorović, D.; Božić, M.; Đorđević, G.S. Calibration of ultrasonic sensors of a mobile robot. Serbian J. Electr. Eng. 2009, 3, 427–437.

- Fung, M.L.; Chen, M.Z.; Chen, Y.H. Sensor fusion: A review of methods and applications. In Proceedings of the 29th Chinese Control And Decision Conference (CCDC), Chongqing, China, 28–30 May 2017; pp. 3853–3860.

- Khaleghi, B.; Khamis, A.; Karray, F.O.; Razavi, S.N. Multisensor data fusion: A review of the state-of-the-art. Inf. Fusion 2013, 14, 28–44.

- Martens, S.; Gaudiano, P.; Carpenter, G.A. Mobile robot sensor integration with fuzzy ARTMAP. In Proceedings of the Intelligent Control (ISIC) jointly with IEEE International Symposium on Computational Intelligence in Robotics and Automation (CIRA), Gaithersburg, MD, USA, 17 September 1998; pp. 307–312.

- Guanshan, H. Neural Network Applications in Sensor Fusion for a Mobile Robot Motion. In Proceedings of the WASE International Conference on Information Engineering (ICIE), Qinhuangdao, China, 14–15 August 2010; pp. 46–49.

- Akkaya, R.; Aydogdu, O.; Canan, S. An ANN based NARX GPS/DR System for Mobile Robot Positioning and Obstacle Avoidance. J. Autom. Control 2013, 1, 6–13.

- Barton, A.; Volna, E. Control of autonomous robot using neural networks. In Proceedings of the International Conference of Numerical Analysis and Applied Mathematics (ICNAAM), Rhodes, Greece, 19–25 September 2016; p. 070002.

- Safari, S.; Shabani, F.; Simon, D. Multirate multisensor data fusion for linear systems using Kalman filters and a neural network. Aerosp. Sci. Technol. 2014, 39, 465–471.

- Myat, S.N.; Hla, M.T. Implementation of Multisensor Data Fusion Algorithm. Int. J. Sens. Sens. Netw. 2017, 5, 48–53.

- Kam, M.; Zhu, X.; Kalata, P. Sensor fusion for mobile robot navigation. Proc. IEEE 1997, 85, 108–119.

- KTeam. Khepera IV User Manual. 2015. Available online: http://ftp.k-team.com/KheperaIV/UserManual/ (accessed on 2 October 2017).

- De Silva, O.; George, K.M.; Raymond, G.G. An ultrasonic and vision-based relative positioning sensor for multirobot localization. IEEE Sens. J. 2015, 15, 1716–1726.

- Fabregas, E.; Farias, G.; Peralta, E.; Sánchez, J.; Dormido, S. Two Mobile Robots Platforms for Experimentation: Comparison and Synthesis. In Proceedings of the 14th International Conference on Informatics in Control, Automation and Robotics (ICINCO), Madrid, Spain, 26–28 July 2017; pp. 439–445.

- Correll, N.; Sempo, G.; De Meneses, Y.L.; Halloy, J.; Deneubourg, J.L.; Martinoli, A. SwisTrack: A tracking tool for multi-unit robotic and biological systems. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Beijing, China, 9–15 October 2006; pp. 2185–2191.

- Lochmatter, T.; Roduit, P.; Cianci, C.; Correll, N.; Jacot, J.; Martinoli, A. Swistrack—A flexible open source tracking software for multi-agent systems. In Proceedings of the Intelligent Robots and Systems (IROS), Nice, France, 22–26 September 2008; pp. 4004–4010.

- Yang, X.; Patel, R.V.; Moallem, M. A fuzzy—Braitenberg navigation strategy for differential drive mobile robots. J. Intell. Robot. Syst. 2006, 47, 101–124.

- Mathworks. MATLAB Neural Network Toolbox 9.0. 2017. Available online: https://es.mathworks.com/help/nnet/index.html (accessed on 2 October 2017).

- Çelik, O.; Teke, A.; Yıldırım, H.B. The optimized artificial neural network model with Levenberg-Marquardt algorithm for global solar radiation estimation in Eastern Mediterranean Region of Turkey. J. Clean. Prod. 2016, 116, 1–12.

- Kuhne, F.; Lages, W.F.; Da Silva, J.G. Point stabilization of mobile robots with nonlinear model predictive control. In Proceedings of the IEEE International Conference Mechatronics and Automation (ICMA), Niagara Falls, ON, Canada, 29 July–1 August 2005; pp. 1163–1168.

- González Villela, V.J.; Parkin, R.; López Parra, M.; Dorador González, J.M.; Guadarrama Liho, M.J. A wheeled mobile robot with obstacle avoidance capability. Ing. Mec. Tecnol. Desarro. 2004, 5, 159–166.

Table 1. Mean Absolute Percentage Error (MAPE, %) of the ANN and traditional (Trad) models with respect to the real distances, for Robots 1 and 2.

| Distance | Robot 1 ANN | Robot 1 Trad | Robot 2 ANN | Robot 2 Trad |
|---|---|---|---|---|
| D1 | 55.77 | 54.33 | 30.45 | 38.49 |
| D2 | 67.06 | 79.01 | 25.56 | 40.09 |
| D3 | 66.64 | 76.20 | 21.05 | 29.24 |
| D4 | 50.71 | 66.46 | 12.68 | 23.44 |
| D5 | 44.35 | 51.74 | 9.50 | 17.17 |
| D6 | 47.61 | 51.12 | 11.76 | 21.54 |
| D7 | 46.29 | 56.80 | 21.68 | 39.24 |
| D8 | 58.09 | 55.37 | 32.03 | 42.45 |

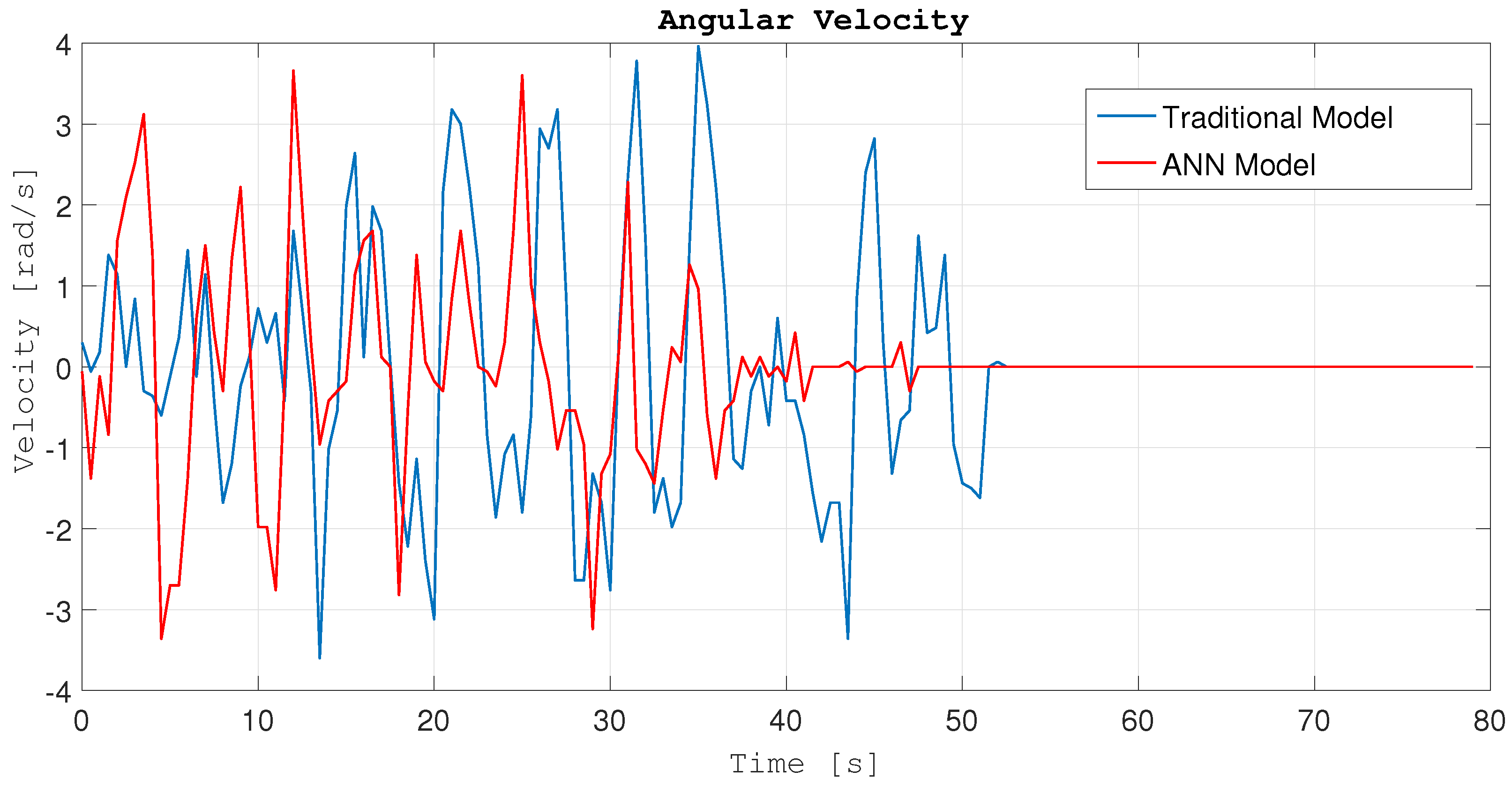

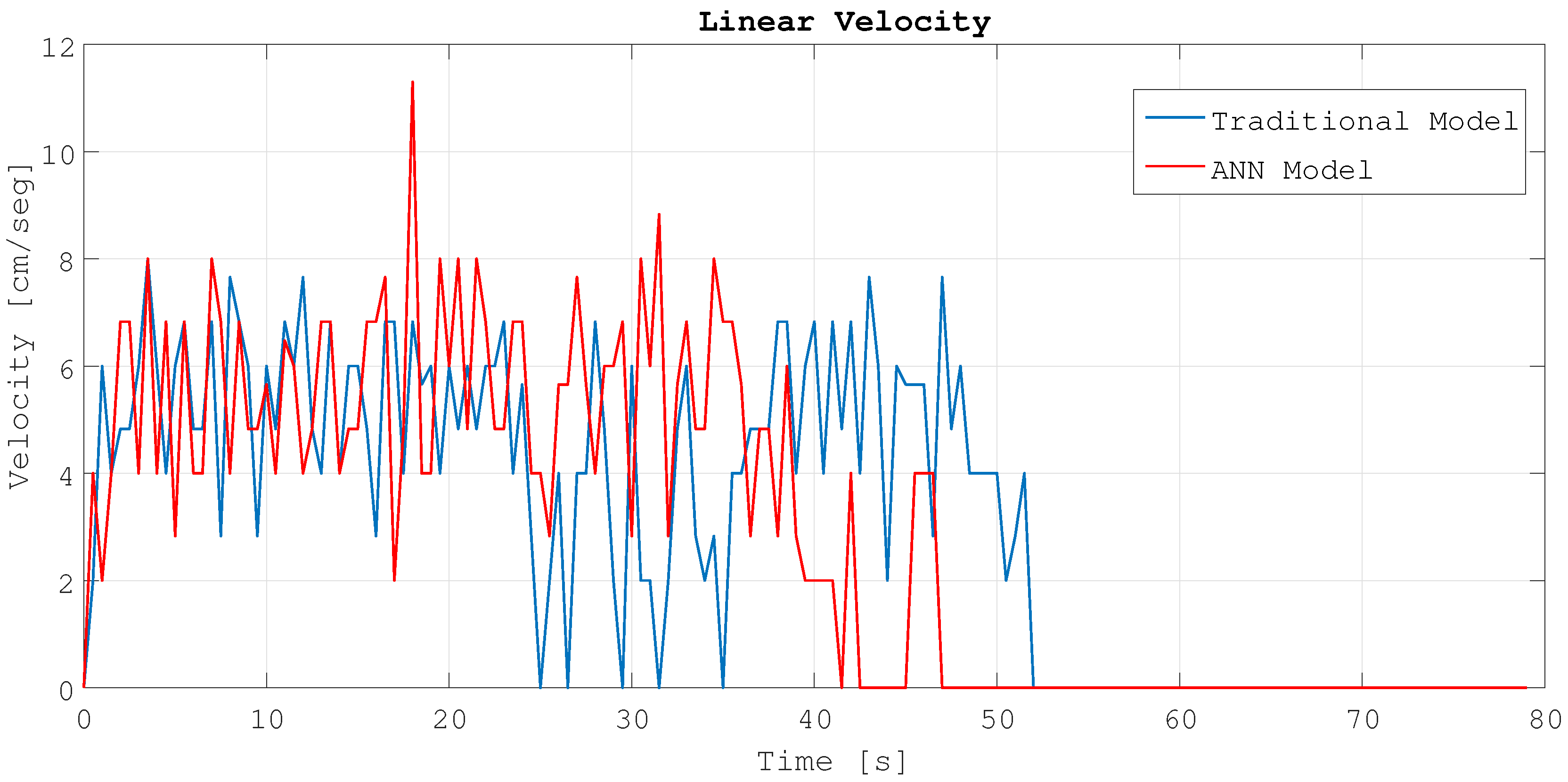

| Experiment | Method | MAPE (%) | Time (s) |
|---|---|---|---|
| 1 | Trad | 83.9 | 59.4 |
| 1 | ANN | 65.5 | 50.8 |
| 2 | Trad | 88.8 | 60.4 |
| 2 | ANN | 58.4 | 51.3 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Farias, G.; Fabregas, E.; Peralta, E.; Vargas, H.; Hermosilla, G.; Garcia, G.; Dormido, S. A Neural Network Approach for Building An Obstacle Detection Model by Fusion of Proximity Sensors Data. Sensors 2018, 18, 683. https://doi.org/10.3390/s18030683
