Vehicle Counting Based on Vehicle Detection and Tracking from Aerial Videos

Abstract

1. Introduction

2. Architecture of the System

3. Vehicle Detection and Tracking

3.1. Vehicle Detection

3.1.1. Static Background

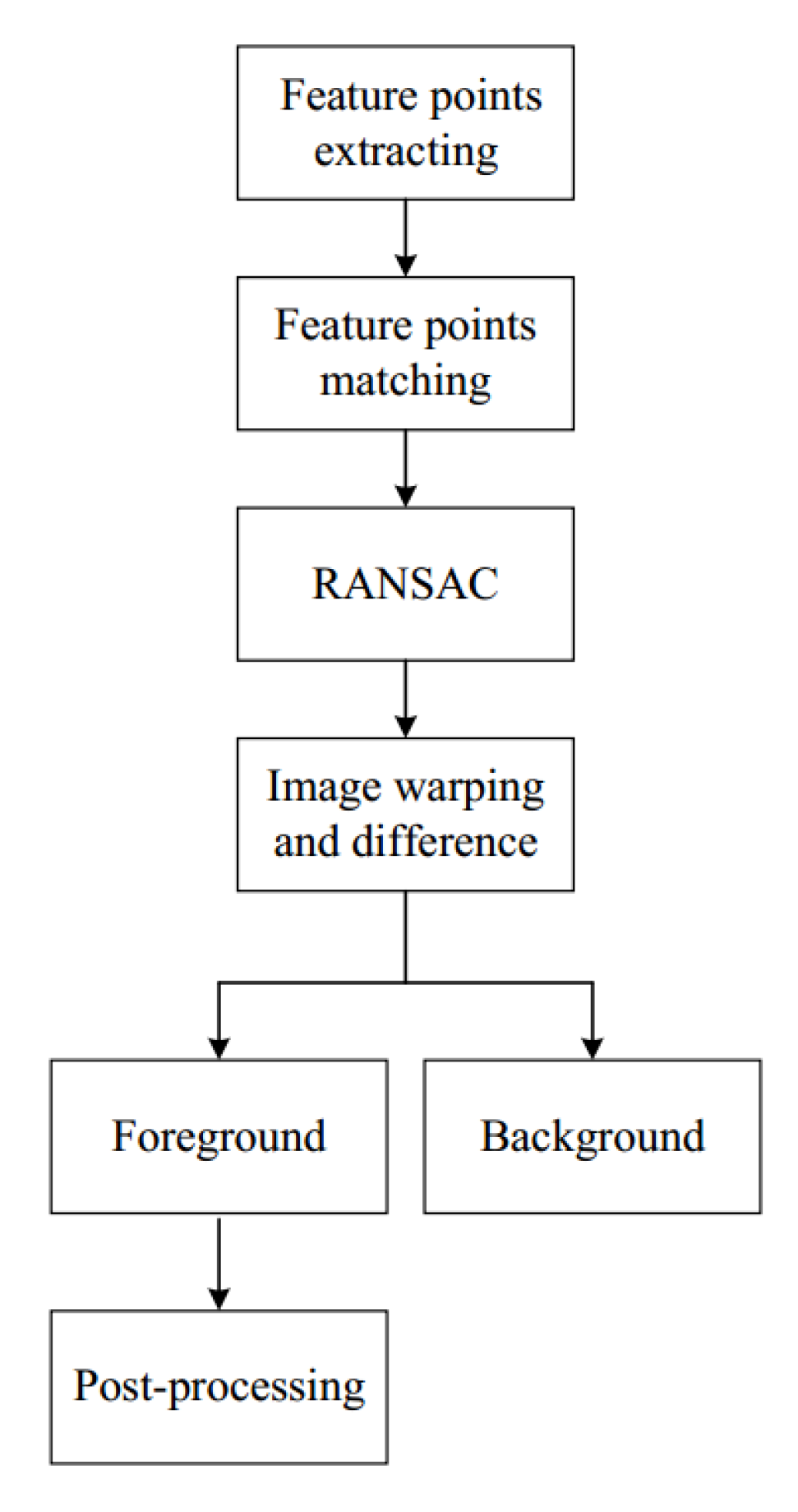

3.1.2. Moving Background

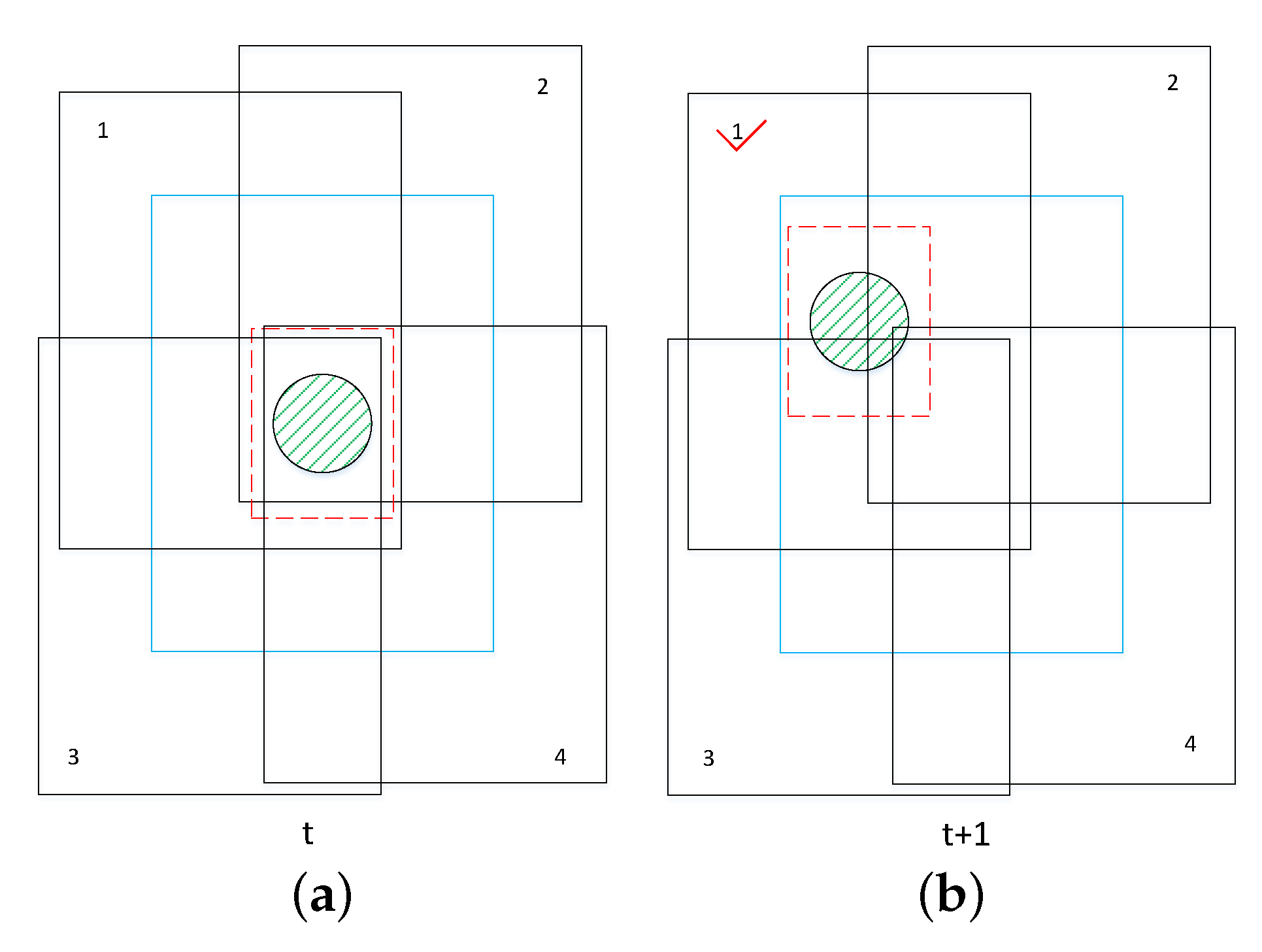

3.2. Vehicle Tracking

4. Multi-Vehicle Management Module

5. Vehicle Counting Module

6. Evaluation

6.1. Dataset

6.2. Estimation Results and Performance

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Aerial Videos | Height | Static Background | Moving Background | Total Number of Frames |
|---|---|---|---|---|
| TEST_VIDEO_1 | 50 | | | 5638 |
| TEST_VIDEO_2 | 50 | | | 5770 |
| TEST_VIDEO_3 | 50 | | | 5729 |
| TEST_VIDEO_4 | 50 | | | 5820 |
| TEST_VIDEO_5 | 50 | | | 5432 |
| TEST_VIDEO_6 | 50 | | | 5533 |
| TEST_VIDEO_7 | 50 | | | 5573 |
| TEST_VIDEO_8 | 50 | | | 5599 |
| TEST_VIDEO_9 | 100 | | | 5920 |
| TEST_VIDEO_10 | 100 | | | 5733 |
| TEST_VIDEO_11 | 100 | | | 5527 |
| TEST_VIDEO_12 | 100 | | | 5573 |
| TEST_VIDEO_13 | 100 | | | 5620 |
| TEST_VIDEO_14 | 100 | | | 5734 |
| TEST_VIDEO_15 | 100 | | | 5702 |
| TEST_VIDEO_16 | 100 | | | 5523 |

| Parameters | Height 50 | Height 100 | Fixed Background | Moving Background |
|---|---|---|---|---|
| N | 50 | 45 | √ | - |
| | 15 | 13 | √ | - |
| | 2 | 2 | √ | - |
| | 5 | 5 | √ | - |
| | 70 | 60 | - | √ |
| H | 100 | 100 | - | √ |
| | 15 | 10 | - | √ |
| | 2.5 | 2 | √ | √ |
| | | | √ | √ |
| | 0.2 | 0.3 | √ | √ |

| Height | Direction | Total Number of Vehicles | Number of Counted Vehicles | Accuracy | Background |
|---|---|---|---|---|---|
| 50 | Forward | 202 | 193 | 95.54% | Fixed |
| 50 | Backward | 217 | 207 | 95.39% | Fixed |
| 50 | Forward | 164 | 144 | 87.80% | Moving |
| 50 | Backward | 139 | 122 | 87.77% | Moving |
| 50 | Forward and backward | 722 | 666 | 92.24% | Fixed and moving |
| 100 | Forward | 174 | 160 | 91.95% | Fixed |
| 100 | Backward | 238 | 219 | 92.02% | Fixed |
| 100 | Forward | 173 | 148 | 85.55% | Moving |
| 100 | Backward | 147 | 126 | 85.71% | Moving |
| 100 | Forward and backward | 732 | 653 | 89.21% | Fixed and moving |
| 50 and 100 | Forward and backward | 831 | 779 | 93.74% | Fixed |
| 50 and 100 | Forward and backward | 623 | 540 | 86.68% | Moving |
| 50 and 100 | Forward and backward | 1454 | 1319 | 90.72% | Fixed and moving |
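The accuracy column above is the ratio of counted vehicles to total vehicles. The short Python sketch below recomputes it from the per-condition counts and checks how the pooled rows are formed: their totals and counts are sums of the matching per-condition rows, and the accuracy is computed on those sums. The dictionary layout and the helper names `accuracy` and `aggregate` are illustrative assumptions, not code from the paper.

```python
# Per-condition counts taken from the results table:
# (height, direction, background) -> (total vehicles, counted vehicles)
ROWS = {
    (50, "Forward", "Fixed"): (202, 193),
    (50, "Backward", "Fixed"): (217, 207),
    (50, "Forward", "Moving"): (164, 144),
    (50, "Backward", "Moving"): (139, 122),
    (100, "Forward", "Fixed"): (174, 160),
    (100, "Backward", "Fixed"): (238, 219),
    (100, "Forward", "Moving"): (173, 148),
    (100, "Backward", "Moving"): (147, 126),
}


def accuracy(total: int, counted: int) -> float:
    """Counting accuracy in percent, rounded to two decimals as in the table."""
    return round(100.0 * counted / total, 2)


def aggregate(height=None, background=None):
    """Pool the raw counts of every matching row, then compute one accuracy.

    A filter left as None matches any value of that field.
    """
    total = counted = 0
    for (h, _direction, bg), (t, c) in ROWS.items():
        if (height is None or h == height) and (background is None or bg == background):
            total += t
            counted += c
    return total, counted, accuracy(total, counted)


if __name__ == "__main__":
    print(aggregate(height=50))           # pooled over both directions and backgrounds
    print(aggregate(background="Moving"))  # pooled over both heights and directions
    print(aggregate())                     # pooled over every condition
```

Pooling the counts reproduces the table's aggregate rows (for example 666 / 722 gives 92.24% at height 50). A plain mean of the four height-50 percentages would instead give roughly 91.6%, so the pooled figures weight each condition by its number of vehicles.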

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Xiang, X.; Zhai, M.; Lv, N.; El Saddik, A. Vehicle Counting Based on Vehicle Detection and Tracking from Aerial Videos. Sensors 2018, 18, 2560. https://doi.org/10.3390/s18082560
