1. Introduction

Affective states, also referred to as mood [1], are an inherent part of our daily life. They provide our emotional context, shaping the background of everything we experience and do [2]. Furthermore, they play an active role in determining both the content of cognition and the cognitive processes themselves. For that reason, there is strong evidence that affective states are closely related to clinical disorders such as depression, bipolar disorder and anxiety [3,4]. However, changes in affective states also influence the cognition and social behavior of individuals who do not suffer from emotional disorders.

The affective states of an individual do not remain constant; they fluctuate over time. They are strongly influenced by the situations in which individuals find themselves, i.e., the context surrounding them [5]. These fluctuations may be caused by situational variables (location, social interaction or weather) as well as internal variables (sleep patterns, physical activity levels or stress). Therefore, identifying the daily life events or situations that could lead to a change in an individual’s affective profile has emerged as a fundamental task in emotion research [3,6], as it can help us not only to model the evolution of affective states in daily life, but also to understand the sources of these changes. To accomplish this task, both affective state and context must be monitored continuously throughout the day, so that fluctuations of the affective state are registered along with information about what is happening around the subject. Nevertheless, a significant proportion of the analyses performed in recent years do not take daily context data into consideration when evaluating the affective state [7,8]. Even when the context is considered, some of them rely on self-reported information, which introduces errors due to recall and subjective interpretation of the context [4,9].

With the recent emergence of mobile technologies, a new breed of intelligent mechanisms has arisen to ubiquitously monitor affective processes and daily life context [10,11]. In particular, smartphones are at the leading edge because of their rich set of sensors and computing resources, and their widespread use across every segment of the population. As we carry our smartphones with us almost all the time, these devices offer the potential to measure our context and behavior continuously, objectively, and with minimal effort from the user. Moreover, assessing mood and context through the smartphone spares individuals from carrying extra devices that could modify their behavior. For these reasons, smartphones have enabled real-time monitoring techniques such as the Experience Sampling Method (ESM) [12,13], one of the most commonly used methods for recording affective data.

In view of the present challenges of mood variability assessment, in [14] we presented the prototype of an integrated, multimodal platform that supports context-aware mobile ESM reporting of affective states during daily life. The present work completes and extends the implementation of this platform, describes it in detail, and assesses its validity and usability through a preliminary study. The proposed system aims to ubiquitously monitor the fluctuations of an individual’s affective states over time, and to objectively acquire the individual’s context through the sensors available in the smartphone. Moreover, the platform integrates the flexible management of the ESM questionnaires and the smartphone sensor setup, the acquisition of affect and context data through the smartphone, and the storage, visualization and processing of the gathered data.

Section 2 presents an overview of the state of the art in affective assessment through mobile phones. The platform and its operation are described in Section 3. The usability analysis and the evaluation of the platform are presented in Section 4. The results of the evaluation are discussed in Section 5, and final conclusions and remarks are summarized in Section 6.

2. Related Work

In recent years, the massive presence of smartphones among the population has established mobile technology as a resource for ubiquitous personal sensing. As people carry them almost everywhere (global mobile internet usage stands at 76% [15]), smartphones can easily be used in health and affective research to assess experiences during daily life [16]. Their user-friendliness makes people feel comfortable using their own device, making research studies less intrusive. Interest in tracking one’s own mood to better understand it has also been growing in recent years.

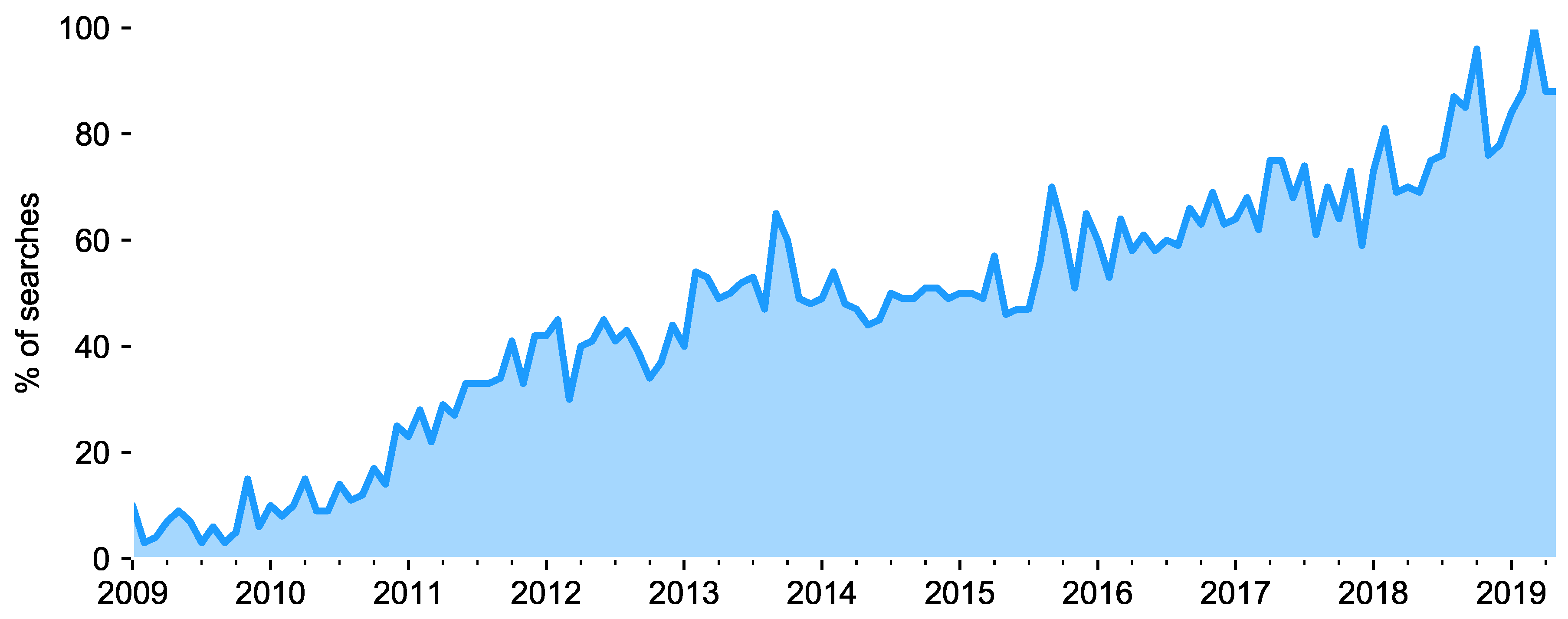

Figure 1 shows the worldwide search trend for the term “mood app”. It shows that, during the last decade, people’s interest in this sort of app has been increasing, and it continues to do so. The values, expressed as percentages, reflect the volume of searches relative to the total number of searches on that topic performed on Google over time. It is also worth noting that this trend only refers to searches in English.

Within the scope of scientific research, the number of articles on this topic has also increased considerably. A systematic search of the Scopus and Web of Science databases was performed with the keywords “(mood OR affective OR emotion) AND (monitoring OR assessment OR (evaluation)) AND (mobile OR smartphone)”. The results show that almost no articles on this topic were indexed before 2007 and that, from that year on, the number of records has increased sharply, especially during the last decade. This finding, along with the fact that the total number of articles found is still not very high, suggests that there is a good opportunity for the development of systems that support context-aware monitoring of affective states.

Several examples of monitoring affective states during daily life using mobile technologies can be found in the literature, some of which have led to the development of specific monitoring apps. The vast majority of them ask individuals to assess their mood several times per day through self-reports collected on the smartphone using the ESM technique. For example, in [18] a computerized mobile phone method is used to assess participants’ mood in daily life via short phone calls. Other similar examples can also be found [3,16,19]. However, these studies do not capture the context surrounding the subjects, and thus lack important information that could have an impact on the mood data and provide a better understanding of how mood fluctuates. Mobile systems that also capture context are present in the literature. MoodPrism [20] uses mobile ESM questionnaires delivered on the smartphone to assess the emotional state. The application also collects data from social networks and from the music the user listens to in order to infer mood through text analysis. Some additional context features were also gathered by means of self-reports in the app. MoodScope and MoodMiner [21,22] have also leveraged the potential of built-in mobile phone sensors to objectively capture context and predict mood. Situational features such as location, social interaction, ambient light and noise, and physical activity are inferred from the smartphone sensors to contextualize the acquired affective data. More recent examples along similar lines are [23,24]. These works have achieved encouraging results and show that this methodology is technically feasible for sensing mood. Nevertheless, they present mobile apps with a fixed data acquisition methodology: the content of the ESM questionnaires, their scheduling, and the smartphone sensor configuration are defined before the data collection begins. This approach does not allow the methodology to be changed in response to issues encountered as the study progresses, which could be useful to improve parameters such as the response rate, the energy consumption or the accuracy of the acquired data. In view of this limitation, there is a clear opportunity for the development of systems that make monitoring procedures more flexible in this young and very promising research area.

4. Evaluation

In order to show the potential of the proposed system and analyze its validity and usability, a preliminary study was conducted. The aim of this study is to determine whether the data gathered using this system are representative of the participants’ daily life and, therefore, to check the system’s suitability for real-time, context-aware affective state assessment. End users’ perception of the system and its user-friendliness were also assessed. Furthermore, the results lead to some recommendations for future studies with similar approaches. The details and results of the pilot study are described in the following.

4.1. Participants

A total of 22 participants (11 males, 13 females; aged 17–52 years, M = 22.2, SD = 7.4) were recruited for the study. All of them were required to have an Android-based smartphone and to use their own device for the study. Following approval from the Ethical Committee of the University of Granada, and before the study was conducted, all participants were informed about the aims of the research and read and signed an informed consent form. Participants were instructed on how to install the app and how to answer the ESM questionnaires, but no training session or additional information about the use of the app was given, since they do not even need to be aware of the app’s presence: data gathering and ESM delivery are automatic. They were asked to follow the instructions for installing the AWARE Client app (including the Flexible ESM plugin) and to scan the QR code for automatic configuration. Thereafter, they were simply asked to continue with their normal lives and to answer the ESM questionnaires when received. Three psychology experts designed the ESM questionnaire and supervised the study.

4.2. Methods

4.2.1. Procedure

In the first place, the three experts designed the ESM questionnaire for assessing the affective states described in

Section 3.2, by means of the ESM Management Interface. After that, the app installation and configuration procedure was carried out on the participants’ smartphones. We collected data for a total of 14 consecutive days, including both weekdays and weekends. ESM-based studies are recommended to have a duration of 2–4 weeks, because response rate and accuracy is proved to drop after this time due to fatigue [

13,

49,

50]. During this period, both affective states and validity indicators were monitored. There is no agreement in the literature on the ideal number of notifications, usually ranging from 1 to 10 per day [

16]. However, it is suggested to gather the minimum required number of samples for having valid data, without cluttering participants [

13,

50,

51]. In our study, six questionnaires were delivered per day at pseudo-random times. The schedule of the notifications must also be taken into consideration. Researchers are encouraged to use random or pseudo-random schedules [

19], because they reduce the chance of biased reports. Nevertheless, it is necessary to ensure that the samples are not too close, which could produce redundant information. For that reason, we set six evenly distributed intervals of one hour, during which the questionnaire can be triggered. The intervals selected were: 07:00–08:00, 10:00–11:00, 13:00–14:00, 16:00–17:00, 19:00–20:00 and 22:00–23:00. Upon receiving the questionnaire, participants had 120 min to open the notification before it disappears and is marked as

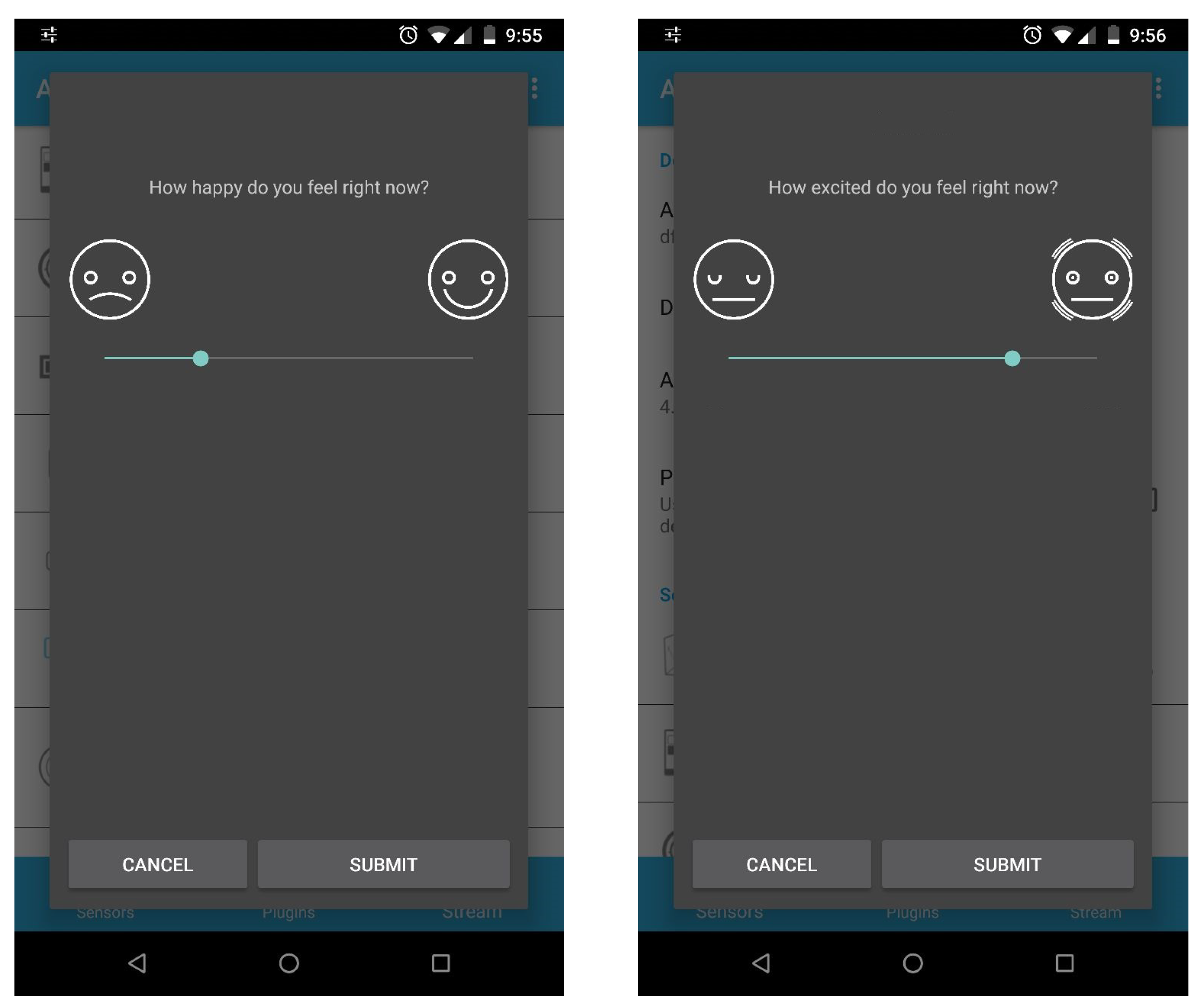

expired. The two questions are presented sequentially: once the valence level is reported, the arousal question appears on the screen. Finally, the penultimate day of the study, a reminder was sent as an extra ESM using th ESM Management Interface, in order to notify the participants of the upcoming end of the study and give them the instructions to stop the application.
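The triggering scheme above can be sketched in Python as follows. This is a minimal illustration, not the Flexible ESM plugin’s actual scheduler: the function name `daily_schedule`, the minute-level granularity of the random draw, and the study start date are hypothetical assumptions. It draws one trigger time uniformly inside each one-hour window and attaches the 120-min expiry.

```python
import random
from datetime import date, datetime, time, timedelta

# Start hours of the six one-hour triggering windows used in the study.
WINDOW_START_HOURS = [7, 10, 13, 16, 19, 22]
# Lifetime of a notification before it is marked as expired.
EXPIRY = timedelta(minutes=120)


def daily_schedule(day: date, rng: random.Random) -> list[tuple[datetime, datetime]]:
    """Return (trigger_time, expiry_time) pairs for one study day.

    Hypothetical helper: each trigger is drawn uniformly, at minute
    resolution, inside its one-hour window.
    """
    schedule = []
    for start_hour in WINDOW_START_HOURS:
        window_start = datetime.combine(day, time(hour=start_hour))
        trigger = window_start + timedelta(minutes=rng.randrange(60))  # 0..59 min
        schedule.append((trigger, trigger + EXPIRY))
    return schedule


# Usage: a 14-day plan for one participant (start date is hypothetical).
rng = random.Random(42)
study_start = date(2020, 1, 6)
plan = [daily_schedule(study_start + timedelta(days=d), rng) for d in range(14)]
print(sum(len(day) for day in plan))  # 84 prompts (6 per day x 14 days)
```

Because the windows start three hours apart and each spans one hour, two consecutive prompts can never be closer than two hours, which is the property the fixed windows are meant to guarantee.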

4.2.2. Validity Indicators

In order to assess the validity of the affective state measurements, we calculated a number of context indicators for the ESM questionnaires. These features have been used in previous works [49,50,52] as indicators of the validity of the acquired data. The validity indicators are measured for each questionnaire: the hour of the day and the relative day of the study when the questionnaire was answered; the completion time of the questionnaire; and the time elapsed between the reception of the questionnaire and its completion. The response rate was also assessed.
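The per-questionnaire indicators listed above can be derived from three timestamps. The sketch below assumes a hypothetical record holding the trigger time, the time the participant opened or dismissed the notification, and the submission time; the field and function names are illustrative and do not come from the AWARE framework. Completion time is taken from opening to submission (as in Section 4.3.2) and the delay from notification arrival to the participant’s action (as in Section 4.3.3).

```python
from dataclasses import dataclass
from datetime import date, datetime
from typing import Optional


@dataclass
class EsmRecord:
    """Hypothetical per-questionnaire log entry; the real schema may differ."""
    triggered_at: datetime            # notification delivered to the phone
    acted_at: Optional[datetime]      # notification opened or dismissed (None if expired)
    submitted_at: Optional[datetime]  # answer submitted (None if dismissed or expired)


def validity_indicators(rec: EsmRecord, study_start: date) -> dict:
    """Compute the per-questionnaire validity indicators described in the text."""
    # Use the response time when there is one, otherwise fall back to the trigger time.
    ref = rec.submitted_at if rec.submitted_at is not None else rec.triggered_at
    completion_s = None
    if rec.acted_at is not None and rec.submitted_at is not None:
        completion_s = (rec.submitted_at - rec.acted_at).total_seconds()
    delay_min = None
    if rec.acted_at is not None:
        delay_min = (rec.acted_at - rec.triggered_at).total_seconds() / 60
    return {
        "hour_of_day": ref.hour,
        "study_day": (ref.date() - study_start).days + 1,  # 1-based relative day
        "completion_time_s": completion_s,
        "notification_to_action_min": delay_min,
    }
```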

4.2.3. Usability Evaluation

The goal of the last part of this evaluation is to assess the usability and interest of the proposed system according to the users’ and experts’ opinions. To that end, we employed the System Usability Scale (SUS) [53]. This scale has become an industry standard for quantifying users’ experience with a system. The SUS consists of a 10-item questionnaire, with each item rated by the user on a 5-point scale ranging from “strongly disagree” to “strongly agree”. It is a quick and reliable method that provides an indicator of the usability of the system, and it has been tested on a wide variety of systems [54]. The SUS questions are the following:

(Q1) I think that I would like to use this system frequently.

(Q2) I found the system unnecessarily complex.

(Q3) I thought the system was easy to use.

(Q4) I think that I would need the support of a technical person to be able to use this system.

(Q5) I found the various functions in this system were well integrated.

(Q6) I thought there was too much inconsistency in this system.

(Q7) I would imagine that most people would learn to use this system very quickly.

(Q8) I found the system very cumbersome to use.

(Q9) I felt very confident using the system.

(Q10) I needed to learn a lot of things before I could get going with this system.

At the end of the study, all participants were asked to fill in a survey with these questions regarding the use of the AWARE Client app with the Flexible ESM plugin, and to provide feedback on its use if desired. Similarly, after using the ESM Management Interface, the three experts were asked to provide their impressions of its use and to evaluate it with the System Usability Scale.

4.3. Results

4.3.1. Response Rate

The response rate is an indicator of how well the acquired data represent the real feature studied. For that reason, it can be used to assess the validity of the proposed system for monitoring affective states. During the two-week data collection period, a total of 1848 questionnaires were triggered. Participants completed a total of 1369 questionnaires, which corresponds to an overall response rate of 74.1%. A total of 11 notifications were actively dismissed by the participants (0.6%), while the remaining 468 questionnaires (25.3%) expired automatically after 120 min. However, this indicator also has to be measured individually, in order to evaluate whether the amount of valid data acquired for a particular subject is enough to correctly represent the evolution of their affective states.
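As a quick consistency check, the overall figures reported above can be reproduced from the raw counts. The helper below is an illustrative sketch (its name is hypothetical); it also confirms that the 1848 triggered questionnaires match 22 participants receiving six prompts per day for 14 days.

```python
def outcome_rates(answered: int, dismissed: int, expired: int) -> dict[str, float]:
    """Percentage of triggered questionnaires in each outcome, rounded to 0.1%."""
    total = answered + dismissed + expired
    counts = {"answered": answered, "dismissed": dismissed, "expired": expired}
    return {outcome: round(100 * n / total, 1) for outcome, n in counts.items()}


# 22 participants x 14 days x 6 prompts per day = 1848 triggered questionnaires.
assert 22 * 14 * 6 == 1369 + 11 + 468 == 1848
print(outcome_rates(1369, 11, 468))
# {'answered': 74.1, 'dismissed': 0.6, 'expired': 25.3}
```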

Figure 6 shows the percentage of answered, dismissed and expired questionnaires for each subject. It can be seen that the individual response rate ranges from 27.8% (subject P08) to 97.6% (subject P13), with a mean value of 82.2% (±16.5%).

In our study, we triggered six questionnaires at different times during the course of the day. The response rate varies depending on the time at which the questionnaire is triggered.

Figure 7 depicts the overall response rate in our study versus the hour of the day when the ESM notification was triggered. Because the ESM schedule was randomly set within one-hour intervals, the hours in this figure are grouped into evenly spaced intervals around the triggering intervals. The response rate was considerably lower in the first half of the day, between 06:00 and 12:00, and increased as the day progressed. The highest response rate was achieved between 18:00 and 00:00, with a value of 88.1%, an increase of 18.3% with respect to the beginning of the day.
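Grouping prompts by the hour at which they were triggered, as in Figure 7, can be sketched as below. The three-hour bins starting at 06:00 are an assumption chosen so that each one-hour triggering window falls entirely inside one bin; the input format (pairs of trigger hour and an answered flag) and the function name are hypothetical, and the example values are toy data, not study data.

```python
from collections import defaultdict


def rate_by_bin(prompts, bin_hours=3, first_hour=6):
    """Response rate (%) per time-of-day bin.

    prompts: iterable of (trigger_hour, answered) pairs, with trigger_hour in 0..23.
    """
    counts = defaultdict(lambda: [0, 0])  # bin start hour -> [answered, total]
    for hour, answered in prompts:
        start = first_hour + (hour - first_hour) // bin_hours * bin_hours
        counts[start][0] += int(answered)
        counts[start][1] += 1
    return {
        f"{start:02d}:00-{(start + bin_hours) % 24:02d}:00": 100 * a / n
        for start, (a, n) in sorted(counts.items())
    }


# Toy example: two morning prompts (one answered) and two late-evening prompts (both answered).
print(rate_by_bin([(7, True), (7, False), (22, True), (22, True)]))
# {'06:00-09:00': 50.0, '21:00-00:00': 100.0}
```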

The response rate also fluctuates during the course of the study.

Figure 8 shows the evolution of the overall response rate as the study goes on. The central vertical line marks the boundary between the two weeks covered by the study. The response rate remained high during the first week of the study and decreased progressively during the second week. The mean response rates for each week are 86.9% and 77.9%, respectively. The previously mentioned reminder of the end of the study was sent on day 13. This reminder could explain the slight increase in responses observed on that day, as it may have prompted participants to keep the questionnaires in mind again.
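Because the weekly figures are themselves percentages, the drop between weeks can be expressed either in percentage points or as a relative change, and the two differ. The sketch below (hypothetical helper names) pools daily counts into weekly rates and computes both forms for the weekly means reported above.

```python
def weekly_rates(daily_answered, daily_triggered, days_per_week=7):
    """Pool per-day counts into per-week response rates (%)."""
    rates = []
    for start in range(0, len(daily_triggered), days_per_week):
        answered = sum(daily_answered[start:start + days_per_week])
        triggered = sum(daily_triggered[start:start + days_per_week])
        rates.append(100 * answered / triggered)
    return rates


def change(before, after):
    """Return (absolute change in percentage points, relative change in %)."""
    absolute = after - before
    return round(absolute, 1), round(100 * absolute / before, 1)


# Weekly means reported in the text: 86.9% (week 1) and 77.9% (week 2).
print(change(86.9, 77.9))  # (-9.0, -10.4)
```

The 9-point drop between the two weeks thus corresponds to a relative decrease of roughly 10%.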

4.3.2. Completion Time

The time elapsed between the opening of the notification and the submission of the answer was also measured for this analysis. It is an indicator of the attention paid while responding to the question. The mean (±standard deviation) completion time for all the questionnaires was 7 (±7.54) and 4.9 (±4.2) seconds for the valence and arousal questions, respectively. These values include the time spent by the plugin to load all the resources of the questionnaire, including the images. Following the recommendations of [49], we removed the responses with completion times more than two standard deviations above the mean, since they may be caused by problems experienced when loading resources or by excessive inattention during the response, and would thus produce low-quality data.
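The outlier rule can be sketched as follows. This is an illustration under assumptions, not the exact procedure of [49]: it uses the sample standard deviation, computes the limits over whatever set of times is passed in (for example, all valence responses), and exposes a `two_sided` flag because the Discussion describes removing times both above and below the limits. The function name and toy data are hypothetical.

```python
from statistics import mean, stdev


def drop_outliers(times_s, k=2.0, two_sided=False):
    """Remove completion times beyond mean + k*SD (and below mean - k*SD if two_sided)."""
    m, sd = mean(times_s), stdev(times_s)  # sample standard deviation
    upper, lower = m + k * sd, m - k * sd
    return [t for t in times_s if t <= upper and (not two_sided or t >= lower)]


# Toy data: nineteen 5-second responses and one 100-second response (e.g., a slow image load).
times = [5.0] * 19 + [100.0]
print(drop_outliers(times) == [5.0] * 19)  # True
```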

Figure 9 and Figure 10 show the mean completion times for each study day for the valence and arousal questions. This analysis yields two important results. First, the mean completion times of the valence question are substantially higher than those of the arousal question, presumably as a result of the order of presentation of both questions (valence was the first dimension to be assessed). Second, the completion time decreases considerably during the first week of the study and remains almost constant during the second week. This behavior is shared by both questions.

4.3.3. Elapsed Time from Notification Arrival to Response

For this evaluation, the time elapsed between the arrival of the notification and the moment when the participant taps on it to respond to the questionnaire was also measured. As the ESM notification persisted for 120 min after its reception, questionnaires could be answered outside the triggering intervals, thus modifying the time distribution of the samples. Computing the elapsed time from the notification to the response gives an idea of when the participants actually answer the questionnaires. Note that this analysis only includes the questionnaires that were answered or dismissed, since the expired ones do not have a response time.

Figure 11 depicts the elapsed times versus the hour of the day when the notification was triggered.

It can be observed that in the early morning interval, from 06:00 to 09:00, participants take more time to open the questionnaire, with a mean (±standard deviation) elapsed time of 37.2 (±32.1) min. This value is considerably lower during the rest of the day, reaching its minimum point at the end of the day, from 21:00 to 00:00, with a mean elapsed time of 18.3 (±25.9) min.
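Summaries such as the mean (±SD) delay per three-hour interval reported above can be computed as in the sketch below. The input format and function name are hypothetical; expired questionnaires are encoded as `None` and skipped, mirroring the exclusion described in the text, and the example values are toy data, not study data.

```python
from collections import defaultdict
from statistics import mean, stdev


def elapsed_by_bin(records, bin_hours=3, first_hour=6):
    """Mean and sample SD of the notification-to-action delay (min) per time-of-day bin.

    records: iterable of (trigger_hour, delay_min) pairs; delay_min is None for expired prompts.
    """
    bins = defaultdict(list)
    for hour, delay in records:
        if delay is None:  # expired questionnaires have no response time
            continue
        start = first_hour + (hour - first_hour) // bin_hours * bin_hours
        bins[start].append(delay)
    return {
        start: (mean(vals), stdev(vals) if len(vals) > 1 else 0.0)
        for start, vals in sorted(bins.items())
    }


print(elapsed_by_bin([(7, 30.0), (7, 50.0), (7, None), (22, 10.0)]))
```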

4.3.4. Usability Assessment

A total of 20 of the 22 participants completed the usability survey about the app at the end of the experiment, and the three experts did the same for the ESM Management Interface. They rated the 10 items stated in Section 4.2 on a Likert scale ranging from 1 (“strongly disagree”) to 5 (“strongly agree”). In order to compute the global SUS score for each user, an individual score is given to each item, following the guidelines presented in [53]:

For odd-numbered items, the score is computed by subtracting 1 from the user response.

For even-numbered items, the score is computed by subtracting the user response from 5.

All the item scores, now ranging from 0 to 4, are added and multiplied by 2.5 to obtain the overall SUS score, which therefore ranges from 0 to 100.
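The three scoring steps above translate directly into code. The function below is a minimal sketch of the standard SUS scoring rule; its name and the input validation are our own choices.

```python
def sus_score(responses):
    """System Usability Scale score (0-100) from ten 1-5 responses to items Q1..Q10."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS requires exactly ten responses on a 1-5 scale")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded (r - 1); even items negatively worded (5 - r).
        total += r - 1 if item % 2 == 1 else 5 - r
    return total * 2.5


print(sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1]))  # 100.0 (best possible answers)
print(sus_score([3] * 10))                         # 50.0 (neutral on every item)
```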

The average standardized SUS score is 68 [54,55]. A system with a score above this value is considered to have a good usability level. Moreover, systems that exceed a SUS score of 80.3 are considered to have an excellent usability level. Regarding the app and the plugin, the overall SUS scores given by each participant of the study are shown in Figure 12. The black dashed line indicates the mean SUS score given to our system, and the red one indicates the aforementioned good usability threshold. Only two of the twenty ratings are below 68, and the mean SUS score given to the system is 84.75. These values represent high levels of acceptability and ease of use, indicating that the usability of the system is highly favorable for end users.

With the aim of complementing this evaluation and gaining a better understanding of the ratings, we also asked the participants to give voluntary feedback about the performance of the system. First, most of them noted the ease of use of the ESM questionnaires, and remarked that responding to them was much less time-consuming than they expected: “The application is very intuitive, it automatically launches the questionnaires and you can answer very easily and quickly.” (P15). Some of them also commented on the procedure for responding to the questionnaires, pointing out that both affective dimensions (valence and arousal) were difficult to rate on such a wide scale, especially arousal. They suggested that it could be easier to evaluate them using a scale with more restricted values (e.g., a Likert scale). They also commented that, although the face icons helped to identify their feelings at the beginning, after one or two days of responding to the questionnaires the icons went unnoticed. One participant also suggested that “It could be interesting to access to the questionnaire directly when unlocking the phone.” (P02). Although the participants did not report any particularly negative comments, some of them noted a slight increase in battery drainage during the course of the day: “The only negative point could be that the battery drainage is noticeable even when the phone is not being used.” (P18). Despite this, almost all the participants noted that the extra battery drainage still allowed normal daily operation of the smartphone.

With respect to the ESM Management Interface, the three experts gave SUS scores of 92.5, 87.5 and 95, respectively, resulting in a mean SUS score of 91.67. This score shows an excellent level of usability and acceptability of the system among the experts. They also provided their impressions regarding the use of this tool. They appreciated its highly customizable settings and its ease of use, since it makes the process of configuring the questionnaires very straightforward: “The tool offers several options and is very intuitive and easy to use, since it guides the process so that the main issues are addressed.” Likewise, the experts were impressed by how easy it was to modify already defined questionnaires. The only negative issue reported is that the ESM Management Interface is not comfortable to use on mobile devices, since the aspect ratio of its elements does not suit the smartphone screen properly. Although these preliminary findings are favorable, a study with a larger number of experts is needed to further confirm the usability of this tool.
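The thresholds discussed above can be applied programmatically, as in the sketch below; the band labels and the function name are illustrative. It reproduces the experts’ mean score for the ESM Management Interface and classifies each individual rating.

```python
from statistics import mean

GOOD_THRESHOLD = 68.0        # average SUS score; higher is considered good usability
EXCELLENT_THRESHOLD = 80.3   # scores above this are considered excellent


def usability_band(score):
    """Map a SUS score to the usability levels used in the text."""
    if score > EXCELLENT_THRESHOLD:
        return "excellent"
    if score > GOOD_THRESHOLD:
        return "good"
    return "not above average"


# Expert ratings of the ESM Management Interface reported above.
expert_scores = [92.5, 87.5, 95.0]
print(round(mean(expert_scores), 2))                # 91.67
print([usability_band(s) for s in expert_scores])   # ['excellent', 'excellent', 'excellent']
```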

5. Discussion

In this paper, we presented a smartphone-based platform intended to monitor the affective states and the context of an individual, with a flexible management of the data acquisition process. We conducted a pilot study to show the potential of the platform and assess its validity, as well as the usability of its elements. The results obtained and the implications and recommendations for future studies using this technology are further discussed in this section.

Response rate. The response rate is an indicator of how well the acquired data represent the real feature studied. A high response rate indicates that the affective states have been captured in a wider variety of scenarios, so that the data are more likely to be contextually diverse. Conversely, a low response rate means a lack of samples, making it more difficult to faithfully represent the affective state fluctuations. Although there is no agreed gold standard for an acceptable response rate, some studies note that ESM data require a compliance rate close to 80% to be representative of participants’ daily life [16,50]. Some researchers remove participants with low compliance rates from the analysis, as their non-representative results can bias the general analysis. The response rate of 17 out of 22 participants in our study is above 77%, which supports the validity of the data gathered and, thus, shows that the proposed system is suitable for monitoring affective states through ESM questionnaires. However, this indicator also shows that there are three participants who should not be considered in further analyses (P02, P08 and P14), because of their compliance rates of 55%, 26.8% and 67.4%, respectively.
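Excluding low-compliance participants amounts to a simple threshold filter on the individual response rates. In the sketch below, the function name and the 70% cut-off are illustrative assumptions (the text does not state the exact threshold used); the rates are those quoted above for P02, P08 and P14, plus the highest individual rate reported in Section 4.3.1 (P13).

```python
def split_by_compliance(rates, min_rate):
    """Split participants into (kept, excluded) dicts by response rate (%)."""
    kept = {pid: r for pid, r in rates.items() if r >= min_rate}
    excluded = {pid: r for pid, r in rates.items() if r < min_rate}
    return kept, excluded


rates = {"P02": 55.0, "P08": 26.8, "P13": 97.6, "P14": 67.4}
kept, excluded = split_by_compliance(rates, min_rate=70.0)  # illustrative threshold
print(sorted(excluded))  # ['P02', 'P08', 'P14']
```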

The response rate also varied within the day and over the course of the study. We found that the response rate was considerably lower during the early hours of the morning (06:00 to 12:00), increasing during the day and reaching its maximum level in the last hours (18:00 to 24:00). This result was expected, because schedules are usually busier during the morning, and participants might find themselves in situations such as work, lectures, or other environments where they are not aware of the mobile phone or are not free to use it. To overcome this issue, it could be interesting to include some recall questionnaires at particular times during the day, or to modify the schedules to fit the participants’ routines using the ESM Management Interface. Regarding the day of the study, several researchers warn of a decline in ESM data quality linked to study duration. In [50], the recommended duration for experience sampling studies is 2–4 weeks. In [49], a considerable drop in response quality was found in the third week of the study. However, it is advisable to keep a minimum duration of one week in order to ensure enough contextual variety in the captured data [13]. In our study, we found a considerable drop in the number of answered questions in the second week of the study (a decrease of 9 percentage points with respect to the first week). However, the daily response rate during the second week remains close to 80%, so these data can still be considered representative of the participants’ daily life. In fact, we noticed a slight increase in the response rate one day before the end of the study. On that day, a reminder was sent to the participants using the ESM Management Interface with the instructions to end the data collection on the next day. The increase in the response rate may be related to this reminder. This finding supports the good performance of the ESM Management Interface and suggests an approach for keeping a high engagement level by sending periodic reminders.

Completion time. When the participants of a study have to answer the same questionnaire several times, they get used to the questions and the response format, and gradually “automatize” their responses. The literature links extremely low completion times to inattention when responding to questionnaires, and several studies recommend removing questionnaires with suspiciously fast completion times [13,52]. In our study, the responses with completion times more than two standard deviations above or below the mean were removed, following [49]. Less than 5% of the questions fall outside these limits, so the remaining data are still enough to keep a high response rate. We also found that, on the first day, the mean completion time is substantially higher than during the rest of the study, presumably due to the novelty of the questionnaires and the difficulty of correctly identifying the valence and arousal levels. The daily mean completion time decreases during the course of the study, especially during the first week, and remains constant during the second week. This decrease could be a result of the aforementioned habituation effect. This automatization can be problematic, since users may pay less attention to their responses and data quality could decrease. In that case, reformulating the questions or changing the response format during the study using the ESM Management Interface could help to maintain a high attention level when responding. To keep the completion time more constant, a learning phase could be performed at the beginning of the study, during which participants are taught to correctly identify their valence and arousal levels and get used to the procedure of responding to the questionnaire, thus avoiding the initially higher completion times.

It is also worth mentioning the difference in completion time between the valence and arousal questions. The arousal question was answered on average three seconds faster than the valence one. Further analyses are required to determine whether this finding results from an easier identification of the arousal level or from the order of presentation of the questions, since the valence question was presented first and participants may take more time to start the response procedure.

Elapsed time from notification arrival to response. The analysis of this measurement shows that, during the early hours of the day, participants wait longer between the reception of the notification and the response to the questionnaire. This finding reinforces the previous observations about the response rate, which was lower during this period of the day. The implication for future studies is that, even when participants respond to the questionnaires in the morning, the sampling times may not be evenly spaced, so the data gathered through mobile ESM during the morning may be of lower quality. Further analysis will be needed to determine, based on the captured context, which locations or moments are most suitable for sending an ESM questionnaire during the morning, so that the schedule can be modified during the study to fit the participants’ daily routine.

Usability. The results of the usability analysis show that the proposed platform has a high level of user-friendliness, acceptability and ease of use, from both the participants’ and the experts’ perspectives. The mean SUS scores given to the app and the ESM Management Interface were 84.75 and 91.67, respectively. This suggests that using the platform does not require technical skills, making it appropriate for widespread use among the population and the scientific community. The feedback from the users and the experts has been highly valuable, and has led to some suggestions for improving the platform. For example, it could be interesting to open the questionnaires upon unlocking the phone, making it simpler and faster for users to respond. This faster approach may also increase the response rate; smartphone-unlock triggering has been explored in other studies, with an increase in the response rate [56]. Finally, if more sensors are to be activated in future studies, battery drainage should be optimized to ensure a normal daily routine for the subjects, in order to keep the data as reliable as possible and avoid any type of intervention. The ESM Management Interface was well accepted by the psychology experts who supervised the study, who praised its usefulness for exploring new intervention approaches during the course of research studies. According to the experts’ comments, it should be optimized for better visualization on mobile devices.

Limitations. Finally, we discuss some limitations of the study that could have affected our results. First, the participants were predominantly young, with a mean age of 22 years. This biases the type of situations in which the participants are involved during their daily life. For example, many of the participants attended lectures or work during the mornings and were thus unable to respond to the questionnaires. A wider age range would be recommended for future studies. It could also be interesting to conduct the study over a longer time frame to further confirm the results obtained. The platform should also be assessed by a larger number of experts to further confirm the usability levels reached in this study.