Lighting Deviation Correction for Integrating-Sphere Multispectral Imaging Systems

Abstract

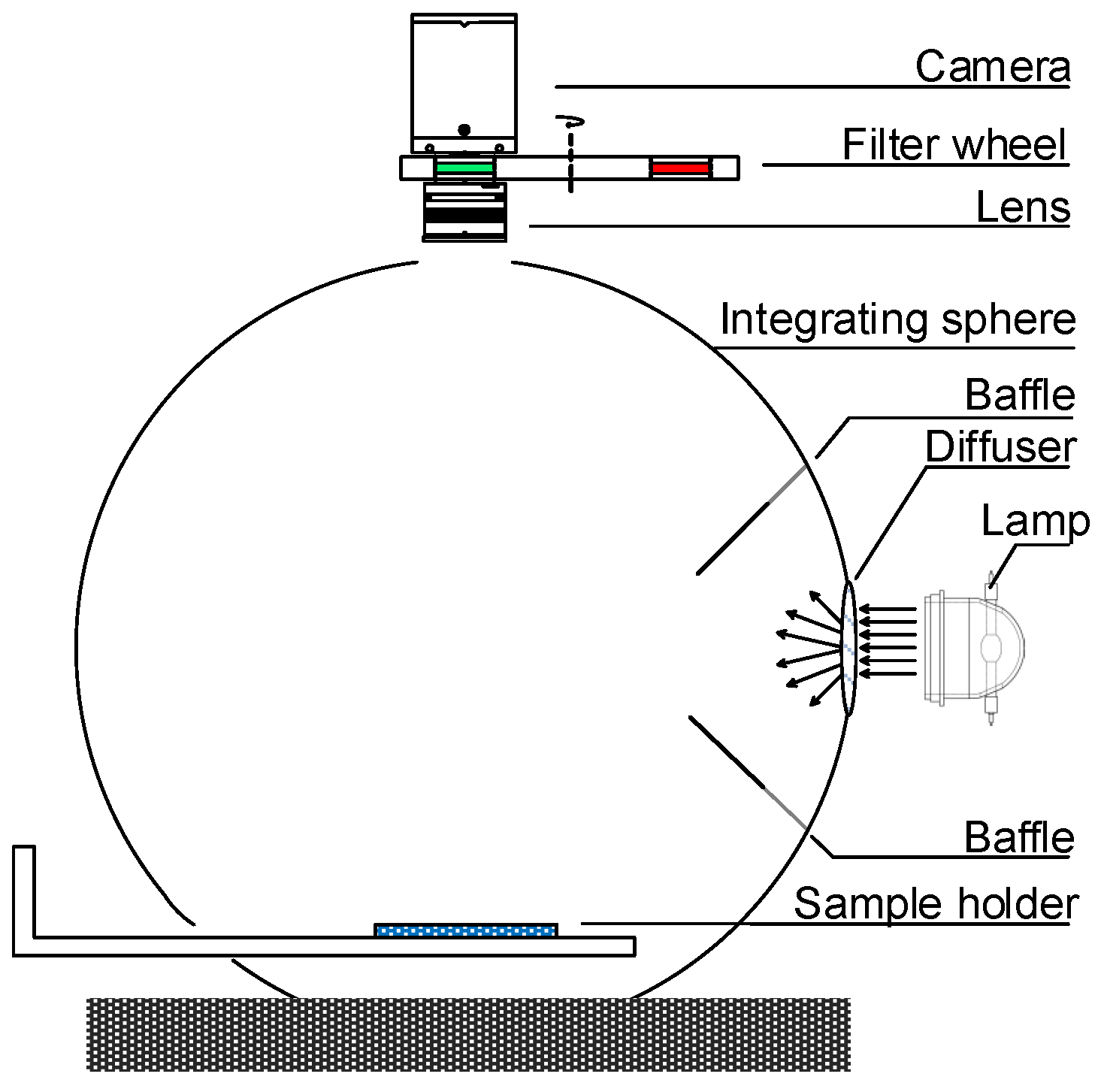

1. Introduction

2. The Problem of Lighting Deviation Correction

3. Correction Stage I: White-Patch Normalization

3.1. Using the White Standard to Correct Spatial Non-Uniformity
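A white standard is typically used for this through flat-field correction. Each band of a sample image is divided, pixel by pixel, by an image of a uniform white standard captured under the same geometry, so the spatial illumination profile cancels.

The following is a minimal sketch of that generic technique, not necessarily the exact procedure of this paper. It assumes that a dark frame is available and that the standard's reflectance in the band is known. The function `flat_field_correct` and its parameters are hypothetical.

```python
import numpy as np


def flat_field_correct(raw, white, dark=0.0, white_reflectance=1.0, eps=1e-12):
    """Flat-field one spectral band using an image of a white standard.

    raw, white: 2-D arrays of the same shape (sample and white standard).
    dark: dark-frame image or scalar offset (assumed to be available).
    white_reflectance: assumed known reflectance of the white standard.
    """
    raw = np.asarray(raw, dtype=float)
    white = np.asarray(white, dtype=float)
    signal = raw - dark
    reference = np.maximum(white - dark, eps)  # guard against division by zero
    # The pixel-wise ratio cancels the spatial illumination/vignetting profile,
    # leaving a reflectance estimate scaled by the standard's known reflectance.
    return white_reflectance * signal / reference


# Usage: a synthetic vignetted field imaging a uniform 50 % reflector.
yy, xx = np.mgrid[0:4, 0:5]
profile = 1.0 - 0.05 * ((xx - 2) ** 2 + (yy - 1.5) ** 2)  # falls off toward edges
dark = np.full((4, 5), 0.02)
white_img = dark + 0.9 * profile
sample_img = dark + 0.9 * profile * 0.5
corrected = flat_field_correct(sample_img, white_img, dark)
```

The corrected image is flat at 0.5 even though the raw sample image follows the non-uniform profile.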

3.2. Using the White Patch to Correct Lighting Deviation
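One simple way to use a white patch against lighting deviation is assumed here for illustration only. It models the deviation as one multiplicative gain per band. Each band is then rescaled so the patch returns to the level recorded under a reference lighting condition.

The sketch below implements that assumption. `white_patch_normalize`, the patch mask and the reference levels are hypothetical names and inputs, and the paper's own Stage I formulation may differ.

```python
import numpy as np


def white_patch_normalize(image, patch_mask, ref_patch_mean, eps=1e-12):
    """Rescale each band so the white patch matches its reference response.

    image: array of shape (bands, H, W).
    patch_mask: boolean array of shape (H, W) marking white-patch pixels.
    ref_patch_mean: shape (bands,), mean white-patch response recorded under
        the reference lighting condition (hypothetical calibration input).
    """
    image = np.asarray(image, dtype=float)
    current = image[:, patch_mask].mean(axis=1)  # per-band patch mean, shape (bands,)
    gain = np.asarray(ref_patch_mean, dtype=float) / np.maximum(current, eps)
    return image * gain[:, None, None]  # broadcast one gain per band


# Usage: simulate a per-band lighting drift and undo it.
rng = np.random.default_rng(0)
reference = rng.uniform(0.1, 0.9, size=(3, 6, 6))
mask = np.zeros((6, 6), dtype=bool)
mask[:2, :2] = True
reference[:, mask] = 0.95                  # white patch in one corner
ref_levels = reference[:, mask].mean(axis=1)
drift = np.array([0.90, 1.10, 1.03])       # assumed multiplicative drift per band
drifted = reference * drift[:, None, None]
restored = white_patch_normalize(drifted, mask, ref_levels)
```

When the deviation really is a per-band gain, this correction is exact. Any departure from that model is left untouched by it.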

4. Correction Stage II: Polynomial Regression Modeling

5. Experimental Results

5.1. Consistency Improvement on Camera Response

5.2. Consistency Improvement on Spectral Reflectance

5.3. Comparison with Existing Method

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Band No. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Original | 0.0155 | 0.0172 | 0.0194 | 0.0159 | 0.0156 | 0.0128 | 0.0150 | 0.0129 |
| Stage I | 0.0097 | 0.0102 | 0.0118 | 0.0082 | 0.0075 | 0.0049 | 0.0067 | 0.0049 |
| Stage II | 0.0024 | 0.0023 | 0.0022 | 0.0022 | 0.0021 | 0.0021 | 0.0021 | 0.0021 |

| Band No. | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | Average |
|---|---|---|---|---|---|---|---|---|---|
| Original | 0.0127 | 0.0146 | 0.0152 | 0.0137 | 0.0153 | 0.0155 | 0.0185 | 0.0212 | 0.0157 |
| Stage I | 0.0047 | 0.0061 | 0.0061 | 0.0047 | 0.0057 | 0.0048 | 0.0054 | 0.0059 | 0.0067 |
| Stage II | 0.0021 | 0.0020 | 0.0021 | 0.0021 | 0.0022 | 0.0024 | 0.0029 | 0.0032 | 0.0023 |
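As a quick consistency check, the Average column of the table above can be recomputed as the arithmetic mean of the 16 per-band values. The snippet below simply transcribes the table.

Every entry is rounded to four decimals, so agreement within 1e-4 is the most that can be expected. Under that tolerance, all three reported averages are reproduced. Stage II lowers the mean value by about 85% relative to the original.

```python
from statistics import mean

# Per-band values transcribed from the table above (bands 1-16).
RESPONSE_STD = {
    "Original": [0.0155, 0.0172, 0.0194, 0.0159, 0.0156, 0.0128, 0.0150, 0.0129,
                 0.0127, 0.0146, 0.0152, 0.0137, 0.0153, 0.0155, 0.0185, 0.0212],
    "Stage I":  [0.0097, 0.0102, 0.0118, 0.0082, 0.0075, 0.0049, 0.0067, 0.0049,
                 0.0047, 0.0061, 0.0061, 0.0047, 0.0057, 0.0048, 0.0054, 0.0059],
    "Stage II": [0.0024, 0.0023, 0.0022, 0.0022, 0.0021, 0.0021, 0.0021, 0.0021,
                 0.0021, 0.0020, 0.0021, 0.0021, 0.0022, 0.0024, 0.0029, 0.0032],
}
REPORTED_AVERAGE = {"Original": 0.0157, "Stage I": 0.0067, "Stage II": 0.0023}


def band_means(table):
    """Arithmetic mean over bands for every row of the table."""
    return {name: mean(values) for name, values in table.items()}


means = band_means(RESPONSE_STD)
for name, m in means.items():
    print(f"{name:<9} recomputed {m:.5f}  reported {REPORTED_AVERAGE[name]:.4f}")
overall = 1 - means["Stage II"] / means["Original"]
print(f"Reduction of the mean, Original -> Stage II: {overall:.1%}")
```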

| Band No. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| c1 | 0.8966 | 0.8787 | 0.9083 | 0.9168 | 0.9240 | 0.9438 | 0.9155 | 0.9293 |
| c2 | 0.1158 | 0.1337 | 0.1000 | 0.0901 | 0.0824 | 0.0613 | 0.0934 | 0.0792 |
| c3 | 0.2062 | 0.2034 | 0.2169 | 0.1507 | 0.1354 | 0.0837 | 0.1243 | 0.0849 |
| c4 | −0.2229 | −0.2193 | −0.2329 | −0.1617 | −0.1468 | −0.0913 | −0.1374 | −0.0943 |

| Band No. | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 |
|---|---|---|---|---|---|---|---|---|
| c1 | 0.9445 | 0.9123 | 0.9155 | 0.9249 | 0.9118 | 0.9309 | 0.9276 | 0.9452 |
| c2 | 0.0608 | 0.0979 | 0.0931 | 0.0837 | 0.0972 | 0.0751 | 0.0778 | 0.0562 |
| c3 | 0.0782 | 0.1105 | 0.1059 | 0.0793 | 0.1000 | 0.0803 | 0.0951 | 0.1040 |
| c4 | −0.0868 | −0.1228 | −0.1172 | −0.0878 | −0.1097 | −0.0868 | −0.1009 | −0.1085 |
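The table above lists four coefficients, c1 to c4, for each of the 16 bands. A polynomial regression model is linear in its coefficients, so each band's coefficients can be estimated by ordinary least squares.

The polynomial terms themselves are not given here. The sketch below therefore uses a hypothetical basis [u, v, u·v, u²] of two placeholder inputs u and v. Only the per-band least-squares fit is meant to carry over. `design_matrix` and `fit_band_coefficients` are illustrative names, not the paper's.

```python
import numpy as np


def design_matrix(u, v):
    """HYPOTHETICAL four-term basis [u, v, u*v, u**2].

    u and v stand for two per-pixel inputs of the correction model;
    substitute the actual terms of the Stage II polynomial.
    """
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    return np.column_stack([u, v, u * v, u ** 2])


def fit_band_coefficients(A, target):
    """Least-squares estimate of c minimizing ||A @ c - target||^2 for one band."""
    c, _residuals, _rank, _sv = np.linalg.lstsq(
        A, np.asarray(target, dtype=float), rcond=None
    )
    return c


# Usage on synthetic, noise-free data. The "true" coefficients are the band-1
# values from the table above, borrowed only as test numbers.
rng = np.random.default_rng(1)
u = rng.uniform(0.05, 1.0, 200)
v = rng.uniform(0.5, 1.5, 200)
c_true = np.array([0.8966, 0.1158, 0.2062, -0.2229])
A = design_matrix(u, v)
c_hat = fit_band_coefficients(A, A @ c_true)
```

In practice the fit is repeated independently for each band, which yields one column of c1 to c4 per band, as in the table.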

| Band No. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Original | 0.0060 | 0.0133 | 0.0172 | 0.0113 | 0.0101 | 0.0081 | 0.0092 | 0.0081 |
| Stage I | 0.0046 | 0.0082 | 0.0106 | 0.0063 | 0.0055 | 0.0039 | 0.0049 | 0.0039 |
| Stage II | 0.0024 | 0.0029 | 0.0036 | 0.0027 | 0.0025 | 0.0023 | 0.0024 | 0.0024 |

| Band No. | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | Average |
|---|---|---|---|---|---|---|---|---|---|
| Original | 0.0082 | 0.0095 | 0.0099 | 0.0096 | 0.0106 | 0.0100 | 0.0098 | 0.0096 | 0.0100 |
| Stage I | 0.0039 | 0.0046 | 0.0045 | 0.0040 | 0.0043 | 0.0039 | 0.0041 | 0.0046 | 0.0051 |
| Stage II | 0.0025 | 0.0025 | 0.0028 | 0.0029 | 0.0029 | 0.0031 | 0.0035 | 0.0042 | 0.0028 |
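The per-band improvement in the table above is uneven. The snippet below, which only re-expresses the transcribed numbers, computes the fractional reduction 1 − Stage II / Original for each band.

For this table, Stage II gives the largest relative reduction in band 3 (about 79%). The smallest is in band 16 (about 56%).

```python
# Per-band values transcribed from the table above (bands 1-16).
ORIGINAL = [0.0060, 0.0133, 0.0172, 0.0113, 0.0101, 0.0081, 0.0092, 0.0081,
            0.0082, 0.0095, 0.0099, 0.0096, 0.0106, 0.0100, 0.0098, 0.0096]
STAGE_II = [0.0024, 0.0029, 0.0036, 0.0027, 0.0025, 0.0023, 0.0024, 0.0024,
            0.0025, 0.0025, 0.0028, 0.0029, 0.0029, 0.0031, 0.0035, 0.0042]


def per_band_reduction(before, after):
    """Fractional reduction 1 - after/before for each band."""
    return [1.0 - a / b for b, a in zip(before, after)]


reduction = per_band_reduction(ORIGINAL, STAGE_II)
# Band numbers are 1-based, matching the table header.
best_band = max(range(len(reduction)), key=reduction.__getitem__) + 1
worst_band = min(range(len(reduction)), key=reduction.__getitem__) + 1
for band, r in enumerate(reduction, start=1):
    print(f"band {band:2d}: {r:.1%}")
print("largest reduction in band", best_band, "| smallest in band", worst_band)
```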

| | Spectral rms | Color difference (D65) | Color difference (A) | Color difference (F2) |
|---|---|---|---|---|
| Original | 0.1169 | 5.1649 | 4.6991 | 5.3026 |
| Stage I | 0.0556 | 3.0092 | 2.6734 | 3.1455 |
| Stage II | 0.0149 | 0.3679 | 0.3573 | 0.3634 |

| | Spectral rms | Color difference (D65) | Color difference (A) | Color difference (F2) |
|---|---|---|---|---|
| Original | 0.1240 | 4.1274 | 3.9949 | 4.1644 |
| Stage I | 0.0617 | 1.7949 | 1.6909 | 1.8808 |
| Stage II | 0.0201 | 0.5821 | 0.5712 | 0.6020 |
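Reading the two tables above as relative improvements makes the contribution of each stage easier to compare. The snippet below only re-expresses the published numbers, so no new data are involved. It computes the fractional reduction from the original to Stage I and to Stage II for the spectral rms and for the three illuminant-specific columns.

In both tables, Stage II improves on Stage I for every metric. In the first table, for example, the spectral rms falls by about 87%.

```python
# Values transcribed from the two tables above: spectral rms followed by the
# three illuminant-specific columns (D65, A, F2).
METRICS = ["spectral rms", "D65", "A", "F2"]
TABLES = {
    "first table": {
        "Original": [0.1169, 5.1649, 4.6991, 5.3026],
        "Stage I": [0.0556, 3.0092, 2.6734, 3.1455],
        "Stage II": [0.0149, 0.3679, 0.3573, 0.3634],
    },
    "second table": {
        "Original": [0.1240, 4.1274, 3.9949, 4.1644],
        "Stage I": [0.0617, 1.7949, 1.6909, 1.8808],
        "Stage II": [0.0201, 0.5821, 0.5712, 0.6020],
    },
}


def reductions(rows, stage):
    """Fractional reduction of each metric from 'Original' to the given stage."""
    return {m: 1.0 - s / o for m, o, s in zip(METRICS, rows["Original"], rows[stage])}


results = {
    (name, stage): reductions(rows, stage)
    for name, rows in TABLES.items()
    for stage in ("Stage I", "Stage II")
}
for (name, stage), red in results.items():
    print(name, stage, {m: f"{r:.1%}" for m, r in red.items()})
```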

| | Response std. dev. | Spectral rms | Color difference (D65) | Color difference (A) | Color difference (F2) |
|---|---|---|---|---|---|
| Original | 0.0100 | 0.1240 | 4.1274 | 3.9949 | 4.1644 |
| Markov model | 0.0044 | 0.0625 | 1.7368 | 1.6907 | 1.8580 |
| Ours | 0.0028 | 0.0201 | 0.5821 | 0.5712 | 0.6020 |
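The comparison in the table above can be restated relative to the Markov-model baseline. Measured that way, the "Ours" row lowers the response standard deviation by about 36%. It lowers the spectral rms and the three illuminant-specific columns by roughly 66% to 68%.

The snippet below reproduces those figures from the transcribed rows. It is arithmetic on the published values only.

```python
# Rows transcribed from the comparison table above.
METRICS = ["response std. dev.", "spectral rms", "D65", "A", "F2"]
ROWS = {
    "Original":     [0.0100, 0.1240, 4.1274, 3.9949, 4.1644],
    "Markov model": [0.0044, 0.0625, 1.7368, 1.6907, 1.8580],
    "Ours":         [0.0028, 0.0201, 0.5821, 0.5712, 0.6020],
}


def relative_reduction(baseline, method):
    """Fractional reduction of each metric of `method` relative to `baseline`."""
    return [1.0 - m / b for b, m in zip(ROWS[baseline], ROWS[method])]


vs_markov = dict(zip(METRICS, relative_reduction("Markov model", "Ours")))
vs_original = dict(zip(METRICS, relative_reduction("Original", "Ours")))
for metric in METRICS:
    print(f"{metric:<20} vs Original {vs_original[metric]:6.1%}  "
          f"vs Markov {vs_markov[metric]:6.1%}")
```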

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zou, Z.; Shen, H.-L.; Li, S.; Zhu, Y.; Xin, J.H. Lighting Deviation Correction for Integrating-Sphere Multispectral Imaging Systems. Sensors 2019, 19, 3501. https://doi.org/10.3390/s19163501