Restoration Method of a Blurred Star Image for a Star Sensor Under Dynamic Conditions

Abstract

1. Introduction

2. Parameter Estimation of Blurred Star Image

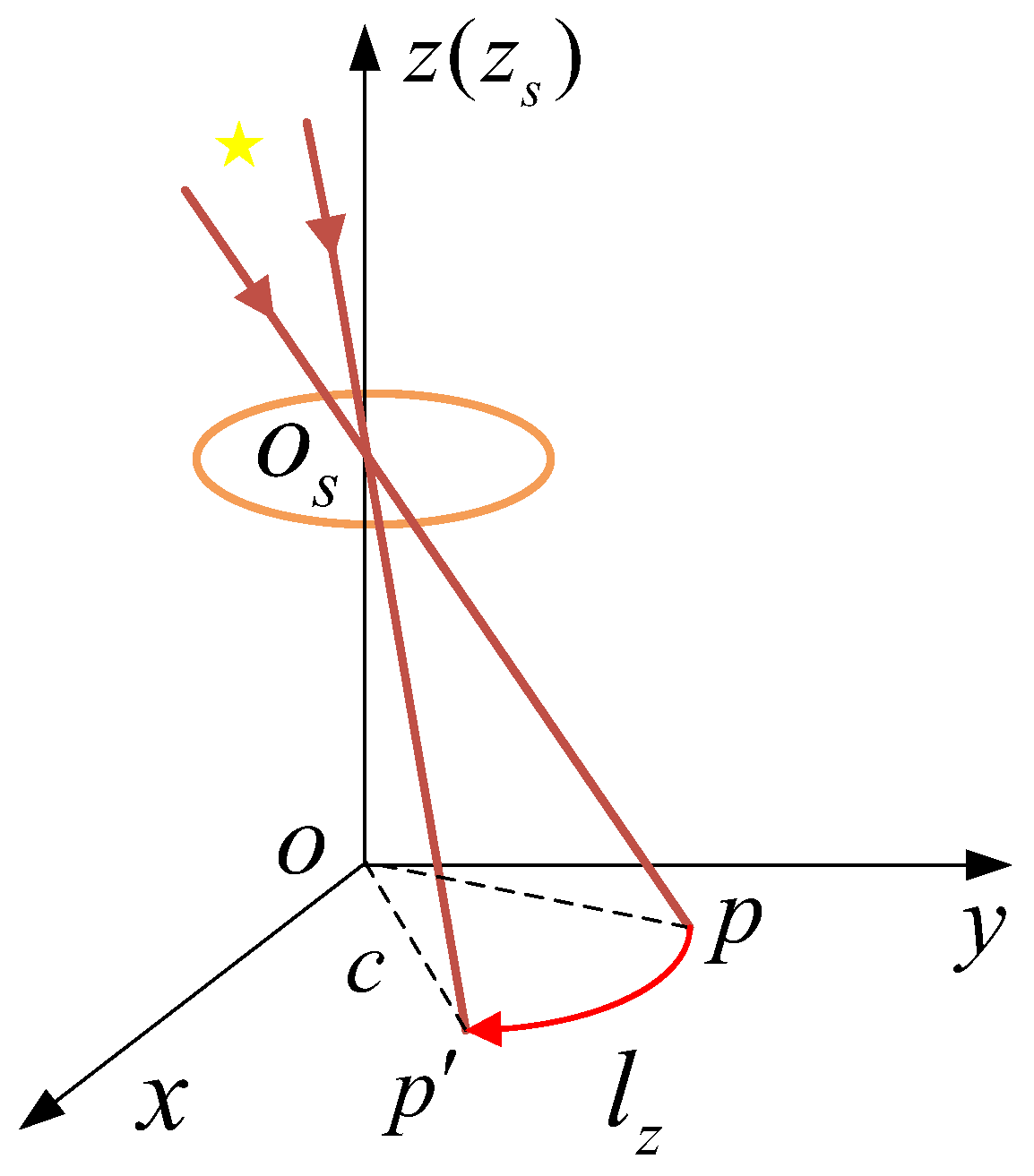

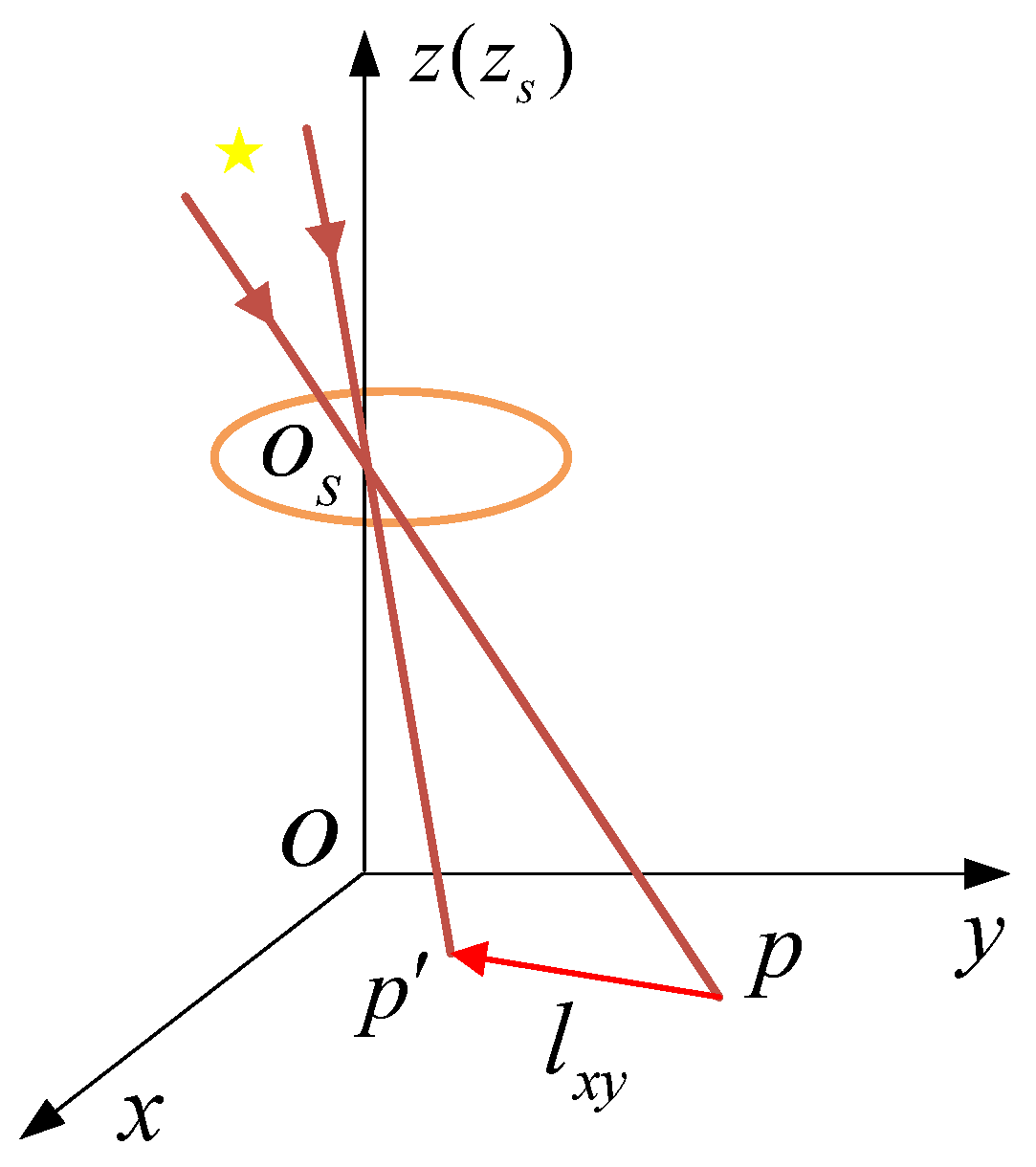

2.1. Motion Model of Star Centroid

2.2. The Degenerate Function of a Motion Blurred Image

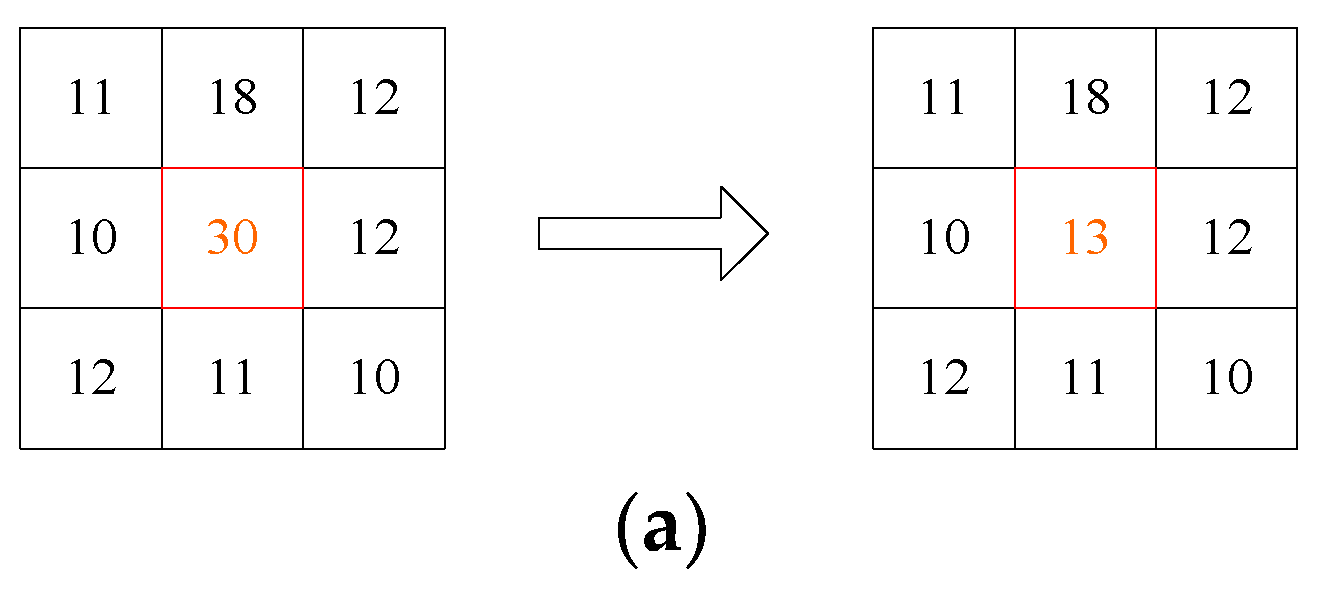

3. Blurred Star Image Denoising

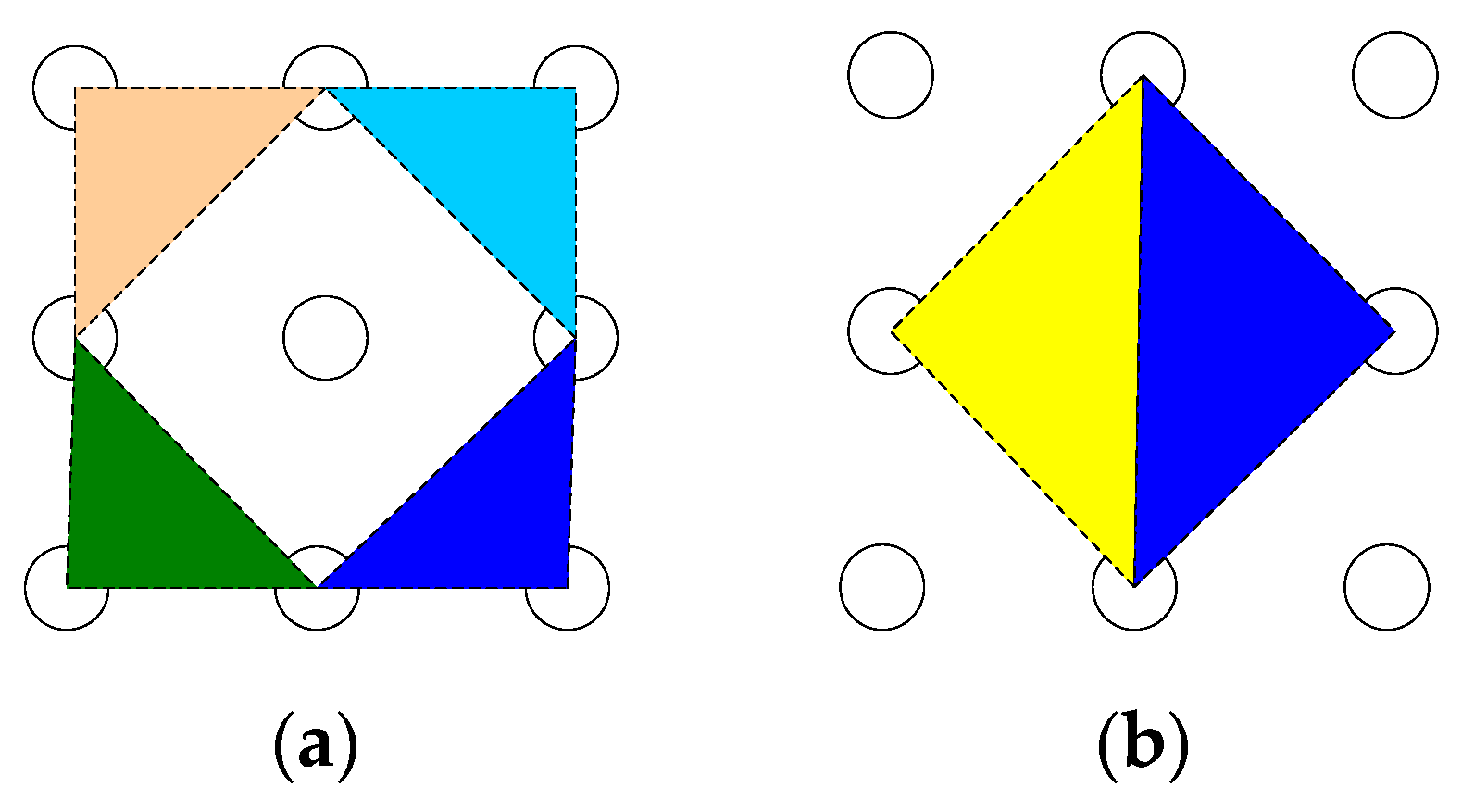

4. Restoration of Motion Blurred Star Image

5. Results and Analysis

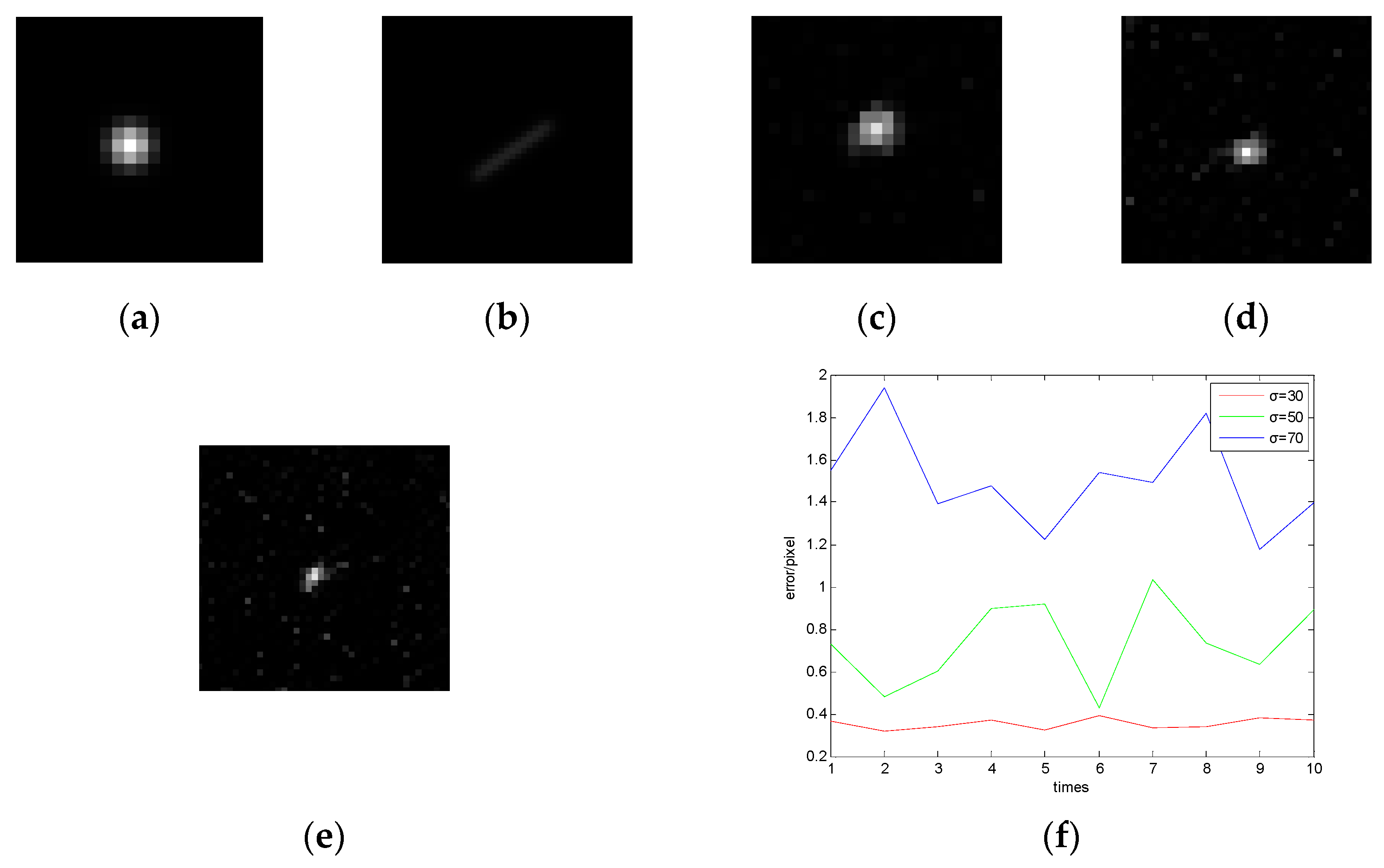

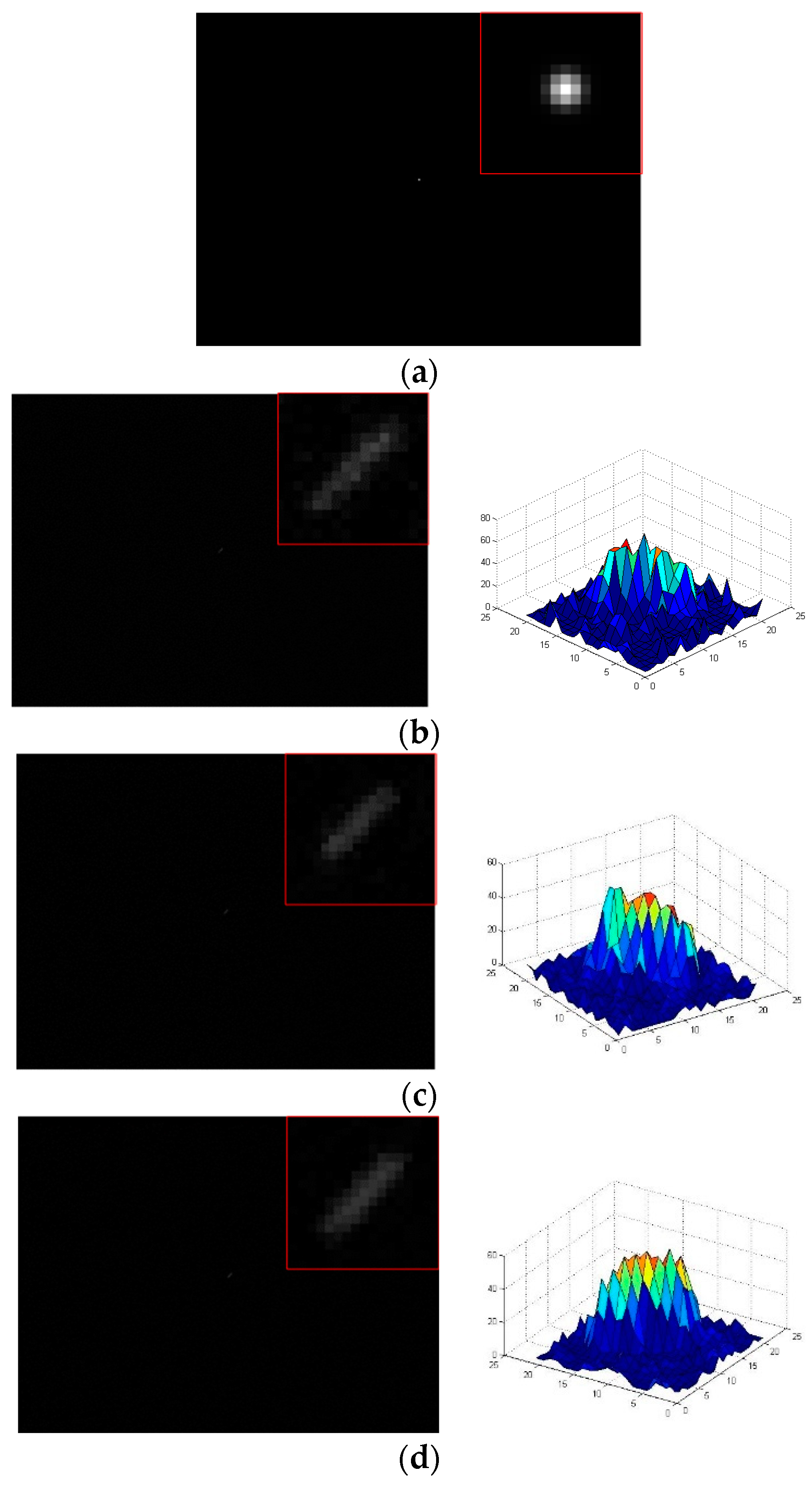

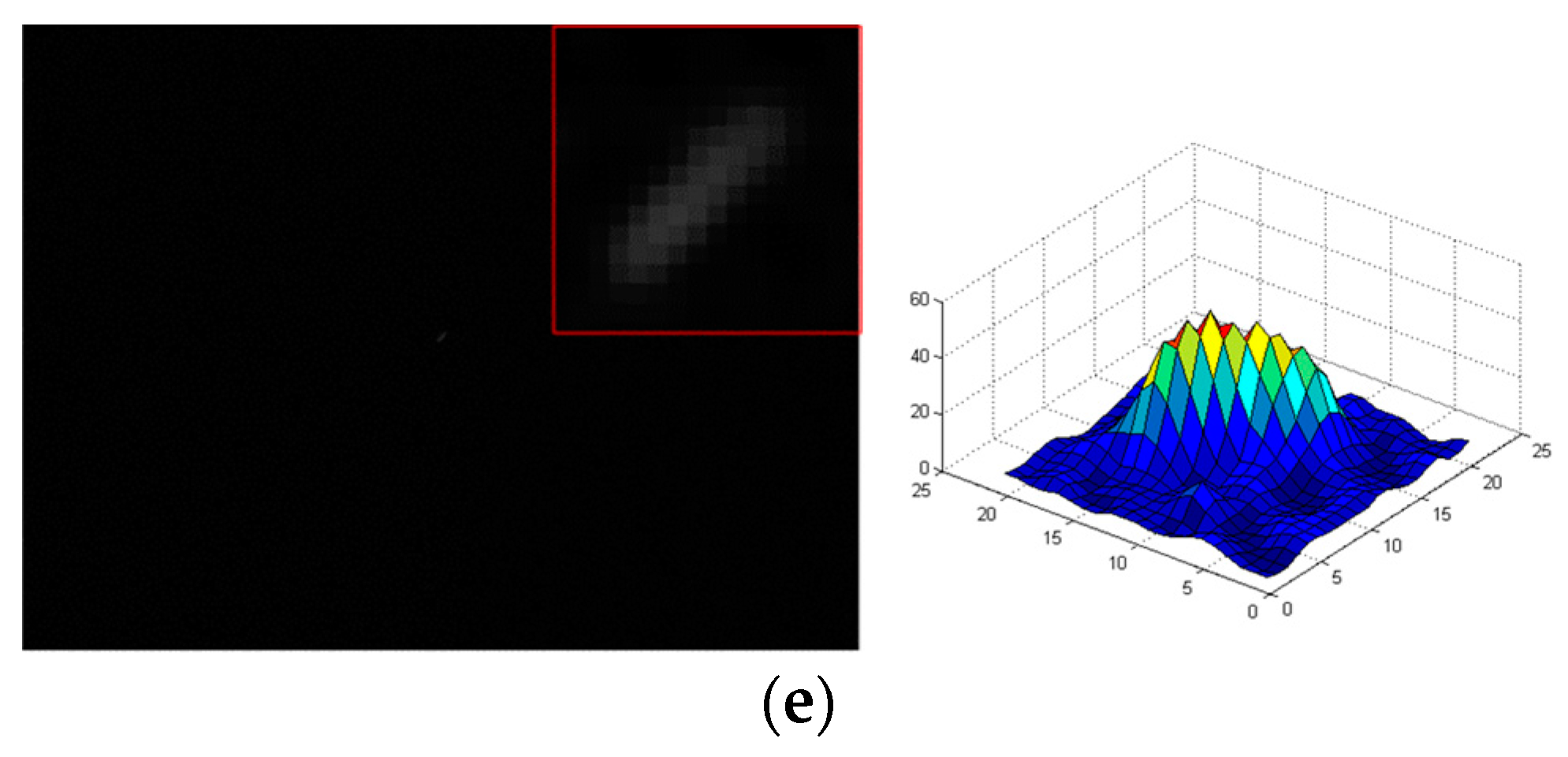

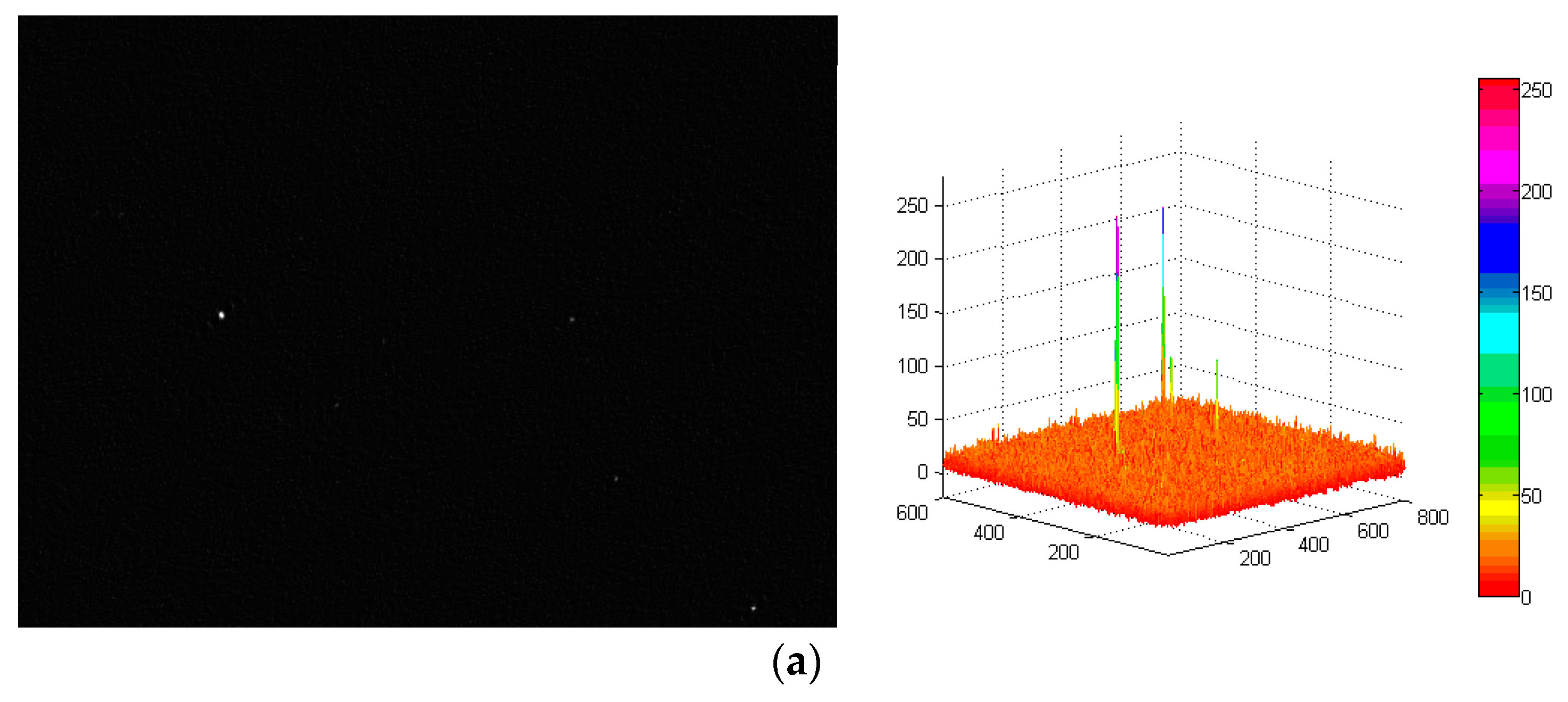

5.1. Denoising of the Blurred Star Image

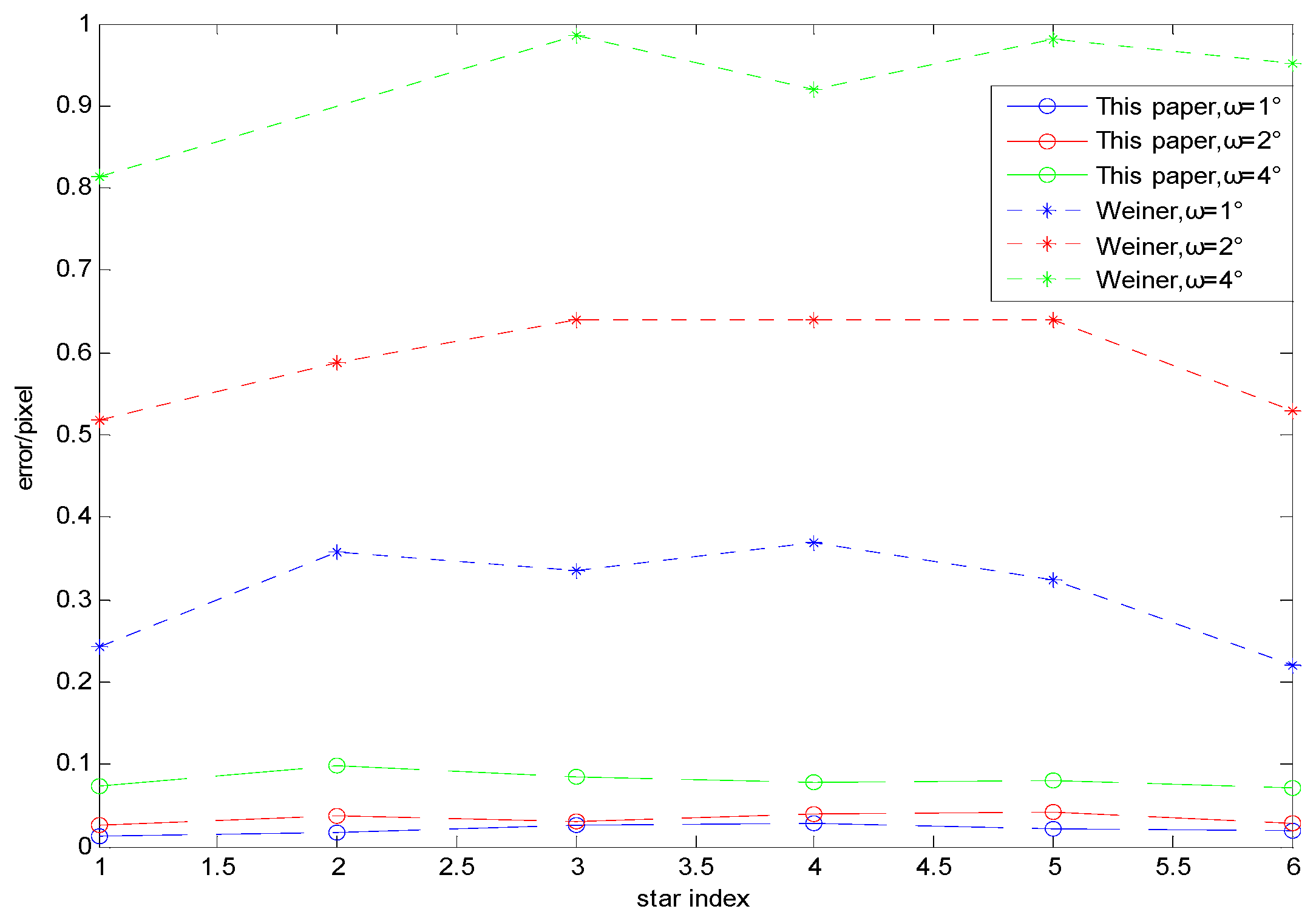

5.2. Restoration of Blurred Star Image

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Parameter | Value |
|---|---|
| FOV | 6° × 4.5° |
| Focal length | 60 mm |
| Pixel array | 800 × 600 pixels |
| Integration time | 60 ms |
| Detectable magnitude | 6.5 Mv |

| Variance of Noise | Original Image | Bayes Shrink Method | Open Operation | Our Method |
|---|---|---|---|---|
| 30 | 25.761 | 32.862 | 36.559 | 42.397 |
| 50 | 23.608 | 29.538 | 35.668 | 40.621 |
| 70 | 20.783 | 26.855 | 33.427 | 39.723 |
| 30 | 22.548 | 28.623 | 30.118 | 39.935 |
| 50 | 20.833 | 25.477 | 28.822 | 36.842 |
| 70 | 16.698 | 23.415 | 27.285 | 35.322 |
| 30 | 18.637 | 23.243 | 28.219 | 34.723 |
| 50 | 17.824 | 22.945 | 27.563 | 32.463 |
| 70 | 15.382 | 20.527 | 25.952 | 30.861 |

| Angular Velocity | Star Index | True Star Centroid | Wiener | Our Method |
|---|---|---|---|---|
| | 1 | (199.484, 294.782) | (199.727, 295.037) | (199.471, 294.807) |
| | 2 | (358.863, 319.762) | (359.221, 320.174) | (358.881, 319.742) |
| | 3 | (311.276, 383.885) | (310.941, 384.272) | (311.301, 383.551) |
| | 4 | (541.497, 299.656) | (541.866, 299.204) | (541.526, 299.627) |
| | 5 | (585.364, 455.912) | (585.039, 455.577) | (585.385, 455.937) |
| | 6 | (719.756, 582.563) | (719.975, 582.914) | (719.775, 582.585) |
| | 1 | (199.484, 294.782) | (200.001, 295.434) | (199.509, 295.03) |
| | 2 | (358.863, 319.762) | (359.451, 319.334) | (358.901, 319.794) |
| | 3 | (311.276, 383.885) | (310.635, 300.197) | (311.307, 383.91) |
| | 4 | (541.497, 299.656) | (540.858, 298.971) | (541.536, 299.678) |
| | 5 | (585.364, 455.912) | (586.005, 456.564) | (585.405, 455.96) |
| | 6 | (719.756, 582.563) | (720.285, 583.114) | (719.785, 582.529) |
| | 1 | (199.484, 294.782) | (200.297, 293.895) | (199.411, 294.708) |
| | 2 | (358.863, 319.762) | Fail | (358.961, 319.848) |
| | 3 | (311.276, 383.885) | (312.261, 384.910) | (311.191, 383.811) |
| | 4 | (541.497, 299.656) | (542.416, 300.512) | (541.418, 299.588) |
| | 5 | (585.364, 455.912) | (586.345, 454.914) | (585.283, 455.837) |
| | 6 | (719.756, 582.563) | (720.707, 583.559) | (719.685, 582.515) |
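The centroid table can be read more easily as per-star position errors. The following is a minimal sketch, assuming the error of interest is the Euclidean distance in pixels between each extracted centroid and the true centroid; the names `ROWS` and `centroid_error` are hypothetical, and only the first block of six stars is used.

```python
import math

# Rows copied from the first block of the centroid table (stars 1 to 6).
# Each entry: (true centroid, Wiener result, "Our Method" result), in pixels.
ROWS = [
    ((199.484, 294.782), (199.727, 295.037), (199.471, 294.807)),
    ((358.863, 319.762), (359.221, 320.174), (358.881, 319.742)),
    ((311.276, 383.885), (310.941, 384.272), (311.301, 383.551)),
    ((541.497, 299.656), (541.866, 299.204), (541.526, 299.627)),
    ((585.364, 455.912), (585.039, 455.577), (585.385, 455.937)),
    ((719.756, 582.563), (719.975, 582.914), (719.775, 582.585)),
]


def centroid_error(true_xy, est_xy):
    """Euclidean distance between two centroids, in pixels (assumed metric)."""
    return math.hypot(est_xy[0] - true_xy[0], est_xy[1] - true_xy[1])


wiener_errors = [centroid_error(t, w) for t, w, _ in ROWS]
ours_errors = [centroid_error(t, o) for t, _, o in ROWS]

for i, (we, oe) in enumerate(zip(wiener_errors, ours_errors), start=1):
    print(f"star {i}: Wiener {we:.3f} px, Our Method {oe:.3f} px")
```

A row marked "Fail" has no coordinates and would need to be skipped before calling `centroid_error`.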

| Index | X Axis | Y Axis | Z Axis |
|---|---|---|---|
| A | 2.4 | 2.2 | 2.6 |
| B | 2.9 | 2.8 | 2.9 |
| C | 3.3 | 3.5 | 3.2 |
| D | 3.8 | 3.9 | 3.8 |

| Frame Number | Our Method (ms) | SVPS (ms) |
|---|---|---|
| 1 | 89.645 | 169.631 |
| 2 | 84.388 | 167.864 |
| 3 | 95.452 | 175.082 |
| 4 | 103.054 | 194.967 |
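The per-frame timings can be summarized as mean runtimes and a ratio between the two methods. This is a small sketch of that arithmetic, using only the four frames in the timing table; the variable names are hypothetical, and the ratio is a plain quotient of means rather than a benchmark result.

```python
from statistics import mean

# Per-frame processing times in milliseconds, copied from the timing table.
OURS_MS = [89.645, 84.388, 95.452, 103.054]
SVPS_MS = [169.631, 167.864, 175.082, 194.967]

mean_ours = mean(OURS_MS)
mean_svps = mean(SVPS_MS)

# Ratio of mean runtimes: values above 1 mean SVPS took longer on average.
ratio = mean_svps / mean_ours

print(f"mean Our Method: {mean_ours:.3f} ms")
print(f"mean SVPS:       {mean_svps:.3f} ms")
print(f"SVPS / Our Method: {ratio:.2f}")
```

With only four frames, the ratio is indicative at best and says nothing about variance across scenes.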

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Mu, Z.; Wang, J.; He, X.; Wei, Z.; He, J.; Zhang, L.; Lv, Y.; He, D. Restoration Method of a Blurred Star Image for a Star Sensor Under Dynamic Conditions. Sensors 2019, 19, 4127. https://doi.org/10.3390/s19194127
