Subject- and Environment-Based Sensor Variability for Wearable Lower-Limb Assistive Devices

Abstract

1. Introduction

2. Methods

2.1. Data Collection

2.2. Feature Extraction

2.2.1. EMG, IMU and Goniometer Features

2.2.2. Vision-Based Features

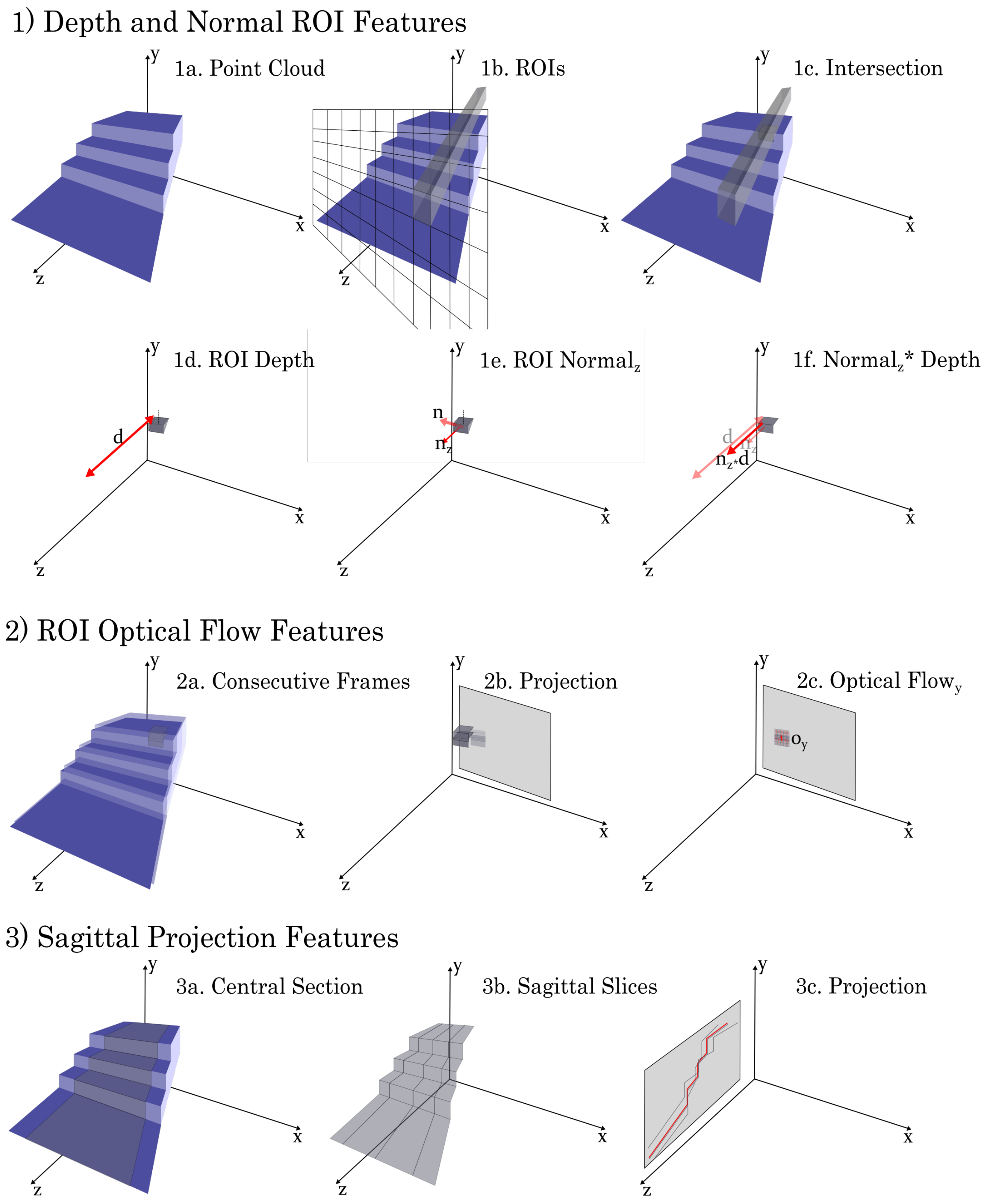

- Depth and Normal ROI features: Each frame was segmented into a 20 × 20 grid of regions of interest (ROIs) and all points within each ROI were then fit with a RANSAC [32] planar model (see Figure 2(1a–c)). Three features were then defined for each of these ROIs:

  - (a) Depth: The mean distances from the origin of the sensor to the plane in the x-, y- and z-dimensions were defined as the Depth features (Figure 2(1d) shows the z-feature). These features essentially provided the projection of the depth in the frontal and sagittal planes and were selected to provide average depth context for the field of view.

  - (b) Normal: The normal vector of the plane provides orientation context for each ROI. In particular, the magnitudes of the y- and z-components of the plane's normal vector were computed and defined as the Normal features (Figure 2(1e) shows the z-feature). These features were selected to provide orientation context that might be missing from the Depth features alone.

  - (c) Norm x Depth: The Depth and Normal features for each ROI were then combined, using a simple scalar multiplication, into a single Norm x Depth feature (Figure 2(1f)). This feature was chosen as an attempt to represent the depth and orientation content efficiently in a single feature.

- Optical Flow features: Motion across frames was computed both as the simple difference between frames and using a dense Farneback optical flow method [33]. In particular, the frontal plane projection of the depth was used for the optical flow estimation. The optical flow components in the x- and y-directions were each averaged within each ROI of a 20 × 20 grid and defined as the Optical features (Figure 2(2c)). These features were selected to provide context about the motion of the environment relative to the subject.

- Sagittal Projection features: The point cloud was filtered so that only the central two-thirds were considered, to help minimize the effect of occlusions and random objects within the field of view. All points were then projected into the sagittal plane, and the mean height at each position along the z-axis was defined as the Sagittal feature (Figure 2(3c)). This feature was selected to encode context about the overall shape of the environment (particularly the height, which was less integrated into the other features).
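The ROI plane features described above can be illustrated with a short Python sketch. This is not the authors' implementation: the function names (`fit_plane_ransac`, `roi_features`), the iteration count, the inlier tolerance and the point layout (a list of (x, y, z) tuples in the sensor frame) are assumptions made for illustration. In particular, the Depth feature is taken here as the per-axis mean of the inlier coordinates, and Norm x Depth as the product of the z-component magnitude of the normal and the z-depth; the text does not specify either choice at this level of detail.

```python
import math
import random


def fit_plane_ransac(points, n_iters=200, tol=0.01, seed=0):
    """Fit a plane n . p = d to 3-D points with a basic RANSAC loop.

    points: list of (x, y, z) tuples. n_iters, tol and seed are
    illustrative defaults, not values taken from the paper.
    Returns (unit_normal, d, inliers).
    """
    if len(points) < 3:
        raise ValueError("need at least three points to fit a plane")
    rng = random.Random(seed)
    best_normal, best_d, best_inliers = None, 0.0, []
    for _ in range(n_iters):
        p0, p1, p2 = rng.sample(points, 3)
        u = [p1[i] - p0[i] for i in range(3)]
        v = [p2[i] - p0[i] for i in range(3)]
        # Candidate normal: cross product of two in-plane edge vectors.
        n = [u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0]]
        length = math.sqrt(sum(c * c for c in n))
        if length < 1e-12:
            continue  # collinear sample, no unique plane
        n = [c / length for c in n]
        d = sum(n[i] * p0[i] for i in range(3))
        # Inliers lie within tol of the candidate plane.
        inliers = [p for p in points
                   if abs(sum(n[i] * p[i] for i in range(3)) - d) <= tol]
        if len(inliers) > len(best_inliers):
            best_normal, best_d, best_inliers = n, d, inliers
    if best_normal is None:
        raise ValueError("no non-degenerate plane found")
    return best_normal, best_d, best_inliers


def roi_features(points):
    """Depth, Normal and Norm x Depth features for one ROI (assumed definitions)."""
    normal, _, inliers = fit_plane_ransac(points)
    count = len(inliers)
    # Assumption: Depth = mean inlier coordinate along x, y and z.
    depth = tuple(sum(p[i] for p in inliers) / count for i in range(3))
    # Normal = magnitudes of the y- and z-components of the unit normal.
    normal_y, normal_z = abs(normal[1]), abs(normal[2])
    # Assumption: Norm x Depth = |n_z| * z-depth (scalar multiplication).
    return {"depth": depth,
            "normal": (normal_y, normal_z),
            "norm_x_depth": normal_z * depth[2]}


# Example: a flat patch 1 m in front of the sensor plus two stray points.
roi = [(0.1 * i, 0.1 * j, 1.0) for i in range(5) for j in range(5)]
roi += [(0.2, 0.2, 3.0), (0.1, 0.3, 0.2)]
features = roi_features(roi)
```

For a full frame, `roi_features` would be called once per cell of the 20 × 20 grid, so each frame would yield one feature set per ROI. A production system would more likely rely on an existing point cloud library for the plane fit than on this hand-written loop.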

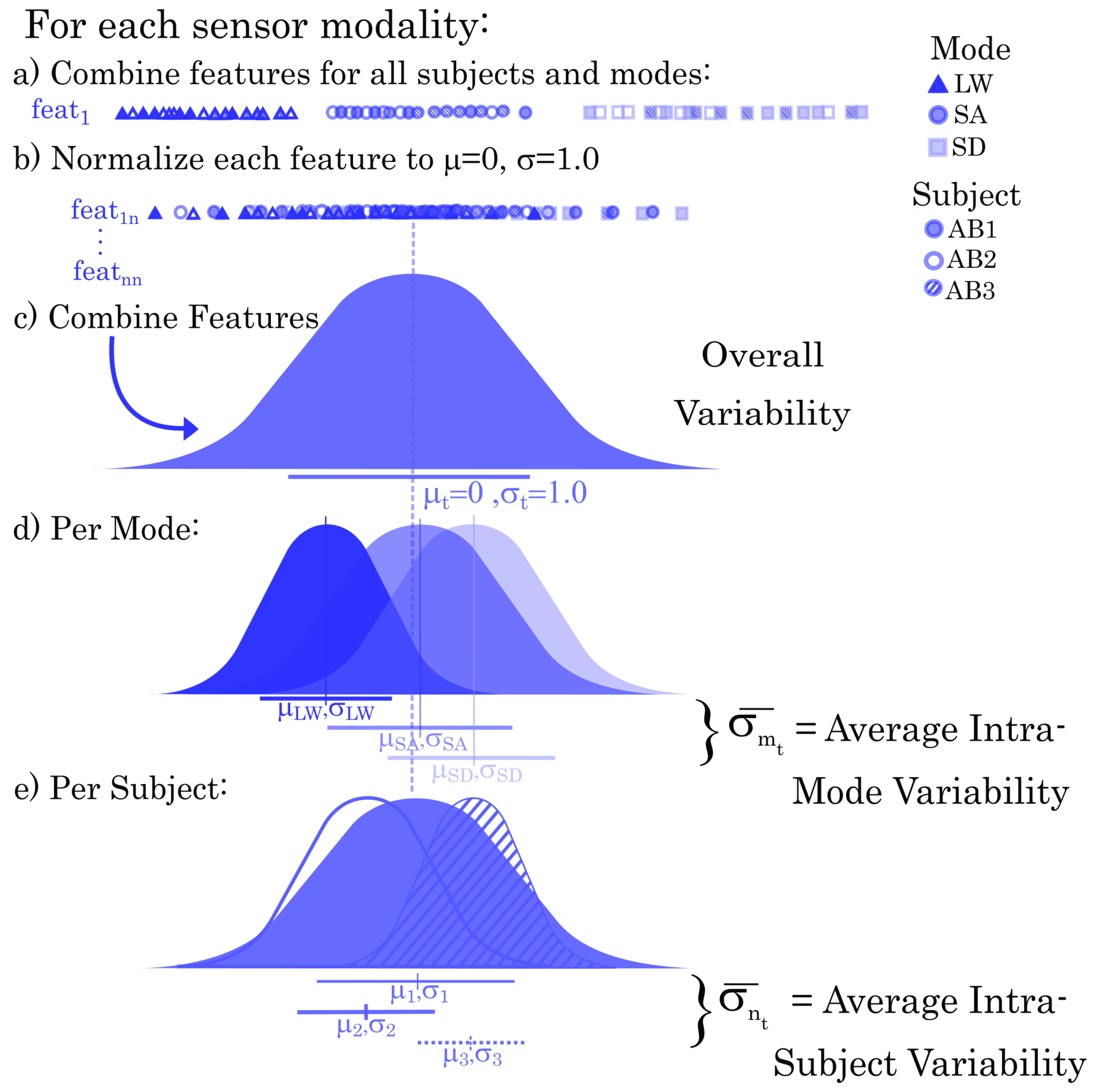
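The Optical Flow and Sagittal Projection features can be sketched in the same spirit. The code below is a hedged illustration only: the function names, the axis convention (x lateral, y vertical, z forward), the interpretation of "central two-thirds" as a crop along the lateral axis, and the bin count and range are all assumptions. The dense flow field itself is not computed here; in practice it could come from a library routine such as OpenCV's `cv2.calcOpticalFlowFarneback`, and its x- and y-components would each be passed to `grid_mean`.

```python
def frame_difference(prev_frame, curr_frame):
    """Simple per-pixel difference between two frames (lists of rows)."""
    return [[c - p for p, c in zip(prev_row, curr_row)]
            for prev_row, curr_row in zip(prev_frame, curr_frame)]


def grid_mean(field, n_rows=20, n_cols=20):
    """Average a 2-D field within each cell of an n_rows x n_cols ROI grid."""
    height, width = len(field), len(field[0])
    if height < n_rows or width < n_cols:
        raise ValueError("field is smaller than the requested grid")
    means = []
    for r in range(n_rows):
        r0, r1 = r * height // n_rows, (r + 1) * height // n_rows
        row = []
        for c in range(n_cols):
            c0, c1 = c * width // n_cols, (c + 1) * width // n_cols
            cell = [field[i][j] for i in range(r0, r1) for j in range(c0, c1)]
            row.append(sum(cell) / len(cell))
        means.append(row)
    return means


def sagittal_profile(points, n_bins=10, z_range=(0.0, 2.0)):
    """Mean height (y) per forward-distance (z) bin after a lateral crop.

    Assumption: the central two-thirds is taken along the lateral x-axis.
    Bins that receive no points are reported as None.
    """
    xs = [p[0] for p in points]
    x_min, x_max = min(xs), max(xs)
    margin = (x_max - x_min) / 6.0  # drop one-sixth on each side
    lo, hi = x_min + margin, x_max - margin
    z0, z1 = z_range
    sums, counts = [0.0] * n_bins, [0] * n_bins
    for x, y, z in points:
        if not (lo <= x <= hi) or not (z0 <= z < z1):
            continue
        # Projecting into the sagittal plane discards x and keeps (y, z).
        b = min(int((z - z0) / (z1 - z0) * n_bins), n_bins - 1)
        sums[b] += y
        counts[b] += 1
    return [s / c if c else None for s, c in zip(sums, counts)]
```

Calling `grid_mean(flow_x)` and `grid_mean(flow_y)` on the two hypothetical flow component arrays would give one pair of averaged values per ROI, while `sagittal_profile` would return a one-dimensional height profile along the walking direction.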

2.3. Normalization

2.4. Dimensionality Reduction

2.5. Sensor Modality Analysis

2.5.1. Repeatability Index

2.5.2. Separability Index

2.5.3. Mean Semi-Principal Axes

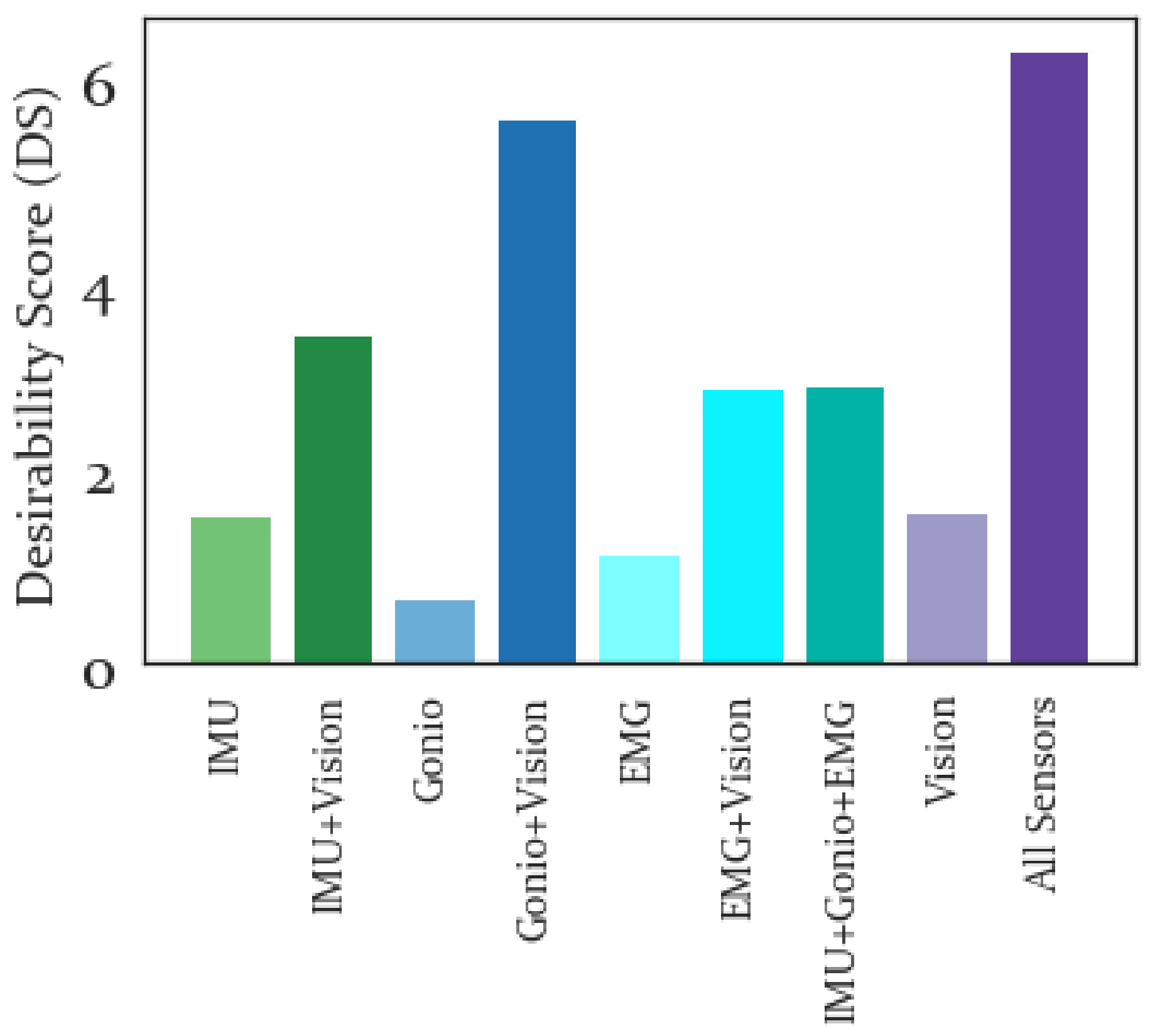

2.5.4. Desirability Score

3. Results

3.1. Normalization

3.2. Dimensionality Reduction

3.3. Sensor Modality Analysis

3.3.1. Repeatability Index

3.3.2. Separability Index

3.3.3. Mean Semi-Principal Axis

3.3.4. Desirability Score

4. Discussion

4.1. Result Implications

4.2. Future Work

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| EMG | Electromyography |
| IMU | Inertial Measurement Unit |
| LW | Level Ground Walking |
| RA | Ramp Ascent |
| RD | Ramp Descent |
| SA | Stair Ascent |
| SD | Stair Descent |
| RANSAC | RANdom SAmple Consensus |
| ROI | Region of Interest |
| RI | Repeatability Index |
| SI | Separability Index |
| MSA | Mean Semi-Principal Axis |
| DS | Desirability Score |
| HC | Heel Contact |
| TO | Toe Off |
| LDA | Linear Discriminant Analysis |
| PCA | Principal Component Analysis |
| AB | Able-Bodied |

References

1. Tucker, M.R.; Olivier, J.; Pagel, A.; Bleuler, H.; Bouri, M.; Lambercy, O.; del R Millán, J.; Riener, R.; Vallery, H.; Gassert, R. Control Strategies for Active Lower Extremity Prosthetics and Orthotics: A Review. J. Neuroeng. Rehabil. 2015, 12, 1–29.

2. Sup, F.; Bohara, A.; Goldfarb, M. Design and control of a powered transfemoral prosthesis. Int. J. Robot. Res. 2008, 27, 263–273.

3. Van der Schans, C.P.; Geertzen, J.H.; Schoppen, T.; Dijkstra, P.U. Phantom Pain and Health-Related Quality of Life in Lower Limb Amputees. J. Pain Symptom Manag. 2002, 24, 429–436.

4. Lenzi, T.; Hargrove, L.J.; Sensinger, J.W. Preliminary evaluation of a new control approach to achieve speed adaptation in robotic transfemoral prostheses. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 2049–2054.

5. Gregg, R.D.; Lenzi, T.; Hargrove, L.J.; Sensinger, J.W. Virtual constraint control of a powered prosthetic leg: From simulation to experiments with transfemoral amputees. IEEE Trans. Robot. 2014, 30, 1455–1471.

6. Hargrove, L.J.; Simon, A.M.; Young, A.J.; Lipschutz, R.D.; Finucane, S.B.; Smith, D.G.; Kuiken, T.A. Robotic leg control with EMG decoding in an amputee with nerve transfers. N. Engl. J. Med. 2013, 369, 1237–1242.

7. Huang, H.; Zhang, F.; Hargrove, L.J.; Dou, Z.; Rogers, D.R.; Englehart, K.B. Continuous Locomotion-Mode Identification for Prosthetic Legs Based on Neuromuscular-Mechanical Fusion. IEEE Trans. Biomed. Eng. 2011, 58, 2867–2875.

8. Young, A.J.; Kuiken, T.A.; Hargrove, L.J. Analysis of Using EMG And Mechanical Sensors to Enhance Intent Recognition in Powered Lower Limb Prostheses. J. Neural Eng. 2014, 11, 56021.

9. Hargrove, L.J.; Simon, A.M.; Lipschutz, R.D.; Finucane, S.B.; Kuiken, T.A. Real-time myoelectric control of knee and ankle motions for transfemoral amputees. JAMA 2011, 305, 1542–1544.

10. Markovic, M.; Dosen, S.; Popovic, D.; Graimann, B.; Farina, D. Sensor fusion and computer vision for context-aware control of a multi degree-of-freedom. J. Neural Eng. 2015, 12, 066022.

11. Massalin, Y.; Abdrakhmanova, M.; Varol, H.A. User-Independent Intent Recognition for Lower Limb Prostheses Using Depth Sensing. IEEE Trans. Biomed. Eng. 2018, 65, 1759–1770.

12. Goil, A.; Derry, M.; Argall, B.D. Using Machine Learning to Blend Human and Robot Controls for Assisted Wheelchair Navigation. In Proceedings of the 2013 IEEE 13th International Conference on Rehabilitation Robotics (ICORR), Seattle, WA, USA, 24–26 June 2013.

13. Krausz, N.E.; Lenzi, T.; Hargrove, L.J. Depth Sensing for Improved Control of Lower Limb Prostheses. IEEE Trans. Biomed. Eng. 2015, 62, 2576–2587.

14. Krausz, N.E.; Hargrove, L.J. Fusion of Depth Sensing, Kinetics and Kinematics for Intent Prediction of Lower Limb Prostheses. In Proceedings of the Conference of the Engineering in Medicine and Biology Society (EMBC), Jeju Island, Korea, 11–15 July 2017.

15. Hu, B.H.; Krausz, N.E.; Hargrove, L.J. A novel method for bilateral gait segmentation using a single thigh-mounted depth sensor and IMU. In Proceedings of the 2018 7th IEEE International Conference on Biomedical Robotics and Biomechatronics (Biorob), Enschede, The Netherlands, 26–29 August 2018; pp. 807–812.

16. Liu, M.; Wang, D.; Huang, H.H. Development of an Environment-Aware Locomotion Mode Recognition System for Powered Lower Limb Prostheses. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 24, 434–443.

17. Zhao, X.; Chen, W.; Yan, X.; Wang, J.; Wu, X. Real-Time Stairs Geometric Parameters Estimation for Lower Limb Rehabilitation Exoskeleton. In Proceedings of the 2018 Chinese Control And Decision Conference (CCDC), Shenyang, China, 9–11 June 2018; pp. 5018–5023.

18. Kleiner, B.; Ziegenspeck, N.; Stolyarov, R.; Herr, H.; Schneider, U.; Verl, A. A radar-based terrain mapping approach for stair detection towards enhanced prosthetic foot control. In Proceedings of the 2018 7th IEEE International Conference on Biomedical Robotics and Biomechatronics (Biorob), Enschede, The Netherlands, 26–29 August 2018; pp. 105–110.

19. Yan, T.; Sun, Y.; Liu, T.; Cheung, C.H.; Meng, M.Q.H. A locomotion recognition system using depth images. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 6766–6772.

20. Laschowski, B.; McNally, W.; Wong, A.; McPhee, J. Preliminary Design of an Environment Recognition System for Controlling Robotic Lower-Limb Prostheses and Exoskeletons. In Proceedings of the IEEE International Conference on Rehabilitation Robotics (ICORR), Toronto, ON, Canada, 24–28 June 2019.

21. Novo-Torres, L.; Ramirez-Paredes, J.P.; Villarreal, D.J. Obstacle Recognition using Computer Vision and Convolutional Neural Networks for Powered Prosthetic Leg Applications. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 3360–3363.

22. Zhang, K.; Xiong, C.; Zhang, W.; Liu, H.; Lai, D.; Rong, Y.; Fu, C. Environmental Features Recognition for Lower Limb Prostheses Toward Predictive Walking. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 465–476.

23. Carvalho, S.; Figueiredo, J.; Santos, C.P. Environment-Aware Locomotion Mode Transition Prediction System. In Proceedings of the 2019 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Porto, Portugal, 24–26 April 2019.

24. Khademi, G.; Mohammadi, H.; Simon, D. Gradient-Based Multi-Objective Feature Selection for Gait Mode Recognition of Transfemoral Amputees. Sensors 2019, 19, 253.

25. Liu, D.X.; Xu, J.; Chen, C.; Long, X.; Tao, D.; Wu, X. Vision-Assisted Autonomous Lower-Limb Exoskeleton Robot. IEEE Trans. Syst. Man, Cybern. Syst. 2019.

26. Hu, B.; Rouse, E.; Hargrove, L. Fusion of Bilateral Lower-Limb Neuromechanical Signals Improves Prediction of Locomotor Activities. Front. Robot. AI 2018, 5, 78.

27. Hu, B.; Rouse, E.; Hargrove, L. Benchmark datasets for bilateral lower-limb neuromechanical signals from wearable sensors during unassisted locomotion in able-bodied individuals. Front. Robot. AI 2018, 5, 14.

28. PMD. Camboard Pico Flexx. Available online: http://pmdtec.com/picofamily/ (accessed on 8 November 2019).

29. Varol, H.A.; Sup, F.; Goldfarb, M. Multiclass Real-Time Intent Recognition of a Powered Lower Limb Prosthesis. IEEE Trans. Biomed. Eng. 2010, 57, 542–551.

30. Huang, H.; Kuiken, T.A.; Lipschutz, R.D. A strategy for identifying locomotion modes using surface electromyography. IEEE Trans. Biomed. Eng. 2008, 56, 65–73.

31. Hargrove, L.; Englehart, K.; Hudgins, B. A training strategy to reduce classification degradation due to electrode displacements in pattern recognition based myoelectric control. Biomed. Signal Process. Control. 2008, 3, 175–180.

32. Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

33. Farneback, G. Two-Frame Motion Estimation Based on Polynomial Expansion. In Proceedings of the 13th Scandinavian Conference on Image Analysis (SCIA), Halmstad, Sweden, 29 June–2 July 2003; pp. 363–370.

34. Bunderson, N.E.; Kuiken, T.A. Quantification of feature space changes with experience during electromyogram pattern recognition control. IEEE Trans. Neural Syst. Rehabil. Eng. 2012, 20, 239–246.

35. Krausz, N.E.; Hargrove, L.J. A survey of teleceptive sensing for wearable assistive robotics. Sensors 2019. in review.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Krausz, N.E.; Hu, B.H.; Hargrove, L.J. Subject- and Environment-Based Sensor Variability for Wearable Lower-Limb Assistive Devices. Sensors 2019, 19, 4887. https://doi.org/10.3390/s19224887
