Step by Step Towards Effective Human Activity Recognition: A Balance between Energy Consumption and Latency in Health and Wellbeing Applications

Abstract

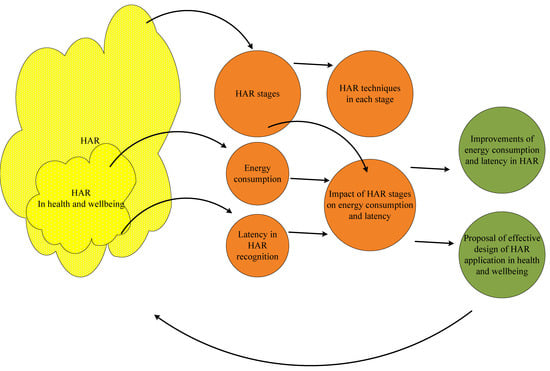

1. Introduction

2. Research Methodology

3. Impact of Human Activity Recognition (HAR) Stages on Energy Consumption and Latency

3.1. Overview of HAR Stages

3.1.1. Data Collection and Filtering

3.1.2. Data Segmentation

3.1.3. Feature Extraction

3.1.4. Dimensionality Reduction

3.1.5. Classification

3.2. Energy Consumption and Latency per HAR Stage

3.2.1. Impact of HAR Stages on Energy Consumption

- Data collection and filtering stage: In the data collection and filtering stage, the set of sensors used affects energy consumption [62,110]. Reducing the number of sensors can improve the energy efficiency of the sensor device [61], whilst adding events from new sensor types can improve accuracy [54]. The number of sensors also affects the ability to detect complex activities, which is easier with more than a single sensor unit [10]. In health and wellbeing applications, new sensor types (especially wearables) can be impractical for elderly people [52] because they cause discomfort. Therefore, choosing the number of sensors is a complex problem in HAR. In smartphone-based data collection, energy consumption cannot be reduced by removing sensors, since the number of sensors is already limited. In non-contact sensing, the number of sensors depends on their type and on the area covered by HAR. With this in mind, some authors measured the energy efficiency of HAR approaches with wearables [8,10,14,30,31,32], while others analyzed the energy consumption of smartphone-based activity recognition [45,111,112].

- Data segmentation stage: Segmentation approaches also affect energy consumption, which can be estimated from the computational complexity of the segmentation algorithm. As highlighted in Section 3.2, Piecewise Linear Representation (PLR) cannot be used as a universal segmentation approach because of its high computational complexity (and, consequently, high energy consumption) [63]. Many online Piecewise Linear Approximation (PLA) approaches have been reported in the literature, and some of them were introduced to reduce energy consumption in Wireless Sensor Networks (WSNs) [32]. In addition, increasing the window size improved the recognition accuracy of various complex activities, while in most cases it had a smaller effect on simple activities [113]. Therefore, the choice of activities in HAR affects the choice of segmentation approach and, consequently, the computational complexity and energy consumption of this stage.

- Feature extraction stage: The extraction approach and the type of features extracted from each data segment can influence both the computational load (energy consumption) and the classification accuracy [78] of HAR. Therefore, the choice of feature extraction approach influences the battery life [5] of sensor devices. Regarding the type of extracted features, time-domain features reduce complexity because they avoid framing, windowing, filtering, Fourier transformation, liftering, etc. of the data [29]. Consequently, they can be deployed on nodes with limited resources [29], which is the case in many practical HAR applications [114]. However, they have been shown to be prone to measurement and calibration errors [29], which lowers HAR accuracy. Frequency-domain features are less susceptible to variations in signal quality [21] and perform more robustly [2]. Their main drawback appears to be the lack of temporal description [70]. In summary, time-domain features consume less energy than frequency-domain features [10]. Other energy-reduction techniques mentioned in the literature include the use of locally extracted features [115] and Fast Fourier Transform (FFT)-based features [32].

- Feature selection stage: Feature selection generally adds computational and memory demands of its own, because it operates on objects represented as high-dimensional feature vectors. This stage therefore affects energy consumption through the computational complexity of the selected algorithm, although the resulting reduction in dimensionality can pay off: for example, dimensionality reduction using Principal Component Analysis (PCA) helps reduce overall energy consumption [116].

- Classification stage: Classification approaches affect energy consumption through the computational complexity of the selected classification algorithms. For example, the complexity of RF was higher than that of SVM and NN classifiers, resulting in higher energy consumption [10].
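
To make the cost of online segmentation concrete, the following is a minimal sketch of one classic online PLA scheme, the greedy sliding-window variant, written in Python. It is not the method proposed in [32] nor the PLR formulation in [63]; the function names and the `max_error` threshold are hypothetical choices for illustration. Each new sample extends the current segment until the largest vertical deviation from the straight line joining the segment's endpoints exceeds the threshold, at which point the segment is closed.

```python
from typing import List, Sequence, Tuple

# (start index, end index), both inclusive; consecutive segments share an endpoint
Segment = Tuple[int, int]


def _max_interp_error(x: Sequence[float], start: int, end: int) -> float:
    """Largest vertical distance between the samples strictly inside
    x[start..end] and the line joining (start, x[start]) and (end, x[end])."""
    if end - start < 2:
        return 0.0
    slope = (x[end] - x[start]) / (end - start)
    return max(abs(x[i] - (x[start] + slope * (i - start)))
               for i in range(start + 1, end))


def sliding_window_pla(x: Sequence[float], max_error: float) -> List[Segment]:
    """Hypothetical greedy sliding-window PLA.

    The error is recomputed over the whole open segment for every new sample,
    so the work grows quadratically with segment length; this recomputation
    is the kind of cost that drives energy consumption on a sensor node.
    """
    segments: List[Segment] = []
    if len(x) < 2:
        return segments
    start, end = 0, 1
    while end < len(x):
        if _max_interp_error(x, start, end) > max_error:
            # The newest sample broke the bound: close the segment one sample earlier
            segments.append((start, end - 1))
            start = end - 1
        else:
            end += 1
    segments.append((start, len(x) - 1))
    return segments


# A rise followed by a fall is approximated by two line segments
print(sliding_window_pla([0, 1, 2, 3, 2, 1, 0], max_error=0.1))  # [(0, 3), (3, 6)]
```

Tightening `max_error` produces more, shorter segments (a more faithful but less compact representation); loosening it produces fewer, longer segments, whose incremental error checks become more expensive in this naive version.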
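
The contrast between time-domain and frequency-domain features in the feature extraction stage can be illustrated with the sketch below (Python standard library only). The feature set, the 50 Hz sampling rate, the 2 s window, and the function names are assumptions for illustration, not the features used in [10], [29], or [32]. The time-domain features need a single pass over the window, whereas the frequency-domain features first require a Fourier transform; the naive discrete Fourier transform used here costs O(n²) operations, and even an FFT costs O(n log n).

```python
import cmath
import math
from typing import Dict, Sequence


def time_domain_features(window: Sequence[float]) -> Dict[str, float]:
    """Simple statistics computed directly on the raw samples, O(n) each."""
    n = len(window)
    mean = sum(window) / n
    var = sum((v - mean) ** 2 for v in window) / n
    return {
        "mean": mean,
        "std": math.sqrt(var),
        "min": min(window),
        "max": max(window),
        "rms": math.sqrt(sum(v * v for v in window) / n),
    }


def frequency_domain_features(window: Sequence[float], fs: float) -> Dict[str, float]:
    """Features taken from the magnitude spectrum; requires len(window) >= 2.

    A naive DFT is used for transparency (O(n^2)); an FFT would be used in practice.
    """
    n = len(window)
    mean = sum(window) / n
    centred = [v - mean for v in window]  # remove the DC offset (e.g. gravity)
    # One-sided magnitude spectrum for bins k = 0 .. n // 2
    mags = [abs(sum(centred[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2 + 1)]
    peak = max(range(1, len(mags)), key=lambda k: mags[k])  # skip the DC bin
    return {
        "dominant_freq_hz": peak * fs / n,  # bin spacing is fs / n
        "dominant_magnitude": mags[peak],
    }


# Hypothetical 2 s window sampled at 50 Hz containing a 2 Hz oscillation
fs = 50.0
window = [math.sin(2 * math.pi * 2.0 * t / fs) for t in range(100)]
print(time_domain_features(window)["std"])                        # approx. 0.7071
print(frequency_domain_features(window, fs)["dominant_freq_hz"])  # 2.0
```

On a resource-constrained node, this difference in per-window work is one plausible reason why time-domain features are reported to consume less energy [10].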
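
Dimensionality reduction with PCA, mentioned for the feature selection stage, can be sketched in a few dozen lines. The version below is a minimal, dependency-free illustration that assumes the feature matrix fits in memory; it finds principal components by power iteration with deflation, which is one of several ways to compute PCA and not necessarily the one used in [116]. All names and the toy data are hypothetical. Once fitted, projecting a new d-dimensional feature vector onto k components costs only d·k multiplications, and the downstream classifier receives k instead of d features.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[Vector]


def _mean_and_covariance(rows: Sequence[Sequence[float]]) -> Tuple[Vector, Matrix]:
    """Column means and sample covariance matrix (requires at least 2 rows)."""
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    cov = [[0.0] * d for _ in range(d)]
    for r in rows:
        c = [r[j] - mean[j] for j in range(d)]
        for i in range(d):
            for j in range(d):
                cov[i][j] += c[i] * c[j] / (n - 1)
    return mean, cov


def _top_eigenpair(m: Matrix, iters: int = 200) -> Tuple[float, Vector]:
    """Power iteration on a symmetric matrix. The deterministic start vector
    must not be orthogonal to the leading eigenvector (true for typical data)."""
    d = len(m)
    v = [float(i + 1) for i in range(d)]
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(iters):
        w = [sum(m[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # no variance left in the (deflated) matrix
            break
        v = [x / norm for x in w]
    eigval = sum(v[i] * sum(m[i][j] * v[j] for j in range(d)) for i in range(d))
    return eigval, v


def pca_fit(rows: Sequence[Sequence[float]], k: int) -> Tuple[Vector, List[Vector]]:
    """Return the mean and the top-k principal directions (assumes k <= rank)."""
    mean, cov = _mean_and_covariance(rows)
    d = len(mean)
    components: List[Vector] = []
    for _ in range(k):
        lam, v = _top_eigenpair(cov)
        components.append(v)
        # Deflation: remove the found direction so the next pass finds the next one
        cov = [[cov[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    return mean, components


def pca_transform(rows: Sequence[Sequence[float]], mean: Vector,
                  components: List[Vector]) -> List[Vector]:
    """Project each centred feature vector onto the principal directions."""
    return [[sum((r[j] - mean[j]) * c[j] for j in range(len(mean))) for c in components]
            for r in rows]


# Hypothetical 3-feature vectors whose variance lies along a single direction
rows = [[float(t), 2.0 * t, 0.0] for t in range(-5, 6)]
mean, comps = pca_fit(rows, k=1)
print(pca_transform(rows, mean, comps)[:3])  # projections equal sqrt(5) * t
```

Power iteration is used only to keep the sketch dependency-free; a numerical library's eigensolver or SVD would normally be preferred.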

3.2.2. Impact of HAR Stages on Latency

- Data segmentation stage: In this stage, latency can be reduced using advanced data segmentation methods [39]. The choice of window size strongly influences latency during HAR; however, the optimal size is not known a priori [10]. Intuitively, decreasing the window size speeds up activity detection [98] and lowers energy needs [13]. However, short windows incur higher overhead because the recognition algorithm is triggered more frequently. For the popular sliding window technique, a window size of 1–2 s can offer the best tradeoff between accuracy and recognition latency [10].

- Classification stage: Classification algorithms also affect latency during HAR. The NN classifier shows long latency during the testing stage, whereas the RF, ANN, and SVM classifiers behave similarly to one another [80].
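
The window-size tradeoff described for the data segmentation stage can be made concrete with a small sketch of the sliding window technique. The 50 Hz sampling rate, the 50% overlap, and all function names are illustrative assumptions rather than settings reported in [10]. The helper reports how long the first window takes to fill (a lower bound on recognition latency) and how often the classifier is triggered.

```python
from typing import Dict, Iterator, List, Sequence, Tuple


def _size_and_step(fs: float, window_s: float, overlap: float) -> Tuple[int, int]:
    """Window length and hop in samples; overlap is a fraction in [0, 1)."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    size = max(1, int(round(window_s * fs)))
    step = max(1, int(round(size * (1.0 - overlap))))
    return size, step


def sliding_windows(samples: Sequence[float], fs: float, window_s: float,
                    overlap: float) -> Iterator[List[float]]:
    """Yield consecutive fixed-length windows; a trailing partial window is dropped."""
    size, step = _size_and_step(fs, window_s, overlap)
    for start in range(0, len(samples) - size + 1, step):
        yield list(samples[start:start + size])


def window_timing(fs: float, window_s: float, overlap: float) -> Dict[str, float]:
    """Timing consequences of a hypothetical window configuration."""
    size, step = _size_and_step(fs, window_s, overlap)
    return {
        "first_decision_s": size / fs,     # a window must be full before classifying
        "decision_interval_s": step / fs,  # time between consecutive classifications
        "classifier_calls_per_min": 60.0 * fs / step,
    }


for w in (1.0, 2.0, 4.0):
    print(w, window_timing(fs=50.0, window_s=w, overlap=0.5))
```

Under these assumptions, halving the window halves the time to the first decision but doubles the number of classifier invocations per minute, which is the overhead of short windows noted above.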

3.3. Summary

4. The Optimization of Energy Consumption and Latency in HAR

4.1. Improvements of Energy Consumption and Latency in HAR

4.2. Proposal of an Effective Design of a HAR Application in Health and Wellbeing

4.3. Summary

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Zhao, S.; Li, W.; Cao, J.A. User-Adaptive Algorithm for Activity Recognition Based on K-Means Clustering, Local Outlier Factor, and Multivariate Gaussian Distribution. Sensors 2018, 18, 1850. [Google Scholar] [CrossRef] [PubMed]

- Sukor, A.S.A.; Zakaria, A.; Rahim, N.A. Activity Recognition using Accelerometer Sensor and Machine Learning Classifiers. In Proceedings of the 2018 IEEE 14th International Colloquium on Signal Processing & It’s Applications, Batu Feringghi, Malaysia, 9–10 March 2018. [Google Scholar]

- Mehrang, S.; Pietilä, J.; Korhonen, I. An Activity Recognition Framework Deploying the Random Forest Classifier and A Single Optical Heart Rate Monitoring and Triaxial Accelerometer Wrist-Band. Sensors 2018, 18, 613. [Google Scholar] [CrossRef] [PubMed]

- Li, F.; Shirahama, K.; Adeel Nisar, M.; Köping, L.; Grzegorzek, M. Comparison of Feature Learning Methods for Human Activity Recognition Using Wearable Sensors. Sensors 2018, 18, 679. [Google Scholar] [CrossRef] [PubMed]

- Lara, O.D.; Labrador, M.A. A Survey on Human Activity Recognition using Wearable Sensors. IEEE Commun. Surv. Tutor. 2012, 15, 1192–1209. [Google Scholar] [CrossRef]

- Garcia-Ceja, E.; Zia Uddin, M.; Torreseb, J. Classification of Recurrence Plots’ Distance Matrices with a Convolutional Neural Network for Activity Recognition. Procedia Comput. Sci. 2018, 130, 157–163. [Google Scholar] [CrossRef]

- Yao, L.; Sheng, Q.Z.; Li, X.; Gu, T.; Tan, M.; Wang, X.; Wang, S.; Ruan, W. Compressive Representation for Device-Free Activity Recognition with Passive RFID Signal Strength. IEEE Trans. Mob. Comput. 2017, 10, 293–306. [Google Scholar] [CrossRef]

- Rault, T.; Bouabdallah, A.; Challal, Y.; Marin, F. A survey of energy-efficient context recognition systems using wearable sensors for healthcare applications. Pervasive Mob. Comput. 2017, 37, 23–44. [Google Scholar] [CrossRef]

- Jourdan, T.; Boutet, A.; Frindel, C. Toward privacy in IoT mobile devices for activity recognition. In Proceedings of the 15th EAI International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, New York, NY, USA, 12–14 November 2019. [Google Scholar]

- Ding, G.; Tian, J.; Wu, J.; Zhao, Q.; Xie, L. Energy Efficient Human Activity Recognition Using Wearable Sensors. In Proceedings of the 2018 IEEE Wireless Communications and Networking Conference Workshops (WCNCW), Barcelona, Spain, 15–18 April 2018. [Google Scholar]

- Su, X.; Tong, H.; Ji, P. Activity Recognition with Smartphone Sensors. Tsinghua Sci. Technol. 2014, 19, 235–249. [Google Scholar]

- Bulling, A.; Blanke, U.; Schiele, B. A tutorial on human activity recognition using body-worn inertial sensors. ACM Comput. Surv. (CSUR) 2014, 46, 33. [Google Scholar] [CrossRef]

- Banos, O.; Galvez, J.-M.; Damas, M.; Pomares, H.; Rojas, I. Window Size Impact in Human Activity Recognition. Sensors 2014, 14, 6474–6499. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4029702/ (accessed on 12 July 2019). [CrossRef]

- Cheng, W.; Erfani, S.; Zhang, R.; Ramamohanarao, K. Learning Datum-Wise Sampling Frequency for Energy-Efficient Human Activity Recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018. [Google Scholar]

- Nweke, H.F.; Teh, Y.W.; Al-garadi, M.A. Deep Learning Algorithms for Human Activity Recognition using Mobile and Wearable Sensor Networks: State of the Art and Research Challenges. Expert Syst. Appl. 2018, 105, 233–261. [Google Scholar] [CrossRef]

- Kikhia, B.; Gomez, M.; Jiménez, L.L.; Hallberg, J.; Koronen, N.; Synnes, K. Analyzing Body Movements within the Laban Effort Framework Using a Single Accelerometer. Sensors 2014, 14, 5725–5741. [Google Scholar] [CrossRef] [PubMed]

- Saha, J.; Chowdhury, C.; Chowdhury, I.R.; Biswas, S.; Aslam, N. An Ensemble of Condition Based Classifiers for Device Independent Detailed Human Activity Recognition Using Smartphones. Information 2018, 9, 94. [Google Scholar] [CrossRef]

- Nguyen, H.; Lebel, K.; Boissy, P.; Bogard, S.; Goubault, E.; Duval, C. Auto detection and segmentation of daily living activities during a Timed Up and Go task in people with Parkinson’s disease using multiple inertial sensors. J. Neuroeng. Rehabil. 2017, 14, 26–39. [Google Scholar] [CrossRef] [PubMed]

- Twomey, A.; Diethe, T.; Fafoutis, X.; Elsts, A.; McConville, R.; Flach, P.; Craddock, I. A Comprehensive Study of Activity Recognition Using Accelerometers. Informatics 2018, 5, 27. [Google Scholar] [CrossRef]

- Allen, F.R.; Ambikairajah, E.; Lovell, N.H.; Celler, B.G. Classification of a known sequence of motions and postures from accelerometry data using adapted Gaussian mixture models. Physiol. Meas. 2006, 27, 935–951. [Google Scholar] [CrossRef]

- Dobbins, C.; Rawassizadeh, R. Towards Clustering of Mobile and Smartwatch Accelerometer Data for Physical Activity Recognition. Informatics 2018, 5, 29. Available online: https://www.mdpi.com/2227-9709/5/2/29 (accessed on 12 July 2019). [CrossRef]

- Jordao, A.; Borges Torres, L.A.; Schwartz, W.R. Novel approaches to human activity recognition based on accelerometer data. Signal Image Video Process. 2018, 12, 1387–1394. [Google Scholar] [CrossRef]

- Choi, H.; Wang, Q.; Toledo, M.; Turaga, P.; Buman, M.; Srivastava, A. Temporal Alignment Improves Feature Quality: An Experiment on Activity Recognition with Accelerometer Data. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018. [Google Scholar]

- Cleland, I.; Kikhia, B.; Nugent, C.; Boytsov, A.; Hallberg, J.; Synnes, K.; McClean, S.; Finlay, D. Optimal Placement of Accelerometers for the Detection of Everyday Activities. Sensors 2013, 13, 9183–9200. Available online: https://www.ncbi.nlm.nih.gov/pubmed/23867744 (accessed on 12 July 2019). [CrossRef]

- Fanchamps, M.H.J.; Horemans, H.L.D.; Ribbers, G.M.; Stam, H.J.; Bussmann, J.B.J. The Accuracy of the Detection of Body Postures and Movements Using a Physical Activity Monitor in People after a Stroke. Sensors 2018, 18, 2167. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6069255/ (accessed on 12 July 2019). [CrossRef]

- Khojasteh, S.B.; Villar, J.R.; Chira, C.; González, V.M.; de la Cal, E. Improving Fall Detection Using an On-Wrist Wearable Accelerometer. Sensors 2018, 18, 1350. [Google Scholar] [CrossRef] [PubMed]

- Zheng, Y. An activity recognition algorithm based on energy expenditure model. In Proceedings of the 3rd International Conference on Mechatronics, Robotics and Automation. Advances in Computer Science Research, Shenzhen, China, 20–21 April 2015. [Google Scholar]

- Avci, A.; Bosch, S.; Marin-Perianu, M.; Marin-Perianu, R.; Havinga, P. Activity Recognition Using Inertial Sensing for Healthcare, Wellbeing and Sports Applications: A Survey. In Proceedings of the 23th International Conference on Architecture of Computing Systems 2010, Hannover, Germany, 22–23 February 2010. [Google Scholar]

- Dargie, W. Analysis of Time and Frequency Domain Features of Accelerometer Measurements. Proceedings of 18th International Conference on Computer Communications and Networks, San Francisco, CA, USA, 3–6 August 2009. [Google Scholar]

- Rezaie, H.; Ghassemian, M. An Adaptive Algorithm to Improve Energy Efficiency in Wearable Activity Recognition Systems. IEEE Sens. J. 2017, 17, 5315–5323. [Google Scholar] [CrossRef]

- Ghasemzadeh, H.; Fallahzadeh, R.; Jafari, R. A Hardware-Assisted Energy-Efficient Processing Model for Activity Recognition Using Wearables. ACM Trans. Des. Autom. Electron. Syst. (TODAES) 2016, 2. Available online: https://dl.acm.org/citation.cfm?id=2886096 (accessed on 12 July 2019). [CrossRef]

- Grützmacher, F.; Beichler, B.; Hein, A.; Kirste, T.; Haubelt, C. Time and Memory Efficient Online Piecewise Linear Approximation of Sensor Signals. Sensors 2018, 18, 1672. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6022087/ (accessed on 12 July 2019). [CrossRef] [PubMed]

- Suto, J.; Oniga, S.; Sitar, P. Feature Analysis to Human Activity Recognition. Int. J. Comput. Commun. Control. 2017, 2, 116–130. [Google Scholar] [CrossRef]

- Chowdhury, A.K.; Tjondronegoro, D.; Chandran, V.; Trost, S.G. Physical Activity Recognition using Posterioradapted Class-based Fusion of Multi Accelerometers data. IEEE J. Biomed Health Inform. 2018, 22, 678–685. Available online: https://www.ncbi.nlm.nih.gov/pubmed/28534801 (accessed on 12 July 2019). [CrossRef]

- Cao, J.; Li, W.; Ma, C.; Tao, Z. Optimizing multi-sensor deployment via ensemble pruning for wearable activity recognition. Inf. Fusion 2018, 41, 68–79. [Google Scholar] [CrossRef]

- Rokni, S.A.; Ghasemzadeh, H. Autonomous Training of Activity Recognition Algorithms in Mobile Sensors: A Transfer Learning Approach in Context-Invariant Views. IEEE Trans. Mob. Comput. 2018, 17, 1764–1777. [Google Scholar] [CrossRef]

- Rueda, M.F.; Grzeszick, R.; Fink, G.A.; Feldhorst, S.; ten Hompel, M. Convolutional Neural Networks for Human Activity Recognition Using Body-Worn Sensors. Informatics 2018, 5, 26. [Google Scholar] [CrossRef]

- Khatun, S.; Morshed, B.I. Fully-Automated Human Activity Recognition with Transition Awareness from Wearable Sensor Data for mHealth. In Proceedings of the 2018 IEEE International Conference on Electro/Information Technology (EIT), Rochester, MI, USA, 3–5 May 2018. [Google Scholar]

- De-la-Hoz-Franco, E.; Ariza-Colpas, P.; Quero, J.M.; Espinilla, M. Sensor-Based Datasets for Human Activity Recognition–A Systematic Review of Literature. IEEE Access 2018, 6, 59192–59210. Available online: https://ieeexplore.ieee.org/document/8478653 (accessed on 12 July 2019). [CrossRef]

- Chen, S.; Lach, J.; Lo, B.; Yang, G.-Z. Toward Pervasive Gait Analysis with Wearable Sensors: A Systematic Review. IEEE J. Biomed. Health Inform. 2016, 20, 1521–1537. Available online: https://ieeexplore.ieee.org/document/7574303 (accessed on 12 July 2019). [CrossRef] [PubMed]

- Dobkin, B.H. Wearable motion sensors to continuously measure real-world physical activities. Curr. Opin. Neurol. 2013, 26, 602–608. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4035103/ (accessed on 12 July 2019). [CrossRef] [PubMed]

- Scheurer, S.; Tedesco, S.; Brown, K.N.; O’Flynn, B. Human Activity Recognition for Emergency First Responders via Body-Worn Inertial Sensors. In Proceedings of the 2017 IEEE 14th International Conference on Wearable and Implantable Body Sensor Networks (BSN), Eindhoven, The Netherlands, 9–12 May 2017. [Google Scholar]

- Oniga, S.; Sütő, J. Human activity recognition using neural networks. In Proceedings of the 2014 15th International Carpathian Control Conference (ICCC), Velke Karlovice, Czech Republic, 28–30 May 2014. [Google Scholar]

- Al Machot, F.; Ranasinghe, S.; Plattner, J.; Jnoub, N. Human Activity Recognition based on Real Life Scenarios. In Proceedings of the CoMoRea 2018, IEEE International Conference on Pervasive Computing and Communications (PerCom), Athens, Greece, 19–23 March 2018. [Google Scholar]

- Liang, Y.; Zhou, X.; Yu, Z.; Guo, B.; Yang, Y. Energy Efficient Activity Recognition Based on Low Resolution Accelerometer in Smart Phones. In Proceedings of the GPC 2012: Advances in Grid and Pervasive Computing, International Conference on Grid and Pervasive Computing, Uberlândia, Brazil, 26–28 May 2012; Miani, R., Camargos, L., Zarpelão, B., Rosas, E., Pasquini, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2019. [Google Scholar]

- Usharani, J.; Sakthivel, U. Human Activity Recognition using Android Smartphone. In Proceedings of the 1st International Conference on Innovations in Computing & Networking (ICICN-16), Mysore Road, Bengalura, 12–13 May 2016. [Google Scholar]

- Chako, A.; Kavitha, R. Activity Recognition using Accelerometer and Gyroscope Sensor Data. Int. J. Comput. Tech. 2017, 4, 23–28. Available online: http://oaji.net/articles/2017/1948-1514030944.pdf (accessed on 12 July 2019).

- Mandong, A.; Minir, U. Smartphone Based Activity Recognition using K-Nearest Neighbor Algorithm. In Proceedings of the International Conference of Engineering Technologies (ICENTE’18), Konya, Turkey, 25–27 October 2019. [Google Scholar]

- Mughal, F.T. Latest trends in human activity recognition and behavioral analysis using different types of sensors. Int. J. Adv. Electron. Comput. Sci. 2018, 5, 2393–2835. [Google Scholar]

- Akter, S.S.; Holder, L.B.; Cook, D.J. Springer Nature. In Proceedings of the Activity Recognition Using Graphical Features from Smart Phone Sensor, International Conference on Internet of Things, Seattle, WA, USA, 25–30 June 2014; Georgakopoulos, D., Zhang, L.-J., Eds.; Springer: Berlin/Heidelberg, Germany, 2018. [Google Scholar]

- Allet, L.; Knols, R.H.; Shirato, K.; de Bruin, E.D. Wearable Systems for Monitoring Mobility-Related Activities in Chronic Disease: A Systematic Review. Sensors 2010, 10, 9026–9052. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Cero Dinarević, E.; Baraković Husić, J.; Baraković, S. Issues of Human Activity Recognition in Healthcare. In Proceedings of the 2019 18th International Symposium INFOTEH-JAHORINA (INFOTEH), East Sarajevo, Bosnia and Herzegovina, 20–22 March 2019. [Google Scholar]

- Walse, K.H.; Dharaskar, R.V.; Thakare, V.M. Performance Evaluation of Classifiers on WISDM Dataset for Human Activity Recognition. In Proceedings of the Second International Conference on Information and Communication Technology for Competitive Strategies, Udaipur, India, 4–5 March 2016. [Google Scholar]

- Niu, X.; Wang, Z.; Pan, Z. Extreme Learning Machine based Deep Model for Human Activity Recognition with Wearable Sensors. Comput. Sci. Eng. 2018, 21, 16–25. [Google Scholar] [CrossRef]

- Andrey, I. Real-time human activity recognition from accelerometer data using Convolutional Neural Networks. Appl. Soft Comput. 2018, 62, 915–922. [Google Scholar]

- Garcia-Ceja, E.; Brena, R. Activity Recognition Using Community Data to Complement Small Amounts of Labeled Instances. Sensors 2016, 16, 877. [Google Scholar] [CrossRef] [Green Version]

- Mohamed, R.; Zainudin, M.N.S.; Sulaiman, M.N.; Perumal, T.; Mustapha, N. Multi-label classification for physical activity recognition from various accelerometer sensor positions. J. Inf. Commun. Technol. 2018, 17, 209–231. [Google Scholar]

- Jain, A.; Kanhangad, V. Human Activity Classification in Smartphones using Accelerometer and Gyroscope Sensors. IEEE Sens. J. 2018, 18, 1169–1177. [Google Scholar] [CrossRef]

- Ali, H.H.; Moftah, H.M.; Youssif, A.A. Depth-based human activity recognition: A comparative perspective study on feature extraction. Future Comput. Inform. J. 2018, 3, 51–67. [Google Scholar] [CrossRef]

- Subasi, A.; Radhwan, M.; Kurdi, R.; Khateeb, K. IoT based Mobile Healthcare System for Human Activity Recognition. In Proceedings of the 2018 15th Learning and Technology Conference (L&T), Jeddah, Saudi Arabia, 25–26 February 2018. [Google Scholar]

- Khalifa, S.; Lan, G.; Hassan, M.; Seneviratne, A. HARKE: Human Activity Recognition from Kinetic Energy Harvesting Data in Wearable Devices. IEEE Trans. Mob. Comput. 2018, 17, 1353–1368. [Google Scholar] [CrossRef]

- Alzahrani, M.; Kammoun, S. Human Activity Recognition: Challenges and Process Stages. Int. J. Innov. Res. Comput. Commun. Eng. 2016, 4, 1111–1118. Available online: http://www.rroij.com/open-access/human-activity-recognition-challenges-and-process-stages-.pdf (accessed on 12 July 2019).

- Guerrero, J.L.; Berlanga, A.; Garcıa, J.; Molina, J.M. Piecewise Linear Representation Segmentation as a Multiobjective Optimization Problem. In Distributed Computing and Artificial Intelligence. Advances in Intelligent and Soft Computing; De Leon, F., de Carvalho, A.P., Rodríguez-González, S., De Paz Santana, J.F., Rodríguez, J.M.C., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; Volume 79, pp. 267–274. [Google Scholar]

- Nguyen, N.D.; Bui, D.T.; Truong, P.H.; Jeong, G.-M. Position-Based Feature Selection for Body Sensors regarding Daily Living Activity Recognition. J. Sens. 2018, 2018. [Google Scholar] [CrossRef] [Green Version]

- Gravina, R.; Alinia, P.; Ghasemzadeh, H.; Fortino, G. Multi-sensor fusion in body sensor networks: State-of-the-art and research challenges. Inf. Fusion 2017, 35, 68–80. Available online: https://www.sciencedirect.com/science/article/pii/S156625351630077X (accessed on 12 July 2019). [CrossRef]

- Bharti, P.; De, D.; Chellappan, S.; Das, S.K. HuMAn: Complex Activity Recognition with Multi-Modal Multi-Positional Body Sensing. IEEE Trans. Mob. Comput. 2018, 18, 857–870. Available online: https://ieeexplore.ieee.org/document/8374816 (accessed on 12 July 2019). [CrossRef]

- Shaolin, M.; Scholten, H.; Having, P.J. Towards physical activity recognition using smartphone sensors. In Proceedings of the 2013 IEEE 10th International Conference on Ubiquitous Intelligence and Computing (UIC), Vietri sul Mare, Italy, 18–20 December 2013. [Google Scholar]

- Sun, W.; Cai, Z.; Li, Y.; Liu, F.; Fang, S.; Wang, G. Security and Privacy in the Medical Internet of Things: A Review. Secur. Commun. Netw. 2018, 2018, 9. [Google Scholar] [CrossRef]

- Sensorweb. Available online: http://sensorweb.engr.uga.edu/wp-content/uploads/2018/06/shi2018dynamic.pdf (accessed on 15 July 2019).

- Nakisa, B.; Rastgoo, M.N.; Tjondronegoro, D.; Chandran, V. Evolutionary computation algorithms for feature selection of EEG-based emotion recognition using mobile sensors. Expert Syst. Appl. 2018, 93, 143–155. [Google Scholar] [CrossRef] [Green Version]

- Fullerton, E.; Heller, B.; Munoz-Organero, M. Recognising human activity in free-living using multiple body-worn accelerometers. IEEE Sens. J. 2017, 17, 5290–5297. Available online: https://ieeexplore.ieee.org/document/7964661 (accessed on 12 July 2019). [CrossRef] [Green Version]

- Jos, D. Human Activity Pattern Recognition from Accelerometry Data. Master’s Thesis, German Aerospace Center Deutsches Zentrum für Luft- und Raumfahrt e.V. (DLR), Cologne, Germany, November 2013. [Google Scholar]

- Hölzemann, A.; Van Laerhoven, K. Using Wrist-Worn Activity Recognition for Basketball Game Analysis. In Proceedings of the 5th international Workshop on Sensor-based Activity Recognition and Interaction, Berlin, Germany, 20–21 September 2018. [Google Scholar]

- Lv, M.; Chen, L.; Chen, T.; Chen, G. Bi-View Semi-Supervised Learning Based Semantic Human Activity Recognition Using Accelerometers. IEEE Trans. Mob. Comput. 2018, 17, 1991–2001. [Google Scholar] [CrossRef]

- Gupta, P.; Dallas, T. Feature Selection and Activity Recognition System Using a Single Triaxial Accelerometer. IEEE Trans. Biomed. Eng. 2014, 61, 1780–1786. [Google Scholar] [CrossRef] [PubMed]

- Chen, Z.; Zhang, L.; Cao, Z.; Guo, J. Distilling the Knowledge from Handcrafted Features for Human Activity Recognition. IEEE Trans. Ind. Inform. 2018, 14, 4334–4342. Available online: https://ieeexplore.ieee.org/document/8247224 (accessed on 12 July 2019). [CrossRef]

- Shahid Khan, M.U.; Abbas, A.; Ali, M.; Jaward, M.; Khan, S.U.; Li, K.; Zomaya, A.Y. On the correlation of sensor location and Human Activity Recognition in Body Area Network (BANs). IEEE Syst. J. 2018, 12, 82–91. [Google Scholar] [CrossRef]

- Benson, L.C.; Clermont, C.A.; Osis, S.T.; Kobsar, D.; Ferber, R. Classifying Running Speed Conditions Using a Single Wearable Sensor: Optimal Segmentation and Feature Extraction Methods. J. Biomech. 2018, 71, 94–99. [Google Scholar] [CrossRef] [PubMed]

- Wang, H.; Ke, R.; Li, J.; An, Y.; Wang, K.; Yu, L. A correlation-based binary particle swarm optimization method for feature selection in human activity recognition. Int. J. Distrib. Sens. Netw. 2018, 14, 1550147718772785. [Google Scholar] [CrossRef] [Green Version]

- Shen, C.; Chen, Y.; Yang, G.; Guan, X. Toward Hand-Dominated Activity Recognition Systems with Wristband-Interaction Behavior Analysis. IEEE Trans. Syst. Man Cybern. Syst. 2018, Early Access, 1–11. [Google Scholar] [CrossRef]

- Akbari, A.; Wu, J.; Grimsley, R.; Jafari, R. Hierarchical Signal Segmentation and Classification for Accurate Activity Recognition. In Proceedings of the 2018 ACM International Joint Conference and 2018 International Symposium on Pervasive and Ubiquitous Computing and Wearable Computers, Singapore, 8–12 October 2018. [Google Scholar]

- Jansi, R.; Amutha, R. A novel chaotic map based compressive classification scheme for human activity recognition using a tri-axial accelerometer. Multimed. Tools Appl. 2018, 77, 31261–31280. [Google Scholar] [CrossRef]

- Attal, F.; Mohammed, S.; Dedabrishvili, M.; Chamroukhi, F.; Oukhellou, L.; Amirat, Y. Physical Human Activity Recognition Using Wearable Sensors. Sensors 2015, 15, 31314–31338. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4721778/ (accessed on 12 July 2019). [CrossRef] [Green Version]

- Wang, A.; Chen, G.; Wu, X.; Liu, L.; An, N.; Chang, C.-Y. Towards Human Activity Recognition: A Hierarchical Feature Selection Framework. Sensors 2018, 18, 3629. [Google Scholar] [CrossRef] [Green Version]

- Koldijk, S.; Neerincx, M.A.; Kraaij, W. Detecting Work Stress in Offices by Combining Unobtrusive Sensors. IEEE Trans. Affect. Comput. 2016, 9, 227–239. [Google Scholar] [CrossRef] [Green Version]

- Alumni. Media. Available online: http://alumni.media.mit.edu/~emunguia/pdf/PhDThesisMunguiaTapia08.pdf (accessed on 15 July 2019).

- Al-Garadi, M.A.; Mohames, A.; Al-Ali, A.; Du, X.; Guizani, M. A Survey of Machine and Deep Learning Methods for Internet of Things (IoT) Security. Available online: https://arxiv.org/abs/1807.11023 (accessed on 12 July 2019).

- Al Machot, F.; Heinrich, C.; Ranasinghe, M.S. A Hybrid Reasoning Approach for Activity Recognition Based on Answer Set Programming and Dempster–Shafer Theory. In Studies in Systems, Decision and Control, Recent Advances in Nonlinear Dynamics and Synchronization; Kyamakya, K., Mathis, W., Stoop, R., Chedjou, J.C., Li, Z., Eds.; MetaPress and Springerlink: Basel, Switzerland, 2017; Volume 109, pp. 303–318. [Google Scholar]

- Xue, Y.-W.; Liu, J.; Chen, J.; Zhang, Y.-T. Feature Grouping Based on Ga and L-Gem for Human Activity Recognition. In Proceedings of the 2018 International Conference on Machine Learning and Cybernetics (ICMLC), Kobe, Japan, 15–18 July 2018. [Google Scholar]

- He, W.; Guo, Y.; Gao, C.; Li, X. Recognition of human activities with wearable sensors. EURASIP J. Adv. Signal Processing 2012, 108, 1–13. [Google Scholar] [CrossRef] [Green Version]

- De Miguel, K.; Brunete, A.; Hernando, M.; Gambo, E. Home Camera-Based Fall Detection System for the Elderly. Sensors 2017, 17, 2864. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Garcia-Cejaa, E.; Rieglera, M.; Nordgreenc, T.; Jakobsenc, P.; Oedegaardf, K.J.; Tørresena, J. Mental Health Monitoring with Multimodal Sensing and Machine Learning: A Survey. Pervasive Mob. Comput. 2018, 51, 1–26. [Google Scholar] [CrossRef]

- Muheidat, F.; Tawalbeh, L.; Tyrer, H. Context-Aware, Accurate, and Real Time Fall Detection System for Elderly People. In Proceedings of the 2018 IEEE 12th International Conference on Semantic Computing (ICSC), Laguna Hills, CA, USA, 31 January–2 February 2018. [Google Scholar]

- Moldovan, D.; Antal, M.; Pop, C.; Olosutean, A.; Ciora, T.; Anghel, I.; Salomie, I. Spark-Based Classification Algorithms for Daily Living Activities. In Advances in Intelligent Systems and Computing, Artificial Intelligence and Algorithms in Intelligent Systems; Silhavy, R., Ed.; Springer: Berlin/Heidelberg, Germany, 2018; Volume 764, pp. 69–78. [Google Scholar]

- Kalita, S.; Karmakar, A.; Hazarika, S.M. Efficient extraction of spatial relations for extended objects vis-à-vis human activity recognition in video. Appl. Intell. 2018, 48, 204–219. [Google Scholar] [CrossRef]

- Jiménez, A.R.; Seco, F. Multi-Event Naive Bayes Classifier for Activity Recognition in the UCAmI Cup. In Proceedings of the 2th International Conference on Ubiquitous Computing and Ambient Intelligence (UCAmI 2018), Punta Cana, Dominican Republic, 4–7 December 2018. [Google Scholar]

- Malhotra, A.; Schizas, I.D.; Metsis, V. Correlation Analysis-Based Classification of Human Activity Time Series. IEEE Sens. J. 2018, 18, 8085–8095. [Google Scholar] [CrossRef]

- Sani, S.; Wiratunga, N.; Massie, S.; Cooper, K. Personalised Human Activity Recognition Using Matching Networks. In Case-Based Reasoning Research and Development; Cox, M.T., Funk, P., Begum, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2018; Volume 11156, pp. 339–353. [Google Scholar]

- Sfar, H.; Bouzeghoub, A. Activity Recognition for Anomalous Situations Detection. Jetsan 2018, 39, 400–406. [Google Scholar] [CrossRef]

- Xu, W.; Pang, Y.; Yang, Y. Human Activity Recognition Based on Convolutional Neural Network. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018. [Google Scholar]

- Cadenasa, J.M.; Carmen Garrido, M.; Martinez-España, R.; Muñoz, A. A k-nearest neighbors based approach applied to more realistic activity recognition datasets. J. Ambient. Intell. Smart Environ. 2018, 10, 247–259. Available online: https://content.iospress.com/articles/journal-of-ambient-intelligence-and-smart-environments/ais486 (accessed on 12 July 2019). [CrossRef]

- Wang, X.-J. A Human Body Gait Recognition System Based on Fourier Transform and Quartile Difference Extraction. Int. J. Online Biomed. Eng. (IJOE) 2017, 13, 129–139. [Google Scholar] [CrossRef]

- Diraco, G.; Leone, A.; Siciliano, P. A Radar-Based Smart Sensor for Unobtrusive Elderly Monitoring in Ambient Assisted Living Applications. Biosensors 2017, 7, 29. Available online: https://www.ncbi.nlm.nih.gov/pubmed/29186786 (accessed on 12 July 2019). [CrossRef] [Green Version]

- Arif, M.; Kattan, A. Physical Activities Monitoring Using Wearable Acceleration Sensors Attached to the Body. PLoS ONE 2015, 10. Available online: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0130851 (accessed on 12 July 2019). [CrossRef] [Green Version]

- Khan, S.S.; Ye, B.; Taati, B.; Mihailidis, A. Detecting agitation and aggression in people with dementia using sensors-A systematic review. Alzheimer’s Dement. 2018, 14, 824–832.

- Uddin, Z.; Kim, D.-H.; Kim, T.-S. A Human Activity Recognition System using HMMs with GDA on Enhanced Independent Component Features. Int. Arab. J. Inf. Technol. 2015, 12, 304–310.

- Reis, P.M.; Hebenstreit, F.; Gabsteiger, F.; von Tscharner, V.; Lochmann, M. Methodological aspects of EEG and body dynamics measurements during motion. Front. Hum. Neurosci. 2014, 8, 156–175.

- Oliveira, G.L.; Nascimento, E.R.; Vieira, A.W.; Campos, M.F.M. Sparse Spatial Coding: A novel approach for efficient and accurate object recognition. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012.

- Adomavicius, G. Toward the Next Generation of Recommender Systems: A Survey of the State-of-the-Art and Possible Extensions. IEEE Trans. Knowl. Data Eng. 2005, 17, 734–749.

- Chin, Z.H.; Ng, H.; Yap, T.T.V.; Tong, H.L.; Ho, C.C.; Goh, V.T. Daily Activities Classification on Human Motion Primitives Detection Dataset. In Proceedings of the Lecture Notes in Electrical Engineering, Computational Science and Technology, Kota Kinabalu, Malaysia, 29–30 August 2018.

- Ye, J.; Qi, G.-J.; Zhuang, N.; Hu, H.; Hua, K.A. Learning Compact Features for Human Activity Recognition via Probabilistic First-Take-All. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 1.

- Lee, J.; Kim, J. Energy-Efficient Real-Time Human Activity Recognition on Smart Mobile Devices. Mob. Inf. Syst. 2016, 2016, 12.

- Shoaib, M.; Bosch, S.; Incel, O.D.; Scholten, H.; Havinga, P.J.M. Complex Human Activity Recognition Using Smartphone and Wrist-Worn Motion Sensors. Sensors 2016, 16, 426.

- Muhammad, S.A.; Klein, B.N.; Van Laerhoven, K.; David, K. A Feature Set Evaluation for Activity Recognition with Body-Worn Inertial Sensors. In Constructing Ambient Intelligence, AmI 2011 Workshops, Amsterdam, The Netherlands, 16–18 November 2011; Wichert, R., Van Laerhoven, K., Gelissen, J., Eds.; Springer: Berlin/Heidelberg, Germany.

- Gordon, D.; Hanne, J.-H.; Berchtold, M.; Miyaki, T.; Beigl, M. Recognizing Group Activities using Wearable Sensors. Available online: https://www.teco.edu/~michael/publication/2011_MobiQuitous_GAR.pdf (accessed on 12 July 2019).

- Stisen, A.; Blunck, H.; Bhattacharya, S.; Siiger Prentow, T.; Kjærgaard, M.B.; Dey, A.; Sonne, T.M.; Jensen, M.M. Smart Devices are Different: Assessing and Mitigating Mobile Sensing Heterogeneities for Activity Recognition. In Proceedings of the 13th ACM Conference on Embedded Networked Sensor Systems, Seoul, Korea, 1–4 November 2015.

- Abidine, B.M.; Fergani, L.; Fergani, B.; Oussalah, B. The joint use of sequence features combination and modified weighted SVM for improving daily activity recognition. Pattern Anal. Appl. 2018, 21, 119–138.

- Voicu, R.-A.; Dobre, C.; Bajenaru, L.; Ciobanu, R.-I. Human Physical Activity Recognition Using Smartphone Sensors. Sensors 2019, 19, 458.

- Chelli, A.; Pätzold, M. A Machine Learning Approach for Fall Detection and Daily Living Activity Recognition. IEEE Access 2019, 7, 38670–38687. Available online: https://ieeexplore.ieee.org/document/8672567/authors#authors (accessed on 12 July 2019).

- Martínez-Villaseñor, L.; Ponce, H.; Espinosa-Loera, R.A. Multimodal Database for Human Activity Recognition and Fall Detection. In Proceedings of the 12th International Conference on Ubiquitous Computing and Ambient Intelligence (UCAmI 2018), Punta Cana, Dominican Republic, 4–7 December 2018.

- Billiet, L.; Swinnen, T.W.; Westhovens, R.; de Vlam, K.; Van Huffel, S. Accelerometry-Based Activity Recognition and Assessment in Rheumatic and Musculoskeletal Diseases. Sensors 2016, 16, 2151. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5191131/ (accessed on 12 July 2019).

- Yuan, G.; Wang, Z.; Meng, F.; Yan, Q.; Xia, S. An overview of human activity recognition based on smartphone. Sens. Rev. 2019, 39, 288–306.

- Boukhechba, M.; Bouzouane, A.; Bouchard, B.; Gouin-Vallerand, C.; Giroux, S. Energy Optimization for Outdoor Activity Recognition. J. Sens. 2016, 2016.

- Soria Morillo, L.M.; Gonzalez-Abril, L.; Ortega Ramirez, J.A.; de la Concepcion, M.A.A. Low Energy Physical Activity Recognition System on Smartphones. Sensors 2015, 15, 5163–5196.

- Zheng, L.; Wu, D.; Ruan, X.; Weng, S.; Peng, A.; Tang, B.; Lu, H.; Shi, H.; Zheng, H. A Novel Energy-Efficient Approach for Human Activity Recognition. Sensors 2017, 17, 2064.

- Awais, M.; Chiari, L.; Ihlen, E.A.F.; Helbostad, J.L.; Palmerini, L. Physical Activity Classification for Elderly People in Free Living Conditions. IEEE J. Biomed. Health Inform. 2019, 23, 197–207.

- Torres, R.L.S.; Visvanathan, R.; Hoskins, S.; van den Hengel, A.; Ranasinghe, D.C. Effectiveness of a Batteryless and Wireless Wearable Sensor System for Identifying Bed and Chair Exits in Healthy Older People. Sensors 2016, 16, 546–563.

- Chen, M.; Ma, Y.; Li, Y.; Wu, D.; Zhang, Y.; Youn, C.-H. Wearable 2.0: Enabling Human-Cloud Integration in Next Generation Healthcare Systems. IEEE Commun. Mag. 2017, 55, 54–61. Available online: https://ieeexplore.ieee.org/document/7823338 (accessed on 12 July 2019).

- Li, J. Methods for Assessment and Prediction of QoE, Preference and Visual Discomfort in Multimedia Application with Focus on S-3DTV. Ph.D. Thesis, Université de Nantes, Nantes, France, 2013.

- Yao, L.; Sheng, Q.Z.; Benatallah, B.; Dustdar, S.; Wang, X.; Shemshadi, A.; Kanhere, S.S. WIT’S: An IoT-endowed computational framework for activity recognition in personalized smart homes. Computing 2018, 100, 369–385.

- Mohadis, H.M.; Mohamad Ali, N. A Study of Smartphone Usage and Barriers Among the Elderly. In Proceedings of the 2014 3rd International Conference on User Science and Engineering (i-USEr), Shah Alam, Malaysia, 2–5 September 2014.

- Bourke, A.K.; Ihlen, E.A.F.; Bergquist, R.; Wik, P.B.; Vereijken, B.; Helbostad, J.L. A Physical Activity Reference Data-Set Recorded from Older Adults Using Body-Worn Inertial Sensors and Video Technology—The ADAPT Study Data-Set. Sensors 2017, 17, 559.

- Kafali, O.; Bromuri, S.; Sindlar, M.; Van der Weide, T.; Pelaez, E.A.; Schaechtle, U.; Alves, B.; Zufferey, D.; Rodriguez-Villegas, E.; Schumacher, M.I.; et al. COMMODITY12: A smart e-health environment for diabetes management. J. Ambient. Intell. Smart Environ. 2013, 5, 479–502.

- Vasilateanu, A.; Radu, I.C.; Buga, A. Environment crowd-sensing for asthma management. In Proceedings of the IEEE E-Health and Bioengineering Conference (EHB), Iasi, Romania, 19–21 November 2015.

- Lin, J.J.; Mamykina, L.; Lindtner, S.; Delajoux, G.; Strub, H.B. Fish‘N’Steps: Encouraging physical activity with an interactive computer game. In Proceedings of the 8th International Conference on Ubiquitous Computing, Orange County, CA, USA, 17–21 September 2006.

- Consolvo, S.; McDonald, D.W.; Toscos, T.; Chen, M.Y.; Froehlich, J.; Harrison, B.; Klasnja, P.; LaMarca, A.; LeGrand, L.; Libby, R.; et al. Activity sensing in the wild: A field trial of ubifit garden. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Florence, Italy, 5–10 April 2008.

- Kańtoch, E.; Augustyniak, P.; Markiewicz, M.; Prusak, D. Monitoring activities of daily living based on wearable wireless body sensor network. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014.

- Alam, M.R.; Reaz, M.B.I.; Ali, M.A.M. A Review of Smart Homes—Past, Present, and Future. IEEE Trans. Syst. Man Cybern. Part C 2012, 42, 1190–1203. Available online: https://www.researchgate.net/publication/262687986_A_Review_of_Smart_Homes_-_Past_Present_and_Future (accessed on 12 July 2019).

- Majumder, S.; Aghayi, E.; Noferesti, M.; Memarzadeh-Tehran, H.; Mondal, T.; Pang, Z.; Deen, M.J. Smart Homes for Elderly Healthcare—Recent Advances and Research Challenges. Sensors 2017, 17, 2496.

- Agoulmine, N.; Jamal Deen, M.; Lee, J.-S.; Meyyappan, M. U-Health Smart Home. IEEE Nanotechnol. Mag. 2011, 5, 6–11. Available online: https://ieeexplore.ieee.org/document/5993590 (accessed on 12 July 2019).

- Mirtchouk, M.; Merck, C.; Kleinberg, S. Automated Estimation of Food Type and Amount consumed from body-worn audio and motion sensors. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016.

- Charlon, Y.; Bourennane, W.; Bettahar, F.; Campo, E. Activity monitoring system for elderly in a context of smart home. Digit. Technol. Healthc. 2013, 34, 60–63. Available online: https://www.sciencedirect.com/science/article/abs/pii/S1959031812001509 (accessed on 12 July 2019).

| Dataset | Number of Activities/Actions/Activity Classes | Number of Involved Users | References |
|---|---|---|---|
| HAR | 6/0/0 | 30 | [19,33,53,54] |
| WISDM | 6/0/0 | 36 | [53,55,56,57] |
| UCI HAR | 6/0/0 | 30 | [35,55,58] |
| USCHAD | 12/0/0 | 14 | [19] |
| PAMAP2 | 12/0/0 | 9 | [19,37,57] |
| OPPORTUNITY | 5/0/0 | 12 | [4,35,37] |
| UniMiB-SHAR | 0/0/17 | 17 | [4] |
| MSR Action 3D | 0/30/0 | 1 | [59] |
| RGBD-HuDaAct | 12/0/0 | 30 | [59] |
| MSR Daily Activity 3D | 15/0/0 | 10 | [59] |
| MHEALTH | 12/0/0 | 10 | [60] |
| WHARF | 5/0/0 | 17 | [22] |
| KEH | 9/0/0 | 8 | [61] |

| Feature Domain | Measured Physical Signals | Feature Calculation | References |
|---|---|---|---|
| Time, Frequency, and Heuristic domain | Data from accelerometer | Min, Max, Mean, SD, SMA, SVM, Tilt angle, PSD, Signal entropy, Spectral energy | [2] |
| Time and Frequency domain | Data from 3-axis accelerometer | Mean, Min, SD, Variance, MED, Skewness, Kurtosis, Energy, Principal frequency, Magnitude of principal frequency (for each axis of a 3-axis accelerometer), Cross-correlation of accelerometer axes, MED crossing for each axis, 25th percentile for each axis, 75th percentile for each axis | [34] |
| Time and Frequency domain | Data from accelerometer | Mean, Skewness, Kurtosis, DFT, Autocorrelation | [35] |
| Time and Frequency domain | Data from a 3-axis accelerometer, a 3-axis gyroscope, and a 3-axis magnetometer | AMP, MED, MNVALUE, Max, Min, P2P, STD, RMS, S2E | [36] |
| Time and Frequency domain | Data from accelerometer, gyroscope, and magnetometer | Mean, STD, MED, Min, Max, Skewness, Kurtosis, Energy, Entropy, IQR | [38] |
| Time domain | Data from accelerometer, compass sensor, gyroscope, and barometer | Min, Max, Mean, SD | [46] |
| Time and Frequency domain | Data from 3-axial acceleration | Mean, Variance, SD, Min, Max, Range between min and max, Absolute Min, Coefficient of variation, Skewness, Kurtosis, 1st Quartile, 2nd Quartile, 3rd Quartile, IQR, MCR, Absolute Area, DFR, Energy, Entropy, TAA, TMA, Correlation (Corr(X,Y), Corr(X,Z), Corr(Y,Z)) | [61] |
| Time, Frequency, and Heuristic domain | Data from acceleration | Mean, SD, RMS, Peak count, Peak amplitude, Spectral energy, Spectral power, SMA | [71] |
| Time and Frequency domain | Data from acceleration | Mean, SD, Absolute Max, First 3 peaks in power magnitude, Spectral entropy, Autoregressive coefficient, SMA | [72] |
| Time domain | Data from acceleration, gyroscope, temperature, magnetometer, and barometer | Mean, SD | [73] |
| Time and Frequency domain | Data from accelerometer | Mean, SD, IQR, RMS, Energy of FFT components, Entropy of FFT histogram | [74] |
| Time and Frequency domain | Data from 3-axial acceleration | Spectral energy, Spectral entropy, Mean, Variance, Mean Trend, WMD, Variance Trend, WVD, DFA coefficient, X-Z Energy uncorrelated (Spectral), Max, Difference acceleration | [75] |
| Time, Frequency, and Heuristic domain | Data from acceleration or gyroscope | Mean, SD, Max, Min, SMA, Average sum of the squares, IQR, Signal entropy, Autoregression coefficients, Correlation coefficient, Largest frequency component, Weighted average skewness, Kurtosis, Energy of a frequency interval, Angle between two vectors | [76] |
| Time and Frequency domain | Data from 3-axial acceleration | Min, Max, SD, Median, Mean, Skewness, Kurtosis, Absolute skewness, Absolute kurtosis | [77] |
| Time and Frequency domain | Data from accelerometer | Mean, SD, Median, 25th percentile, 75th percentile, Peak, Valley, RMS, Principal frequency, Spectral energy, Entropy, Sum of FFT coefficients grouped in four exponential bands | [78] |
| Time and Frequency domain | Data from accelerometer | Mean, Variance, RMS, Mean absolute deviation, Range, Covariance, Quartile deviation, Coefficient of correlation | [79] |
| Time and Frequency domain | Wristband data from hand-dominated actions | Mean, Min, Max, Range of overall time, Variance, Kurtosis, Skewness, Cross-mean, Rate, Energy, Entropy, Percentage of energy accounted for by each wavelet detail component | [80] |
| Time and Frequency domain | Data from 3D accelerometer, gyroscope, magnetometer, and ambient pressure sensor, as well as linear acceleration, gravity, and orientation | Mean, Variance, SD, RMS, Mean crossing rate, Zero crossing rate, Skewness, Kurtosis, Entropy, Integration, SMA, Band power | [81] |
| Time and Frequency domain | Data from 3-axial acceleration | Mean, SD, Median, 25th percentile, 75th percentile, Pairwise correlation, RMD, IQR, Mean crossing rate, Mean of movement intensity, Normalized SMA, Dominant frequency, Spectral energy, Spectral entropy | [82] |
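
Many of the features listed above (mean, SD, RMS, SMA, spectral energy, spectral entropy, principal frequency) can be computed from a single window with a few passes over the samples, which matters for the energy budget of the feature extraction stage. The sketch below computes them for one axis of one window in plain Python. The function names are hypothetical, and the exact definitions are common choices assumed here rather than those of any specific cited work: population SD, SMA as the mean of summed absolute axis values, spectral energy as the one-sided DFT power divided by the window length, and entropy in bits. The naive DFT costs O(N²) per window; an FFT would reduce this to O(N log N).

```python
import math
from typing import Dict, List, Sequence


def one_sided_power(signal: Sequence[float]) -> List[float]:
    """Squared DFT magnitudes for bins k = 1..N//2 (DC bin excluded), naive O(N^2)."""
    n = len(signal)
    power = []
    for k in range(1, n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(signal))
        im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(signal))
        power.append(re * re + im * im)
    return power


def axis_features(signal: Sequence[float], fs: float) -> Dict[str, float]:
    """Assumed time- and frequency-domain features for one axis of one window (fs in Hz)."""
    n = len(signal)
    mean = sum(signal) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in signal) / n)  # population SD
    rms = math.sqrt(sum(x * x for x in signal) / n)
    # Remove the mean so the DC (e.g. gravity) component does not dominate the spectrum.
    power = one_sided_power([x - mean for x in signal])
    total = sum(power)
    entropy = 0.0
    if total > 0:
        # Shannon entropy (bits) of the normalised power distribution.
        entropy = -sum((p / total) * math.log2(p / total) for p in power if p > 0)
    peak_bin = max(range(len(power)), key=power.__getitem__) + 1  # bins start at k = 1
    return {
        "mean": mean,
        "sd": sd,
        "rms": rms,
        "spectral_energy": total / n,
        "spectral_entropy": entropy,
        "principal_frequency_hz": peak_bin * fs / n,
    }


def sma(ax: Sequence[float], ay: Sequence[float], az: Sequence[float]) -> float:
    """Signal magnitude area: mean of |ax| + |ay| + |az| over the window."""
    return sum(abs(x) + abs(y) + abs(z) for x, y, z in zip(ax, ay, az)) / len(ax)


if __name__ == "__main__":
    fs = 50.0
    # Hypothetical 1 s window: a 5 Hz oscillation around an offset of 1 g.
    window = [1.0 + math.sin(2 * math.pi * 5 * t / fs) for t in range(50)]
    print(axis_features(window, fs))
```

For the hypothetical window in the `__main__` block, the principal frequency comes out as 5.0 Hz and the spectral entropy is close to zero, because all of the power falls into a single DFT bin.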

| Feature Selection Approach | Method | References |
|---|---|---|
| Filter-based | MR-MR | [65,79] |
| | GCACO | [79] |
| | GCNC | [79] |
| | IG | [5,17,60,84,85,86,87] |
| | Gain ratio | [79,85,88] |
| | Term variance | [79] |
| | Gini index | [79] |
| | Laplacian score | [79] |
| | Fisher score | [79] |
| | RS | [79,89] |
| | RR | [79] |
| | UFSACO | [79] |
| Wrapper-based | SBS | [79] |
| | SFS | [46,79] |
| | ACO | [79] |
| | PSO | [79] |
| | GA | [2,39,79] |
| | Random mutation hill-climbing | [79] |
| | Simulated annealing | [79] |
| | ABC | [79] |
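
Filter methods in the table above score each feature independently of any classifier. This is why they are usually cheaper than wrapper methods such as SFS or GA, which retrain a classifier for every candidate feature subset. The sketch below implements one of the listed filters, the Fisher score, in plain Python. The function names are hypothetical, and the population-variance form of the score is an assumption, since definitions vary slightly across sources.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence


def fisher_scores(X: Sequence[Sequence[float]], y: Sequence[Hashable]) -> List[float]:
    """Fisher score per feature: between-class scatter divided by within-class scatter."""
    by_class: Dict[Hashable, List[Sequence[float]]] = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)
    scores = []
    for j in range(len(X[0])):
        overall_mean = sum(row[j] for row in X) / len(X)
        between = within = 0.0
        for rows in by_class.values():
            values = [row[j] for row in rows]
            n_c = len(values)
            mu_c = sum(values) / n_c
            var_c = sum((v - mu_c) ** 2 for v in values) / n_c  # population variance
            between += n_c * (mu_c - overall_mean) ** 2
            within += n_c * var_c
        # A feature with zero within-class spread separates the classes perfectly.
        scores.append(between / within if within > 0 else float("inf"))
    return scores


def select_top_k(X: Sequence[Sequence[float]], y: Sequence[Hashable], k: int) -> List[int]:
    """Indices of the k features with the highest Fisher score (no classifier is trained)."""
    scores = fisher_scores(X, y)
    return sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)[:k]
```

For example, with `X = [[1.0, 5.0], [1.2, 3.0], [3.0, 4.0], [3.2, 6.0]]` and labels `["walk", "walk", "run", "run"]`, the scores are about 100 and 0.25, so `select_top_k(X, y, 1)` keeps only feature 0.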

| Feature Transform Approach | Feature Transform Approach Type | References |
|---|---|---|
| Feature transform | FT | [27,102] |
| | WT | [27,103,104] |
| | DWT | [27,104] |
| | LDA | [5,28,59,90] |
| | GDA | [90,105] |
| | CCA | [7,105,106,107] |
| | SVD | [4,108] |
| | PCA | [4,40,55,59,60,74] |
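
PCA, the most frequently cited transform in the table above, projects feature vectors onto the directions of largest variance so that fewer values need to be passed to the classifier. The sketch below is a minimal, dependency-free version that uses power iteration with deflation on the sample covariance matrix. The function names, the start vector, and the iteration count are illustrative assumptions. A production system would use a linear-algebra library's SVD or eigensolver instead.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def covariance(X: Sequence[Sequence[float]]) -> Tuple[List[float], Matrix]:
    """Column means and sample covariance matrix (divides by n - 1)."""
    n, d = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(d)]
    cov = [[0.0] * d for _ in range(d)]
    for row in X:
        c = [row[j] - means[j] for j in range(d)]
        for a in range(d):
            for b in range(d):
                cov[a][b] += c[a] * c[b] / (n - 1)
    return means, cov


def pca(X: Sequence[Sequence[float]], k: int, iters: int = 500) -> Tuple[List[float], Matrix]:
    """Top-k principal axes via power iteration with deflation (illustrative only)."""
    means, cov = covariance(X)
    d = len(cov)
    components: Matrix = []
    for _ in range(k):
        v = [1.0 + 0.1 * i for i in range(d)]  # arbitrary non-zero start vector
        for _ in range(iters):
            w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:  # remaining variance is (numerically) zero
                return means, components
            v = [x / norm for x in w]
        eigval = sum(v[a] * sum(cov[a][b] * v[b] for b in range(d)) for a in range(d))
        components.append(v)
        # Deflate: remove the found direction so the next pass finds the next axis.
        cov = [[cov[a][b] - eigval * v[a] * v[b] for b in range(d)] for a in range(d)]
    return means, components


def project(X: Sequence[Sequence[float]], means: List[float], components: Matrix) -> Matrix:
    """Reduce each sample to its coordinates along the principal axes."""
    return [
        [sum((row[j] - means[j]) * comp[j] for j in range(len(row))) for comp in components]
        for row in X
    ]
```

For points lying on the line y = 2x, a single component along (1, 2)/√5 captures all of the variance, so each 2-D sample can be reduced to one coordinate without loss.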

| Applications | HAR Stage in Focus | HAR Approaches | References |
|---|---|---|---|
| FD | Classification | Two public databases, ANN, kNN, QSVM, EBT | [119] |
| FD | Data collection and filtering, Classification | Wrist-Worn Sensor, Feed-Forward NN, GA, SVM, DT, RBS | [26] |
| FD | Data collection and filtering | Kalman Filter, kNN | [91] |
| FD | Feature extraction, Classification | Temporal and Frequency features, LDA, CART, NB, SVM, RF, kNN, NN | [120] |
| FD | Feature extraction, Feature selection, Classification | Improved RF, PCF, HSW | [10] |
| FD, AM | Data collection and filtering, Data segmentation, Feature selection | RFID sensors, CCA, MLGL1, LSVM, kNN, RF, NB | [7] |
| Health and wellbeing monitoring | Feature extraction, Classification | Wearable sensors (accelerometers, gyroscope, and magnetometer), 1 s window with no overlap, BT | [38] |
| AM | Data collection and filtering, Feature extraction, Classification | Wristband sensor, Statistics-, Frequency-, and Wavelet-domain features, NB, kNN, NN, SV, RF | [80] |
| AAL | Data collection and filtering | Radar Smart Sensor, DTFT | [103] |
| AAL | Classification | Smartphone sensors (accelerometer, gyroscope, and gravity sensor), C4.5 DT, NB, SVM, RF, BA, kNN, HMM | [118] |
| RMD | Data segmentation, Feature extraction, Classification | Accelerometer, DTW, RR, LDA | [121] |
| Monitoring of elderly people | Data collection and filtering, Classification | Tri-axial accelerometer, Relief-F, kNN, NB | [75] |
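
Reference [38] is listed above as segmenting sensor data into 1 s windows with no overlap. The sketch below shows what such fixed-size sliding-window segmentation looks like. The function name, the 4 Hz sampling rate, and the 50% overlap variant are illustrative assumptions. Trailing samples that do not fill a complete window are simply dropped.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def sliding_windows(samples: Sequence[T], fs: float, window_s: float,
                    overlap: float = 0.0) -> List[List[T]]:
    """Split a sample stream into fixed-length windows.

    fs is the sampling rate in Hz, window_s the window length in seconds, and
    overlap the fraction (0 <= overlap < 1) shared by consecutive windows.
    Trailing samples that do not fill a whole window are dropped.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    size = int(round(window_s * fs))
    if size < 1:
        raise ValueError("window must contain at least one sample")
    step = max(1, int(round(size * (1.0 - overlap))))
    return [list(samples[i:i + size]) for i in range(0, len(samples) - size + 1, step)]


if __name__ == "__main__":
    stream = list(range(10))  # hypothetical samples at an assumed 4 Hz
    print(sliding_windows(stream, fs=4, window_s=1.0))               # [[0, 1, 2, 3], [4, 5, 6, 7]]
    print(sliding_windows(stream, fs=4, window_s=1.0, overlap=0.5))  # 4 windows, starting at 0, 2, 4, 6
```

With 50% overlap, the number of windows roughly doubles for the same stream. The feature extraction and classification stages therefore run about twice as often. Regardless of overlap, a decision cannot be made until a full window has been buffered. This is one concrete way in which segmentation settings feed into both energy consumption and latency.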

| Improvement Approach | HAR Stage | Verified in Literature |
|---|---|---|
| Energy consumption | Data collection and filtering | [14,19,30,32,61,122,123] |
| | Data segmentation | [32] |
| | Feature extraction | [10,32,115] |
| | Classification | [10,31,45,124] |
| Latency | Data segmentation | [98] |
| | Classification | [80] |
| | General | [39] |

| Context | Condition | Performance Importance | References | ||||||||||
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Physical | User | Medical | Energy | Latency | |||||||||
| Indoor | Outdoor | Medical Institution | Smart Home | Older Adults | Other Population | Activities Management | Activities Monitoring | Activities Encouraging | Chronic Disease | Healthy | |||
| x | x | x | x | x | 2.34 | 3 | [30,129] | ||||||
| x | x | x | x | x | 2 | 2 | [7,83,103,126,130] | ||||||
| x | x | x | x | x | 2 | 2.5 | [140,142] | ||||||
| x | x | x | x | x | 2 | 2 | [127] | ||||||
| x | x | - | x | x | x | 3 | 2 | [128] | |||||
| x | x | x | x | x | 2.5 | 2.5 | |||||||

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Cero Dinarević, E.; Baraković Husić, J.; Baraković, S. Step by Step Towards Effective Human Activity Recognition: A Balance between Energy Consumption and Latency in Health and Wellbeing Applications. Sensors 2019, 19, 5206. https://doi.org/10.3390/s19235206
