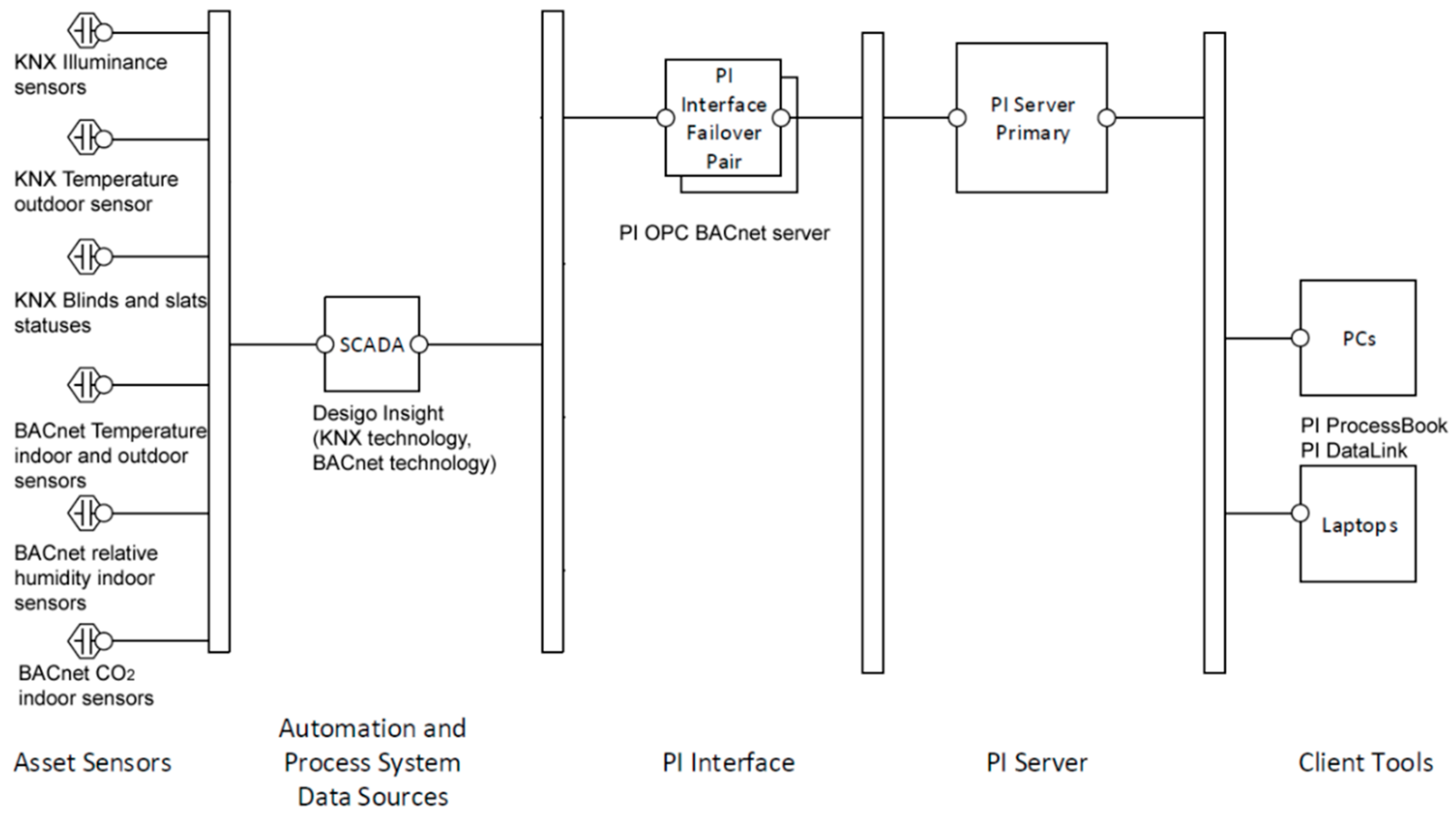

Figure 1.

A block diagram of data transfer from the Smart Home Care technology through the PI OPC (OLE for Process Control) interface to the PI Server and PI ProcessBook.

Figure 2.

The main visualization screen in SW tool PI ProcessBook, first floor.

Figure 3.

A screen for room 204 with a detailed description of individual technologies and individual charts in SW tool PI ProcessBook.

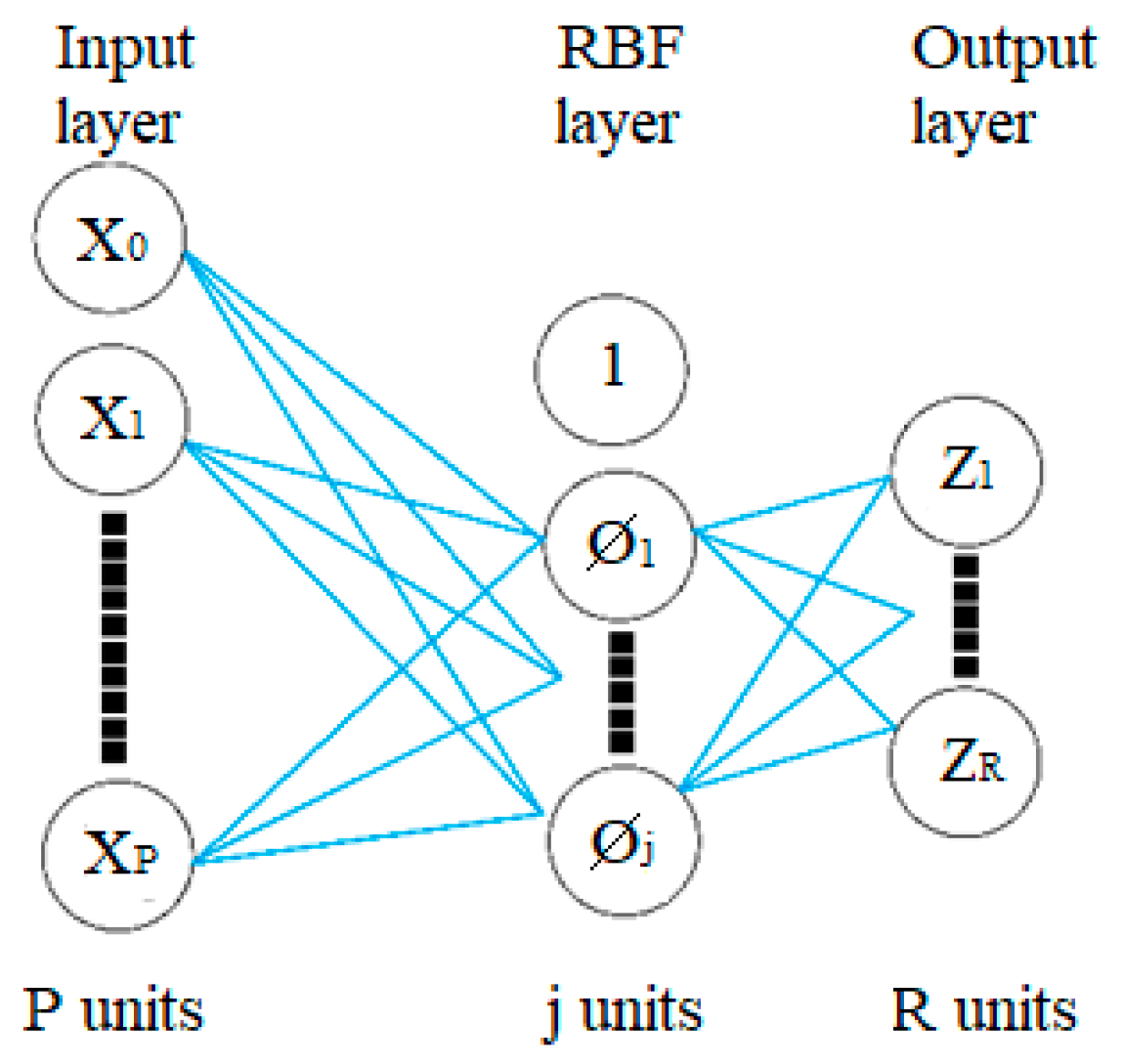

Figure 4.

The Radial Basis Function (RBF) neural network diagram.
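The structure in the diagram can be illustrated with a minimal sketch of a forward pass through a single-output Gaussian RBF network. This is a generic textbook formulation and not necessarily the variant used by IBM SPSS Modeler; the function name `rbf_forward`, the Gaussian kernel, and all numeric values are assumptions chosen for illustration.

```python
import math


def rbf_forward(x, centers, widths, weights, bias=0.0):
    """Return the output of a single-output Gaussian RBF network for input x.

    Each hidden unit j responds with exp(-||x - c_j||^2 / (2 * sigma_j^2));
    the output is the weighted sum of these responses plus a bias.
    """
    output = bias
    for center, sigma, weight in zip(centers, widths, weights):
        sq_dist = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
        output += weight * math.exp(-sq_dist / (2.0 * sigma ** 2))
    return output


# Hypothetical two-input network with two hidden units (illustrative values).
centers = [(0.2, 0.4), (0.8, 0.6)]
widths = [0.5, 0.5]
weights = [2.0, -1.0]
y = rbf_forward((0.2, 0.4), centers, widths, weights, bias=0.5)
print(y)
```

The number of hidden units in such a network corresponds to the "Number of Neurons" column in the model tables.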

Figure 4.

The Radial Basis Function (RBF) neural network diagram.

Figure 5.

The reference (measured) CO2 concentration course and Illuminance E (lx) course (12 November 2018) in room R203 in Smart Home Care (SHC) for Activities of Daily Living (ADL) monitoring. (1) arrival (12 November 2018 7:33:00), (2) departure (12 November 2018 8:28:11), Time of Person Presence (TPP) ∆t1 = 0:55:11; (3) arrival (12 November 2018 09:12:52), (4) departure (12 November 2018 10:29:16), TPP ∆t2 = 1:16:24; (5) arrival (12 November 2018 11:41:55), (6) departure (12 November 2018 11:44:18), TPP ∆t3 = 0:02:23. (7) arrival (12 November 2018 12:18:04), (8) departure (12 November 2018 12:26:15), TPP ∆t1 = 0:08:11; (9) arrival (12 November 2018 12:51:50), (10) departure (12 November 2018 12:53:12), TPP ∆t2 = 0:01:22; (11) arrival (12 November 2018 13:51:52), (12) departure (12 November 2018 14:32:47), TPP ∆t3 = 0:40:55; (13) arrival (12 November 2018 14:52:34), (14) departure (12 November 2018 16:02:30), TPP ∆t2 = 1:09:56; (15) arrival (12 November 2018 16:23:18), (16) departure (12 November 2018 16:42:03), TPP ∆t3 = 0:18:45. Light on I. 8:15:54, Light off II. 17:43:47.
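The Time of Person Presence values in this caption follow directly from the arrival and departure timestamps. The sketch below recomputes them with the Python standard library; the names `EVENTS` and `presence_durations` are illustrative and not taken from the paper.

```python
from datetime import datetime, timedelta

FMT = "%Y-%m-%d %H:%M:%S"

# (arrival, departure) pairs for room R203 on 12 November 2018, from the caption.
EVENTS = [
    ("2018-11-12 07:33:00", "2018-11-12 08:28:11"),
    ("2018-11-12 09:12:52", "2018-11-12 10:29:16"),
    ("2018-11-12 11:41:55", "2018-11-12 11:44:18"),
    ("2018-11-12 12:18:04", "2018-11-12 12:26:15"),
    ("2018-11-12 12:51:50", "2018-11-12 12:53:12"),
    ("2018-11-12 13:51:52", "2018-11-12 14:32:47"),
    ("2018-11-12 14:52:34", "2018-11-12 16:02:30"),
    ("2018-11-12 16:23:18", "2018-11-12 16:42:03"),
]


def presence_durations(events):
    """Return one timedelta (departure minus arrival) per presence interval."""
    return [
        datetime.strptime(dep, FMT) - datetime.strptime(arr, FMT)
        for arr, dep in events
    ]


durations = presence_durations(EVENTS)
total = sum(durations, timedelta())

for index, duration in enumerate(durations, start=1):
    print(f"interval {index}: {duration}")
print(f"total presence: {total}")
```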

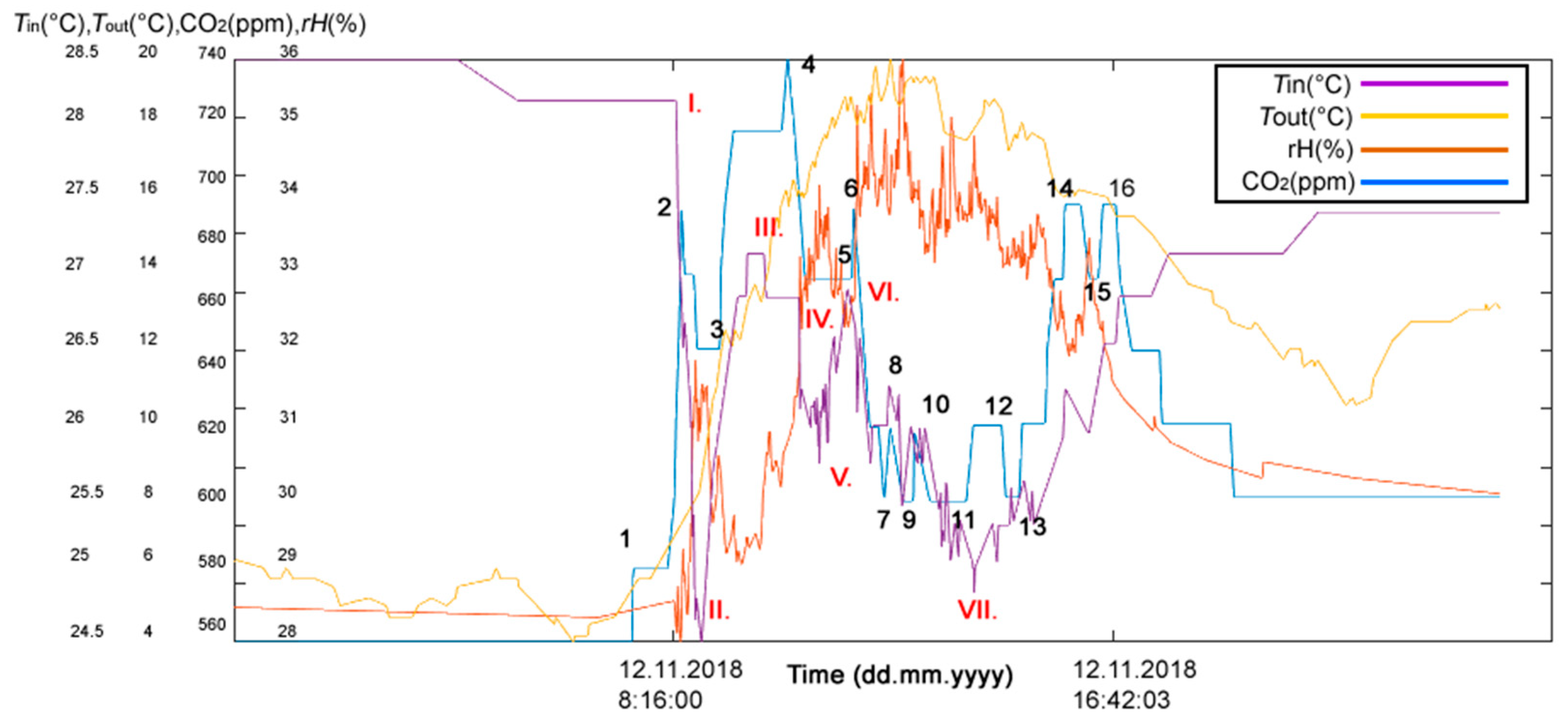

Figure 6.

The reference (measured) CO2 concentration course, indoor temperature and relative humidity courses in room R203, and outdoor temperature course (12 November 2018), within the measurement of nonelectrical values in SHC for ADL monitoring. (1) arrival (12 November 2018 7:33:00), (2) departure (12 November 2018 8:28:11), TPP ∆t1 = 0:55:11; (3) arrival (12 November 2018 09:12:52), (4) departure (12 November 2018 10:29:16), TPP ∆t2 = 1:16:24; (5) arrival (12 November 2018 11:41:55), (6) departure (12 November 2018 11:44:18), TPP ∆t3 = 0:02:23. (7) arrival (12 November 2018 12:18:04), (8) departure (12 November 2018 12:26:15), TPP ∆t1 = 0:08:11; (9) arrival (12 November 2018 12:51:50), (10) departure (12 November 2018 12:53:12), TPP ∆t2 = 0:01:22; (11) arrival (12 November 2018 13:51:52), (12) departure (12 November 2018 14:32:47), TPP ∆t3 = 0:40:55; (13) arrival (12 November 2018 14:52:34), (14) departure (12 November 2018 16:02:30), TPP ∆t2 = 1:09:56; (15) arrival (12 November 2018 16:23:18), (16) departure (12 November 2018 16:42:03), TPP ∆t3 = 0:18:45. I. The window was opened at 8:22:44, II. The window was closed at 8:50:42, III. The window was opened partially at 10:02:19, IV. The entire window was opened at 10:42:34, V. The window was closed at 11:05:25, VI. The window was opened partially at 11:36:07, VII. The window was closed at 14:00:35.

Figure 7.

The reference (measured) CO2 concentration course and Blinds course (12 November 2018) in room R203 in SHC for ADL monitoring. (1) arrival (12 November 2018 7:33:00), (2) departure (12 November 2018 8:28:11), TPP ∆t1 = 0:55:11; (3) arrival (12 November 2018 09:12:52), (4) departure (12 November 2018 10:29:16), TPP ∆t2 = 1:16:24; (5) arrival (12 November 2018 11:41:55), (6) departure (12 November 2018 11:44:18), TPP ∆t3 = 0:02:23. (7) arrival (12 November 2018 12:18:04), (8) departure (12 November 2018 12:26:15), TPP ∆t1 = 0:08:11; (9) arrival (12 November 2018 12:51:50), (10) departure (12 November 2018 12:53:12), TPP ∆t2 = 0:01:22; (11) arrival (12 November 2018 13:51:52), (12) departure (12 November 2018 14:32:47), TPP ∆t3 = 0:40:55; (13) arrival (12 November 2018 14:52:34), (14) departure (12 November 2018 16:02:30), TPP ∆t2 = 1:09:56; (15) arrival (12 November 2018 16:23:18), (16) departure (12 November 2018 16:42:03), TPP ∆t3 = 0:18:45. I. Blind 43 (Figure 2) was lowered at 8:32:37, II. Blind 48 (Figure 2) was lowered at 8:37:24, III. Blind 33 (Figure 2) was lowered at 8:43:12, IV. The blinds were pulled partially at 11:52:29.

Figure 8.

The reference (measured) CO2 concentration course and Slats course (12 November 2018) in room R203 (Figure 2) in SHC for ADL monitoring. (1) arrival (12 November 2018 7:33:00), (2) departure (12 November 2018 8:28:11), TPP ∆t1 = 0:55:11; (3) arrival (12 November 2018 09:12:52), (4) departure (12 November 2018 10:29:16), TPP ∆t2 = 1:16:24; (5) arrival (12 November 2018 11:41:55), (6) departure (12 November 2018 11:44:18), TPP ∆t3 = 0:02:23. (7) arrival (12 November 2018 12:18:04), (8) departure (12 November 2018 12:26:15), TPP ∆t1 = 0:08:11; (9) arrival (12 November 2018 12:51:50), (10) departure (12 November 2018 12:53:12), TPP ∆t2 = 0:01:22; (11) arrival (12 November 2018 13:51:52), (12) departure (12 November 2018 14:32:47), TPP ∆t3 = 0:40:55; (13) arrival (12 November 2018 14:52:34), (14) departure (12 November 2018 16:02:30), TPP ∆t2 = 1:09:56; (15) arrival (12 November 2018 16:23:18), (16) departure (12 November 2018 16:42:03), TPP ∆t3 = 0:18:45. I. Slats 47 (Figure 2) were turned at 8:32:37, II. Slats 42 were turned at 8:33:18, III. Slats 42 were turned at 8:38:05, IV. Slats 42 were turned at 8:39:47, V. Slats 32 were turned at 8:43:12, VI. Slats 42 and 47 were turned at 11:52:29.

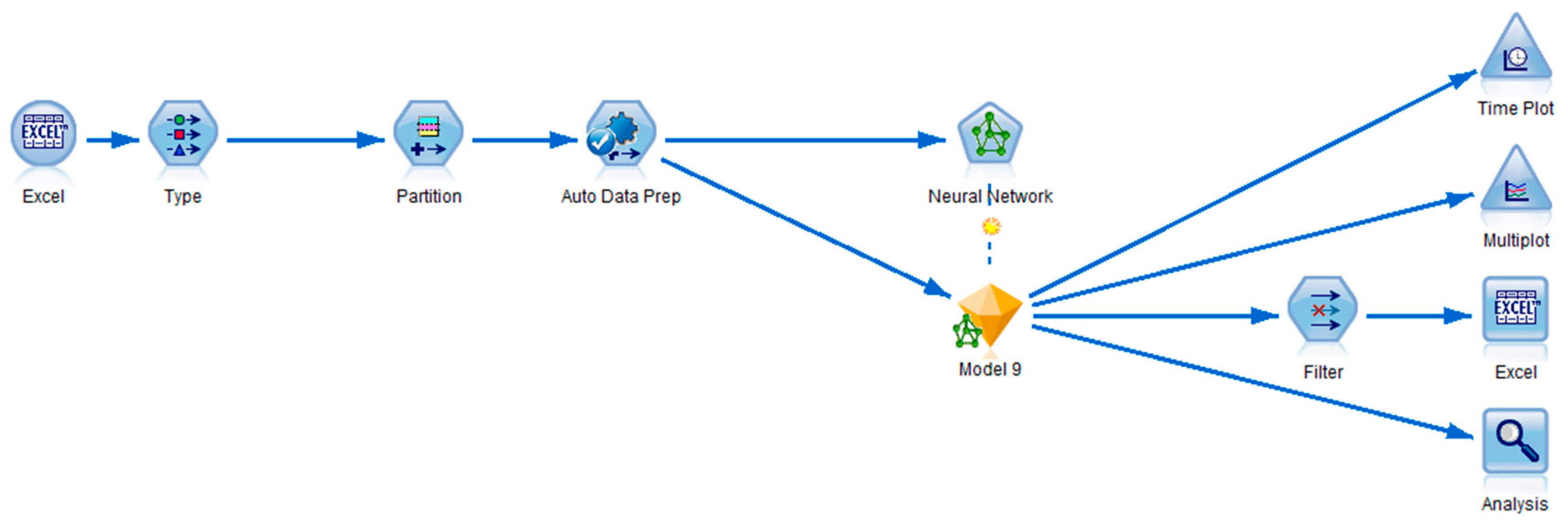

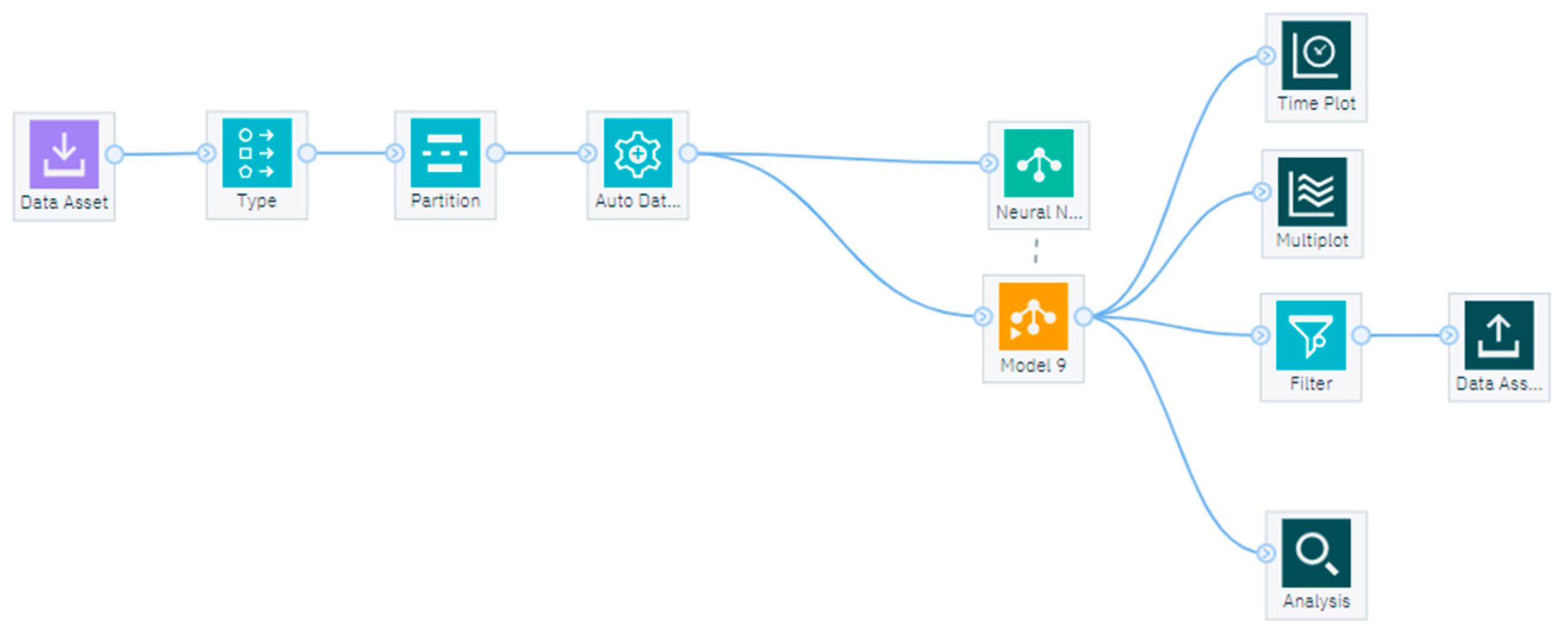

Figure 9.

The developed stream using IBM SPSS Modeler.

Figure 10.

The block diagram of the developed Stream using IBM SPSS Modeler.

Figure 11.

The accuracy for each interval of experiments.

Figure 12.

Model number 9 trained and validated using data from the 15th of May 2018.

Figure 13.

Model number 9 trained and validated using data from the interval of the 6th of May 2018 to 13th of May 2018.

Figure 14.

Model number 3 trained and validated using data from the 12th of May 2018.

Figure 15.

Watson Studio with the data stream developed in IBM SPSS Modeler.

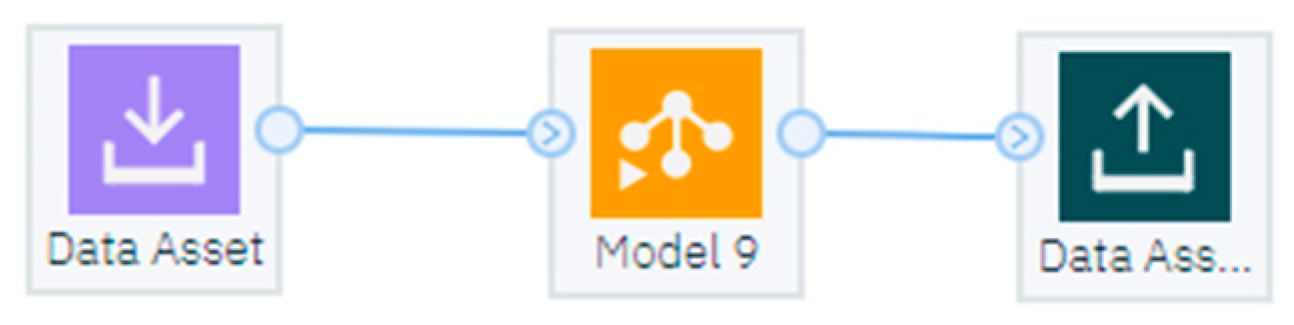

Figure 16.

Watson Studio with the data flow stream that uses model 9.

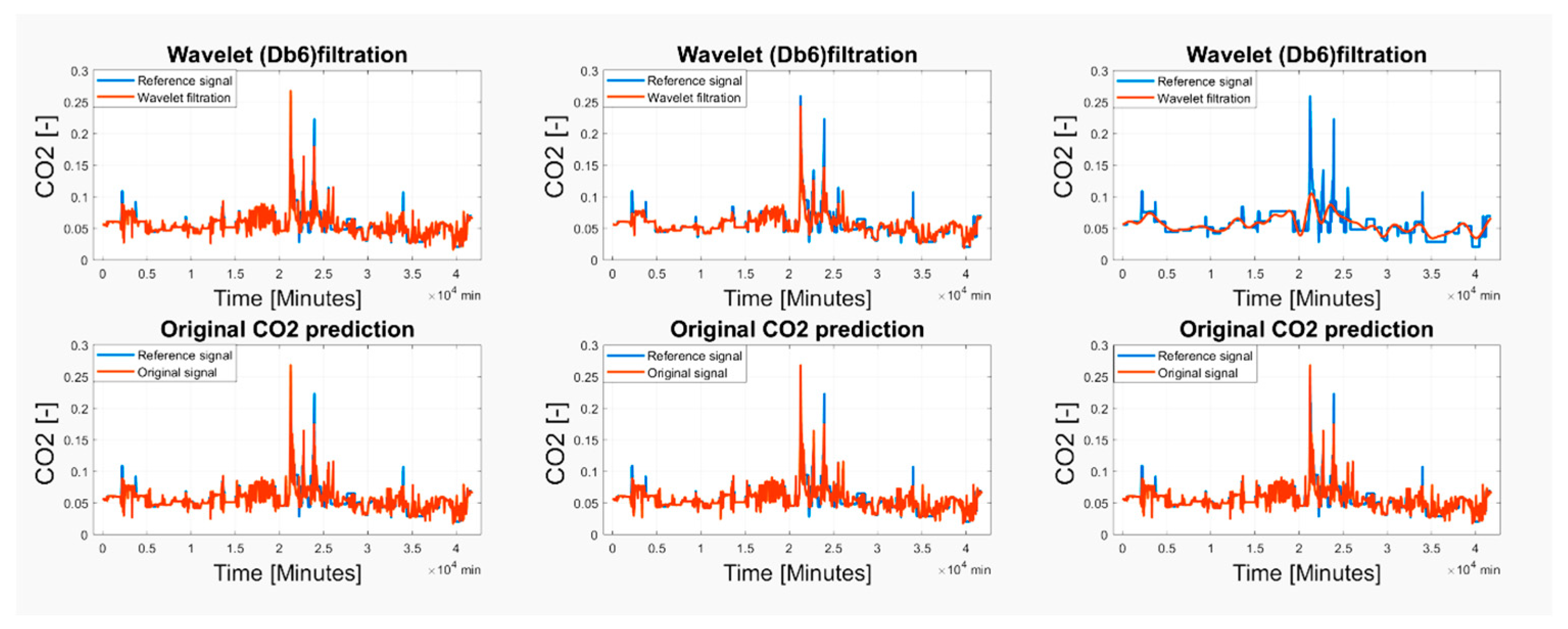

Figure 17.

The comparison of the original predicted CO2 signal and Wavelet filtration from 15 May 2018 for 2-level decomposition (left), 6-level decomposition (middle) and 7-level decomposition (right).

Figure 18.

The comparison of the original predicted CO2 signal and Wavelet filtration from 1–29 May 2018 for 2-level decomposition (left), 6-level decomposition (middle) and 7-level decomposition (right).

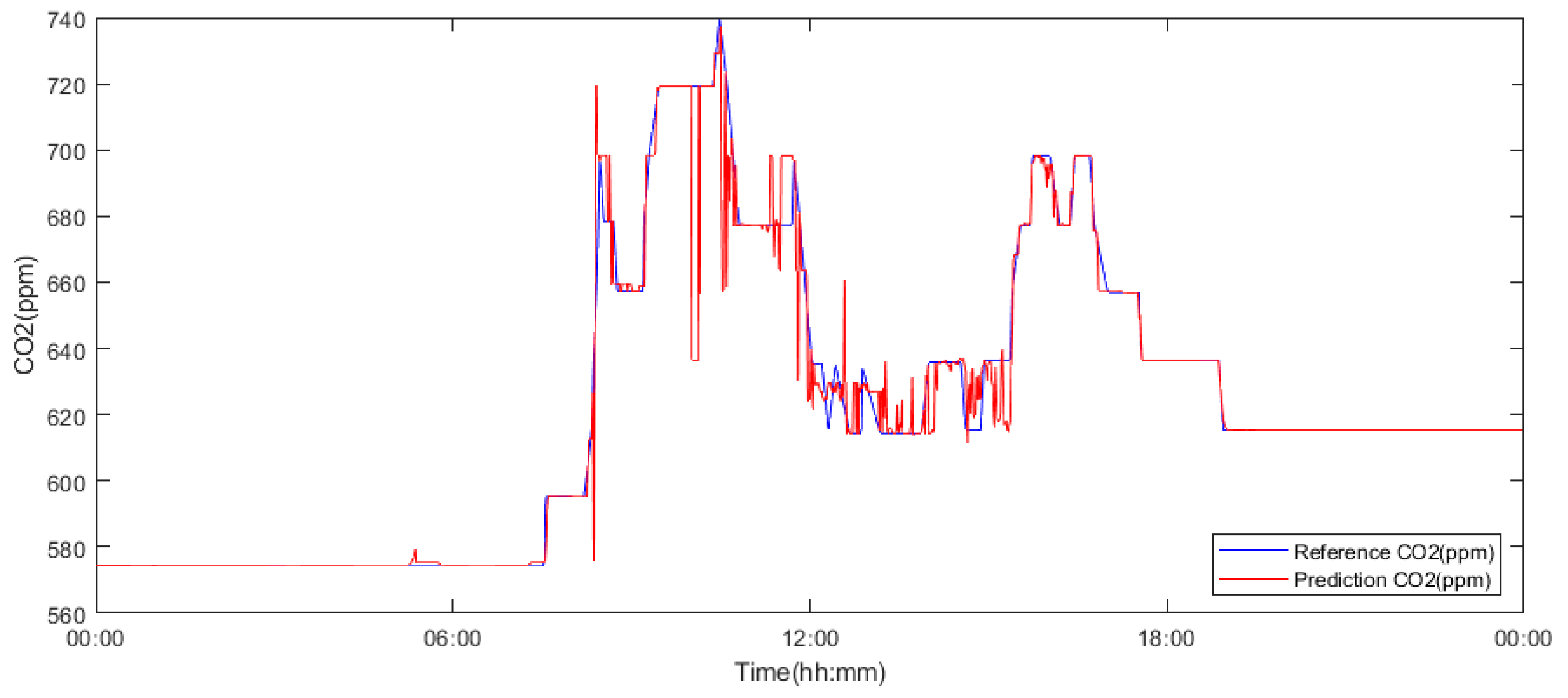

Figure 19.

An example of one-day prediction from 15th May 2018 of the CO2 signal. Filtration is done by using the Db6 wavelet with 6-level decomposition.

Figure 20.

An example of whole-month prediction from November 2018 of the CO2 signal. Filtration is done by using the Db6 wavelet with 6-level decomposition.

Figure 21.

The 6–13 November 2018 prediction of the CO2 signal containing several glitches and signal spikes, marked with green rectangles.

Figure 22.

The 15 November 2018 prediction of the CO2 signal containing several glitches and signal spikes, marked with green rectangles.

Figure 23.

The 4 November–3 December 2018 prediction of the CO2 signal containing several glitches and signal spikes, marked with green rectangles.

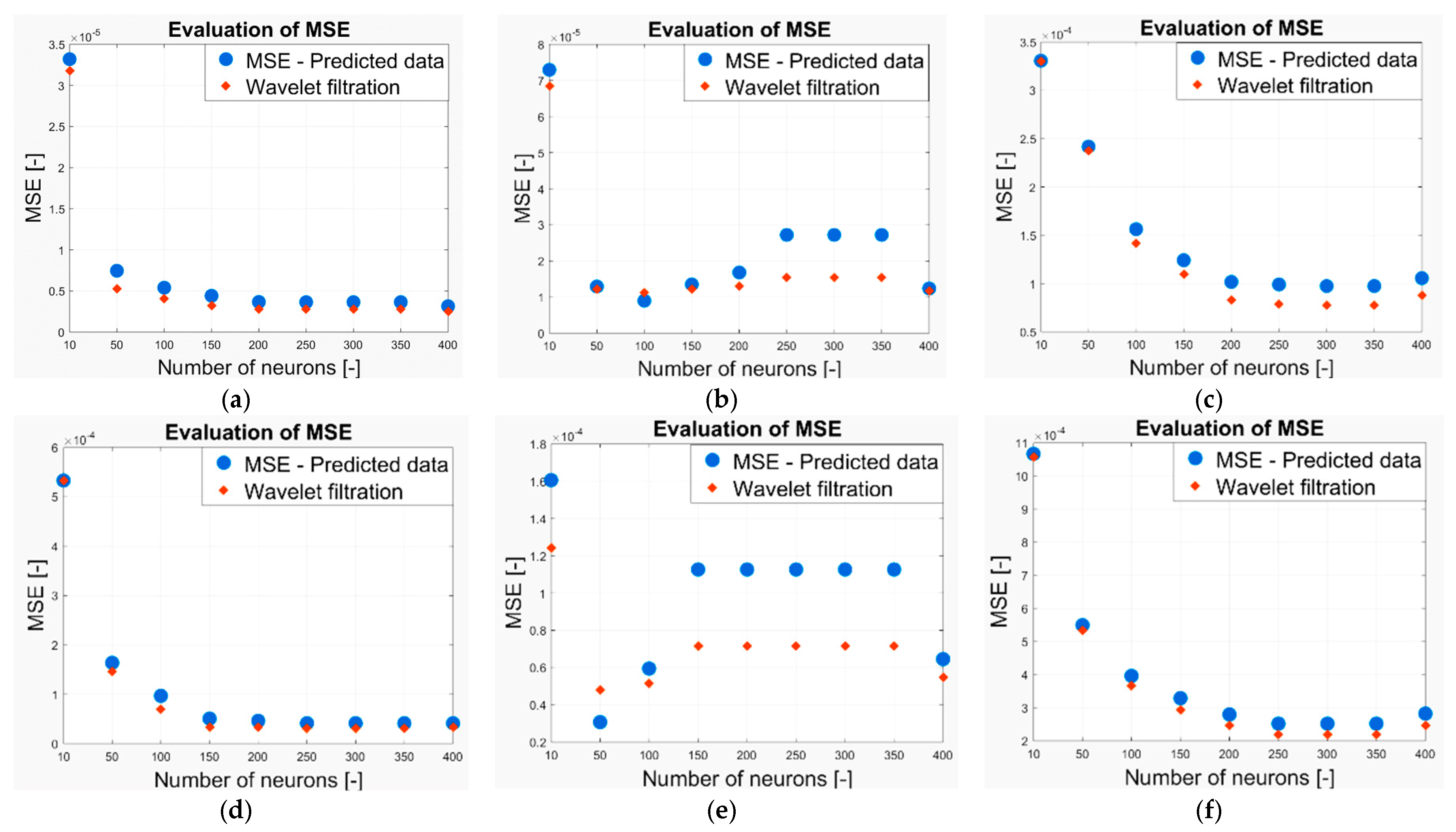

Figure 24.

The Mean Squared Error (MSE) evaluation for the CO2 prediction (a) 6–13 May 2018, (b) 15 May 2018, (c) 1–29 May 2018, (d) 6–13 November 2018, (e) 15 November 2018, (f) 4 November–3 December 2018.

Figure 25.

The correlation coefficient evaluation for the CO2 prediction (a) 6–13 May 2018, (b) 15 May 2018, (c) 1–29 May 2018, (d) 6–13 November 2018, (e) 15 November 2018, (f) 4 November–3 December 2018.

Figure 26.

The Euclidean distance evaluation for CO2 prediction (a) 6–13 May 2018, (b) 15 May 2018, (c) 1–29 May 2018, (d) 6–13 November 2018, (e) 15 November 2018, (f) 4 November–3 December 2018.

Table 1.

The trained models—Training period: May 2018.

| Order of Measurement (Model Number) | Number of Neurons (-) | Accuracy (%) | Testing: Linear Correlation | Testing: MAE | Validation: Linear Correlation | Validation: MAE | Validation: MSE |
|---|---|---|---|---|---|---|---|
| 1 | 10 | 18.6 | 0.405 | 0.012 | 0.432 | 0.012 | 3.31 × 10⁻⁴ |
| 2 | 50 | 40.2 | 0.628 | 0.010 | 0.638 | 0.010 | 2.42 × 10⁻⁴ |
| 3 | 100 | 61.2 | 0.782 | 0.008 | 0.787 | 0.008 | 1.56 × 10⁻⁴ |
| 4 | 150 | 69.6 | 0.828 | 0.007 | 0.831 | 0.007 | 1.24 × 10⁻⁴ |
| 5 | 200 | 75.3 | 0.859 | 0.006 | 0.864 | 0.006 | 1.02 × 10⁻⁴ |
| 6 | 250 | 76.1 | 0.861 | 0.005 | 0.867 | 0.006 | 9.91 × 10⁻⁵ |
| 7 | 300 | 76.6 | 0.864 | 0.005 | 0.870 | 0.006 | 9.75 × 10⁻⁵ |
| 8 | 350 | 76.4 | 0.864 | 0.005 | 0.870 | 0.006 | 9.75 × 10⁻⁵ |
| 9 | 400 | 74.1 | 0.854 | 0.006 | 0.861 | 0.006 | 1.06 × 10⁻⁴ |

Table 2.

The trained models—Training period: November 2018.

| Order of Measurement (Model Number) | Number of Neurons (-) | Accuracy (%) | Testing: Linear Correlation | Testing: MAE | Validation: Linear Correlation | Validation: MAE | Validation: MSE |
|---|---|---|---|---|---|---|---|
| 1 | 10 | 35.5 | 0.603 | 0.024 | 0.621 | 0.024 | 1.862 × 10⁻³ |
| 2 | 50 | 63.9 | 0.796 | 0.016 | 0.804 | 0.016 | 1.377 × 10⁻³ |
| 3 | 100 | 72.0 | 0.841 | 0.013 | 0.852 | 0.013 | 1.295 × 10⁻³ |
| 4 | 150 | 77.0 | 0.872 | 0.012 | 0.878 | 0.012 | 1.414 × 10⁻³ |
| 5 | 200 | 79.0 | 0.884 | 0.010 | 0.889 | 0.011 | 1.393 × 10⁻³ |
| 6 | 250 | 80.6 | 0.894 | 0.010 | 0.898 | 0.010 | 1.385 × 10⁻³ |
| 7 | 300 | 80.6 | 0.895 | 0.010 | 0.898 | 0.010 | 1.385 × 10⁻³ |
| 8 | 350 | 80.6 | 0.895 | 0.010 | 0.898 | 0.010 | 1.385 × 10⁻³ |
| 9 | 400 | 80.6 | 0.893 | 0.011 | 0.898 | 0.011 | 1.553 × 10⁻³ |

Table 3.

The trained models—6th of May 2018 to 13th of May 2018.

| Order of Measurement (Model Number) | Number of Neurons (-) | Accuracy (%) | Testing: Linear Correlation | Testing: MAE | Validation: Linear Correlation | Validation: MAE | Validation: MSE |
|---|---|---|---|---|---|---|---|
| 1 | 10 | 75.7 | 0.855 | 0.004 | 0.857 | 0.004 | 3.32 × 10⁻⁵ |
| 2 | 50 | 94.6 | 0.969 | 0.001 | 0.969 | 0.001 | 7.46 × 10⁻⁶ |
| 3 | 100 | 96.3 | 0.976 | 0.001 | 0.976 | 0.001 | 5.40 × 10⁻⁶ |
| 4 | 150 | 97.0 | 0.979 | 0.001 | 0.981 | 0.001 | 4.41 × 10⁻⁶ |
| 5 | 200 | 97.6 | 0.982 | 0.001 | 0.984 | 0.001 | 3.67 × 10⁻⁶ |
| 6 | 250 | 97.6 | 0.983 | 0.001 | 0.984 | 0.001 | 3.64 × 10⁻⁶ |
| 7 | 300 | 97.6 | 0.983 | 0.001 | 0.984 | 0.001 | 3.64 × 10⁻⁶ |
| 8 | 350 | 97.6 | 0.983 | 0.001 | 0.984 | 0.001 | 3.16 × 10⁻⁶ |
| 9 | 400 | 98.2 | 0.983 | 0.001 | 0.985 | 0.001 | 3.14 × 10⁻⁶ |

Table 4.

The trained models—6th of November 2018 to 13th of November 2018.

| Order of Measurement (Model Number) | Number of Neurons (-) | Accuracy (%) | Testing: Linear Correlation | Testing: MAE | Validation: Linear Correlation | Validation: MAE | Validation: MSE |
|---|---|---|---|---|---|---|---|
| 1 | 10 | 52.0 | 0.724 | 0.016 | 0.714 | 0.017 | 5.33 × 10⁻⁴ |
| 2 | 50 | 85.8 | 0.915 | 0.008 | 0.921 | 0.008 | 1.64 × 10⁻⁴ |
| 3 | 100 | 91.8 | 0.947 | 0.005 | 0.954 | 0.005 | 9.68 × 10⁻⁵ |
| 4 | 150 | 96.1 | 0.969 | 0.003 | 0.973 | 0.003 | 5.07 × 10⁻⁵ |
| 5 | 200 | 96.4 | 0.973 | 0.003 | 0.977 | 0.003 | 4.61 × 10⁻⁵ |
| 6 | 250 | 96.7 | 0.978 | 0.003 | 0.978 | 0.002 | 4.13 × 10⁻⁵ |
| 7 | 300 | 96.7 | 0.978 | 0.003 | 0.978 | 0.002 | 4.13 × 10⁻⁵ |
| 8 | 350 | 96.7 | 0.978 | 0.003 | 0.978 | 0.002 | 4.13 × 10⁻⁵ |
| 9 | 400 | 96.8 | 0.975 | 0.003 | 0.979 | 0.003 | 4.12 × 10⁻⁵ |

Table 5.

The trained models—Training period: 12th of May 2018.

| Order of Measurement (Model Number) | Number of Neurons (-) | Accuracy (%) | Testing: Linear Correlation | Testing: MAE | Validation: Linear Correlation | Validation: MAE | Validation: MSE |
|---|---|---|---|---|---|---|---|
| 1 | 10 | 88.9 | 0.927 | 0.064 | 0.922 | 0.067 | 9.81 × 10⁻³ |
| 2 | 50 | 97.8 | 0.972 | 0.026 | 0.977 | 0.024 | 2.63 × 10⁻³ |
| 3 | 100 | 98.0 | 0.971 | 0.022 | 0.967 | 0.021 | 3.11 × 10⁻³ |
| 4 | 150 | 96.7 | 0.940 | 0.037 | 0.934 | 0.038 | 6.54 × 10⁻³ |
| 5 | 200 | 98.1 | 0.937 | 0.035 | 0.943 | 0.032 | 5.43 × 10⁻³ |
| 6 | 250 | 95.0 | 0.921 | 0.035 | 0.901 | 0.040 | 8.48 × 10⁻³ |
| 7 | 300 | 95.7 | 0.941 | 0.031 | 0.932 | 0.030 | 6.42 × 10⁻³ |
| 8 | 350 | 95.7 | 0.941 | 0.031 | 0.935 | 0.030 | 6.42 × 10⁻³ |
| 9 | 400 | 95.7 | 0.941 | 0.031 | 0.935 | 0.030 | 6.42 × 10⁻³ |

Table 6.

The trained models—Training period: 15th of May 2018.

| Order of Measurement (Model Number) | Number of Neurons (-) | Accuracy (%) | Testing: Linear Correlation | Testing: MAE | Validation: Linear Correlation | Validation: MAE | Validation: MSE |
|---|---|---|---|---|---|---|---|
| 1 | 10 | 97.8 | 0.987 | 0.006 | 0.987 | 0.005 | 7.30 × 10⁻⁵ |
| 2 | 50 | 99.7 | 0.996 | 0.002 | 0.998 | 0.001 | 1.29 × 10⁻⁵ |
| 3 | 100 | 99.9 | 0.997 | 0.001 | 0.998 | 0.001 | 8.98 × 10⁻⁶ |
| 4 | 150 | 99.8 | 0.996 | 0.002 | 0.998 | 0.001 | 1.35 × 10⁻⁵ |
| 5 | 200 | 99.7 | 0.995 | 0.002 | 0.997 | 0.001 | 1.68 × 10⁻⁵ |
| 6 | 250 | 99.5 | 0.993 | 0.002 | 0.993 | 0.002 | 2.72 × 10⁻⁵ |
| 7 | 300 | 99.5 | 0.993 | 0.002 | 0.993 | 0.002 | 2.72 × 10⁻⁵ |
| 8 | 350 | 99.5 | 0.993 | 0.002 | 0.993 | 0.002 | 2.72 × 10⁻⁵ |
| 9 | 400 | 99.8 | 0.996 | 0.001 | 0.997 | 0.001 | 1.24 × 10⁻⁵ |

Table 7.

The trained models—Training period: 15th of November 2018.

| Order of Measurement (Model Number) | Number of Neurons (-) | Accuracy (%) | Testing: Linear Correlation | Testing: MAE | Validation: Linear Correlation | Validation: MAE | Validation: MSE |
|---|---|---|---|---|---|---|---|
| 1 | 10 | 79.0 | 0.895 | 0.006 | 0.890 | 0.007 | 1.61 × 10⁻⁴ |
| 2 | 50 | 94.8 | 0.987 | 0.002 | 0.987 | 0.002 | 3.07 × 10⁻⁵ |
| 3 | 100 | 95.4 | 0.926 | 0.002 | 0.952 | 0.002 | 4.94 × 10⁻⁵ |
| 4 | 150 | 91.9 | 0.861 | 0.003 | 0.897 | 0.003 | 1.13 × 10⁻⁴ |
| 5 | 200 | 91.9 | 0.861 | 0.003 | 0.897 | 0.003 | 1.13 × 10⁻⁴ |
| 6 | 250 | 91.9 | 0.861 | 0.003 | 0.897 | 0.003 | 1.13 × 10⁻⁴ |
| 7 | 300 | 91.9 | 0.861 | 0.003 | 0.897 | 0.003 | 1.13 × 10⁻⁴ |
| 8 | 350 | 91.9 | 0.861 | 0.003 | 0.897 | 0.003 | 1.13 × 10⁻⁴ |
| 9 | 400 | 99.7 | 0.913 | 0.002 | 0.895 | 0.003 | 6.45 × 10⁻⁵ |

Table 8.

The average of the obtained results in each experiment.

| Measurement Period | Accuracy (%) | Validation: Linear Correlation | Validation: MAE | Validation: MSE |
|---|---|---|---|---|
| May | 63.1 | 0.78 | 7.44 × 10⁻³ | 1.51 × 10⁻⁴ |
| November | 72.2 | 0.848 | 1.30 × 10⁻² | 1.45 × 10⁻³ |
| May 6 to 13 | 94.7 | 0.967 | 1.33 × 10⁻³ | 7.52 × 10⁻⁶ |
| November 6 to 13 | 89.9 | 0.939 | 5.00 × 10⁻³ | 1.17 × 10⁻⁴ |
| May 12 | 95.7 | 0.940 | 3.12 × 10⁻² | 6.14 × 10⁻³ |
| May 15 | 99.5 | 0.995 | 1.78 × 10⁻³ | 2.44 × 10⁻⁵ |
| November 15 | 92.0 | 0.912 | 3.22 × 10⁻³ | 9.67 × 10⁻⁵ |
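As a consistency check, the "May" row above can be reproduced by averaging the nine models of Table 1 column by column. The sketch below does this with the standard library; the variable names are illustrative, and the values are copied from the Accuracy and Validation columns of Table 1.

```python
from statistics import mean

# Table 1 (training period: May 2018), models 1 to 9.
accuracy = [18.6, 40.2, 61.2, 69.6, 75.3, 76.1, 76.6, 76.4, 74.1]
val_corr = [0.432, 0.638, 0.787, 0.831, 0.864, 0.867, 0.870, 0.870, 0.861]
val_mae = [0.012, 0.010, 0.008, 0.007, 0.006, 0.006, 0.006, 0.006, 0.006]
val_mse = [3.31e-4, 2.42e-4, 1.56e-4, 1.24e-4, 1.02e-4,
           9.91e-5, 9.75e-5, 9.75e-5, 1.06e-4]

# Arithmetic mean over the nine models, one value per column.
may_row = {
    "accuracy": mean(accuracy),
    "linear_correlation": mean(val_corr),
    "mae": mean(val_mae),
    "mse": mean(val_mse),
}

print(f"Accuracy (%):       {may_row['accuracy']:.1f}")
print(f"Linear correlation: {may_row['linear_correlation']:.2f}")
print(f"MAE:                {may_row['mae']:.2e}")
print(f"MSE:                {may_row['mse']:.2e}")
```

The same averaging can be applied to the remaining training periods by substituting the columns of the corresponding table.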

Table 9.

The average of the obtained results in the six performed experiments.

| Order of Measurement (Model Number) | Number of Neurons (-) | Accuracy (%) | Validation: Linear Correlation | Validation: MAE | Validation: MSE |
|---|---|---|---|---|---|
| 1 | 10 | 64.0 | 0.774 | 1.94 × 10⁻² | 1.83 × 10⁻³ |
| 2 | 50 | 87.8 | 0.899 | 8.88 × 10⁻³ | 6.38 × 10⁻⁴ |
| 3 | 100 | 82.4 | 0.925 | 7.29 × 10⁻³ | 6.75 × 10⁻⁴ |
| 4 | 150 | 89.8 | 0.927 | 9.29 × 10⁻³ | 1.18 × 10⁻³ |
| 5 | 200 | 91.2 | 0.935 | 8.15 × 10⁻³ | 1.02 × 10⁻³ |
| 6 | 250 | 91.1 | 0.931 | 9.14 × 10⁻³ | 1.45 × 10⁻³ |
| 7 | 300 | 91.2 | 0.935 | 7.71 × 10⁻³ | 1.15 × 10⁻³ |
| 8 | 350 | 90.2 | 0.936 | 7.71 × 10⁻³ | 1.15 × 10⁻³ |
| 9 | 400 | 92.1 | 0.936 | 7.85 × 10⁻³ | 1.17 × 10⁻³ |

Table 10.

The summarization of the evaluation parameters of the original CO2 prediction.

| Date of Prediction | MSE [-] | Corr [%] | ED [-] |
|---|---|---|---|
| 6–13 May 2018 | 7.577 × 10⁻⁶ | 97.0 | 0.266 |
| 15 May 2018 | 2.436 × 10⁻⁵ | 99.5 | 0.177 |
| 1–29 May 2018 | 1.505 × 10⁻⁴ | 77.9 | 2.439 |
| 6–13 November 2018 | 1.172 × 10⁻⁴ | 94.2 | 1.017 |
| 15 November 2018 | 9.761 × 10⁻⁵ | 93.3 | 0.366 |
| 4 November–3 December 2018 | 4.065 × 10⁻⁴ | 79.8 | 3.429 |
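The three evaluation parameters in this table can be sketched as follows, assuming the standard definitions of mean squared error, the Pearson correlation coefficient, and Euclidean distance between the reference and predicted signals. The paper's exact normalization is not restated here, so the function names and the toy signals are illustrative only.

```python
import math


def mse(reference, predicted):
    """Mean squared error between two equally long sequences."""
    return sum((r - p) ** 2 for r, p in zip(reference, predicted)) / len(reference)


def correlation(reference, predicted):
    """Pearson correlation coefficient in the range [-1, 1]."""
    n = len(reference)
    mean_r = sum(reference) / n
    mean_p = sum(predicted) / n
    cov = sum((r - mean_r) * (p - mean_p) for r, p in zip(reference, predicted))
    var_r = sum((r - mean_r) ** 2 for r in reference)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    return cov / math.sqrt(var_r * var_p)


def euclidean_distance(reference, predicted):
    """Euclidean (L2) distance between two equally long sequences."""
    return math.sqrt(sum((r - p) ** 2 for r, p in zip(reference, predicted)))


# Toy signals, not measured data.
reference = [1.0, 2.0, 3.0]
predicted = [1.0, 2.0, 4.0]

print(mse(reference, predicted))
print(100.0 * correlation(reference, predicted))  # expressed in percent
print(euclidean_distance(reference, predicted))
```

Under these definitions the Euclidean distance equals the square root of the number of samples times the MSE, so it grows with the length of the evaluated interval even when the per-sample error stays the same.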

Table 11.

The summarization of the evaluation parameters of the wavelet smoothing CO2 prediction.

| Date of Prediction | MSE [-] | Corr [%] | ED [-] |
|---|---|---|---|
| 6–13 May 2018 | 6.442 × 10⁻⁶ | 98.1 | 0.238 |
| 15 May 2018 | 1.473 × 10⁻⁶ | 99.6 | 0.157 |
| 1–29 May 2018 | 1.360 × 10⁻⁴ | 80.6 | 2.292 |
| 6–13 November 2018 | 1.047 × 10⁻⁴ | 95.6 | 0.925 |
| 15 November 2018 | 7.061 × 10⁻⁵ | 95.7 | 0.315 |
| 4 November–3 December 2018 | 3.781 × 10⁻⁴ | 81.3 | 3.281 |

Table 12.

The difference parameters of CO2 prediction.

| Date of Prediction | Diff MSE [-] | Diff Corr [%] | Diff ED [-] |
|---|---|---|---|
| 6–13 May 2018 | 1.134 × 10⁻⁶ | 0.0049 | 0.0277 |
| 15 May 2018 | 4.886 × 10⁻⁶ | 8.524 × 10⁻⁴ | 0.0196 |
| 1–29 May 2018 | 1.443 × 10⁻⁵ | 0.027 | 0.147 |
| 6–13 November 2018 | 1.258 × 10⁻⁵ | 0.0079 | 0.092 |
| 15 November 2018 | 2.699 × 10⁻⁵ | 0.022 | 0.051 |
| 4 November–3 December 2018 | 2.842 × 10⁻⁵ | 0.015 | 0.147 |