Using Machine Learning to Provide Reliable Differentiated Services for IoT in SDN-Like Publish/Subscribe Middleware

Abstract

1. Introduction

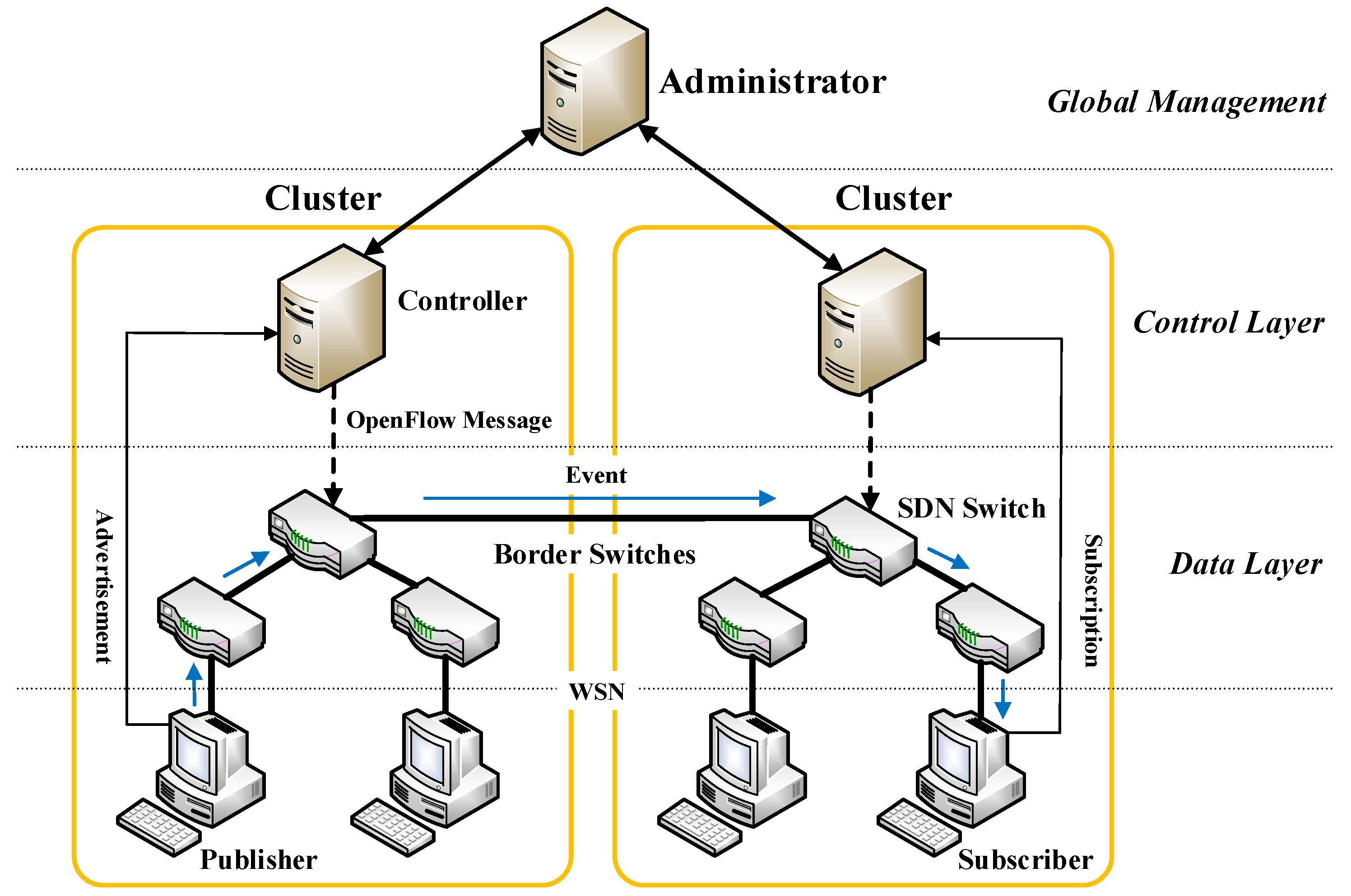

- We propose an SDN-like publish/subscribe middleware architecture and describe how to combine it with priority queues, which can be configured directly on SDN switches, to provide differentiated services. The encoding rules for event topics and priorities are presented in detail.

- To the best of our knowledge, we are the first to predict the queuing delay of switch egress ports using the eXtreme Gradient Boosting (XGBoost) machine learning model, which addresses the difficult problem of obtaining the queuing delay of switches accurately. We also compare the performance of the XGBoost method with that of other methods.

- Building on the above two solutions and making full use of the programmability of SDN, we present a two-layer queue management mechanism based on user requirements that guarantees the reliability of differentiated services from two perspectives: (1) A local queue bandwidth adjustment algorithm for a single switch, which runs in the SDN controllers. The bandwidth of each queue of a switch egress port is readjusted dynamically according to the queuing delay and the queue priority, so that bandwidth is saved and more user delay requirements are satisfied with limited bandwidth. (2) A global QoS control strategy for all switches on the path from a publisher to a subscriber, which runs in the administrator of the pub/sub system. This strategy comprises two algorithms: the initial delay constraint calculation algorithm and the dynamic delay constraint calculation algorithm. In this way, the delay constraint of each switch is configured dynamically and the queue bandwidth is allocated more reasonably from a system-wide view, so that reliable differentiated IoT services are guaranteed.
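
The local adjustment idea in the last contribution can be illustrated with a minimal sketch. It is not the paper's Algorithm 1: the class `EgressQueue`, the function `adjust_once`, the fixed `step`, the donor-selection rule, and all numeric values are assumptions made for illustration. The sketch only shows the general pattern of moving bandwidth from queues whose predicted queuing delay is within its constraint to queues whose predicted delay violates it, serving higher-priority queues first.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EgressQueue:
    queue_id: int           # assumption: a larger id means a higher priority
    bandwidth: float        # bandwidth currently allocated to the queue
    predicted_delay: float  # predicted queuing delay in ms (hypothetical value)
    delay_limit: float      # delay constraint of this queue in ms


def adjust_once(queues: List[EgressQueue], step: float = 5.0,
                min_bandwidth: float = 1.0) -> None:
    """Run one adjustment round in place; the total bandwidth is preserved."""
    # Queues that violate their constraint, highest priority first.
    violating = sorted(
        (q for q in queues if q.predicted_delay > q.delay_limit),
        key=lambda q: q.queue_id,
        reverse=True,
    )
    for needy in violating:
        # Donors meet their constraint and can give up `step` without
        # dropping below the minimum bandwidth.
        donors = [
            q for q in queues
            if q.predicted_delay <= q.delay_limit
            and q.bandwidth - step >= min_bandwidth
        ]
        if not donors:
            break  # nothing left to redistribute in this round
        # Take bandwidth from the lowest-priority donor.
        donor = min(donors, key=lambda q: q.queue_id)
        donor.bandwidth -= step
        needy.bandwidth += step


queues = [
    EgressQueue(queue_id=7, bandwidth=60.0, predicted_delay=12.0, delay_limit=10.0),
    EgressQueue(queue_id=6, bandwidth=30.0, predicted_delay=20.0, delay_limit=60.0),
    EgressQueue(queue_id=5, bandwidth=10.0, predicted_delay=50.0, delay_limit=150.0),
]
adjust_once(queues)
```

In a real deployment the predicted delays would be refreshed after each round, since changing a queue's bandwidth changes its queuing delay; the sketch leaves that feedback loop out.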

2. Related Work

3. Preliminaries

3.1. XGBoost Model

3.2. ARIMA Model

3.3. RED Method

3.4. Incremental Difference Method

4. How to Provide Reliable Differentiated Services

4.1. SDN-like Pub/Sub System Architecture

4.2. Topic Encoding

4.3. Priority Queue

4.4. Reliable Differentiated Services Guarantee Mechanism

5. Queuing Delay Prediction

5.1. Data Preprocessing

5.2. Feature Selection

5.2.1. Packet Distribution

5.2.2. Feature Coding

5.3. Model Training and Parameter Adjustment

6. Reliable Differentiated Services Guarantee Mechanism

6.1. Local Queue Bandwidth Adjustment Algorithm

Algorithm 1. Local Queue Bandwidth Adjustment Algorithm

6.2. Global QoS Control Strategy

Algorithm 2. Initial Delay Constraint Calculation Algorithm

Algorithm 3. Dynamic Delay Constraint Calculation Algorithm

7. Experimental Evaluation

7.1. Experimental Setup

7.2. Queuing Delay Prediction Methods Comparison

7.3. Local Queue Bandwidth Adjustment Algorithm Verification

7.4. Local Queue Bandwidth Adjustment Algorithm Overall Test

7.5. Global QoS Control Strategy Verification

7.6. Background Traffic Experiment

7.7. CBR and VBR Traffic Experiments

7.8. Discussion

8. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Time | Start | End | Width | MaxRoute | Sum | Pkg | In | Out | InOut | Mem | MaxPkg | MinTran | MaxMAC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2971099 | 0 | 0 | 80 | 12000 | 1500000 | 512 | 0 | 0 | 3351904417 | 512 M | 4 M | 1 µs | 32 K |
| 2769963 | 2972088 | 2770952 | 80 | 12000 | 1500000 | 512 | 0 | 0 | 3351904417 | 512 M | 4 M | 1 µs | 32 K |
| 3502000 | 5742931 | 6273832 | 80 | 12000 | 1500000 | 512 | 0 | 0 | 3351904417 | 512 M | 4 M | 1 µs | 32 K |
| 2793245 | 9246023 | 9068169 | 80 | 12000 | 1500000 | 512 | 0 | 0 | 3351904417 | 512 M | 4 M | 1 µs | 32 K |
| 2781192 | 12040209 | 11850302 | 80 | 12000 | 1500000 | 512 | 0 | 0 | 3351904417 | 512 M | 4 M | 1 µs | 32 K |

| XGBoost Parameter | RMSE |
|---|---|
| min_child_weight=10; subsample=0.7; colsample_bytree=0.7; scale_pos_weight=0.8; max_depth=4; eta=0.1; early_stopping_rounds=30; | |
| min_child_weight=10; subsample=0.7; colsample_bytree=0.7; scale_pos_weight=0.8; max_depth=6; eta=0.1; early_stopping_rounds=40; | |
| min_child_weight=10; subsample=1; colsample_bytree=1; scale_pos_weight=1; max_depth=10; eta=0.1; early_stopping_rounds=50; | |
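
The parameter strings in the table above can be turned into a form that a training script could consume. The following is a minimal sketch using only the Python standard library; the helper names `parse_params` and `split_for_training` are hypothetical and not part of the paper. The split reflects how the XGBoost Python package is normally used: `early_stopping_rounds` is an argument of the training call, while the remaining entries are booster parameters.

```python
from typing import Dict, Optional, Tuple, Union

Number = Union[int, float]


def parse_params(cell: str) -> Dict[str, Number]:
    """Parse a 'key=value; key=value;' string into a dictionary."""
    params: Dict[str, Number] = {}
    for item in cell.split(";"):
        item = item.strip()
        if not item:
            continue  # skip the empty piece after the trailing semicolon
        key, value = item.split("=")
        number = float(value)
        # Keep whole numbers as int (e.g. max_depth must be an integer).
        params[key.strip()] = int(number) if number.is_integer() else number
    return params


def split_for_training(
    params: Dict[str, Number],
) -> Tuple[Dict[str, Number], Optional[Number]]:
    """Separate booster parameters from the early-stopping setting."""
    booster = dict(params)
    rounds = booster.pop("early_stopping_rounds", None)
    return booster, rounds


first_row = ("min_child_weight=10; subsample=0.7; colsample_bytree=0.7; "
             "scale_pos_weight=0.8; max_depth=4; eta=0.1; "
             "early_stopping_rounds=30;")
booster_params, early_stopping = split_for_training(parse_params(first_row))
```

With the `xgboost` package installed, `booster_params` could then be passed as the parameter dictionary of a training call and `early_stopping` as its `early_stopping_rounds` argument, together with a validation set.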

| Delay requirement | Priority | Queue | Bandwidth |
|---|---|---|---|
| 10 ms | high | 7 | 60 M/s |
| 60 ms | medium | 6 | 30 M/s |
| 150 ms | low | 5 | 10 M/s |
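
One way to read the table above is as a lookup from a subscriber's delay requirement to a priority and a switch queue. The sketch below makes that reading explicit; it is an assumption, not the paper's stated rule. The function `select_queue` and the policy of choosing the loosest class whose delay bound does not exceed the request are hypothetical.

```python
import bisect
from typing import List, Tuple

# (delay bound in ms, priority, queue id), taken from the table above
# and sorted by delay bound.
SERVICE_LEVELS: List[Tuple[int, str, int]] = [
    (10, "high", 7),
    (60, "medium", 6),
    (150, "low", 5),
]
_BOUNDS = [bound for bound, _, _ in SERVICE_LEVELS]


def select_queue(requirement_ms: float) -> Tuple[str, int]:
    """Return (priority, queue id) for a requested maximum delay.

    Assumed policy: pick the loosest class whose bound is still
    less than or equal to the requested delay.
    """
    index = bisect.bisect_right(_BOUNDS, requirement_ms) - 1
    if index < 0:
        raise ValueError("no service class is strict enough for this request")
    _, priority, queue_id = SERVICE_LEVELS[index]
    return priority, queue_id
```

Under this policy a request of 40 ms falls into the high-priority class, because the medium class only bounds the delay at 60 ms.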

| Parameter Name | Parameter Value |
|---|---|
| rate | 1 MB/s |
| time interval | 5 ms |
| packet size | 1 KB |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shi, Y.; Zhang, Y.; Jacobsen, H.-A.; Tang, L.; Elliott, G.; Zhang, G.; Chen, X.; Chen, J. Using Machine Learning to Provide Reliable Differentiated Services for IoT in SDN-Like Publish/Subscribe Middleware. Sensors 2019, 19, 1449. https://doi.org/10.3390/s19061449