A Unified Multiple-Target Positioning Framework for Intelligent Connected Vehicles

Abstract

1. Introduction

1.1. Self-Positioning

1.2. Target Localization

1.3. Contributions

- A unified theoretical framework based on V2X is proposed for vehicular self-positioning and relative localization of targets. It integrates data from the on-board sensors across the vehicular network and from HD maps with GNSS/INS measurements in a single system.

- Through cooperative positioning, accuracy better than 0.2 m can be achieved for both self-positioning and relative localization of targets in urban areas, using low-cost GNSS/INS, on-board sensors, and widely available HD maps. At the same time, the target sensing range is extended beyond the line of sight and field of view, which substantially improves the completeness of perception.

- Furthermore, compared with state-of-the-art techniques, the proposed framework requires a lower density of vehicular network nodes and fewer vehicle-to-target measurements.

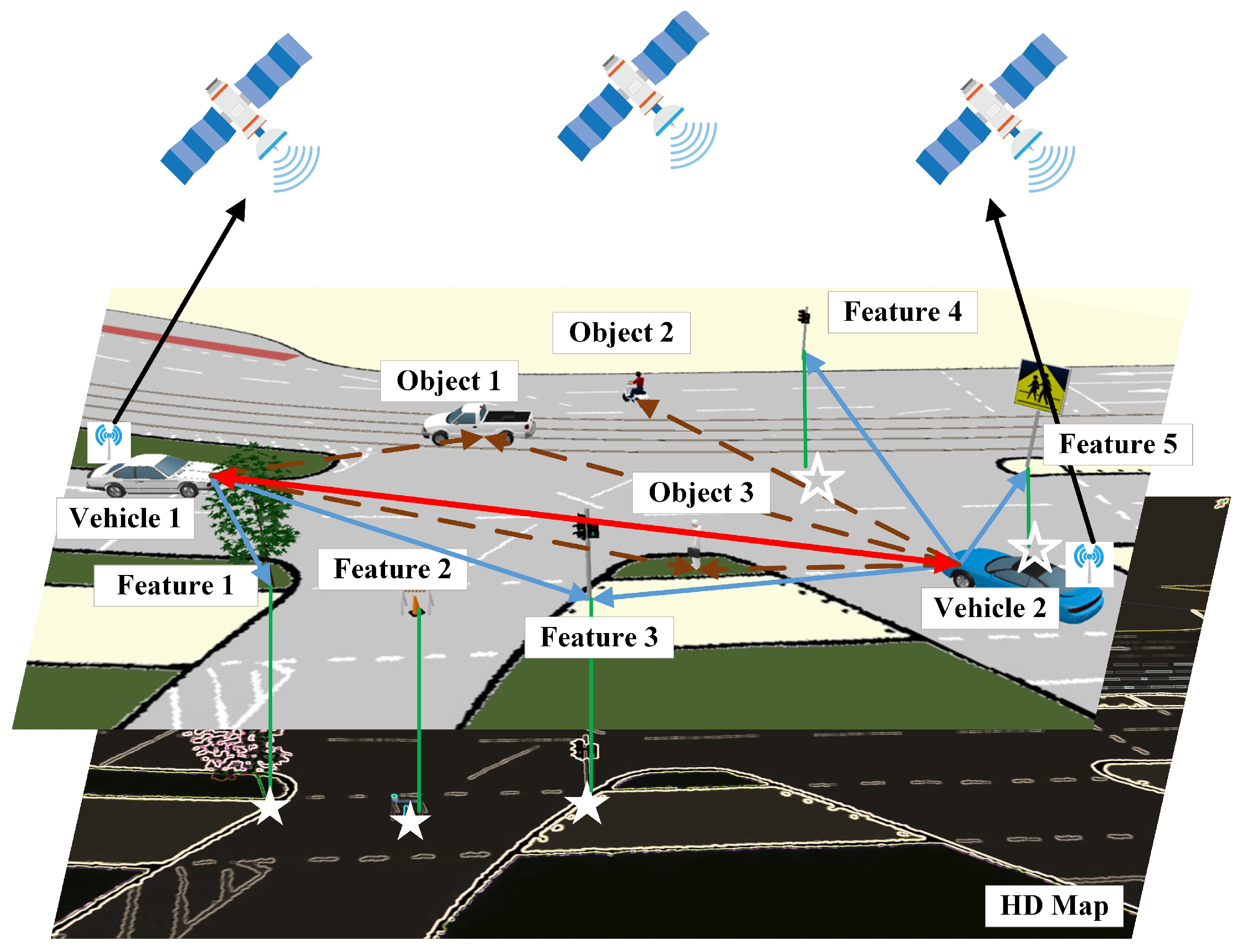

2. Problem Formulation

- Targets: All objects relevant to driving, including the connected vehicles themselves and the elements that make up the environment.

- Connected vehicles: Vehicles in the vehicular network that can obtain information from other vehicles and HD maps.

- Features: Static targets that can be associated with HD maps, e.g., lamps, trees, traffic lights, and traffic signs.

- Objects: Targets, both static and moving, that do not exist in HD maps. These can be pedestrians, bicycles, and disconnected vehicles, all of which are unlabeled on the map.
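The four definitions above form a simple taxonomy: every target is either a connected vehicle, a feature, or an object. The sketch below encodes it as a decision rule, assuming three hypothetical boolean attributes per target (`is_connected`, `in_hd_map`, `is_static`); the names `TargetKind`, `Target`, and `classify` are illustrative only and are not taken from the framework.

```python
from dataclasses import dataclass
from enum import Enum


class TargetKind(Enum):
    CONNECTED_VEHICLE = "connected vehicle"  # in the vehicular network
    FEATURE = "feature"  # static and associable with the HD map
    OBJECT = "object"  # static or moving, absent from the HD map


@dataclass
class Target:
    target_id: str
    is_connected: bool  # hypothetical flag: shares data over V2X
    in_hd_map: bool  # hypothetical flag: matched to an HD-map element
    is_static: bool


def classify(t: Target) -> TargetKind:
    """Map a target onto the categories defined above."""
    if t.is_connected:
        return TargetKind.CONNECTED_VEHICLE
    if t.in_hd_map and t.is_static:
        return TargetKind.FEATURE
    # Pedestrians, bicycles, and disconnected vehicles are unlabeled on the map
    return TargetKind.OBJECT


scene = [
    Target("lamp_3", False, True, True),
    Target("pedestrian_1", False, False, False),
    Target("ego", True, False, False),
    Target("car_7", False, False, False),  # a disconnected vehicle
]
kinds = {t.target_id: classify(t) for t in scene}
```

Keeping `in_hd_map` separate from `is_static` follows the definitions: a static target that the map does not contain (for example, a parked disconnected vehicle) is still an object, not a feature.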

3. The Unified Multiple-Target Positioning Framework

- Factors between pairs of variables, representing the vehicle-vehicle (V-V), vehicle-feature (V-F), and vehicle-object (V-O) constraints, as expressed in Equation (18).

- Factors between the variables and GNSS/INS, representing the constraints from GNSS/INS measurements, as expressed in Equation (19).

- Factors between the variables and the map, representing the constraints from the HD map, as expressed in Equation (20).
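The three factor types above can be illustrated with a minimal weighted least-squares sketch. Purely for illustration, it assumes that every measurement is either a 2-D position (GNSS/INS or HD map, a unary factor) or a 2-D displacement between two targets (V-V, V-F, V-O) with isotropic Gaussian noise, so the problem is linear and the x and y axes decouple. The names `Factor`, `solve_linear`, and `optimize`, the scene, and all noise values are hypothetical; this is not the measurement model or solver of Section 4. With range/bearing measurements the factors become nonlinear, and an iterative solver such as Gauss-Newton or Levenberg-Marquardt would be needed instead of a single linear solve.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Factor:
    """Linear factor on 2-D positions (hypothetical model for illustration)."""
    a: Optional[str]  # None: unary factor (GNSS/INS or HD map) observing x[b]
    b: str            # otherwise the factor observes the displacement x[b] - x[a]
    z: Tuple[float, float]  # measured position or displacement, metres
    sigma: float            # isotropic noise standard deviation, metres


def solve_linear(A: List[List[float]], y: List[float]) -> List[float]:
    """Solve A x = y by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [y[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def optimize(names: List[str], factors: List[Factor]) -> Dict[str, List[float]]:
    """Minimize the sum of sigma-weighted squared residuals; x and y decouple."""
    idx = {name: i for i, name in enumerate(names)}
    n = len(names)
    est = {name: [0.0, 0.0] for name in names}
    for axis in range(2):
        H = [[0.0] * n for _ in range(n)]  # information matrix J^T W J
        g = [0.0] * n                      # information vector J^T W z
        for f in factors:
            w = 1.0 / f.sigma ** 2
            coeff = {idx[f.b]: 1.0}  # Jacobian row: +1 on b, -1 on a
            if f.a is not None:
                coeff[idx[f.a]] = -1.0
            for i, ci in coeff.items():
                g[i] += w * ci * f.z[axis]
                for j, cj in coeff.items():
                    H[i][j] += w * ci * cj
        sol = solve_linear(H, g)
        for name in names:
            est[name][axis] = sol[idx[name]]
    return est


# Hypothetical scene; ground truth v1=(0,0), v2=(10,0), f1=(5,5), o1=(3,-2)
names = ["v1", "v2", "f1", "o1"]
factors = [
    Factor(None, "v1", (1.5, -1.0), 2.0),   # GNSS/INS fix, about 1.8 m off
    Factor(None, "v2", (10.0, 0.0), 2.0),   # GNSS/INS fix
    Factor(None, "f1", (5.0, 5.0), 0.1),    # HD-map position of a lamp
    Factor("v1", "v2", (10.0, 0.0), 0.25),  # V-V
    Factor("v1", "f1", (5.0, 5.0), 0.25),   # V-F
    Factor("v2", "f1", (-5.0, 5.0), 0.25),  # V-F
    Factor("v1", "o1", (3.0, -2.0), 0.25),  # V-O (e.g. a pedestrian)
]
est = optimize(names, factors)
```

In this toy scene the precisely mapped lamp, observed from both vehicles, pulls the estimate of `v1` close to its true position despite the poor GNSS/INS fix, and the object `o1`, which has only a V-O factor, inherits that correction directly.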

4. Implementation Aspects

4.1. Perception Demands and Sensing Capability of Vehicles

4.2. Measurement Model

4.3. Optimized Variable Allocation and Data Association

4.4. Optimization Problem Solving

5. Theoretical Analysis on the Framework Performance

- vehicle , measured by GNSS/INS;

- measured from vehicle i to vehicle l, where ;

- feature , obtained from the HD map;

- vehicle to feature , measured by the vehicle’s on-board sensors; and

- vehicle i to object .
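To make the contribution of each measurement type above concrete, the following sketch computes a Cramér-Rao lower bound (CRLB) for a toy one-axis problem. It assumes a linear Gaussian model in which absolute terms (GNSS/INS, HD map) observe a single variable and relative terms (V-V, V-F, V-O) observe the difference of two variables. Under that assumption the Fisher information matrix is $J=\sum_k H_k^\top R_k^{-1} H_k$, and any unbiased estimator has covariance of at least $J^{-1}$. The function names, the variable indexing, and the noise values are hypothetical, and this is not claimed to be the exact bound derived in this section.

```python
from typing import List, Optional, Tuple

# (a, b, sigma): a is None for absolute terms (GNSS/INS or HD map) on x[b];
# otherwise the term observes x[b] - x[a] (V-V, V-F, or V-O)
Measurement = Tuple[Optional[int], int, float]


def fisher_information(n: int, meas: List[Measurement]) -> List[List[float]]:
    """Accumulate H^T R^-1 H for scalar measurements with +1/-1 Jacobian rows."""
    J = [[0.0] * n for _ in range(n)]
    for a, b, sigma in meas:
        w = 1.0 / sigma ** 2
        J[b][b] += w
        if a is not None:
            J[a][a] += w
            J[a][b] -= w
            J[b][a] -= w
    return J


def invert(M: List[List[float]]) -> List[List[float]]:
    """Gauss-Jordan inversion with partial pivoting."""
    n = len(M)
    A = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(M)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        p = A[col][col]
        A[col] = [v / p for v in A[col]]
        for r in range(n):
            if r != col:
                f = A[r][col]
                A[r] = [vr - f * vc for vr, vc in zip(A[r], A[col])]
    return [row[n:] for row in A]


def crlb_std(n: int, meas: List[Measurement]) -> List[float]:
    """Lower bound on the standard deviation of each variable."""
    cov = invert(fisher_information(n, meas))
    return [cov[i][i] ** 0.5 for i in range(n)]


# Index 0: vehicle, 1: object, 2: feature (hypothetical one-axis toy)
gnss_only = crlb_std(2, [(None, 0, 2.0), (0, 1, 0.25)])
with_map = crlb_std(3, [(None, 0, 2.0), (0, 1, 0.25), (None, 2, 0.1), (0, 2, 0.25)])
```

With GNSS/INS alone, the vehicle bound equals the GNSS/INS standard deviation and the object bound is slightly larger. Adding a single well-mapped feature observed from the vehicle shrinks both bounds several-fold, which is the qualitative effect the measurement list above is meant to capture.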

6. Numerical Results

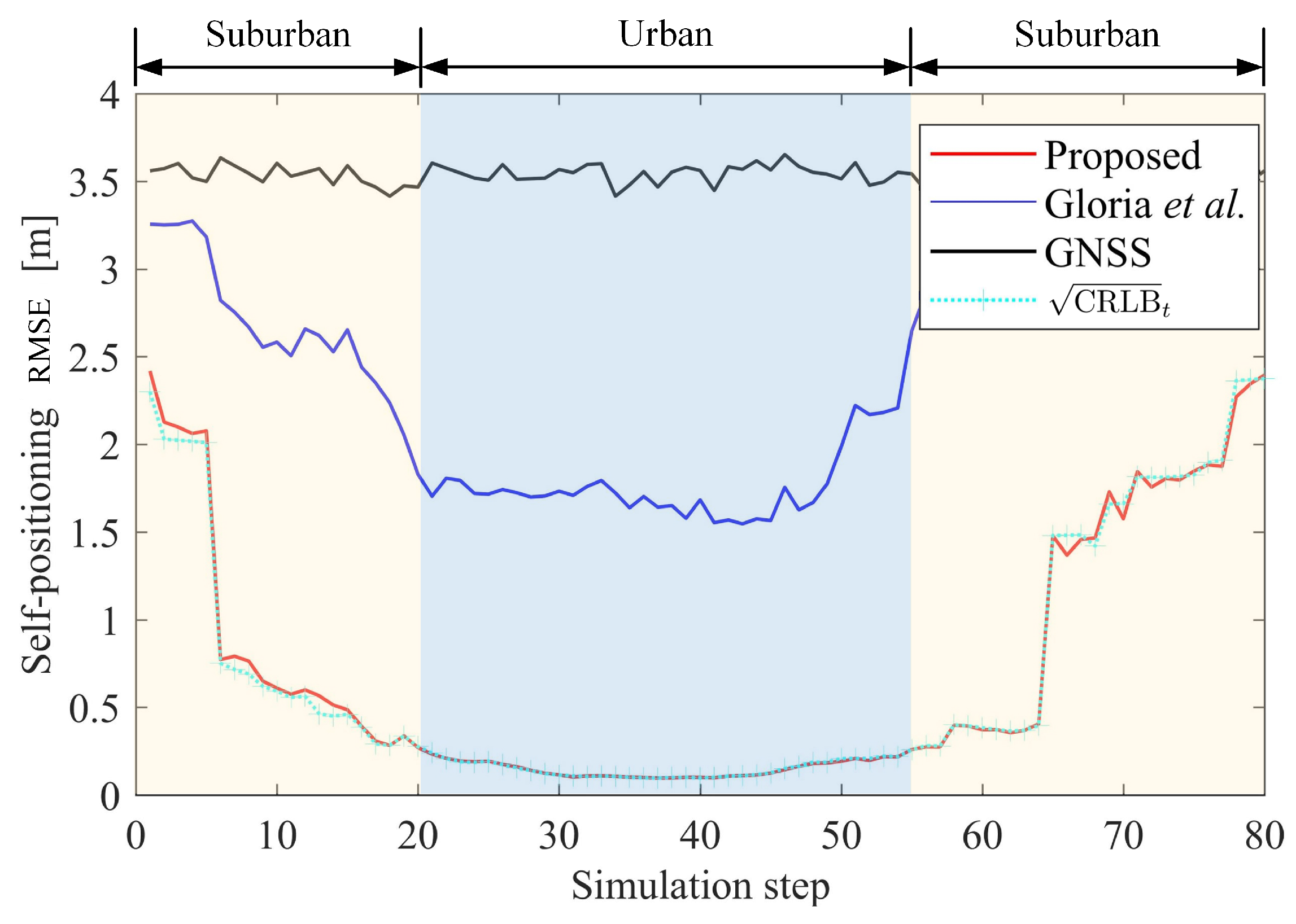

6.1. Performance in a Typical Scenario

- (15 lamps, 4 traffic lights, and 4 traffic signs)

- (10 pedestrians and 8 disconnected vehicles)

- 0.25 m, and 0.05 m

- 2.5 m, and 0.1 rad

- 70 m, 80 m, and

- 100 m, 30 m, and 60 m

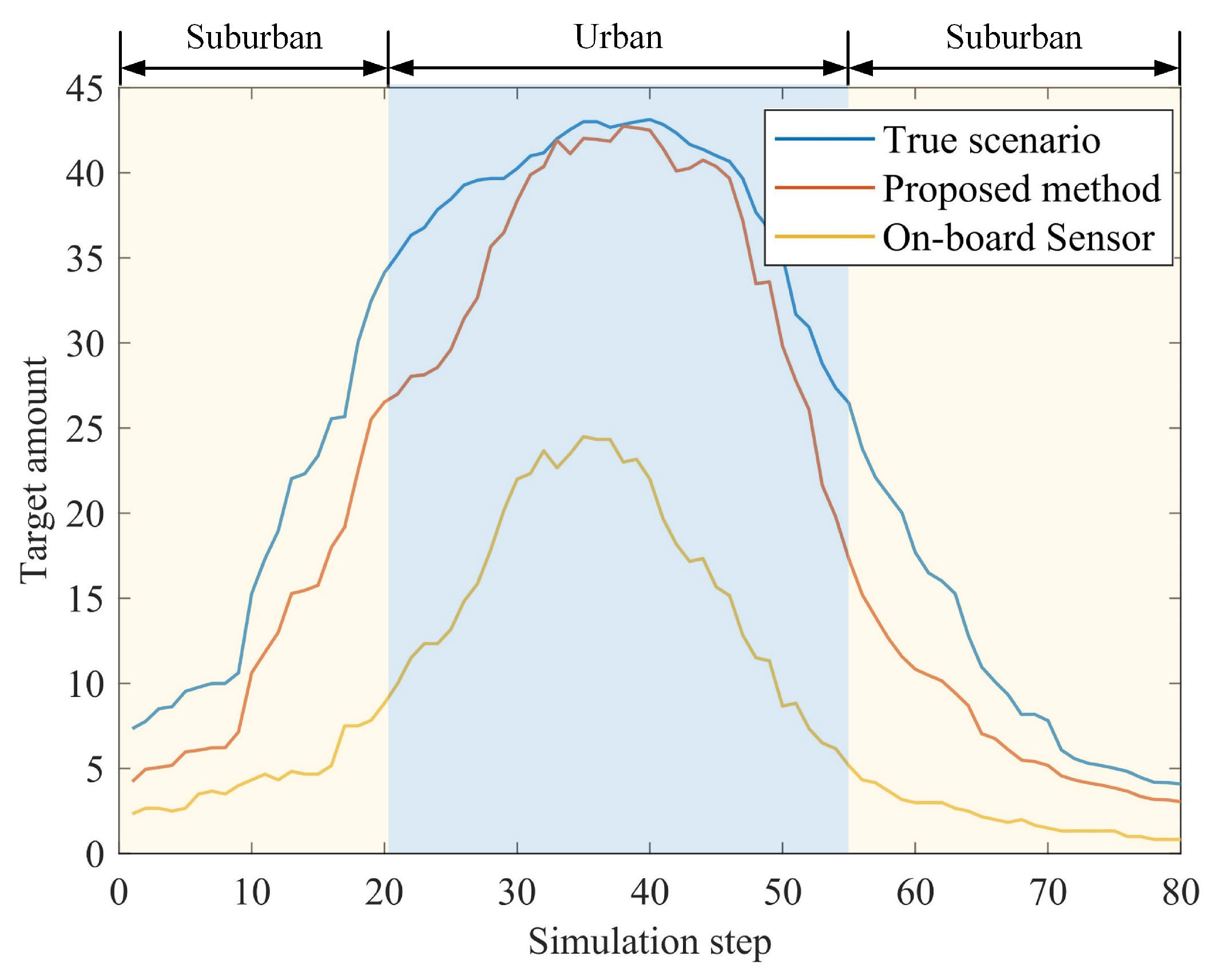

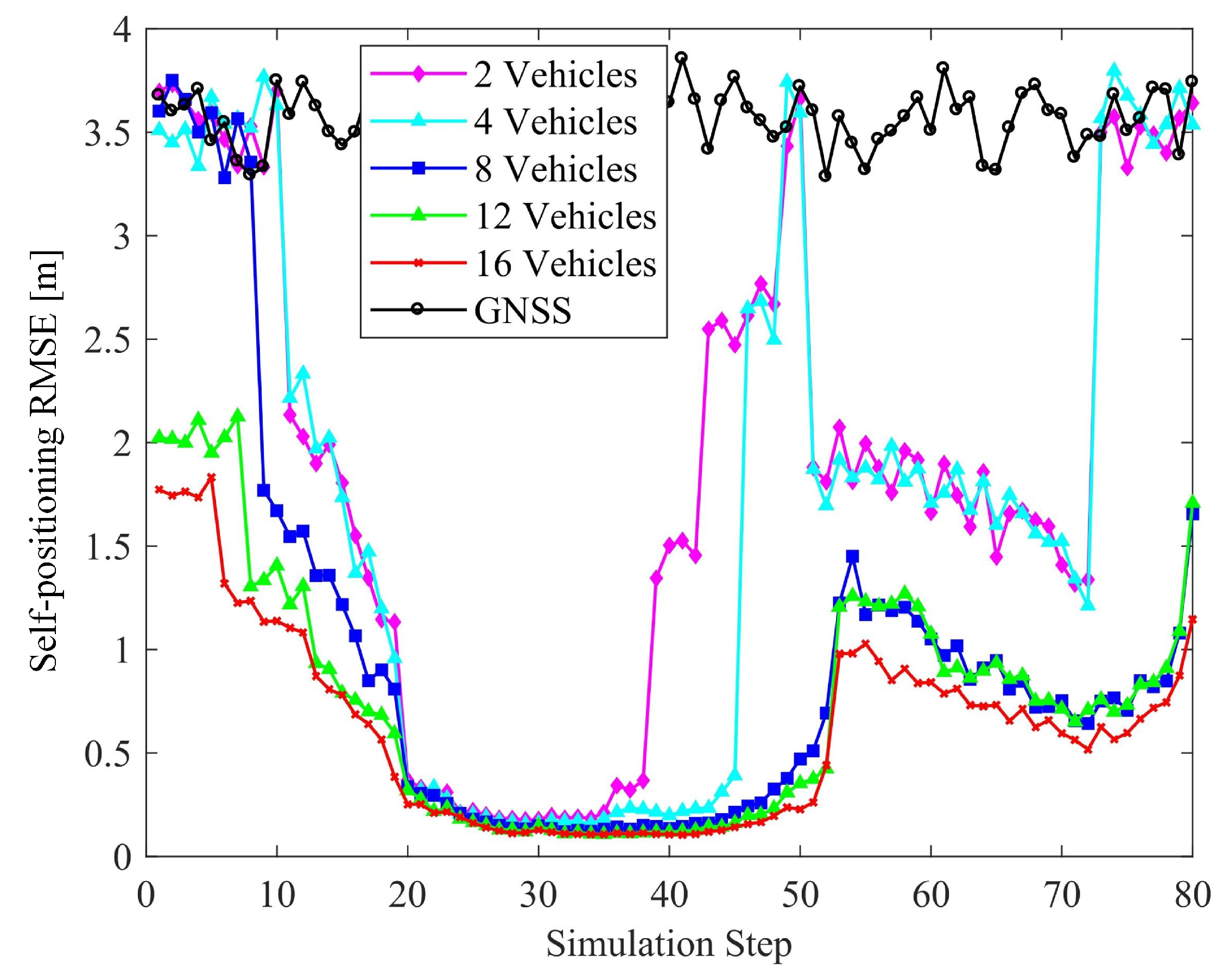

6.2. Adaptability to Different Scenarios

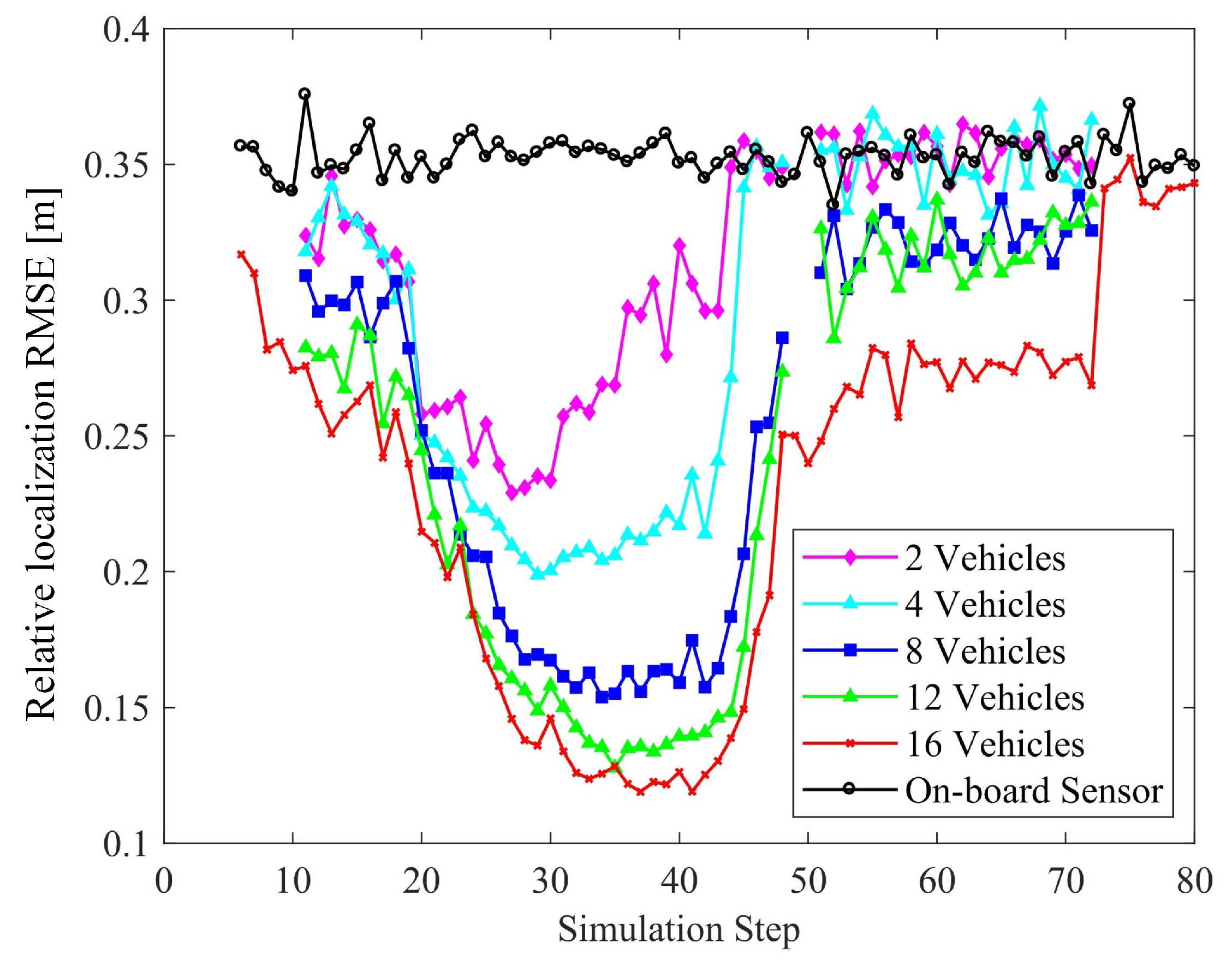

6.2.1. Number of Connected Vehicles

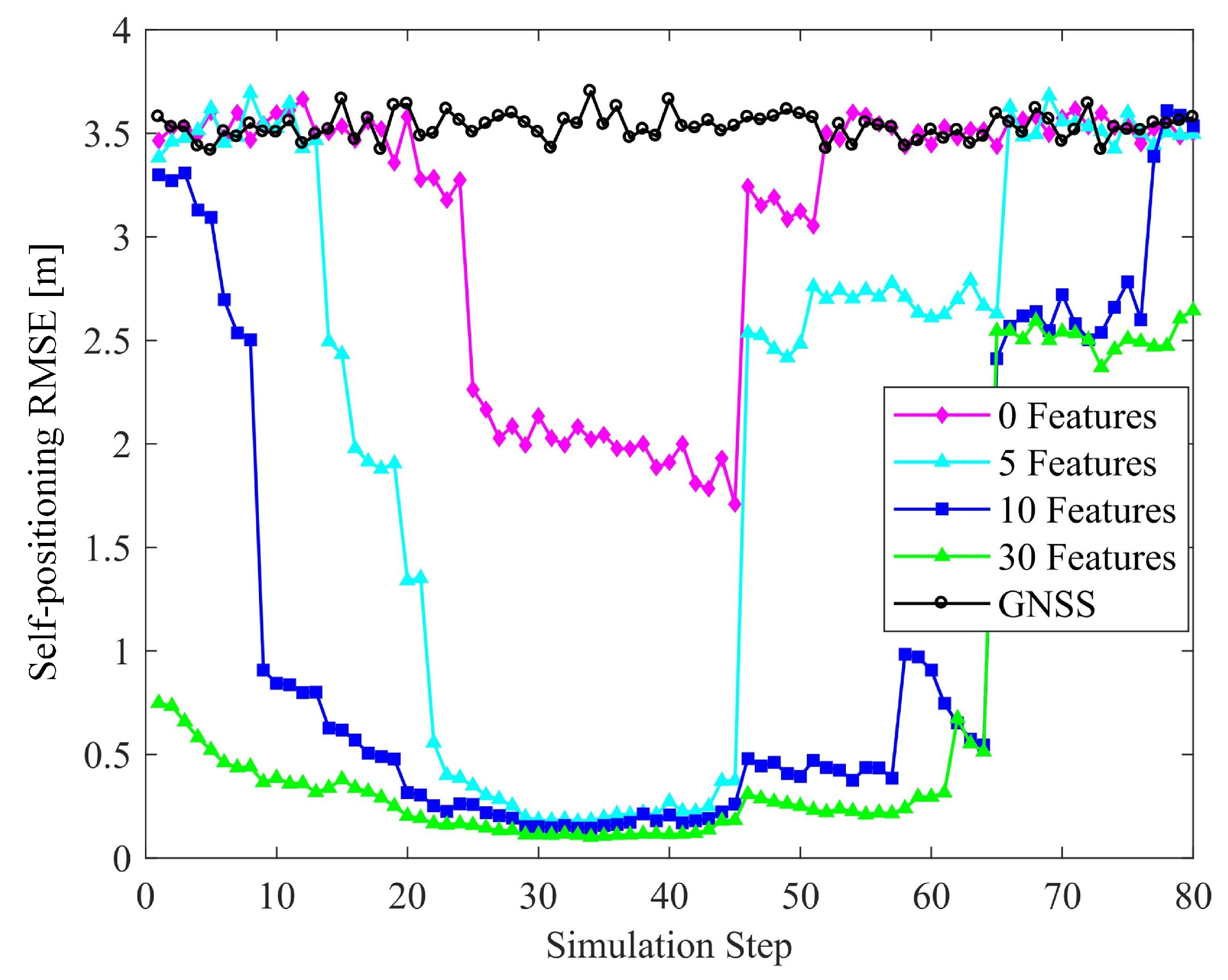

6.2.2. Number of Features

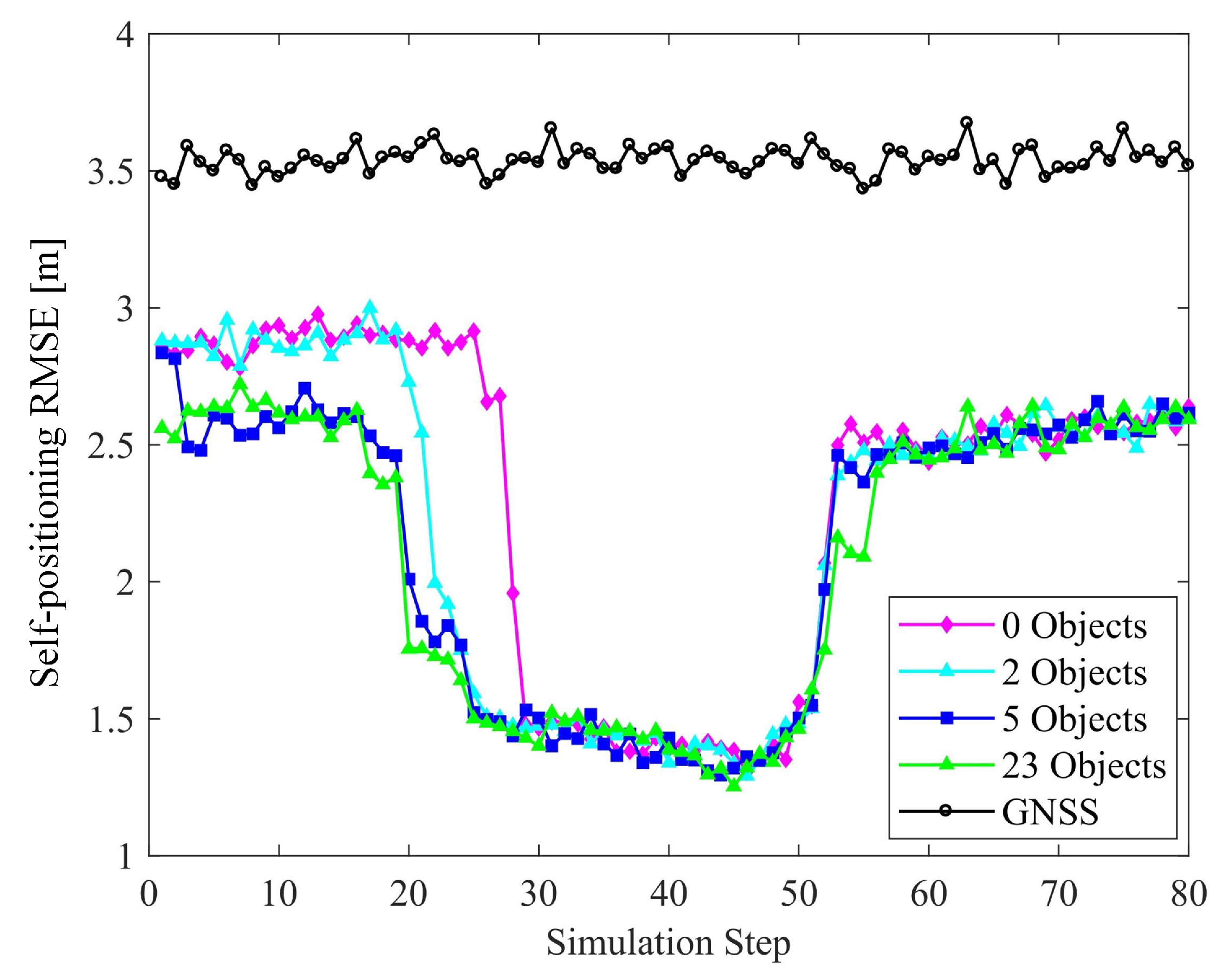

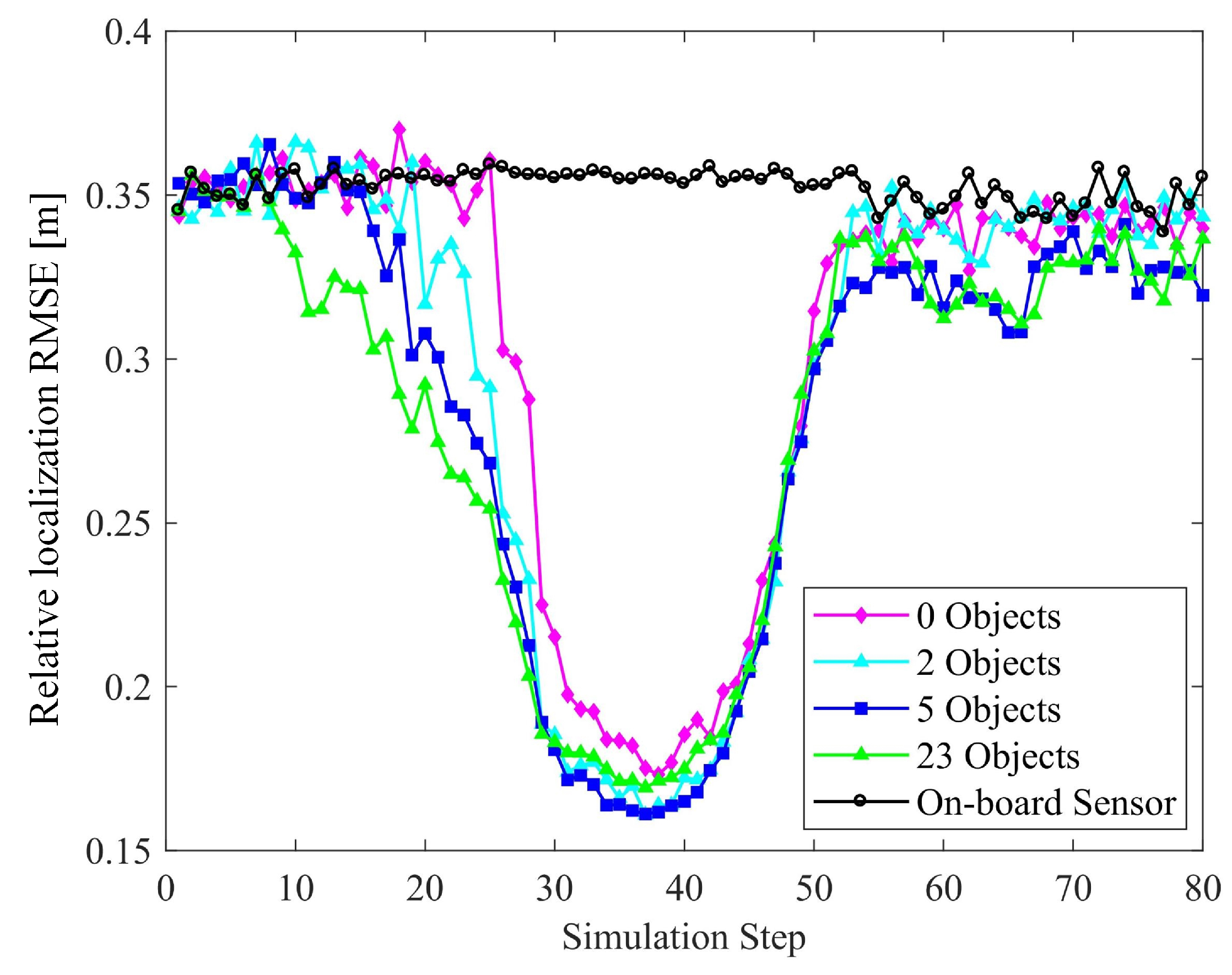

6.2.3. Number of Objects

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Grade | Title | Map | Accuracy | Typical Condition |
|---|---|---|---|---|
| Driver Scenario | | | | |
| 1 (DA) | Driver Assistance | ADAS | Submeter | Optional |
| 2 (PA) | Partial Autopilot | ADAS | Submeter | Optional |
| Automatic Driving Sys. Scenario | | | | |
| 3 (CA) | Conditional Autopilot | ADAS + HD | Submeter/Centimeter | Optional |
| 4 (HA) | High-Level Automated Driving | ADAS + HD | Submeter/Centimeter | Essential |
| 5 (FA) | Completely Automated Driving | HD | Centimeter | Essential (auto updated) |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Xiao, Z.; Yang, D.; Wen, F.; Jiang, K. A Unified Multiple-Target Positioning Framework for Intelligent Connected Vehicles. Sensors 2019, 19, 1967. https://doi.org/10.3390/s19091967
