Vibration-Response-Only Structural Health Monitoring for Offshore Wind Turbine Jacket Foundations via Convolutional Neural Networks

Abstract

:1. Introduction

A defining marine environment main characteristic is that structures are always subject to excitations. Techniques for structural health monitoring, vibration and data analysis must be capable of coping with such ambient excitations. As the input is typically not known, a normal input-output formalism cannot be used.

2. Experimental Setup

- The top beam ( m), where the modal shaker is attached to simulate a nacelle mass and the effects of wind excitation;

- The tower with three tubular sections connected with bolts;

- The jacket, which includes a pyramidal structure made up by 32 bars (S275JR steel) of different lengths, sheets (DC01 LFR steel), and other elements such as bolts and nuts.

3. Structural Health Monitoring Proposed Methodology

3.1. Data Gathering

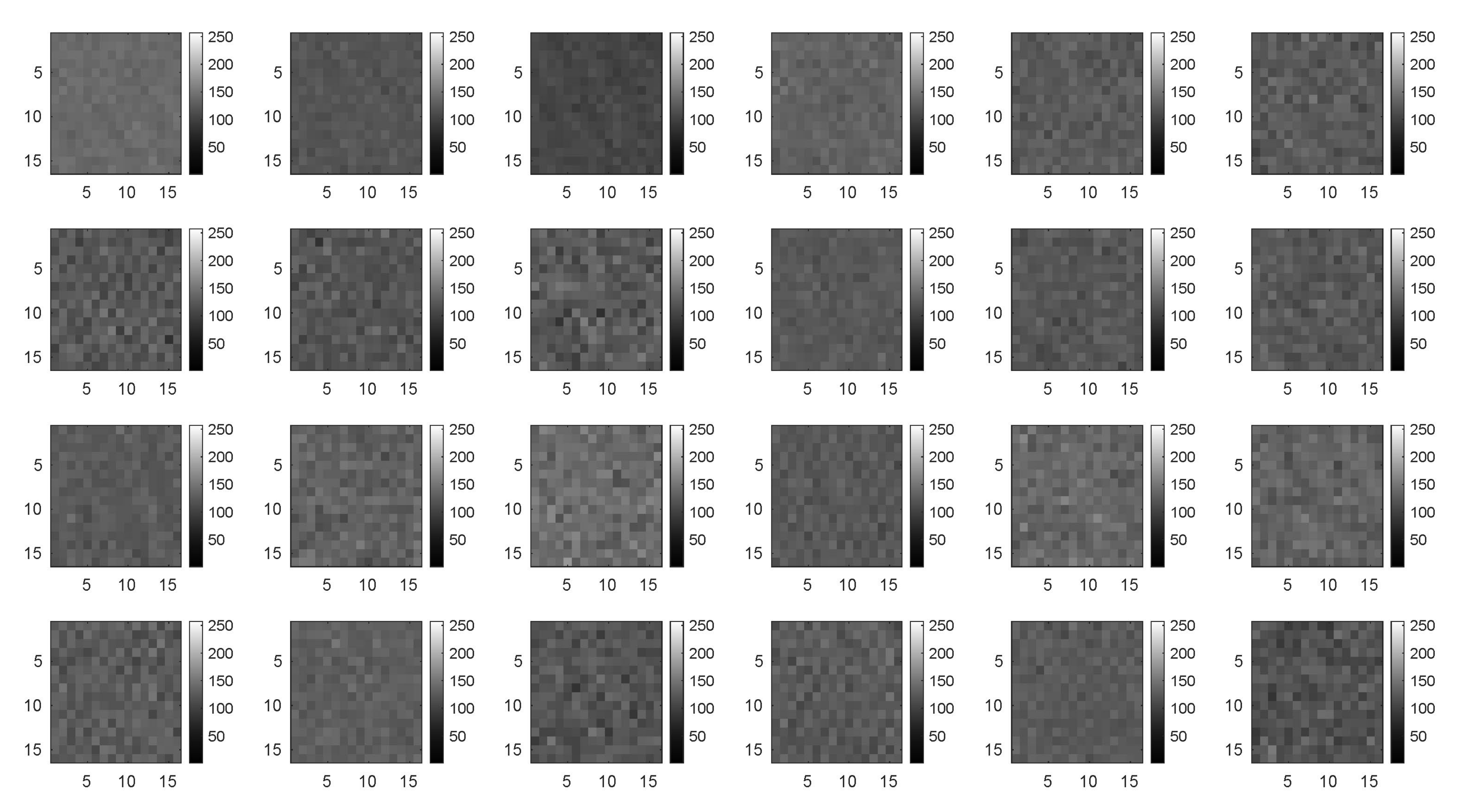

3.2. Data Preprocessing: Scaling, Reshaping, Augmentation, and Signal-To-Image Conversion

3.2.1. Data Scaling

3.2.2. Data Reshaping

3.2.3. Data Augmentation

3.2.4. Signal-To-Image Conversion

3.3. Deep Convolutional Neural Network

3.3.1. Data Split: Training Set and Validation Set

3.3.2. Network Architecture

3.3.3. Network Training

3.3.4. Network Architecture and Hyperparameter Tuning

3.3.5. Network Implementation

4. Results and Discussion

4.1. Metrics to Evaluate the Classification Model

- Accuracy: proportion of true results (both true positives and true negatives) among the total number of cases examined.

- Precision: proportion of positive results that are true positive.

- Recall: proportion of actual positives that are correctly identified as such.

- Specificity: proportion of actual negatives that are correctly identified as such.

- F1-score: harmonic mean of the precision and recall.

4.2. Results of the CNN Classification Method

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Ohlenforst, K.; Backwell, B.; Council, G.W.E. Global Wind Report 2018. Available online: https://gwec.net/global-wind-report-2018/ (accessed on 15 June 2020).

- Lai, W.J.; Lin, C.Y.; Huang, C.C.; Lee, R.M. Dynamic analysis of Jacket Substructure for offshore wind turbine generators under extreme environmental conditions. Appl. Sci. 2016, 6, 307. [Google Scholar] [CrossRef] [Green Version]

- Moulas, D.; Shafiee, M.; Mehmanparast, A. Damage analysis of ship collisions with offshore wind turbine foundations. Ocean. Eng. 2017, 143, 149–162. [Google Scholar] [CrossRef]

- Van Kuik, G.; Peinke, J. Long-Term Research Challenges in Wind Energy-A Research Agenda by the European Academy of Wind Energy; Springer: Berlin/Heidelberg, Germany, 2016; Volume 6. [Google Scholar]

- Liu, W.; Tang, B.; Han, J.; Lu, X.; Hu, N.; He, Z. The structure healthy condition monitoring and fault diagnosis methods in wind turbines: A review. Renew. Sustain. Energy Rev. 2015, 44, 466–472. [Google Scholar] [CrossRef]

- Qing, X.; Li, W.; Wang, Y.; Sun, H. Piezoelectric transducer-based structural health monitoring for aircraft applications. Sensors 2019, 19, 545. [Google Scholar] [CrossRef] [PubMed]

- Weijtjens, W.; Verbelen, T.; De Sitter, G.; Devriendt, C. Foundation structural health monitoring of an offshore wind turbine: A full-scale case study. Struct. Health Monit. 2016, 15, 389–402. [Google Scholar] [CrossRef]

- Oliveira, G.; Magalhães, F.; Cunha, Á.; Caetano, E. Vibration-based damage detection in a wind turbine using 1 year of data. Struct. Control. Health Monit. 2018, 25, e2238. [Google Scholar] [CrossRef]

- Zugasti Uriguen, E. Design and validation of a methodology for wind energy structures health monitoring. Ph.D. Thesis, Universitat Politècnica de Catalunya, Barcelona, Spain, 2014. [Google Scholar]

- Lee, J.J.; Lee, J.W.; Yi, J.H.; Yun, C.B.; Jung, H.Y. Neural networks-based damage detection for bridges considering errors in baseline finite element models. J. Sound Vib. 2005, 280, 555–578. [Google Scholar] [CrossRef]

- Kim, B.; Min, C.; Kim, H.; Cho, S.; Oh, J.; Ha, S.H.; Yi, J.H. Structural health monitoring with sensor data and cosine similarity for multi-damages. Sensors 2019, 19, 3047. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Stetco, A.; Dinmohammadi, F.; Zhao, X.; Robu, V.; Flynn, D.; Barnes, M.; Keane, J.; Nenadic, G. Machine learning methods for wind turbine condition monitoring: A review. Renew. Energy 2019, 133, 620–635. [Google Scholar] [CrossRef]

- Ruiz, M.; Mujica, L.E.; Alferez, S.; Acho, L.; Tutiven, C.; Vidal, Y.; Rodellar, J.; Pozo, F. Wind turbine fault detection and classification by means of image texture analysis. Mech. Syst. Signal Process. 2018, 107, 149–167. [Google Scholar] [CrossRef] [Green Version]

- Vidal, Y.; Pozo, F.; Tutivén, C. Wind turbine multi-fault detection and classification based on SCADA data. Energies 2018, 11, 3018. [Google Scholar] [CrossRef] [Green Version]

- Spanos, N.I.; Sakellariou, J.S.; Fassois, S.D. Exploring the limits of the Truncated SPRT method for vibration-response-only damage diagnosis in a lab-scale wind turbine jacket foundation structure. Procedia Eng. 2017, 199, 2066–2071. [Google Scholar] [CrossRef]

- Spanos, N.A.; Sakellariou, J.S.; Fassois, S.D. Vibration-response-only statistical time series structural health monitoring methods: A comprehensive assessment via a scale jacket structure. Struct. Health Monit. 2019, 19, 736–750. [Google Scholar] [CrossRef]

- Vidal Seguí, Y.; Rubias, J.L.; Pozo Montero, F. Wind turbine health monitoring based on accelerometer data. In Proceedings of the 9th ECCOMAS Thematic Conference on Smart Structures and Materials, Paris, France, 8–11 July 2019; pp. 1604–1611. [Google Scholar]

- Vidal, Y.; Aquino, G.; Pozo, F.; Gutiérrez-Arias, J.E.M. Structural Health Monitoring for Jacket-Type Offshore Wind Turbines: Experimental Proof of Concept. Sensors 2020, 20, 1835. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Pozo, F.; Vidal, Y.; Serrahima, J. On real-time fault detection in wind turbines: Sensor selection algorithm and detection time reduction analysis. Energies 2016, 9, 520. [Google Scholar] [CrossRef] [Green Version]

- Pal, K.K.; Sudeep, K. Preprocessing for image classification by convolutional neural networks. In Proceedings of the 2016 IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT), Bangalore, India, 20–21 May 2016; pp. 1778–1781. [Google Scholar]

- Chen, X.W.; Lin, X. Big data deep learning: Challenges and perspectives. IEEE Access 2014, 2, 514–525. [Google Scholar] [CrossRef]

- Santurkar, S.; Tsipras, D.; Ilyas, A.; Madry, A. How does batch normalization help optimization? In Proceedings of the Conference on Advances in Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 2483–2493. [Google Scholar]

- Albawi, S.; Mohammed, T.A.; Al-Zawi, S. Understanding of a convolutional neural network. In Proceedings of the 2017 International Conference on Engineering and Technology (ICET), Antalya, Turkey, 21–23 August 2017; pp. 1–6. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Rusiecki, A. Trimmed categorical cross-entropy for deep learning with label noise. Electron. Lett. 2019, 55, 319–320. [Google Scholar] [CrossRef]

- Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010; pp. 249–256. [Google Scholar]

- DeCastro-García, N.; Muñoz Castañeda, Á.L.; Escudero García, D.; Carriegos, M.V. Effect of the Sampling of a Dataset in the Hyperparameter Optimization Phase over the Efficiency of a Machine Learning Algorithm. Complexity 2019, 2019, 6278908. [Google Scholar] [CrossRef]

- Swersky, K.; Snoek, J.; Adams, R.P. Multi-task bayesian optimization. In Proceedings of the Advances in Neural Information Processing Systems, Stateline, NV, USA, 5–10 December 2013; pp. 2004–2012. [Google Scholar]

- Hossin, M.; Sulaiman, M. A review on evaluation metrics for data classification evaluations. Int. J. Data Min. Knowl. Manag. Process. (IJDKP) 2015, 5, 1–11. [Google Scholar]

- Saito, K.; Yamamoto, S.; Ushiku, Y.; Harada, T. Open set domain adaptation by backpropagation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 153–168. [Google Scholar]

| Label | Structural State | 0.5 WN | 1 WN | 2 WN | 3 WN |

|---|---|---|---|---|---|

| 1 | Healthy bar | 10 tests | 10 tests | 10 tests | 10 tests |

| 2 | Replica bar | 5 tests | 5 tests | 5 tests | 5 tests |

| 3 | Crack damaged bar | 5 tests | 5 tests | 5 tests | 5 tests |

| 4 | Unlocked bolt | 5 tests | 5 tests | 5 tests | 5 tests |

| Sensor 1 | … | Sensor 24 |

|---|---|---|

| = | ⋯ | = |

| Signal 1 | Signal 2 | … | Signal 24 | |

|---|---|---|---|---|

| = |

| Layer | Ouput Size | Parameters | # of Parameters |

|---|---|---|---|

| Input 16 × 16 × 24 images | 16 × 16 × 24 | - | 0 |

| Convolution#1 32 filters of size 5 × 5 × 24 with padding [1 1 1 1] | 14 × 14 × 32 | Weight 5 × 5 × 24 × 32 Bias 1 × 1 × 32 | 19,232 |

| Batch Normalization#1 | 14 × 14 × 32 | Offset 1 × 1 × 32 Scale 1 × 1 × 32 | 64 |

| ReLu#1 | 14 × 14 × 32 | - | 0 |

| Convolution#2 64 filters of size 5 × 5 × 24 with padding [1 1 1 1] | 12 × 12 × 64 | Weight 5 × 5 × 32 × 64 Bias 1 × 1 × 64 | 51,264 |

| Batch Normalization#2 | 12 × 12 × 64 | Offset 1 × 1 × 64 Scale 1 × 1 × 64 | 128 |

| ReLu#2 | 12 × 12 × 64 | - | 0 |

| Convolution#3 128 filters of size 5 × 5 × 24 with padding [1 1 1 1] | 10 × 10 × 128 | Weight 5 × 5 × 64 × 128 Bias 1 × 1 × 128 | 204,928 |

| Batch Normalization#3 | 10 × 10 × 128 | Offset 1 × 1 × 128 Scale 1 × 1 × 128 | 256 |

| ReLu#3 | 10 × 10 × 128 | - | 0 |

| Convolution#4 256 filters of size 5 × 5 × 24 with padding [1 1 1 1] | 8 × 8 × 256 | Weight 5 × 5 × 128 × 256 Bias 1 × 1 × 256 | 819,456 |

| Batch Normalization#4 | 8 × 8 × 256 | Offset 1 × 1 × 256 Scale 1 × 1 × 256 | 512 |

| ReLu#4 | 8 × 8 × 256 | - | 0 |

| Convolution#5 128 filters of size 5 × 5 × 24 with padding [1 1 1 1] | 6 × 6 × 128 | Weight 5 × 5 × 256 × 128 Bias 1 × 1 × 128 | 819,456 |

| Batch Normalization#5 | 6 × 6 × 128 | Offset 1 × 1 × 128 Scale 1 × 1 × 128 | 256 |

| ReLu#5 | 6 × 6 × 128 | - | 0 |

| Convolution#6 64 filters of size 5 × 5 × 24 with padding [1 1 1 1] | 4 × 4 × 64 | Weight 5 × 5 × 128 × 64 Bias 1 × 1 × 64 | 204,864 |

| Batch Normalization#6 | 4 × 4 × 64 | Offset 1 × 1 × 64 Scale 1 × 1 × 64 | 128 |

| ReLu#6 | 4 × 4 × 64 | - | 0 |

| Convolution#7 32 filters of size 5 × 5 × 24 with padding [1 1 1 1] | 2 × 2 × 32 | Weight 5 × 5 × 64 × 32 Bias 1 × 1 × 32 | 51,232 |

| Batch Normalization#7 | 2 × 2 × 32 | Offset 1 × 1 × 32 Scale 1 × 1 × 32 | 64 |

| ReLu#7 | 2 × 2 × 32 | - | 0 |

| Fully connected layer#1 | 1 × 1 × 32 | Weight 32 × 128 Bias 32 × 1 | 4128 |

| Fully connected layer#2 | 1 × 1 × 16 | Weight 16 × 32 Bias 16 × 1 | 528 |

| Fully connected layer#3 | 1 × 1 × 4 | Weight 4 × 16 Bias 4 × 1 | 68 |

| Softmax | - | - | 0 |

| classoutput | - | - | 0 |

| Strategy | Accuracy | Precision | Recall | F1 Score | Specificity |

|---|---|---|---|---|---|

| ReLu-Padding-L2 regularization | 93.81 | 92.77 | 93.73 | 93.22 | 97.98 |

| Relu-No padding-L2 regularization | 93.69 | 92.73 | 93.44 | 93.07 | 97.92 |

| Relu-Padding-No L2 regularization | 93.63 | 92.73 | 93.82 | 93.25 | 97.89 |

| Predicted Class | |||

|---|---|---|---|

| Positive | Negative | ||

| Actual class | Positive | True positive (TP) | False negative (FN) |

| Negative | False positive (FP) | True negative (TN) | |

| Dataset | Label | Precision | Recall | F1-Score | Specificity |

|---|---|---|---|---|---|

| Without data augmentation | 1: Healthy bar | 97.97 | 94.14 | 96.02 | 98.61 |

| 2: Replica bar | 90.31 | 94.75 | 92.48 | 97.61 | |

| 3: Crack damaged bar | 90.31 | 92.63 | 91.46 | 97.59 | |

| 4: Unlocked bolt | 92.50 | 93.38 | 92.94 | 98.13 | |

| With data augmentation | 1: Healthy bar | 99.89 | 99.96 | 99.92 | 99.92 |

| 2: Replica bar | 99.90 | 99.87 | 99.88 | 99.97 | |

| 3: Crack damaged bar | 99.94 | 99.86 | 99.90 | 99.99 | |

| 4: Unlocked bolt | 99.90 | 99.86 | 99.88 | 99.97 |

| Accuracy | Validation Error | Training Error | Training Time | # of Images | |

|---|---|---|---|---|---|

| Whitout data augmentation | 93.81 | 0.1692 | 0.1167 | 11 min | 6400 |

| With data augmentation | 99.90 | 0.0044 | 0.0026 | 1196 min | 1,612,800 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Puruncajas, B.; Vidal, Y.; Tutivén, C. Vibration-Response-Only Structural Health Monitoring for Offshore Wind Turbine Jacket Foundations via Convolutional Neural Networks. Sensors 2020, 20, 3429. https://doi.org/10.3390/s20123429

Puruncajas B, Vidal Y, Tutivén C. Vibration-Response-Only Structural Health Monitoring for Offshore Wind Turbine Jacket Foundations via Convolutional Neural Networks. Sensors. 2020; 20(12):3429. https://doi.org/10.3390/s20123429

Chicago/Turabian StylePuruncajas, Bryan, Yolanda Vidal, and Christian Tutivén. 2020. "Vibration-Response-Only Structural Health Monitoring for Offshore Wind Turbine Jacket Foundations via Convolutional Neural Networks" Sensors 20, no. 12: 3429. https://doi.org/10.3390/s20123429