A Vision-Based Machine Learning Method for Barrier Access Control Using Vehicle License Plate Authentication

Abstract

1. Introduction

2. Related Work

2.1. Detection or Localization

2.2. License Plate Character Segmentation

2.3. Recognition or Classification

2.4. Recent Methods of AVLPR

3. Methodology

3.1. Image Acquisition

3.2. Detection of the License Plate

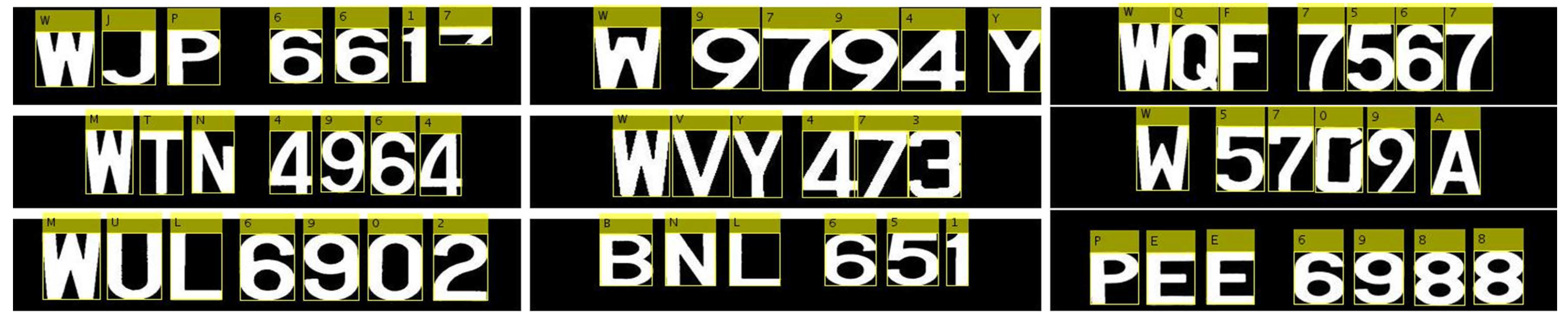

3.3. Alphanumeric Character Segmentation

Algorithm 1: Alphanumeric character segmentation algorithm.

3.4. Feature Extraction

3.5. Artificial Neural Network (ANN) Architecture

4. Experimental Results

4.1. Generation of Synthetic Data for Training

4.2. Performance on Synthetic Data

4.3. Performance on Real Data

4.4. Comparison of Different Feature Extraction and Classification Methods

4.5. Performance Comparison with Other Similar Methods

4.6. Comparison of Methods with Respect to the Medialab Database

4.7. Comparison of Methods with Respect to the UFPR-ALPR Database

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Data Availability

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | artificial neural network |
| AVLPR | automatic vehicle license plate recognition |
| BoF | bag of features |
| CCA | connected component analysis |
| CNN | convolutional neural network |
| FSVM | fuzzy support vector machines |
| GA | genetic algorithm |
| HOG | histogram of oriented gradients |
| KNN | k-nearest neighbors |
| NN | neural network |
| OCR | optical character recognition |
| ReLU | rectified linear unit |
| RGB | red, green, and blue |
| ROC | receiver operating characteristic |
| ROI | region of interest |
| SAE | stacked auto-encoders |
| SIFT | scale-invariant feature transform |
| SGDM | stochastic gradient descent with momentum |
| SVM | support vector machine |
| YOLO | you only look once |

References

1. Saha, S.; Basu, S.; Nasipuri, M.; Basu, D.K. Localization of License Plates from Surveillance Camera Images: A Color Feature Based ANN Approach. Int. J. Comput. Appl. 2010, 1, 27–31.

2. Hongliang, B.; Changping, L. A hybrid license plate extraction method based on edge statistics and morphology. In Proceedings of the 17th International Conference on Pattern Recognition, Cambridge, UK, 23–26 August 2004.

3. Jin, L.; Xian, H.; Bie, J.; Sun, Y.; Hou, H.; Niu, Q. License Plate Recognition Algorithm for Passenger Cars in Chinese Residential Areas. Sensors 2012, 12, 8355–8370.

4. Shi, X.; Zhao, W.; Shen, Y. Automatic License Plate Recognition System Based on Color Image Processing. In Proceedings of the Computational Science and Its Applications, Singapore, 9–12 May 2005; Gervasi, O., Gavrilova, M.L., Kumar, V., Laganá, A., Lee, H.P., Mun, Y., Taniar, D., Tan, C.J.K., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 1159–1168.

5. Rizvi, S.; Patti, D.; Björklund, T.; Cabodi, G.; Francini, G. Deep Classifiers-Based License Plate Detection, Localization and Recognition on GPU-Powered Mobile Platform. Future Internet 2017, 9, 66.

6. Rafique, M.A.; Pedrycz, W.; Jeon, M. Vehicle license plate detection using region-based convolutional neural networks. Soft Comput. 2018, 22, 6429–6440.

7. Salau, A.O.; Yesufu, T.K.; Ogundare, B.S. Vehicle plate number localization using a modified GrabCut algorithm. J. King Saud Univ. Comput. Inf. Sci. 2019.

8. Kakani, B.V.; Gandhi, D.; Jani, S. Improved OCR based automatic vehicle number plate recognition using features trained neural network. In Proceedings of the 2017 8th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Delhi, India, 3–5 July 2017.

9. Arafat, M.Y.; Khairuddin, A.S.M.; Paramesran, R. A Vehicular License Plate Recognition Framework For Skewed Images. KSII Trans. Internet Inf. Syst. 2018, 12.

10. Yogheedha, K.; Nasir, A.; Jaafar, H.; Mamduh, S. Automatic Vehicle License Plate Recognition System Based on Image Processing and Template Matching Approach. In Proceedings of the 2018 International Conference on Computational Approach in Smart Systems Design and Applications (ICASSDA), Kuching, Malaysia, 15–17 August 2018.

11. Ansari, N.N.; Singh, A.K.; Student, M.T. License Number Plate Recognition using Template Matching. Int. J. Comput. Trends Technol. 2016, 35, 175–178.

12. Samma, H.; Lim, C.P.; Saleh, J.M.; Suandi, S.A. A memetic-based fuzzy support vector machine model and its application to license plate recognition. Memetic Comput. 2016, 8, 235–251.

13. Tabrizi, S.S.; Cavus, N. A Hybrid KNN-SVM Model for Iranian License Plate Recognition. Procedia Comput. Sci. 2016, 102, 588–594.

14. Gao, Y.; Lee, H. Local Tiled Deep Networks for Recognition of Vehicle Make and Model. Sensors 2016, 16, 226.

15. Leeds City Council. Automatic Number Plate Recognition (ANPR) Project. 2018. Available online: http://data.gov.uk/dataset/f90db76e-e72f-4ab6-9927-765101b7d997 (accessed on 6 February 2019).

16. Dynamics, S. Australia’s Leading ANPR—Automatic Number Plate Recognition Provider. 2015. Available online: http://www.sensordynamics.com.au/ (accessed on 6 February 2019).

17. Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005.

18. Silva, S.M.; Jung, C.R. License Plate Detection and Recognition in Unconstrained Scenarios. Available online: http://www.inf.ufrgs.br/~smsilva/alpr-unconstrained/ (accessed on 7 February 2019).

19. Resende Gonçalves, G.; Alves Diniz, M.; Laroca, R.; Menotti, D.; Robson Schwartz, W. Real-Time Automatic License Plate Recognition through Deep Multi-Task Networks. In Proceedings of the 2018 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Parana, Brazil, 29 October–1 November 2018; pp. 110–117.

20. Ullah, F.; Anwar, H.; Shahzadi, I.; Ur Rehman, A.; Mehmood, S.; Niaz, S.; Mahmood Awan, K.; Khan, A.; Kwak, D. Barrier Access Control Using Sensors Platform and Vehicle License Plate Characters Recognition. Sensors 2019, 19, 3015.

21. Suryanarayana, P.; Mitra, S.; Banerjee, A.; Roy, A. A Morphology Based Approach for Car License Plate Extraction. In Proceedings of the 2005 Annual IEEE India Conference-Indicon, Chennai, India, 11–13 December 2005.

22. Mahini, H.; Kasaei, S.; Dorri, F.; Dorri, F. An Efficient Features—Based License Plate Localization Method. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006.

23. Zheng, D.; Zhao, Y.; Wang, J. An efficient method of license plate location. Pattern Recognit. Lett. 2005, 26, 2431–2438.

24. Luo, Y.; Li, Y.; Huang, S.; Han, F. Multiple Chinese Vehicle License Plate Localization in Complex Scenes. In Proceedings of the 2018 IEEE 3rd International Conference on Image, Vision and Computing (ICIVC), Chongqing, China, 27–29 June 2018; pp. 745–749.

25. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

26. Hsieh, C.T.; Juan, Y.S.; Hung, K.M. Multiple License Plate Detection for Complex Background. In Proceedings of the 19th International Conference on Advanced Information Networking and Applications (AINA’05) Volume 1 (AINA papers), Taipei, Taiwan, 28–30 March 2005.

27. Haar, A. Zur Theorie der orthogonalen Funktionensysteme. Math. Ann. 1910, 69, 331–371.

28. Niu, B.; Huang, L.; Hu, J. Hybrid Method for License Plate Detection from Natural Scene Images. In Proceedings of the First International Conference on Information Science and Electronic Technology, Wuhan, China, 21–22 March 2015; Atlantis Press: Paris, France, 2015.

29. Arafat, M.Y.; Khairuddin, A.S.M.; Khairuddin, U.; Paramesran, R. Systematic review on vehicular licence plate recognition framework in intelligent transport systems. IET Intell. Transp. Syst. 2019.

30. Chai, D.; Zuo, Y. Extraction, Segmentation and Recognition of Vehicle’s License Plate Numbers. In Advances in Information and Communication Networks; Arai, K., Kapoor, S., Bhatia, R., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 724–732.

31. Dhar, P.; Guha, S.; Biswas, T.; Abedin, M.Z. A System Design for License Plate Recognition by Using Edge Detection and Convolution Neural Network. In Proceedings of the 2018 International Conference on Computer, Communication, Chemical, Material and Electronic Engineering (IC4ME2), Rajshahi, Bangladesh, 8–9 February 2018; pp. 1–4.

32. De Gaetano Ariel, O.; Martín, D.F.; Ariel, A. ALPR character segmentation algorithm. In Proceedings of the 2018 IEEE 9th Latin American Symposium on Circuits Systems (LASCAS), Puerto Vallarta, Mexico, 25–28 February 2018; pp. 1–4.

33. Kahraman, F.; Kurt, B.; Gökmen, M. License Plate Character Segmentation Based on the Gabor Transform and Vector Quantization. In Proceedings of the Computer and Information Sciences—ISCIS 2003, Antalya, Turkey, 3–5 November 2003; Yazıcı, A., Şener, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; pp. 381–388.

34. Wu, Q.; Zhang, H.; Jia, W.; He, X.; Yang, J.; Hintz, T. Car Plate Detection Using Cascaded Tree-Style Learner Based on Hybrid Object Features. In Proceedings of the 2006 IEEE International Conference on Video and Signal Based Surveillance, Sydney, Australia, 22–24 November 2006.

35. Zhang, H.; Jia, W.; He, X.; Wu, Q. Learning-Based License Plate Detection Using Global and Local Features. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006.

36. Prewitt, J.M. Object enhancement and extraction. Pict. Process. Psychopictorics 1970, 10, 15–19.

37. Thakur, M.; Raj, I.; P, G. The cooperative approach of genetic algorithm and neural network for the identification of vehicle License Plate number. In Proceedings of the 2015 International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS), Coimbatore, India, 19–20 March 2015.

38. Cheng, R.; Bai, Y.; Hu, H.; Tan, X. Radial Wavelet Neural Network with a Novel Self-Creating Disk-Cell-Splitting Algorithm for License Plate Character Recognition. Entropy 2015, 17, 3857–3876.

39. Brillantes, A.K.M.; Bandala, A.A.; Dadios, E.P.; Jose, J.A. Detection of Fonts and Characters with Hybrid Graphic-Text Plate Numbers. In Proceedings of the TENCON 2018—2018 IEEE Region 10 Conference, Jeju, South Korea, 28–31 October 2018; pp. 629–633.

40. Mukherjee, R.; Pundir, A.; Mahato, D.; Bhandari, G.; Saxena, G.J. A robust algorithm for morphological, spatial image-filtering and character feature extraction and mapping employed for vehicle number plate recognition. In Proceedings of the 2017 International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET), Chennai, India, 22–24 March 2017; pp. 864–869.

41. Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

42. Sobel, I. An isotropic 3 × 3 image gradient operator. In Machine Vision for Three-Dimensional Scenes; ResearchGate: Berlin, Germany, 1990; pp. 376–379.

43. Olmí, H.; Urrea, C.; Jamett, M. Numeric Character Recognition System for Chilean License Plates in semicontrolled scenarios. Int. J. Comput. Intell. Syst. 2017, 10, 405.

44. Han, J.; Yao, J.; Zhao, J.; Tu, J.; Liu, Y. Multi-Oriented and Scale-Invariant License Plate Detection Based on Convolutional Neural Networks. Sensors 2019, 19, 1175.

45. Li, S.; Li, Y. A Recognition Algorithm for Similar Characters on License Plates Based on Improved CNN. In Proceedings of the 2015 11th International Conference on Computational Intelligence and Security (CIS), Shenzhen, China, 19–20 December 2015.

46. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Curran Associates Inc.: Dutchess County, NY, USA, 2012; Volume 1, pp. 1097–1105.

47. Lee, S.; Son, K.; Kim, H.; Park, J. Car plate recognition based on CNN using embedded system with GPU. In Proceedings of the 2017 10th International Conference on Human System Interactions (HSI), Ulsan, South Korea, 17–19 July 2017.

48. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

49. Kessentini, Y.; Besbes, M.D.; Ammar, S.; Chabbouh, A. A two-stage deep neural network for multi-norm license plate detection and recognition. Expert Syst. Appl. 2019, 136, 159–170.

50. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

51. Chen, R.C. Automatic License Plate Recognition via sliding-window darknet-YOLO deep learning. Image Vis. Comput. 2019, 87, 47–56.

52. Yonetsu, S.; Iwamoto, Y.; Chen, Y.W. Two-Stage YOLOv2 for Accurate License-Plate Detection in Complex Scenes. In Proceedings of the 2019 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 11–13 January 2019; pp. 1–4.

53. Abdullah, S.; Mahedi Hasan, M.; Muhammad Saiful Islam, S. YOLO-Based Three-Stage Network for Bangla License Plate Recognition in Dhaka Metropolitan City. In Proceedings of the 2018 International Conference on Bangla Speech and Language Processing (ICBSLP), Sylhet, Bangladesh, 21–22 September 2018; pp. 1–6.

54. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

55. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

56. Laroca, R.; Severo, E.; Zanlorensi, L.A.; Oliveira, L.S.; Gonçalves, G.R.; Schwartz, W.R.; Menotti, D. A Robust Real-Time Automatic License Plate Recognition Based on the YOLO Detector. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–10.

57. Silva, S.M.; Jung, C.R. Real-Time Brazilian License Plate Detection and Recognition Using Deep Convolutional Neural Networks. In Proceedings of the 2017 30th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Niteroi, Brazil, 17–20 October 2017; pp. 55–62.

58. Nair, V.; Hinton, G.E. Rectified Linear Units Improve Restricted Boltzmann Machines. In Proceedings of the 27th International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010; Omnipress: Madison, WI, USA, 2010; pp. 807–814.

59. Robbins, H.; Monro, S. A Stochastic Approximation Method. Ann. Math. Stat. 1951, 22, 400–407.

60. Takahashi, K.; Takahashi, S.; Cui, Y.; Hashimoto, M. Remarks on Computational Facial Expression Recognition from HOG Features Using Quaternion Multi-layer Neural Network. In Engineering Applications of Neural Networks; Mladenov, V., Jayne, C., Iliadis, L., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 15–24.

61. Sivic, J.; Zisserman, A. Efficient Visual Search of Videos Cast as Text Retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 591–606.

62. Lowe, D. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999.

63. Altman, N.S. An Introduction to Kernel and Nearest-Neighbor Nonparametric Regression. Am. Stat. 1992, 46, 175–185.

64. Anagnostopoulos, I.; Psoroulas, I.; Loumos, V.; Kayafas, E.; Anagnostopoulos, C. Medialab LPR Database. Multimedia Technology Laboratory, National Technical University of Athens. Available online: http://www.medialab.ntua.gr/research/LPRdatabase.html (accessed on 7 November 2019).

| Name | Description |
|---|---|
| Image acquisition device name | Canon® PowerShot SX530 HS |
| Zooming capabilities | 50× optical zoom |
| Camera zooming position | 5× optical zoom |
| Weather | Daylight, rainy, sunny, cloudy |
| Capturing period | Day and night |
| Background | Complex; not fixed |
| Horizontal field-of-view | Approximately 75° |
| Image dimension | |
| Vehicle speed limit | 20 km/h; 5.56 m/s |
| Capturing distance | 15 m |

| Hidden Neurons | Repetition Number | Iterations | Time | Performance | Gradient | Error (%) |
|---|---|---|---|---|---|---|
| 10 | 1 | 160 | 0:00:45 | 1.47 × 10 | 9.03 × 10 | 1.50 × 10 |
| 10 | 2 | 179 | 0:00:51 | 8.10 × 10 | 9.09 × 10 | 6.11 × 10 |
| 10 | 3 | 273 | 0:01:17 | 6.00 × 10 | 9.62 × 10 | 8.06 × 10 |
| 10 | 4 | 189 | 0:00:53 | 2.02 × 10 | 1.34 × 10 | 1.86 × 10 |
| 10 | 5 | 214 | 0:01:00 | 1.34 × 10 | 6.66 × 10 | 1.19 × 10 |
| 20 | 1 | 167 | 0:01:07 | 2.00 × 10 | 1.04 × 10 | 2.50 × 10 |
| 20 | 2 | 186 | 0:01:18 | 4.86 × 10 | 1.97 × 10 | 2.50 × 10 |
| 20 | 3 | 140 | 0:00:59 | 1.51 × 10 | 6.95 × 10 | 3.33 × 10 |
| 20 | 4 | 165 | 0:01:10 | 6.12 × 10 | 2.72 × 10 | 2.22 × 10 |
| 20 | 5 | 137 | 0:00:58 | 1.04 × 10 | 5.59 × 10 | 3.61 × 10 |
| 40 | 1 | 124 | 0:01:26 | 3.65 × 10 | 2.35 × 10 | 2.78 × 10 |
| 40 | 2 | 124 | 0:01:25 | 2.78 × 10 | 2.12 × 10 | 1.67 × 10 |
| 40 | 3 | 151 | 0:01:44 | 1.29 × 10 | 7.51 × 10 | 1.11 × 10 |
| 40 | 4 | 142 | 0:02:03 | 7.50 × 10 | 3.67 × 10 | 1.67 × 10 |
| 40 | 5 | 105 | 0:01:18 | 1.22 × 10 | 1.81 × 10 | 3.33 × 10 |

| Characters | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | A |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Quantity | 29 | 37 | 36 | 40 | 40 | 41 | 39 | 51 | 35 | 33 | 13 |
| Characters | B | C | D | E | F | G | H | J | K | L | M |
| Quantity | 16 | 7 | 7 | 10 | 5 | 7 | 7 | 13 | 12 | 12 | 7 |
| Characters | N | P | Q | R | S | T | U | V | W | X | Y |
| Quantity | 10 | 15 | 9 | 12 | 9 | 13 | 3 | 15 | 73 | 6 | 9 |

| Method | Plate Extraction (s) | Character Extraction (s) | Feature Extraction (s) | Classification (s) | Total (s) | Accuracy (%) |
|---|---|---|---|---|---|---|
| BoF+SAE | 0.15 | 0.25 | 0.21 | 0.015 | 0.625 | 95.73 |
| BoF+KNN | 0.15 | 0.25 | 0.21 | 0.024 | 0.634 | 88.25 |
| BoF+SVM | 0.15 | 0.25 | 0.21 | 0.020 | 0.630 | 89.78 |
| BoF+ANN | 0.15 | 0.25 | 0.21 | 0.021 | 0.631 | 98.33 |
| SIFT+SAE | 0.15 | 0.25 | 0.28 | 0.019 | 0.699 | 93.75 |
| SIFT+KNN | 0.15 | 0.25 | 0.28 | 0.030 | 0.710 | 87.38 |
| SIFT+SVM | 0.15 | 0.25 | 0.28 | 0.027 | 0.707 | 88.94 |
| SIFT+ANN | 0.15 | 0.25 | 0.28 | 0.026 | 0.706 | 96.18 |
| HOG+SAE | 0.15 | 0.25 | 0.27 | 0.018 | 0.688 | 94.30 |
| HOG+KNN | 0.15 | 0.25 | 0.27 | 0.028 | 0.698 | 97.60 |
| HOG+SVM | 0.15 | 0.25 | 0.27 | 0.025 | 0.695 | 98.90 |
| Proposed (HOG+ANN) | 0.15 | 0.25 | 0.27 | 0.010 | 0.690 | 99.70 |
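The Total column in the table above appears to be the sum of the four per-stage processing times. The following is a minimal Python sketch of that arithmetic, assuming the values exactly as printed in the table; the names `ROWS`, `recomputed_totals`, and `mismatches` are introduced here for illustration and are not from the paper.

```python
# Per-stage processing times in seconds, copied from the table above:
# (plate extraction, character extraction, feature extraction,
#  classification, reported total)
ROWS = {
    "BoF+SAE": (0.15, 0.25, 0.21, 0.015, 0.625),
    "BoF+KNN": (0.15, 0.25, 0.21, 0.024, 0.634),
    "BoF+SVM": (0.15, 0.25, 0.21, 0.020, 0.630),
    "BoF+ANN": (0.15, 0.25, 0.21, 0.021, 0.631),
    "SIFT+SAE": (0.15, 0.25, 0.28, 0.019, 0.699),
    "SIFT+KNN": (0.15, 0.25, 0.28, 0.030, 0.710),
    "SIFT+SVM": (0.15, 0.25, 0.28, 0.027, 0.707),
    "SIFT+ANN": (0.15, 0.25, 0.28, 0.026, 0.706),
    "HOG+SAE": (0.15, 0.25, 0.27, 0.018, 0.688),
    "HOG+KNN": (0.15, 0.25, 0.27, 0.028, 0.698),
    "HOG+SVM": (0.15, 0.25, 0.27, 0.025, 0.695),
    "Proposed (HOG+ANN)": (0.15, 0.25, 0.27, 0.010, 0.690),
}


def recomputed_totals(rows):
    """Map each method to (sum of its four stage times, reported total)."""
    return {
        name: (round(sum(values[:4]), 3), values[4])
        for name, values in rows.items()
    }


def mismatches(rows, tol=1e-9):
    """Return the methods whose reported total differs from the stage sum."""
    return [
        name
        for name, (computed, reported) in recomputed_totals(rows).items()
        if abs(computed - reported) > tol
    ]


if __name__ == "__main__":
    for name, (computed, reported) in recomputed_totals(ROWS).items():
        print(f"{name:20s} sum={computed:.3f}  reported={reported:.3f}")
    print("Rows where sum and reported total differ:", mismatches(ROWS))
```

On the values as printed, eleven rows agree with their reported totals, while the Proposed (HOG+ANN) row sums to 0.680 s against a reported 0.690 s.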

| Method | Feature Extraction Method | Classifier | Total Time (s) | Accuracy (%) |
|---|---|---|---|---|
| Jin et al. [3] | Hand-Crafted | Fuzzy | 0.432 | 92.00 |
| Arafat et al. [9] | OCR | OCR | 0.681 | 97.86 |
| Samma et al. [12] | Haar-like | FSVM | 0.649 | 98.36 |
| Tabrizi et al. [13] | KNN+SVM | KNN+SVM | 0.721 | 97.03 |
| Niu et al. [28] | HOG | SVM | 0.645 | 96.60 |
| Li et al. [45] | CNN | CNN | 0.825 | 99.20 |
| Thakur et al. [37] | GA | ANN | 0.532 | 97.00 |
| Cheng et al. [38] | SCDCS-LS | RWNN | 0.659 | 99.54 |
| Lee et al. [47] | AlexNet | AlexNet | 0.983 | 99.58 |
| Proposed | HOG | ANN | 0.280 | 99.70 |

| Method | Detection Accuracy (%) | Segmentation Accuracy (%) | Classification Accuracy (%) |
|---|---|---|---|
| Jin et al. [3] | 95.73 | 98.87 | 91.25 |
| Arafat et al. [9] | 98.30 | 99.30 | 96.57 |
| Samma et al. [12] | 96.25 | — | 98.05 |
| Tabrizi et al. [13] | 96.98 | 96.85 | 96.54 |
| Niu et al. [28] | 98.45 | — | 96.38 |
| Li et al. [45] | — | — | 98.52 |
| Thakur et al. [37] | 97.85 | 98.37 | 97.35 |
| Cheng et al. [38] | — | — | 99.38 |
| Lee et al. [47] | — | — | 97.38 |
| Proposed | 99.30 | 99.45 | 99.50 |

| Method | Detection Accuracy (%) | Segmentation Accuracy (%) | Classification Accuracy (%) |
|---|---|---|---|
| Jin et al. [3] | 85.48 | 91.75 | 85.35 |
| Arafat et al. [9] | 85.45 | 93.45 | 90.37 |
| Samma et al. [12] | 80.35 | — | 91.70 |
| Tabrizi et al. [13] | 84.45 | 90.50 | 92.86 |
| Niu et al. [28] | 85.80 | — | 89.32 |
| Li et al. [45] | — | — | 92.71 |
| Thakur et al. [37] | 82.35 | 91.22 | 90.85 |
| Cheng et al. [38] | — | — | 92.50 |
| Lee et al. [47] | — | — | 92.75 |
| Proposed | 98.45 | 93.85 | 95.80 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Islam, K.T.; Raj, R.G.; Shamsul Islam, S.M.; Wijewickrema, S.; Hossain, M.S.; Razmovski, T.; O’Leary, S. A Vision-Based Machine Learning Method for Barrier Access Control Using Vehicle License Plate Authentication. Sensors 2020, 20, 3578. https://doi.org/10.3390/s20123578
