Autonomous Road Roundabout Detection and Navigation System for Smart Vehicles and Cities Using Laser Simulator–Fuzzy Logic Algorithms and Sensor Fusion

Abstract

1. Introduction

2. Related Works

- Autonomous road roundabout detection

- Autonomous road roundabout navigation

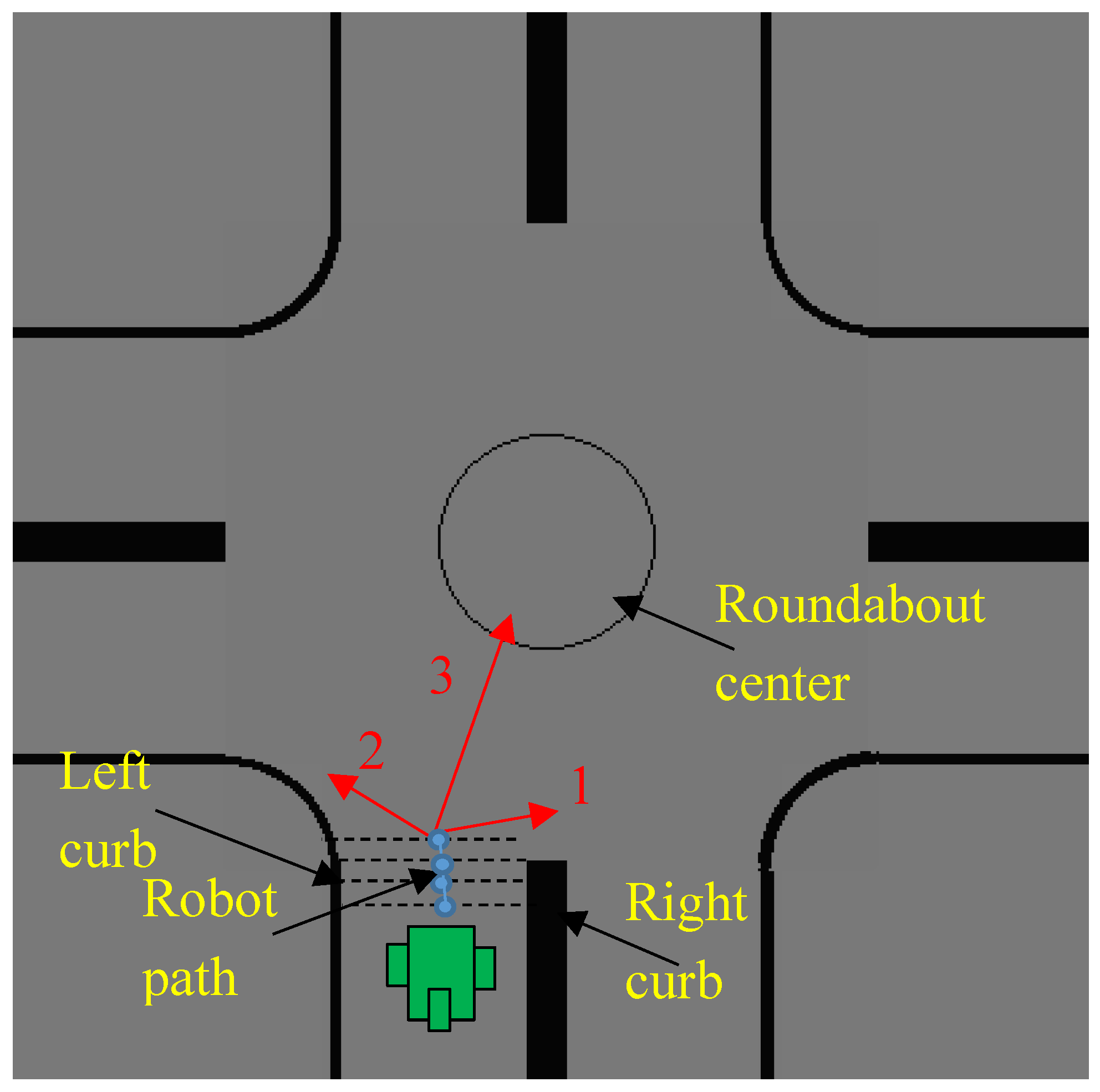

3. Autonomous Road Roundabout Detection

- The right curb of the road is suddenly faded

- The left curb of the road is slightly faded

- There is a circular/elliptical curve located in front of the robot

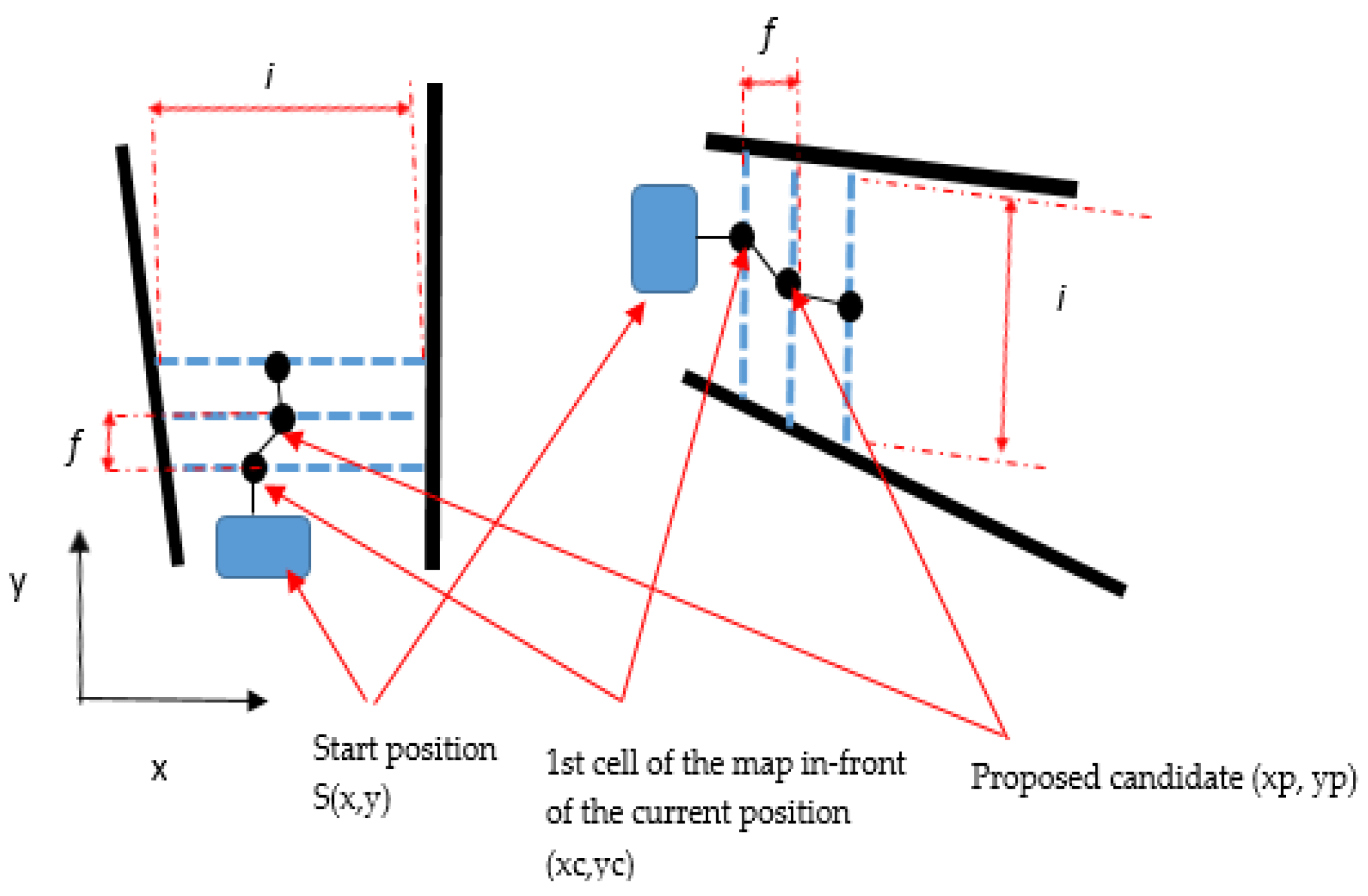

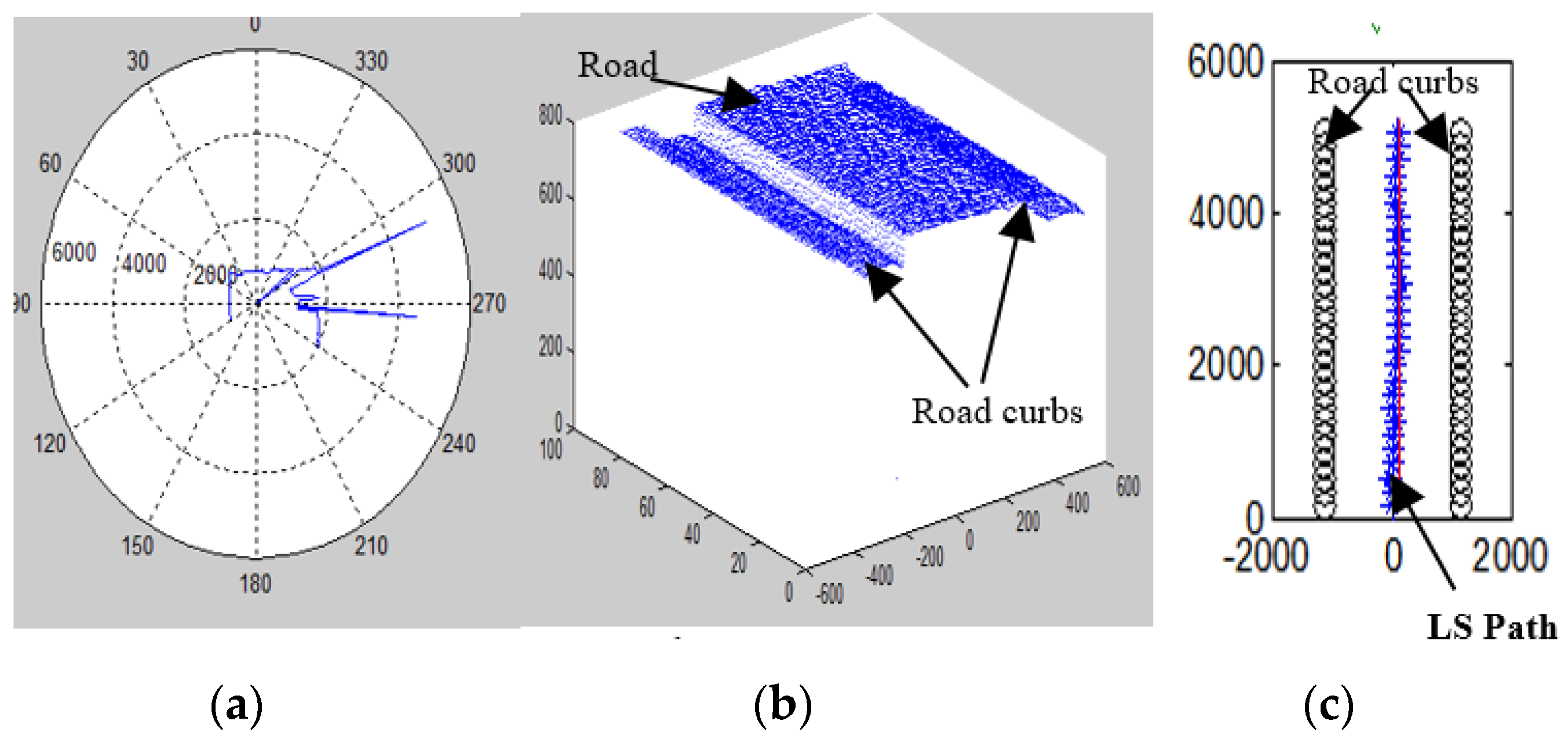

3.1. Development of a Local Map for the Road Environment

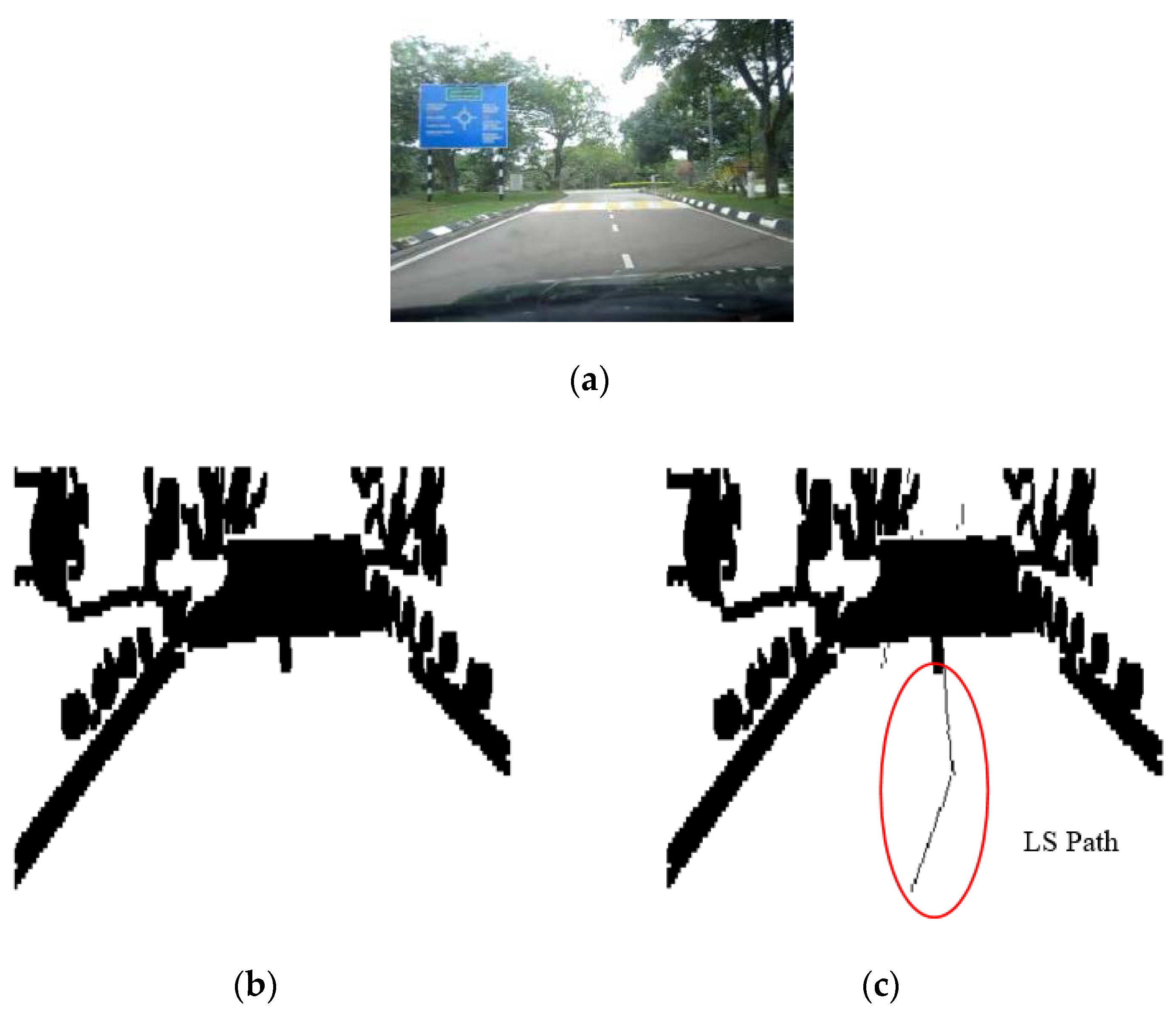

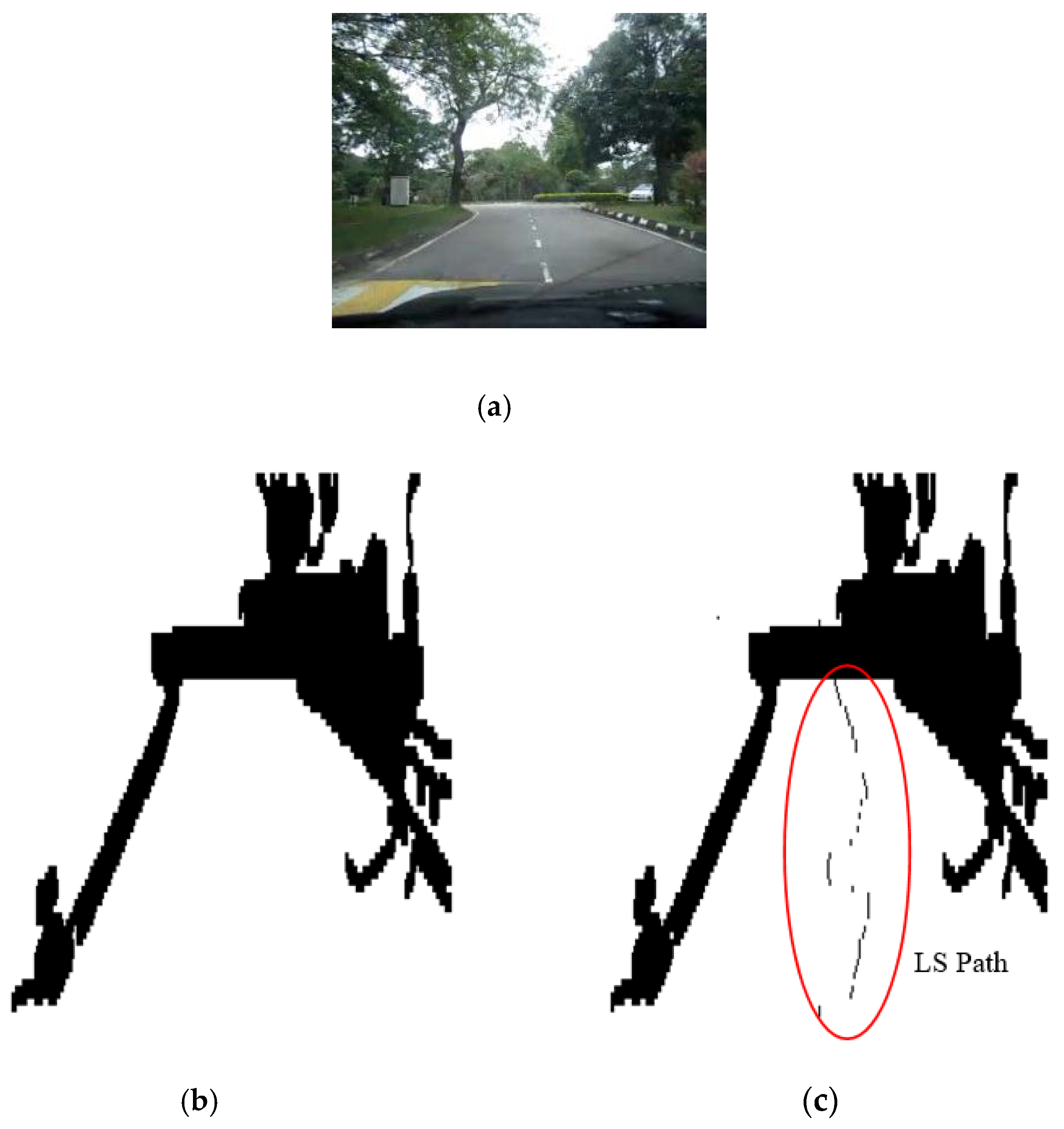

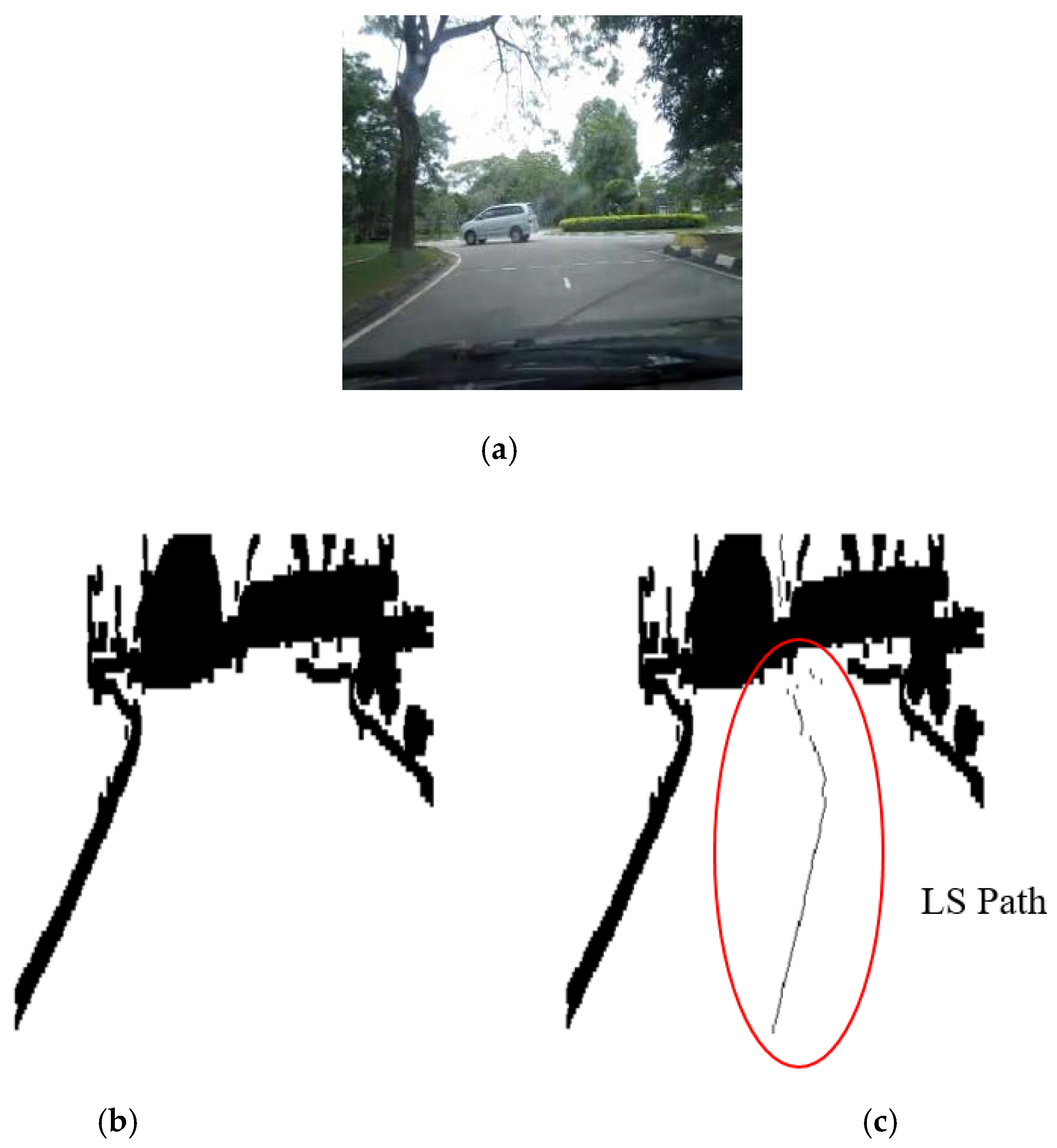

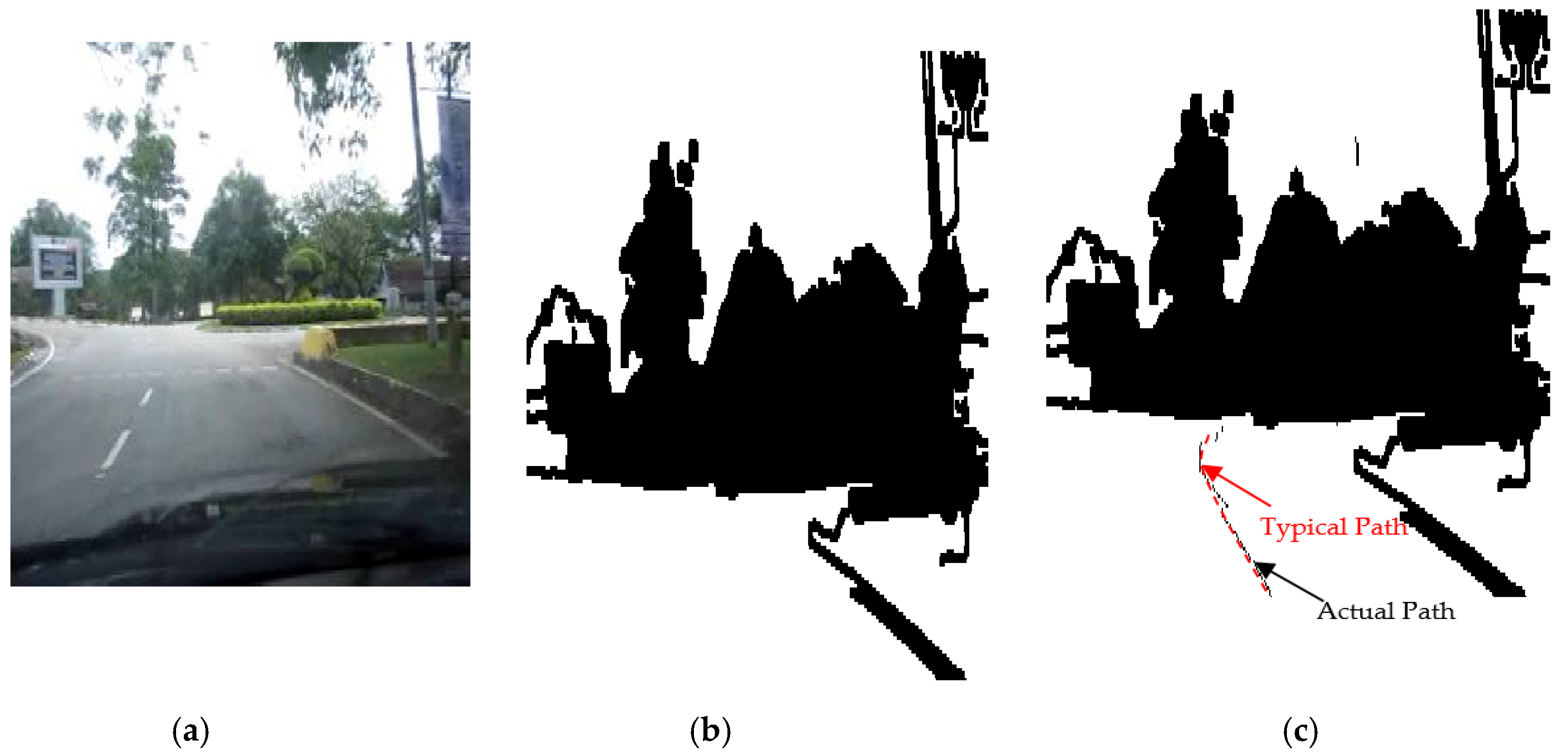

3.2. Laser Simulator-Based Roundabout Detection

3.2.1. Right and Left Side Detection

Generation of Points’ Center Reference in the Image

Detection of the Curbs on the Right and Left Sides

3.2.2. Roundabout Center Detection

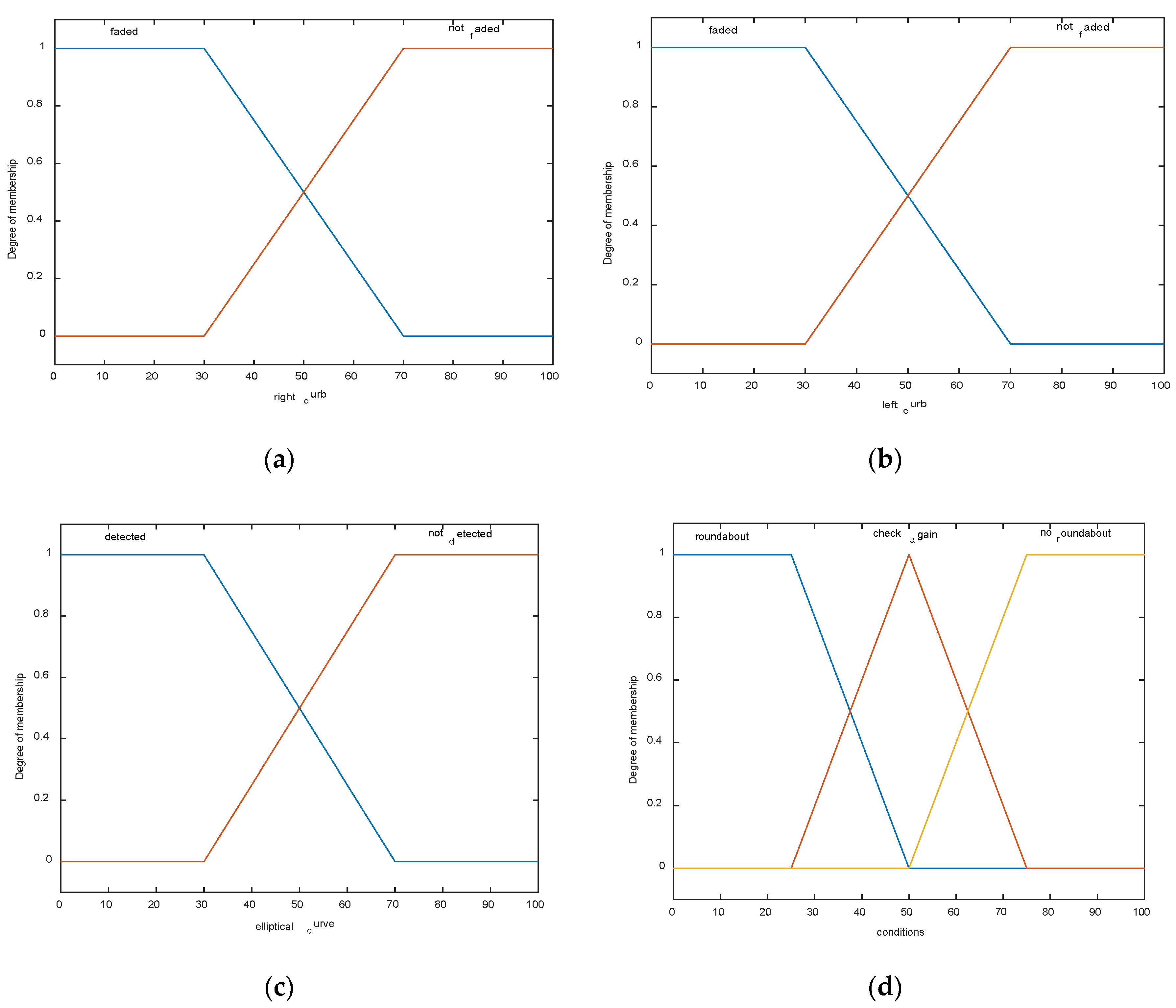

3.3. Fuzzy Logic-Based Decision Making

- IF (the right curb is faded) and (an elliptical curve is detected) and (the left curb is faded) THEN (there is a roundabout in front).

- IF (the right curb is faded) and (an elliptical curve is detected) and (the left curb is not faded) THEN (check again in the next laser simulator lines).

- IF (the right curb is not faded) and (an elliptical curve is detected) and (the left curb is faded) THEN (check again in the next laser simulator lines).

- IF (the right curb is not faded) and (an elliptical curve is not detected) and (the left curb is faded) THEN (this is not a roundabout).
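The four rules above can be sketched as a small rule base. This is only an illustration under stated assumptions, not the authors' implementation: the inputs are taken to be membership degrees in [0, 1], fuzzy AND is modeled with `min`, NOT with `1 - x`, and the decision is the conclusion with the highest firing strength. The function names and output labels (`evaluate_rules`, `decide`, `"check_again"`, and so on) are hypothetical.

```python
from typing import Dict


def evaluate_rules(right_faded: float, curve_detected: float,
                   left_faded: float) -> Dict[str, float]:
    """Firing strength of each conclusion; all inputs are degrees in [0, 1].

    Assumption: AND = min, NOT = 1 - x (a common choice, not stated in the source).
    """
    def f_not(x: float) -> float:
        return 1.0 - x

    return {
        # Rule 1: both curbs faded and an elliptical curve detected.
        "roundabout": min(right_faded, curve_detected, left_faded),
        # Rules 2 and 3: curve detected but only one curb faded.
        "check_again": max(
            min(right_faded, curve_detected, f_not(left_faded)),
            min(f_not(right_faded), curve_detected, left_faded),
        ),
        # Rule 4: no curve, right curb not faded, left curb faded.
        "not_roundabout": min(f_not(right_faded), f_not(curve_detected),
                              left_faded),
    }


def decide(right_faded: float, curve_detected: float, left_faded: float) -> str:
    """Pick the conclusion with the largest firing strength."""
    strengths = evaluate_rules(right_faded, curve_detected, left_faded)
    return max(strengths, key=strengths.get)


if __name__ == "__main__":
    print(decide(0.9, 0.8, 0.7))
    print(decide(0.9, 0.8, 0.1))
```

With crisp inputs (0 or 1) this reduces to the plain IF-THEN rules as listed; input combinations not covered by the four rules yield zero strength for every conclusion, and a real system would need a default for them.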

4. Sensor Fusion for Path Planning and Roundabout Navigation

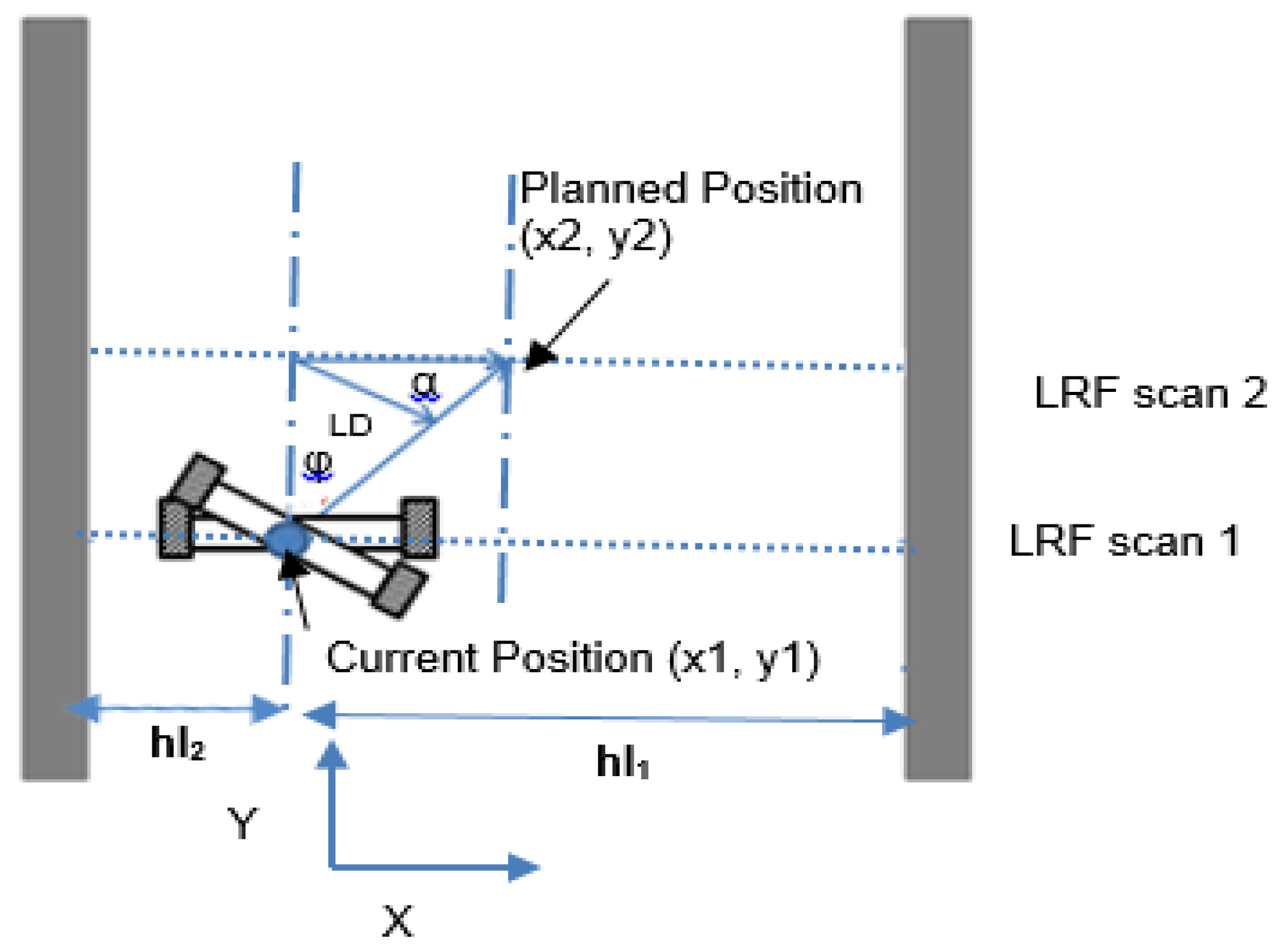

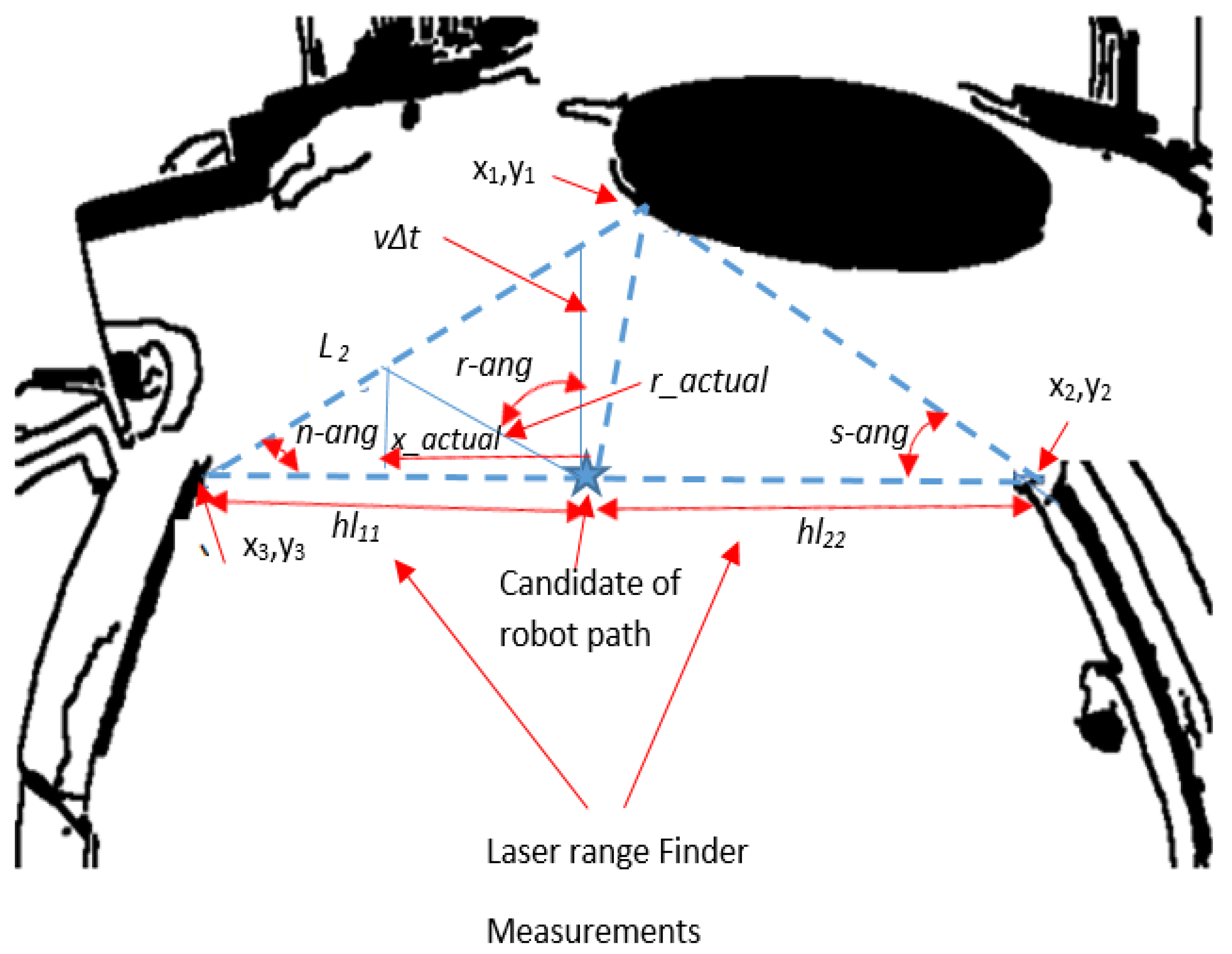

4.1. Sensor Fusion Modeling

4.1.1. Odometry-Based Measurements

4.1.2. LRF-Based Measurements

4.2. Road Roundabout Navigation

4.2.1. Navigation in the Road Following

4.2.2. Navigation in Road Roundabout Center

- rd > π/2: the robot exits the roundabout at the first left turn.

- rd > π: the robot exits the roundabout at the second left turn, which continues in the straight direction.

- rd > 3π/2: the robot exits the roundabout at the third left turn.

- rd > 2π: the robot exits the roundabout at the fourth left turn.
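The threshold list above can be expressed as a lookup from the desired exit to the angle that must be exceeded. The sketch below assumes that rd is the rotation angle (in radians) accumulated by the robot around the roundabout center since entry; the excerpt does not define rd, so this reading, along with the names `EXIT_THRESHOLDS` and `should_exit`, is an assumption made for illustration.

```python
import math

# Threshold on rd (radians) for each exit, as listed in the text.
EXIT_THRESHOLDS = {
    1: math.pi / 2,      # first left turn
    2: math.pi,          # second left turn (straight direction)
    3: 3 * math.pi / 2,  # third left turn
    4: 2 * math.pi,      # fourth left turn
}


def should_exit(rd: float, target_exit: int) -> bool:
    """Return True once rd has passed the threshold of the chosen exit.

    Assumption: rd is the accumulated rotation around the roundabout center.
    """
    if target_exit not in EXIT_THRESHOLDS:
        raise ValueError("target_exit must be 1, 2, 3 or 4")
    return rd > EXIT_THRESHOLDS[target_exit]


if __name__ == "__main__":
    # A robot aiming for the second exit keeps circulating at rd = 1.6 rad.
    print(should_exit(1.6, 2))
```

Keying the check on the target exit avoids the ambiguity of evaluating the conditions in sequence, since any rd greater than 2π also satisfies the three smaller thresholds.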

5. Results and Discussion

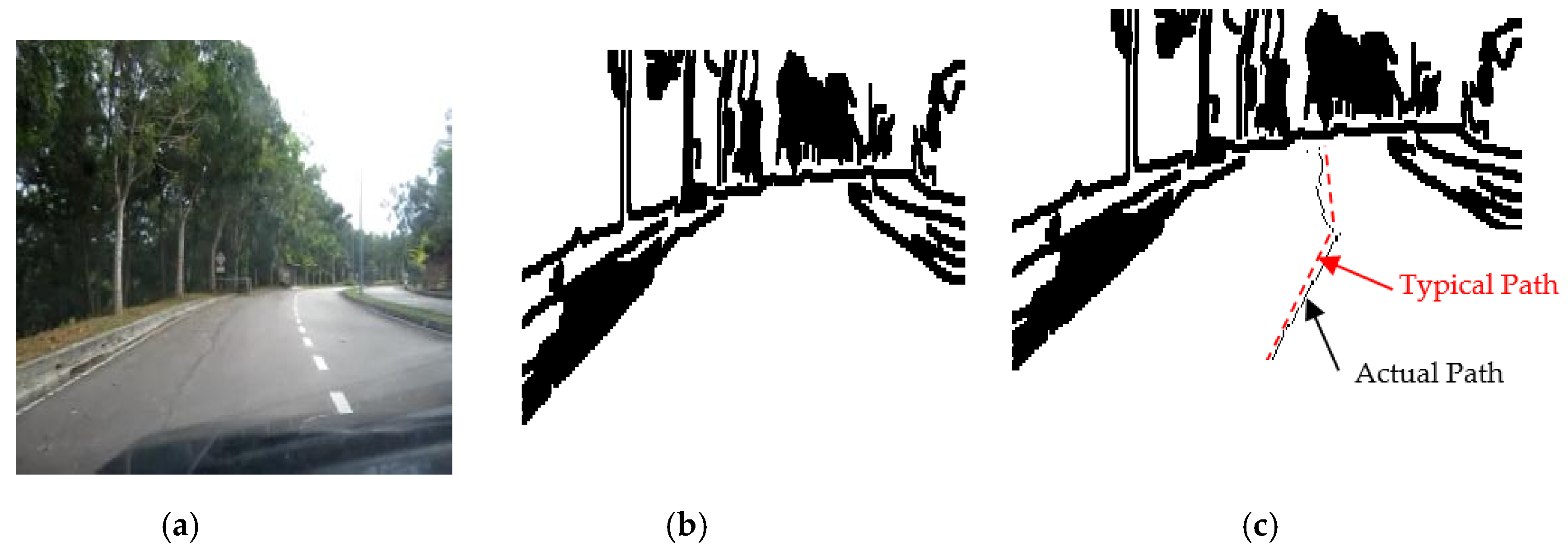

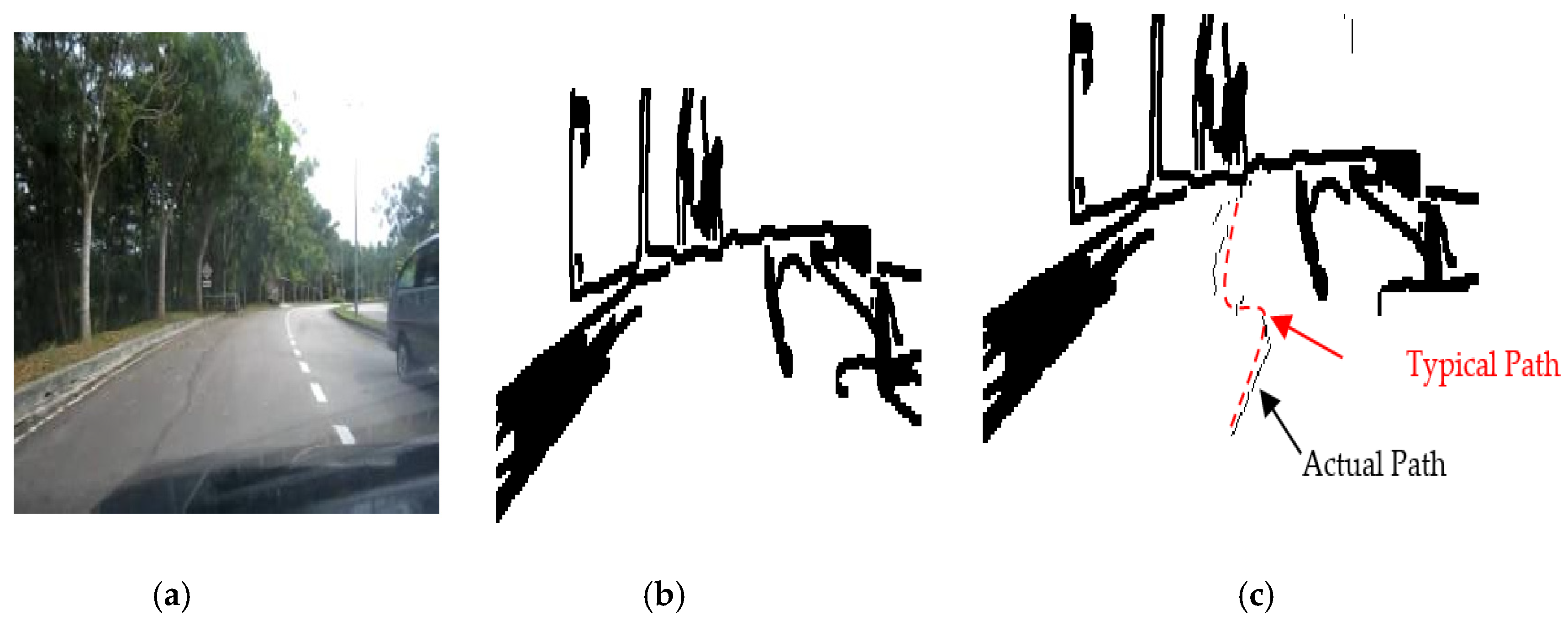

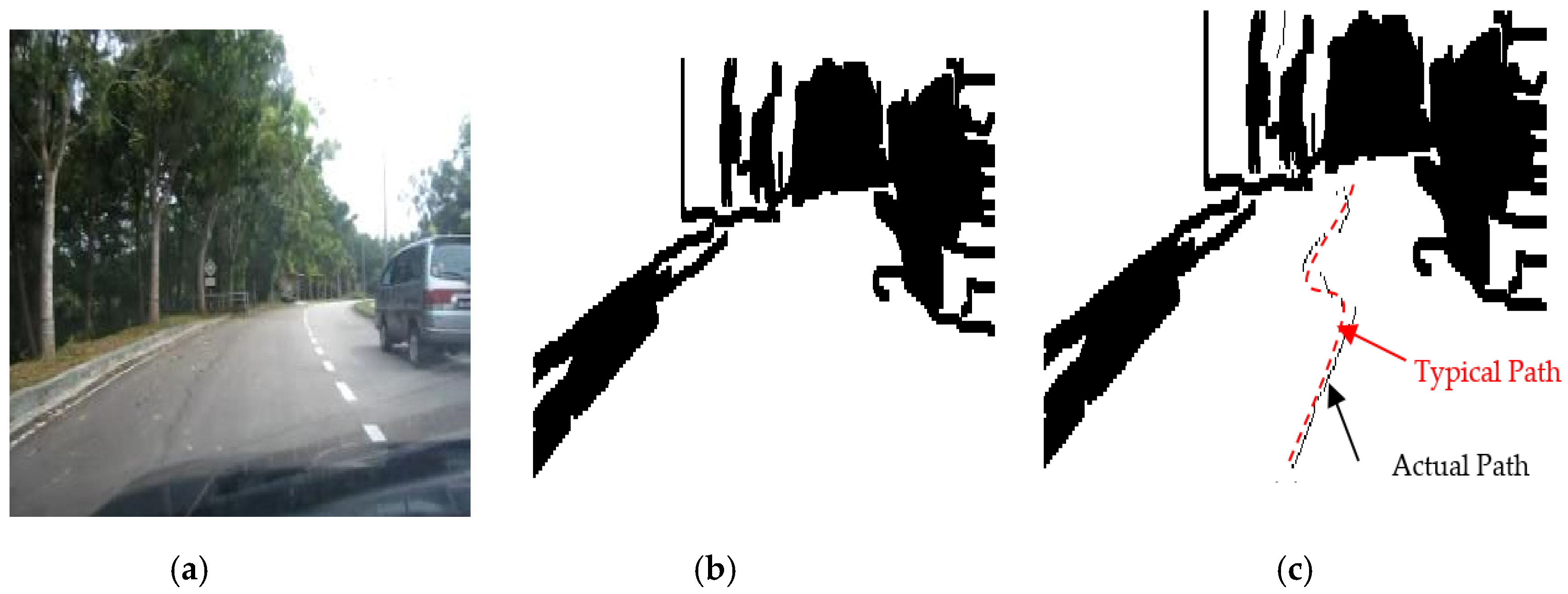

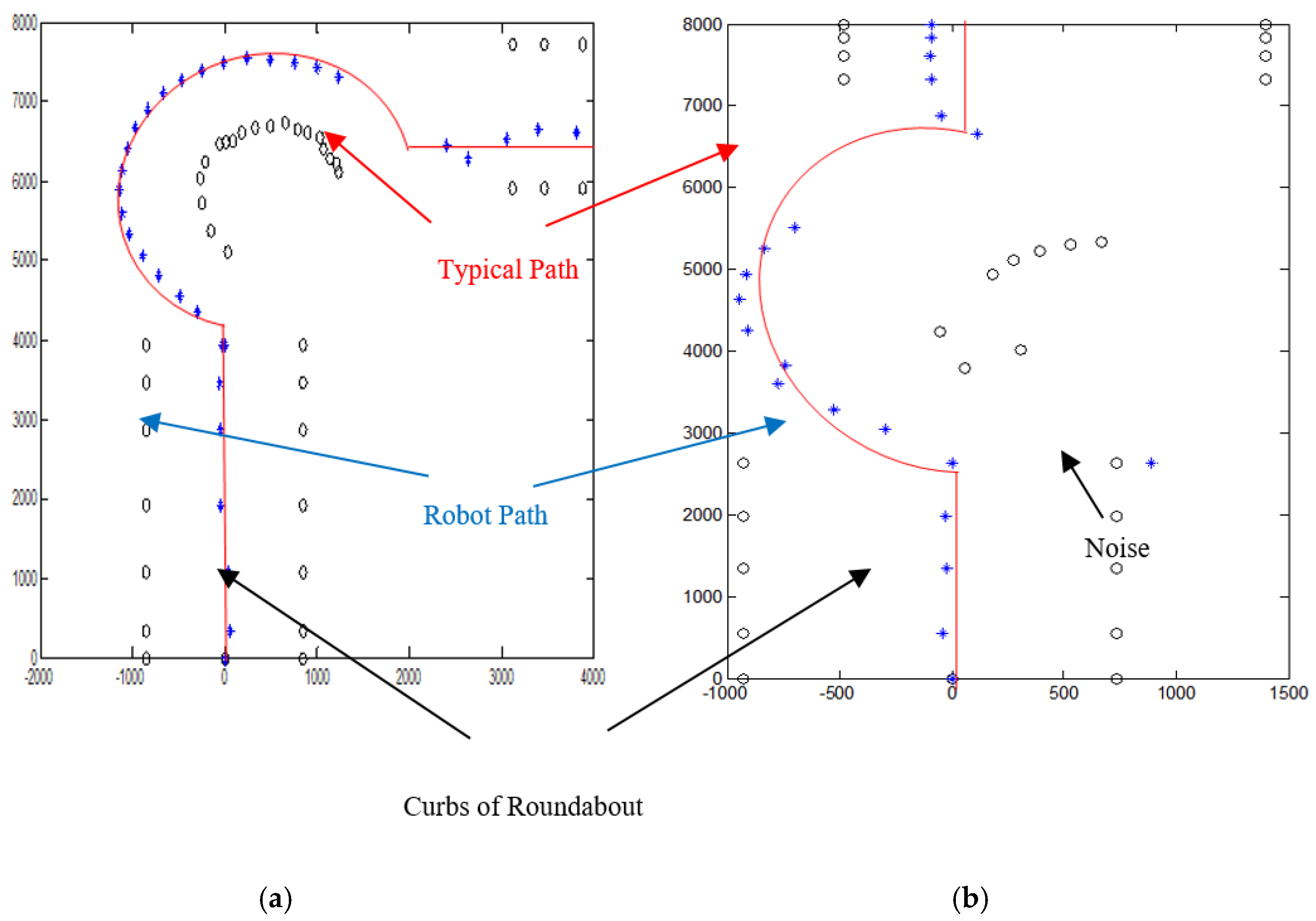

- Accuracy of the navigation system: This is defined as the deviation between the actual and typical paths during navigation on the road from the start to the goal position. For this purpose, the generated path (black dotted line in Figures 16–22) is compared with the typical path (red dotted line in Figures 16–22). The typical path in this work is taken to be the path along the middle of the road.

- Efficiency (reliability) of the navigation system: This is defined as the capability of the proposed algorithm to distinguish the road boundaries and borders from the rest of the robot's surroundings during autonomous navigation on roads, in the presence of noise.

- Cost of the navigation system: Two kinds of cost can be distinguished, namely fixed and operational costs. The fixed cost is the total cost of the hardware used to run the proposed algorithm, which is very low in comparison with Tesla and Google autonomous vehicles. The total cost of the robotic system in this project, shown in Figure 10, is around 5K USD, whereas current autonomous vehicles such as those of Tesla or Google cost in the range of 50–500K USD. The operational costs vary with the road conditions, because the fuel consumption and electrical current profiles change during road navigation.
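One way to quantify the accuracy criterion above is the point-wise distance between the generated path and the typical (mid-road) path. The sketch below is a minimal illustration, assuming both paths are sampled at corresponding points and expressed in the same units; the function name `path_deviation` and the choice of mean and maximum as summary statistics are assumptions, not the evaluation procedure reported in the paper.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def path_deviation(actual: Sequence[Point],
                   typical: Sequence[Point]) -> Tuple[float, float]:
    """Return (mean, max) Euclidean deviation between corresponding samples.

    Assumption: actual[i] and typical[i] refer to the same station along the road.
    """
    if not actual or len(actual) != len(typical):
        raise ValueError("paths must be non-empty and of equal length")
    deviations = [math.hypot(ax - tx, ay - ty)
                  for (ax, ay), (tx, ty) in zip(actual, typical)]
    return sum(deviations) / len(deviations), max(deviations)


if __name__ == "__main__":
    generated = [(0.0, 0.02), (1.0, 0.03), (2.0, 0.01)]  # metres, made-up samples
    midline = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    mean_dev, max_dev = path_deviation(generated, midline)
    print(f"mean {mean_dev * 100:.1f} cm, max {max_dev * 100:.1f} cm")
```

The sample coordinates are invented purely to exercise the function and do not correspond to any measurement in the paper.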

5.1. Road Following

- The autonomous vehicle moves alone on the road.

- The autonomous vehicle navigation system partially recognizes other vehicles beside or in front of the autonomous vehicle, as shown in Figure 17.

5.2. Roundabout Intersection

5.3. Comparison with Other Related Works

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Condition/Property | Clear Road Curbs | Presence of Obstacle | Camera and LRF Problems | Missed Road Curbs |
|---|---|---|---|---|
| Accuracy | 1–3 cm | 2–5 cm | 3–10 cm | 2–5 cm |
| Efficiency | 95% | 90% | 90% | 90% |
| Operational Cost | decreased | increased | increased | increased |

| Condition/Property | Clear Road Curbs | Approaching the Roundabout | Presence of Obstacle | Missed Road Curbs | Camera and LRF Problems |
|---|---|---|---|---|---|
| Accuracy | 2–3 cm | 2–4 cm | 2–4 cm | 2–4 cm | 3–8 cm |
| Efficiency | 95% | 90% | 90% | 90% | 90% |
| Operational Cost | decreased | increased | increased | increased | increased |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ali, M.A.H.; Mailah, M.; Jabbar, W.A.; Moiduddin, K.; Ameen, W.; Alkhalefah, H. Autonomous Road Roundabout Detection and Navigation System for Smart Vehicles and Cities Using Laser Simulator–Fuzzy Logic Algorithms and Sensor Fusion. Sensors 2020, 20, 3694. https://doi.org/10.3390/s20133694
