Estimation of Relative Hand-Finger Orientation Using a Small IMU Configuration

Abstract

1. Introduction

2. Methods

2.1. Sensor Model

2.2. Process Model

2.3. Measurement Model

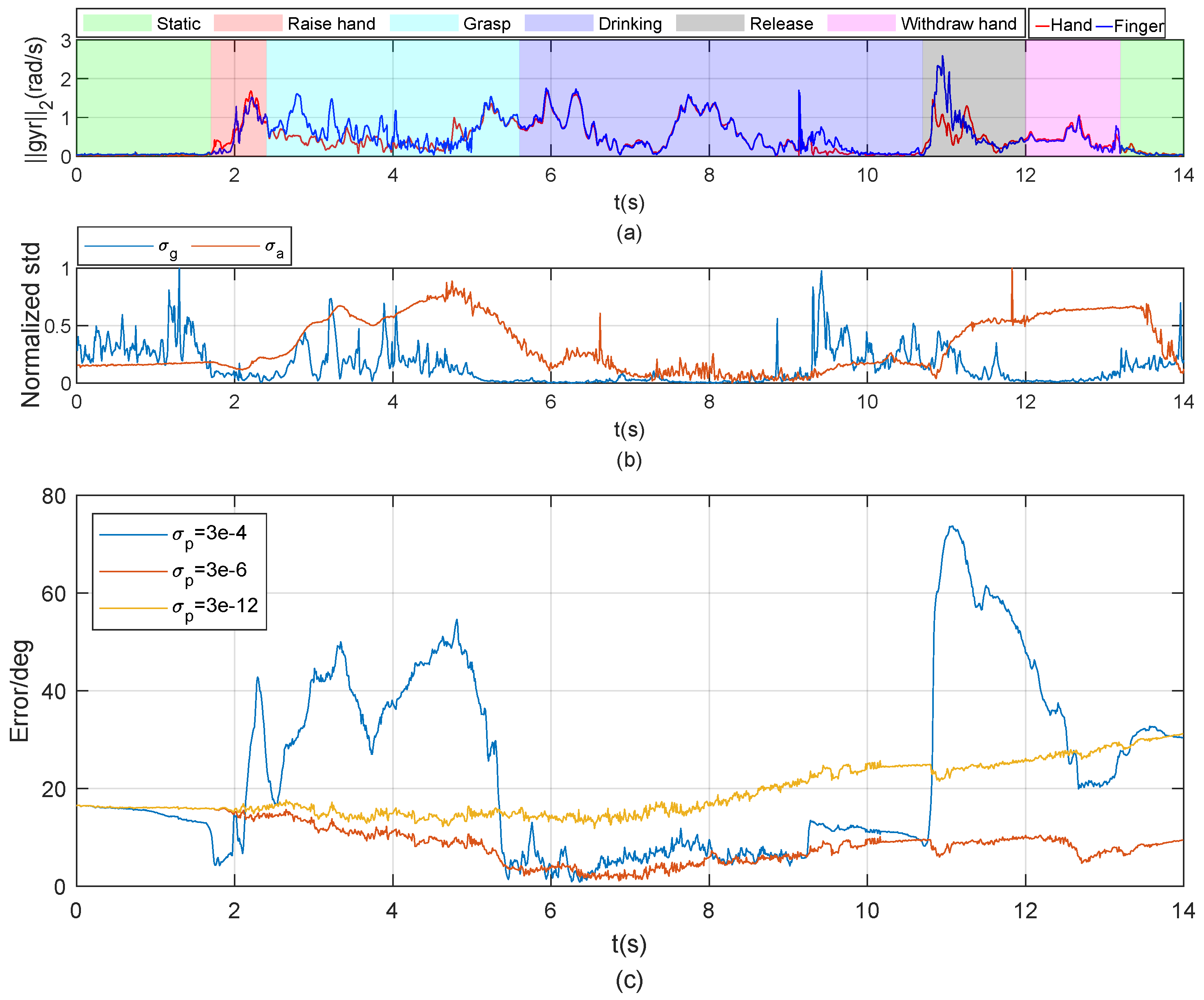

2.4. Uncertainty Error Variance

3. Experiments

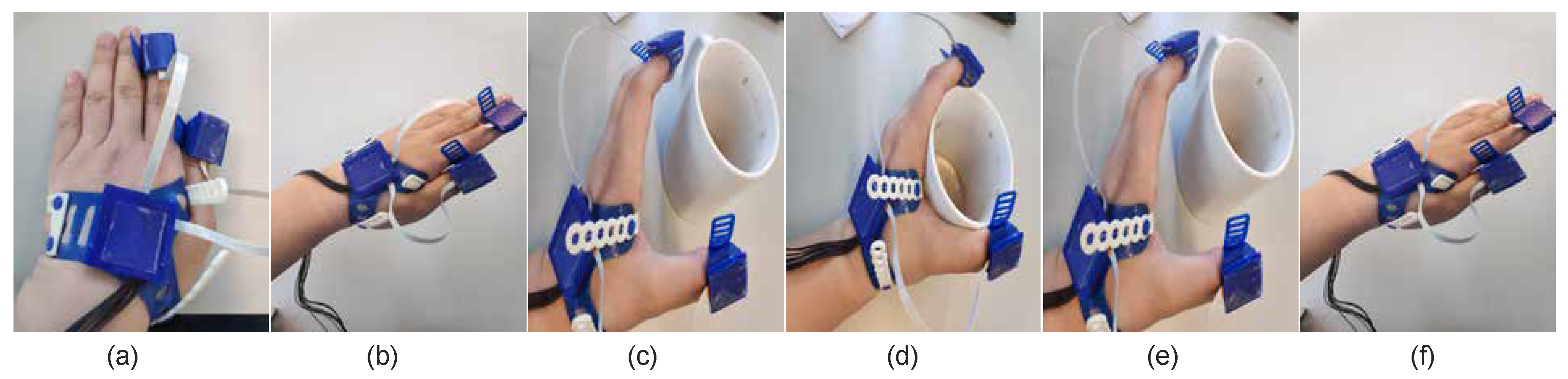

3.1. Experiment Setup

3.2. Alignment of the IMU and Reference Marker Frames for the Validation Experiment

3.3. Sensor to Segment Calibration

3.4. Synchronization of the Vicon and IMU Systems

3.5. Protocols for the Experiment

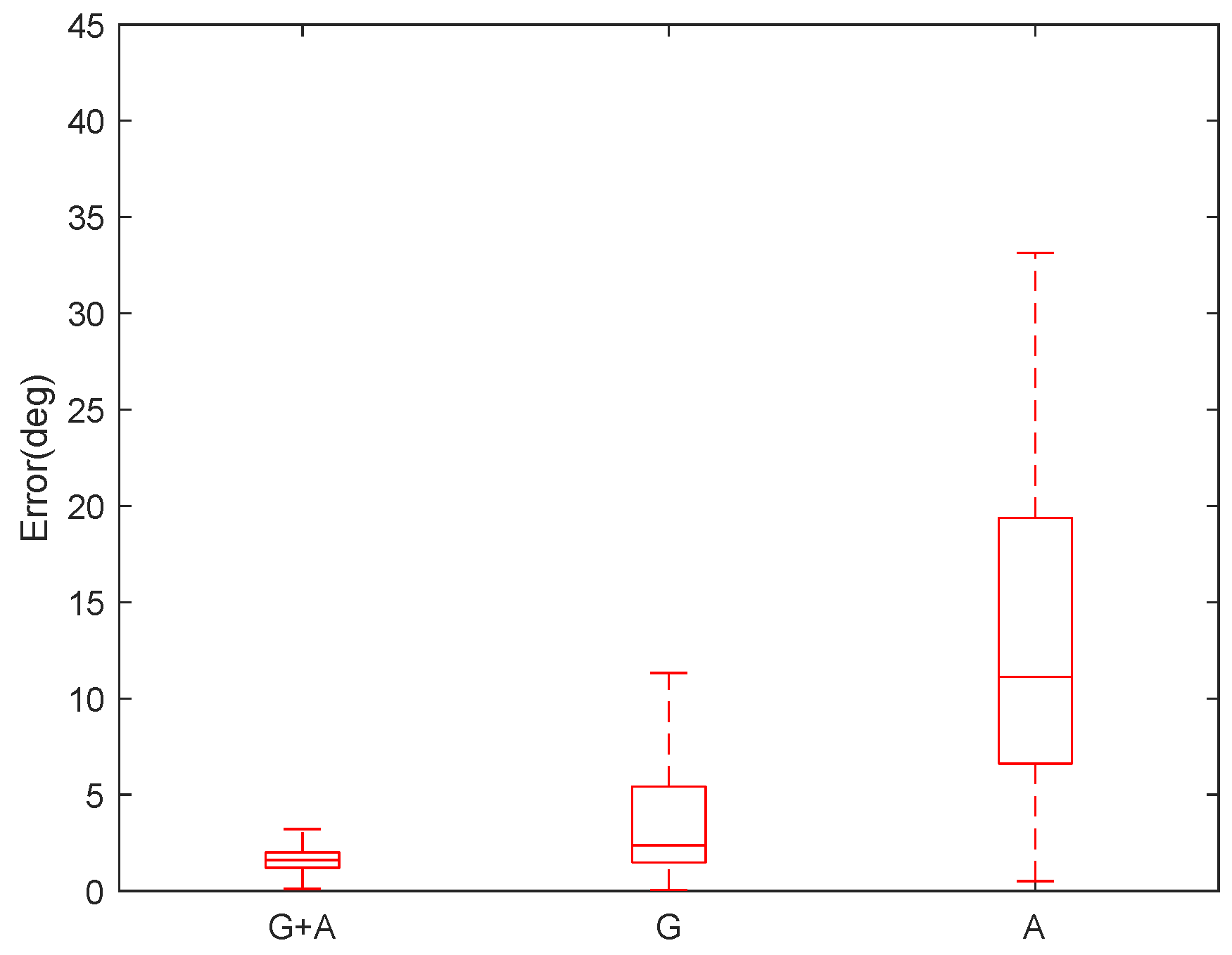

4. Results

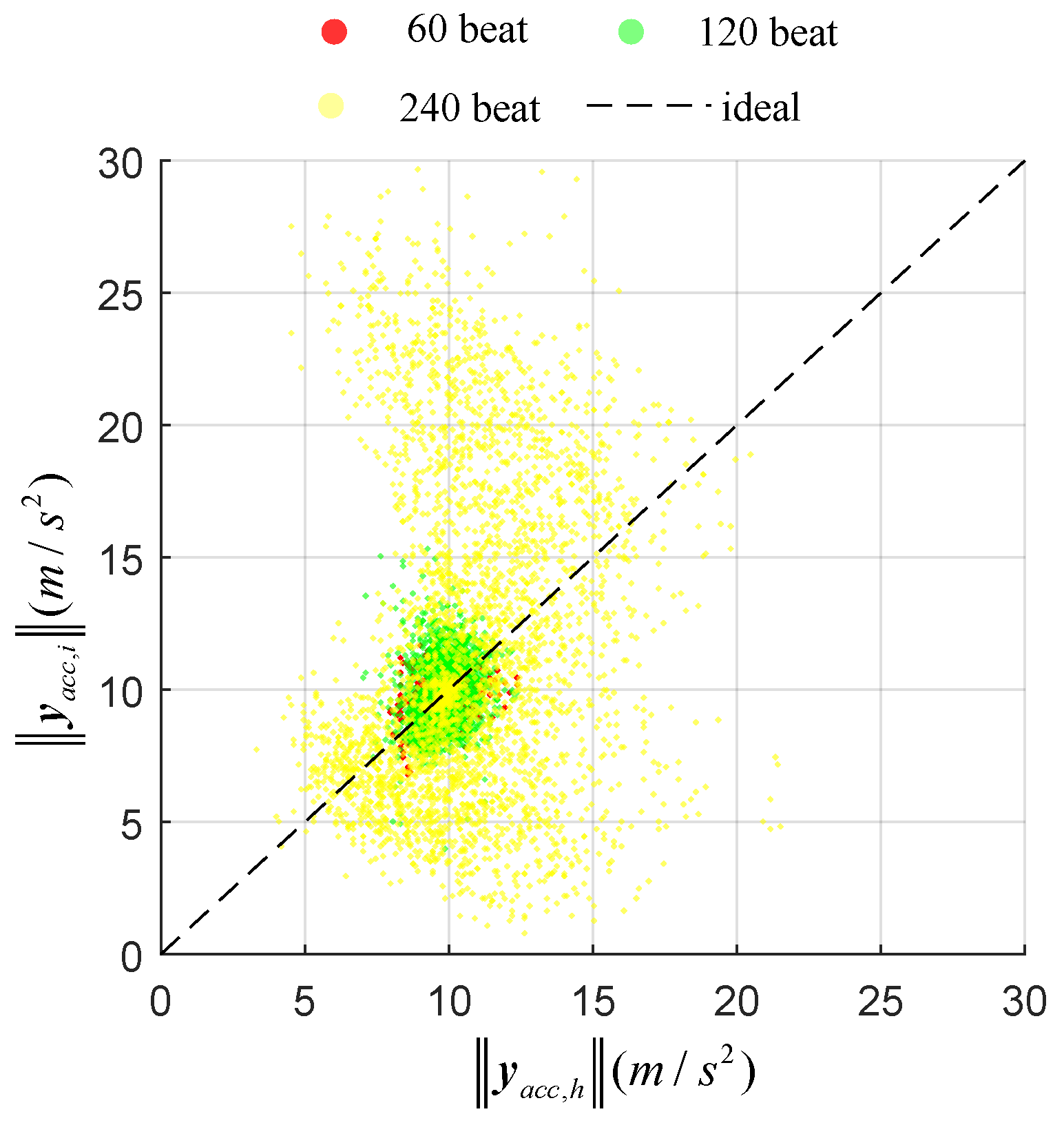

4.1. Movements and Rotations of the Hand While Not Varying the Relative Orientation between the Hand and Finger (Task 1)

Influence of Repetition Rate of Movement

4.2. Simple Functional Task (Task 2)

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yang, Z.; van Beijnum, B.-J.F.; Li, B.; Yan, S.; Veltink, P.H. Estimation of Relative Hand-Finger Orientation Using a Small IMU Configuration. Sensors 2020, 20, 4008. https://doi.org/10.3390/s20144008
