In this section, we provide a detailed description of our algorithm, which is summarized in Algorithm 1.

First, we compute the curvilinear abscissa of each pose of the trajectory based on Equation (13) (line 1). The surface prior is then estimated by fitting an elliptic cylinder to the vertical sonar points (line 2). The Gaussian process (GP) modeling the environment surface is trained using the vertical sonar measurements and their corresponding curvilinear abscissa (line 3). Then, for each horizontal sonar measurement, we estimate the most likely elevation angles (line 5) and compute their corresponding uncertainties (line 7). In the following, we detail each step.

4.2.1. Environment Prior

In this section, we explain how our environment prior is defined. Given the typical shape of karstic environments, a natural choice of prior shape is an elliptic cylinder. For a given pose on the trajectory (equivalently, its curvilinear abscissa) and a given sonar rotation angle, the prior returns the distance to the prior shape.

In order to fit this primitive to the vertical sonar measurements, we follow an approach similar to [31]. First, as for any non-linear optimization approach, we need a good initial shape. It is obtained by computing a robust principal component analysis (RPCA) [32] on the vertical sonar point cloud. It provides three principal vectors which, in the case of a perfect elliptic cylinder, should correspond to the cylinder axis and to the major and minor elliptic axes. If the length of the estimated cylinder were greater than its width, the eigenvector with the highest eigenvalue would be the cylinder axis. However, we cannot make this assumption in our case. To find the cylinder axis among those principal vectors, we use the fact that the tangents to a cross-section of a cylinder are orthogonal to its axis. If these tangent vectors are approximated by the vectors formed by two successive points of a vertical scan, which lies approximately in such a cross-section, their projections onto the PCA basis should be minimal along the cylinder axis.
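The axis-selection rule above can be illustrated with a short NumPy sketch. It uses a plain covariance-based PCA as a stand-in for the RPCA of [32], and the helper names `pca_basis` and `find_cylinder_axis` are hypothetical. The points are assumed to be stored in acquisition order, so that consecutive rows approximate tangents to the scanned cross-sections.

```python
import numpy as np


def pca_basis(points: np.ndarray) -> np.ndarray:
    """Plain (non-robust) PCA used here as a stand-in for RPCA.

    Returns a 3x3 matrix whose columns are the principal vectors,
    sorted by decreasing eigenvalue.
    """
    centered = points - points.mean(axis=0)
    cov = centered.T @ centered / len(points)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    return eigvecs[:, np.argsort(eigvals)[::-1]]


def find_cylinder_axis(points: np.ndarray, basis: np.ndarray) -> int:
    """Index of the principal vector on which tangent projections are smallest.

    Tangents are approximated by differences of consecutive points.
    """
    tangents = np.diff(points, axis=0)
    mean_proj = np.abs(tangents @ basis).mean(axis=0)
    return int(np.argmin(mean_proj))
```

On a short cylinder (length smaller than width), the highest-eigenvalue vector is a cross-section axis, yet the tangent criterion still returns the true axis.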

Then, the elliptic section is estimated by fitting an ellipse to the projections of all the points onto the plane spanned by the two other principal axes [33]. The PCA basis is then rotated around the cylinder axis to align it with the ellipse axes.
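A simple way to fit the elliptic section is an algebraic least-squares conic fit, sketched below with NumPy. This is a generic method and not necessarily the one of [33]; the function name `fit_ellipse_2d` is hypothetical. It returns the ellipse center, its semi-axes and the direction of the major axis, from which the rotation of the PCA basis around the cylinder axis can be deduced.

```python
import numpy as np


def fit_ellipse_2d(xy: np.ndarray):
    """Algebraic least-squares fit of a*x^2 + b*xy + c*y^2 + d*x + e*y + f = 0.

    Returns (center, semi-axes sorted major first, unit major-axis direction).
    """
    x, y = xy[:, 0], xy[:, 1]
    design = np.column_stack([x * x, x * y, y * y, x, y, np.ones_like(x)])
    # The right singular vector of the smallest singular value minimizes the
    # algebraic error under a unit-norm constraint on the coefficients.
    _, _, vt = np.linalg.svd(design)
    a, b, c, d, e, f = vt[-1]
    quad = np.array([[a, b / 2.0], [b / 2.0, c]])
    center = np.linalg.solve(2.0 * quad, -np.array([d, e]))  # zero gradient
    f_center = f + 0.5 * (d * center[0] + e * center[1])  # conic value at center
    eigvals, eigvecs = np.linalg.eigh(quad)
    semi_axes = np.sqrt(-f_center / eigvals)
    order = np.argsort(semi_axes)[::-1]
    return center, semi_axes[order], eigvecs[:, order[0]]
```

The algebraic error is cheap to minimize but is not the geometric distance, which is one reason for a subsequent non-linear refinement.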

As can be seen with the orange elliptic cylinder in Figure 5, the asymmetric distribution of the vertical sonar points has a strong influence on the RPCA result. To further improve our estimate, we use the Levenberg–Marquardt (LM) algorithm to obtain the shape that minimizes the orthogonal distances to the elliptic cylinder. The estimated vector representing our elliptic cylinder is composed of a 6D pose in SE(3) and the scales along the minor and major ellipse axes. Here, we use on-manifold optimization on SE(3), as described in [22]. The final prior is shown as the purple cylinder in Figure 5.
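The refinement step can be illustrated with a small Levenberg–Marquardt loop in NumPy. This sketch departs from the text in two labeled ways: the rotation is parameterized by a rotation vector (Rodrigues formula) updated additively, instead of the on-manifold optimization on SE(3) of [22], and the orthogonal distance to the ellipse is replaced by its first-order (Sampson-type) approximation. The Jacobian is obtained by finite differences, and all function names are hypothetical.

```python
import numpy as np


def rotation_from_vector(w):
    """Rodrigues formula: rotation matrix from a rotation vector."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    kx = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * kx + (1.0 - np.cos(theta)) * kx @ kx


def cylinder_residuals(params, pts):
    """Approximate orthogonal distances to an elliptic cylinder.

    params = [tx, ty, tz, wx, wy, wz, a, b]; the cylinder axis is the local z axis.
    """
    t, w, a, b = params[:3], params[3:6], params[6], params[7]
    q = (pts - t) @ rotation_from_vector(w)  # points in the cylinder frame
    g = q[:, 0] ** 2 / a**2 + q[:, 1] ** 2 / b**2 - 1.0
    grad_norm = np.hypot(2.0 * q[:, 0] / a**2, 2.0 * q[:, 1] / b**2) + 1e-12
    return g / grad_norm  # first-order distance to the elliptic section


def levenberg_marquardt(fun, p0, iters=100, lam=1e-3, eps=1e-7):
    p = np.asarray(p0, dtype=float).copy()
    r = fun(p)
    cost = r @ r
    for _ in range(iters):
        # Forward-difference Jacobian, one column per parameter.
        jac = np.column_stack([(fun(p + eps * e) - r) / eps for e in np.eye(len(p))])
        # Damping with lam * I also regularizes the translation along the
        # cylinder axis, which does not change the residuals.
        step = np.linalg.solve(jac.T @ jac + lam * np.eye(len(p)), -jac.T @ r)
        r_new = fun(p + step)
        if r_new @ r_new < cost:  # accept: behave more like Gauss-Newton
            p, r, cost = p + step, r_new, r_new @ r_new
            lam = max(lam * 0.1, 1e-9)
        else:  # reject: behave more like gradient descent
            lam = min(lam * 10.0, 1e9)
    return p
```

The RPCA-based shape provides the initial parameters; the loop then only needs to correct a moderate error.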

4.2.2. Gaussian Process Regression and Kernel Choices

In this section, we explain the choice of kernels for the following Gaussian process regression. Based on our formulation of the surface through the functional f (cf. Section 4.1), we exploit its cylindrical structure by combining a kernel on the trajectory abscissa with a kernel on the sonar rotation angle. The kernel on the abscissa can be any of the kernels presented in Section 3.4. For the kernel on the rotation angle, as we deal with angles, we cannot use a simple Euclidean distance. When choosing the metric, we have to ensure that the resulting kernel is positive definite [34,35]. We can define two distances, based respectively on the chordal distance and on the geodesic distance on the unit circle.

In [35], it is shown that for the geodesic distance, a Wendland function [36] can be used to define a valid kernel. For the chordal distance, which is the Euclidean distance between the corresponding points of the unit circle embedded in the plane, any kernel valid in the plane can be used.

In this paper, we only consider the exponential and Matérn 5/2 kernels with the chordal distance for angles. In the experimental section, we investigate the effects of these different kernels and distances on the surface estimation.
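The two angular distances and a combined kernel can be written in a few lines of NumPy. The chordal and geodesic formulas below are the standard ones on the unit circle. Combining the abscissa and angle kernels by a product, the unit length scales and the function names are assumptions made for illustration, since the exact combination and hyperparameters are not reproduced here.

```python
import numpy as np


def chordal_distance(a, b):
    """Euclidean distance between the unit-circle points at angles a and b."""
    return 2.0 * np.abs(np.sin((a - b) / 2.0))


def geodesic_distance(a, b):
    """Arc length between angles a and b on the unit circle, in [0, pi]."""
    d = np.mod(a - b, 2.0 * np.pi)
    return np.minimum(d, 2.0 * np.pi - d)


def exponential(d, length=1.0):
    return np.exp(-d / length)


def matern52(d, length=1.0):
    r = np.sqrt(5.0) * d / length
    return (1.0 + r + r**2 / 3.0) * np.exp(-r)


def product_kernel(s1, psi1, s2, psi2, ls=1.0, lpsi=1.0):
    """Assumed product of Matern 5/2 kernels on the abscissa and on the chordal angle distance."""
    k_s = matern52(np.abs(s1[:, None] - s2[None, :]), ls)
    k_psi = matern52(chordal_distance(psi1[:, None], psi2[None, :]), lpsi)
    return k_s * k_psi
```

Because the chordal distance is a Euclidean distance in the plane, the Matérn and exponential kernels stay positive semi-definite; a product of two such kernels is positive semi-definite as well (Schur product theorem), which the Gram-matrix check in the test reflects.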

4.2.3. Maximum Likelihood Estimation of the Elevation Angles

A priori, the 3D points observed by the horizontal sonar cannot be recovered, as any point in its beam may return the same measurement. We exploit the surface estimated from the vertical sonar to infer the elevation angles of each horizontal sonar measurement. The idea is that elevation angles corresponding to 3D points near the estimated surface are good estimates. This is illustrated in Figure 6. Hence, for each horizontal sonar measurement, we search for the elevation angles that are solutions of Equation (18), where the estimated range is the range we would have obtained if the corresponding point had been observed by the vertical sonar. In fact, depending on the environment configuration, several angles can correspond to one measurement, as previously illustrated in Figure 4. Thus, instead of solving Equation (18), we search for the local maxima of the log-likelihood.

The corresponding algorithm is described in Algorithm 2 and detailed in the following.
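The search for local maxima on a sampled log-likelihood can be sketched as follows. The symmetric sampling range [-b/2, b/2], the strict-neighbour test used to detect a local maximum and the function names are assumptions; the exact criterion used in Algorithm 2 is not reproduced here.

```python
import numpy as np


def sample_elevations(aperture: float, n: int) -> np.ndarray:
    """Uniform samples over the beam, assumed to span [-aperture/2, aperture/2]."""
    return np.linspace(-aperture / 2.0, aperture / 2.0, n)


def local_maxima(log_lik: np.ndarray) -> np.ndarray:
    """Indices of interior samples strictly greater than both neighbours."""
    v = np.asarray(log_lik)
    inner = (v[1:-1] > v[:-2]) & (v[1:-1] > v[2:])
    return np.nonzero(inner)[0] + 1
```

With this approach, the angular resolution of each maximum is limited by the sample spacing, which is the trade-off controlled by the number of samples.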

As it is difficult to obtain a closed-form expression of

relative to

, we uniformly sample

values in the range

. In practice,

where

b is the beam vertical aperture. We denote those samples

. For each sample

, we compute the corresponding virtual 3D points

with

defined by Equation (

1). To assess the likelihood of having measured this point on the surface, we need to use the previously trained GP model

f. We therefore have to express this point in terms of

f inputs and output. In other words, we need the curvilinear abscissa

, the rotation angle

and range

as if the point was virtually seen by the vertical sonar. This is illustrated in

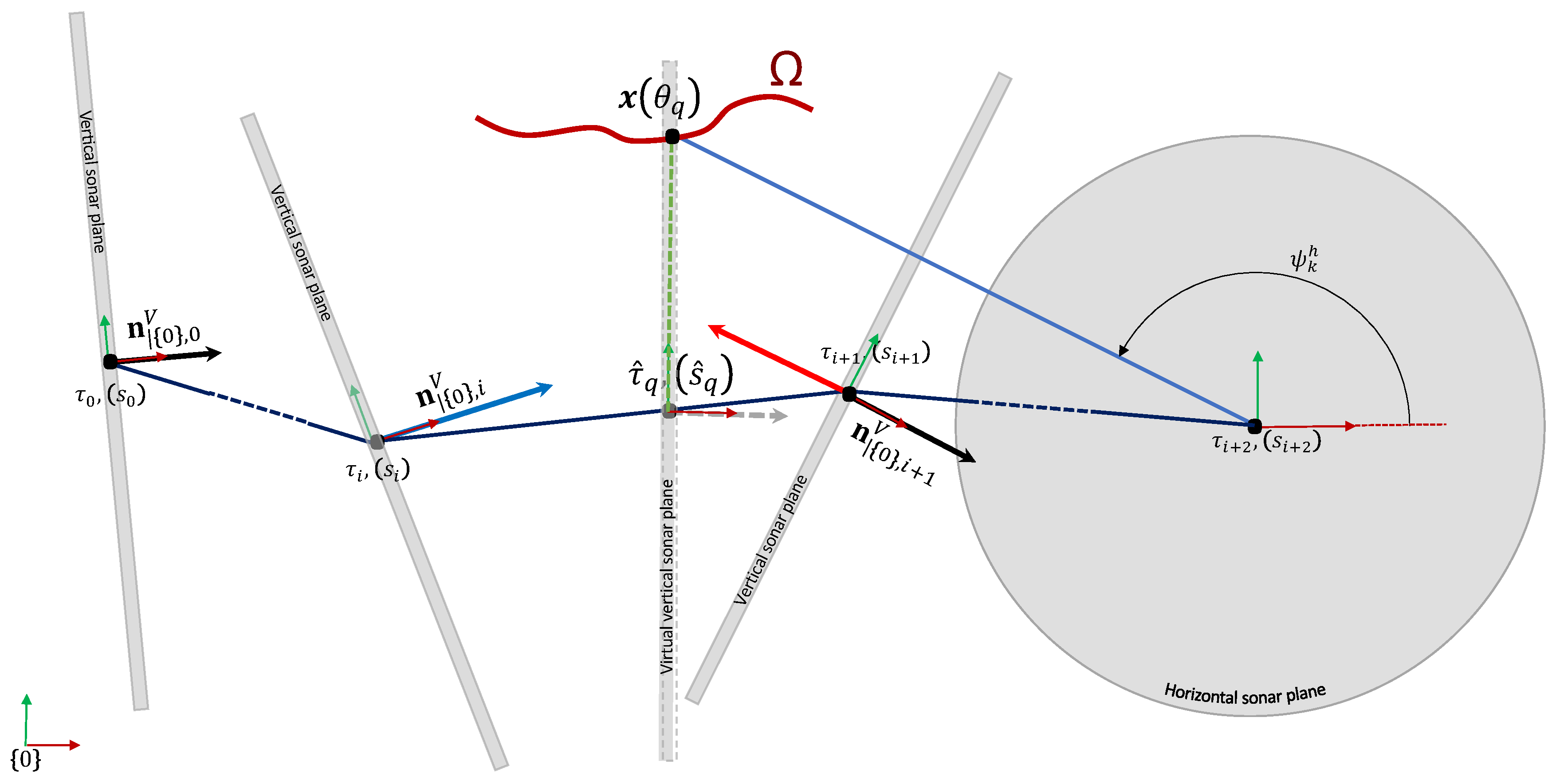

Figure 7.

To obtain those parameters, we proceed as follows. First, consider the rotation axis of the vertical sonar expressed in its local frame, which is also the normal vector of the sonar plane. We search for a pose along the trajectory such that the virtual point belongs to the vertical sonar plane. To do so, we need to interpolate the robot trajectory. The first step is to determine the segments of the trajectory to which this pose could belong (several segments can be found). Recall that the robot pose is described by its global position and its orientation quaternion, and that the orientation of the vertical sonar is also given by a quaternion. The rotation axis of the vertical sonar expressed in the global frame, when the robot is at its i-th pose, is then obtained by rotating the local axis with the robot orientation at this pose composed with the vertical sonar orientation.

A segment, represented by the indices of its two consecutive poses, is considered if the projection of the vector from the robot position to the virtual point onto the vertical sonar rotation axis changes sign between the beginning and the end of the segment (Figure 8). Indeed, by the intermediate value theorem, such a segment contains a pose for which the projection is zero. Formally, we consider the set of starting indices for which this sign change occurs.
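The segment selection can be sketched in NumPy as below. The quaternion convention (scalar first, Hamilton product), the assumption that the sonar quaternion gives the sonar orientation relative to the robot body, the use of the robot position as origin of the projected vector, and the function names are all assumptions for illustration.

```python
import numpy as np


def quat_to_rot(q):
    """Rotation matrix of a unit quaternion q = (w, x, y, z)."""
    w, x, y, z = q
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])


def global_axes(robot_quats, sonar_quat, axis_local):
    """Vertical sonar rotation axis in the global frame for every robot pose."""
    r_sonar = quat_to_rot(sonar_quat)
    return np.array([quat_to_rot(q) @ r_sonar @ axis_local for q in robot_quats])


def candidate_segments(point, positions, axes):
    """Start indices i of segments [i, i + 1] where the projection changes sign."""
    proj = np.einsum("ij,ij->i", point - positions, axes)
    # A zero projection exactly at a pose selects both adjacent segments.
    return np.nonzero(proj[:-1] * proj[1:] <= 0.0)[0]
```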

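For each selected segment, we consider the linear interpolation between its two consecutive poses, parameterized by an interpolation value. The interpolated position is defined by the first three coordinates of the interpolated pose and is simply given by the linear interpolation of the two robot positions.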

Let us define the relative angular pose between the orientations of the two consecutive poses, expressed in yaw–pitch–roll Euler angles. We then obtain the associated interpolated rotation matrix and, from it, the global rotation matrix. We can now define the linear interpolation of the vertical sonar rotation axis.
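One possible implementation of this orientation interpolation is sketched below. It assumes a ZYX (yaw–pitch–roll) convention, scales the Euler angles of the relative rotation by the interpolation value, and applies the result on the right of the rotation at the start of the segment; these choices and the function names are assumptions, not a reproduction of the paper's equations.

```python
import numpy as np


def rot_zyx(yaw, pitch, roll):
    """Rotation matrix Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cy, sy = np.cos(yaw), np.sin(yaw)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cr, sr = np.cos(roll), np.sin(roll)
    rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    return rz @ ry @ rx


def euler_zyx(r):
    """Inverse of rot_zyx, valid away from pitch = +/- pi/2."""
    yaw = np.arctan2(r[1, 0], r[0, 0])
    pitch = np.arcsin(-np.clip(r[2, 0], -1.0, 1.0))
    roll = np.arctan2(r[2, 1], r[2, 2])
    return yaw, pitch, roll


def interpolate_rotation(r_start, r_end, t):
    """Scale the relative yaw, pitch and roll by t in [0, 1]."""
    yaw, pitch, roll = euler_zyx(r_start.T @ r_end)
    return r_start @ rot_zyx(t * yaw, t * pitch, t * roll)


def interpolate_axis(r_start, r_end, r_sonar, axis_local, t):
    """Interpolated vertical sonar rotation axis in the global frame."""
    return interpolate_rotation(r_start, r_end, t) @ r_sonar @ axis_local
```

The interpolation reproduces both end orientations exactly (t = 0 and t = 1) and always returns a proper rotation matrix.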

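We search for the interpolation value such that the virtual point belongs to the sonar plane whose normal is the interpolated rotation axis, that is, such that the projection of the vector from the interpolated position to the virtual point onto this axis is zero. Assuming small angle variations, we approximate the interpolated axis to the first order in the interpolation value. Since the interpolated position is linear in this value, the interpolation value is then the root, within the segment, of a second-order polynomial.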
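Under a first-order approximation, the plane condition is a quadratic equation in the interpolation value t. The sketch below assumes a linearly interpolated position p(t) = p0 + t (p1 - p0) and takes the first-order variation of the axis as the difference of its end values, n(t) ≈ n0 + t (n1 - n0). This chord approximation may differ from the paper's expansion, and the function name is hypothetical.

```python
import math

import numpy as np


def plane_crossing(point, p0, p1, n0, n1, tol=1e-12):
    """Roots t in [0, 1] of (point - p(t)) . n(t) = 0."""
    dp, dn, r0 = p1 - p0, n1 - n0, point - p0
    a = -(dp @ dn)  # coefficient of t^2
    b = r0 @ dn - dp @ n0  # coefficient of t
    c = r0 @ n0  # constant term
    if abs(a) < tol:  # degenerate case: linear equation
        roots = [-c / b] if abs(b) > tol else []
    else:
        disc = b * b - 4.0 * a * c
        if disc < 0.0:
            return []
        sq = math.sqrt(disc)
        roots = [(-b - sq) / (2.0 * a), (-b + sq) / (2.0 * a)]
    return sorted(t for t in roots if 0.0 <= t <= 1.0)
```

When the axis does not rotate along the segment, the quadratic term vanishes and the equation reduces to a simple linear interpolation.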

This gives the robot pose corresponding to the computed interpolation value, from which we compute the curvilinear abscissa corresponding to the virtual point. To compute the rotation angle, we express the virtual point in the coordinates of the vertical sonar frame corresponding to this robot pose. Finally, the estimated range is simply the L2 norm of the virtual point expressed in this frame.
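The conversion of a virtual point into the inputs and output of f can be sketched as follows. It assumes that the curvilinear abscissa is the cumulative arc length of the trajectory positions, interpolated linearly inside a segment. It also assumes that the vertical sonar frame is given by a global rotation matrix and an origin, with the sonar rotation axis as the local z axis, so that the rotation angle is the atan2 of the two in-plane coordinates. These conventions and the function names are hypothetical.

```python
import numpy as np


def curvilinear_abscissa(positions: np.ndarray) -> np.ndarray:
    """Cumulative arc length along the trajectory (assumed definition)."""
    steps = np.linalg.norm(np.diff(positions, axis=0), axis=1)
    return np.concatenate([[0.0], np.cumsum(steps)])


def abscissa_at(abscissa: np.ndarray, i: int, t: float) -> float:
    """Abscissa of the pose interpolated at t in [0, 1] on segment [i, i + 1]."""
    return abscissa[i] + t * (abscissa[i + 1] - abscissa[i])


def to_vertical_sonar(point, r_sonar_global, origin):
    """Rotation angle and range of a point as seen by the vertical sonar.

    Assumes the sonar rotation axis is the local z axis, so the point lies
    (approximately) in the local x-y plane.
    """
    local = r_sonar_global.T @ (point - origin)
    angle = np.arctan2(local[1], local[0])
    return angle, np.linalg.norm(local)
```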

We can now compute the log-likelihood. The expressions in Equation (35) can be found in [23] (p. 17, Equations (2.25) and (2.26)) and are obtained using the GP regression trained to estimate f (Section 3.4).
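For reference, the standard GP predictive mean and variance (the quantities given by Equations (2.25) and (2.26) of [23]) and a Gaussian log-likelihood of a range value can be sketched as below. The squared-exponential kernel, adding the observation noise to the predictive variance in the usage, and the function names are assumptions for illustration.

```python
import numpy as np


def gp_predict(kernel, x_train, y_train, x_test, noise_var):
    """Predictive mean and variance of a GP, computed with a Cholesky factor."""
    k = kernel(x_train, x_train) + noise_var * np.eye(len(x_train))
    chol = np.linalg.cholesky(k)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    k_star = kernel(x_train, x_test)  # shape (n_train, n_test)
    mean = k_star.T @ alpha
    v = np.linalg.solve(chol, k_star)
    var = np.diag(kernel(x_test, x_test)) - np.sum(v * v, axis=0)
    return mean, var


def gaussian_log_likelihood(value, mean, var):
    return -0.5 * np.log(2.0 * np.pi * var) - 0.5 * (value - mean) ** 2 / var


def rbf(a, b, length=1.0):
    """Squared-exponential kernel on scalar inputs (illustrative choice)."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length**2)
```

A range close to the GP prediction yields a higher log-likelihood than a range far from it, which is what makes elevation angles leading to points near the estimated surface more likely.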

4.2.4. Estimation Uncertainty

In the previous section, we explained how the set of local maximum likelihood elevation angles is obtained. We are also interested in their uncertainties; in other words, we want to define their probability distributions. A normal distribution is not suitable, as its support is infinite while the width of our horizontal sonar beam is restricted to its aperture. A good choice is a scaled beta distribution [37], defined by two shape parameters. In our case, the support of the distribution is defined from b, the horizontal sonar beam width. We then have the following probability density function, where Γ is the gamma function, which extends the factorial function to complex numbers.

For each elevation angle estimated in the previous section, the shape parameters can be computed from a given mean and variance. In our case, the mean is simply the estimated elevation angle. The variance requires more computation and is estimated as the inverse of the Fisher information. Direct computation of Equation (43) yields a closed-form expression (see Appendix A for the derivation).
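As a numerical cross-check of a closed-form variance, the observed Fisher information (the negative second derivative of the log-likelihood) can be approximated by a central second difference at a local maximum, and its inverse taken as the variance. This finite-difference approach, the step size and the function name are assumptions and do not reproduce the derivation of Appendix A.

```python
import math
from typing import Callable


def variance_from_observed_information(
    log_lik: Callable[[float], float], theta_hat: float, h: float = 1e-4
) -> float:
    """Inverse of the negative second derivative of log_lik at theta_hat.

    Returns math.inf when the curvature is not negative (no information).
    """
    second = (log_lik(theta_hat + h) - 2.0 * log_lik(theta_hat) + log_lik(theta_hat - h)) / h**2
    return -1.0 / second if second < 0.0 else math.inf
```

For a Gaussian log-likelihood with standard deviation sd, the sketch recovers a variance of sd squared; a flat log-likelihood carries no information and gives an infinite variance.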

Note that a uniform distribution can still be represented by a beta distribution, with both shape parameters equal to 1. Indeed, from Equations (39) and (38), the density then reduces to a constant, which is the probability density of a uniform distribution over the beam aperture.
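The method-of-moments conversion from mean and variance to shape parameters, together with the scaled beta density, can be sketched with the standard library. The support [lo, hi] (for instance [-b/2, b/2]) and the function names are assumptions; the formulas are the standard ones for a beta distribution rescaled to an interval.

```python
import math


def beta_params(mean: float, var: float, lo: float, hi: float):
    """Shape parameters of a beta distribution on [lo, hi] with given mean and variance."""
    width = hi - lo
    y = (mean - lo) / width  # normalized mean in (0, 1)
    v = var / width**2  # normalized variance
    common = y * (1.0 - y) / v - 1.0  # equals alpha + beta
    return y * common, (1.0 - y) * common


def scaled_beta_pdf(x: float, alpha: float, beta: float, lo: float, hi: float) -> float:
    """Density of the beta distribution rescaled to [lo, hi], computed in log space."""
    if not lo < x < hi:
        return 0.0
    width = hi - lo
    u = (x - lo) / width
    log_norm = math.lgamma(alpha + beta) - math.lgamma(alpha) - math.lgamma(beta)
    log_pdf = log_norm + (alpha - 1.0) * math.log(u) + (beta - 1.0) * math.log(1.0 - u)
    return math.exp(log_pdf) / width
```

A centered mean with variance b²/12 (the variance of a uniform law of width b) gives both shape parameters equal to 1, and the density is then 1/b over the whole support.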

We now define a threshold to filter out variances leading to invalid beta distribution parameters. First, the parameters of a beta distribution must be strictly positive. Furthermore, as we require each distribution to be unimodal, at least one of the two shape parameters must be greater than or equal to 1. (When both are smaller than 1, the beta distribution is bimodal, with modes at both ends of its support. A mode corresponds to a local maximum of a probability density function (pdf).) Expressions (40) and (41) then give an upper bound on the variance. It is also easy to verify that the first constraint is included in the second.

We also add another threshold to filter out valid but overly uncertain estimations. It is defined through a parameter k, which controls the filter sensitivity, with higher values being more restrictive. In our implementation, we used a fixed default value of k. The final threshold combines these two thresholds. Figure 9 shows the corresponding graphs.
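Under the reading that unimodality requires at least one shape parameter to be greater than or equal to 1, the method-of-moments formulas give an explicit variance bound. With normalized mean y and normalized variance v, the shape parameters sum to y(1 - y)/v - 1, so requiring max(alpha, beta) ≥ 1 with m = max(y, 1 - y) gives v ≤ y(1 - y) m/(m + 1). This is stricter than the positivity condition v < y(1 - y), since m/(m + 1) < 1. The derivation and the function names are a sketch under that assumption, not the paper's exact expressions.

```python
def beta_params(mean, var, lo, hi):
    """Method-of-moments shape parameters of a beta law on [lo, hi]."""
    width = hi - lo
    y = (mean - lo) / width
    common = y * (1.0 - y) / (var / width**2) - 1.0
    return y * common, (1.0 - y) * common


def max_unimodal_variance(mean, lo, hi):
    """Largest variance keeping max(alpha, beta) >= 1 for a beta law on [lo, hi]."""
    width = hi - lo
    y = (mean - lo) / width
    m = max(y, 1.0 - y)
    return width**2 * y * (1.0 - y) * m / (m + 1.0)
```

For a centered mean, the bound equals the variance of the uniform distribution, consistent with the uniform case being the limit of valid unimodal distributions.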

Figure 10 illustrates the uncertainty estimation process. The colors used to represent the beta distributions directly on the measurement arc are obtained through a dedicated color mapping. Note that the central color (turquoise) is obtained when both shape parameters are equal to 1, which corresponds to the pdf of a uniform distribution.

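Algorithm 1 Scan merging.

| Line | Step | Comment |
|---|---|---|
| Input | Sonar measurements, robot trajectory H | |
| Output | Estimated horizontal measurements | |
| 1 | Compute the curvilinear abscissa of each pose | Curvilinear abscissa, Equation (12) |
| 2 | Fit the elliptic cylinder prior to the vertical sonar points | Surface prior |
| 3 | Train the GP on the vertical sonar measurements | Surface estimation by Gaussian process |
| 4 | for each horizontal sonar measurement do | Estimation |
| 5 | Estimate the most likely elevation angles | See Algorithm 2 |
| 6 | for each estimated elevation angle do | |
| 7 | Compute its uncertainty | |
| 8 | end for | |
| 9 | end for | |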

Algorithm 2 Elevation angle MLE.

| Line | Step | Comment |
|---|---|---|
| Input | Trained surface GP f, a horizontal sonar measurement, robot trajectory H and the corresponding curvilinear abscissa, rotation axis of the vertical scan expressed in the vertical sonar frame, number of samples | |
| Output | Estimated local maximum elevation angles | |
| 1 | | |
| 2 | for each sample q do | |
| 3 | | |
| 4 | | Equation (20) |
| 5 | | |
| 6 | for … do | |
| 7 | | Equation (21) |
| 8 | if … then | Equation (22) |
| 9 | | |
| 10 | end if | |
| 11 | end for | |
| 12 | | |
| 13 | | Maximum likelihood value |
| 14 | for … do | |
| 15 | | |
| 16 | | |
| 17 | | |
| 18 | | Equations (28) and (30) |
| 19 | | |
| 20 | | Equation (32) |
| 21 | | Equation (31) |
| 22 | | Equation (33) |
| 23 | | Equation (34) |
| 24 | | Equation (36) |
| 25 | if … then | |
| 26 | | |
| 27 | | |
| 28 | end if | |
| 29 | end for | |
| 30 | if … then | Equation (19) |
| 31 | | |
| 32 | end if | |
| 33 | end for | |

Algorithm 3 Uncertainty estimate.

| Line | Step | Comment |
|---|---|---|
| Input | Likelihood, local maximum likelihood, beam width b | |
| Output | Posterior | |
| 1 | | Fisher information, Equation (43) |
| 2 | | |
| 3 | | Beta distribution parameters |
| 4 | if … then | Equation (52) |
| 5 | | |
| 6 | | |
| 7 | end if | |
| 8 | | |