A Review of Environmental Context Detection for Navigation Based on Multiple Sensors

Abstract

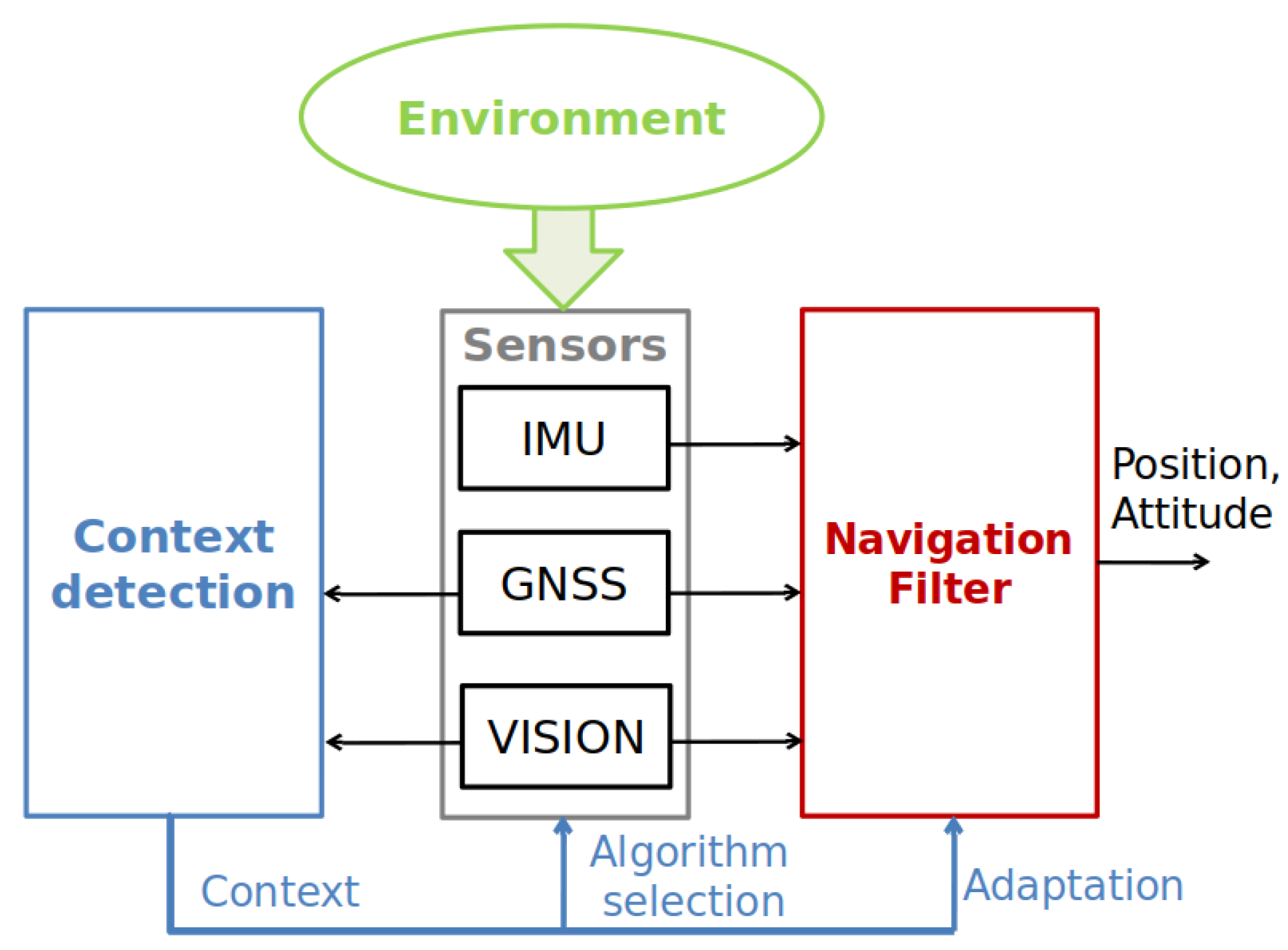

1. Introduction

- Diffuse multipath typically occurs when the signal encounters a cluttered metallic surface such as overhead wires. The signal is scattered in many directions, creating positioning errors of up to 10 m.

- Specular multipath arises from reflective surfaces such as mirrors and glass and can lead to positioning errors of 2 to 6 m.

- Water reflections occur when a water surface lies next to the antenna and can create positioning errors on the order of 10 m.

2. GNSS Signal-Based Context Indicators

2.1. C/N0

- The average C/N0 value is higher in outdoor environments than in indoor ones.

- The standard deviation is larger in outdoor environments than in indoor ones. This is attributed to signal occlusion being more likely outdoors: in a cluttered environment, a small change in the satellite constellation can greatly affect satellite visibility.

- The number of satellites with C/N0 > 25 dB-Hz. The number of visible satellites was also exploited in [21] (SatProbe) to distinguish indoor from outdoor environments, based on the GPS constellation only.

- The sum of the C/N0 values of the satellites with C/N0 > 25 dB-Hz

- The sum of squares of the pseudo-range residuals, which is defined as follows: $\sum_{i=1}^{N} \left(\rho_i - \hat{\rho}_i\right)^2$, with $N$ the number of satellites, $\rho_i$ the measured pseudo-range, and $\hat{\rho}_i$ the estimated range.

- The sum of the C/N0 values of the satellites with C/N0 > 25 dB-Hz
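The indicators listed above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in the cited works: the function names, the dictionary keys, and the use of the population standard deviation are all hypothetical choices, and the inputs are assumed to be per-satellite values for a single epoch.

```python
from statistics import mean, pstdev

CN0_THRESHOLD_DBHZ = 25.0  # threshold quoted in the list above


def cn0_features(cn0_dbhz, threshold=CN0_THRESHOLD_DBHZ):
    """Return simple C/N0 context indicators for one epoch.

    cn0_dbhz: C/N0 values in dB-Hz, one per tracked satellite.
    """
    if not cn0_dbhz:
        raise ValueError("at least one C/N0 value is required")
    # Satellites whose C/N0 exceeds the threshold.
    strong = [c for c in cn0_dbhz if c > threshold]
    return {
        "mean_cn0": mean(cn0_dbhz),
        "std_cn0": pstdev(cn0_dbhz),  # population std: an assumption
        "n_strong": len(strong),
        "sum_strong": sum(strong),
    }


def residual_sum_of_squares(measured, estimated):
    """Sum of squared pseudo-range residuals over N satellites.

    measured:  measured pseudo-ranges (m), one per satellite.
    estimated: ranges estimated from the position solution (m).
    """
    if len(measured) != len(estimated):
        raise ValueError("measured and estimated must have the same length")
    return sum((m - e) ** 2 for m, e in zip(measured, estimated))


if __name__ == "__main__":
    print(cn0_features([45.0, 38.0, 24.0, 30.0]))
    print(residual_sum_of_squares([20000010.0, 21000000.0],
                                  [20000007.0, 21000004.0]))
```

In practice these values would be fed to a classifier or compared against thresholds tuned on labeled data; the sketch only shows how the raw features are formed.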

2.2. Pseudo-Range

2.3. Satellite Elevations

2.4. Auto-Correlation Function

2.5. Combination of Multiple Indicators

- Signal strength (C/N0 value)

- Change rate of the received signal strength

- Pseudo-range residuals

- Spatial geometry

  - Azimuth distribution of the satellites

  - Satellite azimuth distribution proportion

  - Proportion of the number of satellites within a range of 90° of the azimuth

  - Proportion of the number of satellites within a range of 180° of the azimuth

  - Position Dilution of Precision (PDoP), Vertical Dilution of Precision (VDoP), Horizontal Dilution of Precision (HDoP)

- Time sequence

  - The number of visible satellites from time t2 to time t1

  - The ratio of satellites, the CNR (Carrier-to-Noise Ratio) of which decreases from time t2 to time t1

  - The ratio of satellites, the CNR of which holds from time t2 to time t1

  - The ratio of satellites, the CNR of which increases from time t2 to time t1

- Statistical

  - Number of satellites at the current time

  - Mean, variance, standard deviation, minimum, maximum, median, range, interquartile range, skewness, kurtosis of all visible satellites’ CNR

  - Mean, variance, standard deviation, minimum, maximum, median, range, interquartile range, skewness, kurtosis of visible satellites’ CNR under different sliding window lengths

  - Mean of PDoP, VDoP, and HDoP
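The time-sequence and statistical features listed above could be computed along the following lines. This is a hedged sketch rather than the cited authors' code: the function names are hypothetical, t2 is assumed to be the start of the interval and t1 its end, the 1 dB tolerance that separates "holds" from a real change is an assumed value, and each satellite's CNR change is classified as a decrease, a hold, or an increase. Population moments are used for variance, skewness, and kurtosis, which is also an assumption.

```python
from statistics import mean, median, pvariance, quantiles


def cnr_trend_ratios(cnr_t2, cnr_t1, tolerance_db=1.0):
    """Ratios of satellites whose CNR decreases, holds, or increases.

    cnr_t2, cnr_t1: dicts mapping satellite ID to CNR (dB-Hz) at the
    start (t2) and end (t1) of the interval. Only satellites visible at
    both times are counted. tolerance_db is an assumed dead band.
    """
    common = sorted(cnr_t2.keys() & cnr_t1.keys())
    counts = {"decrease": 0, "hold": 0, "increase": 0}
    for sat in common:
        delta = cnr_t1[sat] - cnr_t2[sat]
        if delta < -tolerance_db:
            counts["decrease"] += 1
        elif delta > tolerance_db:
            counts["increase"] += 1
        else:
            counts["hold"] += 1
    n = len(common)
    return {name: (c / n if n else 0.0) for name, c in counts.items()}


def cnr_statistics(cnr):
    """Descriptive statistics of the CNR values of the visible satellites."""
    n = len(cnr)
    if n < 2:
        raise ValueError("at least two CNR values are required")
    mu = mean(cnr)
    var = pvariance(cnr, mu)
    q1, _, q3 = quantiles(cnr, n=4)  # quartiles (default 'exclusive' method)
    m3 = sum((x - mu) ** 3 for x in cnr) / n  # third central moment
    m4 = sum((x - mu) ** 4 for x in cnr) / n  # fourth central moment
    return {
        "mean": mu,
        "variance": var,
        "std": var ** 0.5,
        "min": min(cnr),
        "max": max(cnr),
        "median": median(cnr),
        "range": max(cnr) - min(cnr),
        "iqr": q3 - q1,
        "skewness": m3 / var ** 1.5 if var > 0 else 0.0,
        "kurtosis": m4 / var ** 2 if var > 0 else 0.0,  # non-excess
    }


def sliding_window_statistics(series, window):
    """Apply cnr_statistics to every full window of a CNR time series."""
    if window < 2 or window > len(series):
        raise ValueError("window must be between 2 and len(series)")
    return [cnr_statistics(series[i:i + window])
            for i in range(len(series) - window + 1)]
```

Calling `sliding_window_statistics` with several window lengths gives one feature set per length, which mirrors the "different sliding window lengths" item in the list.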

2.6. Summary of GNSS Indicators

3. Vision-Based Context Indicators

3.1. Sky Extraction

3.2. Scene Analysis/Classification

3.3. Satellite Imagery

3.4. Aerial Photography

3.5. Combination of Vision-Based Techniques

3.6. Summary of Vision-Based Indicators

4. Context Detection Based on Other Sensors

5. Summary of the Different Solutions and Perspectives

6. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Groves, P.; Martin, H.; Voutsis, K.; Walter, D.; Wang, L. Context Detection, Categorization and Connectivity for Advanced Adaptive Integrated Navigation. In Proceedings of the 26th International Technical Meeting of the Satellite Division of the Institute of Navigation, ION GNSS 2013, Nashville, TN, USA, 16–20 September 2013; Volume 2, pp. 15–19.

- Groves, P.D.; Wang, L.; Walter, D.; Martin, H.; Voutsis, K.; Jiang, Z. The four key challenges of advanced multisensor navigation and positioning. In Proceedings of the 2014 IEEE/ION Position, Location and Navigation Symposium—PLANS 2014, Monterey, CA, USA, 5–8 May 2014; pp. 773–792.

- Gao, H.; Groves, P.D. Improving environment detection by behavior association for context-adaptive navigation. Navigation 2020, 67, 43–60.

- Frank, K.; Nadales, M.J.V.; Robertson, P.; Angermann, M. Reliable Real-Time Recognition of motion related human activities using MEMS inertial sensors. In Proceedings of the 23rd International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS 2010), Portland, OR, USA, 21–24 September 2010.

- Pei, L.; Chen, R.; Liu, J.; Kuusniemi, H.; Chen, Y.; Tenhunen, T. Using Motion-Awareness for the 3D Indoor Personal Navigation on a Smartphone. In Proceedings of the 24th International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS 2011), Portland, OR, USA, 20–23 September 2011; Volume 4.

- Esmaeili Kelishomi, A.; Garmabaki, A.; Bahaghighat, M.; Dong, J. Mobile User Indoor-Outdoor Detection Through Physical Daily Activities. Sensors 2019, 19, 511.

- Ali, M.; ElBatt, T.; Youssef, M. SenseIO: Realistic Ubiquitous Indoor Outdoor Detection System Using Smartphones. IEEE Sens. J. 2018, 18, 3684–3693.

- López-Salcedo, J.A.; Parro-Jimenez, J.; Seco-Granados, G. Multipath detection metrics and attenuation analysis using a GPS snapshot receiver in harsh environments. In Proceedings of the 2009 3rd European Conference on Antennas and Propagation, Berlin, Germany, 23–27 March 2009; pp. 3692–3696.

- Zhu, Y.; Luo, H.; Wang, Q.; Zhao, F.; Ning, B.; Ke, Q.; Zhang, C. A Fast Indoor/Outdoor Transition Detection Algorithm Based on Machine Learning. Sensors 2019, 19, 786.

- Attia, D.; Meurie, C.; Ruichek, Y.; Marais, J.; Flancquart, A. Image analysis based real time detection of satellites reception state. In Proceedings of the 13th International IEEE Conference on Intelligent Transportation Systems, Funchal, Madeira Island, Portugal, 19–22 September 2010; pp. 1651–1656.

- Gakne, P.; Petovello, M. Assessing image segmentation algorithms for sky identification in GNSS. In Proceedings of the 2015 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Banff, AB, Canada, 13–16 October 2015; pp. 1–7.

- Marais, J.; Meurie, C.; Attia, D.; Ruichek, Y.; Flancquart, A. Toward accurate localization in guided transport: Combining GNSS data and imaging information. Transp. Res. Part C Emerg. Technol. 2013, 43.

- Meguro, J.; Murata, T.; Takiguchi, J.; Amano, Y.; Hashizume, T. GPS Multipath Mitigation for Urban Area Using Omnidirectional Infrared Camera. IEEE Trans. Intell. Transp. Syst. 2009, 10, 22–30.

- Mubarak, O. Analysis of early late phase for multipath mitigation. In Proceedings of the 21st International Technical Meeting of the Satellite Division of the US Institute of Navigation, Savannah, GA, USA, 10–12 December 2008; pp. 16–19.

- Cleve, C.; Kelly, M.; Kearns, F.R.; Moritz, M. Classification of the wildland–urban interface: A comparison of pixel- and object-based classifications using high-resolution aerial photography. Comput. Environ. Urban Syst. 2008, 32, 317–326.

- Ghiasi, M.; Amirfattahi, R. Fast semantic segmentation of aerial images based on color and texture. In Proceedings of the Iranian Conference on Machine Vision and Image Processing, MVIP, Zanjan, Iran, 10–12 September 2013; pp. 324–327.

- Zhang, G.; Lei, T.; Cui, Y.; Jiang, P. A Dual-Path and Lightweight Convolutional Neural Network for High-Resolution Aerial Image Segmentation. ISPRS Int. J. Geo-Inf. 2019, 8, 582.

- Lim, E.H.; Suter, D. 3D terrestrial LIDAR classifications with super-voxels and multi-scale Conditional Random Fields. Comput.-Aided Des. 2009, 41, 701–710.

- Lin, T.; O’Driscoll, C.; Lachapelle, G. Development of a Context-Aware Vector-Based High-Sensitivity GNSS Software Receiver. Proc. Int. Tech. Meet. Inst. Navig. 2011, 2, 1043–1055.

- Gao, H.; Groves, P. Environmental Context Detection for Adaptive Navigation using GNSS Measurements from a Smartphone. Navigation 2018, 65, 99–116.

- Chen, K.; Tan, G. SatProbe: Low-energy and fast indoor/outdoor detection based on raw GPS processing. In Proceedings of the IEEE INFOCOM 2017—IEEE Conference on Computer Communications, Atlanta, GA, USA, 1–4 May 2017; pp. 1–9.

- Skournetou, D.; Lohan, E.S. Indoor location awareness based on the non-coherent correlation function for GNSS signals. In Proceedings of the Finnish Signal Processing Symposium, FINSIG’07, Oulu, Finland, 30 August 2007.

- Deng, J.; Zhang, J. Combining Multiple Precision-Boosted Classifiers for Indoor-Outdoor Scene Classification. Inform. Technol. Appl. 2005, 1, 720–725.

- Raja, R.; Roomi, S.M.M.; Dharmalakshmi, D.; Rohini, S. Classification of indoor/outdoor scene. In Proceedings of the 2013 IEEE International Conference on Computational Intelligence and Computing Research, Enathi, India, 26–28 December 2013; pp. 1–4.

- Tahir, W.; Majeed, A.; Rehman, T. Indoor/Outdoor Image Classification Using GIST Image Features and Neural Network Classifiers. In Proceedings of the 2015 12th International Conference on High-capacity Optical Networks and Enabling/Emerging Technologies (HONET), Islamabad, Pakistan, 21–23 December 2015.

- Wu, J.; Rehg, J.M. CENTRIST: A Visual Descriptor for Scene Categorization. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 1489–1501.

- Raja, R.; Roomi, S.M.M.; Dharmalakshmi, D. Robust indoor/outdoor scene classification. In Proceedings of the 2015 Eighth International Conference on Advances in Pattern Recognition (ICAPR), Kolkata, India, 4–7 January 2015; pp. 1–5.

- Lipowezky, U.; Vol, I. Indoor-outdoor detector for mobile phone cameras using gentle boosting. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition—Workshops, CVPRW 2010, San Francisco, CA, USA, 13–18 June 2010; pp. 31–38.

- Chen, C.; Ren, Y.; Kuo, C.C. Large-Scale Indoor/Outdoor Image Classification via Expert Decision Fusion (EDF). In Proceedings of the Computer Vision—ACCV 2014 Workshops, Singapore, 1–5 November 2014; pp. 426–442.

- Pillai, I.; Satta, R.; Fumera, G.; Roli, F. Exploiting Depth Information for Indoor-Outdoor Scene Classification. In Proceedings of the Image Analysis and Processing—ICIAP 2011, Ravenna, Italy, 14–16 September 2011; Volume 2, pp. 130–139.

- Meng, X.; Wang, Z.; Wu, L. Building global image features for scene recognition. Pattern Recognit. 2012, 45, 373–380.

- Ganesan, A.; Balasubramanian, A. Indoor versus outdoor scene recognition for navigation of a micro aerial vehicle using spatial color gist wavelet descriptors. Vis. Comput. Ind. Biomed. Art 2019, 2, 20.

- Balasubramanian, A.; Anitha, G. Indoor Scene Recognition for Micro Aerial Vehicles Navigation using Enhanced-GIST Descriptors. Def. Sci. J. 2018, 68, 129–137.

- Seco-Granados, G.; López-Salcedo, J.A.; Jimenez-Banos, D.; Lopez-Risueno, G. Challenges in Indoor Global Navigation Satellite Systems: Unveiling its core features in signal processing. IEEE Signal Process. Mag. 2012, 29, 108–131.

- Payne, A.; Singh, S. Indoor vs. outdoor scene classification in digital photographs. Pattern Recognit. 2005, 38, 1533–1545.

- Ma, C.; Jee, G.I.; MacGougan, G.; Lachapelle, G.; Bloebaum, S.; Cox, G.; Garin, L.; Shewfelt, J. GPS signal degradation modeling. In Proceedings of the International Technical Meeting of the Satellite Division of the Institute of Navigation, Salt Lake City, UT, USA, 11–14 September 2001.

- Lehner, A.; Steingaß, A. On land mobile satellite navigation performance degraded by multipath reception. In Proceedings of the 2007 European Navigation Conference (ENC-GNSS), ENC-GNSS 2007, Geneva, Switzerland, 29–31 May 2007.

- MacGougan, G.; Lachapelle, G.; Klukas, R.; Siu, K. Degraded GPS Signal Measurements with A Stand-Alone High Sensitivity Receiver. In Proceedings of the 2002 National Technical Meeting of the Institute of Navigation, San Diego, CA, USA, 28–30 September 2002.

- Gómez-Casco, D.; López-Salcedo, J.A.; Seco-Granados, G. Optimal Post-Detection Integration Technique for the Reacquisition of Weak GNSS Signals. IEEE Trans. Aerosp. Electron. Syst. 2019, 56, 2302–2311.

- Matera, E.R.; Garcia Peña, A.J.; Julien, O.S.; Milner, C.; Ekambi, B. Characterization of Line-of-Sight and Non-Line-of-Sight Pseudorange Multipath Errors in Urban Environment for GPS and Galileo. In ITM 2019, International Technical Meeting of The Institute of Navigation; ION: Reston, VA, USA, 2019; pp. 177–196.

- Tranquilla, J.M.; Carr, J.P. GPS Multipath Field Observations at Land and Water Sites. Navigation 1990, 37, 393–414.

- Fenton, P.; Jones, J. The Theory and Performance of NovAtel Inc.’s Vision Correlator. In Proceedings of the 18th International Technical Meeting of the Satellite Division of The Institute of Navigation, Long Beach, CA, USA, 13–16 September 2005.

- Bhuiyan, M.Z.H.; Lohan, E.S.; Renfors, M. Code Tracking Algorithms for Mitigating Multipath Effects in Fading Channels for Satellite-Based Positioning. EURASIP J. Adv. Signal Process. 2008, 2008, 863629.

- Sahmoudi, M.; Amin, M.G. Fast Iterative Maximum-Likelihood Algorithm (FIMLA) for Multipath Mitigation in the Next Generation of GNSS Receivers. IEEE Trans. Wirel. Commun. 2008, 7, 4362–4374.

- Spangenberg, M.; Julien, O.; Calmettes, V.; Duchâteau, G. Urban Navigation System for Automotive Applications Using HSGPS, Inertial and Wheel Speed Sensors. OATAO. 2008. Available online: https://oatao.univ-toulouse.fr/3183/ (accessed on 23 April 2008).

- Vincent, F.; Vilà-Valls, J.; Besson, O.; Medina, D.; Chaumette, E. Doppler-aided positioning in GNSS receivers—A performance analysis. Signal Process. 2020, 176, 107713.

- Azemi, G.; Senadji, B.; Boashash, B. Estimating the Ricean K-factor for mobile communication applications. In Proceedings of the Seventh International Symposium on Signal Processing and Its Applications, Paris, France, 1–4 July 2003; Volume 2, pp. 311–314.

- Kumar, G.; Rao, G.; Kumar, M. GPS Signal Short-Term Propagation Characteristics Modeling in Urban Areas for Precise Navigation Applications. Positioning 2013, 4, 192–199.

- Yozevitch, R.; Moshe, B.B.; Weissman, A. A Robust GNSS LOS/NLOS Signal Classifier. Navigation 2016, 63, 429–442.

- Piñana-Diaz, C.; Toledo-Moreo, R.; Bétaille, D.; Gómez-Skarmeta, A.F. GPS multipath detection and exclusion with elevation-enhanced maps. In Proceedings of the 2011 14th International IEEE Conference on Intelligent Transportation Systems (ITSC), Washington, DC, USA, 5–7 October 2011; pp. 19–24.

- Miura, S.; Hisaka, S.; Kamijo, S. GPS multipath detection and rectification using 3D maps. In Proceedings of the 16th International IEEE Conference on Intelligent Transportation Systems (ITSC 2013), The Hague, The Netherlands, 6–9 October 2013; pp. 1528–1534.

- Groves, P.; Jiang, Z.; Wang, L.; Ziebart, M. Intelligent Urban Positioning using Multi-Constellation GNSS with 3D Mapping and NLOS Signal Detection. In Proceedings of the 25th International Technical Meeting of the Satellite Division of the Institute of Navigation (ION GNSS 2012), Nashville, TN, USA, 17–21 September 2012; Volume 1, pp. 458–472.

- Peyraud, S.; Bétaille, D.; Renault, S.; Ortiz, M.; Mougel, F.; Meizel, D.; Peyret, F. About Non-Line-Of-Sight Satellite Detection and Exclusion in a 3D Map-Aided Localization Algorithm. Sensors 2013, 13, 829–847.

- Groves, P.; Jiang, Z. Height Aiding, C/N0 Weighting and Consistency Checking for GNSS NLOS and Multipath Mitigation in Urban Areas. J. Navig. 2013, 66, 653–669.

- Hsu, L. GNSS multipath detection using a machine learning approach. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; pp. 1–6.

- Groves, P. Shadow Matching: A New GNSS Positioning Technique for Urban Canyons. J. Navig. 2011, 64, 417–430.

- Wang, L.; Groves, P.D.; Ziebart, M. GNSS Shadow Matching Using a 3D Model of London in Urban Canyons. In Proceedings of the European Navigation Conference 2011, London, UK, 28 November–1 December 2011.

- Yozevitch, R.; Moshe, B.B. A Robust Shadow Matching Algorithm for GNSS Positioning. Navigation 2015, 62, 95–109.

- Pagot, J.B.; Thevenon, P.; Julien, O.; Gregoire, Y.; Amarillo-Fernandez, F.; Maillard, D. Estimation of GNSS Signals Nominal Distortions from Correlation and Chip Domain. In Proceedings of the 2015 International Technical Meeting of The Institute of Navigation, Dana Point, CA, USA, 26–28 January 2015.

- Egea, D.; Seco-Granados, G.; López-Salcedo, J.A. Comprehensive Overview of Quickest Detection Theory and its Application to GNSS Threat Detection. Giroskopiya Navig. 2016, 95, 76–97.

- Li, G.; Li, G.x.; Lv, J.; Chang, J.; Jie, X. A method of multipath detection in navigation receiver. In Proceedings of the 2009 International Conference on Wireless Communications Signal Processing, Nanjing, China, 13–15 November 2009; pp. 1–3.

- Mubarak, O. Performance comparison of multipath detection using early late phase in BPSK and BOC modulated signals. In Proceedings of the 2013 7th International Conference on Signal Processing and Communication Systems, ICSPCS 2013—Proceedings, Carrara, VIC, Australia, 16–18 December 2013.

- Xu, B.; Jia, H.; Luo, Y.; Hsu, L.T. Intelligent GPS L1 LOS/Multipath/NLOS Classifiers Based on Correlator-, RINEX- and NMEA-Level Measurements. Remote Sens. 2019, 11, 1851.

- Klebe, D.; Blatherwick, R.; Morris, V. Ground-based all-sky mid-infrared and visible imagery for purposes of characterizing cloud properties. Atmos. Meas. Tech. 2014, 7, 637.

- Dev, S.; Lee, Y.H.; Winkler, S. Color-Based Segmentation of Sky/Cloud Images From Ground-Based Cameras. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 231–242.

- Meguro, J.; Murata, T.; Amano, Y.; Hashizume, T.; Takiguchi, J.i. Development of a Positioning Technique for an Urban Area Using Omnidirectional Infrared Camera and Aerial Survey Data. Adv. Robot. 2008, 22, 731–747.

- Serrano, N.; Savakis, A.E.; Luo, J. Improved scene classification using efficient low-level features and semantic cues. Pattern Recognit. 2004, 37, 1773–1784.

- Stone, T.; Mangan, M.; Ardin, P.; Webb, B. Sky segmentation with ultraviolet images can be used for navigation. In Proceedings of the Conference: Robotics: Science and Systems 2014, Berkeley, CA, USA, 12–16 July 2014.

- Mihail, R.P.; Workman, S.; Bessinger, Z.; Jacobs, N. Sky segmentation in the wild: An empirical study. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–6.

- Tao, L.; Yuan, L.; Sun, J. SkyFinder: Attribute-based sky image search. ACM Trans. Graph. 2009, 28, 68.

- Liu, C.; Yuen, J.; Torralba, A. Nonparametric scene parsing: Label transfer via dense scene alignment. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1972–1979.

- Nafornita, C.; David, C.; Isar, A. Preliminary results on sky segmentation. In Proceedings of the International Symposium on Signals, Circuits and Systems (ISSCS), Iasi, Romania, 9–10 July 2015.

- Shytermeja, E.; Pena, A.G.; Julien, O. Proposed architecture for integrity monitoring of a GNSS/MEMS system with a Fisheye camera in urban environment. In Proceedings of the International Conference on Localization and GNSS 2014 (ICL-GNSS 2014), Helsinki, Finland, 24–26 June 2014; pp. 1–6.

- El Merabet, Y.; Ruichek, Y.; Ghaffarian, S.; Samir, Z.; Boujiha, T.; Touahni, R.; Messoussi, R. Horizon Line Detection from Fisheye Images Using Color Local Image Region Descriptors and Bhattacharyya Coefficient-Based Distance. In Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems, Lecce, Italy, 24–27 October 2016; Volume 10016.

- Chapman, L.; Thornes, J.E.; Muller, J.; McMuldroch, S. Potential Applications of Thermal Fisheye Imagery in Urban Environments. IEEE Geosci. Remote Sens. Lett. 2007, 4, 56–59.

- Wen, W.; Bai, X.; Kan, Y.C.; Hsu, L. Tightly Coupled GNSS/INS Integration via Factor Graph and Aided by Fish-Eye Camera. IEEE Trans. Veh. Technol. 2019, 68, 10651–10662.

- Tsai, Y.H.; Shen, X.; Lin, Z.; Sunkavalli, K.; Yang, M.H. Sky is not the limit: Semantic-aware sky replacement. ACM Trans. Graph. 2016, 35, 1–11.

- Deng, L.; Yang, M.; Qian, Y.; Wang, C.; Wang, B. CNN based semantic segmentation for urban traffic scenes using fisheye camera. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 231–236.

- Russell, B.; Torralba, A.; Murphy, K.; Freeman, W. LabelMe: A Database and Web-Based Tool for Image Annotation. Int. J. Comput. Vis. 2008, 77, 157–173.

- Blott, G.; Takami, M.; Heipke, C. Semantic Segmentation of Fisheye Images. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Böker, C.; Niemeijer, J.; Wojke, N.; Meurie, C.; Cocheril, Y. A System for Image-Based Non-Line-Of-Sight Detection Using Convolutional Neural Networks. In Proceedings of the IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 535–540.

- Khan, S.H.; Hayat, M.; Bennamoun, M.; Togneri, R.; Sohel, F.A. A Discriminative Representation of Convolutional Features for Indoor Scene Recognition. IEEE Trans. Image Process. 2016, 25, 3372–3383.

- Boutell, M.; Luo, J. Beyond pixels: Exploiting camera metadata for photo classification. Pattern Recognit. 2005, 38, 935–946.

- Boutell, M.; Luo, J. Bayesian fusion of camera metadata cues in semantic scene classification. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2004, Washington, DC, USA, 27 June–2 July 2004; Volume 2, pp. 1063–6919.

- Gupta, L.; Pathangay, V.; Patra, A.; Dyana, A.; Das, S. Indoor versus Outdoor Scene Classification Using Probabilistic Neural Network. EURASIP J. Adv. Signal Process. 2007, 2007, 1–10.

- Tao, L.; Kim, Y.H.; Kim, Y.T. An efficient neural network based indoor-outdoor scene classification algorithm. In Proceedings of the 2010 Digest of Technical Papers International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 9–13 January 2010; pp. 317–318.

- Vailaya, A.; Figueiredo, M.; Jain, A.; Zhang, H.J. Content-based hierarchical classification of vacation images. In Proceedings of the IEEE International Conference on Multimedia Computing and Systems, Florence, Italy, 7–11 June 1999; Volume 1, pp. 518–523.

- Serrano, N.; Savakis, A.; Luo, J. A computationally efficient approach to indoor/outdoor scene classification. In Proceedings of the Object Recognition Supported by User Interaction for Service Robots, Quebec City, QC, Canada, 11–15 August 2002; Volume 4, pp. 146–149.

- Szummer, M.; Picard, R.W. Indoor-outdoor image classification. In Proceedings of the 1998 IEEE International Workshop on Content-Based Access of Image and Video Database, Bombay, India, 3 January 1998; pp. 42–51.

- Luo, J.; Savakis, A. Indoor vs outdoor classification of consumer photographs using low-level and semantic features. In Proceedings of the 2001 International Conference on Image Processing, Thessaloniki, Greece, 7–10 October 2001; pp. 745–748.

- Kim, W.; Park, J.; Kim, C. A Novel Method for Efficient Indoor–Outdoor Image Classification. Signal Process. Syst. 2010, 61, 251–258.

- Tong, Z.; Shi, D.; Yan, B.; Wei, J. A Review of Indoor-Outdoor Scene Classification. In Proceedings of the 2017 2nd International Conference on Control, Automation and Artificial Intelligence (CAAI 2017), Sanya, China, 25–26 June 2017.

- Wu, R.; Wang, B.; Wang, W.; Yu, Y. Harvesting Discriminative Meta Objects with Deep CNN Features for Scene Classification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Zhou, B.; Lapedriza, A.; Xiao, J.; Torralba, A.; Oliva, A. Learning Deep Features for Scene Recognition using Places Database. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 487–495.

- Extended Scene Classification Image Database Version 2 (IITM-SCID2). Available online: http://www.cse.iitm.ac.in/~vplab/SCID/ (accessed on 4 July 2020).

- The Fifteen Scene Categories. Available online: https://figshare.com/articles/15-Scene_Image_Dataset/7007177 (accessed on 4 July 2020).

- SUN Database. Available online: https://groups.csail.mit.edu/vision/SUN/ (accessed on 4 July 2020).

- INRIA Holidays Dataset. Available online: http://lear.inrialpes.fr/people/jegou/data.php (accessed on 4 July 2020).

- Torralba’s Indoor Database. Available online: http://web.mit.edu/torralba/www/indoor.html (accessed on 4 July 2020).

- Quattoni, A.; Torralba, A. Recognizing indoor scenes. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 413–420.

- MIT Places Database. Available online: http://places.csail.mit.edu/index.html (accessed on 4 July 2020).

- Greene, M.R.; Oliva, A. Recognition of natural scenes from global properties: Seeing the forest without representing the trees. Cogn. Psychol. 2009, 58, 137–176.

- Oliva, A.; Torralba, A. Building the gist of a scene: The role of global image features in recognition. Prog. Brain Res. 2006, 155, 23–36.

- Oliva, A. Gist of the Scene. In Neurobiology of Attention; Elsevier: Amsterdam, The Netherlands, 2005; Volume 696, pp. 251–256.

- Oliva, A.; Torralba, A. Modeling the Shape of the Scene: A Holistic Representation of the Spatial Envelope. Int. J. Comput. Vis. 2001, 42, 145–175.

- Hu, Q.; Wenbin, W.; Xia, T.; Yu, Q.; Yang, P.; Li, Z.; Song, Q. Exploring the Use of Google Earth Imagery and Object-Based Methods in Land Use/Cover Mapping. Remote Sens. 2013, 5, 6026–6042.

- Kabir, S.; He, D.C.; Sanusi, M.A.; Wan Hussina, W.M.A. Texture analysis of IKONOS satellite imagery for urban land use and land cover classification. Imaging Sci. J. 2010, 58, 163–170.

- Tabib Mahmoudi, F.; Samadzadegan, F.; Reinartz, P. Object Recognition based on the Context Aware Decision Level Fusion in Multi Views Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 8, 12–22.

- Ok, A.O.; Senaras, C.; Yuksel, B. Automated Detection of Arbitrarily Shaped Buildings in Complex Environments From Monocular VHR Optical Satellite Imagery. IEEE Trans. Geosci. Remote. Sens. 2013, 51, 1701–1717.

- Maurya, R.; Gupta, P.; Shukla, A. Road extraction using K-Means clustering and morphological operations. In Proceedings of the 2011 IEEE International Conference on Image Information Processing (ICIIP), Brussels, Belgium, 11–14 September 2011; pp. 1–6.

- Maboudi, M.; Amini, J.; Hahn, M.; Saati, M. Road Network Extraction from VHR Satellite Images Using Context Aware Object Feature Integration and Tensor Voting. Remote Sens. 2016, 8, 637.

- Ardila, J.; Bijker, W.; Tolpekin, V.; Stein, A. Context–sensitive extraction of tree crown objects in urban areas using VHR satellite images. Int. J. Appl. Earth Obs. Geoinf. 2012, 15, 57–69.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. arXiv 2018, arXiv:1801.04381.

- Hu, S.; Ning, Q.; Chen, B.; Lei, Y.; Zhou, X.; Yan, H.; Zhao, C.; Tang, T.; Hu, R. Segmentation of Aerial Image with Multi-scale Feature and Attention Model. In Artificial Intelligence in China; Springer: Berlin/Heidelberg, Germany, 2020; pp. 58–66.

- Levinshtein, A.; Stere, A.; Kutulakos, K.N.; Fleet, D.J.; Dickinson, S.J.; Siddiqi, K. TurboPixels: Fast Superpixels Using Geometric Flows. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 2290–2297.

- Ahonen, T.; Matas, J.; He, C.; Pietikäinen, M. Rotation Invariant Image Description with Local Binary Pattern Histogram Fourier Features. In Image Analysis; Salberg, A.B., Hardeberg, J.Y., Jenssen, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 61–70.

- Frank, K.; Vera, M.J.; Robertson, P.; Angermann, M. Reliable Real-Time Recognition of Motion Related Human Activities Using MEMS Inertial Sensors. In Proceedings of the ION GNSS 2010, Portland, OR, USA, 21–24 September 2010; Volume 4.

- Ramanandan, A.; Chen, A.; Farrell, J.; Suvarna, S. Detection of stationarity in an inertial navigation system. In Proceedings of the 23rd International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS 2010), Portland, OR, USA, 21–24 September 2010; Volume 1, pp. 238–244.

- Martí, E.; Martín Gómez, D.; Garcia, J.; de la Escalera, A.; Molina, J.; Armingol, J. Context-Aided Sensor Fusion for Enhanced Urban Navigation. Sensors 2012, 12, 16802–16837.

- Saeedi, S.; Moussa, A.; El-Sheimy, N. Context-Aware Personal Navigation Using Embedded Sensor Fusion in Smartphones. Sensors 2014, 14, 5742–5767.

- Gusenbauer, D.; Isert, C.; Krösche, J. Self-contained indoor positioning on off-the-shelf mobile devices. In Proceedings of the 2010 International Conference on Indoor Positioning and Indoor Navigation, Zurich, Switzerland, 15–17 September 2010; pp. 1–9.

- Zhang, R.; Zakhor, A. Automatic identification of window regions on indoor point clouds using LiDAR and cameras. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Steamboat Springs, CO, USA, 24–26 March 2014; pp. 107–114.

- Börcs, A.; Nagy, B.; Benedek, C. Instant Object Detection in Lidar Point Clouds. IEEE Geosci. Remote Sens. Lett. 2017, 14, 992–996.

- Wang, A.; Lu, J.; Cai, J.; Wang, G.; Cham, T. Unsupervised Joint Feature Learning and Encoding for RGB-D Scene Labeling. IEEE Trans. Image Process. 2015, 24, 4459–4473.

- Gupta, S.; Arbelaez, P.; Girshick, R.; Malik, J. Indoor Scene Understanding with RGB-D Images: Bottom-up Segmentation, Object Detection and Semantic Segmentation. Int. J. Comput. Vis. 2014, 112, 133–149.

- Xu, W.; Chen, R.; Chu, T.; Kuang, L.; Yang, Y.; Li, X.; Liu, J.; Chen, Y. A context detection approach using GPS module and emerging sensors in smartphone platform. In Proceedings of the 2014 Ubiquitous Positioning Indoor Navigation and Location Based Service (UPINLBS), Corpus Christi, TX, USA, 20–21 November 2014; pp. 156–163.

- Li, S.; Qin, Z.; Song, H.; Yang, X.; Zhang, R. A lightweight and aggregated system for indoor/outdoor detection using smart devices. Future Gener. Comput. Syst. 2017.

- Shtar, G.; Shapira, B.; Rokach, L. Clustering Wi-Fi fingerprints for indoor–outdoor detection. Wirel. Netw. 2019, 25, 1341–1359.

- Zou, H.; Jiang, H.; Luo, Y.; Zhu, J.; Lu, X.; Xie, L. BlueDetect: An iBeacon-Enabled Scheme for Accurate and Energy-Efficient Indoor-Outdoor Detection and Seamless Location-Based Service. Sensors 2016, 16, 268.

- Zhou, P.; Zheng, Y.; Li, Z.; Shen, G. IODetector: A Generic Service for Indoor/Outdoor Detection. ACM Trans. Sens. Netw. 2012, 11, 361–362.

- Wang, W.; Chang, Q.; Li, Q.; Shi, Z.; Chen, W. Indoor-Outdoor Detection Using a Smart Phone Sensor. Sensors 2016, 16, 1563.

- Wang, L.; Roth, J.; Riedel, T.; Beigl, M.; Yao, J. AudioIO: Indoor Outdoor Detection on Smartphones via Active Sound Probing. In Proceedings of the EAI International Conference on IoT in Urban Space, Guimarães, Portugal, 21–22 November 2018; pp. 81–95.

- Sankaran, K.; Zhu, M.; Guo, X.F.; Ananda, A.L.; Chan, M.C.; Peh, L.S. Using mobile phone barometer for low-power transportation context detection. In Proceedings of the 12th ACM Conference on Embedded Network Sensor Systems, Memphis, TN, USA, 3–6 November 2014; pp. 191–205.

- Vanini, S.; Giordano, S. Adaptive context-agnostic floor transition detection on smart mobile devices. In Proceedings of the 2013 IEEE International Conference on Pervasive Computing and Communications Workshops (PERCOM Workshops), San Diego, CA, USA, 18–22 March 2013; pp. 2–7.

- Ashraf, I.; Hur, S.; Park, Y. MagIO: Magnetic Field Strength Based Indoor-Outdoor Detection with a Commercial Smartphone. Micromachines 2018, 9, 534.

- Krumm, J.; Hariharan, R. TempIO: Inside/Outside Classification with Temperature. In Proceedings of the Second International Workshop on Man-Machine Symbiotic Systems, Kyoto, Japan, 23–24 November 2004.

- Chen, G.; Hay, G.J.; Carvalho, L.M.T.; Wulder, M.A. Object-based change detection. Int. J. Remote Sens. 2012, 33, 4434–4457.

| Context | GNSS: Impact | GNSS: Adaptation | Vision: Impact | Vision: Adaptation | Articles |
|---|---|---|---|---|---|
| Urban canyon (narrow street with tall buildings) | Signal not available/high positioning errors | Tight-coupling, NLOS filtering, shadow matching | None | Point- or line-based feature extraction | [8,9,10,11,12,13] |
| Dense urban area (residential area) | High NLOS and multipath risk | Tight-coupling, NLOS filtering | None | Classical point- or line-based feature extraction | [10,11,12,13,14,15,16,17,18] |
| Low density urban area (suburban area) | Low NLOS risk, but multipath effect possible | Doppler aiding, multipath mitigation, loose-coupling | None | Classical point- or line-based feature extraction | [10,11,12,13,14,15,16,17,18] |
| Deep indoor (no line of sight to the exterior) | Signal not available | Vision/INS coupling | Lack of texture, few robust point features | Line-based feature extraction | [1,9,16,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33] |
| Light indoor (close to door, window, or balcony, also called semi-indoor) | Signal with high errors (due to both attenuation and reflection) | Vision/INS coupling | Lack of texture, few robust point features, glare effect | Line-based feature extraction, additional image processing step | [1,9,16,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33] |
| Open sky | Perfect quality of the signal | GNSS/INS loose-coupling | None | Switch off | [1,8,10,11,12,13,15,34] |
| Dense forest | Signal attenuated and multipath | Extension of coherent integration time, Doppler aiding, multipath mitigation | Unstructured environment | Combination of points and colour features | [15,16,17,18] |
| Light forest (couple of trees) | Signal attenuated | Extension of coherent integration time, Doppler aiding | Unstructured environment, glare effect | Combination of points and colour features, additional image processing step | [15,16,17,18] |
| Near water surface | Tremendous number of reflections | Doppler aiding, multipath mitigation | No texture and landmarks | Switch off | [16] |

| | Outdoor | Soft-Indoor | Intermediate | Deep-Indoor |
|---|---|---|---|---|
| C/N0 (dB-Hz) | 35–45 | 25–35 | 10–25 | <10 |
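
The ranges in the table above can be read as a simple threshold rule on C/N0. The following is a minimal sketch under stated assumptions: the table does not say which class a value of exactly 10, 25, or 35 dB-Hz belongs to, so the boundaries are assigned to the higher class here, and values above 45 dB-Hz are treated as outdoor. The function name `classify_cn0` and the label strings are illustrative and do not come from the reviewed works.

```python
def classify_cn0(cn0_dbhz: float) -> str:
    """Map a C/N0 value (dB-Hz) to an environment class.

    Thresholds follow the table; boundary handling is an assumption.
    """
    if cn0_dbhz >= 35.0:
        return "outdoor"        # 35-45 dB-Hz (and above, by assumption)
    if cn0_dbhz >= 25.0:
        return "soft-indoor"    # 25-35 dB-Hz
    if cn0_dbhz >= 10.0:
        return "intermediate"   # 10-25 dB-Hz
    return "deep-indoor"        # below 10 dB-Hz


# Example: classify a few hypothetical C/N0 readings.
for value in (42.0, 30.0, 18.5, 6.0):
    print(f"{value:5.1f} dB-Hz -> {classify_cn0(value)}")
```

A single-value rule like this is fragile in practice; it would normally be applied to an aggregate such as the mean C/N0 over tracked satellites rather than to one measurement.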

| Labelled Results | NMEA-Level: Classified LOS | NMEA-Level: Classified NLOS | RINEX-Level: Classified LOS | RINEX-Level: Classified NLOS | Correlator-Level: Classified LOS | Correlator-Level: Classified NLOS |
|---|---|---|---|---|---|---|
| LOS | 1194 | 98 | 1153 | 139 | 1279 | 17 |
| NLOS | 286 | 522 | 288 | 520 | 179 | 633 |
| F1 Score | 80.42 | | 78.11 | | 90.39 | |
| Overall Accuracy (%) | 81.71 | | 79.67 | | 90.70 | |
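
The overall accuracy row follows directly from the confusion counts: correctly classified samples divided by all samples. A short sketch that recomputes it from the table values is given below; the dictionary layout and the function name `overall_accuracy` are illustrative choices, not part of the original study.

```python
from typing import Dict, Tuple

# Counts per measurement level, in the order:
# (labelled LOS -> classified LOS, labelled LOS -> classified NLOS,
#  labelled NLOS -> classified LOS, labelled NLOS -> classified NLOS)
CONFUSION: Dict[str, Tuple[int, int, int, int]] = {
    "NMEA": (1194, 98, 286, 522),
    "RINEX": (1153, 139, 288, 520),
    "Correlator": (1279, 17, 179, 633),
}


def overall_accuracy(los_los: int, los_nlos: int,
                     nlos_los: int, nlos_nlos: int) -> float:
    """Return the percentage of samples whose class was predicted correctly."""
    correct = los_los + nlos_nlos
    total = los_los + los_nlos + nlos_los + nlos_nlos
    return 100.0 * correct / total


for level, counts in CONFUSION.items():
    print(f"{level}: {overall_accuracy(*counts):.2f} %")
```

Running this gives 81.71 %, 79.67 %, and 90.70 %, matching the table. The F1 row is not recomputed here because the table does not state which averaging convention was used.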

| Algorithm | Average Processing Time Per Image (s) | Accuracy (%): Sunny | Accuracy (%): Cloudy |
|---|---|---|---|
| Otsu | 0.015 | 80.8 | 94.7 |
| Mean shift | 35.5 | 55.4 | 90.5 |
| HMRF-EM | 73.9 | 36.3 | 82.7 |
| Graph cut | 1.8 | 59.8 | 82.8 |
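
Otsu's method, the fastest entry in the table above, chooses the grey-level threshold that maximises the between-class variance of the two resulting pixel groups. The sketch below is a plain-Python illustration of that textbook procedure, not the implementation benchmarked in the table. It assumes 8-bit grey levels supplied as a flat sequence, and the function name `otsu_threshold` is illustrative.

```python
from typing import Sequence


def otsu_threshold(pixels: Sequence[int], levels: int = 256) -> int:
    """Return the threshold t that maximises between-class variance.

    Pixels with value <= t form one class, pixels > t the other.
    """
    # Histogram of grey levels.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0      # number of pixels in the lower class
    sum0 = 0    # sum of grey levels in the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue            # lower class still empty
        w1 = total - w0
        if w1 == 0:
            break               # upper class empty from here on
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance, up to a constant factor 1 / total**2.
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


# Example: a synthetic bimodal "image" with dark and bright pixels.
image = [50] * 100 + [200] * 100
t = otsu_threshold(image)
bright_mask = [p > t for p in image]
print(t, sum(bright_mask))
```

Treating the brighter class as sky is an assumption that depends on illumination, which is consistent with the accuracy gap between sunny and cloudy conditions reported in the table.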

| Sensor | Indicators/Context | Open Sky | Low-Density Urban | Dense Urban | Urban Canyon | Light Indoor | Deep Indoor | Light Forest | Dense Forest | Water |
|---|---|---|---|---|---|---|---|---|---|---|
| GNSS | C/N0 | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ? | ? | ✘ |
| GNSS | K-Rician | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ? | ? | ✘ |
| GNSS | Pseudo-range | ? | ? | ? | ? | ? | ? | ? | ? | ✘ |
| GNSS | Satellite elevation | ? | ? | ? | ? | ? | ? | ? | ? | ✘ |
| Vision | Sky extraction | ✔ | ? | ? | ? | ✔ | ✔ | ? | ? | ✘ |
| Vision | No. of NLOS satellites | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✘ |
| Vision | Aerial photography | ✔ | ? | ? | ? | ? | ? | ✔ | ✔ | ✔ |
| Vision | Scene classification | ✔ | ✘ | ✘ | ✘ | ✔ | ✔ | ✘ | ✘ | ✘ |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Feriol, F.; Vivet, D.; Watanabe, Y. A Review of Environmental Context Detection for Navigation Based on Multiple Sensors. Sensors 2020, 20, 4532. https://doi.org/10.3390/s20164532
