Multi-Modality Emotion Recognition Model with GAT-Based Multi-Head Inter-Modality Attention

Abstract

1. Introduction

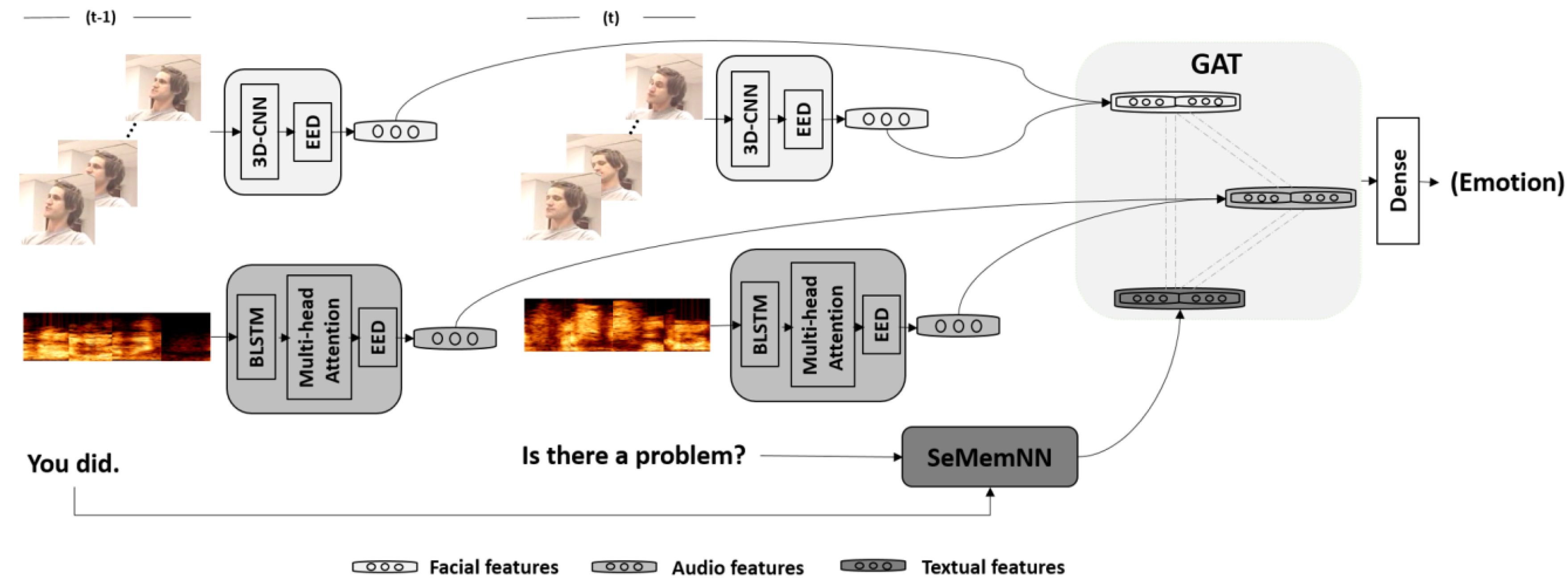

- We propose a strategy for augmenting audio samples based on the entropy weight method.

- We propose an emotion encoder-decoder to select decisive timesteps.

- We propose a multi-modality emotion recognition model that combines visual, audio, and text modalities.

- We propose a decision-level fusion strategy with a graph attention network.
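The first contribution relies on the entropy weight method, a standard technique for turning a table of scores into per-criterion weights. Criteria whose values are spread unevenly across samples have low entropy, so they receive larger weights. The sketch below implements only this generic weighting step in plain Python. This section does not say how the paper applies the resulting weights to audio augmentation. Treating rows as candidate audio segments and columns as features is a hypothetical framing, and the function name `entropy_weights` is illustrative.

```python
import math


def entropy_weights(matrix):
    """Entropy weight method: one weight per column of an n-by-m matrix.

    Rows are samples and columns are criteria. All values must be non-negative,
    every column must have a positive sum, and there must be at least two rows.
    """
    n, m = len(matrix), len(matrix[0])
    k = 1.0 / math.log(n)  # scales each column entropy into [0, 1]
    divergences = []
    for j in range(m):
        column = [row[j] for row in matrix]
        total = sum(column)
        props = [x / total for x in column]  # share of column j held by each sample
        # Shannon entropy of the column, with 0 * log(0) treated as 0.
        entropy = -k * sum(p * math.log(p) for p in props if p > 0)
        # Low entropy means uneven values, i.e. a more informative criterion.
        divergences.append(max(0.0, 1.0 - entropy))
    total_div = sum(divergences)
    if total_div == 0.0:
        return [1.0 / m] * m  # every column uniform: fall back to equal weights
    return [d / total_div for d in divergences]


# Hypothetical example: 3 candidate audio segments scored on 2 features.
segments = [[1, 2], [1, 4], [1, 6]]
weights = entropy_weights(segments)
print([round(x, 3) for x in weights])  # the constant first column gets (almost) no weight
```

The clamp `max(0.0, ...)` only absorbs floating-point noise for perfectly uniform columns. It does not change the method.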

2. Related Works

2.1. Emotion Recognition with Deep Learning Approach

2.1.1. Facial Expression Recognition (FER)

2.1.2. Speech Emotion Recognition (SER)

2.1.3. Text Emotion Recognition

2.2. Multi-Tasks

2.3. Multi-Modality

2.4. Graph Neural Networks

3. Proposed Methods

3.1. Preprocessing

3.2. Emotion Encoder-Decoder (EED)

3.3. Model

4. Experiment

4.1. Dataset

4.2. Setting

4.3. Baseline

- Without audio sample augmentation: We trained the audio model both with and without the augmented samples and compared their performance.

- Replacing EED with LSTM: The internal mechanism of our proposed EED resembles that of an LSTM, but it adds trainable matrices U that analyze the time-series information and learn a linear transformation for each timestep. For comparison, we therefore trained a model in which the EED was replaced with a bidirectional LSTM.

- Comparing SeMemNN to BERT: Although BERT's strong performance in text classification is well established, few studies have applied it to emotion recognition from dialogue text. In our study, we adopted SeMemNN, which performs well when trained on small samples, and compared its performance with that of a pre-trained BERT [53].

- DialogueRNN [23]: This recurrent network uses two GRUs to track individual speaker states while taking the global context of the conversation into account, and a third GRU to track the flow of emotional states.

- DialogueGCN [27]: This method employs a graph convolutional network (GCN) to model the self- and inter-speaker dependencies of the interlocutors together with contextual information.
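This section does not reproduce the EED equations, so the sketch below covers only the comparison baseline. That baseline is a standard bidirectional LSTM that returns, at every timestep, the concatenation of a forward hidden state and a backward hidden state. The gate layout follows the common LSTM formulation. The class and function names, the layer sizes and the uniform initialization are illustrative assumptions. The extra per-timestep matrices U that distinguish the EED are deliberately left out.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


class LSTMCell:
    """Standard LSTM cell: input (i), forget (f), output (o) gates and candidate (g)."""

    GATES = ("i", "f", "o", "g")

    def __init__(self, input_size, hidden_size, rng):
        def init(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.hidden_size = hidden_size
        self.W = {k: init(hidden_size, input_size) for k in self.GATES}   # input weights
        self.R = {k: init(hidden_size, hidden_size) for k in self.GATES}  # recurrent weights
        self.b = {k: [0.0] * hidden_size for k in self.GATES}

    def step(self, x, h, c):
        pre = {
            k: [wx + rh + b for wx, rh, b in zip(matvec(self.W[k], x), matvec(self.R[k], h), self.b[k])]
            for k in self.GATES
        }
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        o = [sigmoid(v) for v in pre["o"]]
        cand = [math.tanh(v) for v in pre["g"]]
        c_new = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, cand)]  # c_t = f*c_{t-1} + i*g
        h_new = [ov * math.tanh(cv) for ov, cv in zip(o, c_new)]               # h_t = o*tanh(c_t)
        return h_new, c_new

    def run(self, xs):
        h, c = [0.0] * self.hidden_size, [0.0] * self.hidden_size
        outputs = []
        for x in xs:
            h, c = self.step(x, h, c)
            outputs.append(h)
        return outputs


def bidirectional_lstm(xs, forward_cell, backward_cell):
    """Concatenate forward and time-reversed backward hidden states at every timestep."""
    fwd = forward_cell.run(xs)
    bwd = backward_cell.run(xs[::-1])[::-1]  # re-align backward states with the original time order
    return [hf + hb for hf, hb in zip(fwd, bwd)]


rng = random.Random(0)
frames = [[rng.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(5)]  # 5 timesteps, 4 features
states = bidirectional_lstm(frames, LSTMCell(4, 3, rng), LSTMCell(4, 3, rng))
print(len(states), len(states[0]))  # 5 6
```

Each direction uses its own cell rather than shared weights. This matches the usual bidirectional setup, in which the forward and backward passes have separate parameters.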

5. Results

5.1. Speech Emotion Recognition

5.2. Facial Expression Recognition

5.3. Text Emotion Recognition

5.4. Multi-Modality

6. Discussions

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Scheutz, M.; Schermerhorn, P.; Kramer, J.; Anderson, D. First steps toward natural human-like HRI. Auton. Robot. 2007, 22, 411–423.

2. Gonsior, B.; Sosnowski, S.; Mayer, C.; Blume, J.; Radig, B.; Wollherr, D.; Kühnlenz, K. Improving aspects of empathy and subjective performance for HRI through mirroring facial expressions. In Proceedings of the 2011 RO-MAN, Atlanta, GA, USA, 31 July–3 August 2011; pp. 350–356.

3. Fu, C.; Yoshikawa, Y.; Iio, T.; Ishiguro, H. Sharing Experiences to Help a Robot Present Its Mind and Sociability. Int. J. Soc. Robot. 2020, 1–12.

4. Byeon, Y.H.; Kwak, K.C. Facial expression recognition using 3D convolutional neural network. Int. J. Adv. Comput. Sci. Appl. 2014, 5.

5. Zhang, S.; Pan, X.; Cui, Y.; Zhao, X.; Liu, L. Learning affective video features for facial expression recognition via hybrid deep learning. IEEE Access 2019, 7, 32297–32304.

6. Lotfian, R.; Busso, C. Curriculum learning for speech emotion recognition from crowdsourced labels. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 815–826.

7. Fu, C.; Dissanayake, T.; Hosoda, K.; Maekawa, T.; Ishiguro, H. Similarity of Speech Emotion in Different Languages Revealed by a Neural Network with Attention. In Proceedings of the 2020 IEEE 14th International Conference on Semantic Computing (ICSC), San Diego, CA, USA, 3–5 February 2020; pp. 381–386.

8. Ahmed, F.; Gavrilova, M.L. Two-layer feature selection algorithm for recognizing human emotions from 3D motion analysis. In Proceedings of the Computer Graphics International Conference, Calgary, AB, Canada, 17–20 June 2019; Springer: Cham, Switzerland, 2019; pp. 53–67.

9. Ajili, I.; Mallem, M.; Didier, J.Y. Human motions and emotions recognition inspired by LMA qualities. Vis. Comput. 2019, 35, 1411–1426.

10. Hazarika, D.; Poria, S.; Zimmermann, R.; Mihalcea, R. Emotion Recognition in Conversations with Transfer Learning from Generative Conversation Modeling. arXiv 2019, arXiv:1910.04980.

11. Chetty, G.; Wagner, M.; Goecke, R. A multilevel fusion approach for audiovisual emotion recognition. In Proceedings of the AVSP, Moreton Island, Australia, 26–29 September 2008; pp. 115–120.

12. Ratliff, M.S.; Patterson, E. Emotion recognition using facial expressions with active appearance models. In Proceedings of the HRI, Amsterdam, The Netherlands, 12 March 2008.

13. Wang, K.; An, N.; Li, B.N.; Zhang, Y.; Li, L. Speech emotion recognition using Fourier parameters. IEEE Trans. Affect. Comput. 2015, 6, 69–75.

14. Chao, L.; Tao, J.; Yang, M.; Li, Y. Improving generation performance of speech emotion recognition by denoising autoencoders. In Proceedings of the 9th International Symposium on Chinese Spoken Language Processing, Singapore, 12–14 September 2014; pp. 341–344.

15. Costantini, G.; Iaderola, I.; Paoloni, A.; Todisco, M. EMOVO corpus: An Italian emotional speech database. In Proceedings of the International Conference on Language Resources and Evaluation (LREC 2014), Reykjavik, Iceland, 26–31 May 2014; European Language Resources Association (ELRA): Reykjavik, Iceland, 2014; pp. 3501–3504.

16. Battocchi, A.; Pianesi, F.; Goren-Bar, D. DaFEx: Database of facial expressions. In Proceedings of the International Conference on Intelligent Technologies for Interactive Entertainment, Madonna di Campiglio, Italy, 30 November–2 December 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 303–306.

17. Pan, S.; Tao, J.; Li, Y. The CASIA audio emotion recognition method for audio/visual emotion challenge 2011. In Proceedings of the International Conference on Affective Computing and Intelligent Interaction, Memphis, TN, USA, 9–12 October 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 388–395.

18. Satt, A.; Rozenberg, S.; Hoory, R. Efficient Emotion Recognition from Speech Using Deep Learning on Spectrograms. In Proceedings of the Interspeech, Stockholm, Sweden, 20–24 August 2017; pp. 1089–1093.

19. Tripathi, S.; Tripathi, S.; Beigi, H. Multi-Modal Emotion Recognition on IEMOCAP with Neural Networks. arXiv 2018, arXiv:1804.05788.

20. Busso, C.; Bulut, M.; Lee, C.C.; Kazemzadeh, A.; Mower, E.; Kim, S.; Chang, J.N.; Lee, S.; Narayanan, S.S. IEMOCAP: Interactive emotional dyadic motion capture database. Lang. Resour. Eval. 2008, 42, 335.

21. Asghar, M.A.; Khan, M.J.; Amin, Y.; Rizwan, M.; Rahman, M.; Badnava, S.; Mirjavadi, S.S. EEG-Based Multi-Modal Emotion Recognition using Bag of Deep Features: An Optimal Feature Selection Approach. Sensors 2019, 19, 5218.

22. Tsiourti, C.; Weiss, A.; Wac, K.; Vincze, M. Multimodal integration of emotional signals from voice, body, and context: Effects of (in)congruence on emotion recognition and attitudes towards robots. Int. J. Soc. Robot. 2019, 11, 555–573.

23. Majumder, N.; Poria, S.; Hazarika, D.; Mihalcea, R.; Gelbukh, A.; Cambria, E. DialogueRNN: An attentive RNN for emotion detection in conversations. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 6818–6825.

24. Le, D.; Aldeneh, Z.; Provost, E.M. Discretized Continuous Speech Emotion Recognition with Multi-Task Deep Recurrent Neural Network. In Proceedings of the Interspeech, Stockholm, Sweden, 20–24 August 2017; pp. 1108–1112.

25. Sahu, G. Multimodal Speech Emotion Recognition and Ambiguity Resolution. arXiv 2019, arXiv:1904.06022.

26. Li, J.L.; Lee, C.C. Attentive to Individual: A Multimodal Emotion Recognition Network with Personalized Attention Profile. In Proceedings of the Interspeech, Graz, Austria, 15–19 September 2019; pp. 211–215.

27. Ghosal, D.; Majumder, N.; Poria, S.; Chhaya, N.; Gelbukh, A. DialogueGCN: A graph convolutional neural network for emotion recognition in conversation. arXiv 2019, arXiv:1908.11540.

28. Fasel, B. Robust face analysis using convolutional neural networks. In Proceedings of the Object Recognition Supported by User Interaction for Service Robots, Quebec City, QC, Canada, 11–15 August 2002; Volume 2, pp. 40–43.

29. Fasel, B. Head-pose invariant facial expression recognition using convolutional neural networks. In Proceedings of the Fourth IEEE International Conference on Multimodal Interfaces, Pittsburgh, PA, USA, 16 October 2002; pp. 529–534.

30. Qawaqneh, Z.; Mallouh, A.A.; Barkana, B.D. Deep convolutional neural network for age estimation based on VGG-face model. arXiv 2017, arXiv:1709.01664.

31. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

32. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3D convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

33. Prasanna Teja Reddy, S.; Teja Karri, S.; Ram Dubey, S.; Mukherjee, S. Spontaneous Facial Micro-Expression Recognition using 3D Spatiotemporal Convolutional Neural Networks. arXiv 2019, arXiv:1904.01390.

34. Li, H.; Liu, Q.; Wei, X.; Chai, Z.; Chen, W. Facial Expression Recognition: Disentangling Expression Based on Self-attention Conditional Generative Adversarial Nets. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Xi’an, China, 8–11 November 2019; Springer: Cham, Switzerland, 2019; pp. 725–735.

35. Du, H.; Zheng, H.; Yu, M. Facial Expression Recognition Based on Region-Wise Attention and Geometry Difference. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Guangzhou, China, 23–26 November 2018.

36. Tzinis, E.; Potamianos, A. Segment-based speech emotion recognition using recurrent neural networks. In Proceedings of the 2017 Seventh International Conference on Affective Computing and Intelligent Interaction (ACII), San Antonio, TX, USA, 23–26 October 2017; pp. 190–195.

37. Rao, K.S.; Koolagudi, S.G.; Vempada, R.R. Emotion recognition from speech using global and local prosodic features. Int. J. Speech Technol. 2013, 16, 143–160.

38. Cao, H.; Benus, S.; Gur, R.C.; Verma, R.; Nenkova, A. Prosodic cues for emotion: Analysis with discrete characterization of intonation. In Proceedings of the 7th International Conference on Speech Prosody, Dublin, Ireland, 20–23 May 2014; pp. 130–134.

39. An, N.; Verma, P. Convoluted Feelings Convolutional and Recurrent Nets for Detecting Emotion From Audio Data; Technical Report; Stanford University: Stanford, CA, USA, 2015.

40. Trigeorgis, G.; Ringeval, F.; Brueckner, R.; Marchi, E.; Nicolaou, M.A.; Schuller, B.; Zafeiriou, S. Adieu features? End-to-end speech emotion recognition using a deep convolutional recurrent network. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 5200–5204.

41. Zahiri, S.M.; Choi, J.D. Emotion detection on TV show transcripts with sequence-based convolutional neural networks. In Proceedings of the Workshops at the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

42. Köper, M.; Kim, E.; Klinger, R. IMS at EmoInt-2017: Emotion intensity prediction with affective norms, automatically extended resources and deep learning. In Proceedings of the 8th Workshop on Computational Approaches to Subjectivity, Sentiment and Social Media Analysis, Copenhagen, Denmark, 8 September 2017; pp. 50–57.

43. Li, P.; Li, J.; Sun, F.; Wang, P. Short Text Emotion Analysis Based on Recurrent Neural Network. In ICIE ’17: Proceedings of the 6th International Conference on Information Engineering; Association for Computing Machinery: New York, NY, USA, 2017; pp. 1–5.

44. Hazarika, D.; Poria, S.; Zadeh, A.; Cambria, E.; Morency, L.P.; Zimmermann, R. Conversational memory network for emotion recognition in dyadic dialogue videos. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; Volume 1, pp. 2122–2132.

45. Zhang, Z.; Wu, B.; Schuller, B. Attention-augmented end-to-end multi-task learning for emotion prediction from speech. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 6705–6709.

46. Zhao, H.; Han, Z.; Wang, R. Speech Emotion Recognition Based on Multi-Task Learning. In Proceedings of the 2019 IEEE 5th Intl Conference on Big Data Security on Cloud (BigDataSecurity), IEEE Intl Conference on High Performance and Smart Computing, (HPSC) and IEEE Intl Conference on Intelligent Data and Security (IDS), Washington, DC, USA, 27–29 May 2019; pp. 186–188.

47. Kollias, D.; Zafeiriou, S. Expression, Affect, Action Unit Recognition: Aff-Wild2, Multi-Task Learning and ArcFace. arXiv 2019, arXiv:1910.04855.

48. Xia, R.; Liu, Y. Leveraging valence and activation information via multi-task learning for categorical emotion recognition. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015; pp. 5301–5305.

49. Zhang, B.; Provost, E.M.; Essl, G. Cross-corpus acoustic emotion recognition from singing and speaking: A multi-task learning approach. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 5805–5809.

50. Xia, R.; Liu, Y. A multi-task learning framework for emotion recognition using 2D continuous space. IEEE Trans. Affect. Comput. 2015, 8, 3–14.

51. Zhou, J.; Chen, X.; Yang, D. Multimodel Music Emotion Recognition Using Unsupervised Deep Neural Networks. In Proceedings of the 6th Conference on Sound and Music Technology (CSMT), Xiamen, China, 12–13 November 2018; Springer: Singapore, 2019; pp. 27–39.

52. Zhang, T.; Wang, X.; Xu, X.; Chen, C.P. GCB-Net: Graph convolutional broad network and its application in emotion recognition. IEEE Trans. Affect. Comput. 2019.

53. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

54. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

55. Fu, C.; Liu, C.; Ishi, C.; Yoshikawa, Y.; Ishiguro, H. SeMemNN: A Semantic Matrix-Based Memory Neural Network for Text Classification. In Proceedings of the 2020 IEEE 14th International Conference on Semantic Computing (ICSC), San Diego, CA, USA, 3–5 February 2020; pp. 123–127.

56. Ying, W.; Xiang, R.; Lu, Q. Improving Multi-label Emotion Classification by Integrating both General and Domain Knowledge. In Proceedings of the 5th Workshop on Noisy User-Generated Text (W-NUT 2019), Hong Kong, China, 3–4 November 2019; p. 316.

57. Kant, N.; Puri, R.; Yakovenko, N.; Catanzaro, B. Practical Text Classification With Large Pre-Trained Language Models. arXiv 2018, arXiv:1812.01207.

| Notation | Description | Value |
|---|---|---|
| m | Mel scale | 384 |
| n | length of Mel spectrogram | 256 |
|  | number of audio features | 256 |
|  | number of facial features | 64 |
|  | number of hidden emotion features | 64 |
|  | number of EED cells | 128 |
| K | attention heads for GAT | 8 |
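The parameter table sets the number of GAT attention heads to K = 8. The sketch below shows a standard multi-head graph attention layer in the style of Veličković et al. It is applied to a hypothetical fully connected graph with self-loops, whose three nodes stand for the audio, visual and text representations. Each head works in four steps:

- It projects the nodes with a shared matrix W.
- It scores each neighbour pair with LeakyReLU(a^T [W h_i || W h_j]).
- It normalizes the scores with a softmax over the neighbourhood.
- It aggregates the projected neighbours using those weights.

The 64-dimensional node inputs, the per-head size of 8, the Gaussian initialization and all helper names are assumptions. This section does not state how the paper builds its graph or turns the fused node features into a decision.

```python
import math
import random


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def elu(x):
    return x if x > 0 else math.exp(x) - 1.0


def softmax(xs):
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def gat_head(features, neighbors, W, a):
    """One graph-attention head; returns aggregated node vectors and attention weights."""
    z = [matvec(W, h) for h in features]  # shared linear projection W h_i
    out_dim = len(W)
    outputs, attention = [], []
    for i, nbrs in enumerate(neighbors):
        # e_ij = LeakyReLU(a^T [W h_i || W h_j]) for each neighbour j of node i
        scores = [leaky_relu(sum(ak * v for ak, v in zip(a, z[i] + z[j]))) for j in nbrs]
        alpha = softmax(scores)  # attention coefficients over the neighbourhood of i
        outputs.append([sum(alpha[k] * z[j][d] for k, j in enumerate(nbrs)) for d in range(out_dim)])
        attention.append(alpha)
    return outputs, attention


def multi_head_gat(features, neighbors, heads, concat=True):
    """Run K heads; concatenate their ELU outputs, or average them when concat=False."""
    results = [gat_head(features, neighbors, W, a) for W, a in heads]
    n = len(features)
    if concat:
        fused = [[elu(v) for out, _ in results for v in out[i]] for i in range(n)]
    else:
        dim = len(results[0][0][0])
        fused = [[sum(out[i][d] for out, _ in results) / len(results) for d in range(dim)]
                 for i in range(n)]
    return fused, [att for _, att in results]


def random_head(in_dim, out_dim, rng):
    """Hypothetical random initialisation of one head's W (out x in) and a (2 * out)."""
    W = [[rng.gauss(0.0, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]
    a = [rng.gauss(0.0, 0.1) for _ in range(2 * out_dim)]
    return W, a


rng = random.Random(0)
IN_DIM, HEAD_DIM, K = 64, 8, 8  # K = 8 from the table; the two sizes are assumptions
nodes = [[rng.gauss(0.0, 1.0) for _ in range(IN_DIM)] for _ in range(3)]  # audio, visual, text
neighbors = [[0, 1, 2] for _ in range(3)]  # fully connected graph with self-loops (assumption)
heads = [random_head(IN_DIM, HEAD_DIM, rng) for _ in range(K)]
fused, attention = multi_head_gat(nodes, neighbors, heads)
print(len(fused), len(fused[0]))  # 3 64
```

For hidden layers, the usual choice is to concatenate the heads, with an ELU applied per head. For an output layer, the usual choice is to average them. With `concat=False`, the function returns the plain average and leaves any final nonlinearity to the caller.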

| Methods | Happy | Sad | Neutral | Angry | Excited | Frustrated | Acc.(w) | F1(w) |
|---|---|---|---|---|---|---|---|---|
| AM | 44.05 | 72.87 | 47.95 | 60.00 | 58.25 | 53.74 | 56.30 | 55.73 |
| AM | 40.38 | 57.75 | 47.16 | 59.17 | 66.45 | 44.07 | 52.11 | 51.21 |
| AM | 35.90 | 65.87 | 49.10 | 67.02 | 56.94 | 46.77 | 54.04 | 53.34 |
| AM | 42.11 | 65.41 | 37.30 | 58.60 | 52.53 | 38.60 | 47.87 | 46.91 |
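This section reports Acc.(w) and F1(w) without defining them. A common convention in IEMOCAP work, assumed here, is the following:

- Weighted accuracy is the overall fraction of correctly classified utterances.
- Weighted F1 is the per-class F1 averaged with weights proportional to each class's number of true samples.

The sketch below computes both from a confusion matrix. The matrix is a toy example, not data from the paper. This section also does not say which per-class metric the emotion columns report.

```python
def weighted_metrics(confusion):
    """Overall accuracy and support-weighted F1 from a confusion matrix.

    confusion[t][p] counts samples whose true class is t and predicted class is p.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    accuracy = sum(confusion[c][c] for c in range(n_classes)) / total
    per_class_f1, weighted_f1 = [], 0.0
    for c in range(n_classes):
        tp = confusion[c][c]
        support = sum(confusion[c])                                 # true samples of class c
        predicted = sum(confusion[t][c] for t in range(n_classes))  # samples predicted as c
        precision = tp / predicted if predicted else 0.0
        recall = tp / support if support else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class_f1.append(f1)
        weighted_f1 += f1 * support / total  # weight each class by its share of true samples
    return accuracy, weighted_f1, per_class_f1


# Toy two-class example (not data from the paper).
acc, f1_w, f1_per_class = weighted_metrics([[3, 1], [0, 4]])
print(round(acc, 4), round(f1_w, 4))  # 0.875 0.873
```

Weighting by support means frequent classes dominate F1(w). This is one reason the per-class columns are worth reading alongside the weighted scores.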

| Methods | Happy | Sad | Neutral | Angry | Excited | Frustrated | Acc.(w) | F1(w) |
|---|---|---|---|---|---|---|---|---|
| VM | 37.11 | 52.63 | 60.43 | 48.81 | 56.28 | 49.04 | 52.29 | 51.52 |
| VM | 50.00 | 47.38 | 56.42 | 42.64 | 46.59 | 55.66 | 50.81 | 48.18 |

| Methods | Happy | Sad | Neutral | Angry | Excited | Frustrated | Acc.(w) | F1(w) |
|---|---|---|---|---|---|---|---|---|
| TM | 48.24 | 55.51 | 46.10 | 61.24 | 56.22 | 48.13 | 52.99 | 51.51 |
| TM | 34.43 | 44.29 | 30.76 | 31.90 | 55.13 | 38.79 | 39.62 | 36.13 |

| Methods | Happy | Sad | Neutral | Angry | Excited | Frustrated | Acc.(w) | F1(w) |
|---|---|---|---|---|---|---|---|---|
| DialogueRNN | 25.69 | 75.10 | 58.59 | 64.71 | 80.27 | 61.15 | 63.40 | 62.75 |
| DialogueGCN | 40.62 | 89.14 | 61.92 | 67.53 | 65.46 | 64.18 | 65.25 | 64.18 |
| *Our model* | | | | | | | | |
| MulM | 66.99 | 80.73 | 64.66 | 65.88 | 74.34 | 57.63 | 67.26 | 66.74 |
| MulM | 88.00 | 75.19 | 64.07 | 70.78 | 68.27 | 66.40 | 69.88 | 68.31 |
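The size of the multi-modal gains can be read directly from the last table. The extracted table does not label the two MulM rows, so the sketch below assumes the second, higher-scoring row. It subtracts the DialogueRNN and DialogueGCN weighted accuracy and weighted F1 values from that row.

```python
# Weighted accuracy and weighted F1 (%) copied from the multi-modality table.
baselines = {
    "DialogueRNN": {"acc_w": 63.40, "f1_w": 62.75},
    "DialogueGCN": {"acc_w": 65.25, "f1_w": 64.18},
}
mulm = {"acc_w": 69.88, "f1_w": 68.31}  # second MulM row (the higher-scoring one)


def absolute_gains(model, reference):
    """Percentage-point difference for every metric, rounded to two decimals."""
    return {metric: round(model[metric] - reference[metric], 2) for metric in model}


for name, scores in baselines.items():
    print(name, absolute_gains(mulm, scores))
```

With these numbers, the gains over DialogueRNN are 6.48 percentage points in weighted accuracy and 5.56 in weighted F1. The gains over DialogueGCN are 4.63 and 4.13 percentage points.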

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Fu, C.; Liu, C.; Ishi, C.T.; Ishiguro, H. Multi-Modality Emotion Recognition Model with GAT-Based Multi-Head Inter-Modality Attention. Sensors 2020, 20, 4894. https://doi.org/10.3390/s20174894
