Scaling Effects on Chlorophyll Content Estimations with RGB Camera Mounted on a UAV Platform Using Machine-Learning Methods

Abstract

1. Introduction

2. Materials and Methods

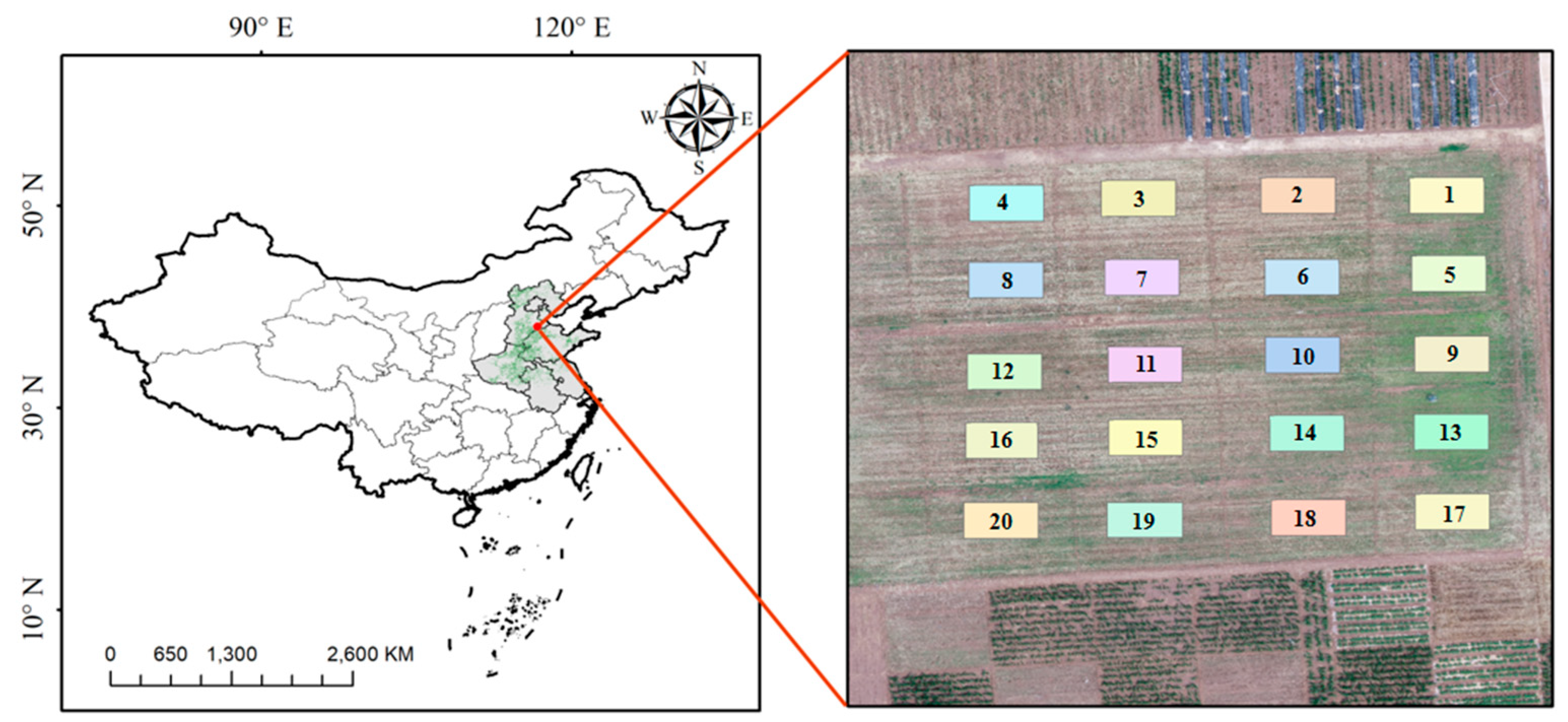

2.1. Study Area

2.2. Data Collection and Pre-Processing

2.2.1. UAV Data Collection and Pre-Processing

2.2.2. Chlorophyll Field Measurements Data

2.3. Methods

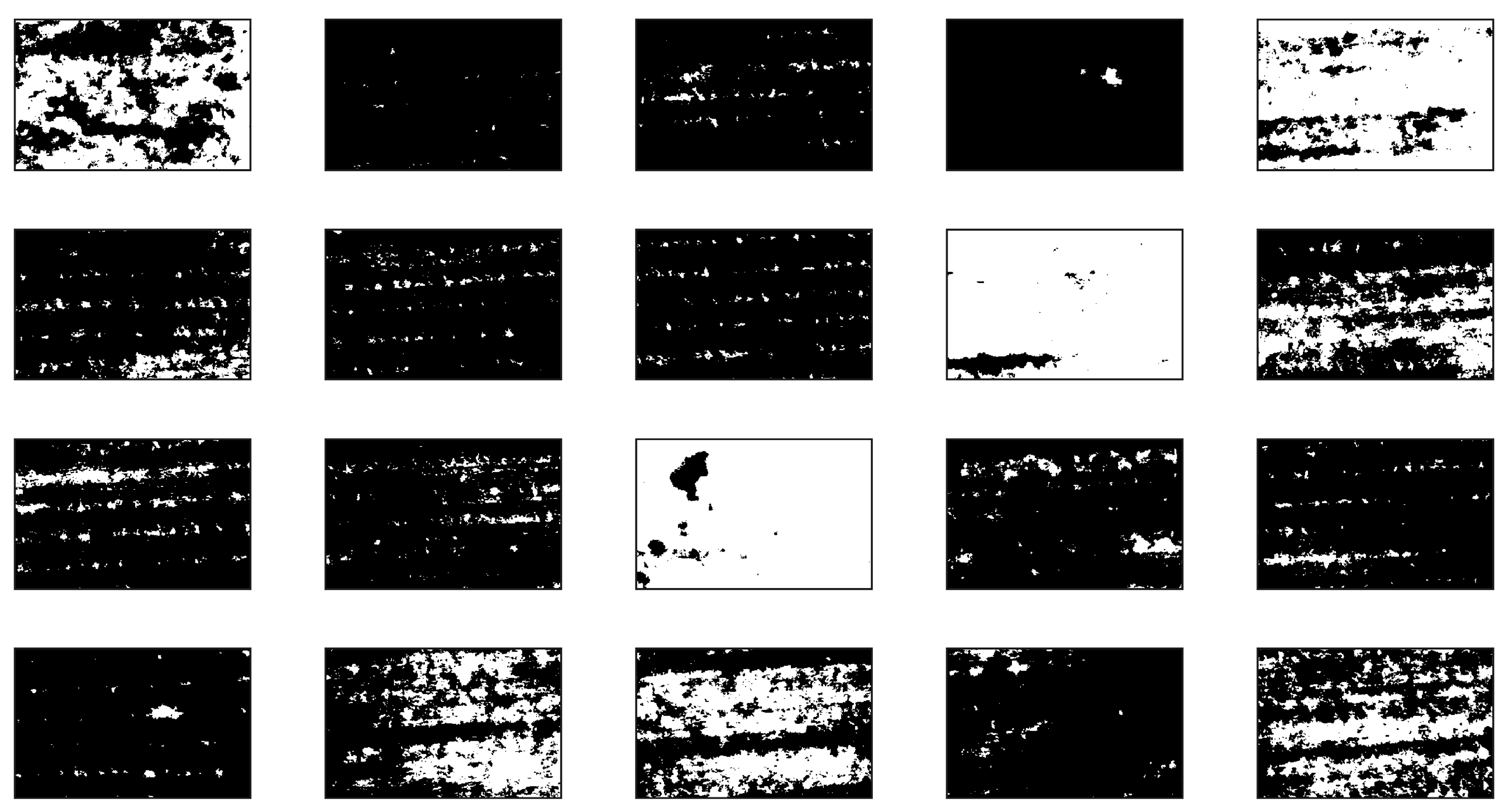

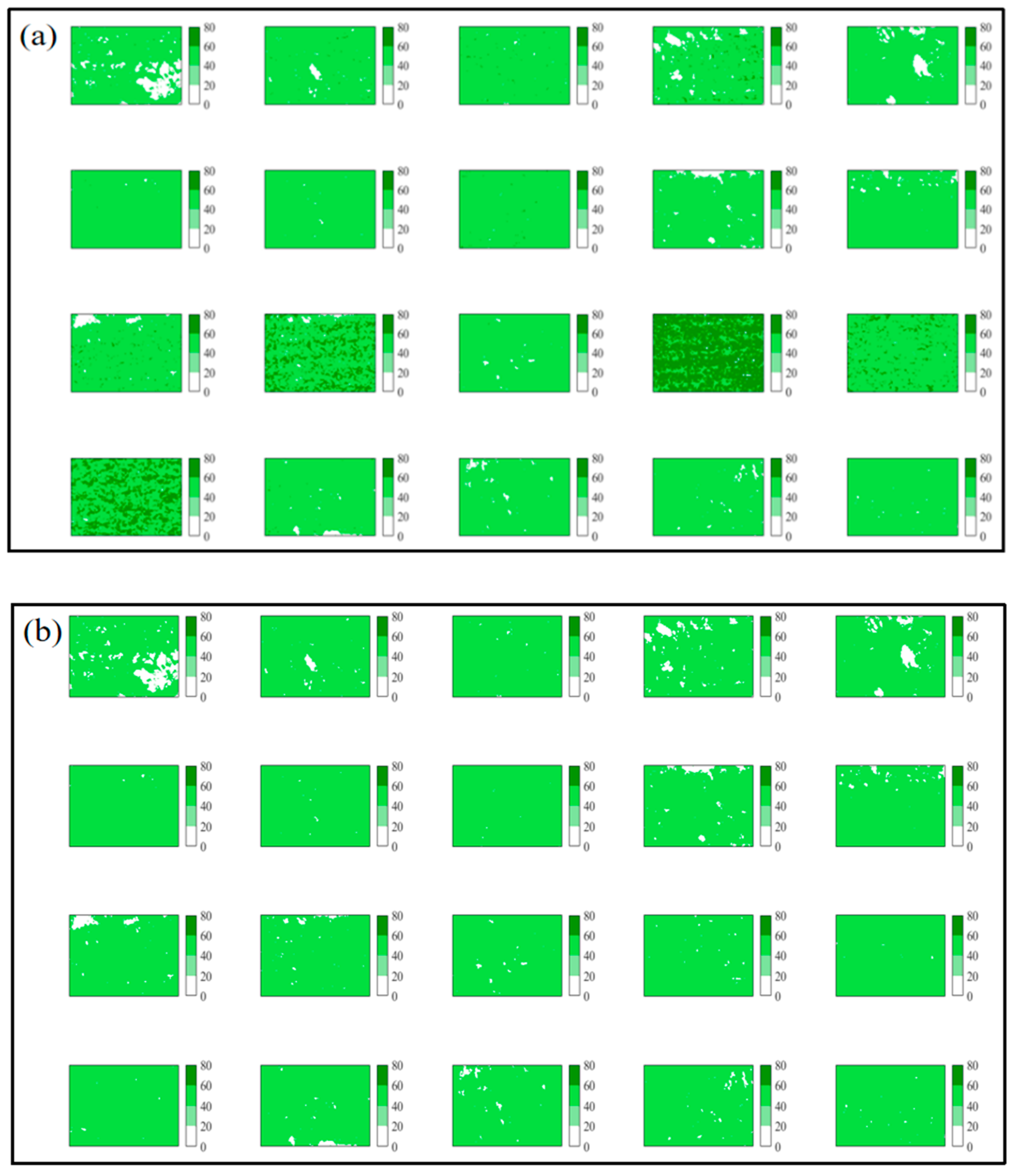

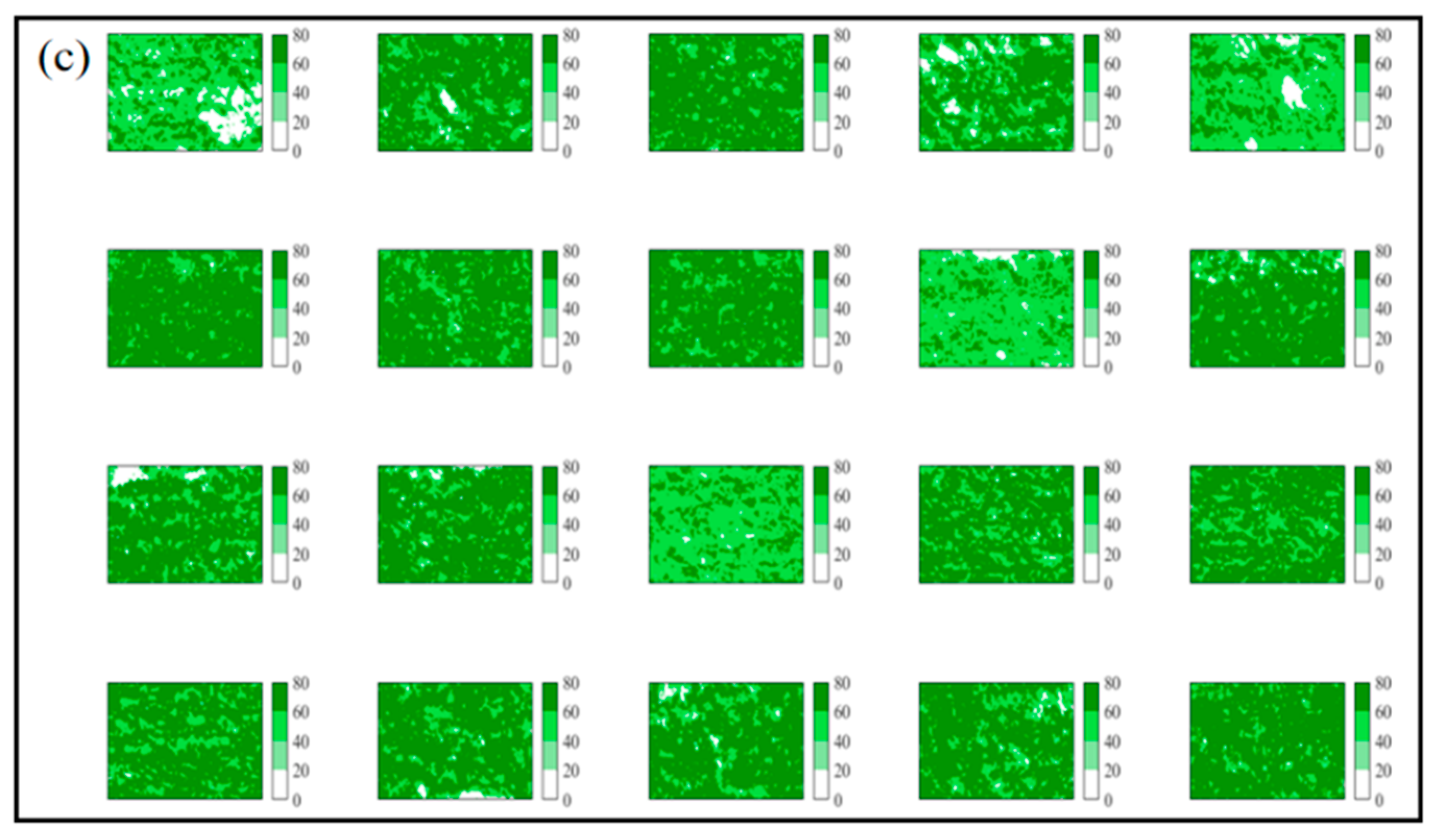

2.3.1. Scale Effects Using Vegetation Index Methods

2.3.2. Estimating the Chlorophyll Contents Using Machine-Learning Techniques

3. Results

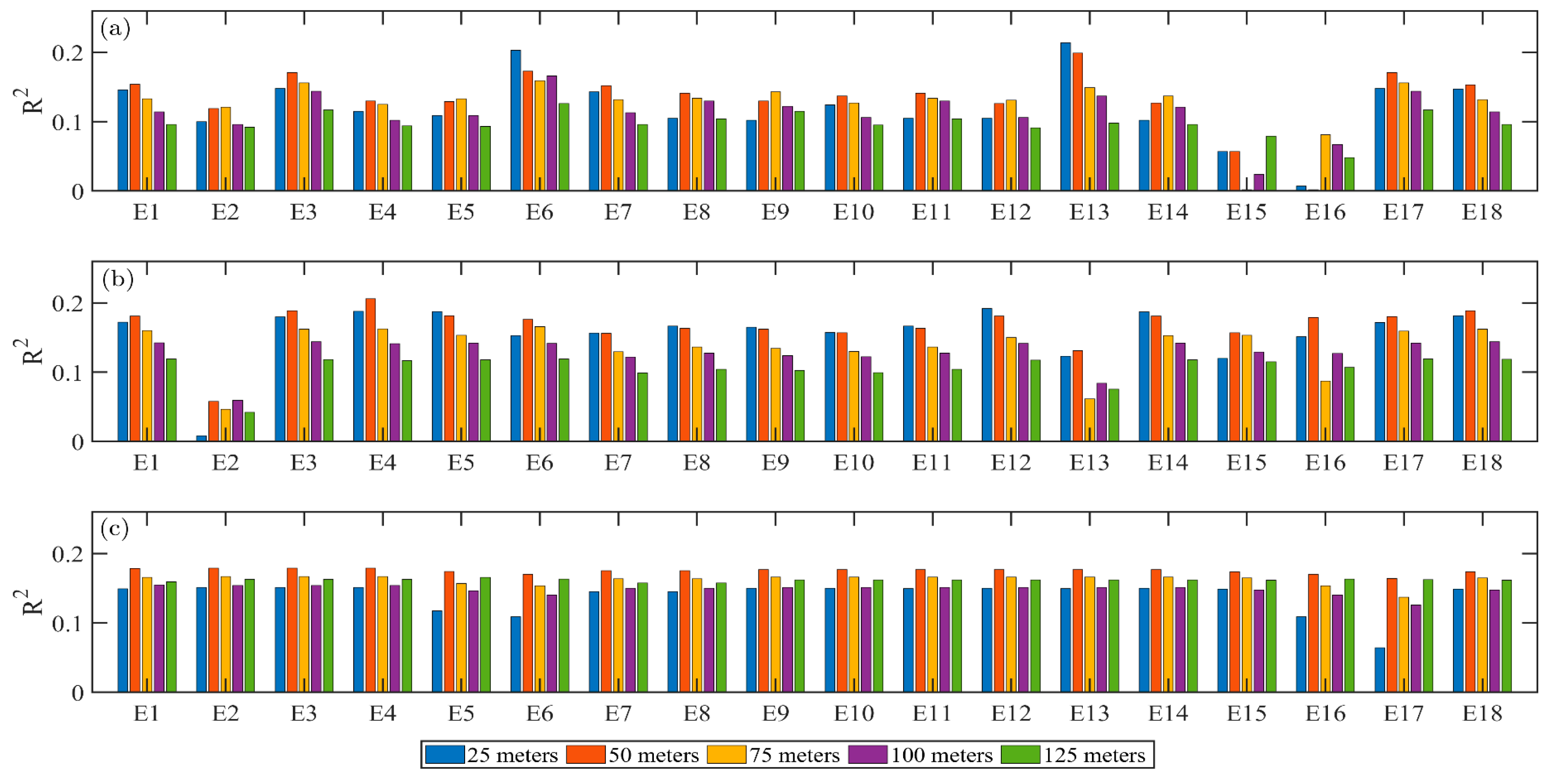

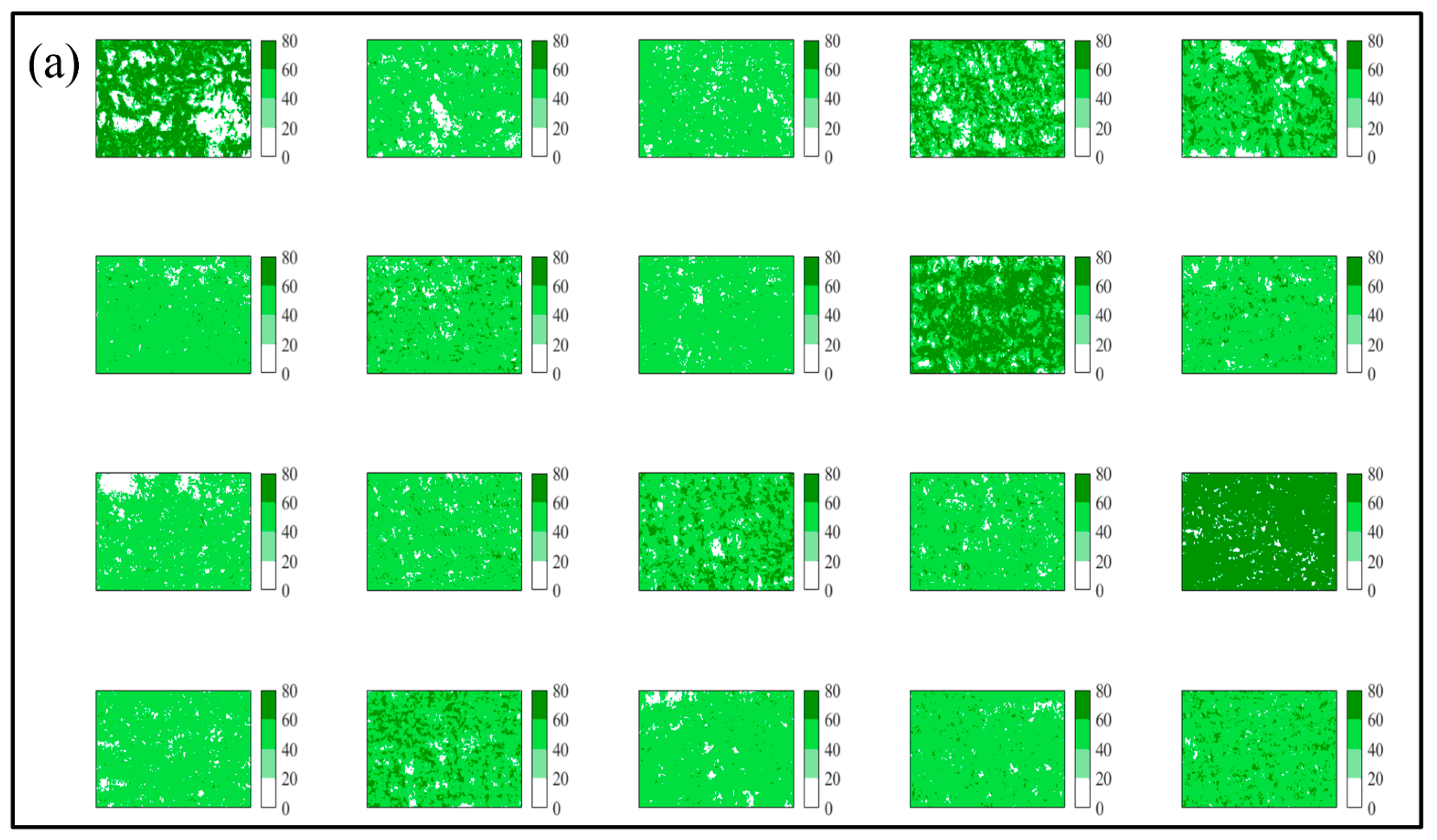

3.1. The Results of Scale Impacts Using Images from Different Flight Altitudes

3.2. Performance of Machine-Learning Methods and Chlorophyll Contents Prediction

4. Discussion

4.1. Limitations in Assessing the Scale Impacts

4.2. Machine-Learning-Based Chlorophyll Content Estimation

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| | Column 1 | Column 2 | Column 3 | Column 4 |
|---|---|---|---|---|
| Row 1 | N2+straw (4) | N3P3K1 (3) | N3P1K1 (2) | N1P1K2 (1) |
| Row 2 | N2+Organic fertilizer (8) | N3P2K1 (7) | N3P3K2 (6) | N1P1K1 (5) |
| Row 3 | N3+straw (12) | N4P3K1 (11) | N2P2K2 (10) | N1P2K1 (9) |
| Row 4 | N3+Organic fertilizer (16) | N4P2K1 (15) | N2P1K1 (14) | N1P3K1 (13) |
| Row 5 | N4P2K2 (20) | N4P1K1 (19) | N2P2K1 (18) | N2P3K1 (17) |

| RGB Index with Background | 25 m | 50 m | 75 m | 100 m | 125 m |
|---|---|---|---|---|---|
| index1 | 0.146 | 0.154 | 0.133 | 0.114 | 0.096 |
| index2 | 0.100 | 0.119 | 0.121 | 0.096 | 0.092 |
| index3 | 0.148 | 0.171 | 0.156 | 0.144 | 0.117 |
| index4 | 0.115 | 0.130 | 0.125 | 0.102 | 0.094 |
| index5 | 0.109 | 0.129 | 0.133 | 0.109 | 0.093 |
| index6 | 0.203 | 0.173 | 0.159 | 0.166 | 0.126 |
| index7 | 0.143 | 0.152 | 0.132 | 0.113 | 0.096 |
| index8 | 0.105 | 0.141 | 0.134 | 0.130 | 0.104 |
| index9 | 0.102 | 0.130 | 0.143 | 0.122 | 0.115 |
| index10 | 0.124 | 0.137 | 0.127 | 0.106 | 0.095 |
| index11 | 0.105 | 0.141 | 0.134 | 0.130 | 0.104 |
| index12 | 0.105 | 0.126 | 0.131 | 0.106 | 0.091 |
| index13 | 0.214 | 0.199 | 0.149 | 0.137 | 0.098 |
| index14 | 0.102 | 0.127 | 0.137 | 0.121 | 0.096 |
| index15 | 0.057 | 0.057 | 0.002 | 0.024 | 0.079 |
| index16 | 0.007 | 0.002 | 0.081 | 0.067 | 0.048 |
| index17 | 0.148 | 0.171 | 0.156 | 0.144 | 0.117 |
| index18 | 0.147 | 0.153 | 0.132 | 0.114 | 0.096 |

| RGB Index without Background | 25 m | 50 m | 75 m | 100 m | 125 m |
|---|---|---|---|---|---|
| index1 | 0.172 | 0.181 | 0.160 | 0.142 | 0.119 |
| index2 | 0.008 | 0.058 | 0.046 | 0.059 | 0.042 |
| index3 | 0.180 | 0.189 | 0.162 | 0.144 | 0.118 |
| index4 | 0.188 | 0.206 | 0.162 | 0.141 | 0.116 |
| index5 | 0.187 | 0.181 | 0.153 | 0.142 | 0.118 |
| index6 | 0.153 | 0.176 | 0.166 | 0.141 | 0.119 |
| index7 | 0.157 | 0.156 | 0.129 | 0.122 | 0.099 |
| index8 | 0.166 | 0.163 | 0.136 | 0.127 | 0.104 |
| index9 | 0.165 | 0.162 | 0.134 | 0.124 | 0.102 |
| index10 | 0.157 | 0.157 | 0.130 | 0.122 | 0.099 |
| index11 | 0.166 | 0.163 | 0.136 | 0.127 | 0.104 |
| index12 | 0.192 | 0.181 | 0.150 | 0.142 | 0.117 |
| index13 | 0.123 | 0.131 | 0.062 | 0.084 | 0.075 |
| index14 | 0.187 | 0.181 | 0.153 | 0.142 | 0.118 |
| index15 | 0.120 | 0.157 | 0.153 | 0.129 | 0.115 |
| index16 | 0.151 | 0.179 | 0.087 | 0.127 | 0.107 |
| index17 | 0.172 | 0.180 | 0.159 | 0.142 | 0.119 |
| index18 | 0.181 | 0.189 | 0.162 | 0.144 | 0.118 |

| HSV Index without Background | 25 m | 50 m | 75 m | 100 m | 125 m |
|---|---|---|---|---|---|
| index1 | 0.149 | 0.179 | 0.166 | 0.155 | 0.159 |
| index2 | 0.151 | 0.179 | 0.167 | 0.154 | 0.163 |
| index3 | 0.151 | 0.179 | 0.167 | 0.154 | 0.163 |
| index4 | 0.151 | 0.179 | 0.167 | 0.154 | 0.163 |
| index5 | 0.117 | 0.174 | 0.157 | 0.146 | 0.166 |
| index6 | 0.109 | 0.170 | 0.153 | 0.140 | 0.163 |
| index7 | 0.145 | 0.175 | 0.164 | 0.150 | 0.158 |
| index8 | 0.145 | 0.175 | 0.164 | 0.150 | 0.158 |
| index9 | 0.150 | 0.177 | 0.166 | 0.151 | 0.162 |
| index10 | 0.150 | 0.177 | 0.166 | 0.151 | 0.162 |
| index11 | 0.150 | 0.177 | 0.166 | 0.151 | 0.162 |
| index12 | 0.150 | 0.177 | 0.166 | 0.151 | 0.162 |
| index13 | 0.150 | 0.177 | 0.166 | 0.151 | 0.162 |
| index14 | 0.150 | 0.177 | 0.166 | 0.151 | 0.162 |
| index15 | 0.149 | 0.174 | 0.165 | 0.148 | 0.162 |
| index16 | 0.109 | 0.170 | 0.153 | 0.140 | 0.163 |
| index17 | 0.064 | 0.164 | 0.137 | 0.126 | 0.163 |
| index18 | 0.149 | 0.174 | 0.165 | 0.148 | 0.162 |

References

- FAO; IFAD; UNICEF; WFP; WHO. The state of food security and nutrition in the world 2017. In Building Climate Resilience for Food Security and Nutrition; FAO: Rome, Italy, 2019.

- Lobell, D.B.; Field, C.B. Global scale climate–crop yield relationships and the impacts of recent warming. Environ. Res. Lett. 2007, 2, 014002.

- Cole, M.B.; Augustin, M.A.; Robertson, M.J.; Manners, J.M. The science of food security. NPJ Sci. Food 2018, 2, 1–8.

- Wei, X.; Declan, C.; Erda, L.; Yinlong, X.; Hui, J.; Jinhe, J.; Ian, H.; Yan, L. Future cereal production in China: The interaction of climate change, water availability and socio-economic scenarios. Glob. Environ. Chang. 2009, 19, 34–44.

- Lv, S.; Yang, X.; Lin, X.; Liu, Z.; Zhao, J.; Li, K.; Mu, C.; Chen, X.; Chen, F.; Mi, G. Yield gap simulations using ten maize cultivars commonly planted in Northeast China during the past five decades. Agric. For. Meteorol. 2015, 205, 1–10.

- Liu, Z.; Yang, X.; Hubbard, K.G.; Lin, X. Maize potential yields and yield gaps in the changing climate of northeast China. Glob. Chang. Biol. 2012, 18, 3441–3454.

- Huang, M.; Wang, J.; Wang, B.; Liu, D.L.; Yu, Q.; He, D.; Wang, N.; Pan, X. Optimizing sowing window and cultivar choice can boost China’s maize yield under 1.5 °C and 2 °C global warming. Environ. Res. Lett. 2020, 15, 024015.

- Kar, G.; Verma, H.N. Phenology based irrigation scheduling and determination of crop coefficient of winter maize in rice fallow of eastern India. Agric. Water Manag. 2005, 75, 169–183.

- Tao, F.; Zhang, S.; Zhang, Z.; Rötter, R.P. Temporal and spatial changes of maize yield potentials and yield gaps in the past three decades in China. Agric. Ecosyst. Environ. 2015, 208, 12–20.

- Liu, Z.; Yang, X.; Lin, X.; Hubbard, K.G.; Lv, S.; Wang, J. Maize yield gaps caused by non-controllable, agronomic, and socioeconomic factors in a changing climate of Northeast China. Sci. Total Environ. 2016, 541, 756–764.

- Tao, F.; Zhang, S.; Zhang, Z.; Rötter, R.P. Maize growing duration was prolonged across China in the past three decades under the combined effects of temperature, agronomic management, and cultivar shift. Glob. Chang. Biol. 2014, 20, 3686–3699.

- Baker, N.R.; Rosenqvist, E. Applications of chlorophyll fluorescence can improve crop production strategies: An examination of future possibilities. J. Exp. Bot. 2004, 55, 1607–1621.

- Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002, 81, 416–426.

- Wood, C.; Reeves, D.; Himelrick, D. Relationships between Chlorophyll Meter Readings and Leaf Chlorophyll Concentration, N Status, and Crop Yield: A Review. Available online: https://www.agronomysociety.org.nz/uploads/94803/files/1993_1._Chlorophyll_relationships_-_a_review.pdf (accessed on 8 September 2020).

- Uddling, J.; Gelang-Alfredsson, J.; Piikki, K.; Pleijel, H. Evaluating the relationship between leaf chlorophyll concentration and SPAD-502 chlorophyll meter readings. Photosynth. Res. 2007, 91, 37–46.

- Markwell, J.; Osterman, J.C.; Mitchell, J.L. Calibration of the Minolta SPAD-502 leaf chlorophyll meter. Photosynth. Res. 1995, 46, 467–472.

- Villa, F.; Bronzi, D.; Bellisai, S.; Boso, G.; Shehata, A.B.; Scarcella, C.; Tosi, A.; Zappa, F.; Tisa, S.; Durini, D. SPAD imagers for remote sensing at the single-photon level. In Electro-Optical Remote Sensing, Photonic Technologies, and Applications VI; International Society for Optics and Photonics: Bellingham, WA, USA, 2012; p. 85420G.

- Zhengjun, Q.; Haiyan, S.; Yong, H.; Hui, F. Variation rules of the nitrogen content of the oilseed rape at growth stage using SPAD and visible-NIR. Trans. Chin. Soc. Agric. Eng. 2007, 23, 150–154.

- Wang, Y.-W.; Dunn, B.L.; Arnall, D.B.; Mao, P.-S. Use of an active canopy sensor and SPAD chlorophyll meter to quantify geranium nitrogen status. HortScience 2012, 47, 45–50.

- Hawkins, T.S.; Gardiner, E.S.; Comer, G.S. Modeling the relationship between extractable chlorophyll and SPAD-502 readings for endangered plant species research. J. Nat. Conserv. 2009, 17, 123–127.

- Giustolisi, G.; Mita, R.; Palumbo, G. Verilog-A modeling of SPAD statistical phenomena. In Proceedings of the 2011 IEEE International Symposium of Circuits and Systems (ISCAS), Rio de Janeiro, Brazil, 15−18 May 2011; pp. 773–776.

- Aragon, B.; Johansen, K.; Parkes, S.; Malbeteau, Y.; Al-Mashharawi, S.; Al-Amoudi, T.; Andrade, C.F.; Turner, D.; Lucieer, A.; McCabe, M.F. A Calibration Procedure for Field and UAV-Based Uncooled Thermal Infrared Instruments. Sensors 2020, 20, 3316.

- Guo, Y.; Senthilnath, J.; Wu, W.; Zhang, X.; Zeng, Z.; Huang, H. Radiometric calibration for multispectral camera of different imaging conditions mounted on a UAV platform. Sustainability 2019, 11, 978.

- Bilal, D.K.; Unel, M.; Yildiz, M.; Koc, B. Realtime Localization and Estimation of Loads on Aircraft Wings from Depth Images. Sensors 2020, 20, 3405.

- Guo, Y.; Guo, J.; Liu, C.; Xiong, H.; Chai, L.; He, D. Precision Landing Test and Simulation of the Agricultural UAV on Apron. Sensors 2020, 20, 3369.

- Senthilnath, J.; Dokania, A.; Kandukuri, M.; Ramesh, K.N.; Anand, G.; Omkar, S.N. Detection of tomatoes using spectral-spatial methods in remotely sensed RGB images captured by UAV. Biosyst. Eng. 2016, 146, 16–32.

- Riccardi, M.; Mele, G.; Pulvento, C.; Lavini, A.; d’Andria, R.; Jacobsen, S.-E. Non-destructive evaluation of chlorophyll content in quinoa and amaranth leaves by simple and multiple regression analysis of RGB image components. Photosynth. Res. 2014, 120, 263–272.

- Lee, H.-C. Introduction to Color Imaging Science; Cambridge University Press: Cambridge, UK, 2005.

- Niu, Y.; Zhang, L.; Zhang, H.; Han, W.; Peng, X. Estimating above-ground biomass of maize using features derived from UAV-based RGB imagery. Remote Sens. 2019, 11, 1261.

- Kefauver, S.C.; Vicente, R.; Vergara-Díaz, O.; Fernandez-Gallego, J.A.; Kerfal, S.; Lopez, A.; Melichar, J.P.; Serret Molins, M.D.; Araus, J.L. Comparative UAV and field phenotyping to assess yield and nitrogen use efficiency in hybrid and conventional barley. Front. Plant Sci. 2017, 8, 1733.

- Senthilnath, J.; Kandukuri, M.; Dokania, A.; Ramesh, K. Application of UAV imaging platform for vegetation analysis based on spectral-spatial methods. Comput. Electron. Agric. 2017, 140, 8–24.

- Ashapure, A.; Jung, J.; Chang, A.; Oh, S.; Maeda, M.; Landivar, J. A Comparative Study of RGB and Multispectral Sensor-Based Cotton Canopy Cover Modelling Using Multi-Temporal UAS Data. Remote Sens. 2019, 11, 2757.

- Mazzia, V.; Comba, L.; Khaliq, A.; Chiaberge, M.; Gay, P. UAV and Machine Learning Based Refinement of a Satellite-Driven Vegetation Index for Precision Agriculture. Sensors 2020, 20, 2530.

- Ballesteros, R.; Ortega, J.F.; Hernandez, D.; Moreno, M.A. Onion biomass monitoring using UAV-based RGB imaging. Precis. Agric. 2018, 19, 840–857.

- Matese, A.; Gennaro, S.D. Practical Applications of a Multisensor UAV Platform Based on Multispectral, Thermal and RGB High Resolution Images in Precision Viticulture. Agriculture 2018, 8, 116.

- Dong-Wook, K.; Yun, H.; Sang-Jin, J.; Young-Seok, K.; Suk-Gu, K.; Won, L.; Hak-Jin, K. Modeling and Testing of Growth Status for Chinese Cabbage and White Radish with UAV-Based RGB Imagery. Remote Sens. 2018, 10, 563.

- Barrero, O.; Perdomo, S.A. RGB and multispectral UAV image fusion for Gramineae weed detection in rice fields. Precis. Agric. 2018, 19, 809–822.

- Das, J.; Cross, G.; Qu, C.; Makineni, A.; Tokekar, P.; Mulgaonkar, Y.; Kumar, V. Devices, systems, and methods for automated monitoring enabling precision agriculture. In Proceedings of the 2015 IEEE International Conference on Automation Science and Engineering (CASE), Gothenburg, Sweden, 24–28 August 2015; pp. 462–469.

- Berni, J.A.; Zarco-Tejada, P.J.; Suárez, L.; Fereres, E. Thermal and narrowband multispectral remote sensing for vegetation monitoring from an unmanned aerial vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738.

- Primicerio, J.; Gennaro, S.F.D.; Fiorillo, E.; Genesio, L.; Vaccari, F.P. A flexible unmanned aerial vehicle for precision agriculture. Precis. Agric. 2012, 13, 517–523.

- Miao, Y.; Mulla, D.J.; Randall, G.W.; Vetsch, J.A.; Vintila, R. Predicting chlorophyll meter readings with aerial hyperspectral remote sensing for in-season site-specific nitrogen management of corn. Precis. Agric. 2007, 7, 635–641.

- Wang, J.; Xu, Y.; Wu, G. The integration of species information and soil properties for hyperspectral estimation of leaf biochemical parameters in mangrove forest. Ecol. Indic. 2020, 115, 106467.

- Jin, X.; Zarco-Tejada, P.; Schmidhalter, U.; Reynolds, M.P.; Hawkesford, M.J.; Varshney, R.K.; Yang, T.; Nie, C.; Li, Z.; Ming, B. High-throughput estimation of crop traits: A review of ground and aerial phenotyping platforms. IEEE Geosci. Remote Sens. Mag. 2020, 20, 1–32.

- Jin, X.; Kumar, L.; Li, Z.; Feng, H.; Xu, X.; Yang, G.; Wang, J. A review of data assimilation of remote sensing and crop models. Eur. J. Agron. 2018, 92, 141–152.

- Lillicrap, T.P.; Santoro, A.; Marris, L.; Akerman, C.J.; Hinton, G. Backpropagation and the brain. Nat. Rev. Neurosci. 2020, 21, 335–346.

- Kosson, A.; Chiley, V.; Venigalla, A.; Hestness, J.; Köster, U. Pipelined Backpropagation at Scale: Training Large Models without Batches. arXiv 2020, arXiv:2003.11666.

- Pix4D SA. Pix4Dmapper 4.1 User Manual; Pix4D SA: Lausanne, Switzerland, 2017.

- Da Silva, D.C.; Toonstra, G.W.A.; Souza, H.L.S.; Pereira, T.Á.J. Qualidade de ortomosaicos de imagens de VANT processados com os softwares APS, PIX4D e PHOTOSCAN. V Simpósio Brasileiro de Ciências Geodésicas e Tecnologias da Geoinformação Recife-PE 2014, 1, 12–14.

- Barbasiewicz, A.; Widerski, T.; Daliga, K. The Analysis of the Accuracy of Spatial Models Using Photogrammetric Software: Agisoft Photoscan and Pix4D. In E3S Web of Conferences; EDP Sciences: Les Ulis, France, 2018; p. 12.

- Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

- Zhang, W.; Wang, Z.; Wu, H.; Song, X.; Liao, J. Study on the Monitoring of Karst Plateau Vegetation with UAV Aerial Photographs and Remote Sensing Images. IOP Conf. Ser. Earth Environ. Sci. 2019, 384, 012188.

- Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.

- Hakala, T.; Suomalainen, J.; Peltoniemi, J.I. Acquisition of bidirectional reflectance factor dataset using a micro unmanned aerial vehicle and a consumer camera. Remote Sens. 2010, 2, 819–832.

- Xiaoqin, W.; Miaomiao, W.; Shaoqiang, W.; Yundong, W. Extraction of vegetation information from visible unmanned aerial vehicle images. Trans. Chin. Soc. Agric. Eng. 2015, 31, 5.

- Wan, L.; Li, Y.; Cen, H.; Zhu, J.; Yin, W.; Wu, W.; Zhu, H.; Sun, D.; Zhou, W.; He, Y. Combining UAV-based vegetation indices and image classification to estimate flower number in oilseed rape. Remote Sens. 2018, 10, 1484.

- Neto, J.C. A Combined Statistical-Soft Computing Approach for Classification and Mapping Weed Species in Minimum-Tillage Systems. Ph.D. Thesis, The University of Nebraska-Lincoln, Lincoln, NE, USA, 2004.

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

- Zhang, X.; Zhang, F.; Qi, Y.; Deng, L.; Wang, X.; Yang, S. New research methods for vegetation information extraction based on visible light remote sensing images from an unmanned aerial vehicle (UAV). Int. J. Appl. Earth Obs. Geoinf. 2019, 78, 215–226.

- Beniaich, A.; Naves Silva, M.L.; Avalos, F.A.P.; Menezes, M.D.; Candido, B.M. Determination of vegetation cover index under different soil management systems of cover plants by using an unmanned aerial vehicle with an onboard digital photographic camera. Semin. Cienc. Agrar. 2019, 40, 49–66.

- Ponti, M.P. Segmentation of Low-Cost Remote Sensing Images Combining Vegetation Indices and Mean Shift. IEEE Geosci. Remote Sens. Lett. 2013, 10, 67–70.

- Jordan, C.F. Derivation of leaf-area index from quality of light on the forest floor. Ecology 1969, 50, 663–666.

- Joao, T.; Joao, G.; Bruno, M.; Joao, H. Indicator-based assessment of post-fire recovery dynamics using satellite NDVI time-series. Ecol. Indic. 2018, 89, 199–212.

- Jayaraman, V.; Srivastava, S.K.; Kumaran Raju, D.; Rao, U.R. Total solution approach using IRS-1C and IRS-P3 data. IEEE Trans. Geosci. Remote Sens. 2000, 38, 587–604.

- Hague, T.; Tillett, N.; Wheeler, H. Automated crop and weed monitoring in widely spaced cereals. Precis. Agric. 2006, 7, 21–32.

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

- Guijarro, M.; Pajares, G.; Riomoros, I.; Herrera, P.; Burgos-Artizzu, X.; Ribeiro, A. Automatic segmentation of relevant textures in agricultural images. Comput. Electron. Agric. 2011, 75, 75–83.

- Huete, A.R. A modified soil adjusted vegetation index. Remote Sens. Environ. 2015, 48, 119–126.

- Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391.

- Verrelst, J.; Schaepman, M.E.; Koetz, B.; Kneubühler, M. Angular sensitivity analysis of vegetation indices derived from CHRIS/PROBA data. Remote Sens. Environ. 2008, 112, 2341–2353.

- Hashimoto, N.; Saito, Y.; Maki, M.; Homma, K. Simulation of Reflectance and Vegetation Indices for Unmanned Aerial Vehicle (UAV) Monitoring of Paddy Fields. Remote Sens. 2019, 11, 2119.

- Gitelson, A.A.; Viña, A.; Arkebauer, T.J.; Rundquist, D.C.; Keydan, G.; Leavitt, B. Remote estimation of leaf area index and green leaf biomass in maize canopies. Geophys. Res. Lett. 2003, 30, 1248.

- Saberioon, M.M.; Gholizadeh, A. Novel approach for estimating nitrogen content in paddy fields using low altitude remote sensing system. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 1011–1015.

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

- Yeom, J.; Jung, J.; Chang, A.; Ashapure, A.; Landivar, J. Comparison of Vegetation Indices Derived from UAV Data for Differentiation of Tillage Effects in Agriculture. Remote Sens. 2019, 11, 1548.

- Suzuki, R.; Tanaka, S.; Yasunari, T. Relationships between meridional profiles of satellite-derived vegetation index (NDVI) and climate over Siberia. Int. J. Climatol. 2015, 20, 955–967.

- Ballesteros, R.; Ortega, J.F.; Hernandez, D.; Del Campo, A.; Moreno, M.A. Combined use of agro-climatic and very high-resolution remote sensing information for crop monitoring. Int. J. Appl. Earth Obs. Geoinf. 2018, 72, 66–75.

- Henry, C.; Martina, E.; Juan, M.; José-Fernán, M. Efficient Forest Fire Detection Index for Application in Unmanned Aerial Systems (UASs). Sensors 2016, 16, 893.

- Lussem, U.; Bolten, A.; Gnyp, M.; Jasper, J.; Bareth, G. Evaluation of RGB-based vegetation indices from UAV imagery to estimate forage yield in grassland. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 1215–1219.

- Possoch, M.; Bieker, S.; Hoffmeister, D.; Bolten, A.; Bareth, G. Multi-Temporal Crop Surface Models Combined with the RGB Vegetation Index from UAV-Based Images for Forage Monitoring in Grassland. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 991–998.

- Bareth, G.; Bolten, A.; Gnyp, M.L.; Reusch, S.; Jasper, J. Comparison of Uncalibrated RGBVI with Spectrometer-Based NDVI Derived from UAV Sensing Systems on Field Scale. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 837–843.

- Jin, X.; Liu, S.; Baret, F.; Hemerlé, M.; Comar, A. Estimates of plant density of wheat crops at emergence from very low altitude UAV imagery. Remote Sens. Environ. 2017, 198, 105–114.

- Cantrell, K.; Erenas, M.; de Orbe-Payá, I.; Capitán-Vallvey, L. Use of the hue parameter of the hue, saturation, value color space as a quantitative analytical parameter for bitonal optical sensors. Anal. Chem. 2010, 82, 531–542.

- Tu, T.-M.; Huang, P.S.; Hung, C.-L.; Chang, C.-P. A fast intensity-hue-saturation fusion technique with spectral adjustment for IKONOS imagery. IEEE Geosci. Remote Sens. Lett. 2004, 1, 309–312.

- Choi, M. A new intensity-hue-saturation fusion approach to image fusion with a tradeoff parameter. IEEE Trans. Geosci. Remote Sens. 2006, 44, 1672–1682.

- Kandi, S.G. Automatic defect detection and grading of single-color fruits using HSV (hue, saturation, value) color space. J. Life Sci. 2010, 4, 39–45.

- Grenzdörffer, G.; Niemeyer, F. UAV based BRDF-measurements of agricultural surfaces with pfiffikus. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011, 38, 229–234.

- Cui, L.; Jiao, Z.; Dong, Y.; Sun, M.; Zhang, X.; Yin, S.; Ding, A.; Chang, Y.; Guo, J.; Xie, R. Estimating Forest Canopy Height Using MODIS BRDF Data Emphasizing Typical-Angle Reflectances. Remote Sens. 2019, 11, 2239.

- Tu, J.V. Advantages and disadvantages of using artificial neural networks versus logistic regression for predicting medical outcomes. J. Clin. Epidemiol. 1996, 49, 1225–1231.

- Kruschke, J.K.; Movellan, J.R. Benefits of gain: Speeded learning and minimal hidden layers in back-propagation networks. IEEE Trans. Syst. Man Cybern. 1991, 21, 273–280.

- Lawrence, S.; Giles, C.L. Overfitting and neural networks: Conjugate gradient and backpropagation. In Proceedings of the IEEE-INNS-ENNS International Joint Conference on Neural Networks (IJCNN 2000), Como, Italy, 27 July 2000; pp. 114–119.

- Karystinos, G.N.; Pados, D.A. On overfitting, generalization, and randomly expanded training sets. IEEE Trans. Neural Netw. 2000, 11, 1050–1057.

- Chang, C.-C.; Lin, C.-J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 1–27.

- Maulik, U.; Chakraborty, D. Learning with transductive SVM for semisupervised pixel classification of remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2013, 77, 66–78.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

| Index | Name | Equation | Reference |
|---|---|---|---|
| E1 | EXG | | [50,51] |
| E2 | EXR | | [52] |
| E3 | VDVI | | [53,54,55,56] |
| E4 | EXGR | | [57] |
| E5 | NGRDI | | [55,58] |
| E6 | NGBDI | | [54,59] |
| E7 | CIVE | | [60,61] |
| E8 | CRRI | | [62,63,64] |
| E9 | VEG | | [65,66] |
| E10 | COM | | [67,68] |
| E11 | RGRI | | [69,70] |
| E12 | VARI | | [71,72] |
| E13 | EXB | | [67,73] |
| E14 | MGRVI | | [74,75] |
| E15 | WI | | [72,76] |
| E16 | IKAW | | [58,77] |
| E17 | GBDI | G − B | [52,78] |
| E18 | RGBVI | (G × G − B × R)/(G × G + B × R) | [79,80,81] |
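As a rough illustration of how the two indices whose equations survive in the table above (E17, GBDI, and E18, RGBVI) could be evaluated, the following Python sketch computes them per pixel and averages them over a plot. The function names, the `plot_mean` helper, the sample pixel values, and the zero-denominator convention are assumptions made for illustration; the source does not describe the authors' implementation.

```python
from typing import Callable, Sequence, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B) values of one pixel


def gbdi(r: float, g: float, b: float) -> float:
    """E17 in the table: green-blue difference index, G - B."""
    return g - b


def rgbvi(r: float, g: float, b: float) -> float:
    """E18 in the table: (G*G - B*R) / (G*G + B*R)."""
    denom = g * g + b * r
    # Assumed convention (not from the source): return 0.0 for a zero denominator.
    return (g * g - b * r) / denom if denom != 0 else 0.0


def plot_mean(index_fn: Callable[[float, float, float], float],
              pixels: Sequence[Pixel]) -> float:
    """Average an index over the pixels of one plot (hypothetical helper)."""
    values = [index_fn(r, g, b) for r, g, b in pixels]
    return sum(values) / len(values)


# Made-up pixel values, for illustration only.
sample_pixels = [(60.0, 120.0, 40.0), (80.0, 100.0, 50.0)]

if __name__ == "__main__":
    print("mean GBDI:", plot_mean(gbdi, sample_pixels))
    print("mean RGBVI:", round(plot_mean(rgbvi, sample_pixels), 4))
```

Averaging per-pixel index values is only one possible aggregation; computing the index from plot-mean R, G, and B values would give a different number for the ratio-type index.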

| Date | E1 | E2 | E3 | E4 | E5 | E6 | E7 | E8 | E9 |
|---|---|---|---|---|---|---|---|---|---|
| 8 July | 0.182 | 0.139 | 0.181 | 0.169 | 0.185 | 0.177 | 0.178 | 0.181 | 0.178 |
| 18 August | 0.240 | 0.499 | 0.210 | 0.091 | 0.514 | 0.362 | 0.040 | 0.506 | 0.530 |
| 1 September | 0.273 | 0.648 | 0.228 | 0.487 | 0.629 | 0.291 | 0.001 | 0.581 | 0.606 |
| 16 September | 0.471 | 0.832 | 0.462 | 0.722 | 0.845 | 0.342 | 0.047 | 0.825 | 0.842 |

| Date | E10 | E11 | E12 | E13 | E14 | E15 | E16 | E17 | E18 |
|---|---|---|---|---|---|---|---|---|---|
| 8 July | 0.179 | 0.181 | 0.186 | 0.170 | 0.185 | 0.170 | 0.202 | 0.182 | 0.182 |
| 18 August | 0.010 | 0.506 | 0.733 | 0.365 | 0.493 | 0.729 | 0.804 | 0.001 | 0.211 |
| 1 September | 0.003 | 0.581 | 0.671 | 0.400 | 0.622 | 0.591 | 0.674 | 0.263 | 0.314 |
| 16 September | 0.103 | 0.825 | 0.751 | 0.450 | 0.849 | 0.855 | 0.838 | 0.481 | 0.534 |

| R² | 8 July | 18 August | 1 September | 16 September |
|---|---|---|---|---|
| BP | 0.001 | 0.454 | 0.595 | 0.703 |
| SVM | 0.001 | 0.332 | 0.587 | 0.702 |
| RF | 0.001 | 0.227 | 0.465 | 0.599 |

| RMSE | 8 July | 18 August | 1 September | 16 September |
|---|---|---|---|---|
| BP | 3.868 | 3.533 | 3.411 | 4.600 |
| SVM | 2.500 | 3.575 | 3.328 | 3.043 |
| RF | 2.622 | 2.541 | 3.333 | 3.095 |

| MAE | 8 July | 18 August | 1 September | 16 September |
|---|---|---|---|---|
| BP | 2.973 | 2.765 | 2.347 | 3.701 |
| SVM | 1.802 | 2.757 | 2.844 | 2.438 |
| RF | 2.174 | 2.138 | 2.736 | 2.509 |
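For readers who want to reproduce accuracy metrics of the kind tabulated above (coefficient of determination, RMSE, and MAE), the sketch below shows one common way to compute them from measured and predicted values. The source does not state which definition of the coefficient of determination was used; this sketch assumes 1 − SS_res/SS_tot. The function names and the sample values are made up for illustration and are not data from the study.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error between measured and predicted values."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error between measured and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination, assumed here to be 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical measured and predicted chlorophyll readings, for illustration only.
measured = [40.0, 45.0, 50.0, 55.0]
predicted = [42.0, 44.0, 49.0, 57.0]

if __name__ == "__main__":
    print("R2:", round(r2(measured, predicted), 3))
    print("RMSE:", round(rmse(measured, predicted), 3))
    print("MAE:", round(mae(measured, predicted), 3))
```

Under this definition the coefficient of determination can be negative for a poorly fitting model, whereas a squared correlation coefficient cannot, so the two should not be compared directly.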

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Guo, Y.; Yin, G.; Sun, H.; Wang, H.; Chen, S.; Senthilnath, J.; Wang, J.; Fu, Y. Scaling Effects on Chlorophyll Content Estimations with RGB Camera Mounted on a UAV Platform Using Machine-Learning Methods. Sensors 2020, 20, 5130. https://doi.org/10.3390/s20185130
