1. Introduction

Six-degrees-of-freedom (6-DOF) measurement is important in industrial production. The 6-DOF of a measured object describe its position and orientation, which helps machines operate efficiently. 6-DOF measurement is therefore widely used in fields such as precision machining, spacecraft docking, and manufacturing assembly [1].

The 6-DOF of a rigid body comprise the rotational degrees of freedom (Ψ, θ, φ) about the x, y, and z axes and the movement (translational) degrees of freedom along the x, y, and z axes. Commonly used measuring instruments and methods include lasers, Hall sensors, inertial measurement units (IMUs), total stations, and vision. Laser measurement methods, which include the laser interferometer [2], the laser tracker [3], and the laser collimation method [4], offer high accuracy, but they require a dedicated optical path designed with lenses [5,6,7]. As a result, they impose requirements on the size or range of motion of the measured object. In addition, the refractive index of the medium through which the laser propagates is sensitive to environmental factors such as humidity and temperature, which may cause errors [8,9]. Finally, laser methods rely on specialized instruments such as laser trackers and laser interferometers, which limits their widespread use [10,11]. Besides lasers, Hall sensors are often used as position-sensing devices for 6-DOF measurement [12] and to sense the 6-DOF at different positions and orientations [13]. Hall sensors are highly accurate, but most of them can only be used for micro-scale measurement. Moreover, when multiple Hall sensors are used, the position of each sensor must be fixed during assembly [14], so calibrating the sensors is essential, and this remains an open problem [15,16]. After assembly, the Hall sensors are fixed, which makes it impossible to measure large objects or results in poor environmental adaptability [17,18,19]. Total stations are often used for long-distance measurement and in engineering environments, and they are low-cost and highly adaptable to the environment. However, a total station alone cannot achieve dynamic 6-DOF measurement [5] and requires overly complex cooperative targets [20]. Other measurement methods use tools such as IMUs [21] and laser scanning [22]. However, because of their individual limitations, these tools are usually combined with other sensors.
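The six quantities described above are usually packed into a single homogeneous transform. The Python sketch below shows one way to do this. The Z-Y-X composition order R = Rz(φ)·Ry(θ)·Rx(Ψ) is an assumption made for illustration, since the angle convention of the proposed method is not stated in this section, and the helper names are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def rotation_from_euler(psi, theta, phi):
    """R = Rz(phi) @ Ry(theta) @ Rx(psi), angles in radians.

    The Z-Y-X order is an assumed convention, chosen only for illustration.
    """
    cx, sx = math.cos(psi), math.sin(psi)
    cy, sy = math.cos(theta), math.sin(theta)
    cz, sz = math.cos(phi), math.sin(phi)
    rx = [[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]]
    ry = [[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]]
    rz = [[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]]
    return matmul(rz, matmul(ry, rx))

def euler_from_rotation(r):
    """Recover (psi, theta, phi) under the same convention (valid for |theta| < 90 deg)."""
    theta = math.atan2(-r[2][0], math.hypot(r[0][0], r[1][0]))
    psi = math.atan2(r[2][1], r[2][2])
    phi = math.atan2(r[1][0], r[0][0])
    return psi, theta, phi

def pose_matrix(psi, theta, phi, tx, ty, tz):
    """4x4 homogeneous transform holding the three rotational and three movement DOF."""
    r = rotation_from_euler(psi, theta, phi)
    return [r[0] + [tx], r[1] + [ty], r[2] + [tz], [0.0, 0.0, 0.0, 1.0]]

# Usage: a round trip through the rotation matrix returns the original angles.
angles = (0.1, -0.4, 1.2)
recovered = euler_from_rotation(rotation_from_euler(*angles))
```

Other Euler conventions reorder the matrix product and change the extraction formulas, so the convention has to be fixed before angles from different systems are compared.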

Compared with the 6-DOF measurement instruments and methods above, vision methods offer non-contact operation, high accuracy, and a wide measurement range [23,24,25,26]. With the development of image processing and deep learning, visual measurement methods have also gained strong environmental adaptability [27,28]. Vision measurement systems can be divided into monocular [29,30,31] and multi-vision [32,33] systems. A monocular vision measurement system has low hardware complexity and a large field of view [34,35], but it has difficulty measuring depth accurately [36]. For example, Hui Pan [37] proposed an algorithm for estimating the relative pose of cooperative space targets based on monocular vision imaging, in which a modified gravity model approach and multi-target tracking were used to improve accuracy. In his experimental results, the translational error along the z-axis was noticeably greater than that along the other two axes because of the limitations of the monocular vision model. Moreover, monocular 6-DOF measurement is often formulated as a Perspective-n-Point (PnP) problem, which requires the coordinates of several points in the measured object's coordinate system to be known and yields only the 6-DOF of the object in the camera coordinate system [38]. For example, Gangfeng Liu [39] proposed a monocular vision pose measurement method that uses the guide petals of a docking mechanism to calculate the relative pose. This method requires extracting the guide petals and obtaining the pixel coordinates of key points to solve the PnP problem. If the measured object changes, the method can no longer be applied, because it cannot extract features from other objects.
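The depth ambiguity of a single camera, and the way a second calibrated view removes it, can be illustrated with linear two-view triangulation. This is a minimal sketch under stated assumptions: each camera is an ideal pinhole with a known 3×4 projection matrix P = K[R | t] and no lens distortion, and each pixel (u, v) contributes two linear equations, from which the 3-D point is recovered by least squares. The camera parameters and function names are hypothetical.

```python
def project(p, x):
    """Project 3-D point x with a 3x4 pinhole matrix p; returns pixel (u, v)."""
    xh = list(x) + [1.0]
    h = [sum(p[i][j] * xh[j] for j in range(4)) for i in range(3)]
    return h[0] / h[2], h[1] / h[2]

def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("singular system")
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def triangulate(projections, pixels):
    """Linear least-squares triangulation of one point seen by several cameras."""
    rows, rhs = [], []
    for p, (u, v) in zip(projections, pixels):
        for coord, k in ((u, 0), (v, 1)):
            # coord * (p[2] . X) - (p[k] . X) = 0 with X = (x, y, z, 1)
            row = [coord * p[2][j] - p[k][j] for j in range(4)]
            rows.append(row[:3])
            rhs.append(-row[3])
    # Normal equations (A^T A) X = A^T b for the three unknown coordinates.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(3)]
    return solve(ata, atb)

# Usage with two hypothetical cameras (focal length 800 px, principal point (320, 240));
# the second camera centre is 0.2 m along x, so its t = (-0.2, 0, 0) and K t = (-160, 0, 0).
P1 = [[800.0, 0.0, 320.0, 0.0], [0.0, 800.0, 240.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
P2 = [[800.0, 0.0, 320.0, -160.0], [0.0, 800.0, 240.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
X_true = (0.1, -0.05, 2.0)
pixels = [project(P1, X_true), project(P2, X_true)]
X_est = triangulate([P1, P2], pixels)
```

With only one camera the normal matrix has rank two, leaving the direction along the viewing ray unconstrained, which is the depth problem noted above for monocular systems.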

Compared with monocular vision measurement, multi-vision measurement is more versatile and, unlike monocular vision, can measure depth more accurately [40]. Zhiyuan Niu [41] proposed an immersive positioning and measuring method based on multiple cameras and designed active light-emitting diode (LED) markers as control points to cope with complicated industrial environments. However, this method measures the pose between the measured object coordinate system and the camera coordinate system, as is also the case for the monocular method in [39]. In this case, once the camera is moved, the 6-DOF of the measured object are lost and the measurement must be repeated. Moreover, moving the camera makes it difficult to reproduce the pose relationship between the measured object and the camera. In addition, some methods rely on complicated cooperative targets [40,41,42], which limits their versatility. In multi-vision, calibration between cameras is the key step that determines measurement accuracy [43]. Commonly used calibration methods include Zhang Zhengyou's calibration method and the Direct Linear Transformation (DLT) method, of which Zhang's method is widely used because of its ease of operation and accuracy.
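The difference between a camera-frame result and a world-frame result can be shown with homogeneous transforms. In this sketch the object pose in the world frame is fixed, the same camera is placed at two positions, and each camera-to-world transform is assumed to have been re-estimated against a fixed world frame (for example, a checkerboard). All numeric poses and helper names are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def rigid(yaw, t):
    """Hypothetical helper: 4x4 transform rotating by `yaw` about z, then translating by t."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, t[0]], [s, c, 0.0, t[1]], [0.0, 0.0, 1.0, t[2]], [0.0, 0.0, 0.0, 1.0]]

def invert_rigid(m):
    """Inverse of a rigid transform [R | p], i.e. [R^T | -R^T p]."""
    rt = [[m[j][i] for j in range(3)] for i in range(3)]
    p = [-sum(rt[i][j] * m[j][3] for j in range(3)) for i in range(3)]
    return [rt[0] + [p[0]], rt[1] + [p[1]], rt[2] + [p[2]], [0.0, 0.0, 0.0, 1.0]]

def max_diff(a, b):
    """Largest element-wise difference between two matrices."""
    return max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))

# Fixed object pose in the world frame.
T_world_obj = rigid(0.3, (1.0, 0.5, 0.0))
# The same camera before (A) and after (B) it is moved; both poses are assumed
# to be re-estimated against the same fixed world frame.
T_world_camA = rigid(-0.2, (0.0, -2.0, 1.5))
T_world_camB = rigid(0.7, (1.5, -1.0, 1.2))
# Camera-relative poses: what a camera-frame method would report.
T_camA_obj = matmul(invert_rigid(T_world_camA), T_world_obj)
T_camB_obj = matmul(invert_rigid(T_world_camB), T_world_obj)
# Chaining each with its camera-to-world transform gives the world-frame pose.
world_from_A = matmul(T_world_camA, T_camA_obj)
world_from_B = matmul(T_world_camB, T_camB_obj)
```

The camera-relative poses `T_camA_obj` and `T_camB_obj` differ, so a method that reports only them must be repeated after the camera moves, whereas the chained products `world_from_A` and `world_from_B` both coincide with `T_world_obj`.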

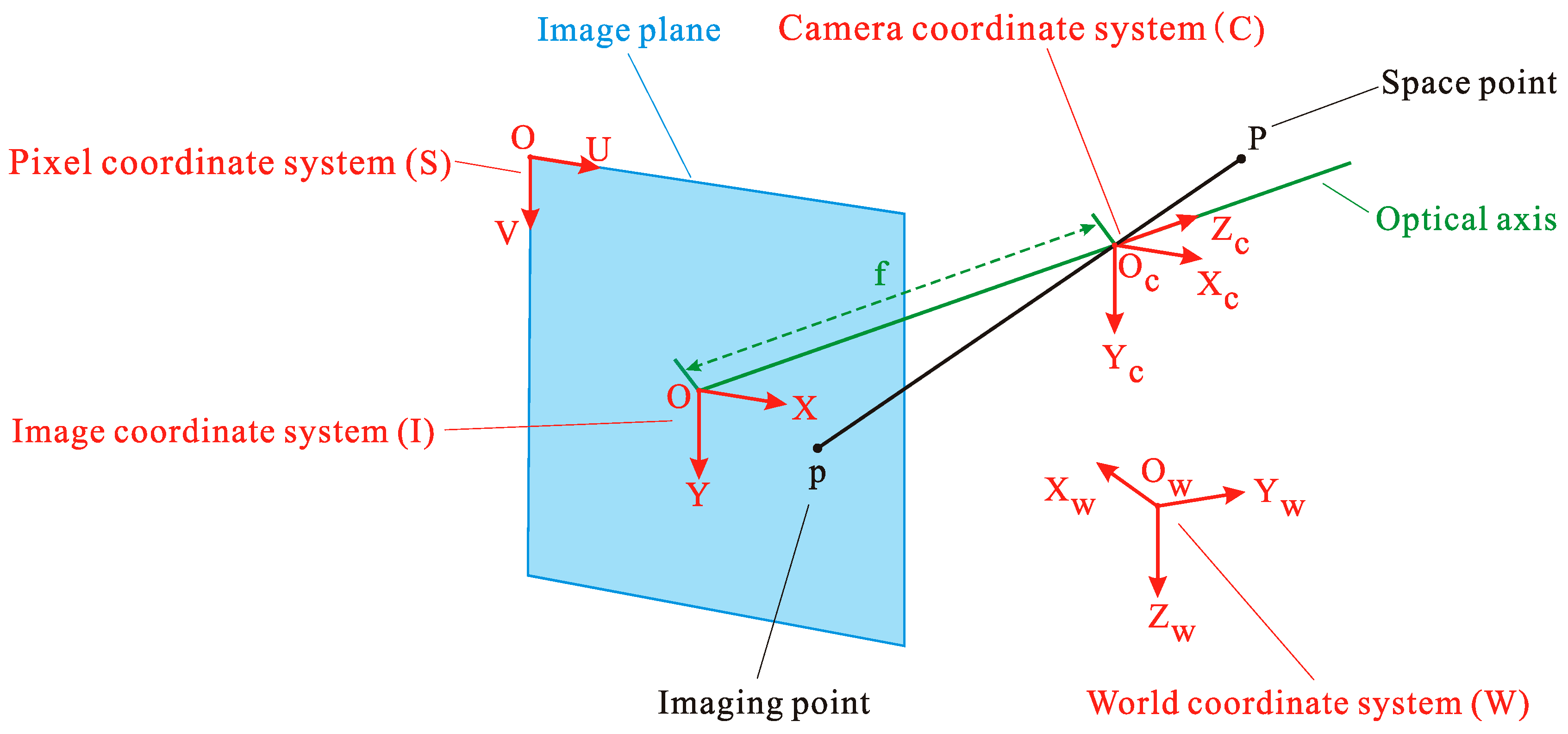

In view of the problems above, a measurement method is needed that is versatile, does not require professional instruments, and is suitable for most measured objects. Therefore, this paper proposes a multi-camera method for measuring the 6-DOF of rigid bodies in the world coordinate system. First, multi-camera calibration based on Zhang Zhengyou's calibration method is introduced. In addition to estimating the intrinsic and extrinsic parameters of multiple cameras, it uses a checkerboard to calibrate the relationship between the camera coordinate system and the world coordinate system. Second, a universal 6-DOF measurement method is proposed, which only requires at least four non-coplanar control points to be arranged on the rigid body. The coordinates of the control points in the rigid body coordinate system and their pixel coordinates in the images are used to calculate the 6-DOF of the rigid body. The 6-DOF measured by this method describe the pose of the rigid body relative to the world coordinate system and are therefore not affected by camera movement. In principle, the method is suitable for both dynamic and static measurement, but to better explain its principle and versatility, only static measurement is presented.
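The role of the four non-coplanar control points can be seen in a simplified least-squares formulation. This hypothetical sketch is not meant to reproduce the solution model of Section 2. It assumes the control points have already been reconstructed in world coordinates (for example, by multi-view triangulation) and fits world = M·body + t, which has 12 unknowns. Each point yields three equations, so four points give twelve, and non-coplanarity is what makes the normal matrix invertible. M is not constrained to be orthonormal; with noisy data a rotation would normally be extracted with an SVD-based orthogonalization such as the Kabsch method.

```python
import math

def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("singular system: control points may be coplanar")
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_pose(body_pts, world_pts):
    """Least-squares fit of world = M @ body + t from >= 4 non-coplanar points.

    Returns (M, t). For noise-free data of a rigid motion, M equals the rotation R.
    """
    if len(body_pts) < 4:
        raise ValueError("need at least four control points")
    design = [list(b) + [1.0] for b in body_pts]  # rows [X, Y, Z, 1]
    ata = [[sum(r[i] * r[j] for r in design) for j in range(4)] for i in range(4)]
    rows_m, t = [], []
    for k in range(3):  # one independent 4-unknown problem per world coordinate
        atb = [sum(r[i] * w[k] for r, w in zip(design, world_pts)) for i in range(4)]
        sol = solve(ata, atb)
        rows_m.append(sol[:3])
        t.append(sol[3])
    return rows_m, t

def euler_zyx(r):
    """(psi, theta, phi) for an assumed R = Rz(phi) Ry(theta) Rx(psi) convention."""
    theta = math.atan2(-r[2][0], math.hypot(r[0][0], r[1][0]))
    return math.atan2(r[2][1], r[2][2]), theta, math.atan2(r[1][0], r[0][0])

# Usage: hypothetical control points (metres) in the body frame; world coordinates
# generated from a known pose (0.5 rad about z, translation (1, 2, 3)).
body = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0), (0.0, 0.0, 0.1), (0.1, 0.1, 0.1)]
c, s = math.cos(0.5), math.sin(0.5)
world = [(c * x - s * y + 1.0, s * x + c * y + 2.0, z + 3.0) for x, y, z in body]
M, t = fit_pose(body, world)
angles = euler_zyx(M)
```

Passing four coplanar points makes the normal matrix singular, and `solve` raises `ValueError`.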

The rest of this article is structured as follows. In Section 2, the principle of camera calibration and 6-DOF measurement is introduced. In Section 3, experiments are carried out and the results are discussed. In Section 4, a summary is provided.

4. Conclusions

In this paper, a multi-camera universal method for measuring the 6-DOF of rigid bodies in the world coordinate system is proposed. The method requires only two or more cameras and is applicable to rigid bodies of most shapes. First, multi-camera calibration based on Zhang Zhengyou's calibration method is introduced. Unlike other calibration approaches, it obtains the pose relationship between the camera coordinate system and the world coordinate system, while also estimating the intrinsic and extrinsic parameters of the cameras. Second, based on the pinhole camera model, the 6-DOF solution model of the proposed method is derived step by step using matrix analysis. The method uses the control points on the measured rigid body to calculate the 6-DOF by least squares. Finally, P3HPS (Phantom 3D high-speed photogrammetry system), with an accuracy of 0.1 mm/m, was used to evaluate the performance of the proposed method. The experimental results show that the average error of the rotational DOF is less than 1.1°, and the average error of the movement DOF is less than 0.007 m.

The proposed method does not require expensive, professional instruments, its measurement process is simple, and its principle is straightforward. Because the final results are the 6-DOF between the world coordinate system and the measured rigid body coordinate system, the measurement is reproducible and, unlike other vision measurement methods, the results are not affected by camera movement. Nevertheless, the proposed method has limitations. First, a rigid body with too large a range of motion may leave the cameras' field of view, making measurement impossible. Second, several problems in dynamic measurement remain to be solved [48,49,50]; these will be the focus of our future research.