An Acquisition Method for Visible and Near Infrared Images from Single CMYG Color Filter Array-Based Sensor

Abstract

1. Introduction

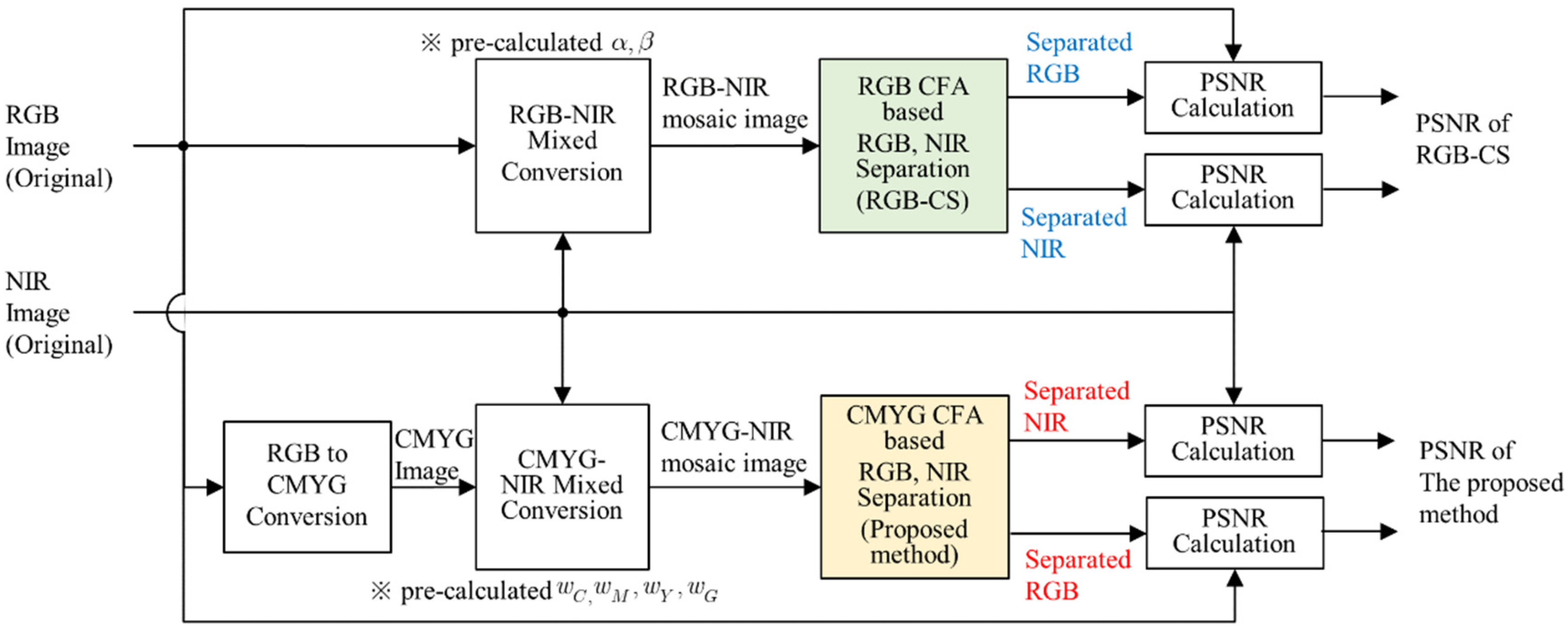

2. Proposed Method

2.1. Mathematical Model

2.2. Color Conversion to XYZ Color Space

2.3. Calculation of the NIR Weighting Coefficient

2.4. Noise Analysis

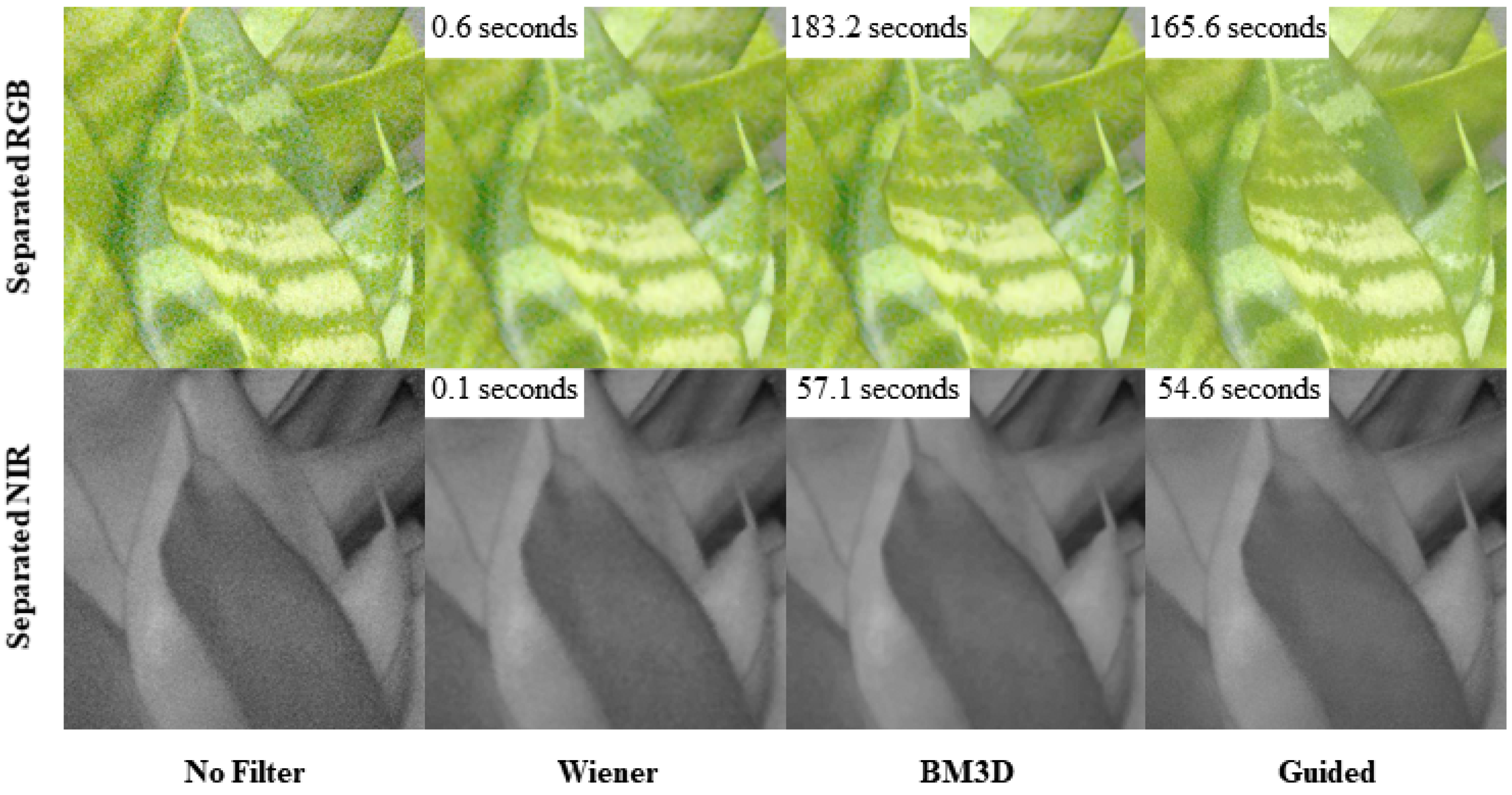

2.5. Denoising Method

3. Experimental Results

3.1. Experimental Condition

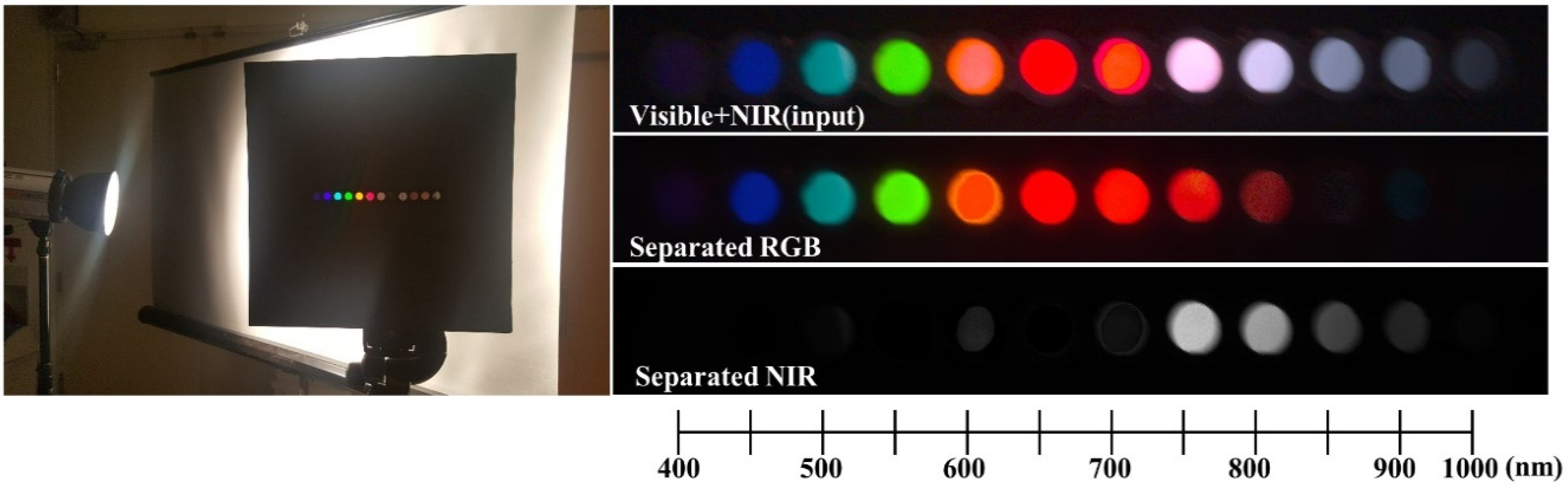

3.2. The Separation of Band Spectrum

3.3. Separation under a NIR Spot Light

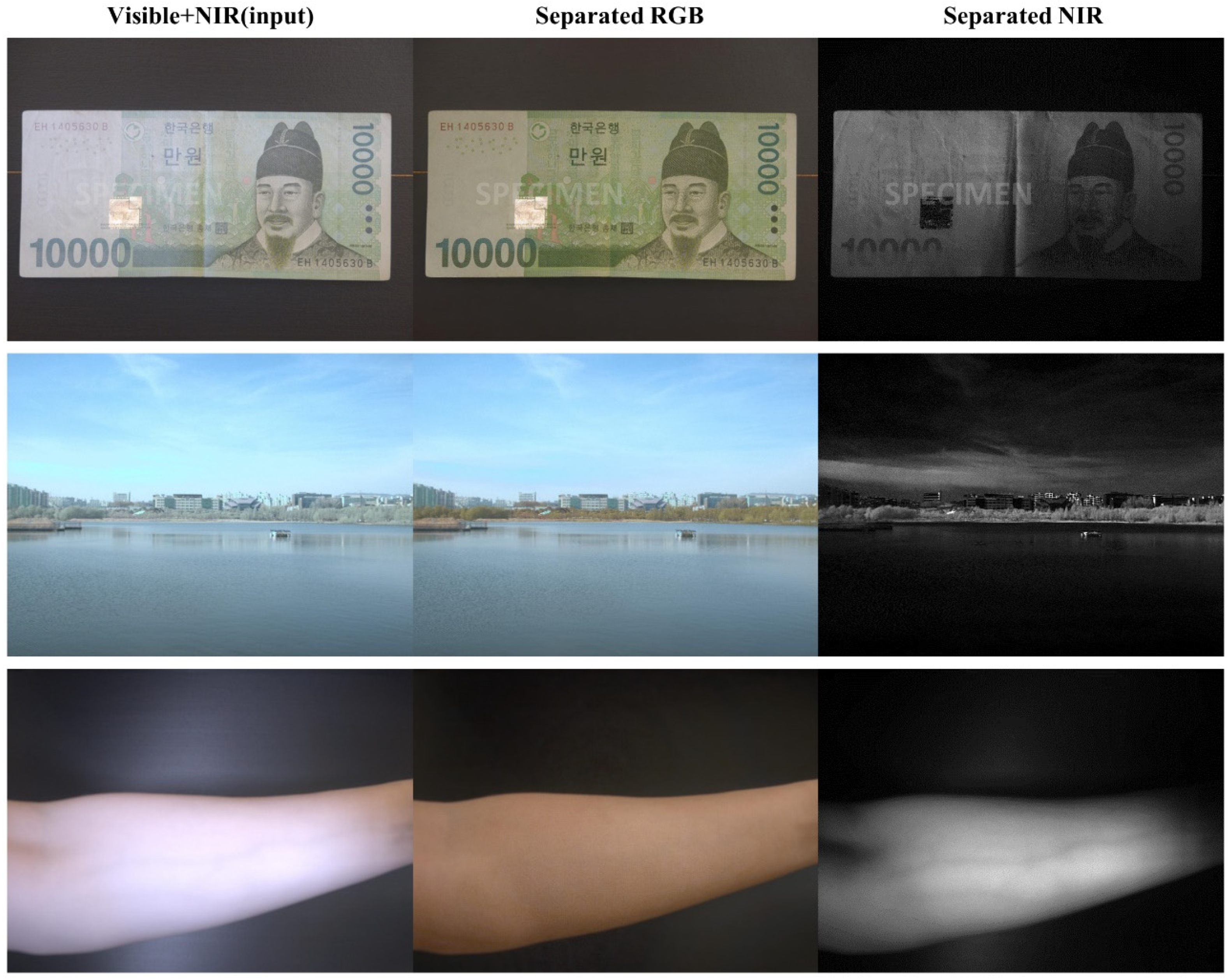

3.4. Separation Results on Applications

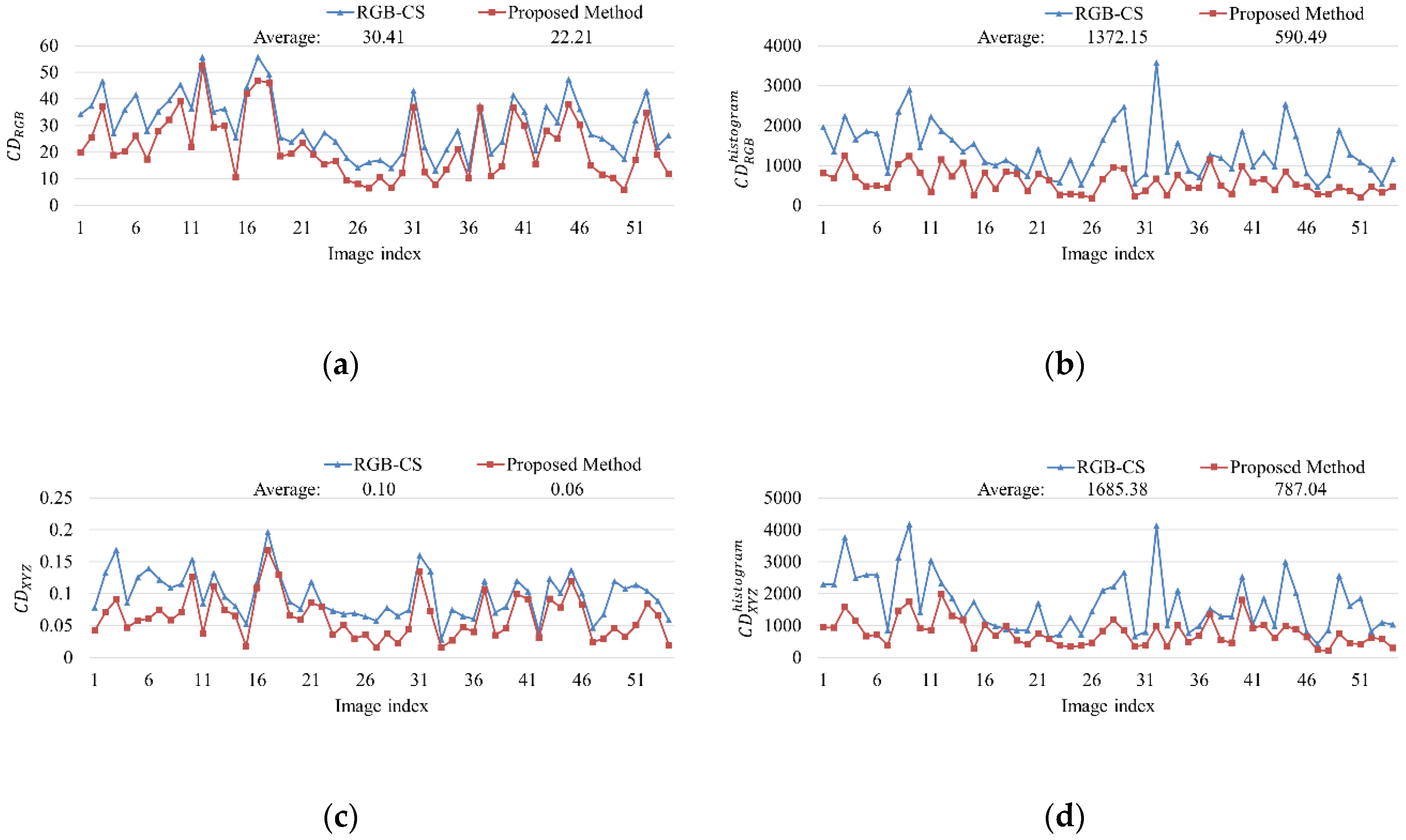

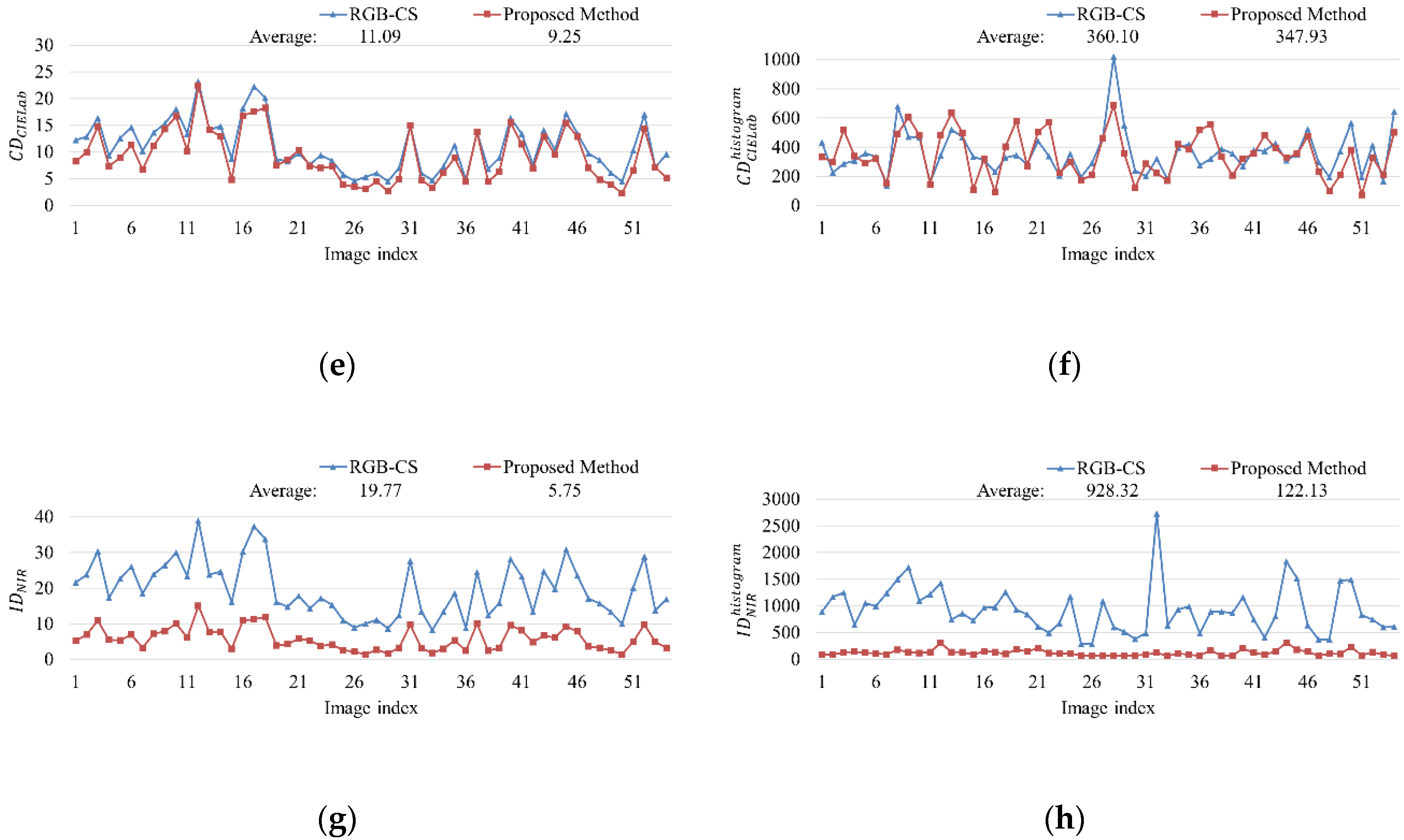

3.5. Objective Quality Comparison

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- ISO 20473:2007(E) Optics and Photonics–Spectral Bands; ISO: Geneva, Switzerland, 2007.

- Süsstrunk, S.; Fredembach, C. Enhancing the Visible with the Invisible: Exploiting Near-Infrared to Advance Computational Photography and Computer Vision. SID Symp. Dig. Tech. Pap. 2010, 41, 90–93.

- Varjo, S.; Hannuksela, J.; Alenius, S. Comparison of near infrared and visible image fusion methods. In Proceedings of the International Workshop on Applications Systems and Services for Camera Phone Sensing (MobiPhoto2011), Penghu, Taiwan, 12 June 2011; University of Oulu: Penghu, Taiwan, 2011; pp. 7–11.

- Gao, B.C. NDWI-A normalized difference water index for remote sensing of vegetation liquid water from space. Remote Sens. Environ. 1996, 58, 257–266.

- Schaul, L.; Fredembach, C.; Süsstrunk, S. Color image dehazing using the near-infrared. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 1629–1632.

- Nicolai, B.M.; Beullens, K.; Bobelyn, E.; Peirs, A.; Saeys, W.; Theron, K.I.; Lammertyn, J. Nondestructive measurement of fruit and vegetable quality by means of NIR spectroscopy: A review. Postharvest Biol. Technol. 2007, 46, 99–118.

- Ariana, D.P.; Lu, R.; Guyer, D.E. Near-infrared hyperspectral reflectance imaging for detection of bruises on pickling cucumbers. Comput. Electron. Agric. 2006, 53, 60–70.

- Mehl, P.M.; Chen, Y.R.; Kim, M.S.; Chan, D.E. Development of hyperspectral imaging technique for the detection of apple surface defects and contaminations. J. Food Eng. 2004, 61, 67–81.

- Crisan, S.; Tarnovan, I.G.; Crisan, T.E. A Low Cost Vein Detection System Using Near Infrared Radiation. In Proceedings of the 2007 IEEE Sensors Applications Symposium, San Diego, CA, USA, 6–8 February 2007; pp. 1–6.

- Miyake, R.K.; Zeman, R.D.; Duarte, F.H.; Kikuchi, R.; Ramacciotti, E.; Lovhoiden, G.; Vrancken, C. Vein imaging: A new method of near infrared imaging, where a processed image is projected onto the skin for the enhancement of vein treatment. Dermatol. Surg. 2006, 32, 1031–1038.

- Matsui, S.; Okabe, T.; Shimano, M.; Sato, Y. Image enhancement of low-light scenes with near-infrared flash images. Inf. Media Technol. 2011, 6, 202–210.

- Zhuo, S.; Zhang, X.; Miao, X.; Sim, T. Enhancing Low Light Images using Near Infrared Flash Images. In Proceedings of the 2010 IEEE 17th International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 2537–2540.

- JAI’s 2-CCD Camera. Available online: https://www.jai.com/products/ad-080-cl (accessed on 28 September 2020).

- Langfelder, G.; Malzbender, T.; Longoni, A.F.; Zaraga, F. A device and an algorithm for the separation of visible and near infrared signals in a monolithic silicon sensor. In Proceedings of the SPIE 7882, Visual Information Processing and Communication II, San Francisco Airport, CA, USA, 25–26 January 2011; International Society for Optics and Photonics: Washington, DC, USA, 2011; p. 788207.

- Koyama, S.; Inaba, Y.; Kasano, M.; Murata, T. A day and night vision MOS imager with robust photonic-crystal-based RGB-and-IR. IEEE Trans. Electron Devices 2008, 55, 754–759.

- Lu, Y.M.; Fredembach, C.; Vetterli, M.; Süsstrunk, S. Designing color filter arrays for the joint capture of visible and near-infrared images. In Proceedings of the 2009 IEEE International Conference on Image Processing, Cairo, Egypt, 7–10 November 2009; pp. 3797–3800.

- OmniVision’s RGB-IR CMOS Sensor. Available online: https://www.ovt.com/sensors/OV2736 (accessed on 28 September 2020).

- Monno, Y.; Teranaka, H.; Yoshizaki, K.; Tanaka, M.; Okutomi, M. Single-Sensor RGB-NIR Imaging: High-Quality System Design and Prototype Implementation. IEEE Sens. J. 2019, 19, 497–507.

- Hu, X.; Heide, F.; Dai, Q.; Wetzstein, G. Convolutional Sparse Coding for RGB+NIR Imaging. IEEE Trans. Image Process. 2018, 27, 1611–1625.

- Park, C.; Kang, M.G. Color Restoration of RGBN Multispectral Filter Array Sensor Images Based on Spectral Decomposition. Sensors 2016, 16, 719.

- Sadeghipoor, Z.; Lu, Y.M.; Süsstrunk, S. A novel compressive sensing approach to simultaneously acquire color and near-infrared images on a single sensor. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 1646–1650.

- Park, Y.; Shim, H.J.; Dinh, K.Q.; Jeon, B. Visible and Near-Infrared Image Separation from CMYG Color Filter Array based Sensor. In Proceedings of the 58th International Symposium ELMAR, Zadar, Croatia, 12–14 September 2016; pp. 209–212.

- Baer, R.L.; Holland, W.D.; Holm, J.; Vora, P. A Comparison of Primary and Complementary Color Filters for CCD based Digital Photography. In Sensors, Cameras, and Applications for Digital Photography; International Society for Optics and Photonics: Washington, DC, USA, 1999; pp. 16–25.

- Axis Communications, CCD and CMOS Sensor Technology. Technical White Paper; Axis Communications: Waterloo, ON, Canada, 2011.

- Smith, T.; Guild, J. The C.I.E. colorimetric standards and their use. Trans. Opt. Soc. 1931, 33, 73–134.

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 3rd ed.; Pearson: Upper Saddle River, NJ, USA, 2010; pp. 57–125.

- Pointer, M.R.; Attridge, G.G.; Jacobson, R.E. Practical camera characterization for colour measurement. Imaging Sci. J. 2001, 49, 63–80.

- Nakamura, J. Image Sensors and Signal Processing for Digital Still Cameras; CRC Press: Boca Raton, FL, USA, 2006.

- ColorChecker. Product No. 50105 (Standard) or No. 50111 (Mini); Manufactured by the Munsell Color Services Laboratory of GretagMacbeth; X-Rite: Grand Rapids, MI, USA, 1976.

- International Electrotechnical Commission. Multimedia Systems and Equipment–Colour Measurement and Management–Part 2-1: Colour Management–Default RGB Colour Space–sRGB; IEC 61966-2-1; International Electrotechnical Commission: Geneva, Switzerland, 1999.

- Stokes, M.; Anderson, M.; Chandrasekar, S.; Motta, R. A Standard Default Color Space for the Internet-sRGB. W3C Document 1996. Available online: https://www.w3.org/Graphics/Color/sRGB.html (accessed on 29 September 2020).

- Johnson, R.A.; Wichern, D.W. Matrix algebra and random vectors. In Applied Multivariate Statistical Analysis, 6th ed.; Pearson: Upper Saddle River, NJ, USA, 2007; pp. 49–110.

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. BM3D Image Denoising with Shape-Adaptive Principal Component Analysis. In SPARS’09-Signal Processing with Adaptive Sparse Structured Representations; Inria Rennes-Bretagne Atlantique: Saint Malo, France, 2009.

- Darmont, A. Spectral Response of Silicon Image Sensor. In Aphesa White Paper; Aphesa: Harzé, Belgium, 2009.

- Bruna, A.; Farinella, G.M.; Guarnera, G.C.; Battiato, S. Forgery Detection and Value Identification of Euro Banknotes. Sensors 2013, 13, 2515–2529.

- Young, A.T. Rayleigh scattering. Phys. Today 1982, 35, 42–48.

- Pope, R.M.; Fry, E.S. Absorption spectrum (380–700 nm) of pure water. II. Integrating cavity measurements. Appl. Opt. 1997, 36, 8710–8723.

- Kou, L.; Labrie, D.; Chýlek, P. Refractive indices of water and ice in the 0.65- to 2.5-µm spectral range. Appl. Opt. 1993, 32, 3531–3540.

- Peñuelas, J.; Filella, I. Visible and near-infrared reflectance techniques for diagnosing plant physiological status. Trends Plant Sci. 1998, 3, 151–156.

- Kim, D.; Kim, Y.; Yoon, S.; Lee, D. Preliminary Study for Designing a Novel Vein-Visualizing Device. Sensors 2017, 17, 304.

- Arsalan, M.; Naqvi, R.A.; Kim, D.S.; Nguyen, P.H.; Owais, M.; Park, K.R. IrisDenseNet: Robust Iris Segmentation Using Densely Connected Fully Convolutional Networks in the Images by Visible Light and Near-Infrared Light Camera Sensors. Sensors 2018, 18, 1501.

- Brown, M.; Süsstrunk, S. Multispectral SIFT for Scene Category Recognition. In Proceedings of the Computer Vision and Pattern Recognition (CVPR 2011), Providence, RI, USA, 20–25 June 2011; IEEE Computer Society: Washington, DC, USA, 2011; pp. 177–184.

- Sayood, K. Mathematical preliminaries for lossy coding. In Introduction to Data Compression, 3rd ed.; Morgan Kaufmann: San Francisco, CA, USA, 2006; pp. 195–226.

- Mohimani, H.; Babaie-Zadeh, M.; Jutten, C. A fast approach for overcomplete sparse decomposition based on smoothed ℓ0 norm. IEEE Trans. Signal Process. 2009, 57, 289–301.

- ISO 11664-4:2008(E)/CIE S 014-4/E: Joint ISO/CIE Standard Colorimetry–Part 4: CIE 1976 L*a*b* Colour Space; ISO: Geneva, Switzerland, 2007.

| Method | RGB-CS [21] | The Proposed Method |
|---|---|---|
| Sample images | 54 images (508 × 768–1024 × 762) [43] | |
| Type of CFA (color filter array) | RGB | CMYG |
| Separation matrix | Pre-defined | |
| Sparsifying matrix | Discrete cosine transform | N/A |
| Demosaicing | Bilinear (GRBG pattern) | Bilinear (GMYC pattern) |
| Separation method | SL0 sparse decomposition [45] | Matrix multiplication |
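The table states only that the proposed pipeline demosaics the CMYG mosaic bilinearly (GMYC pattern) and then separates visible and NIR components by a matrix multiplication, in contrast to the iterative SL0 decomposition used by RGB-CS. The following is a minimal NumPy sketch of that two-step structure, not the authors' implementation. It assumes (hypothetically) that the 2 × 2 CFA tile reads "G M / Y C" row by row, and that each demosaiced pixel obeys a linear model [C, M, Y, G]ᵀ = `mix` · [X, Y, Z, N]ᵀ with an invertible 4 × 4 matrix `mix`. The names `TILE`, `ORDER`, `demosaic_bilinear`, `separate` and `mix` are illustrative; the paper's own coefficients are not reproduced here.

```python
import numpy as np

# Hypothetical 2x2 CFA tile read row by row as "G M / Y C"; the real sensor
# layout may differ.
TILE = (("G", "M"), ("Y", "C"))
ORDER = ("C", "M", "Y", "G")  # channel order fed to the separation step


def _conv3x3(img, k):
    """Zero-padded 3x3 filtering (k is symmetric, so correlation == convolution)."""
    h, w = img.shape
    p = np.pad(img, 1)  # constant (zero) padding by default
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += k[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out


def demosaic_bilinear(raw, tile=TILE):
    """Bilinear demosaicing via normalized convolution; returns (H, W, 4) in ORDER."""
    h, w = raw.shape
    # Bilinear kernel for a channel sampled once per 2x2 block.
    k = np.array([[0.25, 0.5, 0.25], [0.5, 1.0, 0.5], [0.25, 0.5, 0.25]])
    planes = []
    for name in ORDER:
        mask = np.zeros((h, w))
        for r in range(2):
            for c in range(2):
                if tile[r][c] == name:
                    mask[r::2, c::2] = 1.0
        # Dividing by the filtered mask renormalizes the weights at the borders.
        planes.append(_conv3x3(raw * mask, k) / _conv3x3(mask, k))
    return np.stack(planes, axis=-1)


def separate(cmyg, mix):
    """Per-pixel visible/NIR separation by a single matrix multiplication.

    mix is a hypothetical 4x4 matrix with [C, M, Y, G]^T = mix @ [X, Y, Z, N]^T.
    """
    sep = np.linalg.inv(mix)  # separation matrix, computed once per sensor
    return cmyg @ sep.T       # (H, W, 4) -> (H, W, 4) holding X, Y, Z, N
```

Because the separation matrix is fixed once the sensor is characterized, the per-pixel cost is a single 4 × 4 matrix-vector product, which is the practical contrast with the iterative sparse decomposition listed for RGB-CS.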

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Park, Y.; Jeon, B. An Acquisition Method for Visible and Near Infrared Images from Single CMYG Color Filter Array-Based Sensor. Sensors 2020, 20, 5578. https://doi.org/10.3390/s20195578
