Spectral Color Management in Virtual Reality Scenes

Abstract

1. Introduction

2. Materials and Methods

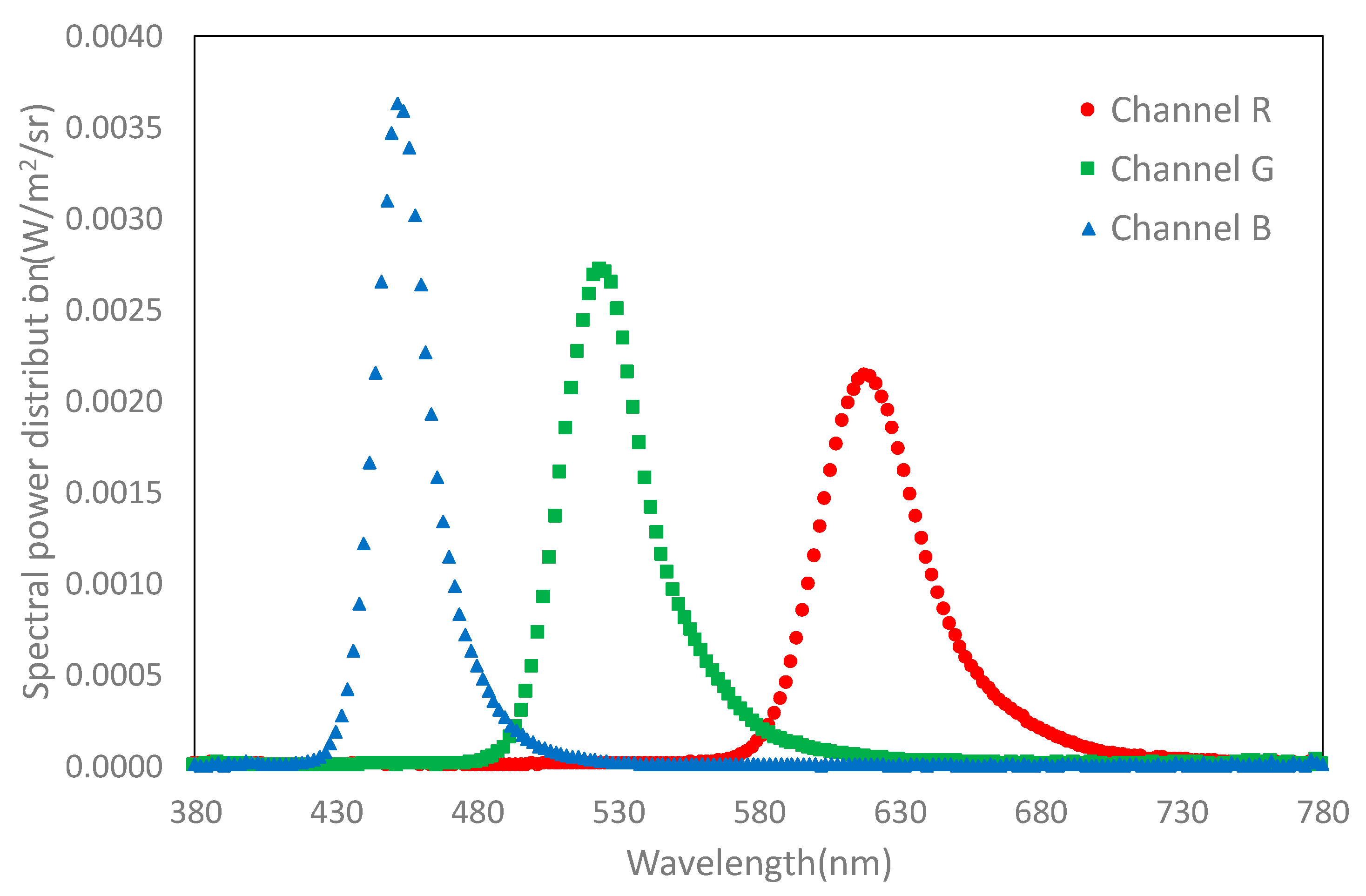

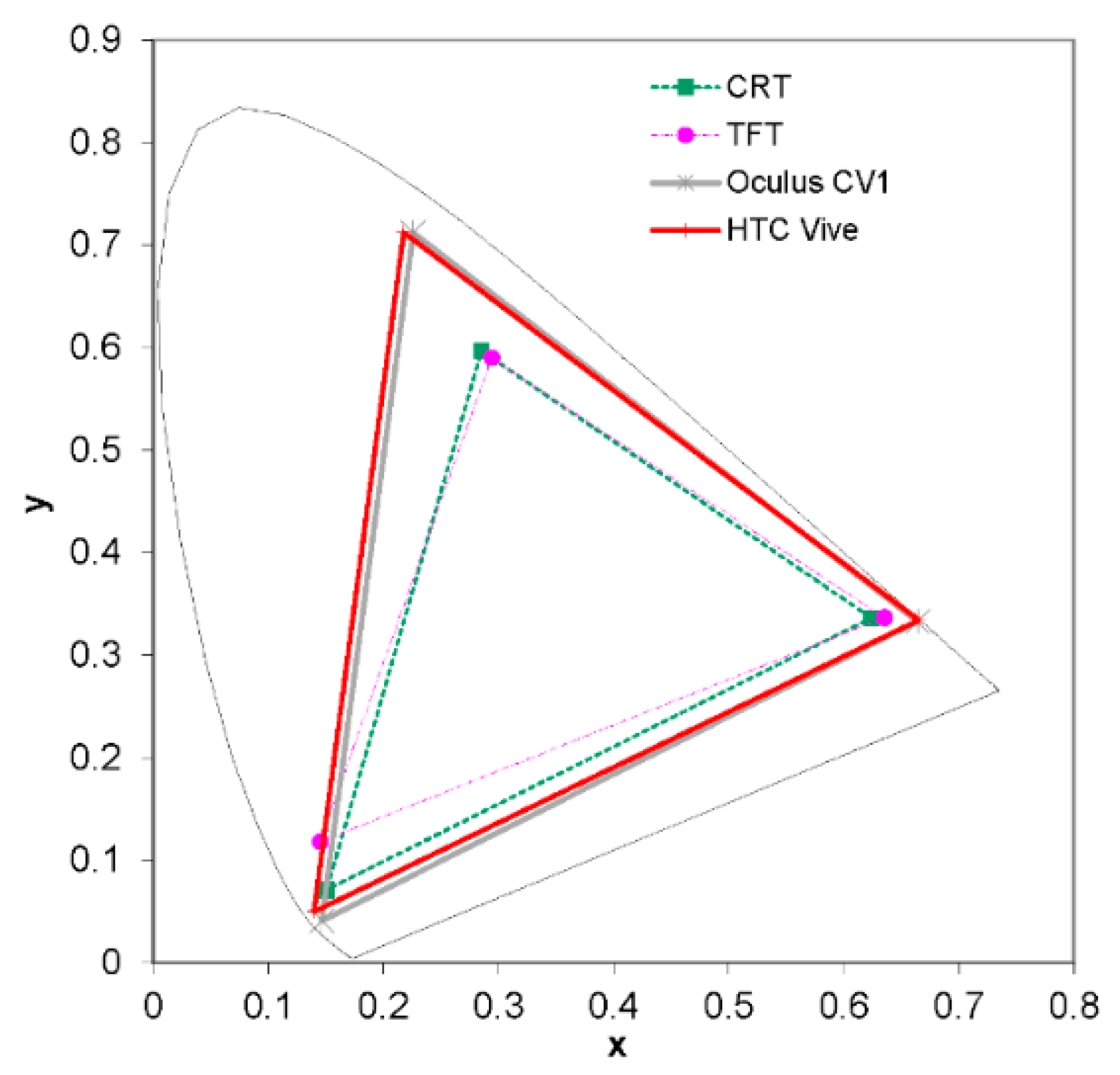

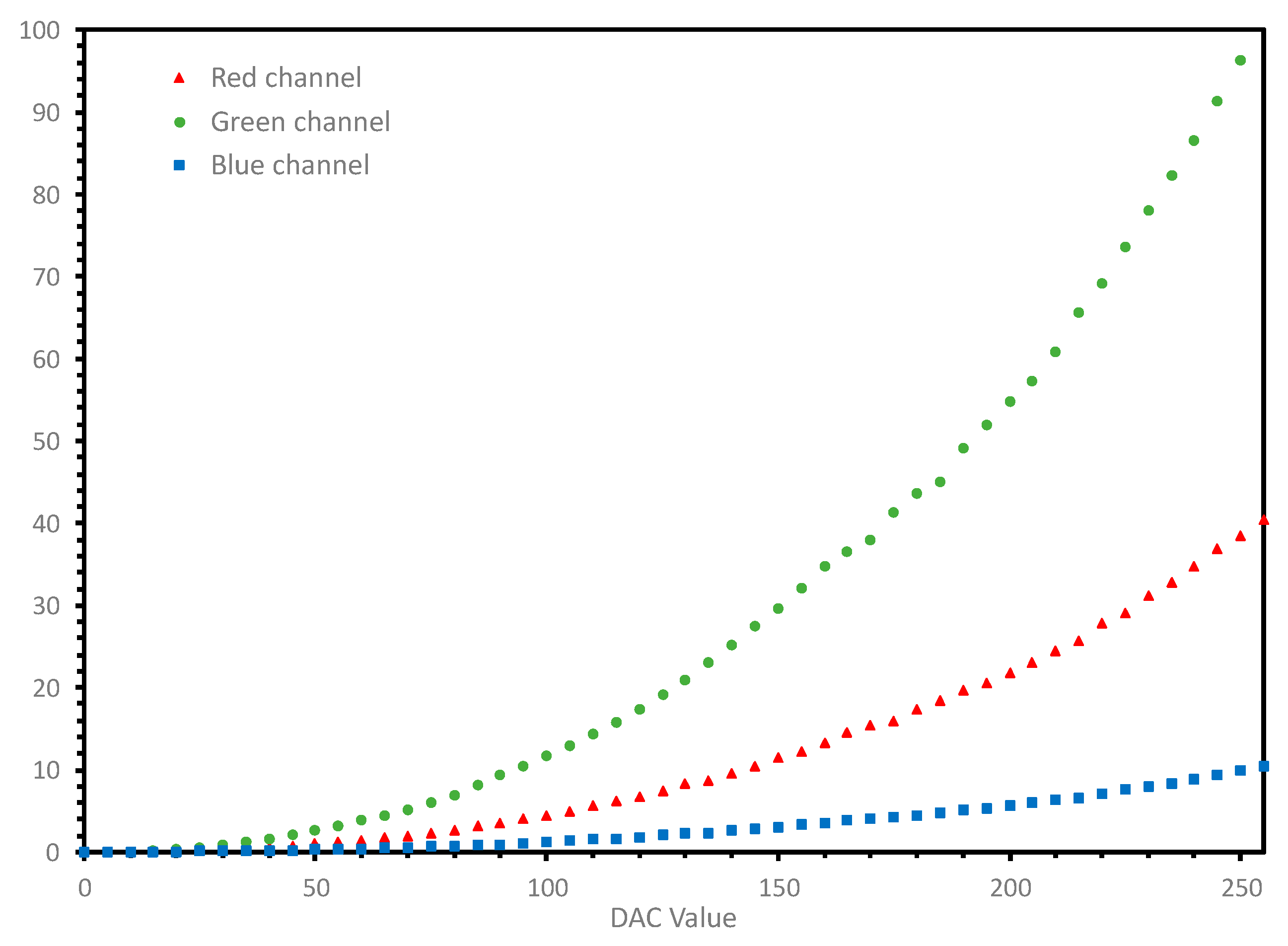

2.1. Chromatic Characterization of a VR Device

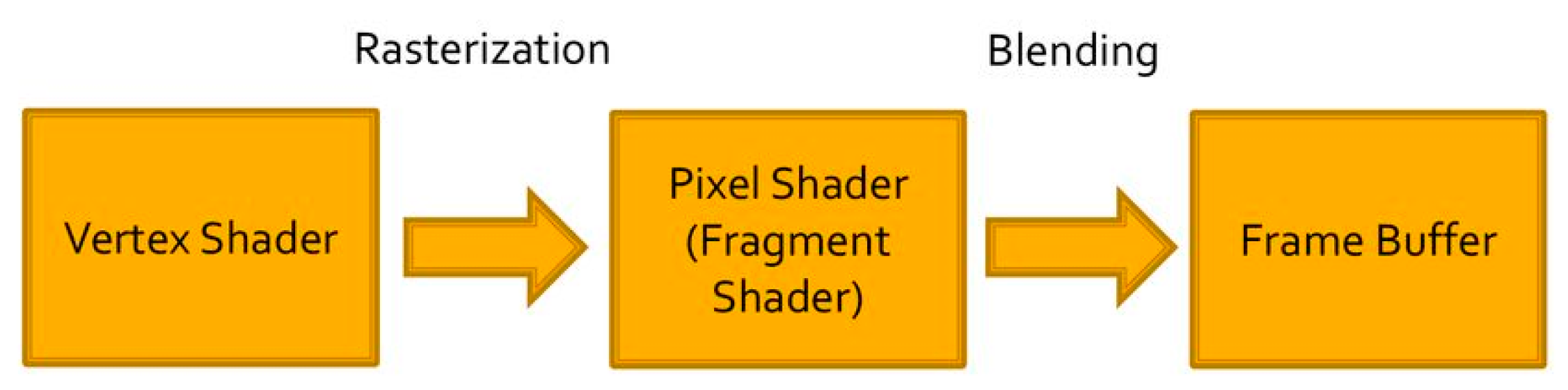

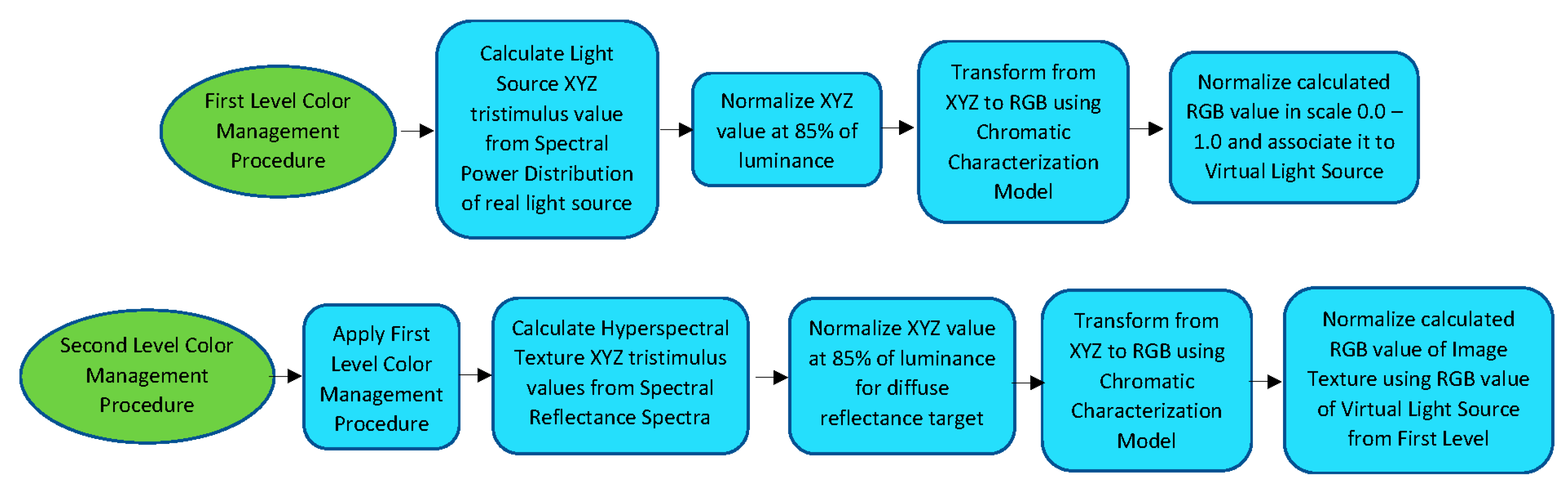

2.2. Implementation of a Color Management Procedure Adapted to VR Systems

- We focused on color reproduction, excluding glossy objects and disabling secondary reflections.

- We restricted the 3D software to real-time processing, disabling the Baked and Global Illumination options.

- We used Unity’s Standard shader and configured the player with its linear option and forward rendering enabled.

3. Results

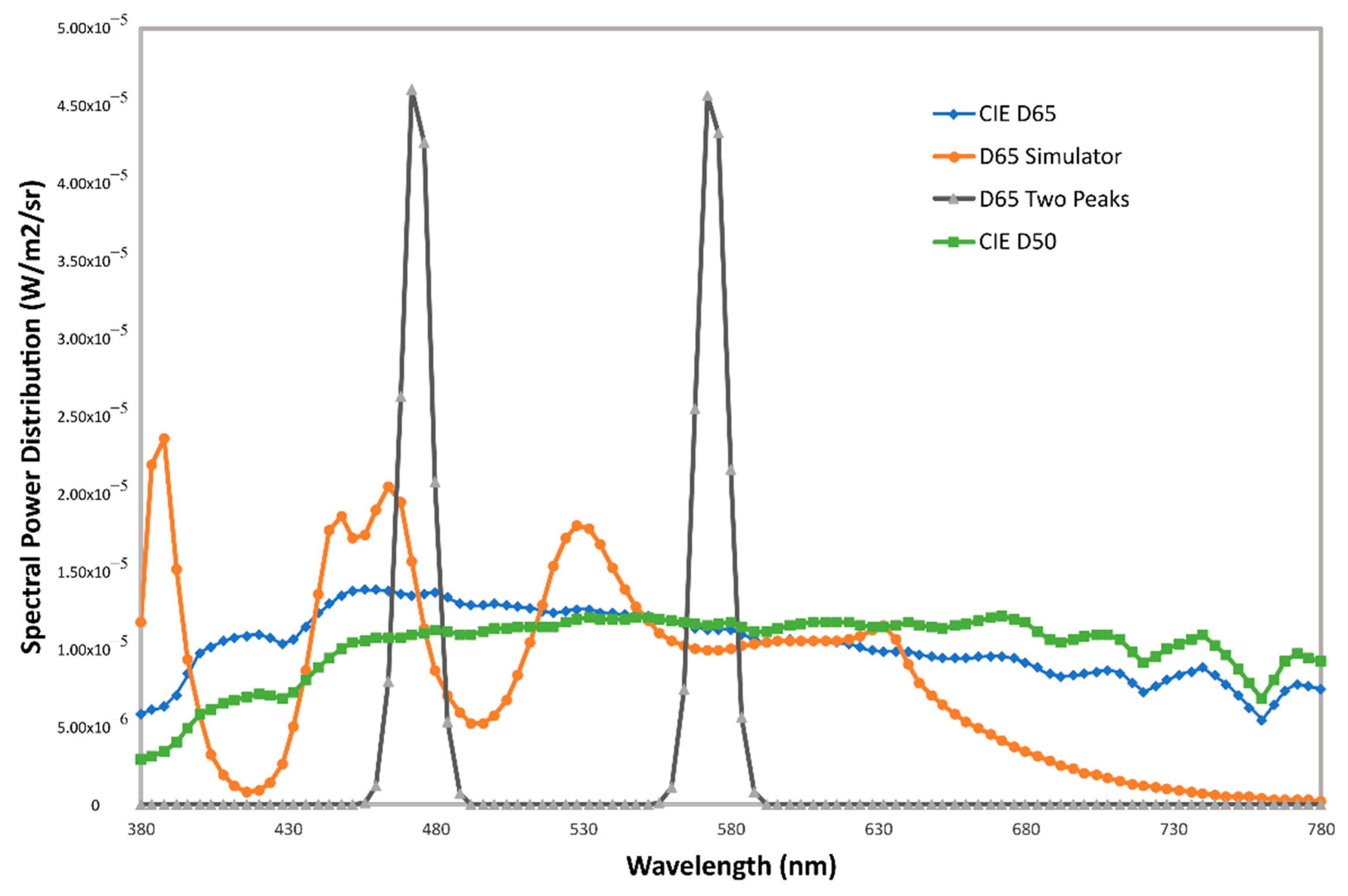

3.1. Design of the Virtual Lightbooth and Reflectance Diffuse Reference Pattern

3.2. Hyperspectral Textures

3.3. Validation of the Procedure of Color Management in VR

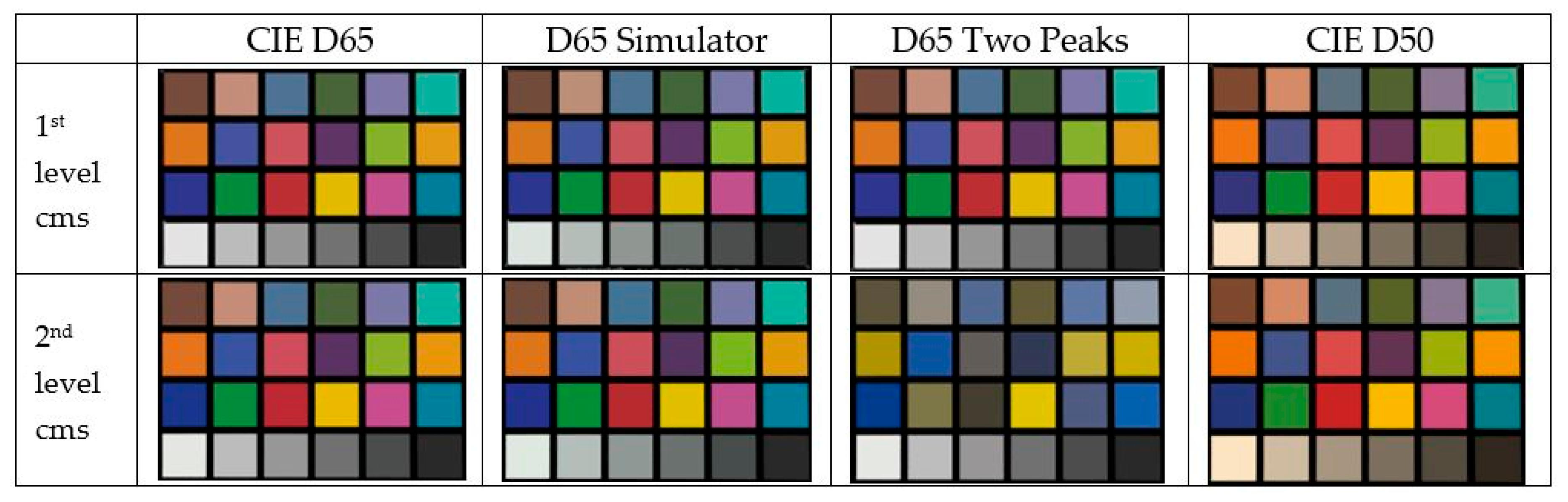

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- LeGrand, Y.; ElHage, S.G. Physiological Optics; Springer: Berlin/Heidelberg, Germany, 2013. [Google Scholar]

- Berns, R.S. Methods for characterizing CRT displays. Displays 1996, 16, 173–182. [Google Scholar] [CrossRef]

- Pardo, P.J.; Pérez, A.L.; Suero, M.I. Validity of TFT-LCD displays for color vision deficiency research and diagnosis. Displays 2004, 25, 159–163. [Google Scholar] [CrossRef]

- Penczek, J.; Boynton, P.A.; Meyer, F.M.; Heft, E.L.; Austin, R.L.; Lianza, T.A.; Leibfried, L.V.; Gacy, L.W. 65-1: Distinguished Paper: Photometric and Colorimetric Measurements of Near-Eye Displays. SID Symp. Dig. Tech. Pap. 2017, 48, 950–953. [Google Scholar] [CrossRef]

- Penczek, J.; Boynton, P.A.; Meyer, F.M.; Heft, E.L.; Austin, R.L.; Lianza, T.A.; Leibfried, L.V.; Gacy, L.W. Absolute radiometric and photometric measurements of near-eye displays. J. Soc. Inf. Disp. 2017, 25, 215–221. [Google Scholar] [CrossRef]

- Suero, M.I.; Pardo, P.J.; Pérez, A.L. Color characterization of handheld game console displays. Displays 2010, 31, 205–209. [Google Scholar] [CrossRef]

- Morovič, J. Color Gamut Mapping; Wiley: Hoboken, NJ, USA, 2008; Volume 10. [Google Scholar]

- Hassani, N.; Murdoch, M.J. Investigating color appearance in optical see-through augmented reality. Color Res. Appl. 2019, 44, 492–507. [Google Scholar] [CrossRef]

- ElBamby, M.S.; Perfecto, C.; Bennis, M.; Doppler, K. Toward Low-Latency and Ultra-Reliable Virtual Reality. IEEE Netw. 2018, 32, 78–84. [Google Scholar] [CrossRef] [Green Version]

- Shao, X.; Xu, W.; Lin, L.; Zhang, F. A multi-GPU accelerated virtual-reality interaction simulation framework. PLoS ONE 2019, 14, e0214852. [Google Scholar] [CrossRef] [PubMed]

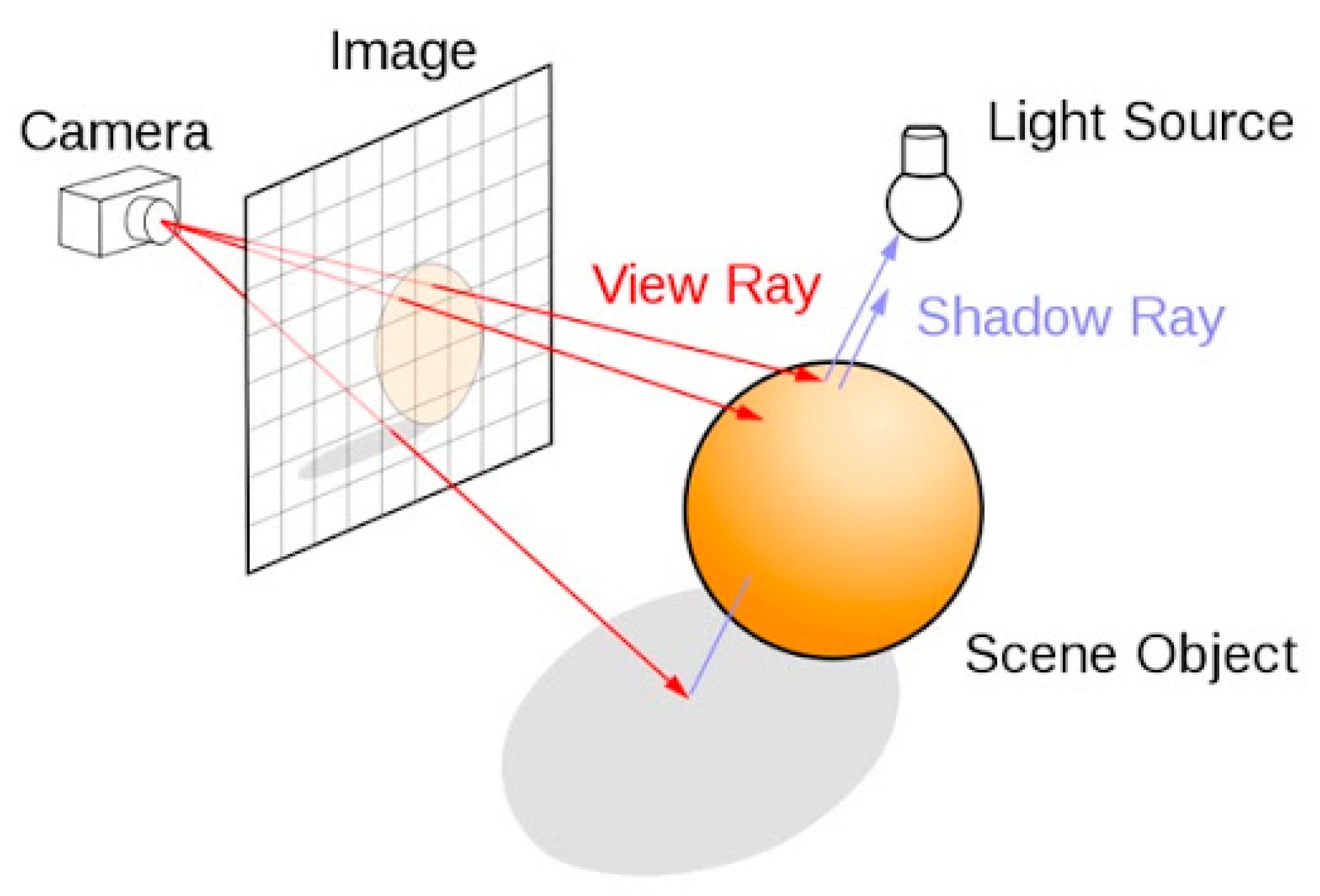

- Ray Tracing on Programmable Graphics Hardware. Available online: https://dl.acm.org/doi/10.1145/566654.566640 (accessed on 29 September 2020).

- Ray Trace diagram.svg. Available online: https://en.wikipedia.org/wiki/Ray_tracing_(graphics)#/media/File:Ray_trace_diagram.svg (accessed on 17 September 2020).

- Schnack, A.; Wright, M.; Holdershaw, J.L. Immersive virtual reality technology in a three-dimensional virtual simulated store: Investigating telepresence and usability. Food Res. Int. 2019, 117, 40–49. [Google Scholar] [CrossRef] [PubMed]

- Oculus Rifts. Available online: https://www.oculus.com/rift-s/?locale=es_ES (accessed on 16 July 2020).

- HTC Vive. Available online: https://www.vive.com/mx/product/ (accessed on 16 July 2020).

- Daydream. Available online: https://arvr.google.com/daydream/ (accessed on 16 July 2020).

- Manual de las Gafas VR ONE Plus. Available online: https://www.zeiss.com/content/dam/virtual-reality/english/downloads/pdf/manual/20170505_zeiss_vr-one-plus_manual_and_safety_upd_digital_es.pdf (accessed on 16 July 2020).

- Unreal Engine. Available online: https://www.unrealengine.com/en-US/ (accessed on 16 July 2020).

- Unity. Available online: https://unity.com/es (accessed on 16 July 2020).

- Pardo, P.J.; Suero, M.I.; Pérez, Á.L. Correlation between perception of color, shadows, and surface textures and the realism of a scene in virtual reality. J. Opt. Soc. Am. A 2018, 35, B130–B135. [Google Scholar] [CrossRef] [PubMed]

- Shen, H.; Zhang, Z.; Shang, Z. Fast global rendering in virtual reality via linear integral operators. Int. J. Innov. Comput. Inf. Control 2019, 15, 67–77. [Google Scholar]

- Tuliniemi, J. Physically Based Rendering for Embedded Systems. Master’s Thesis, University of Oulu, Oulu, Finland, 2018. [Google Scholar]

- Díaz-Barranca, F.; Pardo, P.J.; Suero, M.I.; Pérez, A.L. Is it possible to apply colour management technics in Virtual Reality devices? In Proceedings of the XIV Conferenza del Colore, Firenze, Italy, 11–12 September, 2018.

- CIE 2017 Colour Fidelity Index for Accurate Scientific Use. Available online: http://cie.co.at/publications/cie-2017-colour-fidelity-index-accurate-scientific-use (accessed on 29 September 2020).

- Anstis, S.; Cavanagh, P.; Maurer, D.; Lewis, T.; MacLeod, D.A.I.; Mather, G. Computer-generated screening test for colorblindness. Color Res. Appl. 1986, 11, S63–S66. [Google Scholar]

- Diaz-Barrancas, F.; Cwierz, H.C.; Pardo, P.J.; Perez, A.L.; Suero, M.I. Visual fidelity improvement in virtual reality through spectral textures applied to lighting simulations. Electron. Imaging 2020, 259, 1–4. [Google Scholar] [CrossRef]

- Díaz-Barrancas, F.; Cwierz, H.; Pardo, P.J.; Suero, M.I.; Perez, A.L. Hyperspectral textures for a better colour reproduction in virtual reality. In Proceedings of the XV Conferenza del Colore, Macerata, Italy, 5–7 September 2019. [Google Scholar]

- Diaz-Barrancas, F.; Cwierz, H.C.; Pardo, P.J.; Perez, A.L.; Suero, M.I. Improvement of Realism Sensation in Virtual Reality Scenes Applying Spectral and Colour Management Techniques. In Proceedings of the 12th International Conference on Computer Vision Systems (ICVS 2019), Riga, Latvia, 5–9 July 2019. [Google Scholar]

- Martínez-Domingo, M.Á.; Gómez-Robledo, L.; Valero, E.M.; Huertas, R.; Hernández-Andrés, J.; Ezpeleta, S.; Hita, E. Assessment of VINO filters for correcting red-green Color Vision Deficiency. Opt. Express 2019, 27, 17954–17967. [Google Scholar] [CrossRef] [PubMed]

- Wyszecki, G.; Stiles, W.S. Color Science; Wiley: New York, NY, USA, 1982. [Google Scholar]

| Channel | Chromaticity x | Chromaticity y | Luminance Y (Relative) | Gamma Value | Gamma R² |
|---|---|---|---|---|---|
| White | 0.299 ± 0.002 | 0.315 ± 0.002 | 100.0 | | |
| Red | 0.667 ± 0.004 | 0.332 ± 0.003 | 30.3 ± 1.1 | 2.43 | 0.999 |
| Green | 0.217 ± 0.007 | 0.710 ± 0.002 | 75.4 ± 2.4 | 2.38 | 0.999 |
| Blue | 0.139 ± 0.002 | 0.051 ± 0.002 | 7.8 ± 0.5 | 2.38 | 0.998 |
| Black | 0.311 ± 0.01 | 0.307 ± 0.004 | 0.4 ± 0.2 | | |
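The chromaticity, luminance, and gamma values in the table above are the inputs a simple display model needs. The following is a minimal sketch, assuming a plain per-channel gamma model with additive primaries and no black-level correction; this is a common textbook model and not necessarily the one used by the authors. The names `PRIMARIES`, `xyY_to_XYZ`, and `rgb_to_xyz` are hypothetical.

```python
# Per-channel values transcribed from the characterization table:
# (x, y, relative luminance Y, gamma). Order: red, green, blue.
PRIMARIES = [
    (0.667, 0.332, 30.3, 2.43),
    (0.217, 0.710, 75.4, 2.38),
    (0.139, 0.051, 7.8, 2.38),
]


def xyY_to_XYZ(x, y, Y):
    """Convert CIE xyY to XYZ tristimulus values."""
    return (x / y * Y, Y, (1.0 - x - y) / y * Y)


def rgb_to_xyz(rgb8):
    """Predict XYZ for an 8-bit RGB triplet.

    Assumption: each channel follows (d / 255) ** gamma and the three
    channels add linearly; the measured black level is ignored.
    """
    total = [0.0, 0.0, 0.0]
    for d, (x, y, Y, gamma) in zip(rgb8, PRIMARIES):
        weight = (d / 255.0) ** gamma  # linearized drive level in [0, 1]
        for i, component in enumerate(xyY_to_XYZ(x, y, Y)):
            total[i] += weight * component
    return tuple(total)


if __name__ == "__main__":
    print(rgb_to_xyz((255, 0, 0)))      # full red primary
    print(rgb_to_xyz((255, 255, 255)))  # predicted white
```

Under this additive assumption the predicted luminance at (255, 255, 255) is the sum of the three primaries, 113.5, whereas the table lists white at 100.0, so a sketch like this would need a normalization step or an additivity check before being used for color management.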

| ColorChecker Patch Number | Reference R | Reference G | Reference B | Measured R | Measured G | Measured B |
|---|---|---|---|---|---|---|
| 1 | 115 | 82 | 68 | 115 | 82 | 67 |
| 2 | 194 | 150 | 130 | 195 | 149 | 129 |
| 3 | 98 | 122 | 157 | 93 | 123 | 157 |
| 4 | 87 | 108 | 67 | 90 | 108 | 65 |
| 5 | 133 | 128 | 177 | 130 | 129 | 176 |
| 6 | 103 | 189 | 170 | 99 | 191 | 171 |
| 7 | 214 | 126 | 44 | 219 | 123 | 45 |
| 8 | 80 | 91 | 166 | 72 | 92 | 168 |
| 9 | 193 | 90 | 99 | 194 | 85 | 98 |
| 10 | 94 | 60 | 108 | 91 | 59 | 105 |
| 11 | 157 | 188 | 64 | 161 | 189 | 63 |
| 12 | 224 | 163 | 46 | 229 | 161 | 41 |
| 13 | 56 | 61 | 150 | 43 | 62 | 147 |
| 14 | 70 | 148 | 73 | 72 | 149 | 72 |
| 15 | 175 | 54 | 60 | 176 | 49 | 56 |
| 16 | 231 | 199 | 31 | 238 | 199 | 24 |
| 17 | 187 | 86 | 149 | 188 | 84 | 150 |
| 18 | 8 | 133 | 161 | 0 | 136 | 166 |
| 19 | 243 | 243 | 242 | 245 | 245 | 240 |
| 20 | 200 | 200 | 200 | 200 | 202 | 201 |
| 21 | 160 | 160 | 160 | 160 | 161 | 161 |
| 22 | 122 | 122 | 121 | 120 | 121 | 121 |
| 23 | 85 | 85 | 85 | 83 | 84 | 85 |
| 24 | 52 | 52 | 52 | 50 | 50 | 50 |
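One way to summarize the reference and measured ColorChecker values above is a per-channel absolute difference. The sketch below is an illustrative assumption about how such a summary could be computed, not the authors' procedure; `PATCHES`, `channel_errors`, and `summarize` are hypothetical names, and the data are transcribed from the table.

```python
import statistics

# (reference RGB, measured RGB) for ColorChecker patches 1-24.
PATCHES = [
    ((115, 82, 68), (115, 82, 67)),
    ((194, 150, 130), (195, 149, 129)),
    ((98, 122, 157), (93, 123, 157)),
    ((87, 108, 67), (90, 108, 65)),
    ((133, 128, 177), (130, 129, 176)),
    ((103, 189, 170), (99, 191, 171)),
    ((214, 126, 44), (219, 123, 45)),
    ((80, 91, 166), (72, 92, 168)),
    ((193, 90, 99), (194, 85, 98)),
    ((94, 60, 108), (91, 59, 105)),
    ((157, 188, 64), (161, 189, 63)),
    ((224, 163, 46), (229, 161, 41)),
    ((56, 61, 150), (43, 62, 147)),
    ((70, 148, 73), (72, 149, 72)),
    ((175, 54, 60), (176, 49, 56)),
    ((231, 199, 31), (238, 199, 24)),
    ((187, 86, 149), (188, 84, 150)),
    ((8, 133, 161), (0, 136, 166)),
    ((243, 243, 242), (245, 245, 240)),
    ((200, 200, 200), (200, 202, 201)),
    ((160, 160, 160), (160, 161, 161)),
    ((122, 122, 121), (120, 121, 121)),
    ((85, 85, 85), (83, 84, 85)),
    ((52, 52, 52), (50, 50, 50)),
]


def channel_errors(patches):
    """Return three lists (R, G, B) of absolute reference-measured differences."""
    return [[abs(ref[c] - meas[c]) for ref, meas in patches] for c in range(3)]


def summarize(patches):
    """Mean, population standard deviation, and maximum error per channel."""
    summary = {}
    for name, errs in zip("RGB", channel_errors(patches)):
        summary[name] = {
            "mean": statistics.mean(errs),
            "std": statistics.pstdev(errs),
            "max": max(errs),
        }
    return summary


if __name__ == "__main__":
    for channel, stats in summarize(PATCHES).items():
        print(channel, stats)
```

The red channel carries the largest single deviation in this data set (patch 13, reference 56 versus measured 43), which a summary of this kind makes easy to spot.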

| Light Source | CIE Rf | CCT (K) | CIE 1931 x,y | CIE 1931 XYZ | RGB |
|---|---|---|---|---|---|
| CIE D65 | 100 | 6503 | 0.3127, 0.3289 | 80.81, 85.00, 92.57 | 237, 237, 237 |
| D65 Simulator | 88.2 | 6568 | 0.3107, 0.3344 | 79.00, 85.00, 90.31 | 232, 239, 234 |
| D65 Two Peaks | 3.2 | 6501 | 0.3127, 0.3291 | 80.74, 85.00, 92.53 | 237, 237, 237 |
| CIE D50 | 100 | 5000 | 0.3458, 0.3585 | 81.98, 85.00, 70.11 | 255, 235, 205 |
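The chromaticity and tristimulus columns of the light source table are related by the standard CIE 1931 projection x = X / (X + Y + Z), y = Y / (X + Y + Z). As a short consistency check, the sketch below recomputes x,y from the tabulated XYZ values; `LIGHT_SOURCES` and `chromaticity` are hypothetical names introduced for illustration.

```python
# name: ((X, Y, Z), (tabulated x, tabulated y)), transcribed from the table.
LIGHT_SOURCES = {
    "CIE D65": ((80.81, 85.00, 92.57), (0.3127, 0.3289)),
    "D65 Simulator": ((79.00, 85.00, 90.31), (0.3107, 0.3344)),
    "D65 Two Peaks": ((80.74, 85.00, 92.53), (0.3127, 0.3291)),
    "CIE D50": ((81.98, 85.00, 70.11), (0.3458, 0.3585)),
}


def chromaticity(X, Y, Z):
    """Project XYZ tristimulus values onto the CIE 1931 xy plane."""
    total = X + Y + Z
    return X / total, Y / total


if __name__ == "__main__":
    for name, (xyz, xy_table) in LIGHT_SOURCES.items():
        x, y = chromaticity(*xyz)
        print(f"{name}: computed ({x:.4f}, {y:.4f}), tabulated {xy_table}")
```

For all four sources the recomputed coordinates agree with the tabulated ones to within a few units in the fourth decimal place.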

| Light Source | 1st Level CMS R | 1st Level CMS G | 1st Level CMS B | 2nd Level CMS R | 2nd Level CMS G | 2nd Level CMS B |
|---|---|---|---|---|---|---|
| CIE D65 | 3.1 ± 3 | 1.4 ± 1 | 2.0 ± 2 | 0.2 ± 0.4 | 0.5 ± 0.5 | 0.2 ± 0.4 |
| D65 Simulator | 2.6 ± 2 | 1.7 ± 1 | 3.3 ± 5 | 0.5 ± 0.5 | 0.2 ± 0.4 | 0.4 ± 0.6 |
| D65 Two Peaks | 30 ± 29 | 9.0 ± 8 | 6.5 ± 7 | 0.5 ± 0.5 | 0.4 ± 0.5 | 0.5 ± 0.5 |
| CIE D50 | 3.1 ± 3 | 1.7 ± 2 | 4.6 ± 7 | 0.1 ± 0.3 | 0.4 ± 0.5 | 1.2 ± 0.9 |

| Color Dot | Calculated x | Calculated y | Calculated Y | Measured x | Measured y | Measured Y | ΔE (DE2000) |
|---|---|---|---|---|---|---|---|
| P1 Green Dark | 0.3560 | 0.3854 | 24.6 | 0.3557 | 0.3850 | 25.4 | 1.00 |
| P2 Green Medium | 0.3602 | 0.3906 | 29.1 | 0.3601 | 0.3903 | 30.0 | 1.05 |
| P3 Green Light | 0.3734 | 0.3986 | 38.4 | 0.3731 | 0.3984 | 41.0 | 0.82 |
| P4 Ochre Dark | 0.3901 | 0.3656 | 27.1 | 0.3895 | 0.3655 | 28.1 | 0.70 |
| P5 Ochre Medium | 0.4022 | 0.3746 | 33.1 | 0.4014 | 0.3743 | 34.3 | 0.76 |
| P6 Ochre Light | 0.3945 | 0.3834 | 41.5 | 0.3941 | 0.3834 | 42.8 | 1.28 |
| P7 Purple Dark | 0.3713 | 0.3282 | 24.2 | 0.3709 | 0.3282 | 25.6 | 1.14 |
| P8 Purple Light | 0.3884 | 0.3576 | 36.1 | 0.3878 | 0.3573 | 38.0 | 0.72 |
| P9 Bluish-Green Dark | 0.3158 | 0.3759 | 23.7 | 0.3159 | 0.3763 | 22.7 | 0.64 |
| P10 Bluish-Green Light | 0.3182 | 0.3881 | 33.3 | 0.3182 | 0.3887 | 31.7 | 1.47 |

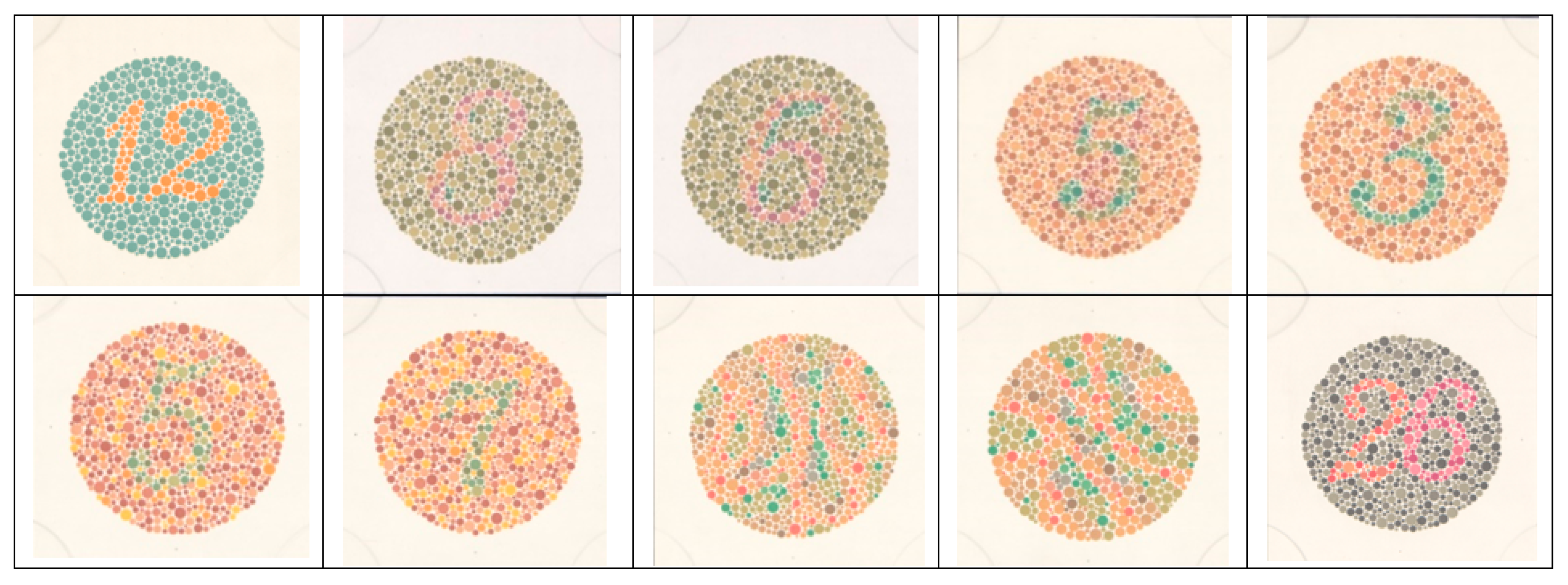

| Observer | Qualification | P1 | P2 | P3 | P4 | P5 | P6 | P7 | P8 | P9 | P10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ref1 | Normal | 12 | 8 | 6 | 5 | 3 | 7 | 3 | - | - | 26 |
| Ref2 | Protan | 12 | 3 | 5 | 2 | 5 | - | - | 5 | 8 | 6 |
| Ref3 | Deutan | 12 | 3 | 5 | 2 | 5 | - | - | 5 | 8 | 2 |
| Ob1 | Normal | 12 | 8 | 6 | 5 | 3 | 5 | 7 | - | 8 | 26 |
| Ob2 | Normal | 12 | 8 | 6 | 5 | 3 | 5 | 7 | - | - | 26 |
| Ob3 | Normal | 12 | 8 | 6 | 5 | 3 | 5 | 7 | - | - | 26 |
| Ob4 | Normal | 12 | 8 | 6 | 5 | 3 | 5 | 7 | - | - | 26 |
| Ob5 | Normal | 12 | 8 | 6 | 5 | 3 | 5 | 7 | - | - | 26 |
| Ob6 | Normal | 12 | 8 | 6 | 5 | 3 | 5 | 7 | - | - | 26 |
| Ob7 | Protan | 12 | 3 | 5 | 2 | - | - | - | 5 | 2 | 6 |
| Ob8 | Protan | 12 | 3 | 5 | 2 | 5 | - | - | 5 | 8 | 6 |
| Ob9 | Deutan | 12 | 3 | 5 | 2 | - | - | - | 5 | 8 | 2 |
| Ob10 | Protan | 12 | 3 | 5 | 2 | 5 | - | - | 5 | 8 | 6 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Díaz-Barrancas, F.; Cwierz, H.; Pardo, P.J.; Pérez, Á.L.; Suero, M.I. Spectral Color Management in Virtual Reality Scenes. Sensors 2020, 20, 5658. https://doi.org/10.3390/s20195658
