A Complementary Filter Design on SE(3) to Identify Micro-Motions during 3D Motion Tracking

Abstract

1. Introduction

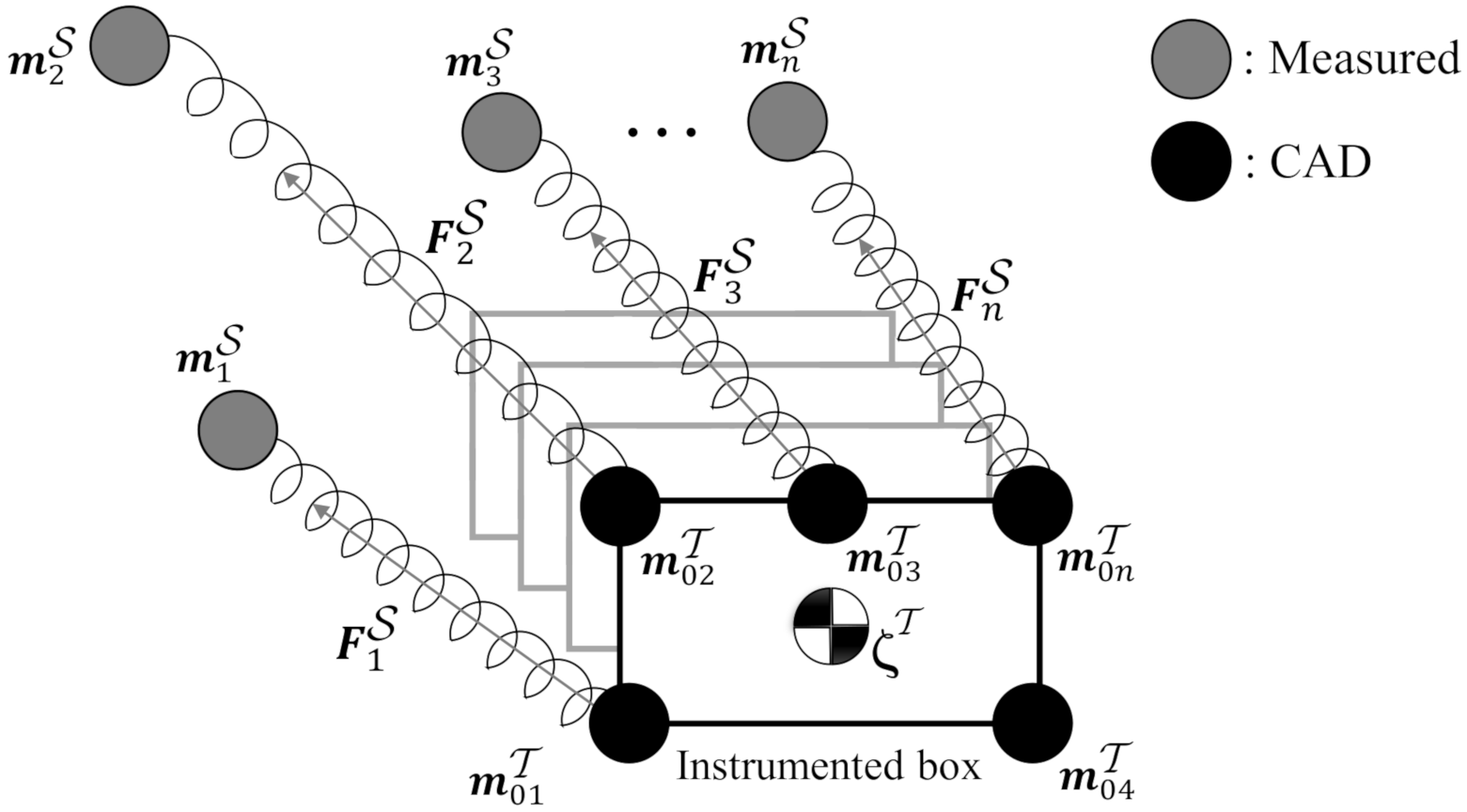

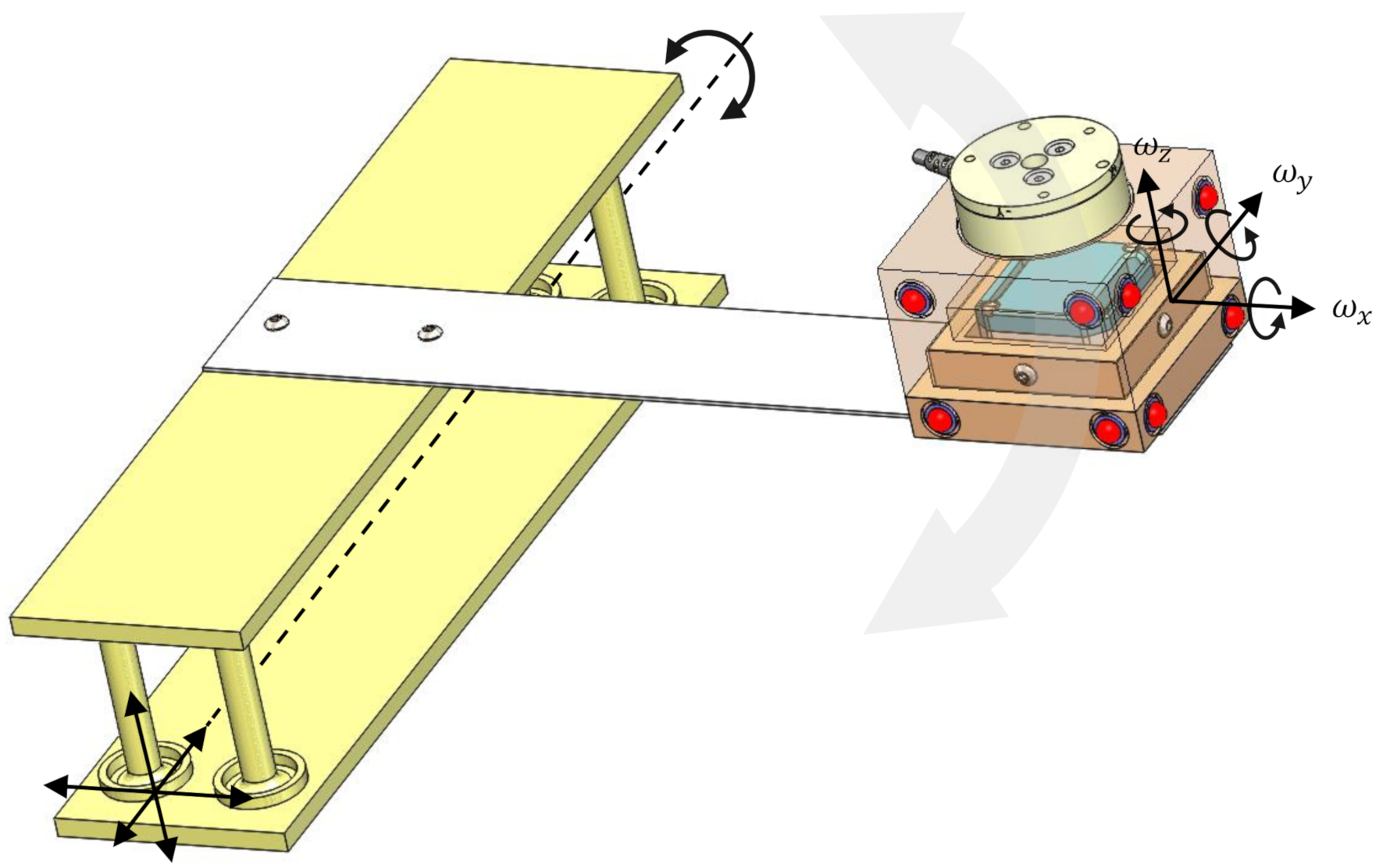

2. Multimodal Sensor Fusion for Motion Tracking

- task frame: located at the center of one of the side faces of the box.

- loadcell frame: attached to the center of the mounting plate of the loadcell.

- IMU frame: located at the center of the box.

- global frame: defined by the motion tracker via external markers mounted on the workbench.
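The relationship between these frames can be illustrated with homogeneous transforms. The following is a minimal sketch, assuming 4x4 rigid transforms in NumPy; the helper names (`make_transform`, `invert_transform`, `rot_z`) and all numeric poses are hypothetical and chosen only for illustration, not taken from the experimental setup.

```python
import numpy as np

def make_transform(R, p):
    """Build a 4x4 homogeneous transform from a 3x3 rotation R and a translation p."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = p
    return T

def invert_transform(T):
    """Invert a rigid transform using R^T instead of a general matrix inverse."""
    R, p = T[:3, :3], T[:3, 3]
    return make_transform(R.T, -R.T @ p)

def rot_z(angle):
    """Rotation about the z axis by `angle` radians."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Hypothetical poses (illustrative values only).
# IMU frame (box center) expressed in the global frame:
T_global_imu = make_transform(rot_z(np.pi / 2), np.array([1.0, 0.0, 0.5]))
# Task frame (center of a side face) expressed in the IMU frame:
T_imu_task = make_transform(np.eye(3), np.array([0.05, 0.0, 0.0]))

# Chaining the transforms gives the task frame expressed in the global frame.
T_global_task = T_global_imu @ T_imu_task

# Origin of the task frame, mapped into global coordinates.
origin_task = np.array([0.0, 0.0, 0.0, 1.0])
origin_global = T_global_task @ origin_task
```

Composing transforms by matrix multiplication keeps the bookkeeping of "which frame is expressed in which" explicit, which is convenient when several sensors report in different frames.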

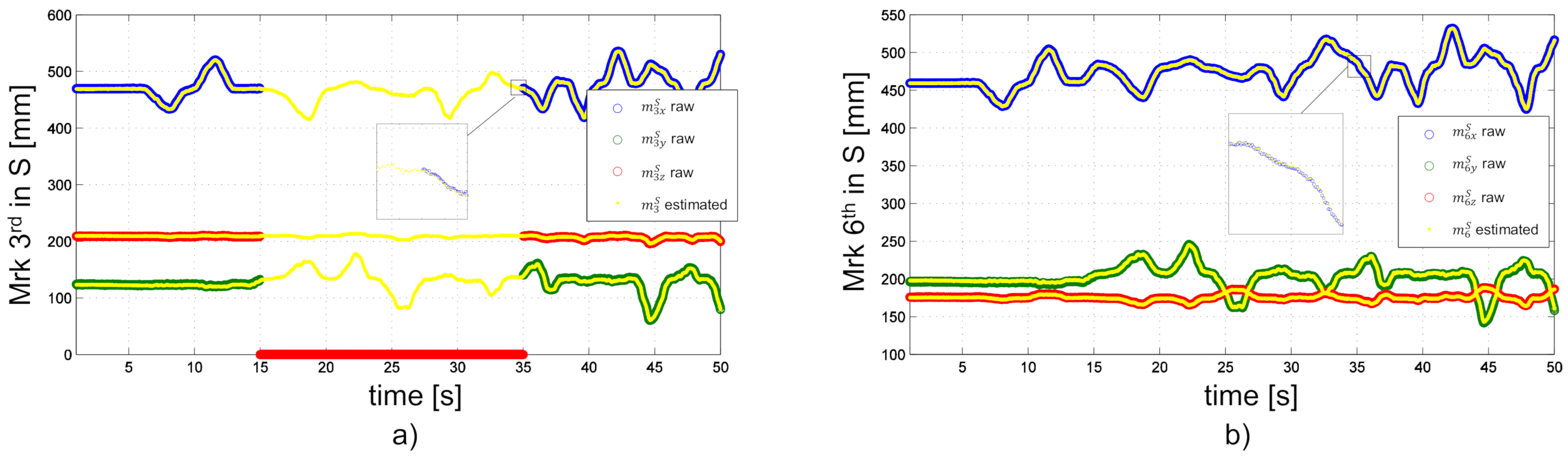

2.1. Damped Least Squares Algorithm for Filtering the Motion Tracker’s Signal

Algorithm 1: Damped least squares algorithm for filtering the motion tracker’s signal.
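For orientation, a generic damped least squares update can be sketched as follows. This is not the content of Algorithm 1, whose steps are not reproduced here; it only illustrates the standard damped least squares solve, `dx = (J^T J + lambda^2 I)^{-1} J^T e`. The function name, the matrix `J`, the residual `e`, and the damping value are all assumptions made for the example.

```python
import numpy as np

def damped_least_squares_step(J, e, damping):
    """Solve (J^T J + damping^2 I) dx = J^T e for the update dx.

    J       : (m, n) Jacobian or design matrix
    e       : (m,) residual vector
    damping : non-negative scalar; 0 gives the ordinary least squares step
    """
    n = J.shape[1]
    A = J.T @ J + (damping ** 2) * np.eye(n)
    return np.linalg.solve(A, J.T @ e)

# Hypothetical example: the second direction is weakly observed (small gain).
J = np.array([[1.0, 0.0],
              [0.0, 0.01]])
e = np.array([1.0, 0.01])

dx_plain = damped_least_squares_step(J, e, damping=0.0)
dx_damped = damped_least_squares_step(J, e, damping=0.1)
```

With zero damping the step reproduces the exact least squares solution. With damping, the component along the weakly observed direction is strongly attenuated, which is why the method is attractive when measurement noise would otherwise be amplified.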

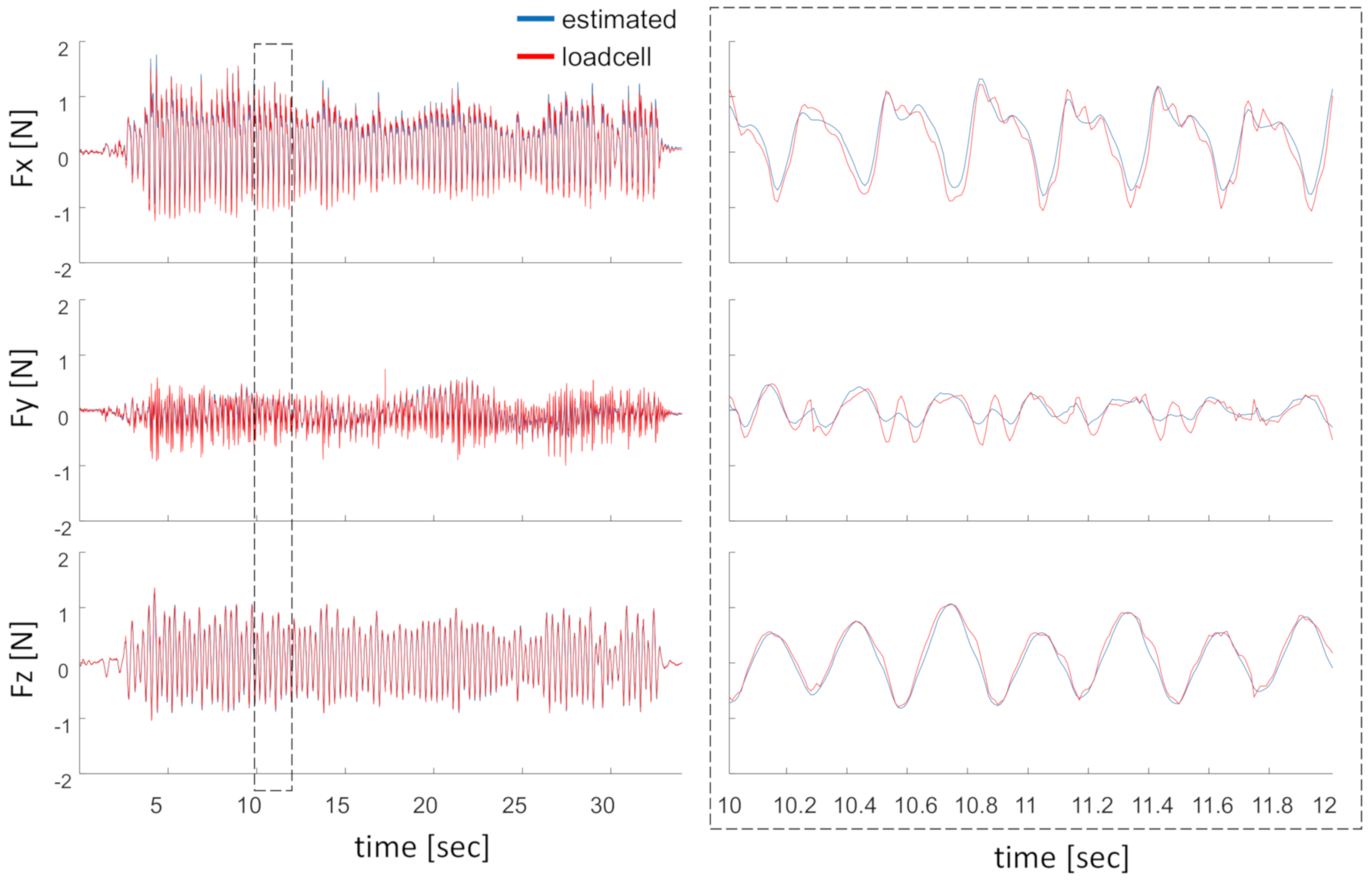

2.2. Experimental Verification of the Filtered Motion Capture Signal

3. Complementary Filter on SE(3)

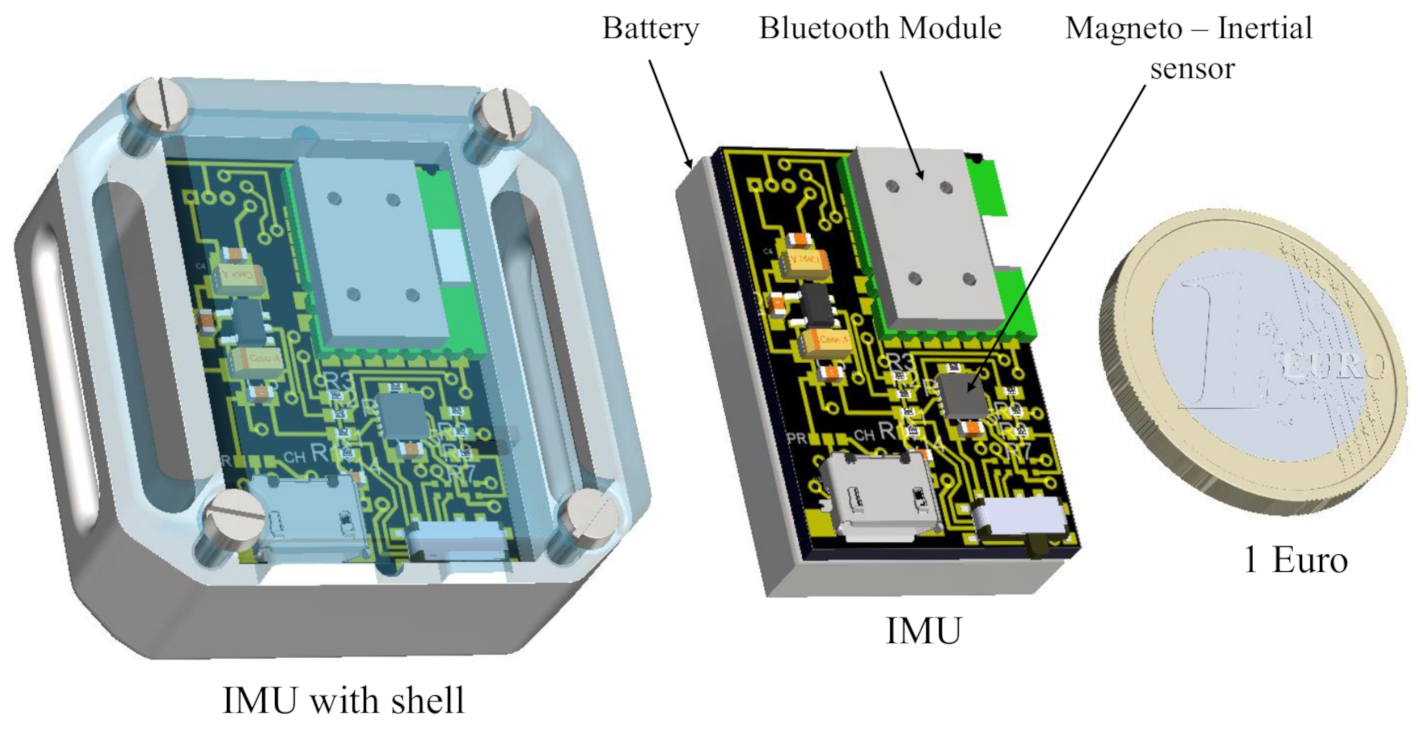

3.1. High-Frequency Motion Capture IMU Sensor Specifications

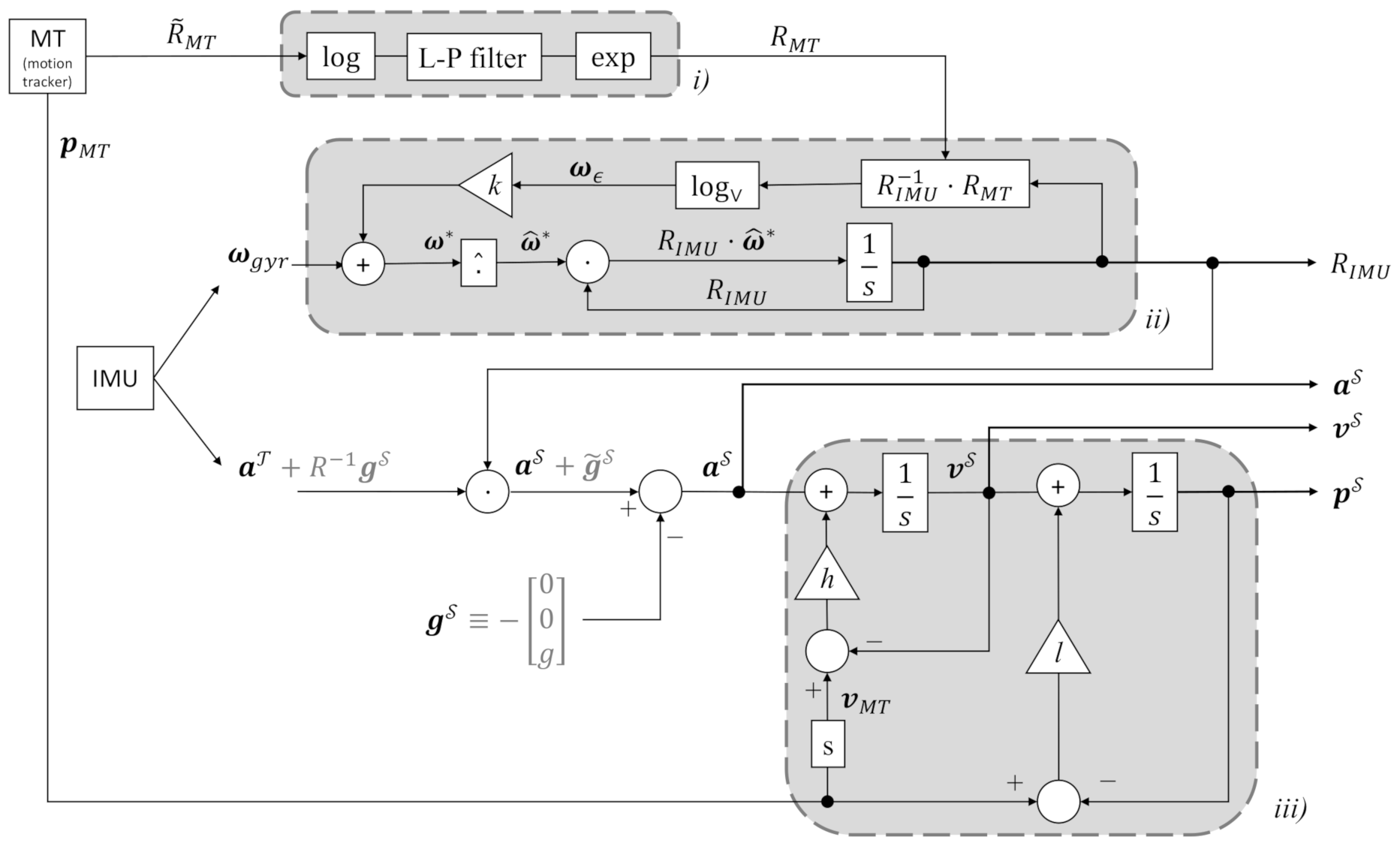

3.2. Complementary Filter

- Two rotation matrices denote the relative orientation of the instrumented box with respect to the global frame, as measured by the motion tracker and by the IMU, respectively.

- A vector represents the translation of the moving frame relative to the global frame.

- The angular velocity of the rigid object is measured by the IMU.

- The output of the accelerometers embedded in the IMU is expressed in moving-frame coordinates.

- The oscillation force is caused by the plate of the loadcell.
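The rotational part of such a fusion can be sketched as below. This is a minimal illustration in the style of a passive nonlinear complementary filter on SO(3), not the filter derived in this section: the gyroscope rate drives the estimate at high frequency, while the motion tracker orientation corrects slow drift. The function names, the gain `k_p`, the time step, and the test rotation are assumptions made for the example.

```python
import numpy as np

def skew(v):
    """Map a 3-vector to its skew-symmetric matrix."""
    x, y, z = v
    return np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])

def vex(S):
    """Inverse of skew: extract the 3-vector from a skew-symmetric matrix."""
    return np.array([S[2, 1], S[0, 2], S[1, 0]])

def exp_so3(w):
    """Rodrigues' formula: rotation matrix for the rotation vector w."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3) + skew(w)  # first-order approximation near zero
    K = skew(w / theta)
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)

def complementary_step(R_hat, R_meas, gyro, dt, k_p):
    """One filter update: integrate the gyro rate plus a correction toward R_meas."""
    R_err = R_hat.T @ R_meas                   # error seen from the estimate
    omega_corr = vex(0.5 * (R_err - R_err.T))  # antisymmetric part of the error
    return R_hat @ exp_so3((gyro + k_p * omega_corr) * dt)

# Hypothetical scenario: the box is at rest, rotated 0.5 rad about z.
R_true = exp_so3(np.array([0.0, 0.0, 0.5]))
R_hat = np.eye(3)
for _ in range(2000):
    R_hat = complementary_step(R_hat, R_true, gyro=np.zeros(3), dt=0.01, k_p=2.0)
```

Updating through the exponential map keeps the estimate on the rotation group at every step, so no re-orthogonalization is needed in this sketch. The gain `k_p` sets the crossover between trusting the gyroscope and trusting the tracker.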

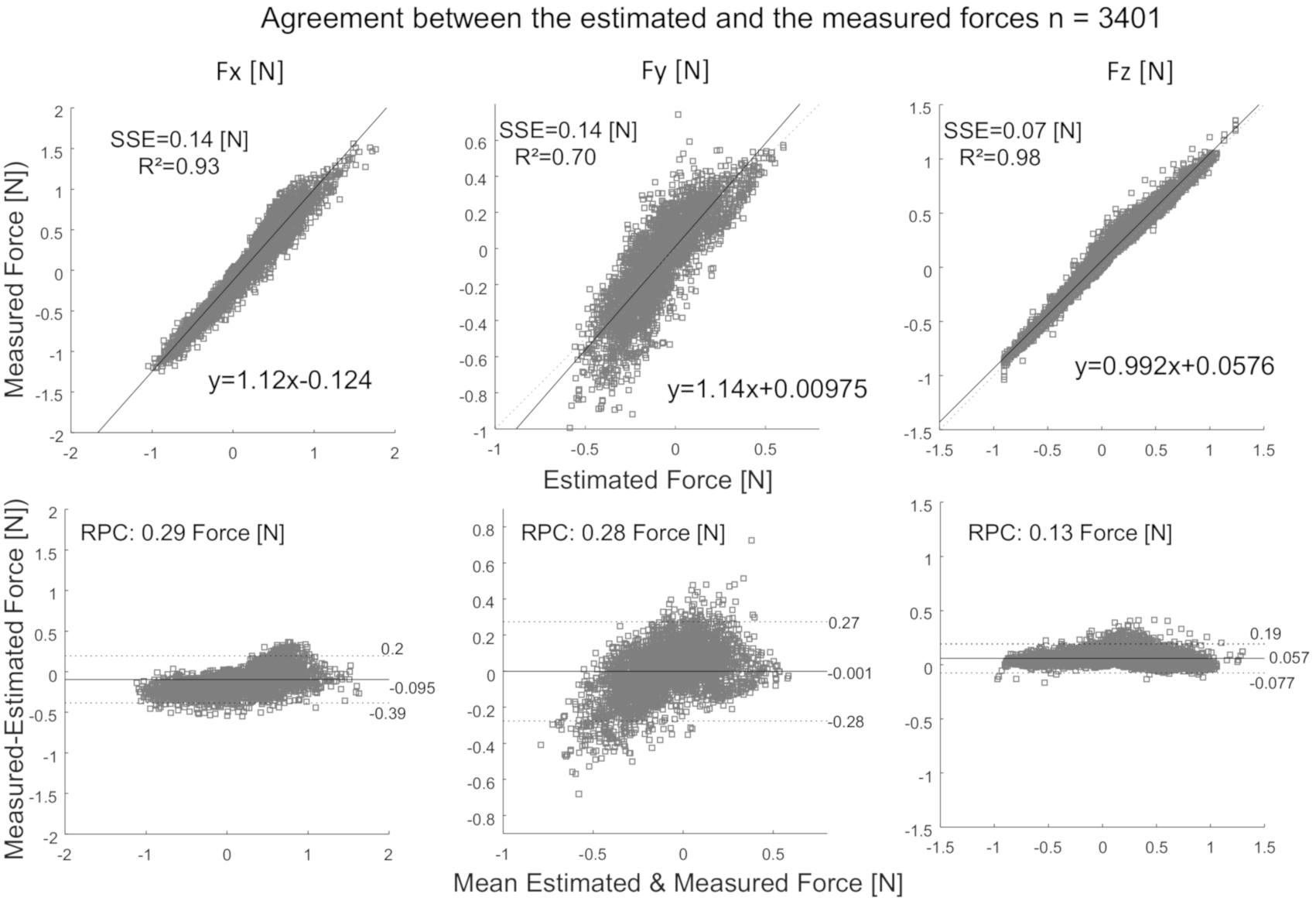

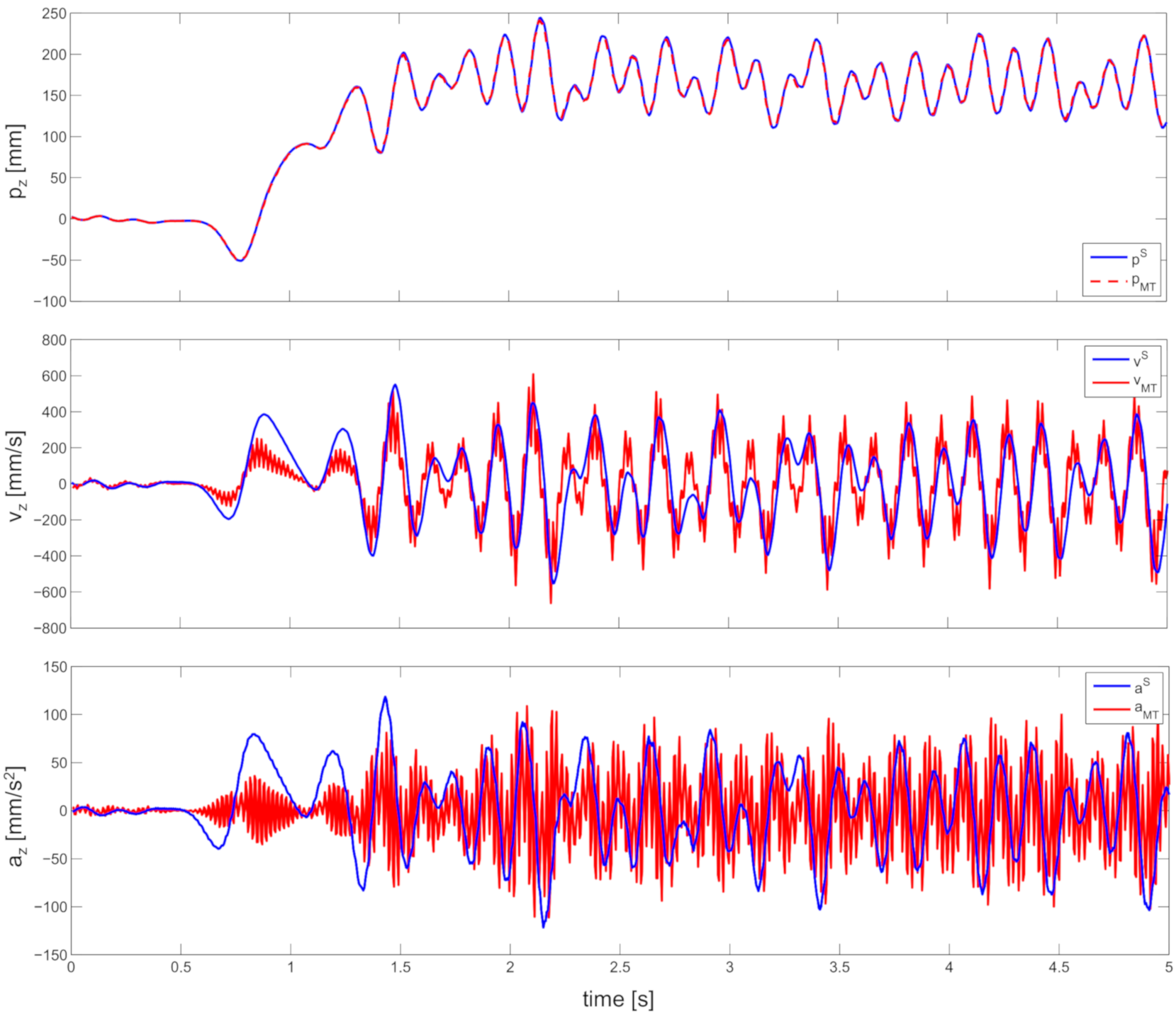

4. Experimental Verification

Algorithm 2: Complementary filter algorithm on SE(3).
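The translational part of a complementary filter can be sketched in a similar way. The example below is a generic second-order linear complementary filter, not the steps of Algorithm 2: accelerometer data are integrated for high-frequency content and the tracker position corrects the low-frequency drift. The gains `k1` and `k2`, the gravity convention (z axis up, accelerometer reporting specific force in the moving frame), and all numeric values are assumptions made for illustration.

```python
import numpy as np

GRAVITY = np.array([0.0, 0.0, -9.81])  # assumed global gravity vector, z up

def position_filter_step(p_hat, v_hat, p_meas, accel_body, R, dt, k1, k2):
    """One explicit-Euler update of a position/velocity complementary filter.

    p_hat, v_hat : current position and velocity estimates (global frame)
    p_meas       : position from the motion tracker (global frame)
    accel_body   : accelerometer output (specific force) in the moving frame
    R            : rotation of the moving frame with respect to the global frame
    """
    accel_global = R @ accel_body + GRAVITY  # remove gravity from specific force
    innovation = p_meas - p_hat              # low-frequency correction term
    p_next = p_hat + dt * (v_hat + k1 * innovation)
    v_next = v_hat + dt * (accel_global + k2 * innovation)
    return p_next, v_next

# Hypothetical scenario: the box is at rest at a fixed position.
p_true = np.array([1.0, 2.0, 3.0])
accel_at_rest = np.array([0.0, 0.0, 9.81])  # accelerometer reading when static
p_hat, v_hat = np.zeros(3), np.zeros(3)
for _ in range(2000):
    p_hat, v_hat = position_filter_step(
        p_hat, v_hat, p_true, accel_at_rest, np.eye(3), dt=0.01, k1=4.0, k2=4.0
    )
```

With `k1 = 2 * zeta * omega` and `k2 = omega ** 2`, the error dynamics behave like a damped second-order system, so the values used here correspond to a critically damped correction with a natural frequency of 2 rad/s.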

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Phan, G.-H.; Hansen, C.; Tommasino, P.; Hussain, A.; Formica, D.; Campolo, D. A Complementary Filter Design on SE(3) to Identify Micro-Motions during 3D Motion Tracking. Sensors 2020, 20, 5864. https://doi.org/10.3390/s20205864
