Localisation of Unmanned Underwater Vehicles (UUVs) in Complex and Confined Environments: A Review

Abstract

1. Introduction

2. Problem Statement

2.1. Terminology and Reference Frames

- Absolute Localisation: in a complex environment, true global positioning (for example, a position estimate relative to the Earth-centred, Earth-fixed reference frame) is often not possible and usually not relevant. Due to the high ferromagnetic content of structures in complex environments, magnetic navigation is also often not feasible. The most appropriate alternative is to reference a world-fixed frame, which is likely to be defined by the boundaries of the environment or by the known positions of external beacons, markers or features of the fixed environment, as shown in Figure 1. This type of localisation is known as Absolute Localisation.

- Relative Localisation: this form of localisation estimates changes in the robot’s body frame relative to an arbitrary point in the environment. Relative localisation methods primarily generate velocities rather than position fixes. Dead reckoning may then be used to calculate the location relative to a position fix. If exteroceptive sensors such as cameras are used, localisation could be relative to several features in the environment.
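The dead-reckoning step described above can be illustrated with a minimal planar sketch. It assumes a simplified 2D model in which a relative method supplies body-frame surge and sway velocities plus a yaw rate at a fixed time step; the names `Pose2D` and `dead_reckon` are hypothetical and are not taken from any system reviewed here.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float    # metres, world-fixed frame
    y: float    # metres, world-fixed frame
    yaw: float  # radians, heading of the body frame in the world-fixed frame


def dead_reckon(fix: Pose2D, samples, dt: float) -> Pose2D:
    """Integrate body-frame velocities forward from a known position fix.

    samples: iterable of (surge, sway, yaw_rate) in m/s, m/s and rad/s.
    Simple Euler integration; an illustrative assumption, not a full 6-DOF model.
    """
    x, y, yaw = fix.x, fix.y, fix.yaw
    for surge, sway, yaw_rate in samples:
        # Rotate the body-frame velocity into the world-fixed frame.
        vx = surge * math.cos(yaw) - sway * math.sin(yaw)
        vy = surge * math.sin(yaw) + sway * math.cos(yaw)
        x += vx * dt
        y += vy * dt
        yaw += yaw_rate * dt
    return Pose2D(x, y, yaw)


# Ten seconds of straight motion at 0.5 m/s, starting from an absolute fix.
fix = Pose2D(x=2.0, y=1.0, yaw=0.0)
true_pose = dead_reckon(fix, [(0.5, 0.0, 0.0)] * 10, dt=1.0)

# The same run with a hypothetical 0.01 m/s velocity bias: the error grows with time.
biased_pose = dead_reckon(fix, [(0.51, 0.0, 0.0)] * 10, dt=1.0)
drift = biased_pose.x - true_pose.x
```

Because the position is obtained by integration, any bias in the measured velocities accumulates, which is why a relative method is usually paired with an occasional absolute fix.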

2.2. Application Areas and Environmental Features

2.2.1. Application Areas

- (A) Modern Nuclear Storage Ponds: there are over 1000 wet nuclear storage facilities globally which require continual monitoring. These are static, structured environments with clear water and good illumination levels to facilitate visual inspections [14]. These facilities are usually indoors and range from a few meters in each dimension up to 50 m × 25 m × 10 m.

- (B) Legacy Nuclear Storage Ponds: these are ponds that were constructed in the 1950s and 1960s and which have operated well beyond their original lifespan. There are only a few of these globally; however, they present significant decommissioning challenges [15]. Many of these facilities are outdoors and open to the elements, and can be up to 50 m × 25 m × 10 m in size.

- (C) Legacy Nuclear Storage Silos: as well as in large ponds, nuclear waste was also stored in silos in the 1950s and 1960s, such as those found on the Sellafield site in the UK [16]. These are around 5 m in diameter and 16 m deep and contain nuclear material stored in water.

- (D) Nuclear Reactor Pressure Vessels: Reactor Pressure Vessels (RPVs) are a critical component of nuclear reactors and require periodic inspection to ensure there are no structural defects [17,18]. In 2011, the incident at the Fukushima Daiichi Nuclear Power Plant, Japan, led to the fuel rods melting through the RPV into the flooded pedestal below. This led to the formation of a highly complex and radioactive aquatic environment with very restricted access, which requires investigation [19].

- (E) Offshore Asset Decommissioning: there are a significant number of offshore assets globally, primarily associated with energy generation, either Oil & Gas (O&G) or wind. Over the next 30 years, many of these will need to be decommissioned [20,21]. The decommissioning process is likely to generate a range of complex environments in which UUVs will have to operate.

- (F) Ship Hulls: the inspection of ship hulls is very important in the maritime industry, as structural defects can lead to significant reductions in revenue. Traditionally, inspections have been conducted in dry-docks or by divers, which is both expensive and dangerous [22]. UUVs have recently been developed to undertake inspections of both active ships [7] and shipwrecks [23].

- (G) Liquid Storage Tanks: liquid storage tanks are widely used all over the world, and periodic inspection is required to ensure that there are no structural defects which could lead to catastrophic failures [9]. If the tanks are used to store water, inspections are also required to ensure the water quality is kept high [24,25]. These inspections can be undertaken when the tank is empty (dry) or full (wet). Wet inspections are lower cost and can be conducted by UUVs; however, they are often limited to manual inspections/interactions due to a lack of localisation technologies. UUVs have also been used to inspect water ballast tanks on ships [26,27].

- (H) Marinas, Harbours and Boatyards: marinas, harbours, and boatyards are vital areas that allow for the use of yachts and small boats. Periodic inspections are required to ensure there are no structural defects and, more recently, for security purposes [28]. Often, these inspections are conducted by divers, which is very hazardous [29]. UUVs have started to be developed to replace divers for these inspections [30].

- (I) Tunnels, Sewers, and Flooded Mines: there are thousands of kilometres of flooded mine shafts, tunnels, waterways and sewers globally which require inspection to ensure their continued safe operation [8,31,32]. Many of these areas have never been inspected, and UUVs offer a safe option to do this. The environments may be natural formations which have been re-purposed, or man-made constructions.

2.2.2. Environmental Characteristics

- (i) Scale: these are the characteristic dimensions of the environment which needs to be explored. The size of the environment will have a direct impact on the size of the UUV that can be deployed and the accuracy the localisation system has to provide. Dimensions are given in meters as either [width × length × depth] or [diameter × depth].

- (ii) Obstacles: the majority of the applications can be considered as vessels or facilities which contain water. They will be bounded by a floor, walls, and often a ceiling. Obstacles are defined as objects that are not part of this bounding infrastructure. If they are free-standing, they will likely be placed on the floor. Alternatively, they may be a significant protrusion from one of the surfaces.

- (iii) Structure: if there are obstacles in the environment, they can be defined as either structured or unstructured. Structured means that they have been placed in the environment in an ordered manner, such as containers that have been stacked. Unstructured means that there is no order to their placement. An environment can have unstructured obstacles even if they have been purposely placed and there is clear knowledge of where they are.

- (iv) Obstacle Type: if there are obstacles, they can also be classified as static or dynamic. Static obstacles are fixed and will not move for the duration of a mission. Dynamic obstacles will move during the mission.

- (v) Access: two methods of access will be considered: surface deployment and restricted access. Surface deployment is where there is no ceiling to the environment and the UUV can be deployed directly into the water from the edge. Restricted access is where there is a ceiling and the UUV needs to be deployed through a hatch or similar entry port.

- (vi) Additional Infrastructure: some environments will allow for additional infrastructure to be placed in them. Quite often, these are the more open environments where, for example, beacons could be installed around the edges. Other, more closed environments with restricted access do not allow this.

- (vii) Line-of-sight (LoS): if an environment has obstacles, their disposition may inhibit LoS from the UUV to various points. For this analysis, LoS to the surface and to the edges of the environment will be considered.

- (viii) Turbidity: turbidity is a measure of water clarity and is affected by the presence of suspended particulates. Light is scattered by the particles, so the more there are, the more light is scattered and the higher the turbidity. If there are no particles, then the turbidity is very low and the water will be clear.

- (ix) Ambient Illumination Levels: some environments will have external light sources that provide a certain level of ambient lighting (i.e., not provided by lights mounted on the UUV). Other environments will not, and the only light will be generated from on board the UUV.

- (x) Salient Features: certain localisation technologies require the identification of features in the environment. Detectable features are known as salient features. A long, smooth, uniform surface will provide no salient features; however, if defined objects such as QR codes were placed on the wall, these could be detected.

- (xi) Variance of Environment: whilst objects have been defined as static or dynamic for the duration of a single mission, the variance of the environment over a number of missions also needs to be considered. Some environments will not change over an extended duration (years), whereas others will change over hours or days.
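One way to make these characteristics comparable across application areas is to record them in a small data structure. The sketch below is illustrative only: the class and field names (`Scale`, `Environment`, `smallest_dimension`) are assumptions introduced here, and characteristics the text does not state for an area are left as `None` rather than guessed. The two examples use only the dimensions and properties quoted in the application areas above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Scale:
    """Characteristic dimensions in metres.

    Either a box [width x length x depth] or a cylinder [diameter x depth].
    """
    depth: float
    width: Optional[float] = None
    length: Optional[float] = None
    diameter: Optional[float] = None

    def smallest_dimension(self) -> float:
        # The tightest dimension bounds the size of UUV that can be deployed.
        dims = (self.width, self.length, self.diameter, self.depth)
        return min(d for d in dims if d is not None)


@dataclass(frozen=True)
class Environment:
    name: str
    scale: Scale
    structured: Optional[bool] = None                 # (iii) Structure
    dynamic_obstacles: Optional[bool] = None          # (iv) Obstacle Type
    restricted_access: Optional[bool] = None          # (v) Access
    additional_infrastructure: Optional[bool] = None  # (vi)
    turbid: Optional[bool] = None                     # (viii) Turbidity
    ambient_illumination: Optional[bool] = None       # (ix)
    salient_features: Optional[bool] = None           # (x)


# Values taken from the description of modern nuclear storage ponds.
modern_pond = Environment(
    name="Modern Nuclear Storage Pond",
    scale=Scale(width=50.0, length=25.0, depth=10.0),
    structured=True,
    dynamic_obstacles=False,
    turbid=False,
    ambient_illumination=True,
)

# Only the dimensions are stated for the legacy silos, so the rest stays None.
legacy_silo = Environment(
    name="Legacy Nuclear Storage Silo",
    scale=Scale(diameter=5.0, depth=16.0),
)
```

Keeping unknown characteristics as `None` makes gaps in the survey of an environment explicit instead of silently defaulting them.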

2.2.3. Analysis

2.3. Missions

- (0) No Autonomy: the UUV is entirely tele-operated by a human.

- (1) Robot Assistance: the UUV provides some automated functionality, for example staying at a depth set by the operator or preventing the operator from manoeuvring into obstacles. The operator is still in full control of the UUV.

- (2) Task Autonomy: the UUV is able to execute motions under the guidance of the operator. For example, waypoints could be set to which the UUV will travel with no further input from the operator.

- (3) Conditional Autonomy: the UUV generates task strategies, but requires a human to select which one to undertake. For example, when exploring an environment, the UUV may identify several different routes to take, with the human selecting the most appropriate one.

- (4) High Autonomy: the UUV can plan and execute missions based on a set of boundary conditions specified by the operator. The operator is not required to select which strategy the UUV should follow, but remains present to oversee the task execution.

- (5) Full Autonomy: the UUV requires no human input at all. It is deployed into the environment and left with no operator oversight.
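The six levels above form an ordered scale, so they map naturally onto an integer enumeration. The following is a minimal sketch; the names `LevelOfAutonomy`, `operator_in_full_control` and `operator_oversight` are hypothetical, and the two helper predicates simply restate what the level descriptions say about the operator's role.

```python
from enum import IntEnum


class LevelOfAutonomy(IntEnum):
    NO_AUTONOMY = 0
    ROBOT_ASSISTANCE = 1
    TASK_AUTONOMY = 2
    CONDITIONAL_AUTONOMY = 3
    HIGH_AUTONOMY = 4
    FULL_AUTONOMY = 5


def operator_in_full_control(level: LevelOfAutonomy) -> bool:
    # Levels 0 and 1: the UUV is tele-operated, possibly with assistance.
    return level <= LevelOfAutonomy.ROBOT_ASSISTANCE


def operator_oversight(level: LevelOfAutonomy) -> bool:
    # Every level except Full Autonomy keeps a human overseeing the mission.
    return level < LevelOfAutonomy.FULL_AUTONOMY


depth_hold = LevelOfAutonomy.ROBOT_ASSISTANCE   # e.g. staying at a set depth
waypoint_following = LevelOfAutonomy.TASK_AUTONOMY
```

Using `IntEnum` keeps the ordering of the levels, so comparisons such as "at least Task Autonomy" can be written directly.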

Analysis

2.4. UUVs

Analysis

3. Localisation Technologies

3.1. Inertial Navigation

Suitable Hardware

3.2. Dynamic System Models

Suitable Hardware

3.3. Acoustic Localisation Methods

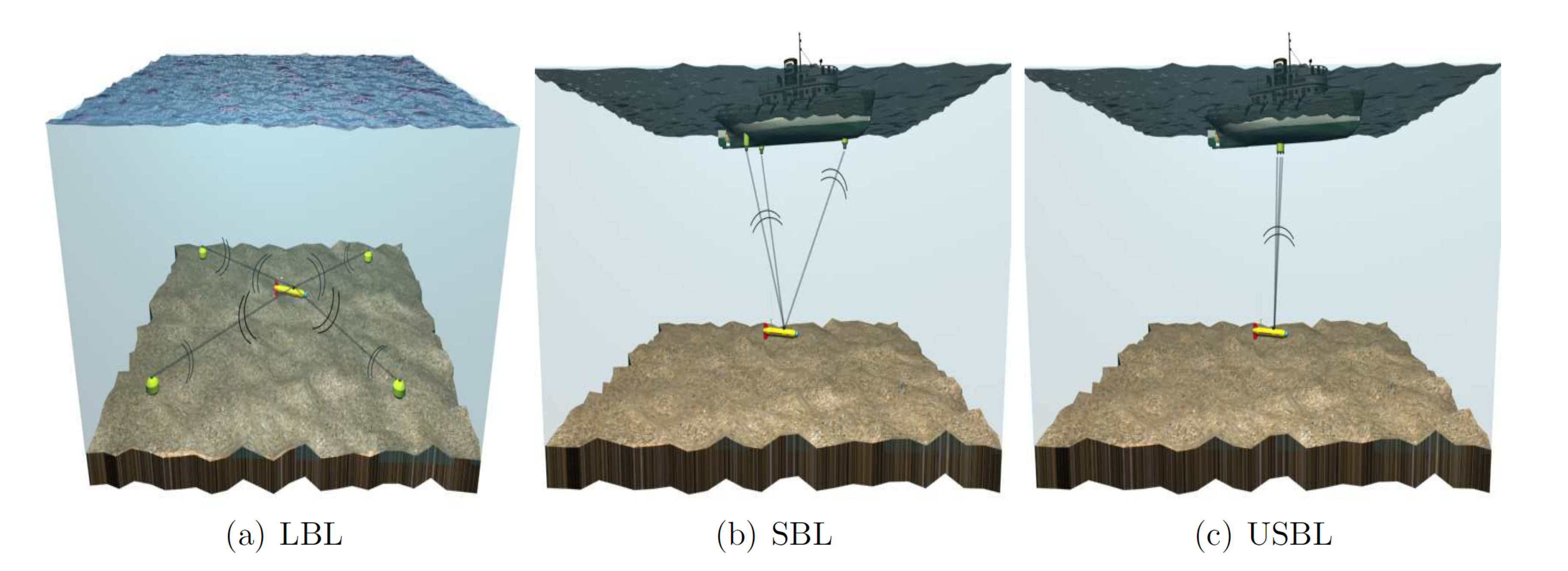

3.3.1. Acoustic Beacons

Suitable Hardware

3.3.2. Doppler Velocity Log—DVL

Suitable Hardware

3.3.3. Sonar SLAM (SSLAM)

Suitable Hardware

3.3.4. Summary on Acoustic Localisation

3.4. Visual Localisation

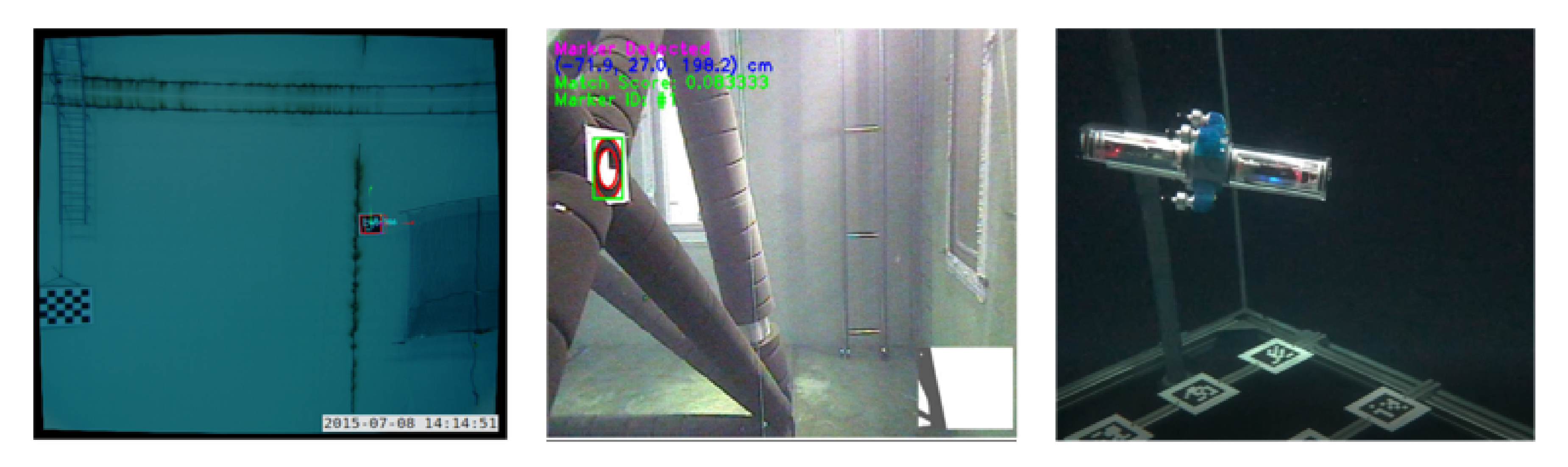

3.4.1. Augmented Reality Marker

Suitable Hardware

3.4.2. External Tracking Systems

Suitable Hardware

3.4.3. Vision-Based SLAM

Suitable Hardware

3.4.4. Summary on Visual Localisation

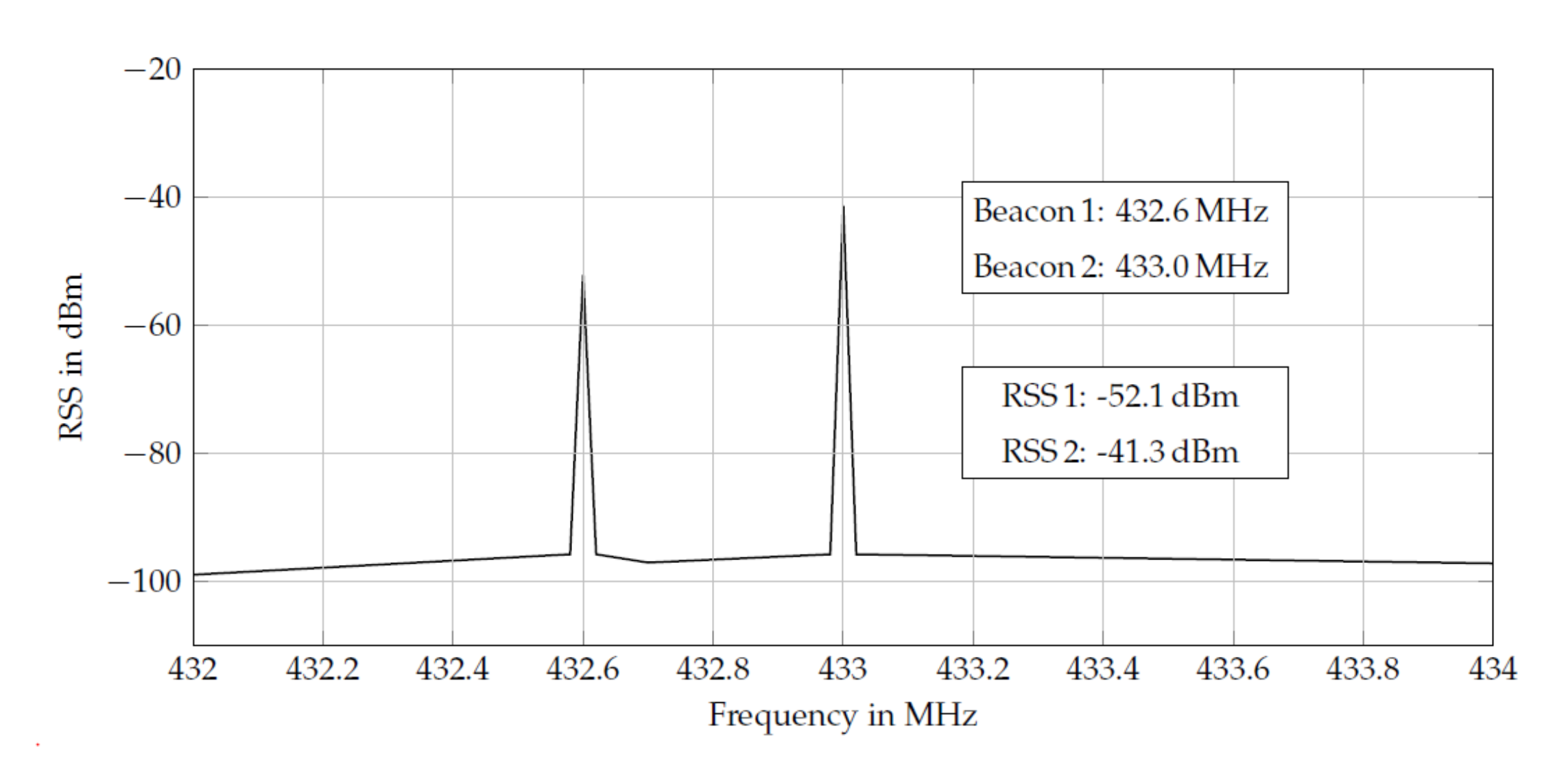

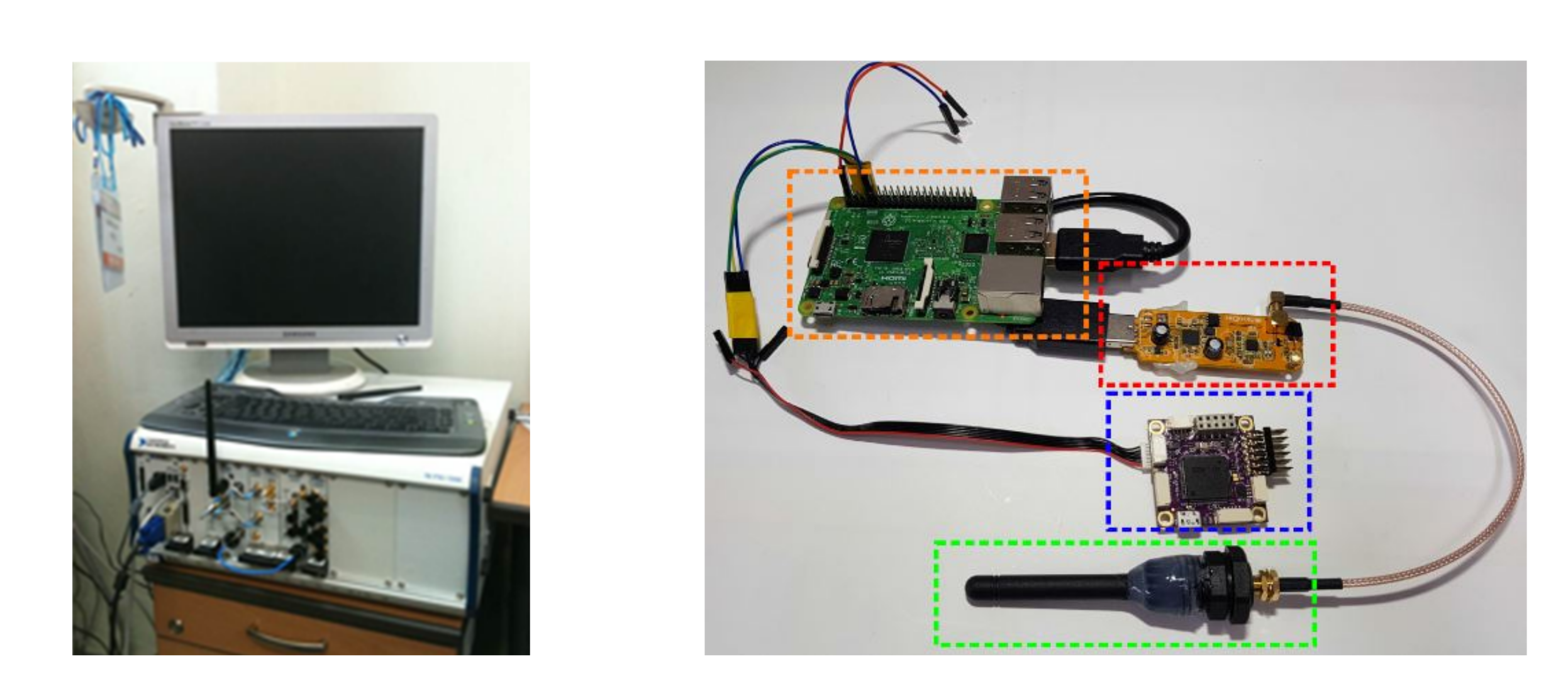

3.5. Electromagnetic Localisation

3.6. Suitable Hardware

4. Discussion

| Criteria | Acoustic Beacons [61] | DVL [66] | Sonar SLAM [68] | Inertial (MEMS IMU) [45] | Dynamic Models | EM-Signals [108,109] | AR Tags [82] | Ext. Tracking [93,95,97] | VSLAM |
|---|---|---|---|---|---|---|---|---|---|
| Category | Acoustic | Acoustic | Acoustic | Dynamics | Dynamics | Electromagnetic | Vision | Vision | Vision |
| Local. Type | Absolute | Relative | Absolute | Relative | Relative | Absolute | Absolute | Absolute | Relative |
| Stand Alone | Y | Y | N | N | N | Y | Y | Y | N |
| Range | 100 m | Infinite | 75 m | Infinite | Infinite | 2 m | 5–10 m | 10–30 m | 5 m |
| Reflections | Severe | Mild | Mild | Unaffected | Unaffected | Mild | Low | Low | Low |
| Add. Infra. | Y | N | N | N | N | Y | Y | Y | N |
| Infra. Size | 27 × 24.6 × 12.4 cm | N/A | N/A | N/A | N/A | 20 × 10 × 10 cm | 10 × 10 × 1 cm | 25 × 15 cm | N/A |
| LoS | Important | N/A | N/A | N/A | N/A | Important | Important | Important | N/A |
| Turbidity | Unaffected | Unaffected | Unaffected | Unaffected | Unaffected | N/A | Important | Important | Important |
| Amb. Illum. | Unaffected | Unaffected | Unaffected | Unaffected | Unaffected | N/A | Important | Important | Important |
| Salient Features | N/A | Mild | Medium | N/A | N/A | N/A | Important | N/A | Important |
| Cost | ≈5K GBP | ≈6K GBP | ≈5K GBP | ≈3K GBP | N/A | 100–12k GBP | ≈100 GBP | 500–20k GBP | 500 GBP |
| On-board Size | 20 × 41 mm | 66 × 25 mm | 56 × 79 mm | 5 × 5 × 1 cm | N/A | 10 × 6 × 5 cm | 10 × 6 × 3 cm | N/A | 10 × 5 × 5 cm |
| Power | 5 W | 4 W | 4 W | 3 W | N/A | 5 W | 5 W | 5–20 W | 10 W |
| Update Rate | 2–4 Hz | 4–26 Hz | 5–20 s | 4 kHz | Variable | 10 Hz/1 kHz | 10–30 Hz | 100–300 Hz | 10 Hz |
| Accuracy | ≈1% of range | ±0.1 cm/s | m | km/h | Variable | ±1–5 cm | ±1–5 cm | ±1 cm | variable |
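The criteria table can be used as a first-pass filter when an environment imposes hard constraints. The sketch below encodes five of its rows (Local. Type, Stand Alone, Add. Infra., LoS and Turbidity) as booleans and discards technologies whose requirements cannot be met. The boolean encoding and the names `Technology` and `shortlist` are assumptions made for illustration; entries marked N/A or Unaffected in the table are treated as "no requirement".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Technology:
    name: str
    absolute: bool              # Local. Type: Absolute (True) or Relative (False)
    stand_alone: bool           # Stand Alone: Y/N
    needs_infrastructure: bool  # Add. Infra.: Y/N
    needs_los: bool             # LoS marked "Important"
    needs_clear_water: bool     # Turbidity marked "Important"


TECHNOLOGIES = [
    Technology("Acoustic Beacons", True, True, True, True, False),
    Technology("DVL", False, True, False, False, False),
    Technology("Sonar SLAM", True, False, False, False, False),
    Technology("Inertial", False, False, False, False, False),
    Technology("Dynamic Models", False, False, False, False, False),
    Technology("EM-Signals", True, True, True, True, False),
    Technology("AR Tags", True, True, True, True, True),
    Technology("Ext. Tracking", True, True, True, True, True),
    Technology("VSLAM", False, False, False, False, True),
]


def shortlist(infrastructure_allowed: bool, line_of_sight: bool,
              clear_water: bool, absolute_only: bool = False) -> list:
    """Return the names of technologies whose hard requirements are satisfied."""
    names = []
    for tech in TECHNOLOGIES:
        if tech.needs_infrastructure and not infrastructure_allowed:
            continue
        if tech.needs_los and not line_of_sight:
            continue
        if tech.needs_clear_water and not clear_water:
            continue
        if absolute_only and not tech.absolute:
            continue
        names.append(tech.name)
    return names


# A restricted-access, turbid environment with no room for beacons or markers.
confined = shortlist(infrastructure_allowed=False, line_of_sight=False,
                     clear_water=False)
confined_absolute = shortlist(False, False, False, absolute_only=True)
```

A filter of this kind only removes infeasible options; the remaining rows of the table (cost, update rate, accuracy) would still need to be weighed for the shortlisted technologies.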

| Area | Acoustic Beacons | DVL | Sonar SLAM | Inertial | Dynamic Models | EM-Signals | AR Tags | External Tracking | VSLAM |
|---|---|---|---|---|---|---|---|---|---|
| Modern Nuclear Storage Pond | Low | Med | Med | Med | Med | Low | Med | Med | Med |
| Legacy Nuclear Storage Pond | Low | Med | Med | Med | Med | Low | Low | Med | Med |
| Legacy Nuclear Storage Silo | Low | Low | Low | Med | Med | Low | Low | Low | Low |
| Nuclear Reactor Pressure Vessel | Low | Low | Low | Med | Med | Low | Low | Low | Low |
| Offshore Asset Decommissioning | Low | Low | Med | Med | Med | Low | Low | Low | Med |
| Ship Hulls | Med | Low | Med | Med | Med | Low | Med | Low | High |
| Liquid Storage Tanks | Low | Low | Low | Med | Med | Low | Low | Med | Med |
| Marinas, Harbours and Boatyards | High | Med | Med | Med | Med | Low | Med | Med | Med |
| Tunnels, Sewers and Flooded Mines | Low | Med | Med | Med | Med | Low | Low | Low | Med |
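The qualitative ratings in the table above can also be queried programmatically, for example to list the highest-rated technologies for a given application area. The sketch below copies three rows of the table; the numeric ordering Low < Med < High and the names `SUITABILITY` and `best_technologies` are assumptions introduced for illustration.

```python
# Assumed ordering of the qualitative ratings.
SCORE = {"Low": 0, "Med": 1, "High": 2}

# Column order of the table.
TECHNOLOGIES = [
    "Acoustic Beacons", "DVL", "Sonar SLAM", "Inertial", "Dynamic Models",
    "EM-Signals", "AR Tags", "External Tracking", "VSLAM",
]

# Three rows copied from the table; the remaining rows follow the same pattern.
SUITABILITY = {
    "Modern Nuclear Storage Pond":
        ["Low", "Med", "Med", "Med", "Med", "Low", "Med", "Med", "Med"],
    "Legacy Nuclear Storage Silo":
        ["Low", "Low", "Low", "Med", "Med", "Low", "Low", "Low", "Low"],
    "Ship Hulls":
        ["Med", "Low", "Med", "Med", "Med", "Low", "Med", "Low", "High"],
}


def best_technologies(area: str) -> list:
    """Return every technology that shares the highest rating for an area."""
    ratings = SUITABILITY[area]
    top = max(SCORE[r] for r in ratings)
    return [t for t, r in zip(TECHNOLOGIES, ratings) if SCORE[r] == top]


hull_choice = best_technologies("Ship Hulls")
silo_choice = best_technologies("Legacy Nuclear Storage Silo")
```

Returning all technologies that tie for the top rating, rather than a single winner, reflects how coarse the three-level scale is.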

4.1. Acoustic-Based Systems

4.1.1. Acoustic Beacons

4.1.2. DVL

4.1.3. Sonar SLAM

4.2. Dynamic Localisation Systems

4.2.1. Inertial Systems

4.2.2. Dynamic Models

4.3. EM-Signals

4.4. Vision-Based Systems

4.4.1. AR Tags

4.4.2. External Tracking

4.4.3. VSLAM

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AHRS | Attitude and Heading Reference System |
| AR | Augmented Reality |
| ASV | Autonomous Surface Vehicle |
| AUV | Autonomous Underwater Vehicle |
| DOF | Degree of Freedom |
| DVB-T | Digital Video Broadcasting (Terrestrial) |
| DVL | Doppler Velocity Log |
| EM | Electromagnetic |
| GPS | Global Positioning System |
| IMR | Inspection, Maintenance and Repair |
| IMU | Inertial Measurement Unit |
| LBL | Long Base Line |
| LOA | Level of Autonomy |
| LoS | Line-of-Sight |
| MEMS | Micro-Electro-Mechanical Systems |
| MSIS | Mechanically Scanned Imaging Sonar |
| ROV | Remotely Operated Vehicle |
| RSM | Range Sensor Model |
| RSS | Received Signal Strength |
| SBL | Short Base Line |
| SDR | Software Defined Radio |
| SLAM | Simultaneous Localisation and Mapping |
| SSLAM | Sonar-based Simultaneous Localisation and Mapping |
| USBL | Ultra Short Base Line |
| UUV | Unmanned Underwater Vehicle |
| VIO | Visual Inertial Odometry |
| VSLAM | Vision-based Simultaneous Localisation and Mapping |

References

- Huvenne, V.A.; Robert, K.; Marsh, L.; Lo Iacono, C.; Le Bas, T.; Wynn, R.B. ROVs and AUVs. In Submarine Geomorphology; Springer International Publishing: Cham, Switzerland, 2018; pp. 93–108.

- Petillot, Y.R.; Antonelli, G.; Casalino, G.; Ferreira, F. Underwater Robots: From Remotely Operated Vehicles to Intervention-Autonomous Underwater Vehicles. IEEE Robot. Autom. Mag. 2019, 26, 94–101.

- Yu, L.; Yang, E.; Ren, P.; Luo, C.; Dobie, G.; Gu, D.; Yan, X. Inspection Robots in Oil and Gas Industry: A Review of Current Solutions and Future Trends. In Proceedings of the 2019 25th International Conference on Automation and Computing (ICAC), Lancaster, UK, 5–7 September 2019; pp. 1–6.

- Shukla, A.; Karki, H. Application of robotics in offshore oil and gas industry—A review Part II. Robot. Auton. Syst. 2016, 75, 508–524.

- Sands, T.; Bollino, K.; Kaminer, I.; Healey, A. Autonomous Minimum Safe Distance Maintenance from Submersed Obstacles in Ocean Currents. J. Mar. Sci. Eng. 2018, 6, 98.

- Griffiths, A.; Dikarev, A.; Green, P.R.; Lennox, B.; Poteau, X.; Watson, S. AVEXIS—Aqua Vehicle Explorer for In-Situ Sensing. IEEE Robot. Autom. Lett. 2016, 1, 282–287.

- Hong, S.; Chung, D.; Kim, J.; Kim, Y.; Kim, A.; Yoon, H.K. In-water visual ship hull inspection using a hover-capable underwater vehicle with stereo vision. J. Field Robot. 2019, 36, 531–546.

- Alvarez-Tuñón, O.; Rodríguez, A.; Jardón, A.; Balaguer, C. Underwater Robot Navigation for Maintenance and Inspection of Flooded Mine Shafts. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1482–1487.

- Krejtschi, J.K. In Service Above Ground Storage Tank Inspection with a Remotely Operated Vehicle (ROV). Ph.D. Thesis, University of Glamorgan, Pontypridd, UK, 2005.

- Duecker, D.A.; Geist, A.; Kreuzer, E.; Solowjow, E. Learning Environmental Field Exploration with Computationally Constrained Underwater Robots: Gaussian Processes Meet Stochastic Optimal Control. Sensors 2019, 19, 2094.

- Tan, H.P.; Diamant, R.; Seah, W.K.; Waldmeyer, M. A survey of techniques and challenges in underwater localization. Ocean. Eng. 2011, 38, 1663–1676.

- Saeed, N.; Celik, A.; Al-Naffouri, T.Y.; Alouini, M.S. Underwater optical wireless communications, networking, and localization: A survey. Ad Hoc Netw. 2019, 94, 101935.

- González-García, J.; Gómez-Espinosa, A.; Cuan-Urquizo, E.; García-Valdovinos, L.; Salgado-Jiménez, T.; Cabello, J. Autonomous Underwater Vehicles: Localization, Navigation, and Communication for Collaborative Missions. Appl. Sci. 2020, 10, 1256.

- Beer, H.F.; Borque Linan, J.; Brown, G.A.; Carlisle, D.; Demner, R.; Irons, B.; Laraia, M.; Michal, V.; Pekar, A.; Šotić, O.; et al. IAEA Nuclear Energy Series No. NW-T-2.6: Decommissioning of Pools in Nuclear Facilities; Technical Report; IAEA: Vienna, Austria, 2015.

- Jackson, S.F.; Monk, S.D.; Riaz, Z. An investigation towards real time dose rate monitoring, and fuel rod detection in a First Generation Magnox Storage Pond (FGMSP). Appl. Radiat. Isot. 2014, 94, 254–259.

- Ayoola, O. In-Situ Monitoring of the Legacy Ponds and Silos at Sellafield. Ph.D. Thesis, The University of Manchester, Manchester, UK, 2019.

- Lee, T.E.; Michael, N. State Estimation and Localization for ROV-Based Reactor Pressure Vessel Inspection. In Field and Service Robotics; Hutter, M., Siegwart, R., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 699–715.

- Dong, M.; Chou, W.; Fang, B.; Yao, G.; Liu, Q. Implementation of remotely operated vehicle for direct inspection of reactor pressure vessel and other water-filled infrastructure. J. Nucl. Sci. Technol. 2016, 53, 1086–1096.

- Nancekievill, M.; Espinosa, J.; Watson, S.; Lennox, B.; Jones, A.; Joyce, M.J.; Katakura, J.I.; Okumura, K.; Kamada, S.; Katoh, M.; et al. Detection of Simulated Fukushima Daichii Fuel Debris Using a Remotely Operated Vehicle at the Naraha Test Facility. Sensors 2019, 19, 4602.

- Topham, E.; McMillan, D. Sustainable decommissioning of an offshore wind farm. Renew. Energy 2017, 102, 470–480.

- Sommer, B.; Fowler, A.M.; Macreadie, P.I.; Palandro, D.A.; Aziz, A.C.; Booth, D.J. Decommissioning of offshore oil and gas structures—Environmental opportunities and challenges. Sci. Total Environ. 2019, 658, 973–981.

- Ozog, P.; Carlevaris-Bianco, N.; Kim, A.; Eustice, R.M. Long-term Mapping Techniques for Ship Hull Inspection and Surveillance using an Autonomous Underwater Vehicle. J. Field Robot. 2016, 33, 265–289.

- Allotta, B.; Brandani, L.; Casagli, N.; Costanzi, R.; Mugnai, F.; Monni, N.; Natalini, M.; Ridolfi, A. Development of Nemo remotely operated underwater vehicle for the inspection of the Costa Concordia wreck. Proc. Inst. Mech. Eng. Part M J. Eng. Marit. Environ. 2017, 231, 3–18.

- Clark, T.F. Tank Inspections Back Water Quality. Opflow 2017, 43, 18–21.

- Wilke, J. Inspection Guidelines for SPU Water Storage Facilities; Technical Report; SPU Design Standards and Guidelines: Seattle, WA, USA, 2016. Available online: https://www.seattle.gov/Documents/Departments/SPU/Engineering/5CInspectionGuidelinesforSPUWaterStorageFacilities.pdf (accessed on 30 October 2020).

- Andritsos, F.; Maddalena, D. ROTIS: Remotely Operated Tanker Inspection System. In Proceedings of the 8th International Marine Design Conference (IMDC), Athens, Greece, 5–8 May 2003.

- ROTIS II: Remotely Operated Tanker Inspection System II. Technical Report, European 6th RTD Framework Programme. 2007. Available online: https://trimis.ec.europa.eu/project/remotely-operated-tanker-inspection-system-ii (accessed on 30 October 2020).

- Reed, S.; Wood, J.; Vazquez, J.; Mignotte, P.Y.; Privat, B. A smart ROV solution for ship hull and harbor inspection. In Sensors, and Command, Control, Communications, and Intelligence (C3I) Technologies for Homeland Security and Homeland Defense IX; Carapezza, E.M., Ed.; International Society for Optics and Photonics (SPIE): Orlando, FL, USA, 2010; Volume 7666, pp. 535–546.

- Health and Safety Guide 4: Marinas and Boatyards; Technical Report; British Marine: Egham, UK, 2015.

- Choi, J.; Lee, Y.; Kim, T.; Jung, J.; Choi, H. Development of a ROV for visual inspection of harbor structures. In Proceedings of the 2017 IEEE Underwater Technology (UT), Busan, Korea, 21–24 February 2017; pp. 1–4.

- Pidic, A.; Aasbøe, E.; Almankaas, J.S.; Wulvik, A.S.; Steinert, M. Low-Cost Autonomous Underwater Vehicle (AUV) for Inspection of Water-Filled Tunnels During Operation (Volume 5B: 42nd Mechanisms and Robotics Conference). In Proceedings of the International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Quebec City, QC, Canada, 26–29 August 2018.

- Martins, A.; Almeida, J.; Almeida, C.; Matias, B.; Kapusniak, S.; Silva, E. EVA a Hybrid ROV/AUV for Underwater Mining Operations Support. In Proceedings of the 2018 OCEANS—MTS/IEEE Kobe Techno-Oceans (OTO), Kobe, Japan, 28–31 May 2018; pp. 1–7.

- McLeod, D. Emerging capabilities for autonomous inspection repair and maintenance. In Proceedings of the OCEANS 2010 MTS/IEEE SEATTLE, Seattle, WA, USA, 20–23 September 2010; pp. 1–4.

- Simetti, E. Autonomous Underwater Intervention. Curr. Robot. Rep. 2020, 1, 117–122.

- Chiou, M.; Stolkin, R.; Bieksaite, G.; Hawes, N.; Shapiro, K.L.; Harrison, T.S. Experimental analysis of a variable autonomy framework for controlling a remotely operating mobile robot. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 3581–3588.

- Yang, G.Z.; Cambias, J.; Cleary, K.; Daimler, E.; Drake, J.; Dupont, P.E.; Hata, N.; Kazanzides, P.; Martel, S.; Patel, R.V.; et al. Medical robotics—Regulatory, ethical, and legal considerations for increasing levels of autonomy. Sci. Robot. 2017, 2.

- Watson, S.A.; Green, P.N. Depth Control for Micro-Autonomous Underwater Vehicles (μAUVs): Simulation and Experimentation. Int. J. Adv. Robot. Syst. 2014, 11, 31.

- Blue Robotics, 2740 California Street, Torrance, CA 90503, USA. Blue Robotics BlueROV2. 2020. Available online: https://bluerobotics.com/store/rov/bluerov2/ (accessed on 3 August 2020).

- Duecker, D.A.; Bauschmann, N.; Hansen, T.; Kreuzer, E.; Seifried, R. HippoCampus X—A hydrobatic open-source micro AUV for confined environments. In Proceedings of the 2020 IEEE/OES Autonomous Underwater Vehicle Symposium (AUV), St. John’s, NL, Canada, 30 September–2 October 2020.

- Fernandez, R.A.S.; Milošević, Z.; Dominguez, S.; Rossi, C. Motion Control of Underwater Mine Explorer Robot UX-1: Field Trials. IEEE Access 2019, 7, 99782–99803.

- Barshan, B.; Durrant-Whyte, H.F. Inertial navigation systems for mobile robots. IEEE Trans. Robot. Autom. 1995, 11, 328–342.

- Titterton, D.H.; Weston, J.L. Strapdown Inertial Navigation Technology, 2nd ed.; IEE Radar, Sonar, Navigation, and Avionics Series 17; Institution of Electrical Engineers: Stevenage, UK, 2004.

- Woodman, O.J. An Introduction to Inertial Navigation; Technical Report; University of Cambridge, Computer Laboratory: Cambridge, UK, 2007.

- High-End Gyroscopes, Accelerometers and IMUs for Defense, Aerospace & Industrial. Institution: Yole Development. 2015. Available online: http://www.yole.fr/iso_upload/Samples/Yole_High_End_Gyro_January_2015_Sample.pdf (accessed on 16 June 2020).

- ADIS16495 Tactical Grade, Six Degrees of Freedom Inertial Sensor. Available online: https://www.analog.com/en/products/adis16495.html (accessed on 16 June 2020).

- Inertial Measurement Units (IMUS) 1750 IMU. Available online: https://www.kvh.com/admin/products/gyros-imus-inss/imus/1750-imu/commercial-1750-imu (accessed on 16 June 2020).

- Fossen, T.I. Guidance and Control of Ocean Vehicles; Wiley: New York, NY, USA, 1994.

- Lennox, C.; Groves, K.; Hondru, V.; Arvin, F.; Gornicki, K.; Lennox, B. Embodiment of an Aquatic Surface Vehicle in an Omnidirectional Ground Robot. In Proceedings of the 2019 IEEE International Conference on Mechatronics (ICM), Ilmenau, Germany, 18–20 March 2019; Volume 1, pp. 182–186.

- Allotta, B.; Costanzi, R.; Pugi, L.; Ridolfi, A. Identification of the main hydrodynamic parameters of Typhoon AUV from a reduced experimental dataset. Ocean. Eng. 2018, 147, 77–88.

- Groves, K.; Dimitrov, M.; Peel, H.; Marjanovic, O.; Lennox, B. Model identification of a small omnidirectional aquatic surface vehicle: A practical implementation. In Proceedings of the Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020.

- Arnold, S.; Medagoda, L. Robust Model-Aided Inertial Localization for Autonomous Underwater Vehicles. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018.

- Hegrenaes, O.; Hallingstad, O. Model-aided INS with sea current estimation for robust underwater navigation. IEEE J. Ocean. Eng. 2011, 36, 316–337.

- Wu, Y.; Ta, X.; Xiao, R.; Wei, Y.; An, D.; Li, D. Survey of underwater robot positioning navigation. Appl. Ocean. Res. 2019, 90, 101845.

- Taudien, J.; Bilen, S.G. Quantifying error sources that affect long-term accuracy of Doppler velocity logs. In Proceedings of the OCEANS 2016 MTS/IEEE Monterey, Monterey, CA, USA, 19–23 September 2016; pp. 1–10.

- Kottege, N. Underwater Acoustic Localisation in the Context of Autonomous Submersibles. Ph.D. Thesis, The Australian National University, Canberra, Australia, 2011.

- Alcocer, A.; Oliveira, P.; Pascoal, A. Underwater acoustic positioning systems based on buoys with GPS. In Proceedings of the Eighth European Conference on Underwater Acoustics, Carvoeiro, Portugal, 12–15 June 2006; Volume 8, pp. 1–8.

- Trackit USBL System. Available online: http://www.teledynemarine.com/trackit?ProductLineID=59 (accessed on 7 July 2020).

- Renner, B.C.; Heitmann, J.; Steinmetz, F. ahoi: Inexpensive, Low-power Communication and Localization for Underwater Sensor Networks and μAUVs. ACM Trans. Sens. Netw. 2020, 16, 1–46.

- ahoi: Open-Source, Inexpensive, Low-Power Underwater Acoustic Modem. Available online: www.ahoi-modem.de (accessed on 16 October 2020).

- Duecker, D.A.; Steinmetz, F.; Kreuzer, E.; Seifried, R. Micro AUV Localization for Agile Navigation with Low-cost Acoustic Modems. In Proceedings of the 2020 IEEE/OES Autonomous Underwater Vehicle Symposium (AUV), St. John’s, NL, Canada, 30 September–2 October 2020.

- Underwater GPS. Available online: https://waterlinked.com/underwater-gps/ (accessed on 7 July 2020).

- Yoo, T.; Kim, M.; Yoon, S.; Kim, D. Performance enhancement for conventional tightly coupled INS/DVL navigation system using regeneration of partial DVL measurements. J. Sens. 2020, 2020, 5324349.

- Christ, R.D.; Wernli Sr, R.L. The ROV Manual: A User Guide for Remotely Operated Vehicles; Butterworth-Heinemann: Oxford, UK, 2013.

- New to Subsea Navigation? Available online: https://www.nortekgroup.com/knowledge-center/wiki/new-to-subsea-navigation (accessed on 18 September 2020).

- Workhorse Navigator DVL. Available online: http://www.teledynemarine.com/workhorse-navigator-doppler-velocity-log?ProductLineID=34 (accessed on 27 July 2020).

- DVL A50. Available online: https://waterlinked.com/dvl/ (accessed on 9 July 2020).

- Hernàndez Bes, E.; Ridao Rodríguez, P.; Ribas Romagós, D.; Batlle i Grabulosa, J. MSISpIC: A probabilistic scan matching algorithm using a mechanical scanned imaging sonar. J. Phys. Agents 2009, 3, 3–11.

- Mallios, A.; Ridao, P.; Ribas, D.; Hernandez, E. Scan matching SLAM in underwater environments. Auton. Robot. 2014, 36, 181–198.

- Ribas, D.; Ridao, P.; Neira, J. Underwater Slam for Structured Environments Using an Imaging Sonar; Springer: Berlin/Heidelberg, Germany, 2010; pp. 37–46.

- Ping360 Scanning Imaging Sonar. Available online: https://bluerobotics.com/store/sensors-sonars-cameras/sonar/ping360-sonar-r1-rp/ (accessed on 27 July 2020).

- Micron—Mechanical Scanning Sonar (Small ROV). Available online: https://www.tritech.co.uk/product/small-rov-mechanical-sector-scanning-sonar-tritech-micron (accessed on 27 July 2020).

- M1200d Dual-Frequency Multibeam Sonar. Available online: https://www.blueprintsubsea.com/pages/product.php?PN=BP01042 (accessed on 9 July 2020).

- Foresti, G.L.; Gentili, S. A vision based system for object detection in underwater images. Int. J. Pattern Recognit. Artif. Intell. 2000, 14, 167–188.

- Carreras, M.; Ridao, P.; Garcia, R.; Nicosevici, T. Vision-based Localization of an Underwater Robot in a Structured Environment. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Taipei, Taiwan, 14–19 September 2003; Volume 2652, pp. 150–157.

- Buchan, A.D.; Solowjow, E.; Duecker, D.A.; Kreuzer, E. Low-Cost Monocular Localization with Active Markers for Micro Autonomous Underwater Vehicles. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 4181–4188.

- Kato, H.; Billinghurst, M. Marker tracking and HMD calibration for a video-based augmented reality conferencing system. In Proceedings of the 2nd IEEE and ACM International Workshop on Augmented Reality (IWAR) 1999, San Francisco, CA, USA, 20–21 October 1999; pp. 85–94.

- Olson, E. AprilTag: A robust and flexible visual fiducial system. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 3400–3407.

- Wang, J.; Olson, E. AprilTag 2: Efficient and robust fiducial detection. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Daejeon, Korea, 9–14 October 2016; pp. 2–7.

- Krogius, M.; Haggenmiller, A.; Olson, E. Flexible Tag Layouts for the AprilTag Fiducial System. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 1898–1903.

- Garrido-Jurado, S.; Muñoz-Salinas, R.; Madrid-Cuevas, F.J.; Marín-Jiménez, M.J. Automatic generation and detection of highly reliable fiducial markers under occlusion. Pattern Recognit. 2014, 47, 2280–2292.

- dos Santos Cesar, D.B.; Gaudig, C.; Fritsche, M.; dos Reis, M.A.; Kirchner, F. An Evaluation of Artificial Fiducial Markers in Underwater Environments. In Proceedings of the IEEE OCEANS, Genoa, Italy, 18–21 May 2015; pp. 1–6. [Google Scholar]

- Duecker, D.A.; Bauschmann, N.; Hansen, T.; Kreuzer, E.; Seifried, R. Towards Micro Robot Hydrobatics: Vision-based Guidance, Navigation, and Control for Agile Underwater Vehicles in Confined Environments. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020. [Google Scholar]

- Bhat, S.; Stenius, I. Hydrobatics: A Review of Trends, Challenges and Opportunities for Efficient and Agile Underactuated AUVs. In Proceedings of the 2018 IEEE/OES Autonomous Underwater Vehicle Workshop (AUV), Porto, Portugal, 6–9 November 2018; pp. 1–8. [Google Scholar]

- Ishida, M.; Shimonomura, K. Marker Based Camera Pose Estimation for Underwater Robots. In Proceedings of the IEEE/SICE International Symposium on System Integration, Fukuoka, Japan, 16–18 December 2012; pp. 629–634. [Google Scholar]

- Britto, J.; Cesar, D.; Saback, R.; Arnold, S.; Gaudig, C.; Albiez, J. Model Identification of an Unmanned Underwater Vehicle via an Adaptive Technique and Artificial Fiducial Markers. In Proceedings of the OCEANS 2015—MTS/IEEE Washington, Washington, DC, USA, 19–22 October 2015; pp. 1–6. [Google Scholar] [CrossRef]

- Jung, J.; Li, J.H.; Choi, H.T.; Myung, H. Localization of AUVs using visual information of underwater structures and artificial landmarks. Intell. Serv. Robot. 2017, 10, 67–76. [Google Scholar] [CrossRef]

- Jung, J.; Lee, Y.; Kim, D.; Lee, D.; Myung, H.; Choi, H.T. AUV SLAM Using Forward/Downward Looking Cameras and Artificial Landmarks. In Proceedings of the IEEE Underwater Technology (UT), Busan, Korea, 21–24 February 2017; pp. 1–3. [Google Scholar] [CrossRef]

- Hexeberg Henriksen, E.; Schjølberg, I.; Gjersvik, T.B. Vision Based Localization for Subsea Intervention. In Proceedings of the ASME 2017 36th International Conference on Offshore Mechanics and Arctic Engineering (OMAE), Trondheim, Norway, 25–30 June 2017; pp. 1–9. [Google Scholar]

- Heshmati-Alamdari, S.; Karras, G.C.; Marantos, P.; Kyriakopoulos, K.J. A Robust Model Predictive Control Approach for Autonomous Underwater Vehicles Operating in a Constrained workspace. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 6183–6188. [Google Scholar] [CrossRef]

- Lupashin, S.; Schollig, A.; Hehn, M.; D’Andrea, R. The Flying Machine Arena as of 2010. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 2970–2971. [Google Scholar] [CrossRef]

- Lupashin, S.; Hehn, M.; Mueller, M.W.; Schoellig, A.P.; Sherback, M.; D’Andrea, R. A platform for aerial robotics research and demonstration: The Flying Machine Arena. Mechatronics 2014, 24, 41–54. [Google Scholar] [CrossRef]

- Pickem, D.; Glotfelter, P.; Wang, L.; Mote, M.; Ames, A.; Feron, E.; Egerstedt, M. The Robotarium: A remotely accessible swarm robotics research testbed. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1699–1706. [Google Scholar] [CrossRef]

- Qualisys. Qualisys-MotionCapture. 2020. Available online: https://www.qualisys.com/ (accessed on 3 August 2020).

- Vicon. Vicon-MotionCapture. 2020. Available online: https://www.vicon.com/ (accessed on 3 August 2020).

- Duecker, D.A.; Eusemann, K.; Kreuzer, E. Towards an Open-Source Micro Robot Oceanarium: A Low-Cost, Modular, and Mobile Underwater Motion-Capture System. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Macau, China, 4–8 November 2019; pp. 8048–8053. [Google Scholar] [CrossRef]

- Bernardina, G.R.; Cerveri, P.; Barros, R.M.; Marins, J.C.; Silvatti, A.P. Action sport cameras as an instrument to perform a 3D underwater motion analysis. PLoS ONE 2016, 11, 1–14. [Google Scholar] [CrossRef] [PubMed]

- Sydney, N.; Napora, S.; Beal, S.; Mohl, P.; Nolan, P.; Sherman, S.; Leishman, A.; Butail, S.; Paley, D.A. A Micro-UUV Testbed for Bio-Inspired Motion Coordination. In Proceedings of the International Symposium Unmanned Untethered Submersible Technology, Durham, NH, USA, 23–26 August 2009; pp. 1–13. [Google Scholar]

- Cui, R.; Li, Y.; Yan, W. Mutual Information-Based Multi-AUV Path Planning for Scalar Field Sampling Using Multidimensional RRT*. IEEE Trans. Syst. Man, Cybern. Syst. 2016, 46, 993–1004. [Google Scholar] [CrossRef]

- Kim, A.; Eustice, R. Pose-graph visual SLAM with geometric model selection for autonomous underwater ship hull inspection. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), St. Louis, MO, USA, 10–15 October 2009; pp. 1559–1565. [Google Scholar] [CrossRef]

- Hover, F.S.; Eustice, R.M.; Kim, A.; Englot, B.; Johannsson, H.; Kaess, M.; Leonard, J.J. Advanced perception, navigation and planning for autonomous in-water ship hull inspection. Int. J. Robot. Res. 2012, 31, 1445–1464. [Google Scholar] [CrossRef]

- Westman, E.; Kaess, M. Underwater AprilTag SLAM and Calibration for High Precision Robot Localization; Technical Report October; Carnegie Mellon University: Pittsburgh, PA, USA, 2018. [Google Scholar]

- Rahman, S.; Li, A.Q.; Rekleitis, I. Visual-Acoustic SLAM for Underwater Caves. In Proceedings of the IEEE ICRA Workshop, Underwater Robotic Perception, Montreal, QC, Canada, 20–24 May 2019; pp. 1–6. [Google Scholar]

- Suresh, S.; Westman, E.; Kaess, M. Through-water Stereo SLAM with Refraction Correction for AUV Localization. IEEE Robot. Autom. Lett. 2019, 4, 692–699. [Google Scholar] [CrossRef]

- SubSLAM—Live 3D Vision. Available online: https://www.rovco.com/technology/subslam/ (accessed on 7 September 2020).

- Park, D.; Kwak, K.; Chung, W.K.; Kim, J. Development of Underwater Short-Range Sensor Using Electromagnetic Wave Attenuation. IEEE J. Ocean. Eng. 2015, 41, 318–325. [Google Scholar] [CrossRef]

- Park, D.; Kwak, K.; Kim, J.; Chung, W.K. Underwater sensor network using received signal strength of electromagnetic waves. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 1052–1057. [Google Scholar] [CrossRef]

- Kwak, K.; Park, D.; Chung, W.K.; Kim, J. Underwater 3D Spatial Attenuation Characteristics of Electromagnetic Waves with Omnidirectional Antenna. IEEE/ASME Trans. Mechatron. 2016, 21, 1409–1419. [Google Scholar] [CrossRef]

- Park, D.; Kwak, K.; Kim, J.; Chung, W.K. 3D Underwater Localization Scheme using EM Wave Attenuation with a Depth Sensor. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 2631–2636. [Google Scholar]

- Duecker, D.A.; Geist, A.R.; Hengeler, M.; Kreuzer, E.; Pick, M.A.; Rausch, V.; Solowjow, E. Embedded spherical localization for micro underwater vehicles based on attenuation of electro-magnetic carrier signals. Sensors 2017, 17, 959. [Google Scholar] [CrossRef]

- Duecker, D.A.; Johannink, T.; Kreuzer, E.; Rausch, V.; Solowjow, E. An Integrated Approach to Navigation and Control in Micro Underwater Robotics using Radio-Frequency Localization. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 6846–6852. [Google Scholar]

- Park, D.; Chung, W.K.; Kim, J. Analysis of Electromagnetic Waves Attenuation for Underwater Localization in Structured Environments. Int. J. Control. Autom. Syst. 2020, 18, 575–586. [Google Scholar] [CrossRef]

- Park, D.; Jung, J.; Kwak, K.; Chung, W.K.; Kim, J. 3D underwater localization using EM waves attenuation for UUV docking. In Proceedings of the 2017 IEEE OES International Symposium on Underwater Technology, Busan, Korea, 21–24 February 2017; pp. 1–4. [Google Scholar] [CrossRef]

- Jalving, B.; Gade, K.; Svartveit, K.; Willumsen, A.B.; Sørhagen, R. DVL velocity aiding in the HUGIN 1000 integrated inertial navigation system. Model. Identif. Control 2004, 25, 223–235. [Google Scholar] [CrossRef]

- Lee, C.M.; Lee, P.M.; Hong, S.W.; Kim, S.M.; Seong, W. Underwater navigation system based on inertial sensor and doppler velocity log using indirect feedback kalman filter. Int. J. Offshore Polar Eng. 2005, 15, 85–95. [Google Scholar]

- Ribas, D.; Ridao, P.; Tardós, J.D.; Neira, J. Underwater SLAM in man-made structured environments. J. Field Robot. 2008, 25, 898–921. [Google Scholar] [CrossRef]

- Mallios, A.; Ridao, P.; Ribas, D.; Maurelli, F.; Petillot, Y. EKF-SLAM for AUV navigation under probabilistic sonar scan-matching. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 4404–4411. [Google Scholar]

- Mallios, A. Sonar Scan Matching for Simultaneous Localization and Mapping in Confined Underwater Environments. Ph.D. Thesis, University of Girona, Girona, Spain, 2014. [Google Scholar]

- Rogne, R.H.; Bryne, T.H.; Fossen, T.I.; Johansen, T.A. MEMS-based inertial navigation on dynamically positioned ships: Dead reckoning. IFAC-PapersOnLine 2016, 49, 139–146. [Google Scholar] [CrossRef]

- Ryu, J.H.; Gankhuyag, G.; Chong, K.T. Navigation system heading and position accuracy improvement through GPS and INS data fusion. J. Sens. 2016, 2016, 7942963. [Google Scholar] [CrossRef]

- Niu, X.; Yu, T.; Tang, J.; Chang, L. An Online Solution of LiDAR Scan Matching Aided Inertial Navigation System for Indoor Mobile Mapping. Mob. Inf. Syst. 2017, 2017, 4802159. [Google Scholar] [CrossRef]

- Tang, J.; Chen, Y.; Niu, X.; Wang, L.; Chen, L.; Liu, J.; Shi, C.; Hyyppa. LiDAR scan matching aided inertial navigation system in GNSS-denied environments. Sensors 2015, 15, 16710–16728. [Google Scholar] [CrossRef]

- Li, C.; Jiang, J.; Duan, F.; Liu, W.; Wang, X.; Bu, L.; Sun, Z.; Yang, G. Modeling and experimental testing of an unmanned surface vehicle with rudderless double thrusters. Sensors 2019, 19, 2051. [Google Scholar] [CrossRef]

- Skjetne, R.; Smogeli, O.N.; Fossen, T.I. A nonlinear ship manoeuvering model: Identification and adaptive control with experiments for a model ship. Model. Identif. Control 2004, 25, 3–27. [Google Scholar] [CrossRef]

- Eriksen, B.O.H.; Breivik, M. Modeling, identification and control of high-speed ASVs: Theory and experiments. In Sensing and Control for Autonomous Vehicles; Springer: Cham, Switzerland, 2017; pp. 407–431. [Google Scholar]

- Monroy-Anieva, J.; Rouviere, C.; Campos-Mercado, E.; Salgado-Jimenez, T.; Garcia-Valdovinos, L. Modeling and Control of a Micro AUV: Objects Follower Approach. Sensors 2018, 18, 2574. [Google Scholar] [CrossRef] [PubMed]

- Kinsey, J.C.; Yang, Q.; Howland, J.C. Nonlinear dynamic model-based state estimators for underwater navigation of remotely operated vehicles. IEEE Trans. Control. Syst. Technol. 2014, 22, 1845–1854. [Google Scholar] [CrossRef]

- Ridley, P.; Fontan, J.; Corke, P. Submarine dynamic modelling. In Proceedings of the 2003 Australasian Conference on Robotics & Automation, Australian Robotics & Automation Association, Brisbane, Australia, 1–3 December 2003. [Google Scholar]

- AUV Docking Method in a Confined Reservois with Good Visbility. J. Intell. Robot. Syst. 2020, 100, 349–361. [CrossRef]

| Area | Scale (m) | Obstacles | Structure | Obstacle Type | Access | Additional Infrastructure | Line of Sight (LoS) | Turbidity | Ambient Illumination Levels | Salient Features | Variance of Environment |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Modern Nuclear Storage Ponds | 50 × 100 × 10 | Y | Structured | Both | Surface | Maybe | Surface | V. Low | Good | Med | V. Low |
| Legacy Nuclear Storage Ponds | 50 × 100 × 10 | Y | Unstructured | Both | Surface | N | Surface | Variable | Good | Med | Med |
| Legacy Nuclear Storage Silos | 5 × 10 | Y | Unstructured | Static | Restricted | Surface | Maybe | Variable | None | Low | Med |
| Nuclear Reactor Pressure Vessels | 5 × 10 | Y | Unstructured | Static | Either | N | None | V. High | None | Low | V. Low |
| Offshore Asset Decommissioning | 5–50 | Y | Unstructured | Static | Either | N | Maybe | Variable | Low | Med | Low |
| Ship Hulls | 10 × 50 × 2 | Y | Structured | Static | Surface | Maybe | Both | Variable | Med | Med | Low |
| Liquid Storage Tanks | 20 × 30 | N | Structured | Static | Restricted | N | Both | Low | None | Low | V. Low |
| Marinas, Harbours and Boatyards | 100 × 100 × 10 | Y | Both | Both | Surface | Maybe | Both | Variable | Med | Med | V. High |
| Tunnels, Sewers and Flooded Mines | 3 × 3 × 100 | Y | Both | Static | Either | N | None | Variable | None | Med | High |

| Area | Inspection | Maintenance/Repair | LoA0 | LoA1 | LoA2 | LoA3 | LoA4 | LoA5 |
|---|---|---|---|---|---|---|---|---|
| Modern Nuclear Storage Pond | N | N | | | | | | |
| Legacy Nuclear Storage Pond | Y | Y | X | X | | | | |
| Legacy Nuclear Storage Silo | Y | N | X | | | | | |
| Nuclear Reactor Pressure Vessel | Y | N | X | X | | | | |
| Offshore Asset Decommissioning | Y | Y | X | X | | | | |
| Ship Hulls | Y | N | X | X | | | | |
| Liquid Storage Tanks | Y | Y | X | X | | | | |
| Marinas, Harbours and Boatyards | Y | N | X | X | | | | |
| Tunnels, Sewers and Flooded Mines | Y | N | X | X | | | | |

| Area | Inspection | Maintenance/Repair | LoA0 | LoA1 | LoA2 | LoA3 | LoA4 | LoA5 |
|---|---|---|---|---|---|---|---|---|
| Modern Nuclear Storage Pond | Y | Y | X | X | X | X | X | X |
| Legacy Nuclear Storage Pond | Y | Y | X | X | X | X | X | |
| Legacy Nuclear Storage Silo | Y | Y | X | X | | | | |
| Nuclear Reactor Pressure Vessel | Y | Y | X | X | X | X | | |
| Offshore Asset Decommissioning | Y | Y | X | X | X | X | | |
| Ship Hulls | Y | Y | X | X | X | X | X | X |
| Liquid Storage Tanks | Y | Y | X | X | X | X | X | X |
| Marinas, Harbours and Boatyards | Y | Y | X | X | X | X | | |
| Tunnels, Sewers and Flooded Mines | Y | Y | X | X | X | X | X | X |

| UUV | Dimensions l × w × h (m) | Depth Rating (m) | Tethered | Payload (kg) | Battery Life (h) | Commercial (C)/Research (R) |
|---|---|---|---|---|---|---|
| DTG3 | 0.28 × 0.33 × 0.26 | 200 | Y | Unknown | 8 | C |
| VideoRay Pro 4 | 0.38 × 0.29 × 0.22 | 305 | Y | Unknown | N/A | C |
| AC-ROV 100 | 0.2 × 0.15 × 0.15 | 100 | Y | 0.2 | N/A | C |
| BlueROV2 | 0.46 × 0.34 × 0.25 | 100 | Y | 1 | 2–4 | C |
| UX-1 | 0.6 dia. | 500 | Y | Unknown | 5 | R |
| AVEXIS | 0.15 dia. × 0.3 | 10 | Y | 1 | N/A | R |
| HippoCampus | 0.15 dia. × 0.4 | 10 | N | 1 | 1 | R |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Watson, S.; Duecker, D.A.; Groves, K. Localisation of Unmanned Underwater Vehicles (UUVs) in Complex and Confined Environments: A Review. Sensors 2020, 20, 6203. https://doi.org/10.3390/s20216203
