1. Introduction

Industrial robots have been widely applied for manufacturing automation in high-volume production because of their good task repeatability. In a common scenario, a human operator teaches the robot to move to a desired position; the robot records this position and then repeats the taught path to complete the task. However, robot teaching is usually time-consuming for low-volume applications. Although offline programming can significantly reduce the teaching workload, the generated robot paths are based on the robot’s nominal kinematic model; therefore, whether the robot can successfully complete a task via offline programming depends on its absolute accuracy. Robot manufacturers provide the nominal values of the Denavit-Hartenberg (D-H) parameters of the robot. However, the actual values of these parameters can deviate from their nominal values because of errors in manufacturing, assembly, etc., which in turn cause positioning errors at the robot end-effector. Consequently, the absolute accuracy of industrial robots is relatively low compared with many other types of manufacturing equipment such as CNC machine tools [1]. As a result, industrial robots still face challenges in many low-volume applications where high absolute accuracy (a positioning error of less than 0.50 mm for a medium- to large-size industrial robot) is required, such as milling, drilling, and precise assembly.

Kinematic calibration is an important way to improve the absolute accuracy of robots [2]. Calibration methods fall into two types according to the measurement method. One is open-loop calibration, in which the absolute position and orientation of the robot end-effector are measured; the other is closed-loop calibration, in which the position and orientation of the end-effector are measured relative to a reference part or gauge.

For open-loop calibration, laser trackers have been adopted as the measurement device with different calibration algorithms. By using a laser tracker in [3,4,5,6,7], the absolute positioning accuracy of the robot can reach about 0.10–0.30 mm. Among these algorithms, the least squares technique, which minimizes the sum of squared residuals, is the most often applied [4,5]. In [7], a kinematic calibration method has been presented using the extended Kalman filter (EKF) and particle filter (PF) algorithms that can significantly improve the positioning accuracy of the robot. Thanks to the principle of data-driven modeling, artificial neural networks (ANNs) are promising for modeling complex systems such as calibration [3,6]. Nguyen [3] combined a model-based identification method for the robot geometric errors with an artificial neural network to obtain an effective solution for the correction of robot parameters. In [6], a back propagation neural network (BPNN) and a particle swarm optimization (PSO) algorithm have been employed for the kinematic parameter identification of industrial robots with an enhanced convergence response. Coordinate measuring machines (CMMs) have also been used in open-loop robot calibration [8,9,10]. For example, Lightcap [10] has determined the geometric and flexibility parameters of robots to achieve a significant reduction of systematic positioning errors. In [11], an optical CMM and a laser tracker have been combined to calibrate the ABB IRB 120 industrial robot, so that the mean and maximum position errors can be reduced from more than 3.00 mm and 5.00 mm to about 0.15 mm and 0.50 mm, respectively.

For closed-loop calibration, the calibration models are established by incorporating different types of kinematic constraints induced by the extra reference parts or gauges. By using gauges in [12,13,14], the absolute positioning accuracy of the robot can also reach about 0.10–0.30 mm. He [12] has used point constraints to improve the accuracy of six-axis industrial robots; the robot parameters are calibrated by controlling the robot to reach the same location in different poses. In [13], a non-kinematic calibration method has been developed to improve the accuracy of a six-axis serial robot by using a linear optimization model based on the closed-loop calibration approach with multiple planar constraints. Joubair [14] has presented a kinematic calibration method using distance and sphere constraints that effectively improves the positioning accuracy of the robot. In another category of closed-loop calibration methods, the kinematic constraints are induced by vision systems [15,16,17,18]. For example, Du [15] has developed a vision-based robot calibration method that only requires several reference images, and the mean positioning error was reduced from 5–7 mm to less than 2 mm. Zhang [18] has proposed a stereo-vision-based self-calibration procedure with the maximum position and orientation errors reduced to less than 2.50 mm and 3.50°, respectively.

The calibration procedure is usually not specific to a certain task, does not account for the influence of the robot end-effector, and has difficulty correcting errors due to structural elastic deformation and other factors. Other research works focus on online positioning error compensation, i.e., correcting the positioning error directly with the integration of an external metrology system. Online positioning error compensation is usually task-oriented and does not require a precise kinematic model [19,20,21,22,23,24,25,26,27,28,29]. For example, Jiang [20] has proposed an online iterative compensation method combined with a feed-forward compensation method to enhance the assembly accuracy of a robot system (MIRS), integrating a 6-DoF measurement system (T-Mac) to track the real-time robot movement. In [21], an online compensation method based on a laser tracker has been presented to increase robot accuracy without precise calibration. Yin [22] has developed a real-time dynamic thermal error compensation method for a robotic visual inspection system; the method is designed to be applied on the production line and to correct the thermal error during operation of the robotic system. In [23], an embedded real-time algorithm has been presented for the compensation of the lateral tool deviation in a robotic friction stir welding system. Shu [26] has presented a dynamic path tracking scheme that can realize automatic preplanned tasks and improve the tracking accuracy with eye-to-hand photogrammetry measurement feedback. Gharaaty [27] has proposed a dynamic pose correction scheme that adopts a PID controller and generates commands to the FANUC robot controller. In [28,29], the authors have adopted iterative learning control (ILC) to improve the tracking performance of an industrial robot.

In summary, different offline calibration and online compensation methods for improving the absolute accuracy of robots have been reported in the literature. However, to achieve high precision (up to 0.30–0.50 mm), these methods have some drawbacks. Open-loop calibration methods usually require external metrology systems such as laser trackers and CMMs, which are costly and lack flexibility for production automation. For closed-loop calibration, calibrating with gauges requires manual teaching and is difficult to automate, which reduces the calibration efficiency; calibrating with vision systems can be quite flexible for autonomous calibration, but the positioning error can be as high as a few millimeters, limited by the precision of the vision systems. For online compensation, most methods adopt a laser tracker as the external metrology system with a laser target mounted on the end-effector, which is costly and can be affected by the visibility of the laser target. Moreover, most of the above methods do not take into account the influence of tools on the positioning accuracy. For these reasons, improving absolute accuracy is still a bottleneck for robotic applications in low-volume, high-precision tasks.

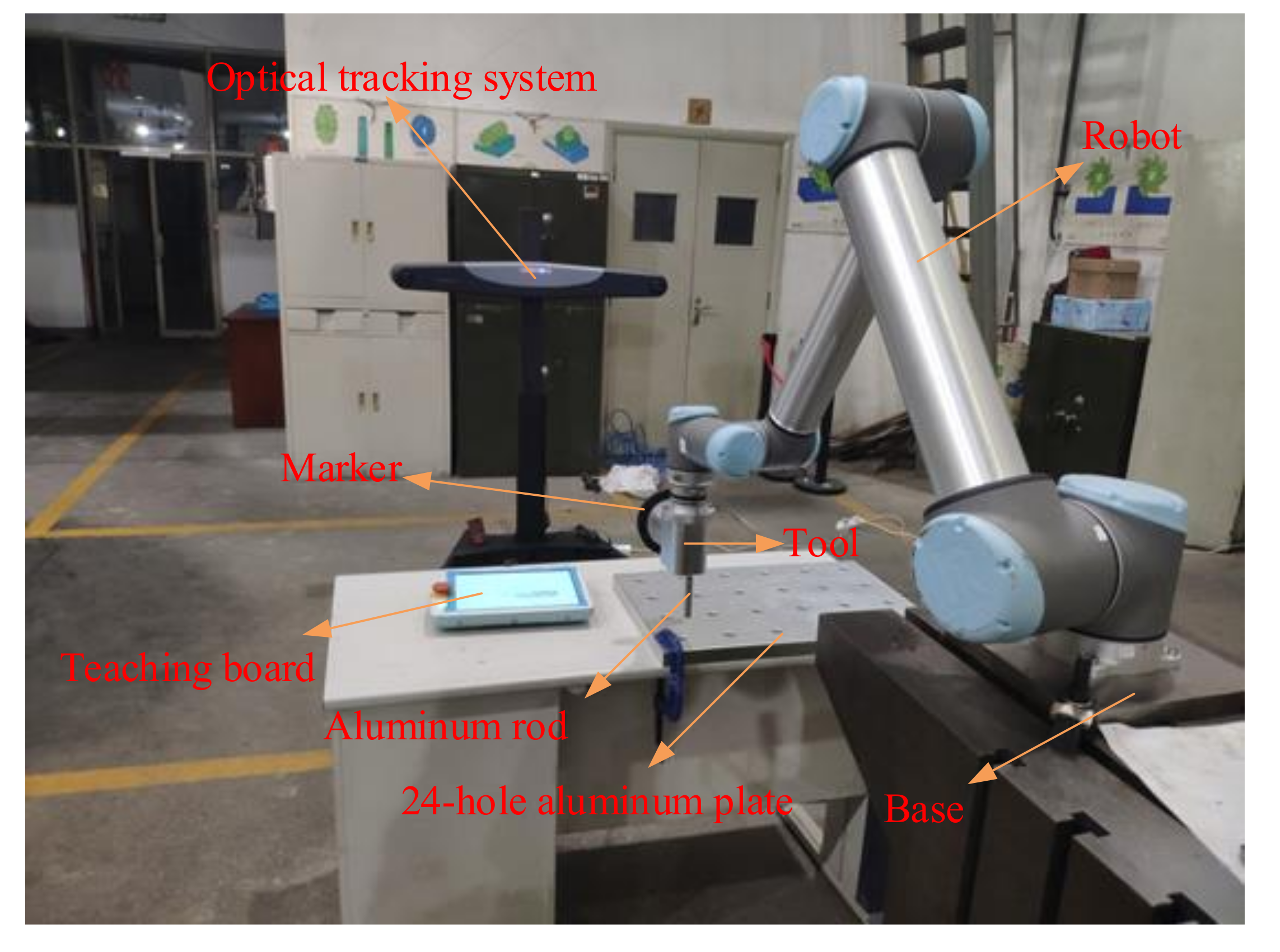

To overcome the above issues, we propose an approach that corrects the values of the kinematic parameters by measuring the actual end-effector poses with an optical tracking system. This approach integrates an optical tracking system and a rigid-body marker (with multiple marker targets) mounted on the end-effector for online compensation of the robot positioning error. The optical tracking system enables online measurement of the 6-DoF motion of the robot end-effector. In the parameter identification process, the influence of the tool, the positioning error of the robot base, and the errors in the D-H parameters are all considered. A least-squares numerical algorithm is then used to correct the errors in these kinematic parameters of the robot. Compared with a laser tracking system, an optical tracking system costs significantly less. In addition, the optical tracking system can simultaneously measure the poses of multiple markers attached to the measured object, which gives good visibility of the object and flexibility in integrating the optical tracking system. Compared with closed-loop calibration with gauges, the optical tracking system is convenient and easy to automate, which improves the calibration efficiency. Compared with online compensation with laser trackers, this method is more cost-effective and can provide better visibility of the robot tools. In summary, the contribution of this work is a comprehensive method that corrects not only the errors in the D-H parameters but also the positioning errors in the base and tool frames of the kinematic model; by adopting the optical tracking system, it can lead to an efficient and automatic calibration of the robotic system for robot users.

In the rest of the paper, the system setup is described in Section 2; the detailed theoretical development is presented in Section 3 and Section 4 for the robot kinematics and the calibration of kinematic parameters, respectively; and simulation and experimental results are given in Section 5 and Section 6, respectively.

4. Kinematic Parameter Identification

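According to Equation (11), the pose of the marker frame relative to the measurement frame is a function of these kinematic parameters:

$$\mathbf{x} = f(\boldsymbol{\eta}) \tag{12}$$

where $\mathbf{x} = [p_x, p_y, p_z, \alpha, \beta, \gamma]^{T}$ represents the position and orientation of the marker frame relative to the measurement frame, and $\boldsymbol{\eta} = [\eta_1, \ldots, \eta_{36}]^{T}$ collects the kinematic parameters. Taking a small disturbance on both sides of Equation (12), the model can be linearized as:

$$\Delta\mathbf{x} = \mathbf{J}\,\Delta\boldsymbol{\eta}$$

where $\Delta\mathbf{x}$ represents the disturbance in the pose of the marker frame, $\Delta\boldsymbol{\eta}$ represents the disturbance in each parameter, and $\mathbf{J}$ is the 6 × 36 Jacobian matrix of the nonlinear kinematic model Equation (12), defined as follows:

$$\mathbf{J} = \frac{\partial f}{\partial \boldsymbol{\eta}} = \begin{bmatrix} \dfrac{\partial f_1}{\partial \eta_1} & \cdots & \dfrac{\partial f_1}{\partial \eta_{36}} \\ \vdots & \ddots & \vdots \\ \dfrac{\partial f_6}{\partial \eta_1} & \cdots & \dfrac{\partial f_6}{\partial \eta_{36}} \end{bmatrix}$$

This matrix can be calculated numerically by finite differences. For example, its element in the first row and the first column can be obtained as:

$$J_{11} \approx \frac{f_1(\eta_1 + \varepsilon, \eta_2, \ldots, \eta_{36}) - f_1(\eta_1, \eta_2, \ldots, \eta_{36})}{\varepsilon}$$

where $\varepsilon$ is a small disturbance, assigned a fixed small value in this work. We can compare the column vectors in the Jacobian matrix $\mathbf{J}$. If two column vectors are identical, the errors in the two corresponding kinematic parameters have an identical effect on the error in the end-effector pose.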
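The finite-difference Jacobian and the column comparison can be illustrated with a minimal sketch. It does not use the paper's 36-parameter model: `forward_kinematics` is a hypothetical planar two-link arm with four parameters, the step size `eps=1e-6` is an assumed value, and the function names are illustrative. The tool length is deliberately placed along the second link so that two parameters have the same effect on the pose.

```python
import math

def forward_kinematics(params, joints):
    """Toy planar 2-link arm (hypothetical stand-in for the paper's model).

    params = [base_x, link1, link2, tool_len]; returns the pose [x, y, phi].
    """
    base_x, link1, link2, tool_len = params
    q1, q2 = joints
    reach = link2 + tool_len  # the tool offset acts along the second link
    x = base_x + link1 * math.cos(q1) + reach * math.cos(q1 + q2)
    y = link1 * math.sin(q1) + reach * math.sin(q1 + q2)
    return [x, y, q1 + q2]

def numerical_jacobian(model, params, joints, eps=1e-6):
    """Forward-difference Jacobian: jac[i][j] = d pose_i / d params_j."""
    base = model(params, joints)
    jac = [[0.0] * len(params) for _ in base]
    for j in range(len(params)):
        perturbed = list(params)
        perturbed[j] += eps  # disturb one parameter at a time
        moved = model(perturbed, joints)
        for i in range(len(base)):
            jac[i][j] = (moved[i] - base[i]) / eps
    return jac

def identical_columns(jac, tol=1e-6):
    """Return index pairs (a, b) whose Jacobian columns agree within tol."""
    n_cols = len(jac[0])
    pairs = []
    for a in range(n_cols):
        for b in range(a + 1, n_cols):
            if all(abs(row[a] - row[b]) <= tol for row in jac):
                pairs.append((a, b))
    return pairs

nominal = [0.0, 0.6, 0.5, 0.1]  # assumed nominal values in meters
J = numerical_jacobian(forward_kinematics, nominal, joints=(0.3, 0.8))
redundant = identical_columns(J)
print(redundant)
```

In this toy model, the columns for `link2` and `tool_len` (indices 2 and 3) are reported as identical, so the errors in these two parameters cannot be separated from pose measurements alone. The check here uses a single joint configuration; in practice, it would be repeated over several configurations before two parameters are treated as redundant.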

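According to Equation (11), the transformation matrix for the robot base is generated from 6 variables, the transformation matrix for the tool is generated from 6 variables, and the vector of D-H parameter errors is generated from 4m variables, the errors in the D-H parameter values; with m = 6 for a six-joint robot, this gives the 6 + 6 + 4m = 36 parameters of the Jacobian matrix. In this paper, multiple measurement data points are sampled for the pose of the marker frame relative to the measurement frame, and the robot joint angles are recorded simultaneously for each point. For each point, we have:

$$\mathbf{x}_k = f(\boldsymbol{\theta}_k, \boldsymbol{\eta}_0 + \Delta\boldsymbol{\eta})$$

where subscript $k$ denotes the $k$th sample point, $\mathbf{x}_k$ is the measured pose of the marker frame, $f$ is the forward kinematics of the calibration system, $\boldsymbol{\theta}_k$ denotes the robot joint angles, $\boldsymbol{\eta}_0$ collects the nominal values of the kinematic parameters, and $\Delta\boldsymbol{\eta}$ collects their errors. In this study, a least-squares numerical algorithm is applied to solve for the errors in the kinematic parameters, so the objective equation can be established as:

$$\min_{\Delta\boldsymbol{\eta}} \sum_{k} \left\| \mathbf{x}_k - f(\boldsymbol{\theta}_k, \boldsymbol{\eta}_0 + \Delta\boldsymbol{\eta}) \right\|_2^2$$

where $\|\cdot\|_2$ represents the 2-norm. In the optimization process, we use the fsolve function with the Levenberg-Marquardt algorithm for a numerical solution in MATLAB. Since the errors in the system kinematic parameters are small, we set the initial values of all the kinematic parameter errors to zero and the maximum number of iterations to 500; the function tolerance and the variable tolerance are each set to a small fixed value. The optimal solution is taken as the errors in the system kinematic parameters.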
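The identification step can be sketched in Python with NumPy under several assumptions. The sketch replaces MATLAB's fsolve with a hand-written, basic Levenberg-Marquardt loop; `forward_kinematics` is a hypothetical planar two-link arm with four parameters rather than the 36-parameter model; the "measurements" are synthetic and noise-free; and the tolerances `f_tol` and `x_tol` are assumed values. Only the zero initial guess and the limit of 500 iterations follow the text.

```python
import numpy as np

def forward_kinematics(params, joints):
    """Toy planar 2-link arm: params = [base_x, base_y, link1, link2]."""
    base_x, base_y, link1, link2 = params
    q1, q2 = joints
    x = base_x + link1 * np.cos(q1) + link2 * np.cos(q1 + q2)
    y = base_y + link1 * np.sin(q1) + link2 * np.sin(q1 + q2)
    return np.array([x, y])

def make_residual(nominal, joint_samples, measured):
    """Build r(errors): measured minus predicted poses, stacked over samples."""
    def residual(errors):
        predicted = [forward_kinematics(nominal + errors, q) for q in joint_samples]
        return (measured - np.array(predicted)).ravel()
    return residual

def numerical_jacobian(fun, x, eps=1e-6):
    """Forward-difference Jacobian of the residual vector."""
    r0 = fun(x)
    jac = np.empty((r0.size, x.size))
    for j in range(x.size):
        shifted = x.copy()
        shifted[j] += eps
        jac[:, j] = (fun(shifted) - r0) / eps
    return jac

def levenberg_marquardt(fun, x0, max_iter=500, f_tol=1e-16, x_tol=1e-10):
    """Basic damped Gauss-Newton loop; the tolerances are assumed values."""
    x = np.array(x0, dtype=float)
    damping = 1e-3
    cost = float(np.sum(fun(x) ** 2))
    for _ in range(max_iter):
        r = fun(x)
        jac = numerical_jacobian(fun, x)
        lhs = jac.T @ jac + damping * np.eye(x.size)
        step = np.linalg.solve(lhs, -jac.T @ r)
        new_cost = float(np.sum(fun(x + step) ** 2))
        if new_cost < cost:
            # Accept the step and trust the local model more.
            x = x + step
            done = (cost - new_cost) < f_tol or np.linalg.norm(step) < x_tol
            cost = new_cost
            damping *= 0.1
            if done:
                break
        else:
            # Reject the step and move toward gradient descent.
            damping *= 10.0
            if damping > 1e12:
                break
    return x

nominal = np.array([0.0, 0.0, 0.6, 0.5])               # assumed nominal values
true_errors = np.array([0.002, -0.001, 0.003, -0.002])  # synthetic ground truth
joint_samples = [(q1, q2) for q1 in (0.0, 0.7, 1.4, 2.1) for q2 in (0.5, 1.5)]
measured = np.array([forward_kinematics(nominal + true_errors, q)
                     for q in joint_samples])

residual = make_residual(nominal, joint_samples, measured)
identified = levenberg_marquardt(residual, np.zeros(4))  # zero initial errors
print(identified)
```

Because the synthetic measurements are noise-free and the four toy parameters are distinguishable over the sampled configurations, the identified errors match the synthetic ones closely. With real measurements, the residual would not vanish and the result would depend on the noise level and on the choice of sample configurations.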

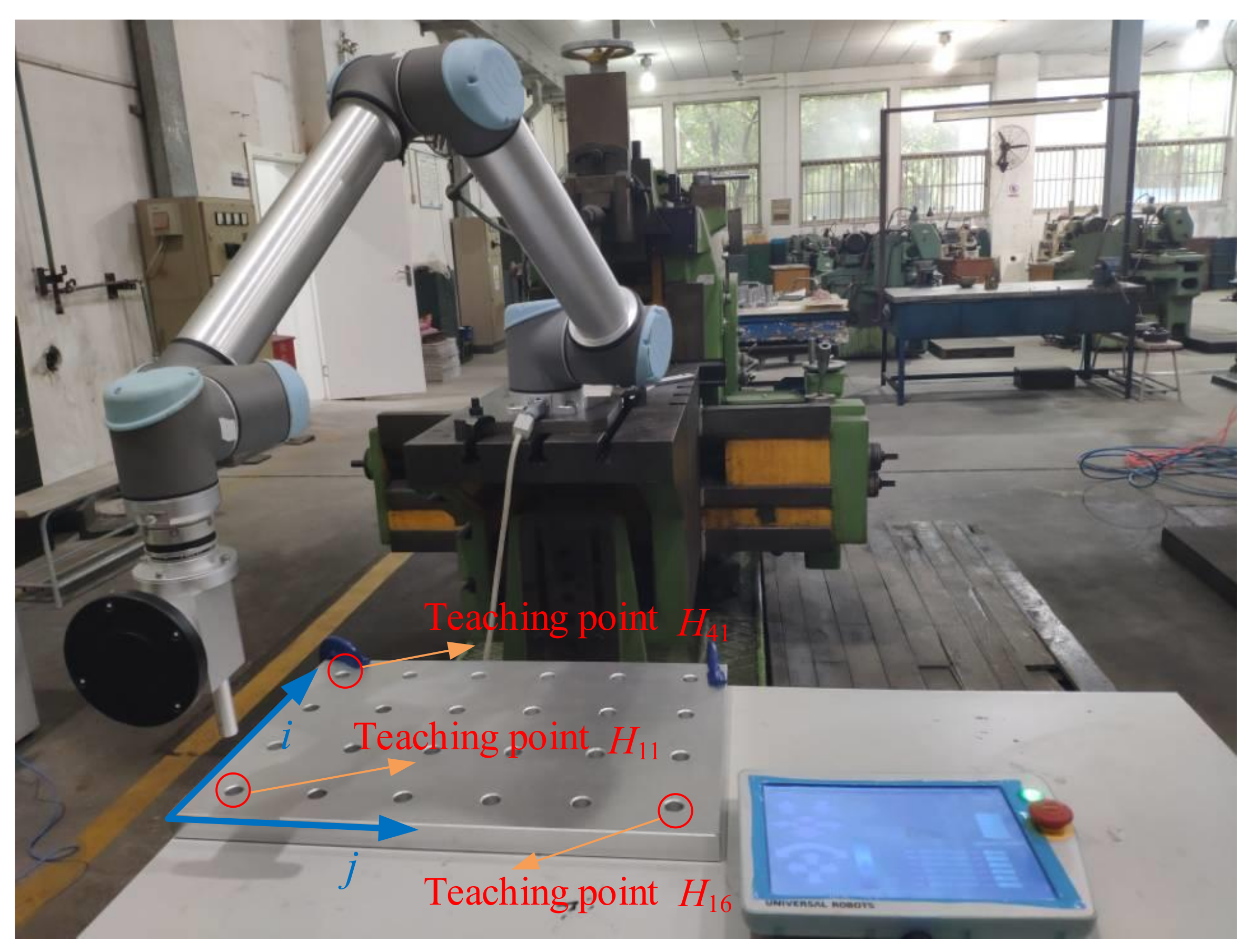

In summary, if we define a trajectory in the robot base frame, the specific process by which the robot accurately tracks the trajectory is shown in Figure 7. After establishing the kinematic model, we can select a series of robot configurations to calibrate the robot. To implement our method on a robot, the direct and inverse kinematic problems should be solved on its controller using the corrected values of the kinematic parameters. By using the optical tracking system to measure the pose error, we can identify the kinematic parameters of the robot. Using the nominal parameter values of the robot, we can solve for the joint angles corresponding to the target trajectory. Substituting the joint angles obtained with the nominal values into the calibrated model, we can accurately reach the target position.
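One possible reading of this compensation step is sketched below: the joint angles from the nominal inverse kinematics are used as the starting point, and they are then refined with Newton iterations on the calibrated model until the calibrated forward kinematics reaches the target. This is an illustrative interpretation, not the procedure of Figure 7. The planar two-link arm, the parameter values, and the function names are all assumptions.

```python
import math

def forward_kinematics(params, joints):
    """Toy planar 2-link arm: params = (base_x, base_y, link1, link2)."""
    base_x, base_y, link1, link2 = params
    q1, q2 = joints
    x = base_x + link1 * math.cos(q1) + link2 * math.cos(q1 + q2)
    y = base_y + link1 * math.sin(q1) + link2 * math.sin(q1 + q2)
    return x, y

def closed_form_ik(params, target):
    """Closed-form inverse kinematics (solution with q2 >= 0)."""
    base_x, base_y, link1, link2 = params
    dx, dy = target[0] - base_x, target[1] - base_y
    cos_q2 = (dx * dx + dy * dy - link1 ** 2 - link2 ** 2) / (2 * link1 * link2)
    q2 = math.acos(max(-1.0, min(1.0, cos_q2)))
    q1 = math.atan2(dy, dx) - math.atan2(link2 * math.sin(q2),
                                         link1 + link2 * math.cos(q2))
    return q1, q2

def corrected_joints(calibrated, target, seed, max_iter=20, tol=1e-12):
    """Newton iterations on the calibrated model, starting from the seed."""
    _, _, link1, link2 = calibrated
    q1, q2 = seed
    for _ in range(max_iter):
        x, y = forward_kinematics(calibrated, (q1, q2))
        ex, ey = target[0] - x, target[1] - y
        if math.hypot(ex, ey) < tol:
            break
        # Analytic Jacobian of (x, y) with respect to (q1, q2).
        s1, c1 = math.sin(q1), math.cos(q1)
        s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
        j11, j12 = -link1 * s1 - link2 * s12, -link2 * s12
        j21, j22 = link1 * c1 + link2 * c12, link2 * c12
        det = j11 * j22 - j12 * j21  # zero only at a singular configuration
        q1 += (j22 * ex - j12 * ey) / det
        q2 += (-j21 * ex + j11 * ey) / det
    return q1, q2

def position_error(params, joints, target):
    x, y = forward_kinematics(params, joints)
    return math.hypot(target[0] - x, target[1] - y)

nominal = (0.0, 0.0, 0.6, 0.5)              # assumed nominal parameters
calibrated = (0.002, -0.001, 0.603, 0.498)  # assumed identified parameters
target = (0.7, 0.4)

nominal_q = closed_form_ik(nominal, target)
corrected_q = corrected_joints(calibrated, target, seed=nominal_q)

# The calibrated model stands in for the real robot in this sketch.
nominal_error = position_error(calibrated, nominal_q, target)
corrected_error = position_error(calibrated, corrected_q, target)
print(nominal_error, corrected_error)
```

Here the calibrated model plays the role of the physical robot, so `nominal_error` is the positioning error predicted when the nominal joint angles are commanded, and `corrected_error` is the residual after the joint angles are recalculated with the calibrated parameters.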

7. Conclusions

In this work, we propose a method for improving robot accuracy with an optical tracking system. Compared with existing methods using laser trackers, the proposed method has lower cost and is more flexible because of the advantages of the optical tracking system. In both the simulation and the experiment, the influence of the tool on the robot accuracy is considered, whereas most existing calibration methods are performed without the tool used for the automation tasks. Furthermore, the proposed calibration procedure corrects the errors in the D-H parameters, the robot base position, and the tool position together. Instead of updating the kinematic parameters directly on the robot controller, users can incorporate a separate controller to recalculate the joint angles with the actual values of the kinematic parameters and then send these joint angles to the robot to complete the motion. The proposed method makes possible a comprehensive calibration of a whole robotic system with a specific tool mounted on the robot. The procedure can be completed without human intervention and can thus be easily automated by robot users.

Simulation and experimental studies are performed to demonstrate the effectiveness of the proposed method for a UR10 robot. It is shown that, with nominal kinematic parameters and a very limited number of taught points, the robot cannot smoothly complete an insertion task for multiple holes. Using the proposed method, however, the robot can successfully complete the same task. This enables us to use the paths generated from offline programming to complete complicated tasks over a large work envelope. Although the simulation and the experimental demonstration are done on a UR10 robot, the proposed method is expected to extend to other industrial and collaborative serial robots. In future research, a theoretical analysis of the convergence of the optimization process is necessary to evaluate the reliability of the final solution, and calibrating the UR10 robot with other equipment such as a laser tracking system will be investigated for comparison with the proposed method.