MMW Radar-Based Technologies in Autonomous Driving: A Review

Abstract

1. Introduction

- An organized survey of MMW radar-related models and methods applied to perception tasks such as detection and tracking, mapping, and localization.

- A thorough review of the latest DL frameworks applied to radar data.

- A list of the remaining challenges and future directions that can advance the practical application of MMW radar in autonomous driving.

2. Data Models and Representations from MMW Radar

2.1. Dynamic Target Modeling

2.2. Static Environment Modeling

2.2.1. Occupancy Grid Map (OGM)

2.2.2. Amplitude Grid Map (AGM)

2.2.3. Free Space

2.3. Association between Dynamic and Static Environment

3. MMW Radar Perception Approaches

3.1. Object Detection and Tracking

3.1.1. Radar-Only

3.1.2. Sensor Fusion

3.2. Radar-Based Vehicle Self-Localization

4. Future Trends for Radar-Based Technology

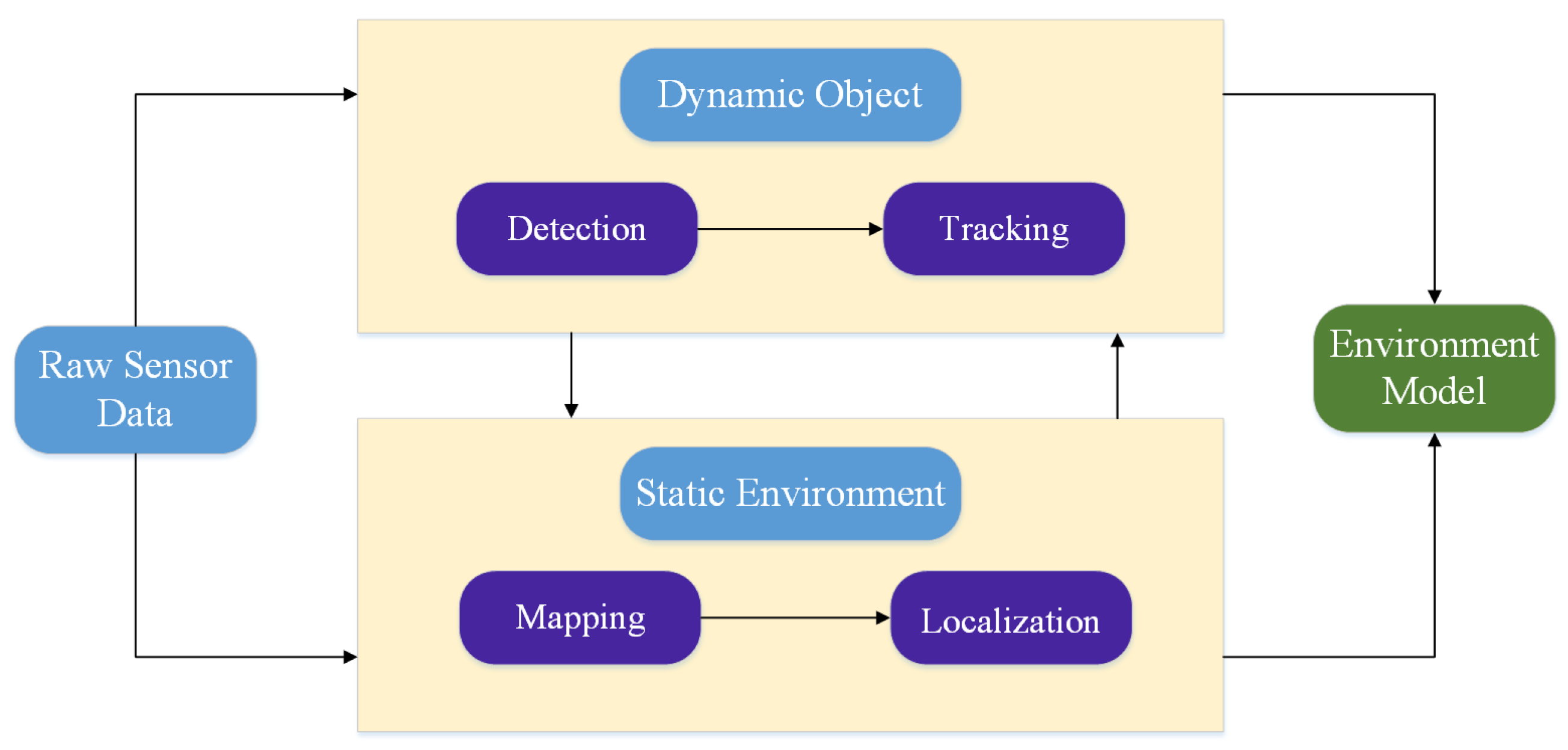

- MMW radar is widely used in perception tasks for autonomous driving. We divide environmental perception tasks into two types, as shown in Figure 12. For dynamic objects, object detection and tracking can be employed to obtain each object’s position, motion, dimensions, orientation, and category. For the static environment, SLAM provides environmental mapping information and determines the pose of the self-driving vehicle. MMW radar has played, and continues to play, an important role in all of these tasks, and it cannot be replaced by other sensors. Therefore, studies of MMW radar-based environmental perception algorithms are important.

- Multi-sensor fusion and DL attract a lot of attention and are becoming increasingly significant for radar-related studies. Because fusion combines the advantages of different sensors and improves confidence in single-sensor processing results, fusing radar data with data from other sensors is a good choice. Radar provides speed measurements, while other sensors provide semantic or dimensional information. Moreover, fusion can compensate for the low resolution of radar data. Radar-related fusion studies include data-level fusion and object-level fusion. In addition, with the release of autonomous driving datasets that include radar data, more and more researchers are training DNNs on radar data. Some works that use radar data alone or in deep fusion have obtained good results on detection, classification, semantic segmentation, and grid mapping. Although current networks for processing radar data are usually modified from NNs designed for images and LIDAR point clouds, we believe that as more essential characteristics for describing object features are revealed, radar-based deep learning algorithms will make further progress.

- Denser and more varied data: In many research works, we find that the main limitation of MMW radar-based algorithms is the sparsity of the data, which makes it hard to extract effective features. Compared with LIDAR, the lack of height information also restricts the use of radar in highly automated driving. Adding three-dimensional information to radar data can contribute to the application of automotive radar [31]. Therefore, the imaging ability of MMW radar must be further improved, especially with regard to angular resolution and the addition of height information.

- More thorough information fusion: Because the perception performance and field of view (FOV) of a single radar are limited, information fusion is necessary to improve performance and avoid blind spots [99]. Fusing radar with vision, highly automated driving maps [100], and connected-vehicle information [101] will enhance the completeness and accuracy of radar-based perception tasks, which ultimately improves the safety and reliability of autonomous driving. In the fusion process, how to obtain precise time-space synchronization among multiple sensors, how to realize effective data association between heterogeneous data, and how to obtain more meaningful information through fusion deserve careful consideration and more academic exploration.

- Introduction of advanced environmental perception algorithms: Deep learning and pattern recognition should be further introduced into radar data processing, which is important for fully exploiting the characteristics of radar data [2]. How to train DNNs on radar data effectively is a problem in urgent need of a solution.
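The data-association question raised in the fusion item above can be made concrete with a minimal object-level sketch. It assumes that radar and camera objects are already expressed in a common vehicle frame and refer to the same timestamp, which is exactly the time-space synchronization the text calls an open problem. The class names, the `associate` function, and the 2 m gate are hypothetical choices for illustration; greedy nearest-neighbor matching is used here only because it is simple, not because the surveyed works use it.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RadarObject:
    x: float      # longitudinal position in the vehicle frame [m]
    y: float      # lateral position in the vehicle frame [m]
    speed: float  # radial speed measured by the radar [m/s]


@dataclass
class CameraObject:
    x: float      # position already projected into the vehicle frame [m]
    y: float
    label: str    # semantic class from the image detector


def associate(radar: List[RadarObject],
              camera: List[CameraObject],
              gate: float = 2.0) -> List[Tuple[str, float]]:
    """Greedy nearest-neighbor association inside a distance gate.

    Returns (label, speed) pairs: the class comes from the camera,
    the speed from the radar.
    """
    candidates = []
    for ri, r in enumerate(radar):
        for ci, c in enumerate(camera):
            dist = math.hypot(r.x - c.x, r.y - c.y)
            if dist <= gate:  # ignore pairs that are too far apart
                candidates.append((dist, ri, ci))
    candidates.sort()  # closest pairs first

    used_radar, used_camera, fused = set(), set(), []
    for dist, ri, ci in candidates:
        if ri in used_radar or ci in used_camera:
            continue  # each object is matched at most once
        used_radar.add(ri)
        used_camera.add(ci)
        fused.append((camera[ci].label, radar[ri].speed))
    return fused


# Hypothetical example data: two radar objects, three camera objects.
radar = [RadarObject(10.0, 0.0, 5.0), RadarObject(30.0, 2.0, -3.0)]
camera = [CameraObject(30.5, 2.2, "car"),
          CameraObject(10.4, -0.3, "pedestrian"),
          CameraObject(60.0, 0.0, "truck")]
fused = associate(radar, camera)
```

In this example the truck seen by the camera has no radar counterpart inside the gate and stays unmatched. A production system would more likely use a statistical distance and a global assignment method instead of a fixed Euclidean gate.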

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MMW | Millimeter Wave |
| AV | Automated Vehicles |
| ADAS | Advanced Driving-Assistance Systems |
| DL | Deep Learning |
| OGM | Occupancy Grid Map |
| AGM | Amplitude Grid Map |
| SLAM | Simultaneous Localization And Mapping |

References

1. Yang, D.; Jiang, K.; Zhao, D.; Yu, C.; Cao, Z.; Xie, S.; Xiao, Z.; Jiao, X.; Wang, S.; Zhang, K. Intelligent and connected vehicles: Current status and future perspectives. Sci. China Technol. Sci. 2018, 61, 1446–1471.

2. Dickmann, J.; Klappstein, J.; Hahn, M.; Appenrodt, N.; Bloecher, H.L.; Werber, K.; Sailer, A. Automotive radar the key technology for autonomous driving: From detection and ranging to environmental understanding. In Proceedings of the IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 1–6 May 2016; pp. 1–6.

3. Dickmann, J.; Appenrodt, N.; Bloecher, H.L.; Brenk, C.; Hackbarth, T.; Hahn, M.; Klappstein, J.; Muntzinger, M.; Sailer, A. Radar contribution to highly automated driving. In Proceedings of the 44th European Microwave Conference, Rome, Italy, 6–9 October 2014; pp. 1715–1718.

4. On-Road Automated Vehicle Standards Committee and Others. Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles; SAE International: Warrendale, PA, USA, 2018.

5. Manjunath, A.; Liu, Y.; Henriques, B.; Engstle, A. Radar based object detection and tracking for autonomous driving. In Proceedings of the IEEE MTT-S International Conference on Microwaves for Intelligent Mobility (ICMIM), Munich, Germany, 16–18 April 2018; pp. 1–4.

6. Vahidi, A.; Eskandarian, A. Research advances in intelligent collision avoidance and adaptive cruise control. IEEE Trans. Intell. Transp. Syst. 2003, 4, 143–153.

7. Roos, F.; Kellner, D.; Klappstein, J.; Dickmann, J.; Dietmayer, K.; Muller-Glaser, K.D.; Waldschmidt, C. Estimation of the orientation of vehicles in high-resolution radar images. In Proceedings of the IEEE MTT-S International Conference on Microwaves for Intelligent Mobility (ICMIM), Heidelberg, Germany, 27–29 April 2015; pp. 1–4.

8. Li, M.; Stolz, M.; Feng, Z.; Kunert, M.; Henze, R.; Küçükay, F. An adaptive 3D grid-based clustering algorithm for automotive high resolution radar sensor. In Proceedings of the IEEE International Conference on Vehicular Electronics and Safety (ICVES), Madrid, Spain, 12–14 September 2018; pp. 1–7.

9. Kellner, D.; Barjenbruch, M.; Klappstein, J.; Dickmann, J.; Dietmayer, K. Instantaneous full-motion estimation of arbitrary objects using dual Doppler radar. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Dearborn, MI, USA, 8–11 June 2014; pp. 324–329.

10. Knill, C.; Scheel, A.; Dietmayer, K. A direct scattering model for tracking vehicles with high-resolution radars. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 298–303.

11. Steinhauser, D.; Held, P.; Kamann, A.; Koch, A.; Brandmeier, T. Micro-Doppler extraction of pedestrian limbs for high resolution automotive radar. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 764–769.

12. Abdulatif, S.; Wei, Q.; Aziz, F.; Kleiner, B.; Schneider, U. Micro-doppler based human-robot classification using ensemble and deep learning approaches. In Proceedings of the IEEE Radar Conference (RadarConf18), Oklahoma City, OK, USA, 23–27 April 2018; pp. 1043–1048.

13. Werber, K.; Rapp, M.; Klappstein, J.; Hahn, M.; Dickmann, J.; Dietmayer, K.; Waldschmidt, C. Automotive radar gridmap representations. In Proceedings of the IEEE MTT-S International Conference on Microwaves for Intelligent Mobility (ICMIM), Heidelberg, Germany, 27–29 April 2015; pp. 1–4.

14. Sless, L.; El Shlomo, B.; Cohen, G.; Oron, S. Road Scene Understanding by Occupancy Grid Learning from Sparse Radar Clusters using Semantic Segmentation. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCV), Seoul, Korea, 27 October–2 November 2019.

15. Lombacher, J.; Hahn, M.; Dickmann, J.; Wöhler, C. Potential of radar for static object classification using deep learning methods. In Proceedings of the IEEE MTT-S International Conference on Microwaves for Intelligent Mobility (ICMIM), San Diego, CA, USA, 19–20 May 2016; pp. 1–4.

16. Scheel, A.; Knill, C.; Reuter, S.; Dietmayer, K. Multi-sensor multi-object tracking of vehicles using high-resolution radars. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 558–565.

17. Rapp, M.; Hahn, M.; Thom, M.; Dickmann, J.; Dietmayer, K. Semi-markov process based localization using radar in dynamic environments. In Proceedings of the IEEE 18th International Conference on Intelligent Transportation Systems (ITSC), Gran Canaria, Spain, 15–18 September 2015; pp. 423–429.

18. Werber, K.; Klappstein, J.; Dickmann, J.; Waldschmidt, C. Interesting areas in radar gridmaps for vehicle self-localization. In Proceedings of the IEEE MTT-S International Conference on Microwaves for Intelligent Mobility (ICMIM), San Diego, CA, USA, 19–20 May 2016; pp. 1–4.

19. Schuster, F.; Wörner, M.; Keller, C.G.; Haueis, M.; Curio, C. Robust localization based on radar signal clustering. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 839–844.

20. Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

21. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 5099–5108.

22. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.

23. Schumann, O.; Hahn, M.; Dickmann, J.; Wöhler, C. Semantic segmentation on radar point clouds. In Proceedings of the 21st International Conference on Information Fusion (FUSION), Cambridge, UK, 10–13 July 2018; pp. 2179–2186.

24. Scheiner, N.; Appenrodt, N.; Dickmann, J.; Sick, B. Radar-based road user classification and novelty detection with recurrent neural network ensembles. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 722–729.

25. Chadwick, S.; Maddern, W.; Newman, P. Distant vehicle detection using radar and vision. In Proceedings of the International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 8311–8317.

26. Chang, S.; Zhang, Y.; Zhang, F.; Zhao, X.; Huang, S.; Feng, Z.; Wei, Z. Spatial Attention Fusion for Obstacle Detection Using MmWave Radar and Vision Sensor. Sensors 2020, 20, 956.

27. Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuscenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020; pp. 11621–11631.

28. Meinel, H.H.; Dickmann, J. Automotive radar: From its origins to future directions. Microw. J. 2013, 56, 24–40.

29. Hammarstrand, L.; Svensson, L.; Sandblom, F.; Sorstedt, J. Extended object tracking using a radar resolution model. IEEE Trans. Aerosp. Electron. Syst. 2012, 48, 2371–2386.

30. Kellner, D.; Klappstein, J.; Dietmayer, K. Grid-based DBSCAN for clustering extended objects in radar data. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Alcala de Henares, Spain, 3–7 June 2012; pp. 365–370.

31. Degerman, J.; Pernstål, T.; Alenljung, K. 3D occupancy grid mapping using statistical radar models. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 902–908.

32. Schmid, M.R.; Maehlisch, M.; Dickmann, J.; Wuensche, H.J. Dynamic level of detail 3d occupancy grids for automotive use. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), San Diego, CA, USA, 21–24 June 2010; pp. 269–274.

33. Prophet, R.; Stark, H.; Hoffmann, M.; Sturm, C.; Vossiek, M. Adaptions for automotive radar based occupancy gridmaps. In Proceedings of the IEEE MTT-S International Conference on Microwaves for Intelligent Mobility (ICMIM), Munich, Germany, 16–18 April 2018; pp. 1–4.

34. Li, M.; Feng, Z.; Stolz, M.; Kunert, M.; Henze, R.; Küçükay, F. High Resolution Radar-based Occupancy Grid Mapping and Free Space Detection. In Proceedings of the VEHITS, Funchal, Madeira, Portugal, 16–18 March 2018; pp. 70–81.

35. Kellner, D.; Barjenbruch, M.; Dietmayer, K.; Klappstein, J.; Dickmann, J. Instantaneous lateral velocity estimation of a vehicle using Doppler radar. In Proceedings of the 16th International Conference on Information Fusion (FUSION), Istanbul, Turkey, 9–12 July 2013; pp. 877–884.

36. Wen, Z.; Li, D.; Yu, W. A quantitative Evaluation for Radar Grid Map Construction. In Proceedings of the 2019 International Conference on Electromagnetics in Advanced Applications (ICEAA), Granada, Spain, 9–13 September 2019; pp. 794–796.

37. Sarholz, F.; Mehnert, J.; Klappstein, J.; Dickmann, J.; Radig, B. Evaluation of different approaches for road course estimation using imaging radar. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), San Francisco, CA, USA, 25–30 September 2011; pp. 4587–4592.

38. Dubé, R.; Hahn, M.; Schütz, M.; Dickmann, J.; Gingras, D. Detection of parked vehicles from a radar based occupancy grid. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium (IV), Dearborn, MI, USA, 8–11 June 2014; pp. 1415–1420.

39. Schütz, M.; Appenrodt, N.; Dickmann, J.; Dietmayer, K. Occupancy grid map-based extended object tracking. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium (IV), Dearborn, MI, USA, 8–11 June 2014; pp. 1205–1210.

40. Fang, Y.; Masaki, I.; Horn, B. Depth-based target segmentation for intelligent vehicles: Fusion of radar and binocular stereo. IEEE Trans. Intell. Transp. Syst. 2002, 3, 196–202.

41. Muntzinger, M.M.; Aeberhard, M.; Zuther, S.; Maehlisch, M.; Schmid, M.; Dickmann, J.; Dietmayer, K. Reliable automotive pre-crash system with out-of-sequence measurement processing. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium (IV), San Diego, CA, USA, 21–24 June 2010; pp. 1022–1027.

42. Prophet, R.; Li, G.; Sturm, C.; Vossiek, M. Semantic Segmentation on Automotive Radar Maps. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 756–763.

43. Lombacher, J.; Laudt, K.; Hahn, M.; Dickmann, J.; Wöhler, C. Semantic radar grids. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 1170–1175.

44. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

45. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; pp. 234–241.

46. Prophet, R.; Hoffmann, M.; Vossiek, M.; Li, G.; Sturm, C. Parking space detection from a radar based target list. In Proceedings of the 2017 IEEE MTT-S International Conference on Microwaves for Intelligent Mobility (ICMIM), Nagoya, Aichi, Japan, 19–21 March 2017; pp. 91–94.

47. Scheiner, N.; Appenrodt, N.; Dickmann, J.; Sick, B. Radar-based feature design and multiclass classification for road user recognition. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 779–786.

48. Obst, M.; Hobert, L.; Reisdorf, P. Multi-sensor data fusion for checking plausibility of V2V communications by vision-based multiple-object tracking. In Proceedings of the 2014 IEEE Vehicular Networking Conference (VNC), Paderborn, Germany, 3–5 December 2014; pp. 143–150.

49. Kadow, U.; Schneider, G.; Vukotich, A. Radar-vision based vehicle recognition with evolutionary optimized and boosted features. In Proceedings of the 2007 IEEE Intelligent Vehicles Symposium (IV), Istanbul, Turkey, 13–15 June 2007; pp. 749–754.

50. Chunmei, M.; Yinong, L.; Ling, Z.; Yue, R.; Ke, W.; Yusheng, L.; Zhoubing, X. Obstacles detection based on millimetre-wave radar and image fusion techniques. In Proceedings of the IET International Conference on Intelligent and Connected Vehicles (ICV), Chongqing, China, 22–23 September 2016.

51. Alessandretti, G.; Broggi, A.; Cerri, P. Vehicle and guard rail detection using radar and vision data fusion. IEEE Trans. Intell. Transp. Syst. 2007, 8, 95–105.

52. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

53. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

54. Chavez-Garcia, R.O.; Burlet, J.; Vu, T.D.; Aycard, O. Frontal object perception using radar and mono-vision. In Proceedings of the 2012 IEEE Intelligent Vehicles Symposium (IV), Alcala de Henares, Spain, 3–7 June 2012; pp. 159–164.

55. Garcia, F.; Cerri, P.; Broggi, A.; de la Escalera, A.; Armingol, J.M. Data fusion for overtaking vehicle detection based on radar and optical flow. In Proceedings of the 2012 IEEE Intelligent Vehicles Symposium (IV), Alcala de Henares, Spain, 3–7 June 2012; pp. 494–499.

56. Zhong, Z.; Liu, S.; Mathew, M.; Dubey, A. Camera radar fusion for increased reliability in adas applications. Electron. Imaging 2018, 2018, 258.

57. Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

58. Fortmann, T.; Bar-Shalom, Y.; Scheffe, M. Sonar tracking of multiple targets using joint probabilistic data association. IEEE J. Ocean. Eng. 1983, 8, 173–184.

59. Blackman, S.S. Multiple hypothesis tracking for multiple target tracking. IEEE Aerosp. Electron. Syst. Mag. 2004, 19, 5–18.

60. Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35–45.

61. Sorenson, H.W. Kalman Filtering: Theory and Application; IEEE: Piscataway, NJ, USA, 1985.

62. Julier, S.J.; Uhlmann, J.K. Unscented filtering and nonlinear estimation. Proc. IEEE 2004, 92, 401–422.

63. Yager, R.R. On the Dempster-Shafer framework and new combination rules. Inf. Sci. 1987, 41, 93–137.

64. Chavez-Garcia, R.O.; Vu, T.D.; Aycard, O.; Tango, F. Fusion framework for moving-object classification. In Proceedings of the 16th International Conference on Information Fusion (FUSION), Istanbul, Turkey, 9–12 July 2013; pp. 1159–1166.

65. Chavez-Garcia, R.O.; Aycard, O. Multiple sensor fusion and classification for moving object detection and tracking. IEEE Trans. Intell. Transp. Syst. 2015, 17, 525–534.

66. Yu, R.; Li, A.; Morariu, V.I.; Davis, L.S. Visual relationship detection with internal and external linguistic knowledge distillation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1974–1982.

67. Kim, H.t.; Song, B. Vehicle recognition based on radar and vision sensor fusion for automatic emergency braking. In Proceedings of the 2013 13th International Conference on Control, Automation and Systems (ICCAS), Gwangju, Korea, 20–23 October 2013; pp. 1342–1346.

68. Gaisser, F.; Jonker, P.P. Road user detection with convolutional neural networks: An application to the autonomous shuttle WEpod. In Proceedings of the 2017 Fifteenth IAPR International Conference on Machine Vision Applications (MVA), Toyoda, Japan, 8–12 May 2017; pp. 101–104.

69. Kato, T.; Ninomiya, Y.; Masaki, I. An obstacle detection method by fusion of radar and motion stereo. IEEE Trans. Intell. Transp. Syst. 2002, 3, 182–188.

70. Sugimoto, S.; Tateda, H.; Takahashi, H.; Okutomi, M. Obstacle detection using millimeter-wave radar and its visualization on image sequence. In Proceedings of the 17th International Conference on Pattern Recognition (ICPR), Cambridge, UK, 26 August 2004; Volume 3, pp. 342–345.

71. Bombini, L.; Cerri, P.; Medici, P.; Alessandretti, G. Radar-vision fusion for vehicle detection. In Proceedings of the International Workshop on Intelligent Transportation, Toronto, ON, Canada, 17–20 September 2006; pp. 65–70.

72. Wang, X.; Xu, L.; Sun, H.; Xin, J.; Zheng, N. On-road vehicle detection and tracking using MMW radar and monovision fusion. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2075–2084.

73. Nabati, R.; Qi, H. RRPN: Radar Region Proposal Network for Object Detection in Autonomous Vehicles. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 3093–3097.

74. John, V.; Mita, S. RVNet: Deep sensor fusion of monocular camera and radar for image-based obstacle detection in challenging environments. In Proceedings of the Pacific-Rim Symposium on Image and Video Technology (PSIVT), Sydney, Australia, 18–22 November 2019; pp. 351–364.

75. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

76. Tian, Z.; Shen, C.; Chen, H.; He, T. Fcos: Fully convolutional one-stage object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 9627–9636.

77. Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

78. Broßeit, P.; Kellner, D.; Brenk, C.; Dickmann, J. Fusion of doppler radar and geometric attributes for motion estimation of extended objects. In Proceedings of the 2015 Sensor Data Fusion: Trends, Solutions, Applications (SDF), Bonn, Germany, 6–8 October 2015; pp. 1–5.

79. Schütz, M.; Appenrodt, N.; Dickmann, J.; Dietmayer, K. Simultaneous tracking and shape estimation with laser scanners. In Proceedings of the 16th International Conference on Information Fusion (FUSION), Istanbul, Turkey, 9–12 July 2013; pp. 885–891.

80. Steinemann, P.; Klappstein, J.; Dickmann, J.; Wünsche, H.J.; Hundelshausen, F.V. Determining the outline contour of vehicles in 3D-LIDAR-measurements. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011; pp. 479–484.

81. Jo, K.; Kim, C.; Sunwoo, M. Simultaneous localization and map change update for the high definition map-based autonomous driving car. Sensors 2018, 18, 3145.

82. Xiao, Z.; Yang, D.; Wen, T.; Jiang, K.; Yan, R. Monocular Localization with Vector HD Map (MLVHM): A Low-Cost Method for Commercial IVs. Sensors 2020, 20, 1870.

83. Yoneda, K.; Hashimoto, N.; Yanase, R.; Aldibaja, M.; Suganuma, N. Vehicle localization using 76GHz omnidirectional millimeter-wave radar for winter automated driving. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 971–977.

84. Holder, M.; Hellwig, S.; Winner, H. Real-time pose graph SLAM based on radar. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 1145–1151.

85. Adams, M.; Adams, M.D.; Jose, E. Robotic Navigation and Mapping with Radar; Artech House: Norwood, MA, USA, 2012.

86. Dissanayake, M.G.; Newman, P.; Clark, S.; Durrant-Whyte, H.F.; Csorba, M. A solution to the simultaneous localization and map building (SLAM) problem. IEEE Trans. Robot. Autom. 2001, 17, 229–241.

87. Thrun, S.; Fox, D.; Burgard, W.; Dellaert, F. Robust Monte Carlo localization for mobile robots. Artif. Intell. 2001, 128, 99–141.

88. Hahnel, D.; Triebel, R.; Burgard, W.; Thrun, S. Map building with mobile robots in dynamic environments. In Proceedings of the 2003 IEEE International Conference on Robotics and Automation (ICRA), Taipei, Taiwan, 14–19 September 2003; Volume 2, pp. 1557–1563.

89. Schreier, M.; Willert, V.; Adamy, J. Grid mapping in dynamic road environments: Classification of dynamic cell hypothesis via tracking. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 3995–4002.

90. Rapp, M.; Giese, T.; Hahn, M.; Dickmann, J.; Dietmayer, K. A feature-based approach for group-wise grid map registration. In Proceedings of the 2015 IEEE 18th International Conference on Intelligent Transportation Systems (ITSC), Gran Canaria, Spain, 15–18 September 2015; pp. 511–516.

91. Thrun, S.; Montemerlo, M. The graph SLAM algorithm with applications to large-scale mapping of urban structures. Int. J. Robot. Res. 2006, 25, 403–429.

92. Schuster, F.; Keller, C.G.; Rapp, M.; Haueis, M.; Curio, C. Landmark based radar SLAM using graph optimization. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 2559–2564.

93. Werber, K.; Barjenbruch, M.; Klappstein, J.; Dickmann, J.; Waldschmidt, C. RoughCough—A new image registration method for radar based vehicle self-localization. In Proceedings of the 2015 18th International Conference on Information Fusion (FUSION), Washington, DC, USA, 6–9 July 2015; pp. 1533–1541.

94. Werber, K.; Klappstein, J.; Dickmann, J.; Waldschmidt, C. Association of Straight Radar Landmarks for Vehicle Self-Localization. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 736–743.

95. Wirtz, S.; Paulus, D. Evaluation of established line segment distance functions. Pattern Recognit. Image Anal. 2016, 26, 354–359.

96. Werber, K.; Klappstein, J.; Dickmann, J.; Waldschmidt, C. Point group associations for radar-based vehicle self-localization. In Proceedings of the 2016 19th International Conference on Information Fusion (FUSION), Heidelberg, Germany, 5–8 July 2016; pp. 1638–1646.

97. Ward, E.; Folkesson, J. Vehicle localization with low cost radar sensors. In Proceedings of the 2016 IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 864–870.

98. Narula, L.; Iannucci, P.A.; Humphreys, T.E. Automotive-radar-based 50-cm urban positioning. In Proceedings of the 2020 IEEE/ION Position, Location and Navigation Symposium (PLANS), Portland, OR, USA, 20–23 April 2020; pp. 856–867.

99. Franke, U.; Pfeiffer, D.; Rabe, C.; Knoeppel, C.; Enzweiler, M.; Stein, F.; Herrtwich, R. Making bertha see. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCV), Sydney, Australia, 1–8 December 2013; pp. 214–221.

100. Jo, K.; Lee, M.; Kim, J.; Sunwoo, M. Tracking and behavior reasoning of moving vehicles based on roadway geometry constraints. IEEE Trans. Intell. Transp. Syst. 2016, 18, 460–476.

101. Kim, S.W.; Qin, B.; Chong, Z.J.; Shen, X.; Liu, W.; Ang, M.H.; Frazzoli, E.; Rus, D. Multivehicle cooperative driving using cooperative perception: Design and experimental validation. IEEE Trans. Intell. Transp. Syst. 2014, 16, 663–680.

| Task | Data Format | Algorithm | Advantages and Usefulness | Ref. |
|---|---|---|---|---|
| Dynamic Target Modeling | Cluster-layer data | Estimation of extended objects using the Doppler effect | 1. Estimates the full 2D motion of extended objects; 2. Used to track dynamic extended objects | [9,10,29] |
| Dynamic Target Modeling | Cluster-layer data | Clustering based on DBSCAN | 1. Estimates the dimensions of extended objects | [7,8,30] |
| Dynamic Target Modeling | R-D Map | Frequency spectrum analysis | 1. Obtains the category of dynamic objects | [11,12] |
| Static Environment Modeling | Cluster-layer data | Occupancy grid maps | 1. Used for road scene understanding and localization | [13,14,31,32] |
| Static Environment Modeling | Cluster-layer data | Amplitude grid maps | 1. Reflects the characteristics of objects in addition to environmental mapping | [13] |
| Static Environment Modeling | Cluster-layer data | Free space | 1. Displays available driving areas; 2. Valuable for vehicle trajectory planning | [33,34] |
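The Doppler-based motion estimation in the first row of the table can be illustrated with a simplified sketch. It assumes a single stationary (or ego-motion-compensated) radar and a rigid object in pure translation, so that each detection at azimuth θ satisfies v_r = v_x cos θ + v_y sin θ. Fitting (v_x, v_y) by least squares over several detections of the same object is a simplification for illustration, not the dual-radar full-motion method of the cited works; the function name and the sample values are hypothetical.

```python
import math
from typing import Sequence, Tuple


def fit_velocity_profile(azimuths: Sequence[float],
                         radial_speeds: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of (vx, vy) from v_r = vx*cos(theta) + vy*sin(theta).

    Solves the 2x2 normal equations directly, so no external library is needed.
    """
    scc = scs = sss = bc = bs = 0.0
    for theta, vr in zip(azimuths, radial_speeds):
        c, s = math.cos(theta), math.sin(theta)
        scc += c * c
        scs += c * s
        sss += s * s
        bc += c * vr
        bs += s * vr
    det = scc * sss - scs * scs
    if abs(det) < 1e-12:
        # All detections share (almost) one azimuth: only the radial
        # component is observable, so the fit is ill-posed.
        raise ValueError("azimuth spread too small to resolve both components")
    vx = (sss * bc - scs * bs) / det
    vy = (scc * bs - scs * bc) / det
    return vx, vy


# Hypothetical detections of one object moving at (10, -2) m/s.
true_vx, true_vy = 10.0, -2.0
azimuths = [-0.2, 0.0, 0.1, 0.3]  # [rad]
radial = [true_vx * math.cos(a) + true_vy * math.sin(a) for a in azimuths]
vx, vy = fit_velocity_profile(azimuths, radial)
```

The guard on the determinant reflects a practical limit of this approach: an object that spans only a narrow azimuth range gives a poorly conditioned fit, which is one reason extended objects and high angular resolution matter for this kind of estimation.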

| Algorithm | Baseline | Performance on nuScenes [27] | Improvement |
|---|---|---|---|
| SAF-FCOS [26] | FCOS [76] | mAP 72.4% | mAP 7.7% |
| RVNet [74] | TinyYOLOv3 [77] | mAP 56% | mAP 16% |
| CRF-Net [25] | RetinaNet [75] | mAP 55.99% | mAP 12.96% |

| Method | Strengths | Shortcomings |
|---|---|---|
| Occupancy Grid Map | Most common approach in radar-based SLAM | High computational cost when updating the map |
| Amplitude Grid Map | Distinguishes different materials according to reflection characteristics | Less clear position representation compared with OGMs |
| Point Cloud Map | Robust and efficient mapping method that saves considerable time and memory | Difficulty of tuning particle filter parameters |
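To make the occupancy grid map row more tangible, the following is a minimal sketch of the standard log-odds occupancy update, assuming a uniform prior of 0.5 and a fixed hit probability for every radar detection. The `OccupancyGrid` class, its parameters, and the value `p_hit=0.7` are hypothetical; a real radar inverse sensor model would also lower the occupancy of cells between the sensor and the detection, which is omitted here.

```python
import math
from typing import List


def logit(p: float) -> float:
    """Convert a probability into log-odds."""
    return math.log(p / (1.0 - p))


class OccupancyGrid:
    def __init__(self, width: int, height: int, cell_size: float = 0.5):
        self.width = width
        self.height = height
        self.cell_size = cell_size  # edge length of one cell [m]
        # Log-odds 0.0 corresponds to probability 0.5 (unknown).
        self.log_odds: List[List[float]] = [[0.0] * width for _ in range(height)]

    def add_detection(self, x: float, y: float, p_hit: float = 0.7) -> bool:
        """Raise the occupancy of the cell containing (x, y); False if outside."""
        ix = math.floor(x / self.cell_size)
        iy = math.floor(y / self.cell_size)
        if not (0 <= ix < self.width and 0 <= iy < self.height):
            return False
        # Bayesian update in log-odds form is a simple addition.
        self.log_odds[iy][ix] += logit(p_hit)
        return True

    def probability(self, ix: int, iy: int) -> float:
        """Convert the stored log-odds of a cell back into a probability."""
        return 1.0 / (1.0 + math.exp(-self.log_odds[iy][ix]))


# Two detections falling into the same 1 m cell.
grid = OccupancyGrid(width=10, height=10, cell_size=1.0)
grid.add_detection(2.5, 3.5)
grid.add_detection(2.4, 3.6)
p = grid.probability(2, 3)
```

Each update touches only one cell here, but a full inverse sensor model updates every cell along each measurement ray, which is where the computational cost noted in the table comes from.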

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhou, T.; Yang, M.; Jiang, K.; Wong, H.; Yang, D. MMW Radar-Based Technologies in Autonomous Driving: A Review. Sensors 2020, 20, 7283. https://doi.org/10.3390/s20247283