3D Measurement of Human Chest and Abdomen Surface Based on 3D Fourier Transform and Time Phase Unwrapping

Abstract

1. Introduction

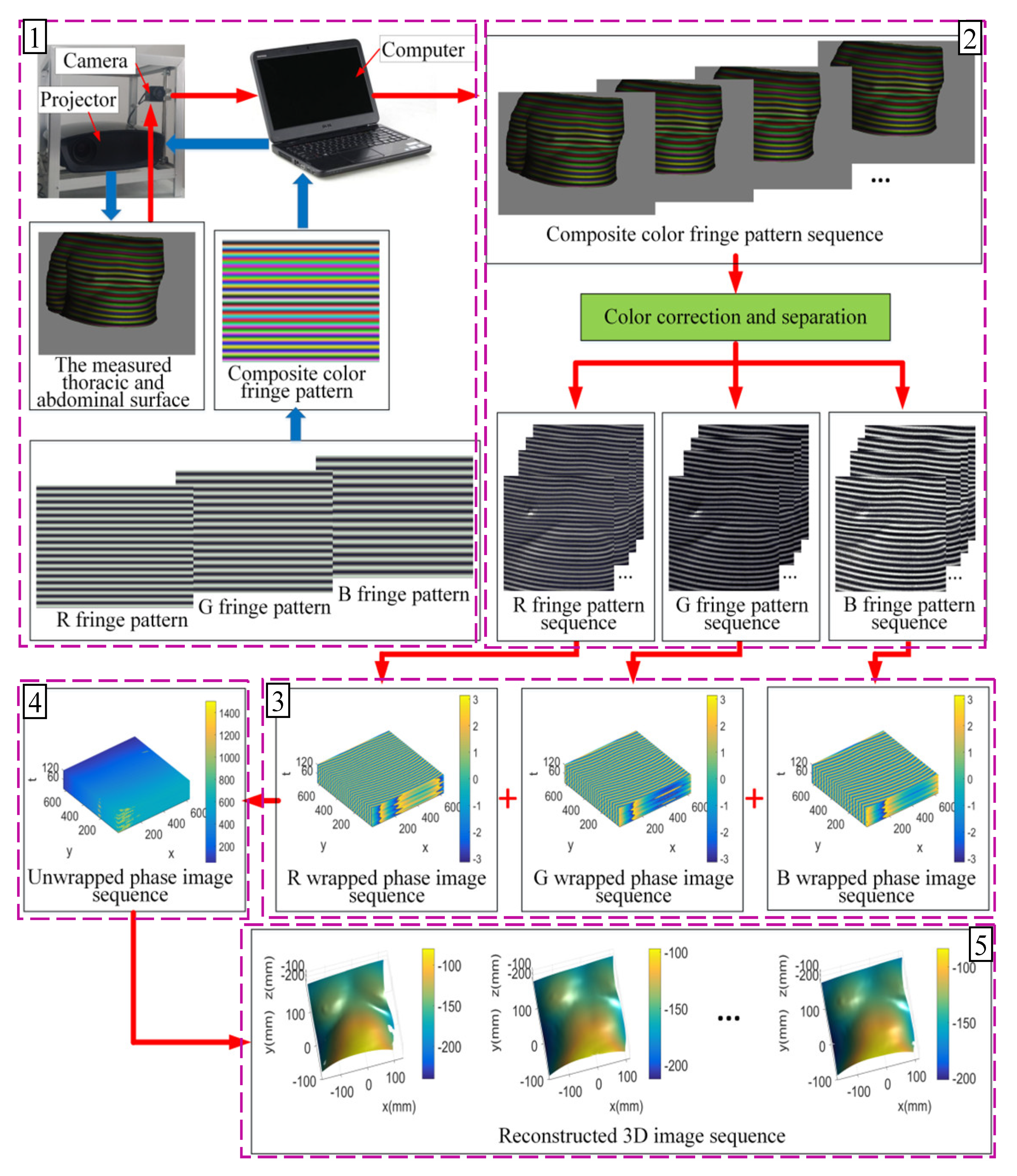

2. 3D Measurement System Description

- (1) Pattern projection and image acquisition. The computer generates three cosine stripe patterns of different periods, one in each of the red, green, and blue (RGB) primary colors, and combines them into a single composite color stripe pattern, which is projected onto the chest and abdomen surface of the subject. The camera captures stripe images of this surface at regular intervals as it moves with breathing, yielding a composite color stripe image sequence. Because the projected pattern never changes, no time is spent on switching or setting up patterns. In addition, the measurement system captures only one composite color stripe image for each measurement, which shortens the image acquisition time. Together, these properties lay the foundation for dynamic 3D measurement of the human chest and abdomen surface.

- (2) Image color correction and separation. For the composite color stripe image sequence, color coupling correction and color separation are performed using the correction matrix of each pixel, which separates the sequence into three RGB primary color fringe image sequences of different periods. Color coupling arises where the spectral response curves of the three color channels of the 3CCD industrial camera overlap [14], so a hardware-based color calibration must be completed before the measurement. Specifically, the projector projects four patterns (full red, full green, full blue, and full black) onto the chest and abdomen surface, and the camera captures the four corresponding images. From these four images, the correction matrix of each pixel is obtained according to the Caspi illumination model [15].

- (3)

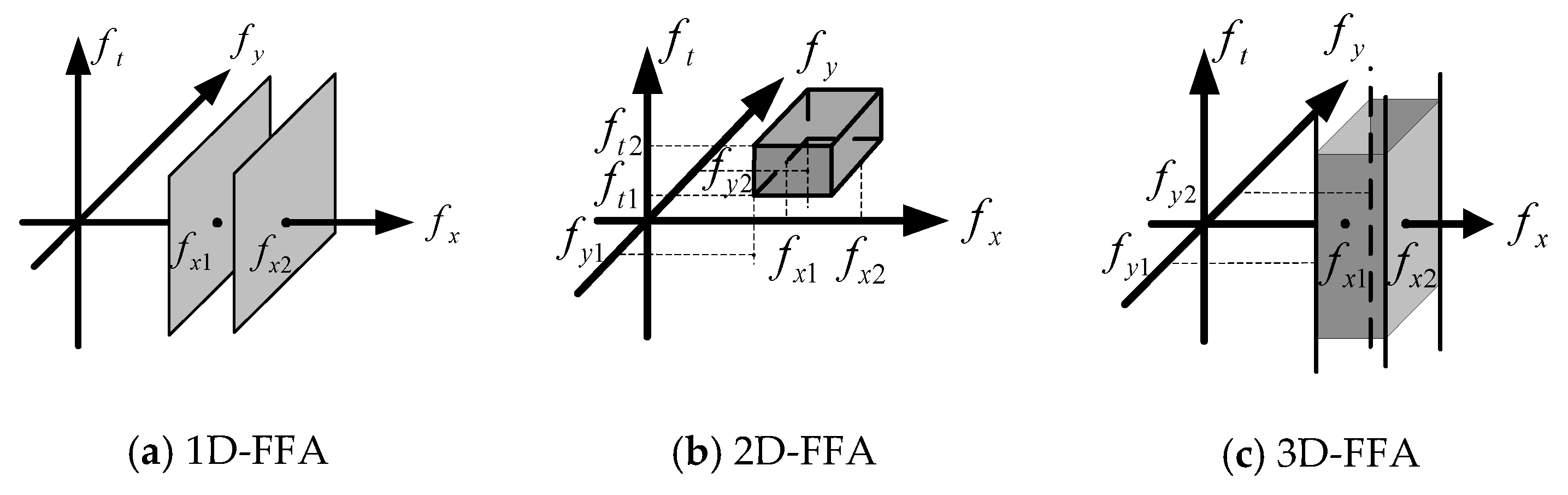

- Folded phase extraction. For each image in the RGB stripe image sequence, three-dimensional Fourier fringe analysis (3D-FFA) is used to extract the folding phase of each pixel and get the folded phase map of each image, and then, RGB folding phase map sequences can be formed. Fourier fringe analysis (FFA) is extended from one-dimensional Fourier fringe analysis (1D-FFA) to two-dimensional Fourier fringe analysis (2D-FFA) by using the properties of 2D fringe images. After this process, useful signals and interference can be separated better. This method becomes an effective measurement for 3D measurement of flat surfaces [16]. In this paper, the image sequences of the chest and abdomen surface are taken as a 3D one, which is analyzed by 3D Fourier transformation. Useful signals and interference can be separated further by increasing the time dimension, so as to reduce the influence of interference and improve the accuracy of measurement.

- (4) Folded phase unwrapping. From the RGB folded phase maps at the same instant, the three-frequency time phase unwrapping method proposed in this paper unwraps the folded phase into a continuous absolute phase, giving the absolute phase map at that instant; repeating this for every instant forms an absolute phase sequence. In this method, the unwrapping operation depends on the differences between the fractional parts of the measured folded phases, which ensures that, under certain conditions, the absolute phase error does not exceed the folded phase error. In addition, the absolute phase value itself indicates whether a large error has occurred, so the effect of large absolute phase errors can be eliminated or reduced by discarding or interpolating the affected values. The phase unwrapping is achieved by solving the set of remainder equations over the maximum range.

- (5) Three-dimensional image sequence acquisition. From the absolute phase map sequence, the 3D coordinates are calculated based on the triangulation principle to form a 3D image sequence of the human chest and abdomen surface. The sequence describes the 3D shape of the human chest and abdomen surface at each sampling instant during respiratory movement.
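As an illustration of step (1), a composite color stripe pattern can be sketched as three cosine fringes of different periods placed in the R, G, and B channels of one image. The function name `composite_fringe_pattern`, the image size, and the periods of 16, 21, and 25 pixels are assumptions chosen for the example; the source does not state the values used in the experiments.

```python
import numpy as np

def composite_fringe_pattern(width, height, periods_px):
    """Build an RGB image whose R, G, B channels carry cosine fringes of different periods.

    periods_px: three fringe periods in pixels (hypothetical values, not from the paper).
    """
    x = np.arange(width, dtype=np.float64)
    channels = []
    for period in periods_px:
        # Cosine fringe varying along x, scaled to the range [0, 1]
        row = 0.5 + 0.5 * np.cos(2.0 * np.pi * x / period)
        # Repeat the same row for every image line (vertical stripes)
        channels.append(np.tile(row, (height, 1)))
    pattern = np.stack(channels, axis=-1)          # shape (height, width, 3)
    return np.round(pattern * 255.0).astype(np.uint8)

# Assumed projector resolution and pairwise coprime periods
pattern = composite_fringe_pattern(width=1024, height=768, periods_px=(16, 21, 25))
```

The single `pattern` array stays fixed for the whole measurement, which matches the point made in step (1) that the projected pattern never changes.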
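The calibration in step (2) can be sketched with a simplified linear coupling model, in which each pixel's captured color equals a 3×3 coupling matrix applied to the projected color plus the response to the black pattern. This is an assumption made for illustration: the model in [15] also accounts for effects such as projector nonlinearity, and the function names `per_pixel_correction` and `decouple` are hypothetical.

```python
import numpy as np

def per_pixel_correction(img_red, img_green, img_blue, img_black):
    """Estimate a per-pixel 3x3 correction matrix from four calibration images of shape (H, W, 3)."""
    black = img_black.astype(np.float64)
    # Camera response to each pure projector primary, with the black response removed
    columns = [img.astype(np.float64) - black for img in (img_red, img_green, img_blue)]
    coupling = np.stack(columns, axis=-1)   # (H, W, 3, 3): [camera channel, projector channel]
    # The correction matrix is the (pseudo-)inverse of the coupling matrix at each pixel
    return np.linalg.pinv(coupling), black

def decouple(image, correction, black):
    """Apply the per-pixel correction to one captured composite image."""
    diff = image.astype(np.float64) - black
    return np.einsum("hwij,hwj->hwi", correction, diff)

# Synthetic check with an assumed coupling matrix and ambient level
rng = np.random.default_rng(0)
h, w = 4, 5
true_coupling = 200.0 * np.array([[0.90, 0.10, 0.00],
                                  [0.15, 0.80, 0.10],
                                  [0.00, 0.20, 0.85]])
ambient = np.full((h, w, 3), 10.0)

def camera(projected):
    # Simulated camera: linear channel coupling plus ambient light
    return np.einsum("ij,hwj->hwi", true_coupling, projected) + ambient

calib = [camera(np.broadcast_to(p, (h, w, 3))) for p in np.eye(3)]
black_img = camera(np.zeros((h, w, 3)))
correction, black = per_pixel_correction(calib[0], calib[1], calib[2], black_img)

projected = rng.uniform(0.0, 1.0, size=(h, w, 3))
recovered = decouple(camera(projected), correction, black)
```

Under this linear assumption, `recovered` reproduces the projected channel intensities, so the three channels can then be treated as separate fringe images.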
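A minimal sketch of the idea in step (3) is given below: the image sequence is stacked into a (time, row, column) volume, transformed with a 3D FFT, filtered around the fringe carrier, and transformed back so that the folded phase is the angle of the result. The Gaussian window, its widths `sigma`, the carrier frequency, and the synthetic test signal are all assumptions for the example, not the parameters used by the authors.

```python
import numpy as np

def wrapped_phase_3d_ffa(seq, carrier_fx, sigma=(0.2, 0.2, 0.05)):
    """Folded phase of a fringe sequence seq[t, y, x] whose carrier runs along x.

    carrier_fx: carrier frequency in cycles per pixel.
    sigma: assumed Gaussian window widths along (t, y, x), in cycles per sample.
    """
    n_t, n_y, n_x = seq.shape
    spectrum = np.fft.fftn(seq)
    ft = np.fft.fftfreq(n_t)[:, None, None]
    fy = np.fft.fftfreq(n_y)[None, :, None]
    fx = np.fft.fftfreq(n_x)[None, None, :]
    # 3D Gaussian band-pass centered on the positive carrier lobe
    window = np.exp(-0.5 * ((ft / sigma[0]) ** 2
                            + (fy / sigma[1]) ** 2
                            + ((fx - carrier_fx) / sigma[2]) ** 2))
    analytic = np.fft.ifftn(spectrum * window)
    # Remove the carrier so that only the surface-induced phase remains
    x = np.arange(n_x)[None, None, :]
    return np.angle(analytic * np.exp(-2j * np.pi * carrier_fx * x))

# Synthetic sequence: slowly varying phase in space and time (assumed test signal)
n_t, n_y, n_x, period = 16, 16, 128, 4
t = np.arange(n_t)[:, None, None]
y = np.arange(n_y)[None, :, None]
x = np.arange(n_x)[None, None, :]
phi_true = (0.3 * np.cos(2 * np.pi * x / n_x) * np.cos(2 * np.pi * t / n_t)
            + 0.2 * np.sin(2 * np.pi * y / n_y))
seq = 0.5 + 0.5 * np.cos(2 * np.pi * x / period + phi_true)

phi_est = wrapped_phase_3d_ffa(seq, carrier_fx=1.0 / period)
max_err = np.max(np.abs(phi_est - phi_true))
```

The window width is a trade-off: a narrower window rejects more interference but also attenuates the sidebands that carry the phase, so `max_err` is small but not zero even for this noise-free signal.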
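To make the remainder-equation view of step (4) concrete, the sketch below recovers the fringe order by brute force: every candidate order of the first fringe is tried, and the one most consistent with the fractional parts of the other two folded phases is kept. This exhaustive search is a stand-in for illustration and is not the authors' unwrapping procedure; the function name `unwrap_three_frequency` and the pairwise coprime periods of 16, 21, and 25 pixels are assumptions.

```python
import numpy as np

def unwrap_three_frequency(phases, periods, max_range=None):
    """Absolute phase of the first fringe from three folded phases in [0, 2*pi).

    periods: three fringe periods in pixels, assumed pairwise coprime integers.
    """
    fractions = [np.asarray(p, dtype=np.float64) / (2.0 * np.pi) for p in phases]
    f1 = fractions[0]
    t1 = periods[0]
    if max_range is None:
        # Unambiguous range of the remainder equations for pairwise coprime periods
        max_range = periods[0] * periods[1] * periods[2]
    orders = np.arange(int(np.ceil(max_range / t1)))
    # Candidate positions (in pixels) for every possible order of the first fringe
    candidates = (orders.reshape((-1,) + (1,) * f1.ndim) + f1) * t1
    cost = np.zeros(candidates.shape)
    for frac, period in zip(fractions[1:], periods[1:]):
        d = candidates / period - frac
        d -= np.round(d)                  # cyclic distance between fractional parts
        cost += np.abs(d) * period        # mismatch expressed in pixels
    best = np.argmin(cost, axis=0)
    return 2.0 * np.pi * (orders[best] + f1)

# Synthetic check on noise-free folded phases
periods = (16, 21, 25)
x_true = np.linspace(0.5, 8000.0, 300)
folded = [2.0 * np.pi * ((x_true / p) % 1.0) for p in periods]
absolute = unwrap_three_frequency(folded, periods)
```

For these periods the unambiguous range is 16 × 21 × 25 = 8400 pixels, and a large minimum `cost` at a pixel could serve as the kind of error indicator mentioned in step (4).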

3. Folded Phase Extraction Method

3.1. Folded Phase Extraction Principle

3.2. Three-Dimensional Gauss Filter

4. Tri-Frequency Time Phase Unwrapping Method

5. Experimental Results and Analysis

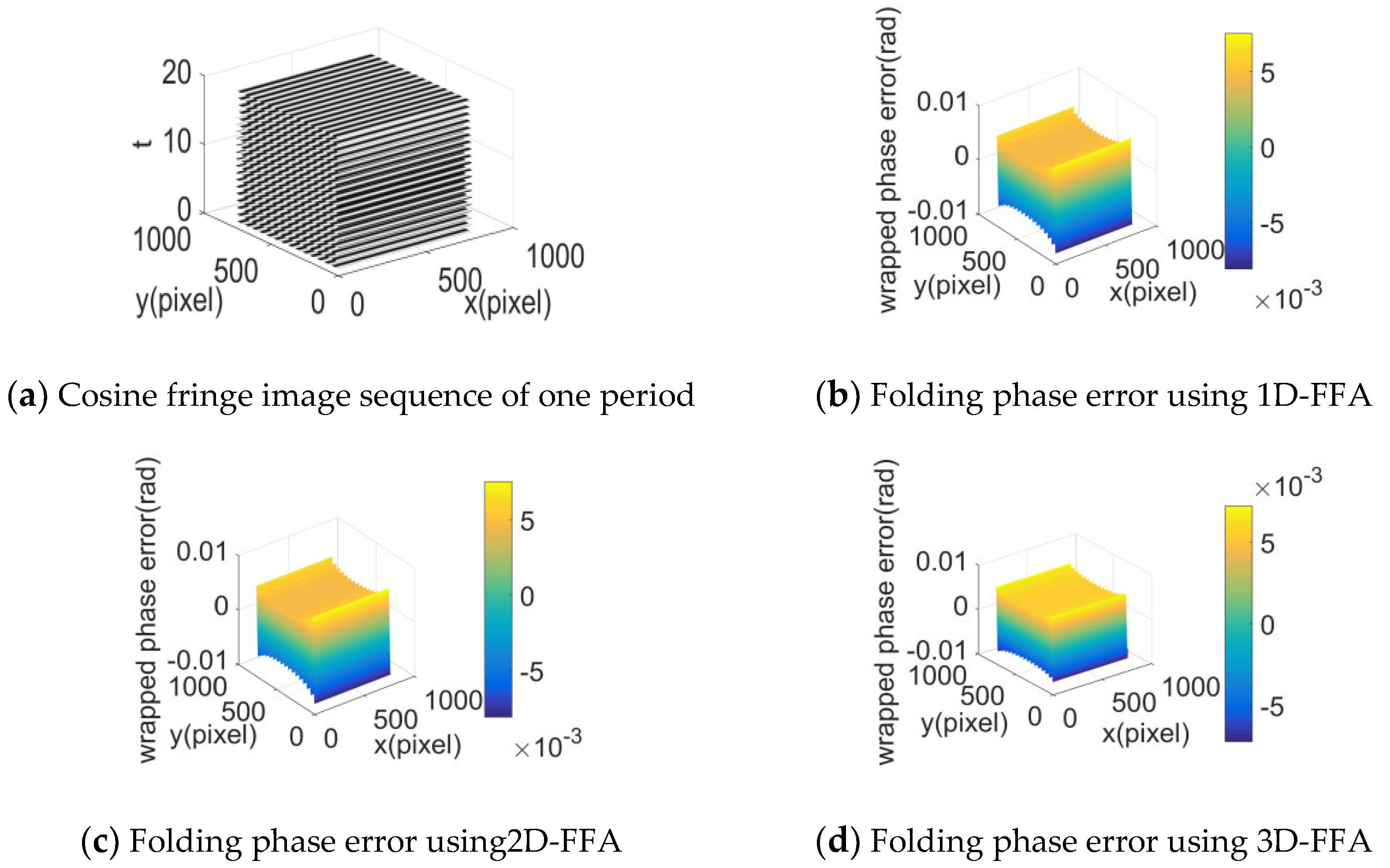

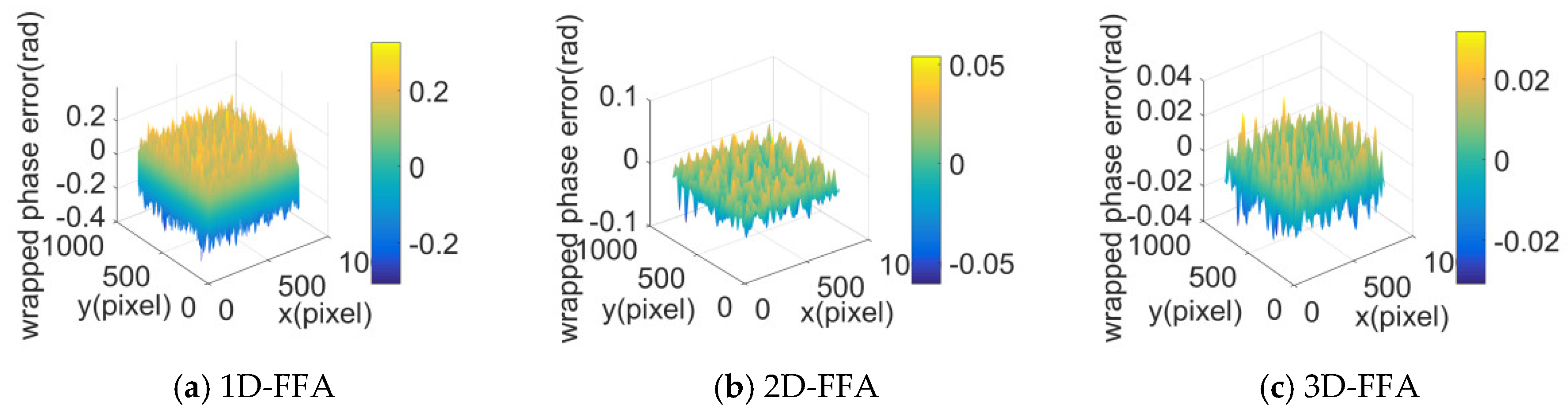

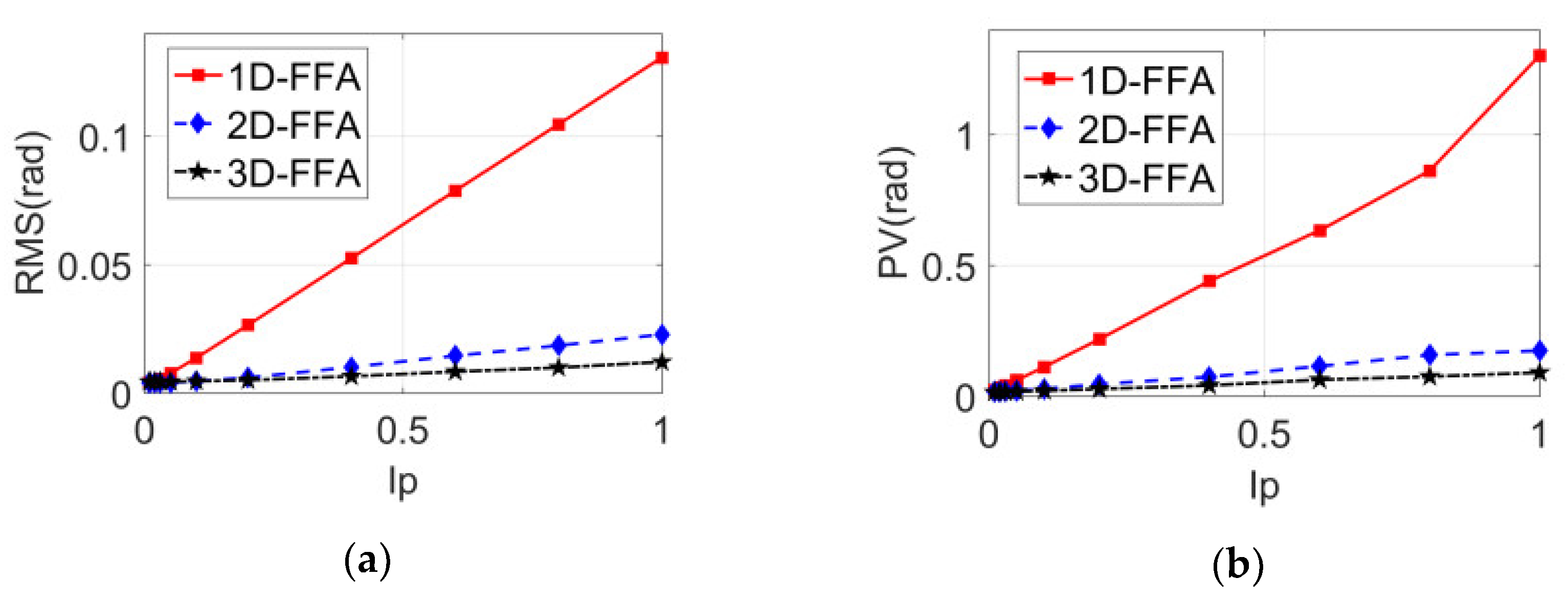

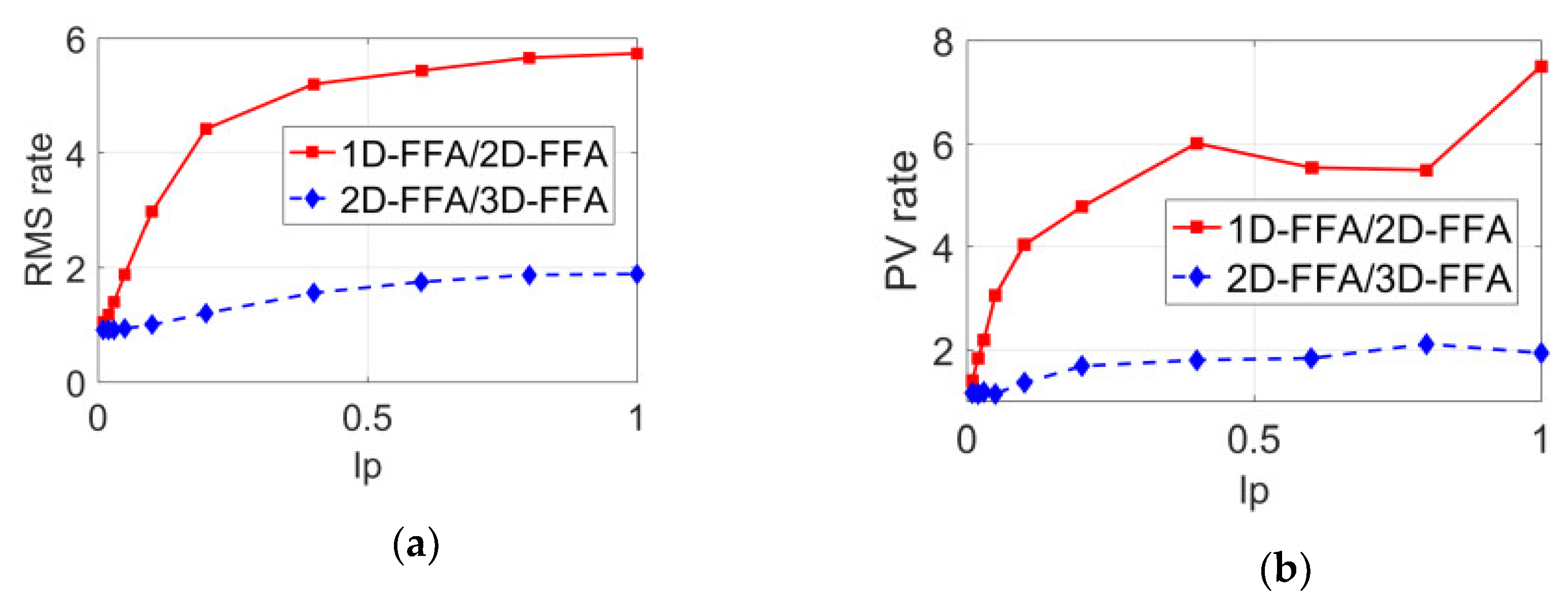

5.1. Simulation Experiments of Folding Phase Extraction

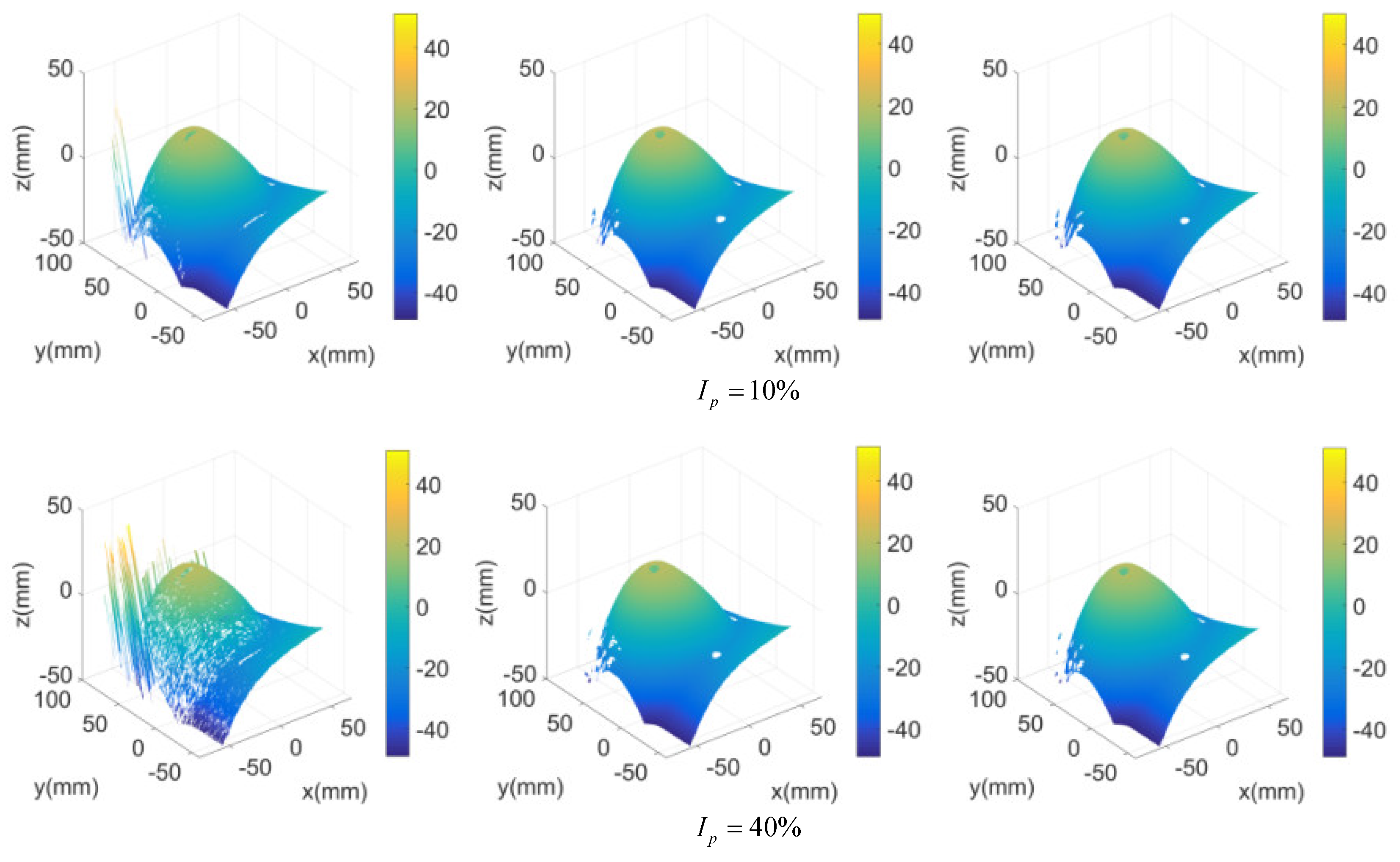

5.2. Chest Model Measurement Experiments

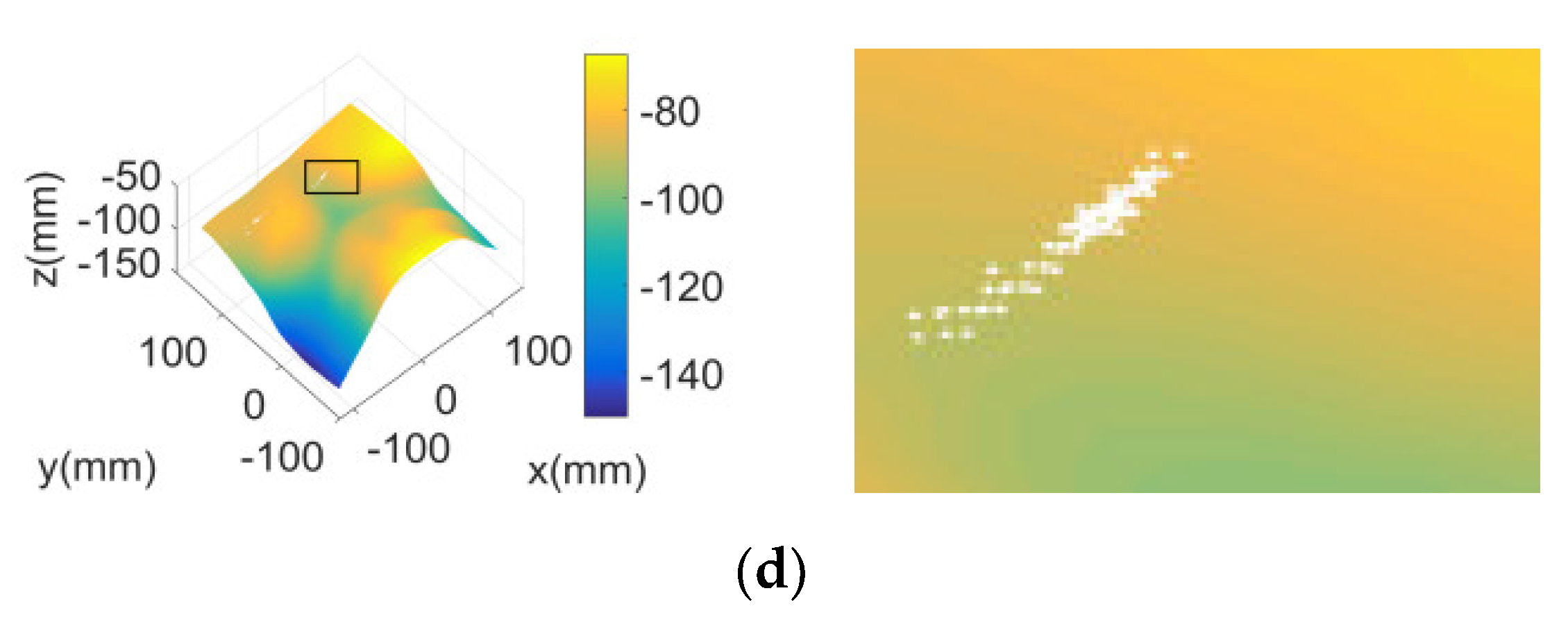

5.3. Measurement Experiments of the Human Chest and Abdomen Surface

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Fassi, A.; Schaerer, J.; Fernandes, M.; Riboldi, M.; Sarrut, D.; Baroni, G. Tumor tracking method based on a deformable 4D CT breathing motion model driven by an external surface surrogate. Int. J. Radiat. Oncol. Biol. Phys. 2014, 88, 182–188.

2. Fayad, H.; Pan, T.; Pradier, O.; Visvikis, D. Patient specific respiratory motion modeling using a 3D patient’s external surface. Med. Phys. 2012, 39, 3386–3395.

3. Povsic, K.; Jezersek, M.; Mozina, J. Real-time 3D visualization of the thoraco-abdominal surface during breathing with body movement and deformation extraction. Physiol. Meas. 2015, 36, 1497–1516.

4. Xiao, H.; Hou, L.; You, Y. A Measurement Method of Multi-line Structure light Stereo vision based on Image Fusion. J. Sichuan Univ. (Eng. Sci. Ed.) 2015, 47, 154–158.

5. Van der Jeught, S.; Dirckx, J.J.J. Real-time structured light profilometry: A review. Opt. Lasers Eng. 2016, 87, 18–31.

6. Zuo, C.; Tao, T.; Feng, S.; Huang, L.; Asundi, A.; Chen, Q. Micro Fourier Transform Profilometry (μFTP): 3D shape measurement at 10,000 frames per second. Opt. Lasers Eng. 2018, 102, 70–91.

7. Lu, L.; Ding, Y.; Luan, Y.; Yin, Y.; Liu, Q.; Xi, J. Automated approach for the surface profile measurement of moving objects based on PSP. Opt. Express 2017, 25, 32120–32131.

8. Zhong, M.; Su, X.Y.; Chen, W.J.; You, Z.S.; Lu, M.T.; Jing, H.L. Modulation measuring profilometry with auto-synchronous phase shifting and vertical scanning. Opt. Express 2014, 22, 31620–31634.

9. El-Haddad, M.T.; Tao, Y.K.K. Automated stereo vision instrument tracking for intraoperative OCT guided anterior segment ophthalmic surgical maneuvers. Biomed. Opt. Express 2015, 6, 3014–3031.

10. Su, X.Y.; Zhang, Q.C. Dynamic 3-D shape measurement method: A review. Opt. Lasers Eng. 2010, 48, 191–204.

11. Shirato, H.; Shimizu, S.; Kitamura, K.; Nishioka, T. Four-dimensional treatment planning and fluoroscopic real-time tumor tracking radiotherapy for moving tumor. Int. J. Radiat. Oncol. Biol. Phys. 2000, 48, 435–442.

12. Cao, S.P.; Cao, Y.P.; Zhang, Q.C. Fourier transform profilometry of a single-field fringe for dynamic objects using an interlaced scanning camera. Opt. Commun. 2016, 367, 130–136.

13. Geng, J. Structured-light 3D surface imaging: A tutorial. Adv. Opt. Photonics 2011, 3, 128–160.

14. Padilla, M.; Servin, M.; Garnica, G. Fourier analysis of RGB fringe-projection profilometry and robust phase-demodulation methods against crosstalk distortion. Opt. Express 2016, 24, 15417–15428.

15. Caspi, D.; Kiryati, N.; Shamir, J. Range imaging with adaptive color structured light. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 470–480.

16. Hu, Y.; Chen, Q.; Zhang, Y.Z.; Feng, S.J.; Tao, T.Y.; Li, H.; Yin, W.; Zuo, C. Dynamic microscopic 3D shape measurement based on marker-embedded Fourier transform profilometry. Appl. Opt. 2018, 57, 772–780.

17. Shi, H.J.; Zhu, F.P.; He, X.Y. Low-Frequency Vibration Measurement Based on Spatiotemporal Analysis of Shadow Moire. Acta Opt. Sin. 2011, 31, 120–124.

18. Abdul-Rahman, H.S.; Gdeisat, M.A.; Burton, D.R.; Lalor, M.J.; Lilley, F.; Abid, A. Three-dimensional Fourier fringe analysis. Opt. Lasers Eng. 2008, 46, 446–455.

19. Zhang, Q.C.; Hou, Z.L.; Su, X.Y. 3D fringe analysis and phase calculation for the dynamic 3D measurement. AIP Conf. Proc. 2010, 1236, 395–400.

20. Bu, P.; Chen, W.J.; Su, X.Y. Analysis on measuring accuracy of Fourier transform profilometry due to different filtering window. Laser J. 2003, 24, 43–45.

21. Zheng, D.L.; Da, F.P.; Kemao, Q.; Seah, H.S. Phase-shifting profilometry combined with Gray-code patterns projection: Unwrapping error removal by an adaptive median filter. Opt. Express 2017, 25, 4700–4713.

| Methods | 1D-FFA | 2D-FFA | 3D-FFA |
|---|---|---|---|
| PV | 0.0156 | 0.0156 | 0.0145 |
| RMS | 0.0040 | 0.0040 | 0.0044 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wu, H.; Yu, S.; Yu, X. 3D Measurement of Human Chest and Abdomen Surface Based on 3D Fourier Transform and Time Phase Unwrapping. Sensors 2020, 20, 1091. https://doi.org/10.3390/s20041091
