Human Activity Sensing with Wireless Signals: A Survey

Abstract

1. Introduction

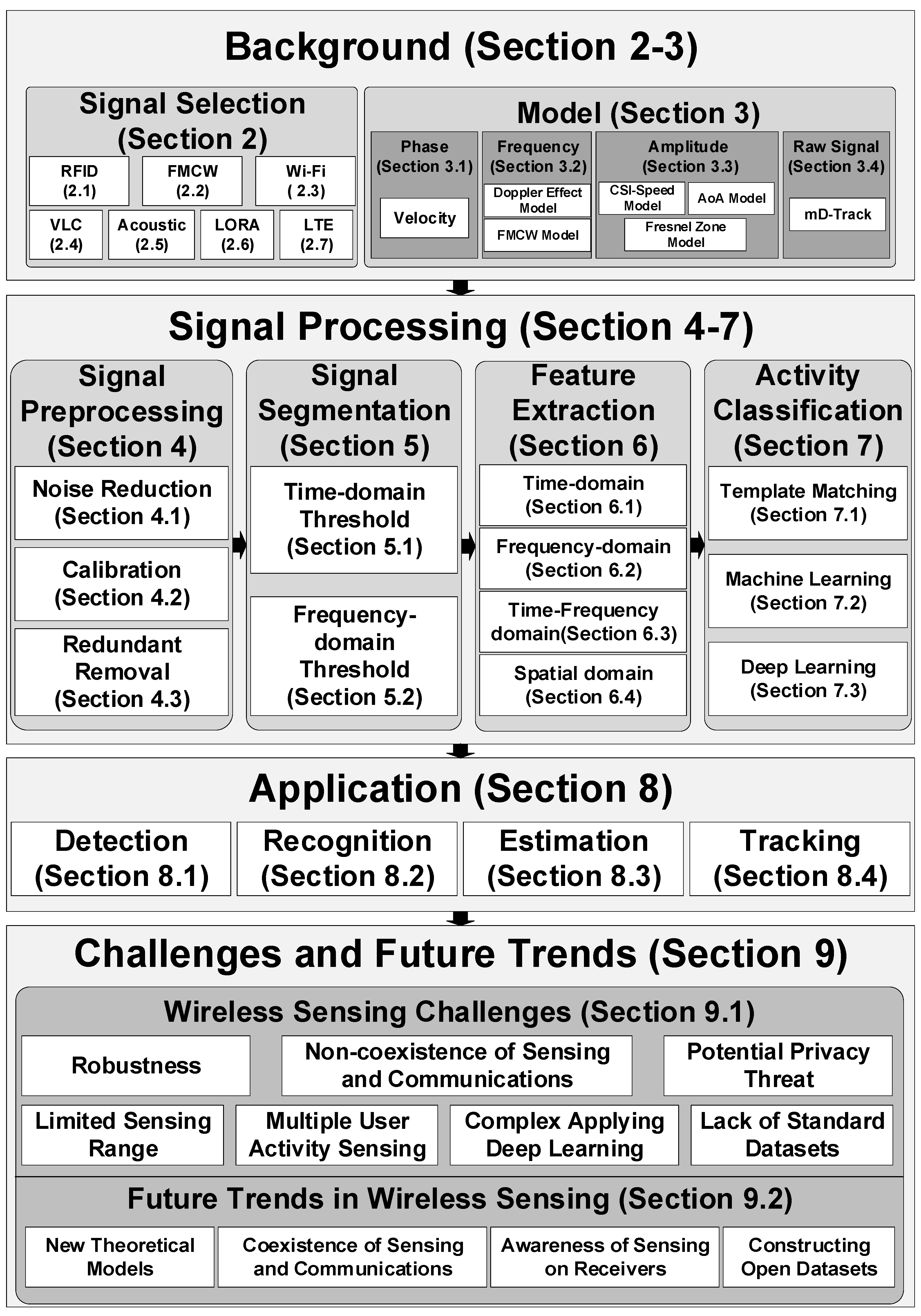

- We provide a comprehensive review of human activity sensing with wireless signals from seven perspectives: wireless signals, theoretical models, signal preprocessing techniques, activity segmentation, feature extraction, classification, and applications.

- We discuss future trends in human activity sensing with wireless signals, including new theoretical models, the coexistence of sensing and communication, receivers' awareness of sensing, and the construction of open datasets.

2. Related Works

2.1. RFID

2.2. FMCW

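- High sensitivity: The signal phase is extremely sensitive to small changes in the target's position (a displacement Δd shifts the phase of the reflected signal by 4πΔd/λ, where λ is the wavelength), which helps estimate the tiny vibration frequencies of the target (e.g., chest vibrations caused by breathing and heartbeat).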

- High resolution: The signal bandwidth B determines the distance (range) resolution, which is approximately c/(2B), where c is the speed of light. Because FMCW radar usually sweeps a large bandwidth, it achieves high distance resolution.
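The two FMCW properties above can be made concrete with a minimal numeric sketch. It assumes an idealized single reflector with no noise or multipath, and uses hypothetical parameters chosen only for illustration (a 77 GHz carrier, a 4 GHz sweep bandwidth, a 20 Hz frame rate, and 2 mm of chest motion at 15 breaths per minute). The helper names `range_resolution`, `phase_shift`, and `dominant_frequency` are hypothetical and are not taken from any surveyed system.

```python
import numpy as np

C = 299_792_458.0  # speed of light (m/s)


def range_resolution(bandwidth_hz):
    """FMCW range resolution: dR = c / (2 * B)."""
    return C / (2.0 * bandwidth_hz)


def phase_shift(displacement_m, carrier_hz):
    """Phase change (rad) of a reflected signal when the reflector moves by
    displacement_m. The round-trip path changes by 2*d, so dphi = 4*pi*d / lambda.
    Works element-wise on NumPy arrays as well as on scalars."""
    wavelength = C / carrier_hz
    return 4.0 * np.pi * displacement_m / wavelength


def dominant_frequency(phase, fs):
    """Frequency (Hz) of the strongest non-DC component of a phase trace."""
    spectrum = np.abs(np.fft.rfft(phase - np.mean(phase)))
    freqs = np.fft.rfftfreq(len(phase), d=1.0 / fs)
    return float(freqs[np.argmax(spectrum[1:]) + 1])  # skip the DC bin


if __name__ == "__main__":
    # Hypothetical parameters, chosen only for illustration.
    print(range_resolution(4e9))    # about 0.037 m for a 4 GHz sweep
    print(phase_shift(1e-3, 77e9))  # about 3.23 rad for 1 mm of motion at 77 GHz

    fs = 20.0                                     # frames per second
    t = np.arange(1200) / fs                      # 60 s of frames
    chest = 2e-3 * np.sin(2 * np.pi * 0.25 * t)   # 2 mm motion at 0.25 Hz (15 breaths/min)
    wrapped = np.angle(np.exp(1j * phase_shift(chest, 77e9)))  # receiver sees phase in (-pi, pi]
    rate_hz = dominant_frequency(np.unwrap(wrapped), fs)
    print(rate_hz * 60)                           # breaths per minute, about 15
```

Because a millimetre of motion already moves the phase by more than π at this carrier, the simulated phase is wrapped and then recovered with `np.unwrap` before the FFT; real phase-based pipelines generally need a comparable unwrapping step.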

2.3. Wi-Fi

2.4. Visible Light Communication

- Low cost: VLC uses low-cost, high-efficiency light-emitting diodes (LEDs) as transmitters and photodiodes as receivers, and it can reuse the existing lighting infrastructure.

- High transmission efficiency: VLC transmission is fast and is not subject to radio-frequency electromagnetic interference.

- Deployment effort: Achieving high sensing accuracy requires deploying hundreds of photodiodes.

- High maintenance costs: Photodiodes age quickly and have poor resistance to fouling. To preserve the accuracy of human activity sensing, aged photodiodes must be replaced promptly, which raises maintenance costs.

- Vulnerable to ambient light: Varying intensity levels of ambient light may drive photodiodes into the saturation region, degrading the accuracy of motion sensing.
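The ambient-light limitation can be illustrated with an idealized photodiode model. The sketch below assumes normalized light units, a hypothetical saturation level of 1.0, and an LED intensity-modulated at a hypothetical 1 kHz; the function names are hypothetical, and the model ignores noise, photodiode dynamics, and the human body itself. It measures how much of the LED's modulated component survives once the reading is clipped at saturation.

```python
import numpy as np


def photodiode_reading(ambient, led_amplitude, f_mod, fs, duration, saturation=1.0):
    """Idealized photodiode output: constant ambient light plus an LED whose
    intensity is modulated sinusoidally between 0 and led_amplitude, clipped
    to [0, saturation] to mimic a saturated sensor (normalized units)."""
    t = np.arange(int(round(fs * duration))) / fs
    light = ambient + led_amplitude * (1.0 + np.sin(2 * np.pi * f_mod * t)) / 2.0
    return np.clip(light, 0.0, saturation)


def modulated_amplitude(reading, f_mod, fs):
    """Amplitude of the sinusoidal component at f_mod, from a single DFT bin."""
    t = np.arange(len(reading)) / fs
    return 2.0 * abs(np.dot(reading, np.exp(-2j * np.pi * f_mod * t))) / len(reading)


if __name__ == "__main__":
    fs, f_mod = 10_000.0, 1_000.0  # hypothetical sampling and LED modulation rates
    for ambient in (0.2, 0.8, 1.0):
        r = photodiode_reading(ambient, 0.4, f_mod, fs, 0.1)
        # The surviving LED component shrinks as ambient light pushes the sensor into saturation.
        print(ambient, round(modulated_amplitude(r, f_mod, fs), 3))
```

Under these assumptions, the LED component is fully recoverable in dim ambient light, partly lost once the peaks clip, and gone entirely when ambient light alone saturates the sensor, which is why changes caused by body shadowing become invisible.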

2.5. Acoustic

2.6. LoRa

2.7. LTE

3. Modeling Human Activity with Wireless Signals

3.1. Phase

3.2. Frequency

3.3. Amplitude

3.4. Raw Signals

4. Signal Preprocessing

4.1. Noise Reduction

4.2. Calibration

4.3. Redundancy Removal

5. Signal Segmentation

5.1. Time-Domain Threshold

5.2. Frequency-Domain Threshold

6. Feature Extraction

7. Activity Classification

7.1. Template Matching

7.2. Machine Learning

7.3. Deep Learning

8. Applications of Wireless Sensing

8.1. Detection Applications

8.2. Recognition Applications

8.3. Estimation Applications

8.4. Tracking Applications

9. Challenges and Future Trends of Wireless Sensing

9.1. Wireless Sensing Challenges

9.2. Future Trends in Wireless Sensing

10. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Yang, Z.; Zhou, Z.; Liu, Y. From RSSI to CSI: Indoor localization via channel response. ACM Comput. Surv. 2013, 46, 1–32. [Google Scholar] [CrossRef]

- Xiao, J.; Zhou, Z.; Yi, Y.; Ni, L.M. A Survey on Wireless Indoor Localization from the Device Perspective. ACM Comput. Surv. 2016, 49, 1–31. [Google Scholar] [CrossRef]

- Zou, Y.; Liu, W.; Wu, K.; Ni, L.M. Wi-Fi Radar: Recognizing Human Behavior with Commodity Wi-Fi. IEEE Commun. Mag. 2017, 55, 105–111. [Google Scholar] [CrossRef]

- Wu, D.; Zhang, D.; Xu, C.; Wang, H.; Li, X. Device-Free WiFi Human Sensing: From Pattern-Based to Model-Based Approaches. IEEE Commun. Mag. 2017, 55, 91–97. [Google Scholar] [CrossRef]

- Yousefi, S.; Narui, H.; Dayal, S.; Ermon, S.; Valaee, S. A Survey of Human Activity Recognition Using WiFi CSI. arXiv 2017, arXiv:170807129. [Google Scholar]

- Al-Qaness, M.; Abd Elaziz, M.; Kim, S.; Ewees, A.; Abbasi, A.; Alhaj, Y.; Hawbani, A. Channel State Information from Pure Communication to Sense and Track Human Motion: A Survey. Sensors 2019, 19, 3329. [Google Scholar] [CrossRef] [Green Version]

- Wang, Z.; Jian, K.; Hou, Y.; Dou, W.; Zhang, C.; Huang, Z.; Guo, Y. A Survey on Human Behavior Recognition Using Channel State Information. IEEE Access 2019, 7, 155986–156024. [Google Scholar] [CrossRef]

- Ma, Y.; Zhou, G.; Wang, S. WiFi Sensing with Channel State Information: A Survey. ACM Comput. Surv. 2019, 52, 1–36. [Google Scholar] [CrossRef] [Green Version]

- Liu, J.; Liu, H.; Chen, Y.; Wang, Y.; Wang, C. Wireless Sensing for Human Activity: A Survey. IEEE Commun. Surv. Tutor. 2019, 1–17. [Google Scholar] [CrossRef]

- Wang, J.; Vasisht, D.; Katabi, D. RF-IDraw: Virtual touch screen in the air using RF signals. In Proceedings of the 2014 ACM conference on SIGCOMM-SIGCOMM ’14, Chicago, IL, USA, 17–22 August 2014; pp. 235–246. [Google Scholar] [CrossRef] [Green Version]

- Sekine, M.; Maeno, K. Activity Recognition Using Radio Doppler Effect for Human Monitoring Service. J. Inf. Process. 2012, 20, 396–405. [Google Scholar] [CrossRef] [Green Version]

- Li, H.; Ye, C.; Sample, A.P. IDSense: A Human Object Interaction Detection System Based on Passive UHF RFID. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems-CHI ’15, Seoul, Korea, 18–23 April 2015; pp. 2555–2564. [Google Scholar] [CrossRef]

- Kellogg, B.; Talla, V.; Gollakota, S. Bringing Gesture Recognition To All Devices. In Proceedings of the 11th USENIX Symposium on. Networked Systems Design and Implementation, Settle, WA, USA, 2–4 April 2014; pp. 303–316. [Google Scholar]

- Ding, H.; Han, J.; Shangguan, L.; Xi, W.; Jiang, Z.; Yang, Z.; Zhou, Z.; Yang, P.; Zhao, J. A Platform for Free-Weight Exercise Monitoring with Passive Tags. IEEE Trans. on Mobile Comput. 2017, 16, 3279–3293. [Google Scholar] [CrossRef]

- Adib, F.; Hsu, C.-Y.; Mao, H.; Katabi, D.; Durand, F. Capturing the human figure through a wall. ACM Trans. Graph. 2015, 34, 1–13. [Google Scholar] [CrossRef]

- Zhao, M.; Li, T.; Alsheikh, M.A.; Tian, Y.; Zhao, H.; Torralba, A.; Katabi, D. Through-Wall Human Pose Estimation Using Radio Signals. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7356–7365. [Google Scholar]

- Hsu, C.-Y.; Hristov, R.; Lee, G.-H.; Zhao, M.; Katabi, D. Enabling Identification and Behavioral Sensing in Homes using Radio Reflections. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems-CHI ’19, Glasgow, Scotland, UK, 4–9 May 2019; pp. 1–13. [Google Scholar]

- Sigg, S.; Shi, S.; Ji, Y. RF-Based device-free recognition of simultaneously conducted activities. In Proceedings of the 2013 ACM conference on Pervasive and ubiquitous computing adjunct publication-UbiComp ’13 Adjunct, Zurich, Switzerland, 8–12 September 2013; pp. 531–540. [Google Scholar] [CrossRef]

- Abdelnasser, H.; Youssef, M.; Harras, K.A. WiGest: A Ubiquitous WiFi-based Gesture Recognition System. In Proceedings of the 2015 IEEE Conference on Computer Communications (INFOCOM), Kowloon, Hong Kong, China, 18 May 2015; pp. 1472–1480. [Google Scholar]

- Tan, S.; Yang, J. WiFinger: Leveraging commodity WiFi for fine-grained finger gesture recognition. In Proceedings of the 17th ACM International Symposium on Mobile Ad Hoc Networking and Computing-MobiHoc ’16, Paderborn, Germany, 5–8 July 2016; pp. 201–210. [Google Scholar] [CrossRef]

- Ma, Y.; Zhou, G.; Wang, S.; Zhao, H.; Jung, W. SignFi: Sign Language Recognition Using WiFi. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 1–21. [Google Scholar] [CrossRef]

- Li, X.; Zhang, D.; Lv, Q.; Xiong, J.; Li, S.; Zhang, Y.; Mei, H. IndoTrack: Device-Free Indoor Human Tracking with Commodity Wi-Fi. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2017, 1, 1–22. [Google Scholar] [CrossRef]

- Minh, H.; O’Brien, D.; Faulkner, G.; Zeng, L.; Lee, K. 100-Mb/s NRZ Visible Light Communications Using a Postequalized White LED. IEEE Photonics Technol. Lett. 2009, 21, 1063–1065. [Google Scholar] [CrossRef]

- Li, T.; An, C.; Tian, Z.; Campbell, A.T.; Zhou, X. Human Sensing Using Visible Light Communication. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking-MobiCom ’15, Paris, France, 7–11 September 2015; pp. 331–344. [Google Scholar] [CrossRef]

- Li, L.; Hu, P.; Peng, C.; Shen, G.; Zhao, F. Epsilon: A Visible Light Based Positioning System. In Proceedings of the 11th {USENIX} Symposium on Networked Systems Design and Implementation ({NSDI} 14), {USENIX} Association, Seattle, WA, USA, 2–4 April 2014; pp. 331–343. [Google Scholar]

- Yun, S.; Chen, Y.-C.; Zheng, H.; Qiu, L.; Mao, W. Strata: Fine-Grained Acoustic-based Device-Free Tracking. In Proceedings of the 15th Annual International Conference on Mobile Systems, Applications, and Services-MobiSys ’17, Niagara Falls, NY, USA, 19–23 June 2017; pp. 15–28. [Google Scholar] [CrossRef]

- Wang, W.; Liu, A.X.; Sun, K. Device-free gesture tracking using acoustic signals. In Proceedings of the 22nd Annual International Conference on Mobile Computing and Networking-MobiCom ’16, New York City, NY, USA, 3–7 October 2016; pp. 82–94. [Google Scholar] [CrossRef] [Green Version]

- Talla, V.; Hessar, M.; Kellogg, B.; Najafi, A.; Smith, J.R.; Gollakota, S. LoRa Backscatter: Enabling the Vision of Ubiquitous Connectivity. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2017, 1, 1–24. [Google Scholar] [CrossRef]

- Chen, L.; Xiong, J.; Chen, X.; Lee, S.; Chen, K.; Han, D. WideSee: Towards wide-area contactless wireless sensing. In Proceedings of the 17th Conference on Embedded Networked Sensor Systems-SenSys ’19, New York, NY, USA, 10–13 November 2019; pp. 258–270. [Google Scholar] [CrossRef]

- Ferrero, F.; Truong, H.-N.-S.; Le-Quoc, H. Multi-harvesting solution for autonomous sensing node based on LoRa technology. In Proceedings of the 2017 International Conference on Advanced Technologies for Communications (ATC), Quy Nhon, Vietnam, 18–20 October 2017; pp. 250–253. [Google Scholar] [CrossRef]

- Augustin, A.; Yi, J.; Clausen, T.; Townsley, W. A Study of LoRa: Long Range & Low Power Networks for the Internet of Things. Sensors 2016, 16, 1466. [Google Scholar] [CrossRef]

- Pecoraro, G.; Domenico, S.; Cianca, E.; Sanctis, M. CSI-based Fingerprinting for Indoor Localization Using LTE Signals. J. Adv. Signal Process. 2018, 2018, 49. [Google Scholar] [CrossRef] [Green Version]

- Xu, S.; Tian, Y. Device-Free Motion Detection via On-the-Air LTE Signals. IEEE Commun. Lett. 2018, 22, 1934–1937. [Google Scholar] [CrossRef]

- Wigren, T. LTE Fingerprinting Localization with Altitude. In Proceedings of the 2012 IEEE Vehicular Technology Conference (VTC Fall), Quebec City, QC, Canada, 3–6 September 2012; pp. 1–5. [Google Scholar] [CrossRef]

- Ye, X.; Yin, X.; Cai, X.; Yuste, A.P.; Xu, H. Neural-Network-Assisted UE Localization Using Radio-Channel Fingerprints in LTE Networks. IEEE Access 2017, 5, 12071–12087. [Google Scholar] [CrossRef]

- Wang, W.; Liu, A.X.; Shahzad, M.; Ling, K.; Lu, S. Understanding and Modeling of WiFi Signal Based Human Activity Recognition. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking-MobiCom ’15, Paris, France, 7–11 September 2015; pp. 65–76. [Google Scholar] [CrossRef]

- Wang, H.; Zhang, D.; Wang, Y.; Ma, J.; Wang, Y.; Li, S. RT-Fall: A Real-Time and Contactless Fall Detection System with Commodity WiFi Devices. IEEE Trans. Mobile Comput. 2017, 16, 511–526. [Google Scholar] [CrossRef]

- Gong, L.; Yang, W.; Man, D.; Dong, G.; Yu, M.; Lv, J. WiFi-Based Real-Time Calibration-Free Passive Human Motion Detection. Sensors 2015, 15, 32213–32229. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Melgarejo, P.; Zhang, X.; Ramanathan, P.; Chu, D. Leveraging directional antenna capabilities for fine-grained gesture recognition. In Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing-UbiComp ’14 Adjunct, Seattle, WA, USA, 13–17 September 2014; pp. 541–551. [Google Scholar] [CrossRef]

- Arshad, S.; Feng, C.; Liu, Y.; Hu, Y.; Yu, R.; Zhou, S.; Li, H. Wi-chase: A WiFi based human activity recognition system for sensorless environments. In Proceedings of the 2017 IEEE 18th International Symposium on A World of Wireless, Mobile and Multimedia Networks (WoWMoM), Macau, China, 12–15 June 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Joshi, K.; Bharadia, D.; Kotaru, M.; Katti, S. WiDeo: Fine-grained Device-free Motion Tracing using RF Backscatter. In Proceedings of the 12th USENIX Symposium on Networked Systems Sedign and Implementation, Oakland, CA, USA, 4–6 May 2015. [Google Scholar]

- Li, H.; He, X.; Chen, X.; Fang, Y.; Fang, Q. Wi-Motion: A Robust Human Activity Recognition Using WiFi Signals. IEEE Access 2019, 7, 153287–153299. [Google Scholar] [CrossRef]

- Fang, B.; Lane, N.D.; Zhang, M.; Kawsar, F. HeadScan: A Wearable System for Radio-Based Sensing of Head and Mouth-Related Activities. In Proceedings of the 2016 15th ACM/IEEE International Conference on Information Processing in Sensor Networks (IPSN), Vienna, Austria, 11–14 April 2016; pp. 1–12. [Google Scholar] [CrossRef] [Green Version]

- Zhou, Q.; Xing, J.; Li, J.; Yang, Q. A Device-Free Number Gesture Recognition Approach Based on Deep Learning. In Proceedings of the 2016 12th International Conference on Computational Intelligence and Security (CIS), Wuxi, China, 16–19 December 2016; pp. 57–63. [Google Scholar] [CrossRef]

- Jia, W.; Peng, H.; Ruan, N.; Tang, Z.; Zhao, W. WiFind: Driver fatigue detection with fine-grained Wi-Fi signal features. IEEE Trans. Big Data 2018, 1. [Google Scholar] [CrossRef]

- Li, H.; Yang, W.; Wang, J.; Xu, Y.; Huang, L. WiFinger: talk to your smart devices with finger-grained gesture. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing-UbiComp ’16, Heidelberg, Germany, 12–16 September 2016; pp. 250–261. [Google Scholar] [CrossRef]

- Gao, Q.; Wang, J.; Ma, X.; Feng, X.; Wang, H. CSI-Based Device-Free Wireless Localization and Activity Recognition Using Radio Image Features. IEEE Trans. Veh. Technol. 2017, 66, 10346–10356. [Google Scholar] [CrossRef]

- Ding, E.; Li, X.; Zhao, T.; Zhang, L.; Hu, Y. A Robust Passive Intrusion Detection System with Commodity WiFi Devices. J. Sens. 2018, 2018, 1–12. [Google Scholar] [CrossRef]

- Wu, C.; Yang, Z.; Zhou, Z.; Liu, X.; Liu, Y.; Cao, J. Non-Invasive Detection of Moving and Stationary Human with WiFi. IEEE J. Sel. Areas Commun. 2015, 33, 2329–2342. [Google Scholar] [CrossRef]

- Zhang, D.; Wang, H.; Wang, Y.; Ma, J. Anti-fall: A Non-intrusive and Real-Time Fall Detector Leveraging CSI from Commodity WiFi Devices. In Inclusive Smart Cities and e-Health, 9102; Springer International Publishing: Cham, Switzerland, 2015; pp. 181–193. [Google Scholar]

- Raja, M.; Ghaderi, V.; Sigg, S. WiBot! In-Vehicle Behaviour and Gesture Recognition Using Wireless Network Edge. In Proceedings of the 2018 IEEE 38th International Conference on Distributed Computing Systems (ICDCS), Vienna, Austria, 2–5 July 2018; pp. 376–387. [Google Scholar] [CrossRef] [Green Version]

- Di Domenico, S.; de Sanctis, M.; Cianca, E.; Bianchi, G. A Trained-once Crowd Counting Method Using Differential WiFi Channel State Information. In Proceedings of the 3rd International on Workshop on Physical Analytics-WPA ’16, Singapore, 26–30 June 2016; 42, pp. 37–42. [Google Scholar] [CrossRef]

- Xiao, J.; Wu, K.; Yi, Y.; Wang, L.; Ni, L.M. Pilot: Passive Device-Free Indoor Localization Using Channel State Information. In Proceedings of the 2013 IEEE 33rd International Conference on Distributed Computing Systems, Philadelphia, PA, USA, 8–11 July 2013; pp. 236–245. [Google Scholar] [CrossRef] [Green Version]

- Zhou, Z.; Yang, Z.; Wu, C.; Shangguan, L.; Liu, Y. Towards omnidirectional passive human detection. In Proceedings of the IEEE INFOCOM, Turin, Italy, 14–19 April 2013; pp. 3057–3065. [Google Scholar] [CrossRef] [Green Version]

- Pu, Q.; Gupta, S.; Gollakota, S.; Patel, S. Whole-home gesture recognition using wireless signals. In Proceedings of the 19th annual international conference on Mobile computing & networking-MobiCom ’13, Miami, FL, USA, 12–16 August 2013; p. 27. [Google Scholar] [CrossRef] [Green Version]

- Li, S.; Li, X.; Lv, Q.; Tian, G.; Zhang, D. WiFit: Ubiquitous Bodyweight Exercise Monitoring with Commodity Wi-Fi Devices. In Proceedings of the 2018 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computing, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), Guangzhou, China, 8–12 October 2018; pp. 530–537. [Google Scholar] [CrossRef]

- Qian, K.; Wu, C.; Zhou, Z.; Zheng, Y.; Yang, Z.; Liu, Y. Inferring Motion Direction using Commodity Wi-Fi for Interactive Exergames. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems-CHI ’17, Denver, CO, USA, 6–11 May 2017; pp. 1961–1972. [Google Scholar] [CrossRef]

- Qian, K.; Wu, C.; Yang, Z.; Liu, Y.; Jamieson, K. Widar: Decimeter-Level Passive Tracking via Velocity Monitoring with Commodity Wi-Fi. In Proceedings of the 18th ACM International Symposium on Mobile Ad Hoc Networking and Computing-Mobihoc ’17, Chennai, India, 10–14 July 2017; pp. 1–10. [Google Scholar] [CrossRef]

- Yang, H.; Zhu, L.; Lv, W. A HCI Motion Recognition System Based on Channel State Information with Fine Granularity. In Wireless Algorithms, Systems, and Applications; Springer: Cham, Switzerland, 27 May 2017; pp. 780–790. [Google Scholar]

- Kim, Y.; Ling, H. Human Activity Classification Based on Micro-Doppler Signatures Using a Support Vector Machine. IEEE Trans. Geosci. Remote Sensing 2009, 47, 1328–1337. [Google Scholar] [CrossRef]

- Molchanov, P.; Gupta, S.; Kim, K.; Pulli, K. Short-range FMCW monopulse radar for hand-gesture sensing. In Proceedings of the 2015 IEEE Radar Conference (RadarCon), Arlington, VA, USA, 10–15 May 2015; pp. 1491–1496. [Google Scholar] [CrossRef]

- Lien, J.; Gillian, N.; Karagozler, M.; Amihood, P.; Schwesig, C.; Olson, E.; Raja, H.; Poupyrev, I. Soli: Ubiquitous Gesture Sensing with Millimeter Wave Radar. ACM Trans. Graph. 2016, 35, 1–19. [Google Scholar] [CrossRef] [Green Version]

- Kalgaonkar, K.; Raj, B. One-handed gesture recognition using ultrasonic Doppler sonar. In Proceedings of the 2009 IEEE International Conference on Acoustics, Speech and Signal Processing, Taipei, Taiwan, 19–24 April 2009; pp. 1889–1892. [Google Scholar] [CrossRef] [Green Version]

- Adib, F.; Kabelac, Z.; Katabi, D. Multi-Person Motion Tracking via RF Body Reflections; 2014 Computer Science and Artificial Intelligence Laboratory Technical Report; Massachusetts Institute of Technology: Cambridge, MA, USA, 2014. [Google Scholar]

- Feger, R.; Wagner, C.; Schuster, S.; Scheiblhofer, S.; Jager, H.; Stelzer, A. A 77-GHz FMCW MIMO Radar Based on an SiGe Single-Chip Transceiver. IEEE Trans. Microw. Theory Tech. 2009, 57, 1020–1035. [Google Scholar] [CrossRef]

- Adib, F.; Kabelac, Z.; Katabi, D.; Mille, R. 3D tracking via body radio reflections. In Proceedings of the 11th USENIX Conference on Networked Systems Design and Implementation (NSDI’14), Seattle, WA, USA, 2–4 April 2014; pp. 317–329. [Google Scholar]

- Gierlich, R.; Huettner, J.; Ziroff, A.; Weigel, R.; Huemer, M. A Reconfigurable MIMO System for High-Precision FMCW Local Positioning. IEEE Trans. Microw. Theory Tech. 2011, 59, 3228–3238. [Google Scholar] [CrossRef]

- Adib, F.; Kabelac, Z.; Katabi, D. Multi-Person Localization via RF Body Reflections. In Proceedings of the 12th {USENIX} Symposium on Networked Systems Design and Implementation ({NSDI} 15), Oakland, CA, USA, 4–6 May 2015; pp. 279–292. [Google Scholar]

- Venkatnarayan, R.H.; Page, G.; Shahzad, M. Multi-User Gesture Recognition Using WiFi. In Proceedings of the 16th Annual International Conference on Mobile Systems, Applications, and Services-MobiSys ’18, Munich, Germany, 10–15 June 2018; pp. 401–413. [Google Scholar] [CrossRef]

- Guo, X.; Liu, B.; Shi, C.; Liu, H.; Chen, Y.; Chuah, M.C. WiFi-Enabled Smart Human Dynamics Monitoring. In Proceedings of the 15th ACM Conference on Embedded Network Sensor Systems-SenSys ’17, Delft, The Netherlands, 6–8 November 2017; pp. 1–13. [Google Scholar] [CrossRef] [Green Version]

- Gu, Y.; Zhan, J.; Ji, Y.; Li, J.; Ren, F.; Gao, S. MoSense: An RF-Based Motion Detection System via Off-the-Shelf WiFi Devices. IEEE Internet Things J. 2017, 4, 2326–2341. [Google Scholar] [CrossRef]

- Liu, J.; Wang, L.; Guo, L.; Fang, J.; Lu, B.; Zhou, W. A research on CSI-based human motion detection in complex scenarios. In Proceedings of the 2017 IEEE 19th International Conference on e-Health Networking, Applications and Services (Healthcom), Dalian, China, 12–15 October 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Han, C.; Wu, K.; Wang, Y.; Ni, L. WiFall: Device-free Fall Detection by Wireless Networks. IEEE Trans. Mob. Comput. 2017, 16, 581–594. [Google Scholar]

- Xiao, F.; Chen, J.; Xie, X.H.; Gui, L.; Sun, J.L.; Ruchuan, W. SEARE: A System for Exercise Activity Recognition and Quality Evaluation Based on Green Sensing. IEEE Trans. Emerg. Topics Comput. 2018, 1. [Google Scholar] [CrossRef]

- Guo, L.; Wang, L.; Liu, J.; Zhou, W.; Lu, B. HuAc: Human Activity Recognition Using Crowdsourced WiFi Signals and Skeleton Data. Wireless Commun. Mob. Comput. 2018, 2018, 1–15. [Google Scholar] [CrossRef]

- Wang, Y.; Jiang, X.; Cao, R.; Wang, X. Robust Indoor Human Activity Recognition Using Wireless Signals. Sensors 2015, 15, 17195–17208. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Jiang, W.; Miao, C.; Ma, F.; Yao, S.; Wang, Y.; Yuan, Y.; Xue, H.; Song, C.; Ma, X.; Koutsonikolas, D.; et al. Towards Environment Independent Device Free Human Activity Recognition. In Proceedings of the 24th Annual International Conference on Mobile Computing and Networking-MobiCom ’18, New Delhi, India, October 29–November 2 2018; pp. 289–304. [Google Scholar] [CrossRef]

- He, W.; Wu, K.; Zou, Y.; Ming, Z. WiG: WiFi-Based Gesture Recognition System. In Proceedings of the 2015 24th International Conference on Computer Communication and Networks (ICCCN), Las Vegas, NV, USA, 3–6 August 2015; pp. 1–7. [Google Scholar] [CrossRef]

- Xiao, N.; Yang, P.; Yan, Y.; Zhou, H.; Li, X. Motion-Fi: Recognizing and Counting Repetitive Motions with Passive Wireless Backscattering. In Proceedings of the IEEE INFOCOM 2018-IEEE Conference on Computer Communications, Honolulu, HI, USA, 15–19 April 2018; pp. 2024–2032. [Google Scholar]

- Al-qaness, M. Device-free human micro-activity recognition method using WiFi signals. Geo-Spatial Inf. Sci. 2019, 22, 128–137. [Google Scholar] [CrossRef]

- Wang, Y.; Liu, J.; Chen, Y.; Gruteser, M.; Yang, J.; Liu, H. E-eyes: Device-free location-oriented activity identification using fine-grained WiFi signatures. In Proceedings of the 20th annual international conference on Mobile computing and networking-MobiCom ’14, Maui, HI, USA, 7–11 September 2014; pp. 617–628. [Google Scholar] [CrossRef]

- Virmani, A.; Shahzad, M. Position and Orientation Agnostic Gesture Recognition Using WiFi. In Proceedings of the 15th Annual International Conference on Mobile Systems, Applications, and Services-MobiSys ’17, Niagara Falls, NY, USA, 19–23 June 2017; pp. 252–264. [Google Scholar] [CrossRef] [Green Version]

- Cohn, G.; Morris, D.; Patel, S.; Tan, D. Humantenna: using the body as an antenna for real-time whole-body interaction. In Proceedings of the 2012 ACM annual conference on Human Factors in Computing Systems-CHI ’12, Austin, TX, USA, 5–10 May 2012; p. 1901. [Google Scholar] [CrossRef]

- Fang, B.; Lane, N.D.; Zhang, M.; Boran, A.; Kawsar, F. BodyScan: Enabling Radio-based Sensing on Wearable Devices for Contactless Activity and Vital Sign Monitoring. In Proceedings of the 14th Annual International Conference on Mobile Systems, Applications, and Services-MobiSys ’16, Singapore, 26–30 June 2016; pp. 97–110. [Google Scholar] [CrossRef]

- Zeng, Y.; Pathak, P.H.; Xu, C.; Mohapatra, P. Your AP knows how you move: fine-grained device motion recognition through WiFi. In Proceedings of the 1st ACM workshop on Hot topics in wireless - HotWireless ’14, Maui, HI, USA, 11 September 2014; pp. 49–54. [Google Scholar] [CrossRef]

- Zhang, L.; Liu, M.; Lu, L.; Gong, L. Wi-Run: Multi-Runner Step Estimation Using Commodity Wi-Fi. In Proceedings of the 2018 15th Annual IEEE International Conference on Sensing, Communication, and Networking (SECON), Hong Kong, China, 11–13 June 2018; pp. 1–9. [Google Scholar] [CrossRef]

- Xu, Y.; Yang, W.; Wang, J.; Zhou, X.; Li, H.; Huang, L. WiStep: Device-free Step Counting with WiFi Signals. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 1, 1–23. [Google Scholar] [CrossRef]

- Guo, X.; Liu, J.; Shi, C.; Liu, H.; Chen, Y.; Chuah, M.C. Device-free Personalized Fitness Assistant Using WiFi. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 1–23. [Google Scholar] [CrossRef]

- Won, M.; Zhang, S.; Son, S.H. WiTraffic: Low-Cost and Non-Intrusive Traffic Monitoring System Using WiFi. In Proceedings of the 2017 26th International Conference on Computer Communication and Networks (ICCCN), Vancouver, BC, Canada, 31 July–3 August 2017; pp. 1–9. [Google Scholar] [CrossRef]

- Soltanaghaei, E.; Kalyanaraman, A.; Whitehouse, K. Peripheral WiFi Vision: Exploiting Multipath Reflections for More Sensitive Human Sensing. In Proceedings of the 4th International on Workshop on Physical Analytics-WPA ’17, Niagara Falls, NY, USA, 12–19 April 2017; pp. 13–18. [Google Scholar] [CrossRef] [Green Version]

- Zhang, O.; Srinivasan, K. Mudra: User-friendly Fine-grained Gesture Recognition using WiFi Signals. In Proceedings of the 12th International on Conference on emerging Networking EXperiments and Technologies-CoNEXT ’16, Irvine, CA, USA, 12–15 December 2016; pp. 83–96. [Google Scholar] [CrossRef]

- Palipana, S.; Rojas, D.; Agrawal, P.; Pesch, D. FallDeFi: Ubiquitous Fall Detection using Commodity Wi-Fi Devices. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 1, 1–25. [Google Scholar] [CrossRef]

- Wang, G.; Zou, Y.; Zhou, Z.; Wu, K.; Ni, L.M. We can hear you with Wi-Fi! In Proceedings of the 20th annual international conference on Mobile computing and networking-MobiCom’14, Maui, HI, USA, 7–11 September 2014; pp. 593–604. [Google Scholar] [CrossRef]

- Xi, W.; Zhao, J.; Li, X.; Zhao, K.; Tang, S.; Liu, X.; Jiang, Z. Electronic frog eye: Counting crowd using WiFi. In Proceedings of the IEEE INFOCOM 2014 - IEEE Conference on Computer Communications, Toronto, ON, Canada, 27 April–2 May 2014; pp. 361–369. [Google Scholar] [CrossRef] [Green Version]

- Wang, J.; Zhang, X.; Gao, Q.; Yue, H.; Wang, H. Device-Free Wireless Localization and Activity Recognition: A Deep Learning Approach. IEEE Trans. Veh. Technol. 2017, 66, 6258–6267. [Google Scholar] [CrossRef]

- Wang, J.; Zhang, L.; Gao, Q.; Pan, M.; Wang, H. Device-Free Wireless Sensing in Complex Scenarios Using Spatial Structural Information. IEEE Trans. Wireless Commun. 2018, 17, 2432–2442. [Google Scholar] [CrossRef]

- Feng, C.; Arshad, S.; Zhou, S.; Cao, D.; Liu, Y. Wi-Multi: A Three-Phase System for Multiple Human Activity Recognition with Commercial WiFi Devices. IEEE Internet Things J. 2019, 6, 7293–7304. [Google Scholar] [CrossRef]

- Zieger, C.; Brutti, A.; Svaizer, P. Acoustic Based Surveillance System for Intrusion Detection. In Proceedings of the 2009 Sixth IEEE International Conference on Advanced Video and Signal. Based Surveillance, Genova, Italy, 2–4 September 2009; pp. 314–319. [Google Scholar] [CrossRef]

- Sigg, S.; Shi, S.; Buesching, F.; Ji, Y.; Wolf, L. Leveraging RF-channel fluctuation for activity recognition: Active and passive systems, continuous and RSSI-based signal features. In Proceedings of the International Conference on Advances in Mobile Computing & Multimedia-MoMM ’13, Vienna, Austria, 2–4 December 2013; pp. 43–52. [Google Scholar]

- Youssef, M.; Mah, M.; Agrawala, A. Challenges: Device-free passive localization for wireless environments. In Proceedings of the 13th annual ACM International Conference on Mobile Computing and Networking - MobiCom’07, Montréal, QC, Canada, 9–14 September 2007; pp. 222–229. [Google Scholar] [CrossRef]

- Sen, S.; Radunovic, B.; Choudhury, R.R.; Minka, T. You are facing the Mona Lisa: spot localization using PHY layer information. In Proceedings of the 10th international conference on Mobile systems, applications, and services-MobiSys ’12, Low Wood Bay, Lake District, UK, 25–29 June 2012; pp. 183–196. [Google Scholar] [CrossRef]

- Bahl, P.; Padmanabhan, V.N. RADAR: An in-building RF-based user location and tracking system. In Proceedings of the IEEE INFOCOM 2000. Conference on Computer Communications. Nineteenth Annual Joint Conference of the IEEE Computer and Communications Societies (Cat. No.00CH37064), Tel Aviv, Israel, 26–30 March 2000; pp. 775–784. [Google Scholar] [CrossRef]

- Paul, A.S.; Wan, E.A. RSSI-Based Indoor Localization and Tracking Using Sigma-Point Kalman Smoothers. IEEE J. Sel. Top. Signal. Process. 2009, 3, 860–873. [Google Scholar] [CrossRef]

- Chintalapudi, K.; Iyer, A.P.; Padmanabhan, V.N. Indoor localization without the pain. In Proceedings of the sixteenth annual international conference on Mobile computing and networking-MobiCom ’10, Chicago, IL, USA, 20–24 September 2010; pp. 173–184. [Google Scholar] [CrossRef]

- Wang, J.; Gao, Q.; Yu, Y.; Cheng, P.; Wu, L.; Wang, H. Robust Device-Free Wireless Localization Based on Differential RSS Measurements. IEEE Trans. Ind. Electron. 2013, 60, 5943–5952. [Google Scholar] [CrossRef]

- Wu, D.; Zhang, D.; Xu, C.; Wang, Y.; Wang, H. WiDir: Walking direction estimation using wireless signals. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing-UbiComp ’16, Heidelberg, Germany, 12–16 September 2016; pp. 351–362. [Google Scholar] [CrossRef]

- Zhang, D.; Wang, H.; Wu, D. Toward Centimeter-Scale Human Activity Sensing with Wi-Fi Signals. Computer 2017, 50, 48–57. [Google Scholar] [CrossRef]

- Zhang, F.; Niu, K.; Xiong, J.; Jin, B.; Gu, T.; Jiang, Y.; Zhang, D. Towards a Diffraction-based Sensing Approach on Human Activity Recognition. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019, 3, 1–25. [Google Scholar] [CrossRef]

- Duan, S.; Yu, T.; He, J. WiDriver: Driver Activity Recognition System Based on WiFi CSI. Int. J. Wirel. Inf. Netw. 2018, 25, 146–156.

- Wu, K.; Xiao, J.; Yi, Y.; Gao, M.; Ni, L.M. FILA: Fine-grained indoor localization. In Proceedings of the 2012 Proceedings IEEE INFOCOM, Orlando, FL, USA, 25–30 March 2012; pp. 2210–2218.

- Tian, Z.; Wang, J.; Yang, X.; Zhou, M. WiCatch: A Wi-Fi Based Hand Gesture Recognition System. IEEE Access 2018, 6, 16911–16923.

- Adib, F.; Katabi, D. See through walls with WiFi! In Proceedings of the ACM SIGCOMM 2013 conference on SIGCOMM (SIGCOMM ’13), Association for Computing Machinery, New York, NY, USA, 13 September 2013; pp. 75–86.

- Karanam, C.R.; Korany, B.; Mostofi, Y. Tracking from one side: multi-person passive tracking with WiFi magnitude measurements. In Proceedings of the 18th International Conference on Information Processing in Sensor Networks-IPSN ’19, Montreal, QC, Canada, 16–18 April 2019; pp. 181–192.

- Sen, S.; Lee, J.; Kim, K.-H.; Congdon, P. Avoiding multipath to revive in building WiFi localization. In Proceedings of the 11th annual international conference on Mobile systems, applications, and services-MobiSys ’13, Taipei, Taiwan, 25–28 June 2013; pp. 249–262.

- Sun, L.; Sen, S.; Koutsonikolas, D.; Kim, K.-H. WiDraw: Enabling Hands-free Drawing in the Air on Commodity WiFi Devices. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking-MobiCom ’15, Paris, France, 7–11 September 2015; pp. 77–89.

- Xie, Y.; Xiong, J.; Li, M.; Jamieson, K. mD-Track: Leveraging Multi-Dimensionality for Passive Indoor Wi-Fi Tracking. In Proceedings of the 25th Annual International Conference on Mobile Computing and Networking-MobiCom ’19, Los Cabos, Mexico, 1–5 September 2019; pp. 1–16.

- Gjengset, J.; Xiong, J.; McPhillips, G.; Jamieson, K. Phaser: Enabling phased array signal processing on commodity WiFi access points. In Proceedings of the 20th annual international conference on Mobile computing and networking-MobiCom ’14, Maui, HI, USA, 7–11 September 2014; pp. 153–164.

- Niu, K.; Zhang, F.; Xiong, J.; Li, X.; Yi, E.; Zhang, D. Boosting fine-grained activity sensing by embracing wireless multipath effects. In Proceedings of the 14th International Conference on emerging Networking EXperiments and Technologies-CoNEXT ’18, Heraklion, Greece, 4–7 December 2018; pp. 139–151.

- Xiong, J.; Jamieson, K. ArrayTrack: A Fine-Grained Indoor Location System. In Proceedings of the 10th USENIX Symposium on Networked Systems Design and Implementation (NSDI 13), New Chicago, IL, USA, 2–5 April 2013.

- IEEE Standard for Information technology–Local and metropolitan area networks–Specific requirements–Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications Amendment 5: Enhancements for Higher Throughput. 2009. Available online: https://ieeexplore.ieee.org/servlet/opac?punumber=5307291 (accessed on 14 February 2020).

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. B 1977, 39, 1–38.

- Feder, M.; Weinstein, E. Parameter estimation of superimposed signals using the EM algorithm. IEEE Trans. Acoust. Speech Signal. Process. 1988, 36, 477–489.

- Tan, S.; Zhang, L.; Wang, Z.; Yang, J. MultiTrack: Multi-User Tracking and Activity Recognition Using Commodity WiFi. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems-CHI ’19, Glasgow, Scotland, UK, 4–9 May 2019; pp. 1–12.

- Li, H.; Chen, X.; Jing, G.; Wang, Y.; Cao, Y.; Li, F.; Zhang, X. An Indoor Continuous Positioning Algorithm on the Move by Fusing Sensors and Wi-Fi on Smartphones. Sensors 2015, 15, 31244–31267.

- Yang, Y.; Cao, J.; Liu, X.; Liu, X. Wi-Count: Passing People Counting with COTS WiFi Devices. In Proceedings of the 2018 27th International Conference on Computer Communication and Networks (ICCCN), Hangzhou, China, 30 July–2 August 2018; pp. 1–9.

- Yang, Y.; Cao, J.; Liu, X.; Liu, X. Door-Monitor: Counting In-and-out Visitors with COTS WiFi Devices. IEEE Internet Things J. 2019, 1.

- Zou, H.; Zhou, Y.; Yang, J.; Gu, W.; Xie, L.; Spanos, C. FreeCount: Device-Free Crowd Counting with Commodity WiFi. In Proceedings of the GLOBECOM 2017-2017 IEEE Global Communications Conference, Singapore, 4–8 December 2017; pp. 1–6.

- Wilson, J.; Patwari, N. Radio Tomographic Imaging with Wireless Networks. IEEE Trans. Mob. Comput. 2010, 9, 621–632.

- Wilson, J.; Patwari, N. See-Through Walls: Motion Tracking Using Variance-Based Radio Tomography Networks. IEEE Trans. Mob. Comput. 2011, 10, 612–621.

- Feng, C.; Arshad, S.; Liu, Y. MAIS: Multiple Activity Identification System Using Channel State Information of WiFi Signals. In WASA 2017: Wireless Algorithms, Systems, and Applications; Ma, L., Khreishah, A., Zhang, Y., Yan, M., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 419–432.

- Vossiek, M.; Roskosch, R.; Heide, P. Precise 3-D Object Position Tracking using FMCW Radar. In Proceedings of the 29th European Microwave Conference, Munich, Germany, 5–7 October 1999; pp. 234–237.

- Vasisht, D.; Kumar, S.; Katabi, D. Decimeter-Level Localization with a Single WiFi Access Point. In Proceedings of the 13th USENIX Symposium on Networked Systems Design and Implementation (NSDI 16), Santa Clara, CA, USA, 16–18 March 2016; pp. 165–178.

- Domenico, S.D.; Sanctis, M.D.; Cianca, E.; Giuliano, F.; Bianchi, G. Exploring Training Options for RF Sensing Using CSI. IEEE Commun. Mag. 2018, 56, 116–123.

- Xiao, J.; Wu, K.; Yi, Y.; Wang, L.; Ni, L.M. FIMD: Fine-grained Device-free Motion Detection. In Proceedings of the 2012 IEEE 18th International Conference on Parallel and Distributed Systems, Singapore, 17–19 December 2012; pp. 229–235.

- Kong, H.; Lu, L.; Yu, J.; Chen, Y.; Kong, L.; Li, M. FingerPass: Finger Gesture-based Continuous User Authentication for Smart Homes Using Commodity WiFi. In Proceedings of the Twentieth ACM International Symposium on Mobile Ad Hoc Networking and Computing, Catania, Italy, 2–5 July 2019; pp. 201–210.

- Guvenc, I.; Abdallah, C.T.; Jordan, R.; Dedeoglu, O. Enhancements to RSS Based Indoor Tracking Systems Using Kalman Filters. In Proceedings of the International Signal Processing Conference (ISPC) and Global Signal Processing Expo (GSPx), Dallas, TX, USA, 31 March–3 April 2003; pp. 1–6.

- Wu, K.; Xiao, J.; Yi, Y.; Chen, D.; Luo, X.; Ni, L.M. CSI-Based Indoor Localization. IEEE Trans. Parallel Distrib. Syst. 2013, 24, 1300–1309.

- Bekkali, A.; Sanson, H.; Matsumoto, M. RFID Indoor Positioning Based on Probabilistic RFID Map and Kalman Filtering. In Proceedings of the Third IEEE International Conference on Wireless and Mobile Computing, Networking and Communications (WiMob 2007), White Plains, NY, USA, 8–10 October 2007.

- Wang, Z.; Xiao, F.; Ye, N. A See-through-Wall System for Device-Free Human Motion Sensing Based on Battery-Free RFID. ACM Trans. Embed. Comput. Syst. 2017, 17, 1–21.

- Wang, J.; Katabi, D. Dude, Where’s My Card? RFID Positioning That Works with Multipath and Non-Line of Sight. In Proceedings of the ACM SIGCOMM 2013 Conference on SIGCOMM, Hong Kong, China, 12–16 August 2013; pp. 51–62.

- Tong, W.; Buglass, S.; Li, J.; Chen, L.; Ai, C. Smart and Private Social Activity Invitation Framework Based on Historical Data from Smart Devices. In Proceedings of the 10th EAI International Conference on Mobile Multimedia Communications (MOBIMEDIA’17) 2017, Chongqing, China, 13–14 July 2017.

| Reference | Signals | Topic Focus | Application Scope |
|---|---|---|---|
| Yang et al. [1], 2013 | Wi-Fi (RSS, CSI) | RSS- and CSI-based solutions | indoor localization |
| Xiao et al. [2], 2016 | UWB, RFID, Wi-Fi, acoustic | models, basic principles, and data fusion techniques | indoor localization |
| Zou et al. [3], 2017 | Wi-Fi (CSI) | model-based approaches (CSI-Speed model, Fresnel zone model) | human behavior recognition |
| Wu et al. [4], 2017 | Wi-Fi (CSI) | pattern-based and model-based approaches (CSI-Speed model, AoA model, Fresnel zone model) | human behavior recognition, respiration detection |
| Yousefi et al. [5], 2017 | Wi-Fi (CSI) | deep learning classification | human behavior recognition |
| Al-qaness et al. [6], 2019 | Wi-Fi (CSI) | CSI-based sensing mechanism, methodology (signal preprocessing, feature extraction, classification), limitations and challenges | detection (motion), recognition (daily activity, hand gesture), localization |
| Wang et al. [7], 2019 | Wi-Fi (CSI) | base signal selection, signal preprocessing, feature extraction, classification, issues, future trends | behavior recognition |
| Ma et al. [8], 2019 | Wi-Fi (CSI) | signal processing, model-based and learning-based algorithms, performance, challenges, future trends | detection, recognition, estimation, tracking |
| Liu et al. [9], 2019 | Wi-Fi (RSSI, CSI), FMCW, Doppler shift | basic principles, techniques and system structures, future directions and limitations | detection, recognition, localization, tracking |
| This survey | RFID, FMCW, Wi-Fi, visible light, LoRa, acoustic, LTE | models (Doppler, Fresnel zone, FMCW, AoA, mD-Track), signal preprocessing, segmentation, feature extraction, classification, challenges, future trends | detection, recognition, estimation, tracking |

| Type | Energy (p: passive, a: active) | Frequency | Distance | Penetration |
|---|---|---|---|---|
| LF | p | 125 kHz | ≤10 cm | blocked by metal |
| HF | p/a | 13.56 MHz | ≤1.2 m | blocked by metal |
| UHF | p/a | 860–960 MHz | ≤4 m | blocked by metal and liquid |
| microwave | p/a | 2.45 GHz, 5.8 GHz | ≤100 m | blocked by metal and liquid |

| Attribute | RSS | CSI |
|---|---|---|
| network layer | MAC | physical |
| access | communications equipment | CSI tool |
| generalization | all devices | some devices |
| sensitivity | low | high |
| time resolution | packet-scale | multi-path signal cluster scale |
| frequency resolution | / | subcarrier scale |
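Because CSI reports phase per subcarrier, raw CSI phase from commodity NICs is commonly corrupted by an error that grows linearly with subcarrier index (from timing offsets) plus a constant offset, which is why phase calibration appears among the preprocessing techniques in the table below. One widely used remedy is linear sanitization: unwrap the phase across subcarriers, then subtract a fitted slope and offset. The following is a minimal pure-Python sketch under that linear-error assumption; the function names, the subcarrier indexing, and the end-point slope estimate are illustrative choices, not the exact procedure of any cited system.

```python
import math

def unwrap(phases):
    """Remove 2*pi jumps between consecutive samples (similar in spirit to numpy.unwrap)."""
    out = [phases[0]]
    for p in phases[1:]:
        step = p - out[-1]
        # Map the step into [-pi, pi] so that no jump exceeds half a cycle.
        step -= 2 * math.pi * round(step / (2 * math.pi))
        out.append(out[-1] + step)
    return out

def sanitize_phase(phases, subcarriers):
    """Linear phase sanitization for one CSI snapshot (one antenna pair).

    Assumption: the phase error is slope * k + offset for subcarrier index k.
    The slope comes from the two end subcarriers and the offset from the mean
    residual; both are subtracted from the unwrapped phase.
    """
    phi = unwrap(phases)
    slope = (phi[-1] - phi[0]) / (subcarriers[-1] - subcarriers[0])
    offset = sum(p - slope * k for p, k in zip(phi, subcarriers)) / len(phi)
    return [p - slope * k - offset for p, k in zip(phi, subcarriers)]
```

This also removes any genuine linear phase trend across subcarriers (for example, one caused by true propagation delay), so the output suits relative or fingerprint features rather than absolute ToF estimation.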

| Signal Feature | Motion Feature | Models |
|---|---|---|
| Phase | Velocity | Coarse-grained estimation [13,20,21,26,33,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54] |
| Frequency | Velocity | Doppler effect model [10,12,22,55,56,57,58,59,60,61,62,63], FMCW chirp model [17,64] |
| | Direction | Doppler effect model [22,57,58] |
| | Distance (dRX) | FMCW chirp model [15,16,17,64,65,66,67,68] |
| Amplitude | Velocity | CSI-Speed model [36,69], Coarse-grained estimation [14,18,19,23,24,25,26,37,40,42,43,46,47,49,50,52,53,54,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105] |
| | Distance (dLoS) | Fresnel zone model [106,107,108,109,110] |
| | Direction | Fresnel zone model [106], AoA with antenna array [11,15,65,111,112,113,114,115] |
| Raw Signals | Distance, Direction, Velocity | mD-Track [116] |
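Two of the models in the table reduce to short closed-form relations. In the Doppler effect model, the shift is f_D = -(1/λ)·dℓ/dt, where ℓ is the length of the reflected path from transmitter to body to receiver. In the FMCW chirp model, a chirp with bandwidth B and duration T has slope S = B/T, a beat frequency f_b maps to distance d = c·f_b/(2S), and the range resolution is c/(2B), which is why a large sweep bandwidth gives FMCW its high distance resolution (Section 2.2). The sketch below assumes free-space propagation with c ≈ 3×10^8 m/s; the function names are hypothetical.

```python
C = 3.0e8  # approximate speed of light in m/s (free-space assumption)

def wavelength(carrier_hz: float) -> float:
    """Carrier wavelength: lambda = c / f."""
    return C / carrier_hz

def doppler_shift(path_rate_mps: float, carrier_hz: float) -> float:
    """Doppler shift f_D = -(1 / lambda) * d(path length)/dt.

    path_rate_mps is the rate of change of the total reflected path length;
    a shortening path (negative rate) gives a positive shift. For a co-located
    (monostatic) radar the path is twice the range R, so path_rate = 2 * dR/dt.
    """
    return -path_rate_mps / wavelength(carrier_hz)

def fmcw_range(beat_hz: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Round-trip FMCW ranging: d = c * f_b / (2 * S), with slope S = B / T."""
    slope = bandwidth_hz / chirp_s
    return C * beat_hz / (2.0 * slope)

def fmcw_range_resolution(bandwidth_hz: float) -> float:
    """Range resolution c / (2 * B): a larger bandwidth gives finer resolution."""
    return C / (2.0 * bandwidth_hz)
```

For example, at a 5 GHz carrier (λ = 6 cm), a reflection path shortening at 1 m/s yields a shift of about 16.7 Hz, and a 1.5 GHz sweep bandwidth gives a range resolution of 10 cm.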

| Category | Example | Pros and Cons |
|---|---|---|
| Noise Reduction | time-domain filter: moving average [14,45,53,54,70,73,100,123], median [74], single-sideband Gaussian [76], weighted moving average [42,46,73,75,87,103,124], local outlier factor [59], Hampel filter [49,59,77,86], Savitzky–Golay filter [86,108,125,126] | Pros: low computational cost, suitable for coarse-grained motion recognition and tracking; Cons: poor sensitivity to fine-grained gestures |
| Noise Reduction | frequency-domain filter: passband [37,41,44,57,58,87,93], wavelet [19,59,87,92,93,95,97,127], Kalman [10,24,25,32,41,50,66,103,124], Butterworth [12,19,39,40,43,46,51,52,70,71,74,75,80,81,82,83,89,108], Birge–Massart [78] | Pros: high sensitivity to all activities, including finger gestures; Cons: high computational complexity |
| Calibration | interpolation [37,51,66,92], normalization [50,77,79,94,128,129], phase calibration [21,42,44,51,52,68,90] | |
| Redundant Information Removal | first PC selected [42,45,57,58,84], first PC discarded [36,80], second PC selected [51], third PC selected [69], first two PCs selected [43], top-5 subcarriers [39], static environment removal [15,22,65], multipath mitigation [20,68,87,93,102,105,110,111,113,114] | |
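The time-domain filters in the table above are simple enough to sketch directly. Below is a minimal pure-Python version of two of them, a Hampel outlier filter and a centered moving average, applied to a 1-D CSI amplitude stream. The window sizes, the 3-sigma threshold, and the function names are illustrative assumptions, not values taken from the cited systems.

```python
import statistics

def hampel(x, half_window=3, n_sigmas=3.0):
    """Hampel filter: replace a sample with its local median when it deviates
    from that median by more than n_sigmas robust standard deviations."""
    k = 1.4826  # scales the MAD to a standard-deviation estimate for Gaussian noise
    xs = list(x)
    out = list(xs)
    for i in range(len(xs)):
        lo, hi = max(0, i - half_window), min(len(xs), i + half_window + 1)
        window = xs[lo:hi]  # windows always use the unfiltered input
        med = statistics.median(window)
        mad = k * statistics.median([abs(v - med) for v in window])
        if abs(xs[i] - med) > n_sigmas * mad:
            out[i] = med
    return out

def moving_average(x, width=3):
    """Centered moving average; the window is truncated at both ends."""
    xs = list(x)
    half = width // 2
    out = []
    for i in range(len(xs)):
        window = xs[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out
```

The two can be chained, Hampel first to remove isolated spikes and then smoothing; in practice the window lengths should be matched to the packet rate and to the speed of the motion of interest.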

| Category | Type | Pros and Cons | Examples |
|---|---|---|---|
| Time-domain threshold | Phase | Pros: high sensitivity; Cons: poor robustness | phase difference [23,26,37,71] |
| | Amplitude | Pros: easy to access; Cons: susceptible to noise variation | amplitude [19,20,82,83,84,86], amplitude difference [14,44], amplitude variance [23,24,40,85,97,108] |
| | Statistics | Pros: accurate; Cons: complex computation | cumulative moving variance [75,76,81], average RSS [39], movement indicator threshold [36,46,87], CV threshold [45], SVR and LVR [38], LOF anomaly detection [73,76,78] |
| | Energy | Pros: easy to access; Cons: susceptible to noise | energy [45,89] |
| | Similarity | Pros: suitable for repetitive motion; Cons: poor tolerance | autocorrelation [82], alternately optimizing the template and cutting segments [79] |
| Frequency-domain threshold | Peak | Pros: directly available; Cons: susceptible to noise | spectrum [25,26,28,29,69], Doppler shift [55,56,57,58] |
| | Energy | Pros: easy to collect; Cons: susceptible to noise | energy [88,89,98,99,105,113] |
| | Similarity | Pros: suitable for segmenting repetitive motion; Cons: poor tolerance | Kullback–Leibler divergence [10], impulse [56] |
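Several of the statistics-based segmentation methods in the table flag activity when the short-term variance of the signal rises above a threshold. The sketch below is a plain sliding-window variance threshold, a simplification of the cumulative moving variance used by some cited systems; the window length, threshold, and function name are illustrative assumptions that would need tuning per deployment.

```python
import statistics

def segment_by_moving_variance(x, window=4, threshold=0.5):
    """Return (start, end) index pairs, end exclusive, of spans where the
    population variance of the trailing window exceeds threshold."""
    active = []
    for i in range(len(x)):
        w = x[max(0, i - window + 1): i + 1]  # trailing window ending at sample i
        active.append(len(w) > 1 and statistics.pvariance(w) > threshold)
    segments, start = [], None
    for i, flag in enumerate(active):
        if flag and start is None:
            start = i  # activity begins
        elif not flag and start is not None:
            segments.append((start, i))  # activity ends
            start = None
    if start is not None:
        segments.append((start, len(x)))  # activity runs to the end of the stream
    return segments
```

Because the window trails the current sample, each detected segment can extend up to `window - 1` samples past the end of the actual activity; a centered window or a second, lower exit threshold are common ways to tighten the boundaries.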

| Category | Pros and Cons | Features |
|---|---|---|
| Time-domain | Pros: relatively simple to compute; Cons: vulnerable to environmental changes and noise | maximum, minimum, mean, standard deviation, kurtosis, skewness, variance, median and median absolute deviation, percentiles, root sum square, interquartile range [13,18,37,40,42,43,46,48,49,50,62,72,73,75,76,78,79,80,83,84,85,88,89,90,97,108,127,130], time lag [37], power decline ratio [37], amplitude sequence [20,54,74,91], phase sequence [39,51,107], CV [38] |
| Frequency-domain | Pros: captures the periodic characteristics of human motion; Cons: high computational cost | FFT coefficient [12,83], dominant frequency [43], power spectral density [88], spectral entropy [43,57,70,72,73,80,90,92], fractal dimension [92], frequency domain energy [85], Doppler velocity intensity [56], frequency sequence [10,26,28,29,49,55,60,95,98,104,113,114,124], spectrograms [19,47] |
| Time-frequency domain | Pros: reflects both time- and frequency-domain information; Cons: heavy computation | DWT coefficient [23,36,42,82,93,97,99,103,123,125,126,131], HHT [45,80] |
| Spatial domain | Pros: suitable for localization and tracking; Cons: often requires specialized equipment | AoA [15,22,25,41,65,66,112,113,114,115], distance [15,26,100,105,106,110], ToF [41,64,66,68,113,132] |
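Most time-domain features in the table above are standard descriptive statistics computed over a window of samples (often per subcarrier). A minimal pure-Python sketch for one window of CSI amplitude follows. The feature subset, the dictionary keys, and the function name are illustrative; kurtosis is reported as non-excess (Pearson) kurtosis, so a Gaussian window gives a value near 3, and the interquartile range uses the default method of `statistics.quantiles`.

```python
import statistics

def time_domain_features(x):
    """Compute a subset of the time-domain features listed in the table
    for one window of samples (at least two values are required)."""
    n = len(x)
    mean = statistics.fmean(x)
    median = statistics.median(x)
    q1, _, q3 = statistics.quantiles(x, n=4)  # quartile cut points
    m2 = sum((v - mean) ** 2 for v in x) / n  # central moments
    m3 = sum((v - mean) ** 3 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    return {
        "max": max(x),
        "min": min(x),
        "mean": mean,
        "std": statistics.pstdev(x),
        "variance": m2,
        "median": median,
        "mad": statistics.median([abs(v - median) for v in x]),  # median absolute deviation
        "iqr": q3 - q1,
        "skewness": m3 / m2 ** 1.5 if m2 else 0.0,
        "kurtosis": m4 / m2 ** 2 if m2 else 0.0,  # non-excess kurtosis
        "rss": sum(v * v for v in x) ** 0.5,  # root sum square
    }
```

The resulting dictionary (or a fixed-order vector built from it) is what a classifier such as SVM or KNN would consume, typically after per-feature normalization.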

| Category | Pros and Cons | Examples |
|---|---|---|
| Template Matching | Pros: no training needed; Cons: accuracy depends on the chosen template | Euclidean distance [19,94], DTW [10,20,39,42,55,74,91,93,97], EMD [54,70,81,89], Jaccard coefficients [69] |
| Machine Learning | Pros: high efficiency and robustness; Cons: requires a lot of training data | KNN [18,40,46,48,51,52,59,82,99,124], SVM [12,13,32,37,40,42,44,45,47,48,49,56,59,60,70,72,73,75,76,78,79,83,84,89,90,92,97,127,130], decision tree [10,57,85], K-means [76,81], Naive Bayes [85], HMM [36], sparse representation via ℓ1 minimization [43] |
| Deep Learning | Pros: strong learning ability and portability; Cons: requires a large amount of training data | CNN [16,17,21,48,67,77,108,126], DNN [59,88], SOM [96], sparse autoencoder network [95], LSTM [97] |
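DTW is the most frequently cited template-matching method in the table above. It aligns two sequences that differ in speed before comparing them, so one recorded template per activity can match slower or faster repetitions without training. The sketch below is the classic dynamic-programming DTW with absolute-difference cost, plus nearest-template classification; the function names and the toy templates are illustrative.

```python
def dtw_distance(a, b):
    """Classic O(len(a) * len(b)) dynamic time warping with |x - y| cost."""
    inf = float("inf")
    n, m = len(a), len(b)
    # d[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    d = [[inf] * (m + 1) for _ in range(n + 1)]
    d[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            d[i][j] = cost + min(d[i - 1][j], d[i][j - 1], d[i - 1][j - 1])
    return d[n][m]

def classify(sample, templates):
    """Return the label whose template has the smallest DTW distance to sample."""
    return min(templates, key=lambda label: dtw_distance(sample, templates[label]))
```

For example, with `templates = {"push": [0, 1, 2, 1, 0], "wave": [0, 2, 0, 2, 0]}`, the slower sample `[0, 1, 1, 2, 1, 0]` is labeled `"push"` at DTW distance 0. The quadratic cost per comparison is one reason the table lists accuracy and efficiency trade-offs between template matching and learned classifiers.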

| Application | Reference | Signal | Model | Signal Processing | Classification |
|---|---|---|---|---|---|
| fall detection | WiFall [73], 2014 | Wi-Fi (CSI) | amplitude estimation | moving average filter | time domain feature + SVM |
| fall detection | Anti-fall [50], 2015 | Wi-Fi (CSI) | amplitude and phase estimation | low-pass filter, normalization | time domain feature + SVM |
| fall detection | RT-fall [37], 2017 | Wi-Fi (CSI) | amplitude estimation | passband filter, interpolation + phase difference | time domain feature + SVM |
| fall detection | FallDefi [92], 2018 | Wi-Fi (CSI) | amplitude estimation | wavelet filter, interpolation | frequency domain feature + SVM |
| intrusion detection | Zieger [98], 2009 | acoustic | amplitude estimation | frequency quantity | / |
| intrusion detection | DeMan [49], 2015 | Wi-Fi (CSI) | amplitude and phase estimation | Hampel filter, linear fitting | time and frequency domain feature + SVM |
| motion detection | FRID [38], 2015 | Wi-Fi (CSI) | phase estimation | SVR and LVR threshold | / |
| motion detection | Liu et al. [72], 2017 | Wi-Fi (CSI) | amplitude estimation | segmentation by skewness | time and frequency domain feature + SVM |
| walking step detection, counting | WiStep [87], 2018 | Wi-Fi (CSI) | amplitude estimation | passband filter, wavelet filter, weighted moving average, multipath mitigation | / |
| walking direction detection, respiration rate detection | Zhang et al. [107], 2017 | Wi-Fi (CSI) | Fresnel zone model | multiple carrier frequencies | / |

| Application | Reference | Signal | Model | Signal Processing | Classification |
|---|---|---|---|---|---|
| fitness activity recognition and counting | FEMO [10], 2017 | RFID | Doppler effect model | Kalman filter + Kullback–Leibler divergence | frequency sequence feature + decision tree, DTW |
| fitness activity recognition, user identification | Guo et al. [88], 2018 | Wi-Fi (CSI) | amplitude estimation | low-order polynomial fitting + mean value subtraction | DNN |
| fitness activity recognition, counting | WiFit [56], 2018 | Wi-Fi (CSI) | Doppler effect model | Doppler frequency shift peak threshold | frequency domain feature + SVM |
| fitness activity recognition, counting | Motion-Fi [79], 2018 | RFID | amplitude estimation | normalization + alternately optimizing the template and cutting segments | time domain feature + SVM |
| fitness activity recognition, counting | Zhang et al. [108], 2019 | Wi-Fi (CSI) | Fresnel zone model | Savitzky–Golay filter + amplitude variance threshold | time domain feature + CNN |
| fitness activity recognition | SEARE [74], 2019 | Wi-Fi (CSI) | amplitude estimation | Butterworth low-pass filter, median filter | time domain feature + DTW |
| daily activity recognition | Kim et al. [60], 2009 | Doppler radar | Doppler effect model | noise threshold filtering based on Gaussian distribution | frequency domain feature + SVM/DT |
| daily activity recognition | Sekine et al. [12], 2012 | Doppler sensor | Doppler effect model | Butterworth low-pass filter | frequency domain feature + SVM |
| daily activity recognition | Sigg et al. [99], 2013 | RF signal (RSSI) | RSSI fingerprint model | normalized spectral energy | time-frequency domain feature + template matching |
| daily activity recognition | Sigg et al. [18], 2013 | Wi-Fi (CSI) | amplitude estimation | / | time domain feature + KNN |
| daily activity recognition | E-eyes [81], 2014 | Wi-Fi (CSI) | amplitude estimation | Butterworth low-pass filter + cumulative moving variance threshold | time domain feature + EMD/K-means |
| daily activity recognition | IDSense [12], 2015 | RFID | phase estimation | 2-s sliding window | time domain feature + SVM |
| daily activity recognition | Wang et al. [76], 2015 | Wi-Fi (CSI) | amplitude estimation | single-sideband Gaussian filter + LOF anomaly detection | time domain feature + SVM |
| daily activity recognition | CARM [36], 2015 | Wi-Fi (CSI) | CSI-Speed model | first PC discarded + movement indicator threshold | DWT feature + HMM |
| daily activity recognition | Headscan [43], 2016 | Wi-Fi (CSI) | amplitude estimation | Butterworth low-pass filter, first two PCs selected | time and frequency domain feature + sparse representation via ℓ1 minimization |
| daily activity recognition | Mudra [91], 2016 | Wi-Fi (CSI) | amplitude estimation | finite impulse response (FIR) filter | time domain feature + DTW |
| daily activity recognition | BodyScan [84], 2016 | Wi-Fi (CSI) | amplitude estimation | first PC selection + amplitude threshold | time domain feature + SVM |
| daily activity recognition | DFL [95], 2017 | Zigbee RSS | amplitude estimation | wavelet filter | sparse autoencoder network |
| daily activity recognition | PeriFi [90], 2017 | Wi-Fi (CSI) | amplitude estimation | phase calibration | time and frequency domain feature + SVM |
| daily activity recognition | WiChase [40], 2017 | Wi-Fi (CSI) | amplitude and phase estimation | Butterworth low-pass filter + amplitude variance threshold | time domain feature + KNN, SVM |
| daily activity recognition | EI [77], 2018 | Wi-Fi, ultrasound | amplitude estimation | Hampel filter, normalization | CNN |
| daily activity recognition | Wang et al. [96], 2018 | Wi-Fi (CSI) | amplitude estimation | median filter, linear | SOM |
| daily activity recognition | HuAc [75], 2018 | Wi-Fi (CSI) | amplitude estimation | Butterworth filter, weighted moving average + moving variance threshold | time domain feature + SVM |
| daily activity recognition | WiMotion [42], 2019 | Wi-Fi (CSI) | amplitude and phase estimation | weighted moving average, phase calibration, first PC selection | time domain feature + DTW, SVM |
| daily activity recognition | Wi-Multi [97], 2019 | Wi-Fi (CSI) | amplitude estimation | PCA, DWT + amplitude variance | time and frequency domain feature + LSTM |
| daily activity recognition | MultiTrack [123], 2019 | Wi-Fi (CSI) | amplitude estimation | DWT + moving average filter | time and frequency domain feature + DTW |
| moving direction recognition | WiDance [57], 2017 | Wi-Fi (CSI) | Doppler effect model | first PC selection + Doppler frequency shift peak threshold | frequency domain feature + DTW |
| moving direction recognition | WiSome [59], 2017 | Wi-Fi (CSI) | Doppler effect model | local outlier factor, wavelet filter | frequency domain feature + DNN, SVM |
| sign language gesture recognition | SignFi [21], 2018 | Wi-Fi (CSI) | phase estimation | phase calibration | CNN |
| limb gesture recognition | Humantenna [83], 2012 | wireless | amplitude estimation | Butterworth low-pass filter + amplitude threshold | time and frequency domain feature + SVM |
| limb gesture recognition | WiSee [55], 2013 | Wi-Fi (CSI) | Doppler effect model | Doppler frequency shift threshold | frequency domain feature + DTW |
| limb gesture recognition | Soli [62], 2016 | FMCW | Doppler effect model | Soli processing pipeline + high temporal resolution | time-frequency domain feature + random forest, Bayesian network |
| limb gesture recognition | WIAG [82], 2017 | Wi-Fi (CSI) | amplitude estimation | Butterworth filter + amplitude threshold | DWT feature + KNN |
| limb gesture recognition | Mohammed et al. [80], 2019 | Wi-Fi (CSI) | amplitude estimation | Butterworth filter, first PC discarded | time domain feature + random forest |
| coarse gesture estimation | RF-Pose [16], 2018 | FMCW | FMCW chirp model | spectrogram | CNN |
| finger/hand gesture recognition | Kalgaonkar et al. [63], 2009 | ultrasonic | Doppler effect model | downsampling + PCA | Gaussian mixture model + Bayesian |
| finger/hand gesture recognition | Melgarejo et al. [39], 2014 | directional antenna | phase estimation | Butterworth low-pass filter, top-5 subcarrier selection + average RSS threshold | time domain feature + DTW |
| finger/hand gesture recognition | Apsense [85], 2014 | Wi-Fi (CSI) | amplitude estimation | amplitude variance threshold | time and frequency domain feature + decision tree, naive Bayes |
| finger/hand gesture recognition | AllSee [14], 2014 | RFID | amplitude estimation | moving average filter + amplitude difference threshold | time domain feature + template matching |
| finger/hand gesture recognition | Molchanov et al. [61], 2015 | FMCW | Doppler effect model | static background subtraction | frequency domain feature + template matching |
| finger/hand gesture recognition | WiGest [19], 2015 | Wi-Fi (CSI) | amplitude estimation | Butterworth low-pass filter, wavelet filter + amplitude threshold | time domain feature + template matching |
| finger/hand gesture recognition | WiG [78], 2015 | Wi-Fi (CSI) | amplitude estimation | Birge–Massart filter + LOF anomaly detection | time domain feature + SVM |
| finger/hand gesture recognition | Demum [44], 2016 | Wi-Fi (CSI) | phase estimation | passband filter, phase calibration + amplitude difference | time domain feature + SVM |
| finger/hand gesture recognition | Tan et al. [20], 2016 | Wi-Fi (CSI) | phase estimation | multipath mitigation + amplitude threshold | time domain feature + DTW |
| finger/hand gesture recognition | Li et al. [46], 2016 | Wi-Fi (CSI) | amplitude and phase estimation | Butterworth filter, weighted moving average + movement indicator threshold | time domain feature + KNN |
| finger/hand gesture recognition | DELAR [47], 2017 | Wi-Fi (CSI) | amplitude and phase estimation | phase and amplitude threshold | heat map + DNN |
| finger/hand gesture recognition | WIMU [69], 2018 | Wi-Fi (CSI) | CSI-Speed model | third PC selection + frequency quantity threshold | frequency domain feature + Jaccard coefficients |
| finger/hand gesture recognition | WiCatch [111], 2018 | Wi-Fi (CSI) | AoA model with antenna array | multipath mitigation | spectrum feature + SVM |
| fatigue driving posture recognition | WiFind [45], 2018 | Wi-Fi (CSI) | phase estimation | moving average filter, first PC selection + CV threshold | HHT feature + SVM |
| driving gesture recognition | WiTraffic [89], 2017 | Wi-Fi (CSI) | amplitude estimation | Butterworth low-pass filter + energy threshold | time domain feature + SVM/EMD |
| driving gesture recognition | WiBot [51], 2018 | Wi-Fi (CSI) | phase estimation | Butterworth low-pass filter, interpolation, phase calibration, second PC selection + impulse window detection | time domain feature + KNN |
| driving gesture recognition | WiDriver [109], 2018 | Wi-Fi (CSI) | Fresnel zone model | subcarrier selection | finite automata model + BP |
| mouth movement recognition | WiHear [93], 2014 | Wi-Fi (CSI) | amplitude estimation | passband filter, wavelet filter, multipath mitigation | DWT feature + DTW |

| Application | Reference | Signal | Model | Signal Processing | Classification |
|---|---|---|---|---|---|
| fitness activity recognition, counting | FEMO [10], 2017 | RFID | Doppler effect model | Kalman filter + Kullback–Leibler divergence | frequency domain feature + DTW, decision tree |
| fitness activity recognition, counting | WiFit [56], 2018 | Wi-Fi (CSI) | Doppler effect model | Doppler frequency shift peak threshold | frequency domain feature + SVM |
| fitness activity recognition, counting | Motion-Fi [79], 2018 | RFID | amplitude estimation | normalization + alternately optimizing the template and cutting segments | time domain feature + SVM |
| fitness activity recognition, counting, user identification | Guo et al. [88], 2018 | Wi-Fi (CSI) | amplitude estimation | low-order polynomial fitting + mean value subtraction | DNN |
| fitness activity recognition, counting | Zhang et al. [108], 2019 | Wi-Fi (CSI) | Fresnel zone model | Savitzky–Golay filter + amplitude variance threshold | time domain feature + CNN |
| walking step detection, counting | WiStep [87], 2018 | Wi-Fi (CSI) | amplitude estimation | passband filter + wavelet filter + weighted moving average + multipath mitigation | / |
| running step counting | Wi-Run [86], 2018 | Wi-Fi (CSI) | amplitude estimation | Savitzky–Golay filter, Hampel filter + amplitude threshold | time domain feature + Fréchet distance |
| human counting | FCC [94], 2014 | Wi-Fi (CSI) | Grey Verhulst model | percentage of zero elements | time domain feature + SVM |
| human counting | Domenico et al. [52], 2016 | Wi-Fi (CSI) | amplitude estimation | normalization | time domain feature + Euclidean distance, linear discriminant classifier |
| human counting | MAIS [130], 2017 | Wi-Fi (CSI) | amplitude and phase estimation | low-pass filter, phase calibration | time domain feature + KNN |
| human counting | FreeCount [127], 2017 | Wi-Fi (CSI) | phase estimation | wavelet-based filter | time domain feature + SVM |
| human counting | Wi-Count [125], 2018 | Wi-Fi (CSI) | phase estimation | Savitzky–Golay filter, amplitude threshold | time and frequency domain feature + K-means |
| human counting | Door-Monitor [126], 2019 | Wi-Fi (CSI) | amplitude and phase estimation | Savitzky–Golay filter, phase calibration | time domain feature + CNN |
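Many counting applications in the table above reduce to counting the periods of a repetitive motion (steps, exercise repetitions) in an already-filtered signal. The sketch below is a generic local-maximum counter with an amplitude threshold and a minimum gap between counted peaks, so that small ripples near one peak are not counted twice. It is an illustrative assumption, not the method of WiStep, Wi-Run, or any other cited system; the function name and parameters are hypothetical.

```python
def count_repetitions(x, threshold, min_gap):
    """Count local maxima above threshold that occur at least min_gap
    samples after the previously counted peak."""
    count, last = 0, -min_gap  # allows a peak to be counted at any index >= 1
    for i in range(1, len(x) - 1):
        is_peak = x[i] > x[i - 1] and x[i] >= x[i + 1] and x[i] > threshold
        if is_peak and i - last >= min_gap:
            count += 1
            last = i
    return count
```

Here `min_gap` acts as a refractory period and would normally be set from the sampling rate and the fastest plausible repetition rate; `threshold` would typically sit between the idle noise level and the expected peak amplitude.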

| Application | Reference | Signal | Model | Signal Processing | Localization Feature |
|---|---|---|---|---|---|
| human tracking | Youssef et al. [100], 2007 | Wi-Fi (RSSI) | RSSI estimation | moving average filter + RSSI threshold | distance feature |
| human tracking | Feger et al. [65], 2009 | FMCW | FMCW chirp model + AoA with antenna array | partial static environment removal | AoA in spatial domain feature |
| human indoor localization, tracking | SPKS [103], 2009 | Wi-Fi (RSSI) | amplitude estimation | Kalman filter + weighted moving average threshold | RSSI maps incorporated into a Bayesian framework |
| human tracking | Wilson et al. [128], 2010 | RF signal (RSSI) | radio tomographic imaging | normalization, weighted threshold | spatial covariance feature |
| human tracking | Gierlich et al. [67], 2011 | FMCW | FMCW chirp model | spectrogram | CNN |
| human tracking | VRTI [129], 2011 | RF signal (RSSI) | radio tomography | Kalman filter, normalization | radio tomographic imaging feature |
| human tracking | FILA [110], 2012 | Wi-Fi (CSI) | Fresnel zone model | multipath mitigation | distance feature |
| human tracking | WiVi [112], 2013 | Wi-Fi | AoA with antenna array | initial nulling | AoA in spatial domain feature |
| human tracking | WiTrack [66], 2013 | FMCW | FMCW chirp model | Kalman filter + interpolation | ToF, AoA feature |
| human tracking | Pilot [53], 2013 | Wi-Fi (CSI) | amplitude and phase estimation | moving average filter + RSSI threshold | time and frequency domain feature + fingerprinting |
| human tracking | Zhou et al. [54], 2013 | Wi-Fi (RSSI) | amplitude and phase estimation | low-pass filter, moving average filter | time domain feature + EMD |
| human tracking | Wang et al. [105], 2013 | Zigbee RSS | amplitude estimation | multipath mitigation + frequency-domain threshold | distance feature |
| human tracking | WIZ [64], 2014 | FMCW | FMCW chirp model | mapping into 2D heatmaps | ToF feature |
| human tracking | RF-Capture [15], 2015 | FMCW | FMCW chirp model + AoA with antenna array | partial static environment removal | AoA, distance in spatial domain feature |
| human tracking | LiSense [24], 2015 | VLC | amplitude estimation | Kalman filter + amplitude variance + frequency shift | human skeleton feature |
| human tracking | WiTrack2.0 [68], 2015 | FMCW | FMCW chirp model | multipath mitigation, phase calibration | ToF feature |
| human tracking | IndoTrack [22], 2017 | Wi-Fi (CSI) | Doppler effect model | partial static environment removal | AoA in spatial domain feature |
| human tracking | Widar [58], 2017 | Wi-Fi (CSI) | Doppler effect model | passband filter + first PC selection + peak threshold + Doppler frequency shift | frequency domain feature + search with least fitting error |
| human tracking | Guo et al. [70], 2017 | Wi-Fi (CSI) | amplitude estimation | moving average filter, Butterworth filter | time and frequency domain feature + EMD, SVM |
| human tracking | Backscatter [28], 2017 | LoRa | reconfigurable antenna model | Doppler frequency shift | / |
| human tracking | Strata et al. [26], 2017 | acoustic | amplitude and phase estimation | frequency quantity, phase difference | distance feature |
| human tracking | PhaseMode [48], 2018 | Wi-Fi (CSI) | phase estimation | median filter + phase threshold | time domain feature + SVM, random forest, KNN |
| human tracking | Karanam et al. [113], 2019 | Wi-Fi (CSI) | AoA model with antenna array | multipath mitigation | AoA, ToF |
| human tracking | Chan et al. [17], 2019 | FMCW | FMCW chirp model | spectrogram | CNN |
| human tracking | WideSee [29], 2019 | LoRa | reconfigurable antenna model | Doppler frequency shift | direction-related feature |
| human indoor localization | RADAR [102], 2000 | RF signal | amplitude estimation | multipath mitigation | frequency domain feature + K-means |
| human indoor localization | EZ [104], 2010 | Wi-Fi (RSSI) | amplitude estimation | / | time domain feature + resolution generation algorithm |
| human indoor localization | PinLoc [101], 2012 | Wi-Fi (CSI) | amplitude estimation | divergence | frequency domain feature + K-means |
| human indoor localization | CUPID [114], 2013 | Wi-Fi (CSI) | AoA with antenna array | multipath mitigation | AoA feature |
| human indoor localization | Epsilon [25], 2014 | VLC | amplitude estimation | Kalman filter + frequency quantity threshold | AoA feature |
| human indoor localization | TCPF [124], 2015 | Wi-Fi (RSSI) | amplitude estimation | Kalman filter, weighted moving average | frequency domain feature + KNN |
| human localization | Chronos [132], 2016 | Wi-Fi (CSI) | amplitude estimation | packet detection delay removal, multipath separation | ToF feature |
| human motion tracking | WiDeo [41], 2015 | Wi-Fi (CSI) | phase estimation | Kalman filter | AoA, ToF feature |
| human motion tracking | MoSense [71], 2017 | RF signal | amplitude estimation | Butterworth low-pass filter + phase difference threshold | time domain feature + binary classification |
| in-air hand tracking | RF-IDraw [10], 2014 | RFID | AoA with antenna array | / | multi-resolution positioning algorithm |
| in-air hand tracking | WiDraw [115], 2015 | Wi-Fi (CSI) | AoA with antenna array | low-pass filter | AoA feature |
| walking direction tracking | WiDir [106], 2016 | Wi-Fi (CSI) | Fresnel zone model | cross-correlation denoising, polynomial smoothing filter + angle threshold | spatial and time domain feature |
| / | Minh [23], 2009 | VLC | amplitude estimation | phase difference + amplitude variance | / |
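Many of the tracking and localization systems above use angle of arrival (AoA) as the localization feature. In the simplest case, two antennas spaced d apart, a plane wave arriving at angle θ from broadside produces a phase difference Δφ = 2π·d·sin θ / λ, so θ = arcsin(λ·Δφ / (2π·d)). The sketch below inverts that relation; it assumes d ≤ λ/2 so the angle is unambiguous, and the sign convention and function name are illustrative. Practical array-based systems typically use more antennas and subspace estimators such as MUSIC; this two-element form only illustrates the geometry.

```python
import math

def aoa_from_phase(delta_phi, antenna_spacing_m, carrier_hz, c=3.0e8):
    """Angle of arrival in radians from array broadside for a two-element
    array, using sin(theta) = lambda * delta_phi / (2 * pi * d)."""
    lam = c / carrier_hz
    # Wrap the measured phase difference into [-pi, pi] before inverting.
    delta_phi = math.atan2(math.sin(delta_phi), math.cos(delta_phi))
    s = lam * delta_phi / (2 * math.pi * antenna_spacing_m)
    if abs(s) > 1.0 + 1e-9:
        raise ValueError("phase difference is inconsistent with the antenna spacing")
    return math.asin(max(-1.0, min(1.0, s)))  # clamp tiny rounding overshoot
```

For example, at 5 GHz (λ = 6 cm) with half-wavelength spacing (3 cm), a phase difference of π/2 corresponds to an arrival angle of 30° from broadside.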

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, J.; Teng, G.; Hong, F. Human Activity Sensing with Wireless Signals: A Survey. Sensors 2020, 20, 1210. https://doi.org/10.3390/s20041210