Spatial Attention Fusion for Obstacle Detection Using MmWave Radar and Vision Sensor

Abstract

1. Introduction

- A spatial attention fusion (SAF) block to integrate radar data and vision data is proposed, which is built on the FCOS vision detection framework;

- We generate the 2D annotations of nuScenes dataset for model training and inference;

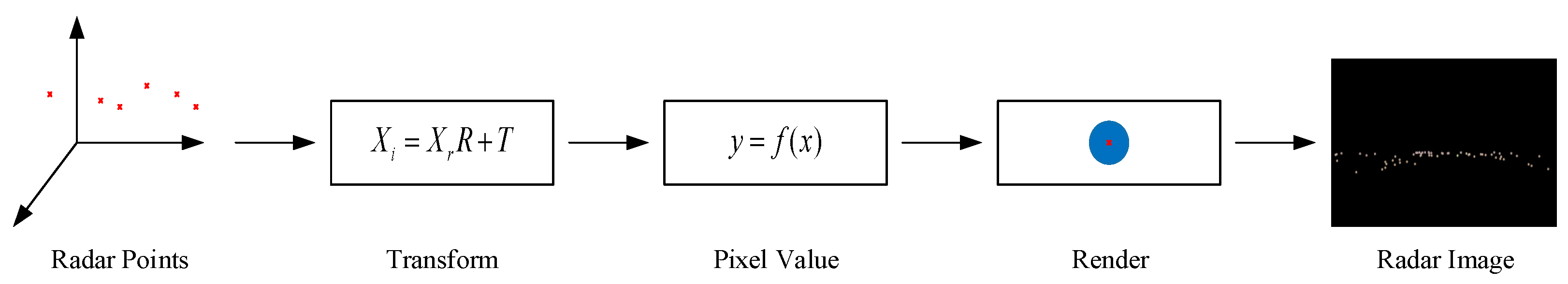

- An improved generation model to transform the radar information to an RGB image is proposed, which is used as the input data of our proposed SAF block;

- A lot of experiments to select the optimal hyperparameters involved in the radar image generation model are carried out;
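The radar-to-image step listed in the contributions can be illustrated with a minimal sketch. It assumes radar points have already been projected into the camera image (pixel coordinates `u`, `v`) and that three radar attributes (here depth and two velocity components) are linearly scaled into the R, G and B channels. The channel assignment, the clipping ranges `max_depth` and `max_speed`, and the helper names `encode_point` and `render_radar_image` are hypothetical; this is not the authors' improved generation model. The `radius` argument plays the role of the rendering radius hyperparameter compared in Section 5.4.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def encode_point(depth_m: float, vel_x: float, vel_y: float,
                 max_depth: float = 100.0, max_speed: float = 20.0) -> RGB:
    """Hypothetical encoding: depth -> R, two velocity components -> G and B."""

    def scale(value: float, lo: float, hi: float) -> int:
        value = min(max(value, lo), hi)  # clip to [lo, hi]
        return int(round(255 * (value - lo) / (hi - lo)))

    return (scale(depth_m, 0.0, max_depth),
            scale(vel_x, -max_speed, max_speed),
            scale(vel_y, -max_speed, max_speed))


def render_radar_image(points: Sequence[Tuple[float, float, RGB]],
                       height: int, width: int, radius: int) -> List[List[RGB]]:
    """Draw each projected radar point (u, v, color) as a filled circle."""
    image = [[(0, 0, 0) for _ in range(width)] for _ in range(height)]
    for u, v, color in points:
        cu, cv = int(round(u)), int(round(v))
        # Visit only the bounding square of the circle, clipped to the image.
        for y in range(max(0, cv - radius), min(height, cv + radius + 1)):
            for x in range(max(0, cu - radius), min(width, cu + radius + 1)):
                if (x - cu) ** 2 + (y - cv) ** 2 <= radius ** 2:
                    image[y][x] = color  # later points overwrite earlier ones
    return image


if __name__ == "__main__":
    color = encode_point(depth_m=25.0, vel_x=3.0, vel_y=-1.0)
    img = render_radar_image([(320.0, 240.0, color)], height=480, width=640, radius=5)
    lit = sum(px != (0, 0, 0) for row in img for px in row)
    print(color, lit)
```

A larger radius makes each return cover more pixels, so circles from nearby returns overlap more often; in this sketch the later point simply wins where circles overlap.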

2. Related Works

2.1. Object Detection by Vision Sensors

2.2. Object Detection by Decision-Level Fusion

2.3. Object Detection by Data-Level Fusion

2.4. Object Detection by Feature-Level Fusion

2.5. Object Detection by Mixed Fusion

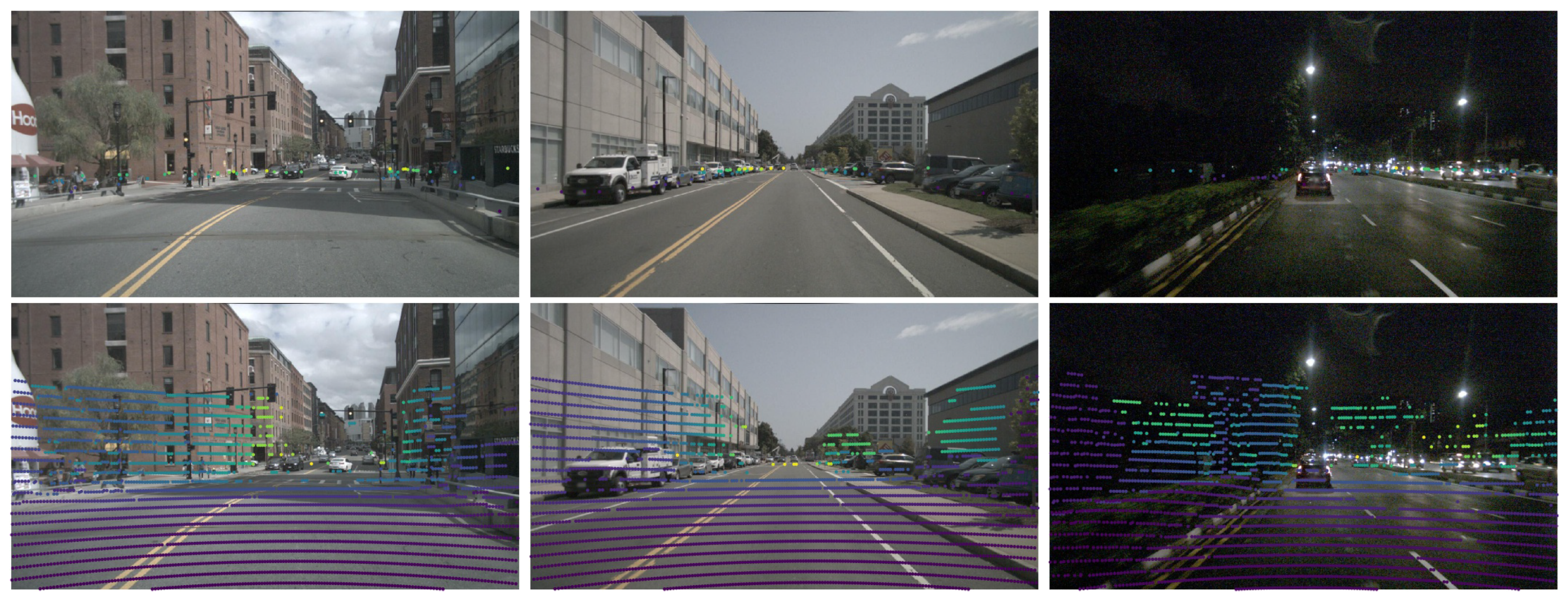

3. Training Dataset
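The contributions mention generating 2D annotations for nuScenes. One common way to derive a 2D box from a 3D box is to project its eight corners with the camera intrinsic matrix and take the minimum and maximum of the projected coordinates, clipped to the image. The sketch below shows that technique under stated assumptions: corners are already expressed in the camera frame (x right, y down, z forward), boxes are axis-aligned for simplicity, boxes with any corner closer than `min_depth` are discarded rather than truncated, and the helper names are hypothetical. It is not necessarily the procedure the authors used.

```python
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Box2D = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def box_corners(center: Vec3, size: Vec3) -> List[Vec3]:
    """Eight corners of an axis-aligned 3D box (no yaw, for simplicity)."""
    cx, cy, cz = center
    dx, dy, dz = (s / 2.0 for s in size)
    return [(cx + sx * dx, cy + sy * dy, cz + sz * dz)
            for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)]


def project(K: Sequence[Sequence[float]], p: Vec3) -> Tuple[float, float]:
    """Pinhole projection of a camera-frame point with 3x3 intrinsics K."""
    x, y, z = p
    u = K[0][0] * x + K[0][1] * y + K[0][2] * z
    v = K[1][0] * x + K[1][1] * y + K[1][2] * z
    w = K[2][0] * x + K[2][1] * y + K[2][2] * z
    return u / w, v / w


def box_2d_from_3d(corners: Sequence[Vec3], K: Sequence[Sequence[float]],
                   width: int, height: int,
                   min_depth: float = 0.1) -> Optional[Box2D]:
    # Simplification: drop boxes that are partly behind the camera.
    if any(z <= min_depth for _, _, z in corners):
        return None
    uv = [project(K, c) for c in corners]
    us = [u for u, _ in uv]
    vs = [v for _, v in uv]
    # Tightest enclosing rectangle, clipped to the image borders.
    x0, y0 = max(0.0, min(us)), max(0.0, min(vs))
    x1, y1 = min(float(width), max(us)), min(float(height), max(vs))
    if x1 <= x0 or y1 <= y0:  # box lies entirely outside the image
        return None
    return (x0, y0, x1, y1)
```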

4. Proposed Algorithm

4.1. Detection Framework

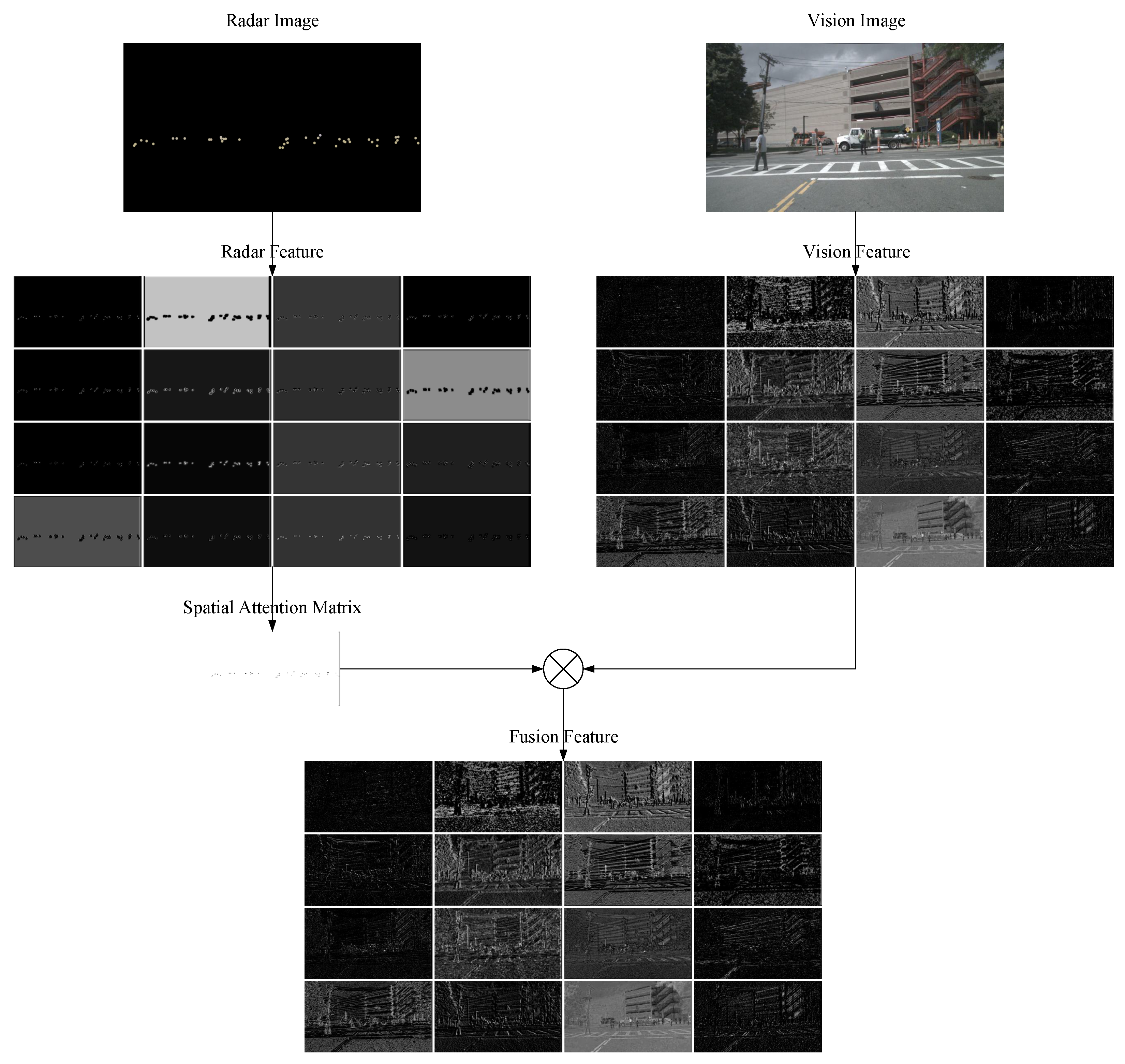

4.2. Spatial Attention Fusion
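A minimal sketch of a spatial attention fusion step, written in plain Python so that it runs without a deep-learning framework. It assumes the block derives a single-channel attention map from radar features, passes it through a sigmoid, and multiplies every vision feature channel by that map. The parallel branches with kernel sizes 1, 3 and 5 are an assumption, and each learned convolution is replaced by a fixed zero-padded mean filter purely for illustration; `spatial_attention_fusion`, `box_filter` and `gain` are hypothetical names.

```python
import math
from typing import List, Sequence

Map = List[List[float]]  # height x width


def box_filter(x: Map, k: int) -> Map:
    """Zero-padded k x k mean filter (k odd); a stand-in for a learned convolution."""
    h, w, r = len(x), len(x[0]), k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:
                        total += x[ii][jj]
            out[i][j] = total / (k * k)
    return out


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def spatial_attention_fusion(vision: Sequence[Map], radar: Map,
                             kernel_sizes: Sequence[int] = (1, 3, 5),
                             gain: float = 1.0) -> List[Map]:
    """Rescale every vision channel by a radar-derived spatial attention map."""
    h, w = len(radar), len(radar[0])
    logits = [[0.0] * w for _ in range(h)]
    for k in kernel_sizes:  # hypothetical parallel branches, summed
        branch = box_filter(radar, k)
        for i in range(h):
            for j in range(w):
                logits[i][j] += gain * branch[i][j]
    attention = [[sigmoid(z) for z in row] for row in logits]
    # The same attention map is shared by all vision channels.
    return [[[channel[i][j] * attention[i][j] for j in range(w)]
             for i in range(h)] for channel in vision]
```

Because the attention map only rescales the vision features, locations with strong radar response keep more of their vision activation, while the vision channel count is left unchanged.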

5. Experimental Validations

5.1. Implementation Details

5.2. Detection Comparisons

5.3. The Comparison of Different Fusion Blocks

5.4. The Comparison of Different Rendering Radii

5.5. The Comparison of Different SAF Configurations

5.6. The Evaluation of SAF-Faster R-CNN

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR2016), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision (ECCV 2016), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR2014), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV2015), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37(9), 1904–1916.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39(6), 1137–1149.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.M.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR2017), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.M.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV2017), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Zhao, X.T.; Li, W.; Zhang, Y.F.; Chang, S.; Feng, Z.Y.; Zhang, P. Aggregated Residual Dilation-Based Feature Pyramid Network for Object Detection. IEEE Access 2019, 7, 134014–134027.

- Tian, Z.; Shen, C.H.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. arXiv 2019, arXiv:1904.01355.

- Chadwick, S.; Maddern, W.; Newman, P. Distant Vehicle Detection Using Radar and Vision. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA2019), Montreal, QC, Canada, 20–24 May 2019; pp. 8311–8317.

- Langer, D.; Jochem, T. Fusing radar and vision for detecting, classifying and avoiding roadway obstacles. In Proceedings of the IEEE Conference on Intelligent Vehicles, Tokyo, Japan, 19–20 September 1996; pp. 333–338.

- Coué, C.; Fraichard, T.; Bessiere, P.; Mazer, E. Multi-sensor data fusion using Bayesian programming: An automotive application. In Proceedings of the IEEE 2002 Intelligent Vehicles Symposium, Versailles, France, 17–21 June 2002; pp. 442–447.

- Kawasaki, N.; Kiencke, U. Standard platform for sensor fusion on advanced driver assistance system using Bayesian network. In Proceedings of the IEEE 2004 Intelligent Vehicles Symposium, Parma, Italy, 14–17 June 2004; pp. 250–255.

- Ćesić, J.; Marković, I.; Cvišić, I.; Petrović, I. Radar and stereo vision fusion for multitarget tracking on the special Euclidean group. Robot. Auton. Syst. 2016, 83, 338–348.

- Obrvan, M.; Ćesić, J.; Petrović, I. Appearance based vehicle detection by radar-stereo vision integration. In Proceedings of the Robot 2015: Second Iberian Robotics Conference, Lisbon, Portugal, 19–21 November 2015; pp. 437–449.

- Wu, S.G.; Decker, S.; Chang, P.; Camus, T.; Eledath, J. Collision sensing by stereo vision and radar sensor fusion. IEEE Trans. Intell. Transp. Syst. 2009, 10(4), 606–614.

- Chavez-Garcia, R.O.; Burlet, J.; Vu, T.D.; Aycard, O. Frontal object perception using radar and mono-vision. In Proceedings of the IEEE 2012 Intelligent Vehicles Symposium, Alcala de Henares, Spain, 3–7 June 2012; pp. 159–164.

- Zhong, Z.G.; Liu, S.; Mathew, M.; Dubey, A. Camera radar fusion for increased reliability in ADAS applications. Electron. Imaging 2018, 2018(17), 258-1–258-4.

- Kim, D.Y.; Jeon, M. Data fusion of radar and image measurements for multi-object tracking via Kalman filtering. Inf. Sci. 2014, 278, 641–652.

- Steux, B.; Laurgeau, C.; Salesse, L.; Wautier, D. FADE: A vehicle detection and tracking system featuring monocular color vision and radar data fusion. In Proceedings of the IEEE 2002 Intelligent Vehicles Symposium, Versailles, France, 17–21 June 2002; pp. 632–639.

- Streubel, R.; Yang, B. Fusion of stereo camera and MIMO-FMCW radar for pedestrian tracking in indoor environments. In Proceedings of the IEEE 19th International Conference on Information Fusion, Heidelberg, Germany, 5–8 July 2016; pp. 565–572.

- Long, N.B.; Wang, K.W.; Cheng, R.Q.; Yang, K.L.; Bai, J.S. Fusion of millimeter wave radar and RGB-depth sensors for assisted navigation of the visually impaired. In Proceedings of the Millimetre Wave and Terahertz Sensors and Technology XI, Berlin, Germany, 10–13 September 2018; pp. 21–28.

- Long, N.B.; Wang, K.W.; Cheng, R.Q.; Hu, W.J.; Yang, K.L. Unifying obstacle detection, recognition, and fusion based on millimeter wave radar and RGB-depth sensors for the visually impaired. Rev. Sci. Instrum. 2019, 90(4), 044102.

- Milch, S.; Behrens, M. Pedestrian detection with radar and computer vision. In Proceedings of the 2001 PAL Symposium: Progress in Automobile Lighting, Darmstadt, Germany, 25–26 September 2001; pp. 657–664.

- Bombini, L.; Cerri, P.; Medici, P.; Alessandretti, G. Radar-vision fusion for vehicle detection. In Proceedings of the 3rd International Workshop on Intelligent Transportation, Hamburg, Germany, 14–15 March 2006; pp. 65–70.

- Alessandretti, G.; Broggi, A.; Cerri, P. Vehicle and guard rail detection using radar and vision data fusion. IEEE Trans. Intell. Transp. Syst. 2007, 8(1), 95–105.

- Kadow, U.; Schneider, G.; Vukotich, A. Radar-vision based vehicle recognition with evolutionary optimized and boosted features. In Proceedings of the IEEE 2007 Intelligent Vehicles Symposium, Istanbul, Turkey, 13–15 June 2007; pp. 749–754.

- Haselhoff, A.; Kummert, A.; Schneider, G. Radar-vision fusion for vehicle detection by means of improved Haar-like feature and AdaBoost approach. In Proceedings of the IEEE 2007 15th European Signal Processing Conference, Poznan, Poland, 3–7 September 2007; pp. 2070–2074.

- Ji, Z.P.; Prokhorov, D. Radar-vision fusion for object classification. In Proceedings of the IEEE 2008 11th International Conference on Information Fusion, Cologne, Germany, 30 June–3 July 2008; pp. 1–7.

- Serfling, M.; Loehlein, O.; Schweiger, R.; Dietmayer, K. Camera and imaging radar feature level sensor fusion for night vision pedestrian recognition. In Proceedings of the IEEE 2009 Intelligent Vehicles Symposium, Xi’an, China, 3–5 June 2009; pp. 597–603.

- Kato, T.; Ninomiya, Y.; Masaki, I. An obstacle detection method by fusion of radar and motion stereo. IEEE Trans. Intell. Transp. Syst. 2002, 3(3), 182–188.

- Wang, T.; Zheng, N.N.; Xin, J.M.; Ma, Z. Integrating millimeter wave radar with a monocular vision sensor for on-road obstacle detection applications. Sensors 2011, 11(9), 8992–9008.

- Guo, X.P.; Du, J.S.; Gao, J.; Wang, W. Pedestrian Detection Based on Fusion of Millimeter Wave Radar and Vision. In Proceedings of the 2018 International Conference on Artificial Intelligence and Pattern Recognition, Beijing, China, 18–20 August 2018; pp. 38–42.

- John, V.; Mita, S. RVNet: Deep Sensor Fusion of Monocular Camera and Radar for Image-Based Obstacle Detection in Challenging Environments. In Proceedings of the 2019 Pacific-Rim Symposium on Image and Video Technology, Sydney, Australia, 18–22 November 2019; pp. 351–364.

- Nobis, F.; Geisslinger, M.; Weber, M.; Betz, J.; Lienkamp, M. A Deep Learning-based Radar and Camera Sensor Fusion Architecture for Object Detection. In Proceedings of the 2019 Sensor Data Fusion: Trends, Solutions, Applications (SDF2019), Bonn, Germany, 15–17 October 2019; pp. 1–7.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A multimodal dataset for autonomous driving. arXiv 2019, arXiv:1903.11027.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision (ECCV2014), Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- FCOS Model. Available online: https://github.com/tianzhi0549/FCOS (accessed on 10 February 2020).

- Uijlings, J.R.R.; van de Sande, K.E.A.; Gevers, T.; Smeulders, A.W.M. Selective search for object recognition. Int. J. Comput. Vis. 2013, 104(2), 154–171.

- Zitnick, C.L.; Dollár, P. Edge boxes: Locating object proposals from edges. In Proceedings of the European Conference on Computer Vision (ECCV 2014), Zurich, Switzerland, 6–12 September 2014; pp. 391–405.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS 2012), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88(2), 303–338.

- Musicki, D.; Evans, R. Joint integrated probabilistic data association: JIPDA. IEEE Trans. Aerosp. Electron. Syst. 2004, 40(3), 1093–1099.

- Sugimoto, S.; Tateda, H.; Takahashi, H.; Okutomi, M. Obstacle detection using millimeter-wave radar and its visualization on image sequence. In Proceedings of the 17th International Conference on Pattern Recognition (ICPR2004), Cambridge, UK, 26 August 2004; pp. 342–345.

- Cheng, Y.Z. Mean shift, mode seeking, and clustering. IEEE Trans. Pattern Anal. Mach. Intell. 1995, 17(8), 790–799.

- He, K.M.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV2017), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Weng, J.Y.; Zhang, N. Optimal in-place learning and the lobe component analysis. In Proceedings of the IEEE International Joint Conference on Neural Networks, Vancouver, BC, Canada, 16–21 July 2006; pp. 3887–3894.

- He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR2016), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Fizyr. Keras RetinaNet. Available online: https://github.com/fizyr/keras-retinanet (accessed on 10 February 2020).

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Lindl, R.; Walchshäusl, L. Three-Level Early Fusion for Road User Detection. PReVENT Fus. Forum e-J. 2006, 1(1), 19–24.

- Chavez-Garcia, R.O.; Aycard, O. Multiple sensor fusion and classification for moving object detection and tracking. IEEE Trans. Intell. Transp. Syst. 2015, 17(2), 525–534.

- Wang, X.; Xu, L.H.; Sun, H.B.; Xin, J.M.; Zheng, N.N. On-road vehicle detection and tracking using MMW radar and monovision fusion. IEEE Trans. Intell. Transp. Syst. 2016, 17(7), 2075–2084.

- Liu, X.; Sun, Z.P.; He, H.G. On-road vehicle detection fusing radar and vision. In Proceedings of the IEEE 2011 International Conference on Vehicular Electronics and Safety, Beijing, China, 10–12 July 2011; pp. 150–154.

- Yu, J.H.; Jiang, Y.N.; Wang, Z.Y.; Cao, Z.M.; Huang, T. UnitBox: An advanced object detection network. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 516–520.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L. PyTorch: An imperative style, high-performance deep learning library. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 10–12 December 2019; pp. 8024–8035.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115(3), 211–252.

- He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV2015), Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

| Model | Backbone | Scale | AP | AP50 | AP75 | AP_S | AP_M | AP_L |
|---|---|---|---|---|---|---|---|---|
| FCOS | ResNet-50 | 800 | 64.7 | 86.2 | 70.9 | 46.0 | 62.7 | 76.7 |
| SAF-FCOS | ResNet-50 | 800 | 72.4 | 90.0 | 79.3 | 55.3 | 70.1 | 83.1 |

| Model | Backbone | Scale | AR1 | AR10 | AR100 | AR_S | AR_M | AR_L |
|---|---|---|---|---|---|---|---|---|
| FCOS | ResNet-50 | 800 | 13.7 | 62.3 | 73.5 | 60.0 | 72.5 | 82.3 |
| SAF-FCOS | ResNet-50 | 800 | 14.5 | 68.4 | 79.0 | 69.6 | 77.4 | 86.5 |
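The absolute gains of SAF-FCOS over FCOS can be read off the FCOS and SAF-FCOS rows above. The snippet below computes them, assuming the six metric columns are the COCO-style AP, AP50, AP75, AP_S, AP_M, AP_L and AR1, AR10, AR100, AR_S, AR_M, AR_L; the numbers are copied from the tables, and `gains` is a hypothetical helper.

```python
AP_METRICS = ("AP", "AP50", "AP75", "AP_S", "AP_M", "AP_L")
AR_METRICS = ("AR1", "AR10", "AR100", "AR_S", "AR_M", "AR_L")

# Values copied from the FCOS vs. SAF-FCOS tables (ResNet-50, scale 800).
FCOS_AP = (64.7, 86.2, 70.9, 46.0, 62.7, 76.7)
SAF_FCOS_AP = (72.4, 90.0, 79.3, 55.3, 70.1, 83.1)
FCOS_AR = (13.7, 62.3, 73.5, 60.0, 72.5, 82.3)
SAF_FCOS_AR = (14.5, 68.4, 79.0, 69.6, 77.4, 86.5)


def gains(names, baseline, candidate):
    """Absolute improvement (in points) of candidate over baseline, per metric."""
    return {name: round(new - old, 1)
            for name, old, new in zip(names, baseline, candidate)}


if __name__ == "__main__":
    for name, delta in {**gains(AP_METRICS, FCOS_AP, SAF_FCOS_AP),
                        **gains(AR_METRICS, FCOS_AR, SAF_FCOS_AR)}.items():
        print(f"{name:>6}: {delta:+.1f}")
```

With these values, AP rises by 7.7 points and AR100 by 5.5 points; in both tables the largest gain is for small objects (AP_S +9.3, AR_S +9.6).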

| Model | Backbone | Scale | AP | AP50 | AP75 | AP_S | AP_M | AP_L |
|---|---|---|---|---|---|---|---|---|
| CAT-FCOS | ResNet-50 | 800 | 59.8 | 85.7 | 64.4 | 36.4 | 57.9 | 74.8 |
| MUL-FCOS | ResNet-50 | 800 | 61.6 | 86.4 | 67.5 | 39.8 | 60.9 | 74.5 |
| ADD-FCOS | ResNet-50 | 800 | 64.2 | 87.6 | 70.9 | 42.7 | 62.8 | 77.4 |
| SAF-FCOS | ResNet-50 | 800 | 72.4 | 90.0 | 79.3 | 55.3 | 70.1 | 83.1 |

| Model | Backbone | Scale | AR1 | AR10 | AR100 | AR_S | AR_M | AR_L |
|---|---|---|---|---|---|---|---|---|
| CAT-FCOS | ResNet-50 | 800 | 13.3 | 58.4 | 68.1 | 48.7 | 66.6 | 80.6 |
| MUL-FCOS | ResNet-50 | 800 | 13.4 | 60.1 | 70.2 | 52.6 | 69.3 | 80.9 |
| ADD-FCOS | ResNet-50 | 800 | 13.7 | 61.9 | 71.9 | 55.1 | 70.9 | 82.5 |
| SAF-FCOS | ResNet-50 | 800 | 14.5 | 68.4 | 79.0 | 69.6 | 77.4 | 86.5 |
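The baselines CAT-FCOS, MUL-FCOS and ADD-FCOS presumably differ only in how radar and vision feature maps are combined. Assuming the prefixes stand for channel concatenation, element-wise multiplication and element-wise addition, the three operations can be sketched as follows on channels x height x width nested lists. The function names are hypothetical, and the sketch omits any convolution that may follow the fusion in the actual models.

```python
import operator
from typing import Callable, List, Tuple

Tensor3 = List[List[List[float]]]  # channels x height x width


def shape(t: Tensor3) -> Tuple[int, int, int]:
    return len(t), len(t[0]), len(t[0][0])


def fuse_cat(vision: Tensor3, radar: Tensor3) -> Tensor3:
    """Channel concatenation: the output has C_v + C_r channels."""
    if shape(vision)[1:] != shape(radar)[1:]:
        raise ValueError("spatial sizes must match")
    return [[row[:] for row in ch] for ch in vision + radar]


def _elementwise(vision: Tensor3, radar: Tensor3,
                 op: Callable[[float, float], float]) -> Tensor3:
    """Apply op to aligned elements; both tensors must have identical shapes."""
    if shape(vision) != shape(radar):
        raise ValueError("element-wise fusion needs identical shapes")
    return [[[op(a, b) for a, b in zip(row_v, row_r)]
             for row_v, row_r in zip(ch_v, ch_r)]
            for ch_v, ch_r in zip(vision, radar)]


def fuse_add(vision: Tensor3, radar: Tensor3) -> Tensor3:
    return _elementwise(vision, radar, operator.add)


def fuse_mul(vision: Tensor3, radar: Tensor3) -> Tensor3:
    return _elementwise(vision, radar, operator.mul)
```

These operations apply the same fixed rule at every location; concatenation also changes the channel count, so the next layer must accept C_v + C_r input channels.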

| Radius | Backbone | Scale | AP | AP50 | AP75 | AP_S | AP_M | AP_L |
|---|---|---|---|---|---|---|---|---|
|  | ResNet-50 | 800 | 70.1 | 90.4 | 77.1 | 52.3 | 68.4 | 81.0 |
|  | ResNet-50 | 800 | 69.6 | 89.5 | 75.8 | 52.3 | 67.9 | 80.4 |
|  | ResNet-50 | 800 | 64.5 | 87.1 | 70.0 | 47.1 | 63.2 | 75.2 |
|  | ResNet-50 | 800 | 70.2 | 89.9 | 76.7 | 54.3 | 68.6 | 80.6 |
|  | ResNet-50 | 800 | 69.7 | 89.9 | 75.7 | 52.8 | 68.5 | 79.8 |
|  | ResNet-50 | 800 | 68.3 | 89.0 | 74.5 | 52.9 | 66.6 | 79.2 |

| Radius | Backbone | Scale | AR1 | AR10 | AR100 | AR_S | AR_M | AR_L |
|---|---|---|---|---|---|---|---|---|
|  | ResNet-50 | 800 | 12.8 | 64.5 | 76.9 | 66.6 | 75.6 | 84.9 |
|  | ResNet-50 | 800 | 12.7 | 64.5 | 76.6 | 65.2 | 75.5 | 84.7 |
|  | ResNet-50 | 800 | 12.4 | 60.2 | 73.3 | 59.9 | 73.2 | 80.8 |
|  | ResNet-50 | 800 | 12.7 | 65.2 | 77.4 | 66.8 | 76.3 | 85.0 |
|  | ResNet-50 | 800 | 12.6 | 64.1 | 76.7 | 65.5 | 76.0 | 84.2 |
|  | ResNet-50 | 800 | 12.6 | 63.1 | 76.3 | 65.5 | 75.2 | 84.2 |

| SAF | AP | AP50 | AP75 | AP_S | AP_M | AP_L |
|---|---|---|---|---|---|---|
|  | 60.7 | 84.6 | 65.6 | 43.6 | 58.7 | 72.7 |
| ✓ | 58.6 | 84.5 | 62.9 | 39.6 | 57.5 | 70.9 |
| ✓ | 68.8 | 88.7 | 75.3 | 51.7 | 67.2 | 80.1 |
| ✓ | 69.8 | 89.8 | 76.2 | 53.2 | 67.9 | 80.6 |
| ✓ | 68.1 | 88.8 | 74.0 | 49.8 | 66.8 | 79.0 |
| ✓ ✓ | 69.4 | 89.7 | 76.5 | 52.7 | 67.6 | 80.1 |
| ✓ ✓ | 67.0 | 88.3 | 72.8 | 49.0 | 65.2 | 78.5 |
| ✓ ✓ | 54.7 | 82.5 | 58.0 | 33.6 | 53.3 | 68.8 |
| ✓ ✓ ✓ | 70.2 | 89.9 | 76.7 | 54.3 | 68.6 | 80.6 |
| ✓ ✓ ✓ | 69.8 | 89.6 | 75.9 | 53.6 | 67.7 | 80.6 |
| ✓ ✓ ✓ ✓ | 70.1 | 89.9 | 76.7 | 52.4 | 68.8 | 80.3 |

| SAF | AR1 | AR10 | AR100 | AR_S | AR_M | AR_L |
|---|---|---|---|---|---|---|
|  | 12.2 | 56.9 | 70.5 | 55.8 | 70.0 | 79.7 |
| ✓ | 12.0 | 55.5 | 68.4 | 50.6 | 68.1 | 78.5 |
| ✓ | 12.6 | 63.7 | 77.0 | 64.9 | 76.2 | 85.0 |
| ✓ | 12.6 | 64.9 | 77.2 | 66.0 | 76.4 | 84.8 |
| ✓ | 12.6 | 63.0 | 76.2 | 64.5 | 75.5 | 83.8 |
| ✓ ✓ | 12.7 | 63.8 | 76.6 | 66.5 | 75.1 | 84.7 |
| ✓ ✓ | 12.6 | 62.1 | 75.4 | 63.9 | 74.2 | 83.9 |
| ✓ ✓ | 11.7 | 52.8 | 64.7 | 45.7 | 63.8 | 76.8 |
| ✓ ✓ ✓ | 12.7 | 65.2 | 77.4 | 66.8 | 76.3 | 85.0 |
| ✓ ✓ ✓ | 12.6 | 64.3 | 77.0 | 66.8 | 75.8 | 84.7 |
| ✓ ✓ ✓ ✓ | 12.8 | 64.3 | 76.8 | 65.8 | 75.8 | 84.5 |

| Model | Backbone | Scale | AP | AP50 | AP75 | AP_S | AP_M | AP_L |
|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | ResNet-50 | 800 | 71.9 | 89.3 | 78.5 | 55.1 | 68.9 | 83.5 |
| SAF-Faster R-CNN | ResNet-50 | 800 | 72.2 | 89.7 | 78.7 | 55.3 | 69.4 | 83.6 |

| Model | Backbone | Scale | AR1 | AR10 | AR100 | AR_S | AR_M | AR_L |
|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | ResNet-50 | 800 | 14.4 | 68.7 | 78.1 | 68.9 | 75.9 | 86.7 |
| SAF-Faster R-CNN | ResNet-50 | 800 | 14.5 | 68.7 | 78.5 | 68.9 | 76.6 | 86.8 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chang, S.; Zhang, Y.; Zhang, F.; Zhao, X.; Huang, S.; Feng, Z.; Wei, Z. Spatial Attention Fusion for Obstacle Detection Using MmWave Radar and Vision Sensor. Sensors 2020, 20, 956. https://doi.org/10.3390/s20040956


Chicago/Turabian style: Chang, Shuo, Yifan Zhang, Fan Zhang, Xiaotong Zhao, Sai Huang, Zhiyong Feng, and Zhiqing Wei. 2020. "Spatial Attention Fusion for Obstacle Detection Using MmWave Radar and Vision Sensor." Sensors 20, no. 4: 956. https://doi.org/10.3390/s20040956
