Degenerate Near-Planar 3D Reconstruction from Two Overlapped Images for Road Defects Detection

Abstract

1. Introduction

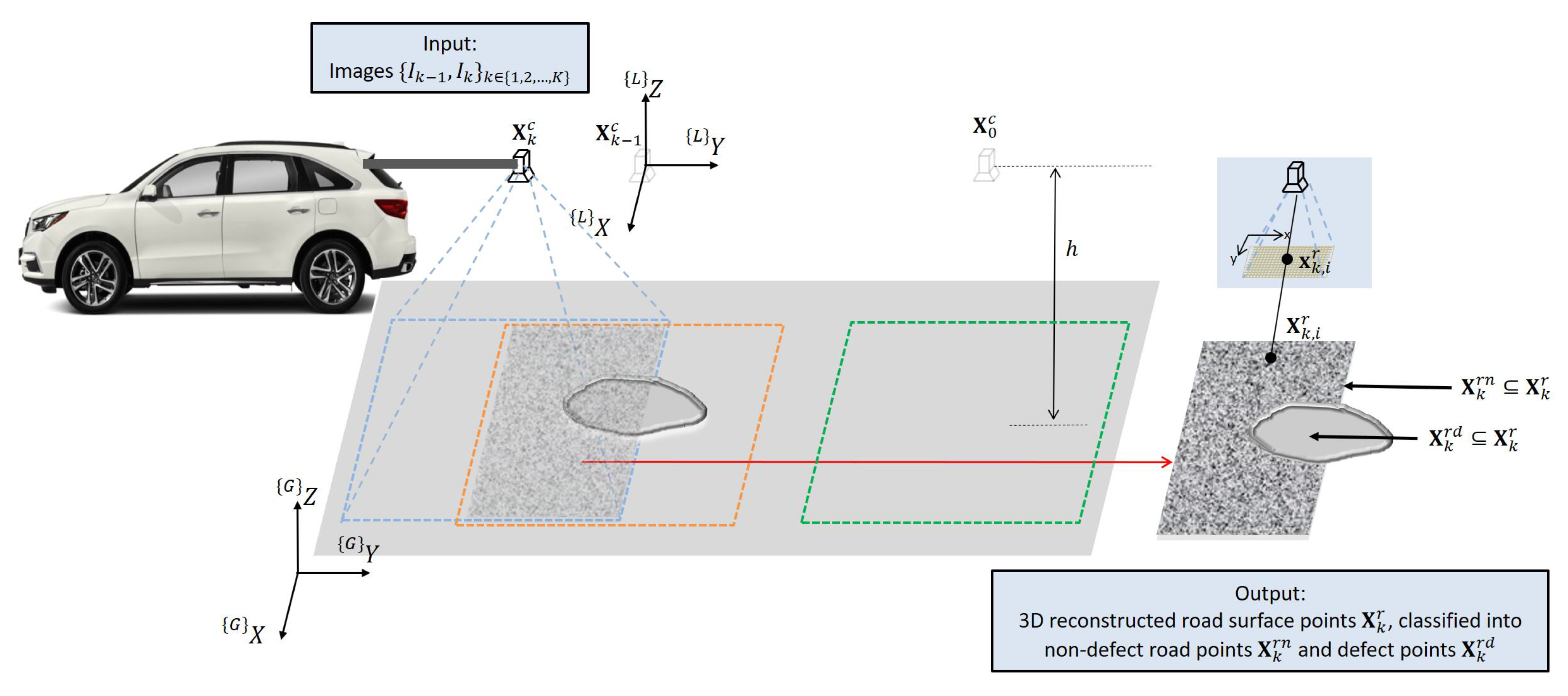

2. 3D Road Surface Reconstruction from Two Overlapped Images

2.1. Problem Formulation

2.2. Two-image 3D Road Surface Reconstruction

2.3. Planar Surface Degeneracy Problem

3. Proposed Degenerate Near-Planar 3D Reconstruction for Road Defects Detection

3.1. Overview

3.2. Preprocessing

3.3. 3D Reconstruction for Near-Planar Road Surface

3.4. Post-Processing

4. Experimental Results

4.1. Experiments in Simulation Environment

4.2. Experiments on Real Road Surface

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A


| Parameter | Value |
|---|---|
| Road unevenness [mm] | 0.1, 5, 10 |
| Image noise [pixel] | 0.001, 0.002, …, 0.1 |
| [mm] | 500, 800, 1100, 1400, 1700 |
| [degree] | 0.05, 0.10, …, 5 |
| [degree] | 0.05, 0.10, …, 5 |
| [degree] | 0.05, 0.10, …, 5 |
| Two-view translation t [mm, mm, mm] | |
| Change of h [mm] | 0.2, 0.4, …, 20 |

| Parameter | Value |
|---|---|
| Camera field of view | |
| Road unevenness [mm] | |
| Camera to road distance h [mm] | 900, 1000, …, 1600 |
| Image noise [pixel] | |
| Mismatched feature rejection constant | 1.5 |
| Two-view camera translation t [mm, mm, mm] | |

| | Proposed | SfM |
|---|---|---|
| TP | 632 | 658 |
| TN | 5602 | 4382 |
| FP | 38 | 1258 |
| FN | 28 | 2 |
| Accuracy | 98.95% | 80.00% |
| Precision | 94.33% | 34.34% |
| Recall | 95.76% | 99.70% |
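
As a quick check, the accuracy, precision, and recall rows in the table above follow from the four counts (TP, TN, FP, FN) by the standard confusion-matrix definitions. The Python sketch below is only an illustration of that arithmetic; the function name `classification_metrics` is an assumption introduced here and does not come from the paper.

```python
def classification_metrics(tp, tn, fp, fn):
    """Standard confusion-matrix metrics, returned as fractions in [0, 1]."""
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,   # share of all decisions that are correct
        "precision": tp / (tp + fp),     # share of reported defects that are real
        "recall": tp / (tp + fn),        # share of real defects that are reported
    }


# Counts copied from the table above.
proposed = classification_metrics(tp=632, tn=5602, fp=38, fn=28)
sfm = classification_metrics(tp=658, tn=4382, fp=1258, fn=2)

for name, m in (("Proposed", proposed), ("SfM", sfm)):
    print(name, {k: f"{100 * v:.2f}%" for k, v in m.items()})
```

The four counts sum to 6300 in both columns. SfM misses fewer defects (FN = 2), but its 1258 false positives pull its precision down to about 34%, whereas the proposed method keeps precision and recall both near 95%.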

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hu, Y.; Furukawa, T. Degenerate Near-Planar 3D Reconstruction from Two Overlapped Images for Road Defects Detection. Sensors 2020, 20, 1640. https://doi.org/10.3390/s20061640