Virtual Disassembling of Historical Edifices: Experiments and Assessments of an Automatic Approach for Classifying Multi-Scalar Point Clouds into Architectural Elements †

Abstract

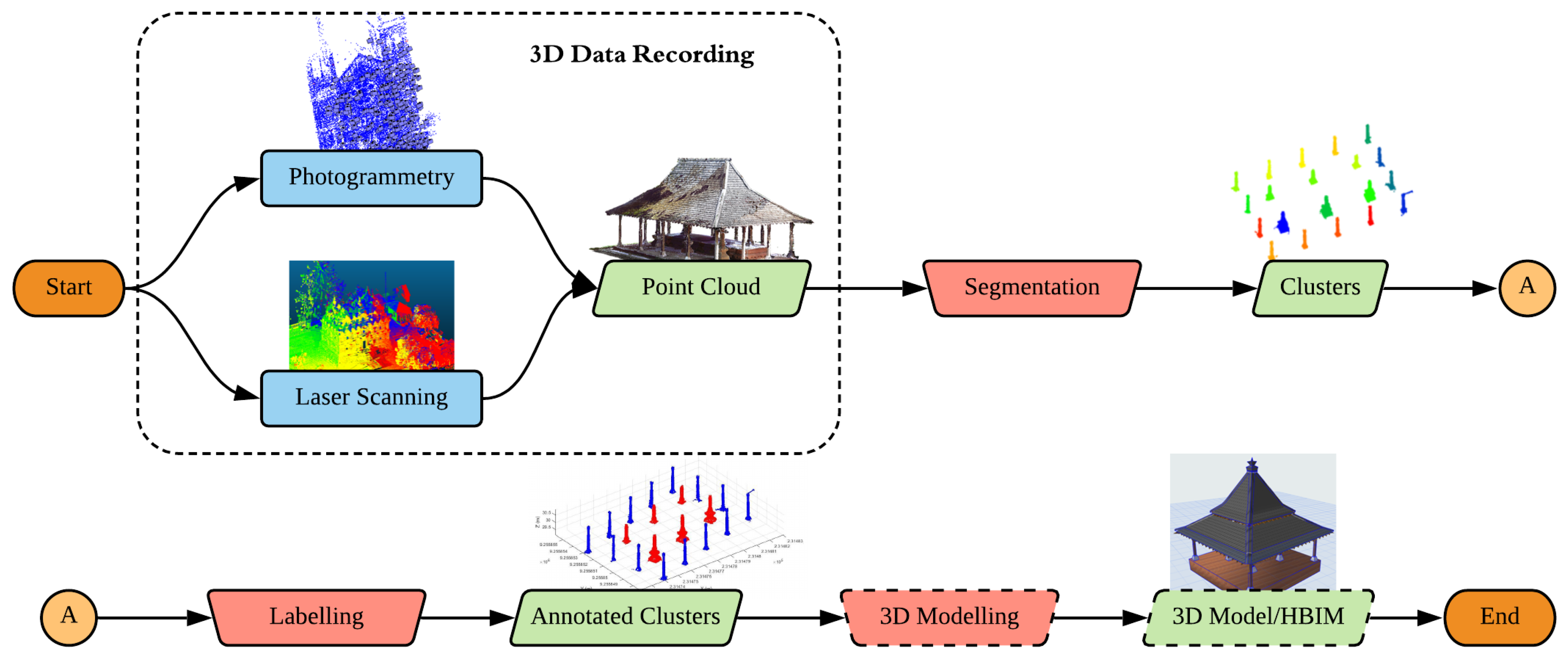

1. Introduction

2. General State-of-the-Art

2.1. Point Cloud Processing

2.1.1. Machine Learning and Deep Learning Approaches

2.1.2. Algorithmic Approach

2.2. Automation in 3D Modeling

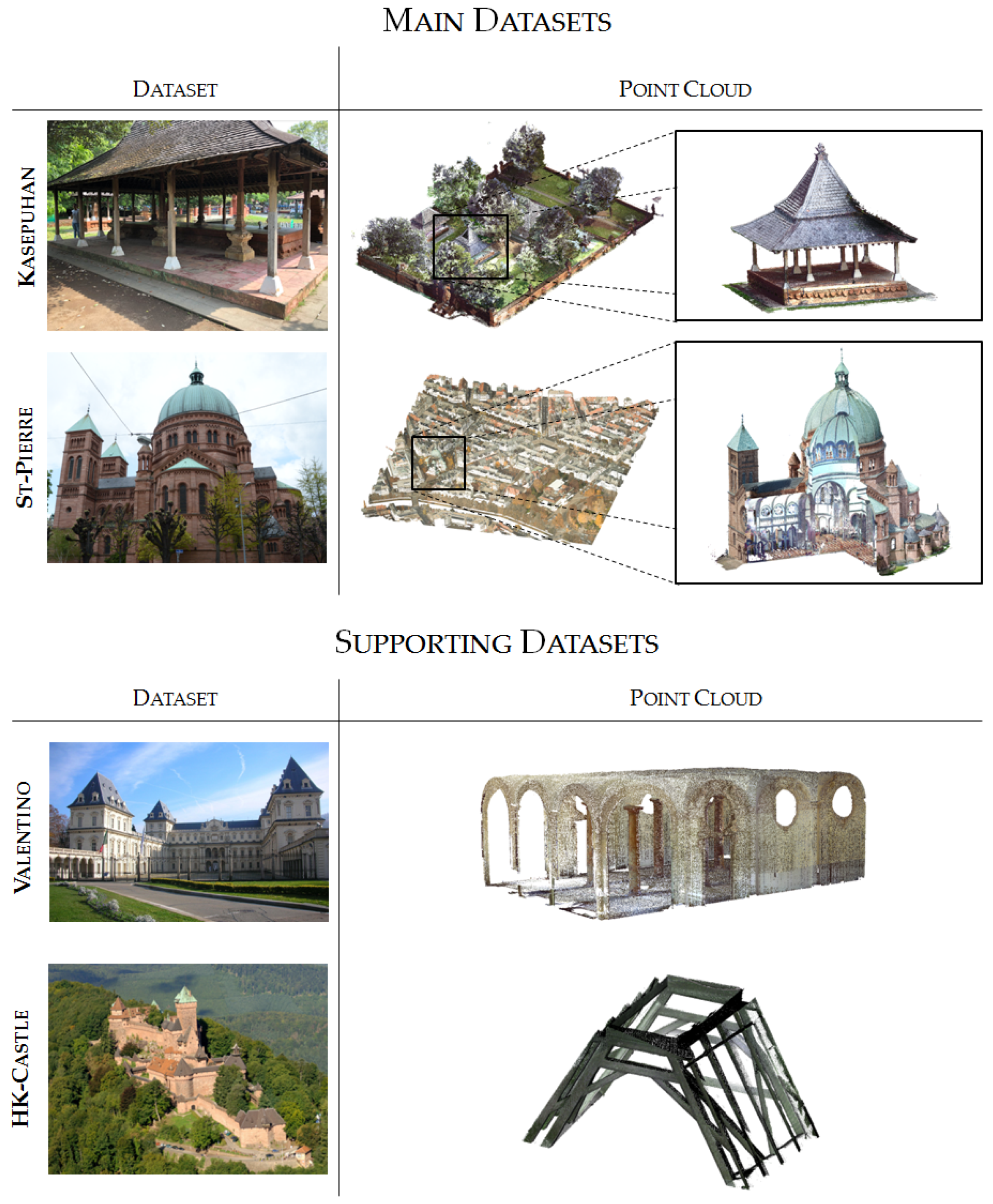

3. Nature of Available Datasets

- Kasepuhan Royal Palace, Cirebon, Indonesia (“Kasepuhan”): This historic area dates to the 13th century and includes several historical buildings within its 1200 m² brick-walled perimeter. One area of the dataset, called Siti Inggil, is of particular interest to the conservators because it represents the earliest architectural style in the palace compound. In this paper, the Siti Inggil area is used as a focal point, with one of its pavilions (the Central Pavilion) used as a case study for the more detailed scale level. Heavy vegetation was present within Siti Inggil, often overlapping with the buildings, which poses a particular challenge for the algorithm described in Section 4.1. The site was digitized in May 2018 using a combination of TLS and photogrammetry (both terrestrial and drone) and was georeferenced to the Indonesian national projection system.

- St-Pierre-le-Jeune Catholic Church, Strasbourg, France (“St-Pierre”): The St-Pierre-le-Jeune Catholic Church was built between 1889 and 1893 in Strasbourg during the German era. The church is located in a UNESCO-listed district, the Neustadt, which comprises other historical buildings of interest such as the Palais du Rhin, formerly the Imperial palace during the German Reichsland era between 1871 and 1918. The church is an example of neo-Romanesque architecture, crowned by a 50 m high and 19 m wide dome. The neighborhood around the church was used as a case study in the research, along with the church interior. The church’s surroundings were scanned by aerial LIDAR in 2016 by the city’s geomatics service; the point cloud data have since been published as open data (https://data.strasbourg.eu/explore/dataset/odata3d_lidar, retrieved 24 January 2020). The exterior of the church was also recorded using drones in May 2016 to obtain larger-scale and thus more detailed data, while the interior was scanned using a TLS in April 2017.

- Valentino Castle, Turin, Italy (“Valentino”): The Castle of Valentino is a 17th-century edifice located in the city of Turin, Italy. It was used as the royal residence of the House of Savoy and was inscribed into the UNESCO World Heritage list in 1997. Today, the building is used by the architecture department of the Polytechnic University of Turin. The “Sala delle Colonne” (Room of Columns) inside the castle was used in this study. This point cloud was graciously shared by the Turin Polytechnic team for our experimental use. The Valentino dataset is used exclusively for the pillar detection part of the research.

- Haut-Koenigsbourg Castle, Orschwiller, France (“HK-Castle”): The Haut-Koenigsbourg is a medieval castle (dated to at least the 12th century) located in the Alsace region of France. Badly ruined during the Thirty Years’ War, it underwent a massive, if somewhat controversial, restoration from 1900 to 1908. The resulting reconstruction reflects the romantic and nationalistic ideas of the German Empire, which sponsored the restoration. The castle has been listed as a historical monument by the French Ministry of Culture since 1993. In this research, only a part of the castle’s timber beam frame structure, scanned using a TLS, was used to perform tests on the beam detection algorithm. The beams are mostly oblique and distributed in 3D space. They are of very regular shape and relatively unbroken [55]. The HK-Castle dataset is used exclusively for the beam detection part of the research.

4. The M_HERACLES Toolbox

4.1. Step 1: Using GIS to Aid the Segmentation of Large Area Point Clouds into Clusters of Objects

4.1.1. Rationale and Description of the Developed Approach

Algorithm 1: Semantic segmentation of heritage complexes aided by GIS data
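
As an illustration of the GIS-aided, “cookie-cutter” idea named in the algorithm title, the sketch below assigns each 3D point to the first 2D footprint polygon that contains its XY position. It is only a minimal sketch under stated assumptions: the function names `point_in_polygon` and `cookie_cut`, the footprint dictionary, and the sample coordinates are hypothetical, the footprints are assumed to be already buffered, and the ray-casting test stands in for whatever polygon test the toolbox actually uses.

```python
from typing import Dict, List, Sequence, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]


def point_in_polygon(x: float, y: float, polygon: Sequence[Point2D]) -> bool:
    """Ray-casting test: True if (x, y) lies inside the closed polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def cookie_cut(
    points: Sequence[Point3D], footprints: Dict[str, Sequence[Point2D]]
) -> Dict[str, List[Point3D]]:
    """Group points by the first footprint containing their XY position."""
    clusters: Dict[str, List[Point3D]] = {label: [] for label in footprints}
    clusters["unclassified"] = []
    for p in points:
        for label, polygon in footprints.items():
            if point_in_polygon(p[0], p[1], polygon):
                clusters[label].append(p)
                break
        else:
            # No footprint contains this point.
            clusters["unclassified"].append(p)
    return clusters


# Hypothetical footprints (assumed already buffered) and sample points.
footprints = {
    "building": [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)],
    "wall": [(20.0, 0.0), (30.0, 0.0), (30.0, 1.0), (20.0, 1.0)],
}
points = [(5.0, 5.0, 3.0), (25.0, 0.5, 1.0), (15.0, 5.0, 0.0)]
clusters = cookie_cut(points, footprints)
```

Because the test ignores the Z coordinate, everything above or below a footprint ends up in the same cluster, which is why overlapping vegetation would need separate handling.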

4.1.2. Results and Discussion

4.1.3. Comparison with a Commercial Solution

4.2. Step 2 (1): Automatic Segmentation and Classification of Structural Supports

4.2.1. Rationale and Algorithm Description

- The function first creates horizontal cross-section slices of the point cloud and takes the middle slice. This reduces the 3D problem to a 2D problem; a similar approach was undertaken in [44].

- A Euclidean-distance based region growing is performed on this middle slice, thereby creating “islands” of candidate pillars.

- The point cloud is then filtered using the convex hull area criterion to classify the “islands” as potential pillars, walls, or noise.

- From the list of potential pillars, a further division is made between “columns” and “non-columns”, depending on the circularity of each cross-section. Pillars with a circular cross-section are classified as potential columns, while the rest are identified as non-columns.

- A “cookie-cutter”-like method similar to the one explained in Section 4.1 is then implemented using the cross-section of each candidate pillar to segment the 3D point cloud. All points located within the buffer area are considered as part of the entity.

- After the cookie-cutter segmentation, some horizontally planar elements such as floors and/or ceilings might still linger in the cluster; a RANSAC plane fitting function is therefore implemented to identify these horizontal planes and suppress them.

- A final distance-based region growing is performed to eliminate any remaining noise. The output of the function is thus a structure of point cloud clusters, labeled as columns or non-columns.
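
The first four steps above (middle slice, region growing, convex hull area filter, circularity test) can be sketched in plain Python as follows. This is a hedged illustration rather than the M_HERACLES code: all function names, the area thresholds, the rule that small hulls are noise and large hulls are walls, and the use of the isoperimetric ratio 4πA/P² as the circularity measure are assumptions made for the example.

```python
import math
from typing import List, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]


def middle_slice(points: List[Point3D], thickness: float) -> List[Point2D]:
    """Project the points lying in a thin band around mid-height onto XY."""
    zs = [p[2] for p in points]
    z_mid = (min(zs) + max(zs)) / 2.0
    return [(p[0], p[1]) for p in points if abs(p[2] - z_mid) <= thickness / 2.0]


def region_grow(points: List[Point2D], max_dist: float) -> List[List[Point2D]]:
    """Group 2D points into islands; points closer than max_dist are linked."""
    unvisited = set(range(len(points)))
    islands = []
    while unvisited:
        seed = unvisited.pop()
        stack, members = [seed], [seed]
        while stack:
            i = stack.pop()
            near = [j for j in unvisited if math.dist(points[i], points[j]) <= max_dist]
            unvisited.difference_update(near)
            stack.extend(near)
            members.extend(near)
        islands.append([points[k] for k in members])
    return islands


def convex_hull(points: List[Point2D]) -> List[Point2D]:
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) < 3:
        return pts

    def cross(o: Point2D, a: Point2D, b: Point2D) -> float:
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    def half(seq: List[Point2D]) -> List[Point2D]:
        chain: List[Point2D] = []
        for p in seq:
            while len(chain) >= 2 and cross(chain[-2], chain[-1], p) <= 0:
                chain.pop()
            chain.append(p)
        return chain[:-1]

    return half(pts) + half(pts[::-1])


def area_and_perimeter(hull: List[Point2D]) -> Tuple[float, float]:
    """Shoelace area and perimeter of a closed polygon."""
    area = perimeter = 0.0
    for i in range(len(hull)):
        a, b = hull[i], hull[(i + 1) % len(hull)]
        area += a[0] * b[1] - b[0] * a[1]
        perimeter += math.dist(a, b)
    return abs(area) / 2.0, perimeter


def classify_island(island, min_area, max_area, min_circularity) -> str:
    """Assumed rule: tiny hull = noise, huge hull = wall, else test circularity."""
    hull = convex_hull(island)
    if len(hull) < 3:
        return "noise"
    area, perimeter = area_and_perimeter(hull)
    if area < min_area:
        return "noise"
    if area > max_area:
        return "wall"
    circularity = 4.0 * math.pi * area / perimeter ** 2  # equals 1.0 for a circle
    return "column" if circularity >= min_circularity else "non-column"


# Synthetic scene: a round column at (0, 0) and a square pillar at (5, 0).
def ring(cx, cy, r, n):
    return [(cx + r * math.cos(2 * math.pi * k / n), cy + r * math.sin(2 * math.pi * k / n))
            for k in range(n)]


def square(cx, cy, h, n):
    out = []
    for k in range(n):
        t = -h + 2 * h * k / n
        out += [(cx + t, cy - h), (cx + h, cy + t), (cx - t, cy + h), (cx - h, cy - t)]
    return out


outline = ring(0.0, 0.0, 0.3, 36) + square(5.0, 0.0, 0.3, 6)
cloud = [(x, y, 0.5 * level) for level in range(7) for (x, y) in outline]
islands = region_grow(middle_slice(cloud, thickness=0.2), max_dist=0.15)
labels = sorted(classify_island(i, 0.05, 2.0, 0.9) for i in islands)
```

The brute-force neighbour search is quadratic in the number of slice points; a spatial index would be the natural replacement for real scans.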

Algorithm 2: Pillar segmentation and classification
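
The horizontal plane suppression step of the pillar algorithm can be illustrated with a small RANSAC loop. The sketch below is an assumption-laden example, not the toolbox implementation: the function names, the distance tolerance, the 5 degree tilt limit, the iteration count, and the minimum inlier count are all hypothetical values chosen for the synthetic data.

```python
import math
import random
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]
Plane = Tuple[Tuple[float, float, float], float]


def plane_from_points(a: Point3D, b: Point3D, c: Point3D) -> Optional[Plane]:
    """Unit normal n and offset d of the plane n.x + d = 0, or None if collinear."""
    u = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
    v = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
    n = (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])
    norm = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
    if norm < 1e-12:
        return None
    n = (n[0] / norm, n[1] / norm, n[2] / norm)
    return n, -(n[0] * a[0] + n[1] * a[1] + n[2] * a[2])


def remove_horizontal_plane(
    points: List[Point3D],
    dist_tol: float = 0.02,
    max_tilt_deg: float = 5.0,
    iterations: int = 200,
    min_inliers: int = 50,
    seed: int = 0,
) -> Tuple[List[Point3D], List[Point3D]]:
    """Return (kept, removed) after suppressing the best near-horizontal plane."""
    rng = random.Random(seed)
    min_nz = math.cos(math.radians(max_tilt_deg))
    best: List[int] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        (nx, ny, nz), d = plane
        if abs(nz) < min_nz:
            continue  # candidate plane is too far from horizontal
        inliers = [i for i, p in enumerate(points)
                   if abs(nx * p[0] + ny * p[1] + nz * p[2] + d) <= dist_tol]
        if len(inliers) > len(best):
            best = inliers
    if len(best) < min_inliers:
        return list(points), []  # no convincing horizontal plane found
    drop = set(best)
    kept = [p for i, p in enumerate(points) if i not in drop]
    return kept, [points[i] for i in best]


# Synthetic cluster: a 15 x 15 floor patch at z = 0 plus a thin pillar shaft.
floor = [(0.25 * i, 0.25 * j, 0.0) for i in range(15) for j in range(15)]
shaft = [
    (1.75 + 0.3 * math.cos(math.pi * k / 4), 1.75 + 0.3 * math.sin(math.pi * k / 4), 0.2 * level)
    for level in range(1, 11)
    for k in range(8)
]
kept, removed = remove_horizontal_plane(floor + shaft)
```

Restricting candidates to near-horizontal normals is what keeps the vertical faces of a square pillar from being mistaken for a plane to suppress.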

4.2.2. Results and Analysis

4.3. From Edifices to Architectural Elements (2): Automatic Segmentation of Building Framework

- Adjacency constraint: the neighborhood or adjacency constraint was enforced to limit the candidate facets of each beam to facet clusters located adjacent to the current reference facet. In [72], this constraint was defined by the distance between the facet centroids. In M_HERACLES, we modified this approach by performing another octree-based region growing on the facets, this time enforcing a distance threshold between adjacent octrees from different facets. In this way, adjacency is defined by whether any edge of the facet cluster is near another one.

- Parallelism constraint: once the adjacency between the different facets is defined (via an adjacency matrix), the search for candidate beam facets is reduced to neighbors. Between neighbors, another geometric constraint on the parallelism of clusters was enforced. First, the major principal axis of each facet cluster was computed using PCA. Two facets are considered parallel if their first PCA components satisfy Equation (1), which compares the first PCA component of the first (or reference) facet cluster with the analogous vector of the second (or tested) facet cluster. Since the first adjacency constraint already limited the candidate facet clusters for a beam, this second geometric constraint was deemed enough to detect the beam.
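
The two constraints can be sketched as follows. Several simplifications are assumed and should not be read as the authors' method: adjacency is approximated by the minimum point-to-point gap between two facet clusters instead of octree-based region growing, the first PCA component is obtained by power iteration on the covariance matrix, and the parallelism test uses an assumed angular tolerance (`max_angle_deg`) because the exact form of Equation (1) is not reproduced in this text. All names and the synthetic facets are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]
Vector3 = Tuple[float, float, float]


def first_pca_component(points: Sequence[Point3D], iterations: int = 100) -> Vector3:
    """Dominant eigenvector of the 3x3 covariance matrix via power iteration.

    Caveat: the fixed start vector fails if it is exactly orthogonal to the
    dominant axis; a production version would use a proper eigen-solver.
    """
    n = len(points)
    mean = [sum(p[k] for p in points) / n for k in range(3)]
    centred = [[p[k] - mean[k] for k in range(3)] for p in points]
    cov = [[sum(c[i] * c[j] for c in centred) / n for j in range(3)] for i in range(3)]
    v = [1.0 / math.sqrt(3.0)] * 3
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(3)) for i in range(3)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    return (v[0], v[1], v[2])


def are_parallel(v1: Vector3, v2: Vector3, max_angle_deg: float = 5.0) -> bool:
    """Unit vectors are parallel if the angle between their axes is small.

    The absolute value makes the test insensitive to the sign of each axis.
    """
    dot = abs(sum(a * b for a, b in zip(v1, v2)))
    return dot >= math.cos(math.radians(max_angle_deg))


def are_adjacent(a: Sequence[Point3D], b: Sequence[Point3D], max_gap: float) -> bool:
    """Simplified adjacency: some pair of points is closer than max_gap."""
    return any(math.dist(p, q) <= max_gap for p in a for q in b)


def same_beam(a: Sequence[Point3D], b: Sequence[Point3D], max_gap: float = 0.05) -> bool:
    """Candidate facets must satisfy adjacency first, then parallelism."""
    if not are_adjacent(a, b, max_gap):
        return False
    return are_parallel(first_pca_component(a), first_pca_component(b))


def strip(origin, axis, side, n_long=21, n_wide=3) -> List[Point3D]:
    """Hypothetical test facet: a thin planar strip of regularly spaced points."""
    return [
        tuple(origin[k] + 0.1 * i * axis[k] + 0.05 * j * side[k] for k in range(3))
        for i in range(n_long)
        for j in range(n_wide)
    ]


# Two faces of one oblique beam sharing an edge, plus a distant vertical post.
facet_a = strip((0.0, 0.0, 0.0), (1.0, 1.0, 0.5), (1.0, -1.0, 0.0))
facet_b = strip((0.0, 0.0, 0.0), (1.0, 1.0, 0.5), (0.5, 0.5, -2.0))
facet_c = strip((10.0, 10.0, 0.0), (0.0, 0.0, 1.0), (1.0, 0.0, 0.0))
```

Checking adjacency before parallelism mirrors the order described above: the cheap neighbourhood test prunes the candidates so that PCA is only compared between neighbours.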

5. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Banning, E. Archaeological Survey; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2002; p. 273.

- Bryan, P.; Barber, D.; Mills, J. Towards a Standard Specification for Terrestrial Laser Scanning in Cultural Heritage—One Year on. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 35, 966–971.

- Remondino, F.; Rizzi, A. Reality-based 3D documentation of natural and cultural heritage sites-techniques, problems, and examples. Appl. Geomat. 2010, 2, 85–100.

- Grussenmeyer, P.; Hanke, K.; Streilein, A. Architectural Photogrammetry. In Digital Photogrammetry; Kasser, M., Egels, Y., Eds.; Taylor & Francis: Abingdon, UK, 2002; pp. 300–339.

- Murtiyoso, A.; Grussenmeyer, P.; Koehl, M.; Freville, T. Acquisition and Processing Experiences of Close Range UAV Images for the 3D Modeling of Heritage Buildings. In Digital Heritage. Progress in Cultural Heritage: Documentation, Preservation, and Protection: 6th International Conference, EuroMed 2016, Nicosia, Cyprus, October 31–November 5, 2016, Proceedings, Part I; Ioannides, M., Fink, E., Moropoulou, A., Hagedorn-Saupe, M., Fresa, A., Liestøl, G., Rajcic, V., Grussenmeyer, P., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 420–431.

- Hanke, K.; Grussenmeyer, P.; Grimm-Pitzinger, A.; Weinold, T. First Experiences with the Trimble GX Scanner. In Proceedings of the ISPRS Comm. V Symposium, Dresden, Germany, 25–27 September 2006; pp. 1–6.

- Lachat, E.; Landes, T.; Grussenmeyer, P. First Experiences with the Trimble SX10 Scanning Total Station for Building Facade Survey. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 405–412.

- Lachat, E.; Landes, T.; Grussenmeyer, P. Comparison of Point Cloud Registration Algorithms for Better Result Assessment—Towards an Open-Source Solution. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII-2, 551–558.

- Hillemann, M.; Weinmann, M.; Mueller, M.S.; Jutzi, B. Automatic extrinsic self-calibration of mobile mapping systems based on geometric 3D features. Remote Sens. 2019, 11, 1955.

- Barsanti, S.G.; Remondino, F.; Fernández-Palacios, B.J.; Visintini, D. Critical factors and guidelines for 3D surveying and modeling in Cultural Heritage. Int. J. Herit. Digit. Era 2014, 3, 141–158.

- Weinmann, M.; Jutzi, B.; Hinz, S.; Mallet, C. Semantic point cloud interpretation based on optimal neighborhoods, relevant features and efficient classifiers. ISPRS J. Photogramm. Remote Sens. 2015, 105, 286–304.

- Murphy, M.; McGovern, E.; Pavia, S. Historic Building Information Modelling—Adding intelligence to laser and image based surveys of European classical architecture. ISPRS J. Photogramm. Remote Sens. 2013, 76, 89–102.

- Murphy, M.; McGovern, E.; Pavia, S. Historic building information modeling (HBIM). Struct. Surv. 2009, 27, 311–327.

- Yang, X.; Koehl, M.; Grussenmeyer, P.; Macher, H. Complementarity of Historic Building Information Modelling and Geographic Information Systems. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B5, 437–443.

- Hassani, F. Documentation of cultural heritage techniques, potentials and constraints. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 207–214.

- Bedford, J. Photogrammetric Applications for Cultural Heritage; Historic England: Swindon, UK, 2017; p. 128.

- Fangi, G. Aleppo—Before and after. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII, 333–338.

- Fiorillo, F.; Jiménez Fernández-Palacios, B.; Remondino, F.; Barba, S. 3d Surveying and modeling of the Archaeological Area of Paestum, Italy. Virtual Archaeol. Rev. 2013, 4, 55–60.

- Herbig, U.; Stampfer, L.; Grandits, D.; Mayer, I.; Pöchtrager, M.; Setyastuti, A. Developing a Monitoring Workflow for the Temples of Java. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W15, 555–562.

- Grenzdörffer, G.J.; Naumann, M.; Niemeyer, F.; Frank, A. Symbiosis of UAS Photogrammetry and TLS for Surveying and 3D Modeling of Cultural Heritage Monuments - a Case Study About the Cathedral of St. Nicholas in the City of Greifswald. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-1/W4, 91–96.

- Murtiyoso, A.; Grussenmeyer, P.; Guillemin, S.; Prilaux, G. Centenary of the Battle of Vimy (France, 1917): Preserving the Memory of the Great War through 3D recording of the Maison Blanche souterraine. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-2/W2, 171–177.

- Farella, E.M.; Torresani, A.; Remondino, F. Quality Features for the Integration of Terrestrial and UAV Images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W9, 339–346.

- Munumer, E.; Lerma, J.L. Fusion of 3D data from different image-based and range-based sources for efficient heritage recording. In Proceedings of the 2015 Digital Heritage, Granada, Spain, 28 September–2 October 2015; Volume 304, pp. 83–86.

- Nguyen, A.; Le, B. 3D Point Cloud Segmentation: A survey. In Proceedings of the 2013 6th IEEE Conference on Robotics, Automation and Mechatronics (RAM), Manila, Philippines, 12–15 November 2013; pp. 225–230.

- Maalek, R.; Lichti, D.D.; Ruwanpura, J.Y. Automatic recognition of common structural elements from point clouds for automated progress monitoring and dimensional quality control in reinforced concrete construction. Remote Sens. 2019, 11, 1102.

- Bassier, M.; Vergauwen, M.; Van Genechten, B. Automated Classification of Heritage Buildings for As-Built BIM using Machine Learning Techniques. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-2/W2, 25–30.

- Grilli, E.; Menna, F.; Remondino, F. A Review of Point Clouds Segmentation and Classification Algorithms. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-2/W3, 339–344.

- Bassier, M.; Bonduel, M.; Genechten, B.V.; Vergauwen, M. Octree-Based Region Growing and Conditional Random Fields. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII, 28–29.

- Vo, A.v.; Truong-Hong, L.; Laefer, D.F.; Bertolotto, M. Octree-based region growing for point cloud segmentation. ISPRS J. Photogramm. Remote Sens. 2015, 104, 88–100.

- Boulaassal, H.; Landes, T.; Grussenmeyer, P.; Kurdi, F. Automatic segmentation of building facades using terrestrial laser data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2007, XXXVI, 65–70.

- Sanchez, V.; Zakhor, A. Planar 3D modeling of building interiors from point cloud data. In Proceedings of the International Conference on Image Processing, ICIP, Orlando, FL, USA, 30 September–3 October 2012; pp. 1777–1780.

- Liu, W.; Sun, J.; Li, W.; Hu, T.; Wang, P. Deep learning on point clouds and its application: A survey. Sensors 2019, 19, 4188.

- Antonopoulos, A.; Antonopoulou, S. 3D survey and BIM-ready modeling of a Greek Orthodox Church in Athens. In Proceedings of the IMEKO International Conference on Metrology for Archaeology and Cultural Heritage, Lecce, Italy, 23–25 October 2017.

- Ros, G.; Sellart, L.; Materzynska, J.; Vazquez, D.; Lopez, A.M. The SYNTHIA Dataset: A Large Collection of Synthetic Images for Semantic Segmentation of Urban Scenes. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3234–3243.

- Grilli, E.; Özdemir, E.; Remondino, F. Application of Machine and Deep Learning Strategies for the Classification of Heritage Point Clouds. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-4/W18, 447–454.

- Malinverni, E.S.; Pierdicca, R.; Paolanti, M.; Martini, M.; Morbidoni, C.; Matrone, F.; Lingua, A. Deep learning for semantic segmentation of point cloud. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W15, 735–742.

- Wang, Z.; Zhang, L.; Fang, T.; Mathiopoulos, P.T.; Tong, X.; Qu, H.; Xiao, Z.; Li, F.; Chen, D. A multiscale and hierarchical feature extraction method for terrestrial laser scanning point cloud classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2409–2425.

- Grilli, E.; Remondino, F. Classification of 3D digital heritage. Remote Sens. 2019, 11, 847.

- Rizaldy, A.; Persello, C.; Gevaert, C.M.; Oude Elberink, S.J. Fully Convolutional Networks for Ground Classification from LiDAR Point Clouds. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, IV-2, 231–238.

- Macher, H.; Landes, T.; Grussenmeyer, P. Point clouds segmentation as base for as-built BIM creation. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, II-5/W3, 191–197.

- Poux, F.; Neuville, R.; Nys, G.A.; Billen, R. 3D point cloud semantic modeling: Integrated framework for indoor spaces and furniture. Remote Sens. 2018, 10, 1412.

- Lu, Y.C.; Shih, T.Y.; Yen, Y.N. Research on Historic Bim of Built Heritage in Taiwan - a Case Study of Huangxi Academy. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII-2, 615–622.

- Drap, P.; Papini, O.; Pruno, E.; Nucciotti, M.; Vannini, G. Ontology-based photogrammetry survey for medieval archaeology: Toward a 3D geographic information system (GIS). Geosciences 2017, 7, 93.

- Macher, H.; Landes, T.; Grussenmeyer, P. From Point Clouds to Building Information Models: 3D Semi-Automatic Reconstruction of Indoors of Existing Buildings. Appl. Sci. 2017, 7, 1030.

- Riveiro, B.; Dejong, M.J.; Conde, B. Automated processing of large point clouds for structural health monitoring of masonry arch bridges. Autom. Constr. 2016, 72, 258–268.

- Murtiyoso, A.; Grussenmeyer, P.; Suwardhi, D.; Awalludin, R. Multi-Scale and Multi-Sensor 3D Documentation of Heritage Complexes in Urban Areas. ISPRS Int. J. Geo-Inf. 2018, 7, 483.

- Dore, C.; Murphy, M. Semi-Automatic Modelling of Building Façades With Shape Grammars Using Historic Building Information Modelling. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, XL-5/W1, 57–64.

- Pu, S.; Vosselman, G. Automatic extraction of building features from terrestrial laser scanning. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, 36, 25–27.

- Tang, P.; Huber, D.; Akinci, B.; Lipman, R.; Lytle, A. Automatic reconstruction of as-built building information models from laser-scanned point clouds: A review of related techniques. Autom. Constr. 2010, 19, 829–843.

- Macher, H.; Landes, T.; Grussenmeyer, P. Validation of Point Clouds Segmentation Algorithms through their Application to Several Case Studies for Indoor Building Modelling. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI, 12–19.

- Dore, C.; Murphy, M.; McCarthy, S.; Brechin, F.; Casidy, C.; Dirix, E. Structural simulations and conservation analysis-historic building information model (HBIM). Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-5-W4, 351–357.

- Oreni, D.; Brumana, R.; Della Torre, S.; Banfi, F.; Barazzetti, L.; Previtali, M. Survey turned into HBIM: The restoration and the work involved concerning the Basilica di Collemaggio after the earthquake (L’Aquila). ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, II-5, 267–273.

- Elizabeth, O.; Prizeman, C. HBIM and matching techniques: Considerations for late nineteenth- and early twentieth-century buildings. J. Archit. Conserv. 2015, 21, 145–159.

- Oreni, D.; Brumana, R.; Georgopoulos, A.; Cuca, B. HBIM for Conservation and Management of Built Heritage: Towards a Library of Vaults and Wooden Beam Floors. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, II-5/W1, 215–221.

- Yang, X.; Koehl, M.; Grussenmeyer, P. Parametric modeling of as-built beam framed structure in bim environment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.—ISPRS Arch. 2017, 42, 651–657.

- Murtiyoso, A.; Grussenmeyer, P. Point cloud segmentation and semantic annotation aided by GIS data for heritage complexes. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII, 523–528.

- Fabbri, S.; Sauro, F.; Santagata, T.; Rossi, G.; De Waele, J. High-resolution 3D mapping using terrestrial laser scanning as a tool for geomorphological and speleogenetical studies in caves: An example from the Lessini mountains (North Italy). Geomorphology 2017, 280, 16–29.

- Fletcher, R.; Johnson, I.; Bruce, E.; Khun-Neay, K. Living with heritage: Site monitoring and heritage values in Greater Angkor and the Angkor World Heritage Site, Cambodia. World Archaeol. 2007, 39, 385–405.

- Seker, D.Z.; Alkan, M.; Kutoglu, H.; Akcin, H.; Kahya, Y. Development of a GIS Based Information and Management System for Cultural Heritage Site; Case Study of Safranbolu. In Proceedings of the FIG Congress 2010, Sydney, Australia, 11–16 April 2010; Number 1-10.

- Kastuari, A.; Suwardhi, D.; Hanan, H.; Wikantika, K. State of the Art of the Landscape Architecture Spatial Data Model From a Geospatial Perspective. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, IV-2/W1, 20–21.

- Omidalizarandi, M.; Saadatseresht, M. Segmentation and classification of point clouds from dense aerial image matching. Int. J. Multimed. Its Appl. 2013, 5, 33–51.

- Spina, S.; Debattista, K.; Bugeja, K.; Chalmers, A. Point Cloud Segmentation for Cultural Heritage Sites. In Proceedings of the VAST11: The 12th International Symposium on Virtual Reality, Archaeology and Intelligent Cultural Heritage, Prato, Italy, 18–21 October 2011; pp. 41–48.

- Kim, E.; Medioni, G. Urban scene understanding from aerial and ground LIDAR data. Mach. Vis. Appl. 2011, 22, 691–703.

- Liu, C.J.; Krylov, V.; Dahyot, R. 3D point cloud segmentation using GIS. In Proceedings of the 20th Irish Machine Vision and Image Processing Conference, Belfast, UK, 29–31 August 2018; pp. 41–48.

- Kaiser, P.; Wegner, J.D.; Lucchi, A.; Jaggi, M.; Hofmann, T.; Schindler, K. Learning Aerial Image Segmentation from Online Maps. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6054–6068.

- Zhang, W.; Qi, J.; Wan, P.; Wang, H.; Xie, D.; Wang, X.; Yan, G. An easy-to-use airborne LiDAR data filtering method based on cloth simulation. Remote Sens. 2016, 8, 501.

- Murtiyoso, A.; Grussenmeyer, P. Automatic Heritage Building Point Cloud Segmentation and Classification Using Geometrical Rules. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W15, 821–827.

- Luo, D.; Wang, Y. Rapid extracting pillars by slicing point clouds. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, XXXVII, 215–218.

- da Costa Salavessa, M.E. Historical Timber-Framed Buildings: Typology and Knowledge. J. Civ. Eng. Archit. 2012, 6, 151–166.

- Menou, J.C. Requiem pour la charpente de Notre-Dame de Paris. Commentaire 2019, 166, 395–397.

- Pöchtrager, M.; Styhler-Aydın, G.; Döring-Williams, M.; Pfeifer, N. Digital reconstruction of historic roof structures: Developing a workflow for a highly automated analysis. Virtual Archaeol. Rev. 2018, 9, 21.

- Pöchtrager, M.; Styhler-Aydın, G.; Döring-Williams, M.; Pfeifer, N. Automated reconstruction of historic roof structures from point clouds - development and examples. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-2/W2, 195–202.

- Rusu, R.B.; Cousins, S. 3D is here: Point cloud library. In Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 1–4.

- Dewez, T.J.; Girardeau-Montaut, D.; Allanic, C.; Rohmer, J. Facets: A cloudcompare plugin to extract geological planes from unstructured 3d point clouds. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.—ISPRS Arch. 2016, 41, 799–804.

- Semler, Q.; Suwardhi, D.; Alby, E.; Murtiyoso, A.; Macher, H. Registration of 2D Drawings on a 3D Point Cloud As a Support for the Modeling of Complex Architectures. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W15, 1083–1087.

| Object | Point Number (Manual) | Point Number (Auto) | Misclassed (Overclassed) | Misclassed (Unclassed) | True Positive | %Unclassed | %P | %R | %F1 |
|---|---|---|---|---|---|---|---|---|---|
| BUILDINGS1 | 703 500 | 680 386 | 10 592 | 33 706 | 669 794 | 4.79 | 98.44 | 95.21 | 96.80 |
| BUILDINGS2 | 643 350 | 633 897 | 6 630 | 16 083 | 627 267 | 2.50 | 98.95 | 97.50 | 98.22 |
| BUILDINGS3 | 317 459 | 300 283 | 9 873 | 27 049 | 290 410 | 8.52 | 96.71 | 91.48 | 94.02 |
| BUILDINGS4 | 58 532 | 60 838 | 8 296 | 5 990 | 52 542 | 10.23 | 86.36 | 89.77 | 88.03 |
| BUILDINGS5 | 52 026 | 58 047 | 7 415 | 1 394 | 50 632 | 2.68 | 87.23 | 97.32 | 92.00 |
| GATES1 | 101 196 | 95 754 | 4 017 | 9 459 | 91 737 | 9.35 | 95.80 | 90.65 | 93.16 |
| GATES2 | 151 040 | 146 133 | 4 955 | 9 862 | 141 178 | 6.53 | 96.61 | 93.47 | 95.01 |
| WALLS1 | 216 951 | 151 520 | 683 | 66 114 | 150 837 | 30.47 | 99.55 | 69.53 | 81.87 |
| WALLS2 | 417 768 | 351 818 | 3 168 | 69 118 | 348 650 | 16.54 | 99.10 | 83.46 | 90.61 |
| WALLS3 | 84 516 | 81 520 | 5 762 | 8 758 | 75 758 | 10.36 | 92.93 | 89.64 | 91.25 |
| WALLS4 | 64 877 | 56 804 | 4 595 | 12 668 | 52 209 | 19.53 | 91.91 | 80.47 | 85.81 |
| WALLS5 | 63 014 | 34 752 | 1 814 | 30 076 | 32 938 | 47.73 | 94.78 | 52.27 | 67.38 |
| WALLS6 | 177 399 | 175 862 | 13 371 | 14 908 | 162 491 | 8.40 | 92.40 | 91.60 | 91.99 |
| Mean | | | | | | 13.66 | 94.68 | 86.34 | 89.71 |
| Median | | | | | | 6.53 | 95.80 | 90.65 | 91.99 |
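
The percentage columns of this table are consistent with the usual definitions of precision (true positives over automatically classified points), recall (true positives over manually classified points), and F1 score (their harmonic mean), with %Unclassed taken relative to the manual point count. The short sketch below recomputes the BUILDINGS1 row under that assumption; the function name `segmentation_scores` is hypothetical.

```python
from typing import Dict


def segmentation_scores(manual: int, auto: int, true_positive: int) -> Dict[str, float]:
    """Recompute the tabulated percentages from the raw point counts."""
    unclassed = manual - true_positive      # reference points the algorithm missed
    precision = true_positive / auto
    recall = true_positive / manual
    f1 = 2.0 * precision * recall / (precision + recall)
    return {
        "%Unclassed": round(100.0 * unclassed / manual, 2),
        "%P": round(100.0 * precision, 2),
        "%R": round(100.0 * recall, 2),
        "%F1": round(100.0 * f1, 2),
    }


# BUILDINGS1: 703 500 manual points, 680 386 automatic points, 669 794 true positives.
scores = segmentation_scores(manual=703_500, auto=680_386, true_positive=669_794)
print(scores)  # {'%Unclassed': 4.79, '%P': 98.44, '%R': 95.21, '%F1': 96.8}
```

The overclassed count follows in the same way as the automatic count minus the true positives (680 386 − 669 794 = 10 592).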

| Object | Point Number (Manual) | Point Number (Auto) | Misclassed (Overclassed) | Misclassed (Unclassed) | True Positive | %Unclassed | %P | %R | %F1 |
|---|---|---|---|---|---|---|---|---|---|
| COLLFOCH | 34 011 | 32 384 | 217 | 1 844 | 32 167 | 5.69 | 99.33 | 94.58 | 96.90 |
| STPIERRE | 36 858 | 34 960 | 757 | 2 655 | 34 203 | 7.59 | 97.83 | 92.80 | 95.25 |
| DIRIMPOTS | 52 520 | 56 586 | 6 099 | 2 033 | 50 487 | 3.59 | 89.22 | 96.13 | 92.55 |
| PLSJUSTICE | 81 074 | 69 559 | 637 | 12 152 | 68 922 | 17.47 | 99.08 | 85.01 | 91.51 |
| PLSFETES | 37 663 | 35 823 | 0 | 1 840 | 35 823 | 5.14 | 100.00 | 95.11 | 97.50 |
| PLSRHIN | 84 833 | 74 738 | 1 026 | 11 121 | 73 712 | 14.88 | 98.63 | 86.89 | 92.39 |
| Mean | | | | | | 9.06 | 97.35 | 91.75 | 94.35 |
| Median | | | | | | 6.64 | 98.86 | 93.69 | 93.90 |

| Class | %Precision (Metashape) | %Precision (M_HERACLES) | %Recall (Metashape) | %Recall (M_HERACLES) | %F1 Score (Metashape) | %F1 Score (M_HERACLES) |
|---|---|---|---|---|---|---|
| Buildings | 51.44 | 95.40 | 73.49 | 77.14 | 60.52 | 85.30 |
| Walls | 6.48 | 96.61 | 3.17 | 77.21 | 4.26 | 85.83 |
| Trees | 92.15 | 88.23 | 85.12 | 74.80 | 88.50 | 80.96 |
| Median | 51.44 | 95.40 | 73.49 | 77.14 | 60.52 | 85.30 |

| Object | Point Number (Manual) | Point Number (Auto) | True Positive | Misclassed (Overclassed) | Misclassed (Unclassed) | %Unclassed | %P | %R | %F1 |
|---|---|---|---|---|---|---|---|---|---|
| K01 | 2 963 | 2 106 | 2 106 | 0 | 857 | 28.92 | 100.00 | 71.08 | 83.09 |
| K02 | 2 543 | 1 819 | 1 815 | 4 | 728 | 28.63 | 99.78 | 71.37 | 83.22 |
| K03 | 2 577 | 1 787 | 1 783 | 4 | 794 | 30.81 | 99.78 | 69.19 | 81.71 |
| K04 | 2 379 | 1 618 | 1 618 | 0 | 761 | 31.99 | 100.00 | 68.01 | 80.96 |
| K05 | 3 698 | 2 340 | 2 340 | 0 | 1 358 | 36.72 | 100.00 | 63.28 | 77.51 |
| K06 | 3 440 | 2 158 | 2 158 | 0 | 1 282 | 37.27 | 100.00 | 62.73 | 77.10 |
| K07 | 3 646 | 2 282 | 2 282 | 0 | 1 364 | 37.41 | 100.00 | 62.59 | 76.99 |
| K08 | 3 361 | 2 117 | 2 117 | 0 | 1 244 | 37.01 | 100.00 | 62.99 | 77.29 |
| Mean | | | | | | 33.60 | 99.94 | 66.40 | 79.73 |
| Median | | | | | | 34.36 | 100.00 | 65.64 | 79.23 |

| Object | Point Number (Manual) | Point Number (Auto) | True Positive | Misclassed (Overclassed) | Misclassed (Unclassed) | %Unclassed | %P | %R | %F1 |
|---|---|---|---|---|---|---|---|---|---|
| S01 | 72 587 | 54 995 | 47 709 | 7 286 | 24 878 | 34.27 | 86.75 | 65.73 | 74.79 |
| S02 | 66 298 | 64 952 | 52 922 | 12 030 | 13 376 | 20.18 | 81.48 | 79.82 | 80.64 |
| S03 | 74 430 | 55 979 | 50 435 | 5 544 | 23 995 | 32.24 | 90.10 | 67.76 | 77.35 |
| S04 | 71 667 | 59 277 | 43 647 | 15 630 | 28 020 | 39.10 | 73.63 | 60.90 | 66.67 |
| S05 | 64 893 | 54 969 | 54 343 | 626 | 10 550 | 16.26 | 98.86 | 83.74 | 90.68 |
| S06 | 66 678 | 61 804 | 50 018 | 11 786 | 16 660 | 24.99 | 80.93 | 75.01 | 77.86 |
| S07 | 67 316 | 75 062 | 51 996 | 23 066 | 15 320 | 22.76 | 69.27 | 77.24 | 73.04 |
| S08 | 60 165 | 49 212 | 35 814 | 13 398 | 24 351 | 40.47 | 72.77 | 59.53 | 65.49 |
| Mean | | | | | | 28.78 | 81.72 | 71.22 | 75.81 |
| Median | | | | | | 28.61 | 81.20 | 71.39 | 76.07 |

| Object | Point Number (Manual) | Point Number (Auto) | Misclassed (Overclassed) | Misclassed (Unclassed) | True Positive | %Unclassed | %P | %R | %F1 |
|---|---|---|---|---|---|---|---|---|---|
| V01 | 35 370 | 46 666 | 15 594 | 4 298 | 31 072 | 12.15 | 66.58 | 87.85 | 75.75 |
| V02 | 35 845 | 47 358 | 15 744 | 4 231 | 31 614 | 11.80 | 66.76 | 88.20 | 75.99 |
| V03 | 39 169 | 51 853 | 17 333 | 4 649 | 34 520 | 11.87 | 66.57 | 88.13 | 75.85 |
| V04 | 40 155 | 51 923 | 17 010 | 5 242 | 34 913 | 13.05 | 67.24 | 86.95 | 75.83 |
| V05 | 38 288 | 52 623 | 17 575 | 3 240 | 35 048 | 8.46 | 66.60 | 91.54 | 77.10 |
| V06 | 39 689 | 53 016 | 17 406 | 4 079 | 35 610 | 10.28 | 67.17 | 89.72 | 76.82 |
| Mean | | | | | | 11.27 | 66.82 | 88.73 | 76.23 |
| Median | | | | | | 11.84 | 66.68 | 88.16 | 75.92 |

| Object | Point Number (Manual) | Point Number (Auto) | Misclassed (Overclassed) | Misclassed (Unclassed) | True Positive | %Unclassed | %P | %R | %F1 |
|---|---|---|---|---|---|---|---|---|---|
| Beam1 | 15 036 | 10 960 | 608 | 4 684 | 10 352 | 42.74 | 94.45 | 68.85 | 79.64 |
| Beam2 | 57 986 | 43 826 | 0 | 14 160 | 43 826 | 32.31 | 100.00 | 75.58 | 86.09 |
| Beam3 | 28 789 | 26 141 | 2 355 | 5 003 | 23 786 | 19.14 | 90.99 | 82.62 | 86.60 |
| Mean | | | | | | 31.40 | 95.15 | 75.68 | 84.11 |
| Median | | | | | | 32.31 | 94.45 | 75.58 | 86.09 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Murtiyoso, A.; Grussenmeyer, P. Virtual Disassembling of Historical Edifices: Experiments and Assessments of an Automatic Approach for Classifying Multi-Scalar Point Clouds into Architectural Elements. Sensors 2020, 20, 2161. https://doi.org/10.3390/s20082161
