1. Introduction

Emerging infectious diseases are serious threats to global health. During the last two decades, travel-related outbreaks of infectious diseases, such as severe acute respiratory syndrome in 2003 and the novel coronavirus (2019-nCoV) in 2019, have occurred around the world [1,2]. To contain the outbreak of emerging viral diseases, infrared thermography (IRT) has been applied for fever screening of passengers with suspected infection at many international quarantine stations [3,4,5]. IRT is an effective method for detecting elevated body temperature. However, monitoring body temperature alone is insufficient for accurate detection of infected patients, because the facial surface temperature measured by IRT can be affected by many factors, such as the use of antipyretics [6]. The positive predictive values of fever-based screening using IRT vary from 3.5% to 65.4%, indicating limited efficacy in detecting symptomatic passengers [7].

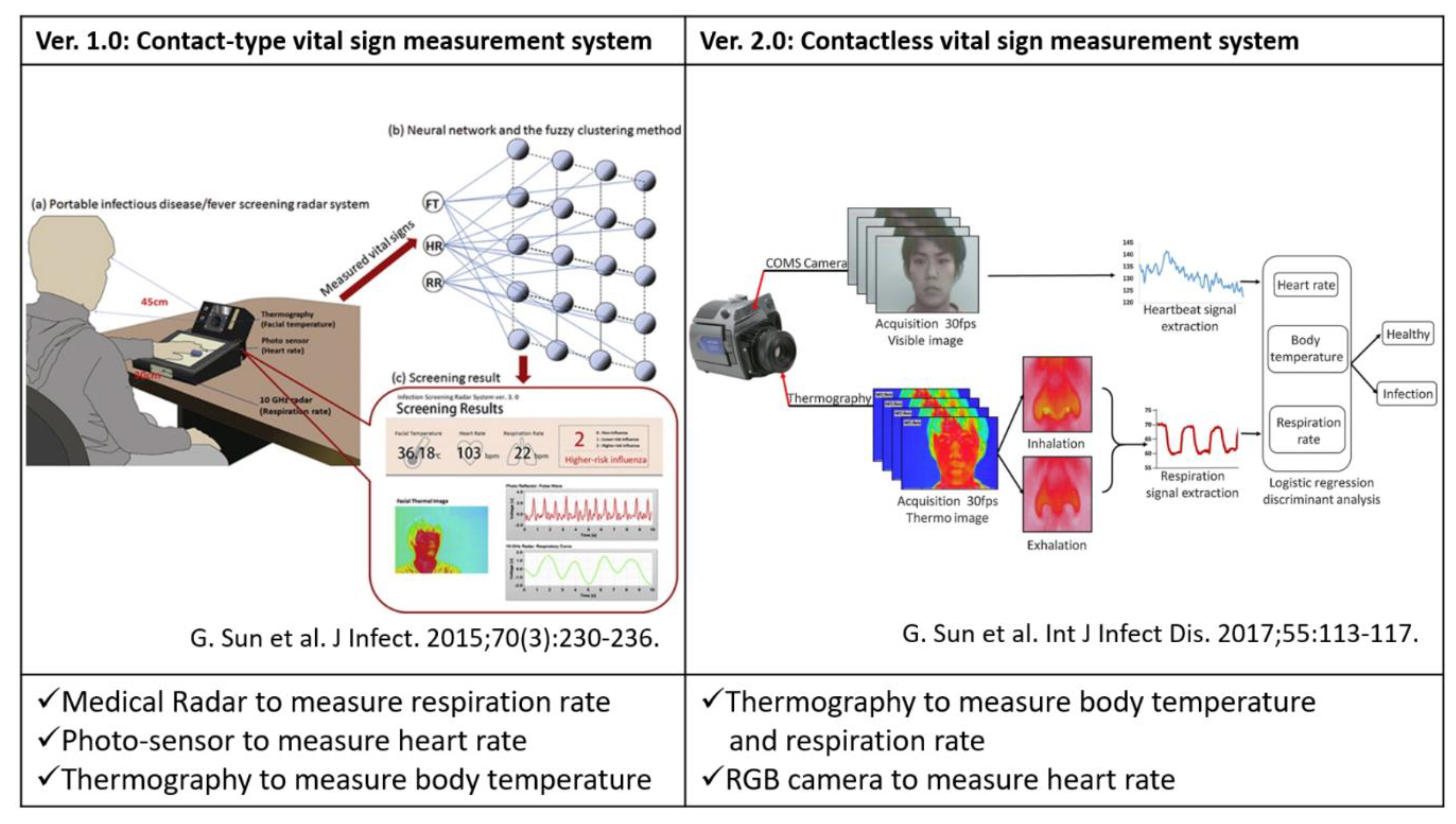

To overcome the drawbacks of fever-based screening, we previously proposed a screening method based on simultaneously measuring three vital signs, namely body temperature, heart rate (HR) and respiration rate (RR), using multiple sensors: medical radar, a thermograph, a photo-sensor and RGB cameras [8,9,10]. These three vital signs are included in the criteria for systemic inflammatory response syndrome [11]. Symptoms of most infectious diseases tend to include an elevated HR and RR; hence, screening that combines these three vital signs is expected to improve the precision of detecting patients with such symptoms. Therefore, we developed contact and contactless vital sign measurement systems to investigate the feasibility of our screening method (Figure 1). In brief, the contact-type system (Ver.1.0) comprises three sensors: a medical radar, a photo-sensor and a thermograph [8]. The medical radar detects tiny body surface movements caused by respiration, the thermograph measures the highest temperature of the face and the photo-sensor monitors pulse waves to calculate the HR. To enable a completely contactless system (Ver.2.0), we combined RGB and thermal images to extract multiple vital signs from the face [10]. The RR is measured by monitoring the temperature changes around the nasal and oral areas that accompany inspiration and expiration, and the RGB camera measures the blood volume pulse (BVP) through variations in light absorption in the facial area. We tested the systems on patients with seasonal influenza and dengue fever, and the results indicated a sensitivity ranging from 81.5% to 98% [12].

In this study, to promote the widespread use of our vital sign-based infection screening method, we enhanced the Ver.2.0 contactless system to make it stable, reliable and real-time. We improved the stability of HR and RR measurement by using an RGB-thermal image fusion approach for highly reliable tracking of the facial region of interest (ROI) [13]. Moreover, we focused on improving the robustness of extracting the BVP and respiration signals from the RGB camera and IRT. We proposed signal processing methods that reconstruct the BVP waveform from all RGB channels and select nasal or oral breathing based on a signal quality index (SQI), both to improve the signal-to-noise ratio. To enable real-time operation, we implemented the multiple signal classification (MUSIC) algorithm to estimate the pseudo-spectrum from short (15 s) time-domain BVP and respiration signals [14]. Finally, we tested the system on 22 healthy subjects and 41 patients with influenza-like symptoms (28 diagnosed influenza patients and 13 undiagnosed patients).

The remainder of this paper is organized as follows. The Section “Materials and Methods” gives an overview of our system and describes the proposed signal and image processing methods. The Section “Results” compares our contactless system with contact-type reference devices and reports the screening performance in detecting influenza patients using a support vector machine (SVM). The Section “Discussion and Conclusions” discusses our findings and draws conclusions.

2. Materials and Methods

2.1. Related Work on Vision-Based Clinical Screening

Vision-based clinical screening using RGB and thermal image sensors has recently attracted increasing attention in academia and industry. Ming-Zher Poh et al. developed a robust method for measuring HR and heart rate variability (HRV) from digital RGB video recordings of skin color changes [15]. He Liu et al. proposed a method using dual cameras to estimate arterial oxygen saturation [16]. Philips Research launched an app called “Vital Signs Camera” in 2012. Moreover, thermal camera-based approaches have been widely applied in clinical screening and research, such as fever screening and human pose estimation [5]. For such applications, image processing methods for keypoint detection have been proposed using stacked hourglass networks and feature boosting networks [17,18,19].

2.2. Overview of the Infection Screening System Using RGB-Thermal Image Sensors

In our previous work, we developed an infection screening system based on dual image sensors to predict the likelihood of infection [10]. It comprises an RGB camera and an IRT for measuring HR, RR and body temperature. We used a DFK23U618 (The Imaging Source Co. Ltd., Germany) as the RGB camera and a FLIR A315 (FLIR Systems, Inc., USA) as the IRT. The visible video was recorded at 15 frames per second (fps) with a resolution of 640 × 480 pixels, and the thermal video was recorded at 15 fps with a resolution of 320 × 240 pixels. The RGB camera senses fluctuations in hemoglobin light absorption caused by volumetric changes in facial blood vessels, from which heartbeat signals are obtained. The IRT detects the temperature changes between inhalation and exhalation in the nasal or oral area and also measures the facial skin temperature. These multiple vital signs are used to distinguish patients with influenza from healthy subjects.

Figure 2 shows an overview of the infection screening system.

2.3. Sensor Fusion Combining RGB Sensor and IRT for ROI Detection

Stable measurement of body temperature and RR using an IRT requires accurate ROI detection of facial landmarks (i.e., face, nose and mouth), because temperature is estimated over the facial area and respiration is observed at the nose and mouth. In RGB images, facial landmarks can be detected precisely using existing methods [20]. Therefore, we introduced a sensor fusion method that determines the facial landmarks in the thermal video from those detected in the RGB video.

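The facial landmarks in the thermal video are obtained by mapping the RGB image coordinates of the nose and mouth, detected with the open-source library “dlib”, to thermal image coordinates through a homography. The homography between the images is given by Equation (1):

$$
s \begin{bmatrix} x_{\mathrm{th}} \\ y_{\mathrm{th}} \\ 1 \end{bmatrix} = H \begin{bmatrix} x_{\mathrm{rgb}} \\ y_{\mathrm{rgb}} \\ 1 \end{bmatrix}, \qquad H = \begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{bmatrix} \qquad (1)
$$

where $(x_{\mathrm{rgb}}, y_{\mathrm{rgb}})$ and $(x_{\mathrm{th}}, y_{\mathrm{th}})$ are image coordinates in the RGB and thermal images, respectively, and $s$ is a scale factor. Each $h_{ij}$ in Equation (1) is an element of the homography matrix H.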
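Applying Equation (1) to a landmark amounts to appending a 1 to the RGB coordinate, multiplying by H and dividing by the third component to remove the scale factor s. The following NumPy sketch illustrates this step; the function name `map_landmarks` and the example matrix are hypothetical and are not values from our system.

```python
import numpy as np


def map_landmarks(H, pts_rgb):
    """Map RGB landmark coordinates to thermal coordinates with Equation (1).

    H       : (3, 3) homography matrix.
    pts_rgb : (N, 2) array of (x_rgb, y_rgb) landmark coordinates.
    Returns an (N, 2) array of (x_th, y_th) coordinates.
    """
    pts = np.asarray(pts_rgb, dtype=float)
    H = np.asarray(H, dtype=float)
    # Homogeneous coordinates: each row becomes (x_rgb, y_rgb, 1).
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    # Each row of `mapped` equals s * (x_th, y_th, 1).
    mapped = homog @ H.T
    # Divide by the third component to remove the scale factor s.
    return mapped[:, :2] / mapped[:, 2:3]


# Hypothetical H: halve the resolution (640 x 480 -> 320 x 240) and shift slightly.
H_example = np.array([[0.5, 0.0, 4.0],
                      [0.0, 0.5, -2.0],
                      [0.0, 0.0, 1.0]])
nose_and_mouth_rgb = np.array([[320.0, 240.0], [320.0, 300.0]])
# Expected thermal coordinates: (164, 118) and (164, 148).
print(map_landmarks(H_example, nose_and_mouth_rgb))
```

When the third row of H is not (0, 0, 1), the division by the third component is what makes the mapping projective rather than affine.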

Figure 3 shows a flowchart of the image processing used to estimate the homography matrix H. The estimation is based on matching the face profile (silhouette) between the RGB and thermal images. First, from the RGB and thermal images shown in Figure 3a,b, the face region is extracted using the GrabCut method [21] in OpenCV to obtain the profile images shown in Figure 3c. Corresponding coordinates between the images are then found by computing oriented FAST and rotated BRIEF (ORB) features of the two profile images and performing an exhaustive (brute-force) search for matching points among the detected keypoints [22]. The homography matrix is estimated from the matched coordinate pairs using the random sample consensus (RANSAC) method [23]. Finally, the facial landmarks in the thermal image (Figure 3e) are obtained by applying the homography matrix to the facial landmarks detected in the RGB image (Figure 3d).
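The robust estimation step can be sketched in NumPy as follows. In practice, OpenCV's `cv2.findHomography(src, dst, cv2.RANSAC, 3.0)` performs this estimation; the sketch instead spells out a basic direct linear transform (DLT) inside a RANSAC loop so that the logic is visible. The 3-pixel threshold, the iteration count and the function names are illustrative assumptions rather than the settings of our system, and the coordinate normalization usually added to the DLT is omitted for brevity.

```python
import numpy as np


def dlt_homography(src, dst):
    """Estimate H (dst ~ H @ src) from >= 4 point pairs by direct linear transform."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # H is the right singular vector belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def project(H, pts):
    """Apply H to (N, 2) points and return the (N, 2) projected points."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def ransac_homography(src, dst, n_iter=500, thresh=3.0, seed=0):
    """Fit H to matched keypoints that contain outlier matches.

    Returns the H refitted on all inliers and a boolean inlier mask.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    with np.errstate(divide="ignore", invalid="ignore"):
        for _ in range(n_iter):
            # Minimal sample: four correspondences define a homography.
            idx = rng.choice(len(src), size=4, replace=False)
            H = dlt_homography(src[idx], dst[idx])
            # Reprojection error of every match under this candidate H.
            err = np.linalg.norm(project(H, src) - dst, axis=1)
            inliers = err < thresh  # NaN errors from degenerate samples count as outliers
            if inliers.sum() > best.sum():
                best = inliers
    if best.sum() < 4:
        raise ValueError("not enough inliers to estimate a homography")
    # Refit on the largest consensus set.
    return dlt_homography(src[best], dst[best]), best
```

The matched ORB keypoint coordinates of the two profile images would be passed as `src` (RGB) and `dst` (thermal); incorrect matches end up outside the returned inlier mask and do not influence the final H.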

2.4. RGB Sensor Processing for HR Estimation Using Tapered Window, Signal Reconstruction based on Softsig and MUSIC Algorithm

The basic method of HR estimation using an RGB camera has been described previously [15]. The RGB camera senses tiny color fluctuations in the facial skin together with noise: the observed RGB time series contain components of the heartbeat, motion artifacts and noise from other light sources. To remove the noise components, methods such as independent component analysis (ICA) and soft signature-based extraction (Softsig) [24] are used. In this study, we introduce a tapered window and a signal reconstruction method into HR estimation to obtain the stable measurement required by the infection screening system. The signal reconstruction method builds on Softsig to demix the heartbeat signal.

Figure 4 shows an overview of HR estimation in this system.

A tapered window, a general-purpose window function, was applied to the detected facial ROI (Figure 4b). Near the edges of the facial ROI, the signal is degraded by the lag of the face tracker, whereas the ROI center tracks the facial skin stably. Therefore, we used a tapered window to weight the ROI and reduce the noise caused by face tracking. A 1D tapered window is represented as

where

m indicates the tapered portion and has a value of

. To apply the tapered window to a 2D image, the 2D tapered window is expressed as

where x and y are the x- and y-coordinates in the ROI, respectively.
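To illustrate the weighting, the following NumPy sketch builds a 2D window as the outer product of two 1D tapered-cosine (Tukey) windows and uses it to compute a weighted spatial mean of each color channel in one frame. The 1D window formula and the value of m are not reproduced here, so both the Tukey form and the separable outer-product construction are assumptions; the parameter `alpha` plays the role of the tapered portion m.

```python
import numpy as np


def tukey_window(n, alpha=0.5):
    """1D tapered-cosine (Tukey) window of length n.

    alpha is the tapered fraction of the window (0 -> rectangular, 1 -> Hann);
    it stands in for the tapered portion m.
    """
    if n == 1 or alpha <= 0:
        return np.ones(n)
    x = np.linspace(0.0, 1.0, n)
    w = np.ones(n)
    left = x < alpha / 2
    right = x > 1 - alpha / 2
    w[left] = 0.5 * (1 + np.cos(2 * np.pi / alpha * (x[left] - alpha / 2)))
    w[right] = 0.5 * (1 + np.cos(2 * np.pi / alpha * (x[right] - 1 + alpha / 2)))
    return w


def weighted_roi_mean(roi, alpha=0.5):
    """Weighted mean of each channel of an (H, W, 3) ROI.

    Pixels near the ROI edges, where tracking lag is largest, get small weights.
    """
    h, w = roi.shape[:2]
    win2d = np.outer(tukey_window(h, alpha), tukey_window(w, alpha))  # (H, W)
    weighted_sum = np.tensordot(win2d, roi, axes=([0, 1], [0, 1]))   # (3,)
    return weighted_sum / win2d.sum()
```

Applying `weighted_roi_mean` to every frame yields one weighted R, G and B sample per frame, i.e., the RGB time series used for HR estimation. Because the outermost rows and columns receive zero weight, a tracker that lags by a pixel or two mostly affects pixels that contribute little to the mean.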

The aim of signal reconstruction is to find a reconstruction vector V that extracts the heartbeat signal by exploiting the differences in absorption among the RGB channels; that is, the BVP signal is reconstructed as a linear combination of the three RGB channels that improves the signal-to-noise ratio. According to a previous study, the strength of the heartbeat component in the reflected light follows the order G > B > R among the RGB channels. Using this relation, signal reconstruction can be expressed as

where

are the elements of the reconstruction vector V. Although this method is based on Softsig, we changed how the vector V is determined: to recover the pulse signal, we selected V to maximize the kurtosis of the spectrum in the HR range of 0.75–4.0 Hz (Figure 4c).
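The selection of V can be sketched as a search over candidate weight vectors that keeps the one whose in-band power spectrum is most sharply peaked. In this NumPy sketch, the grid search over unit-norm weights, the z-scoring of each channel and the use of the kurtosis of the in-band power values as the peakedness measure are all illustrative assumptions; the G > B > R ordering is not imposed as a constraint, and this is not the exact optimization used in our system.

```python
import itertools

import numpy as np


def band_kurtosis(signal, fs, f_lo=0.75, f_hi=4.0):
    """Kurtosis of the power-spectrum values inside [f_lo, f_hi] Hz.

    A single dominant spectral peak (a clean pulse) gives a large value.
    """
    sig = signal - signal.mean()
    power = np.abs(np.fft.rfft(sig)) ** 2
    freqs = np.fft.rfftfreq(len(sig), d=1.0 / fs)
    p = power[(freqs >= f_lo) & (freqs <= f_hi)]
    z = (p - p.mean()) / (p.std() + 1e-12)
    return float(np.mean(z ** 4))


def reconstruct_bvp(rgb, fs, steps=21):
    """Grid-search unit-norm weights V = (v_r, v_g, v_b) maximizing band_kurtosis.

    rgb : (T, 3) array of per-frame R, G, B values from the facial ROI.
    Returns (V, reconstructed BVP signal).
    """
    x = (rgb - rgb.mean(axis=0)) / (rgb.std(axis=0) + 1e-12)  # z-score each channel
    grid = np.linspace(-1.0, 1.0, steps)
    best_v, best_k = None, -np.inf
    for cand in itertools.product(grid, repeat=3):
        v = np.asarray(cand)
        norm = np.linalg.norm(v)
        if norm == 0:
            continue
        v = v / norm
        k = band_kurtosis(x @ v, fs)
        if k > best_k:
            best_v, best_k = v, k
    return best_v, x @ best_v
```

A motion artifact that appears in all three channels can be cancelled by a suitable weighting, which leaves a spectrum dominated by the pulse peak and therefore a high kurtosis; this is why maximizing spectral kurtosis tends to favor the heartbeat component.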

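Finally, the MUSIC method was introduced to realize HR and RR measurements within a short time period. This method enables high-resolution frequency estimation of HR and RR from short measurement periods. Equation (5) expresses the spectrum estimation formula of the MUSIC method [14]:

$$
P_{\mathrm{MUSIC}}(\omega) = \frac{1}{\sum_{i=p+1}^{M} \left| \mathbf{a}^{H}(\omega)\, \mathbf{e}_{i} \right|^{2}} \qquad (5)
$$

where $\mathbf{a}(\omega) = [1, e^{j\omega}, \ldots, e^{j(M-1)\omega}]^{T}$ represents a complex sinusoidal wave vector and $\mathbf{e}_{i}$ represents an eigenvector of the $M \times M$ correlation matrix; the eigenvectors with $i = p+1, \ldots, M$ correspond to the $M - p$ smallest eigenvalues (the noise subspace), and $p$ is the number of signal components. This system applies the MUSIC method separately to the HR and RR time-series data obtained from the video. For HR, the peak of the spectrum within 0.75–3.0 Hz (45–180 beats per minute (bpm)) was taken as the HR.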
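As a minimal sketch of Equation (5), the following NumPy function forms the correlation matrix from overlapping length-M snapshots of a 1D signal, takes the eigenvectors of the M − p smallest eigenvalues as the noise subspace and evaluates the pseudo-spectrum on a frequency grid restricted to a physiological band. The snapshot length (`order`), p = 2 (a real sinusoid corresponds to two complex exponentials) and the grid density are illustrative choices, not the settings of our system.

```python
import numpy as np


def music_peak_frequency(x, fs, f_lo, f_hi, order=30, n_signals=2, n_grid=2000):
    """Peak of the MUSIC pseudo-spectrum (Equation (5)) within [f_lo, f_hi] Hz.

    x         : 1D signal (BVP or respiration trace), e.g., 15 s at 15 fps.
    order     : snapshot length M (size of the correlation matrix).
    n_signals : p; a real sinusoid contributes two complex exponentials.
    Returns (peak frequency in Hz, frequency grid, pseudo-spectrum).
    """
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    # Overlapping snapshots of length M; each row is one snapshot.
    snaps = np.lib.stride_tricks.sliding_window_view(x, order)
    R = snaps.T @ snaps / snaps.shape[0]                # M x M correlation matrix
    _, eigvecs = np.linalg.eigh(R)                      # eigenvalues in ascending order
    noise = eigvecs[:, : order - n_signals]             # e_i for the M - p smallest eigenvalues
    freqs = np.linspace(f_lo, f_hi, n_grid)
    omega = 2 * np.pi * freqs / fs                      # normalized angular frequency
    A = np.exp(1j * np.outer(np.arange(order), omega))  # columns are a(omega)
    denom = np.sum(np.abs(noise.conj().T @ A) ** 2, axis=0)
    pseudo = 1.0 / denom
    return freqs[np.argmax(pseudo)], freqs, pseudo
```

For HR, the function would be called with the 0.75–3.0 Hz band and the peak multiplied by 60 to obtain bpm; for RR, the 0.1–0.75 Hz band applies. Unlike a periodogram, whose resolution is limited to 1/15 Hz (4 bpm) for a 15 s window, the pseudo-spectrum can be evaluated on an arbitrarily fine grid.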

2.5. IRT Sensor Processing for RR Estimation Using Nasal and Oral Breathing Decision based on SQI and MUSIC Algorithm and Body Temperature Estimation

Conventional approaches to respiration measurement using an IRT rely on nasal temperature changes. However, mouth breathing has been reported in 17% of the general population [25]. For stable RR measurement using an IRT, we must therefore also measure oral temperature changes and select whichever of the nasal or oral temperature changes more strongly reflects respiration. To choose between nasal and oral breathing, we quantified the temperature traces of the nasal and oral areas using an SQI. In addition, the MUSIC algorithm enables rapid RR estimation.

Figure 5 shows an overview of the respiration measurement, including the nasal and oral breathing selection and the MUSIC algorithm.

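First, the nasal and oral areas were detected using the sensor fusion method described in Section 2.3. Candidate respiration signals were then extracted from the two areas. The mean temperature fluctuation $T_{\mathrm{mean}}(t)$ and the minimum temperature fluctuation $T_{\mathrm{min}}(t)$ in each ROI are expressed as

$$
T_{\mathrm{mean}}(t) = \frac{1}{mn} \sum_{x=1}^{m} \sum_{y=1}^{n} I(x, y, t)
$$

$$
T_{\mathrm{min}}(t) = \min_{1 \le x \le m,\; 1 \le y \le n} I(x, y, t)
$$

where I(x, y, t) is the pixel temperature at image coordinate (x, y) in the ROI at time t, m is the width of the ROI and n is the height of the ROI. Both $T_{\mathrm{mean}}(t)$ and $T_{\mathrm{min}}(t)$ contain the respiration signal.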
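Given a stack of thermal frames, the two traces of a rectangular ROI reduce to a per-frame spatial mean and minimum. The sketch below assumes hypothetical inputs: a (T, rows, cols) temperature array and an ROI given as (x0, y0, m, n), i.e., the top-left corner, width and height in pixels.

```python
import numpy as np


def roi_temperature_traces(frames, roi):
    """Return the mean and minimum temperature traces of a rectangular ROI.

    frames : (T, rows, cols) array of pixel temperatures I(x, y, t).
    roi    : (x0, y0, m, n) top-left corner, width m and height n in pixels.
    """
    x0, y0, m, n = roi
    patch = np.asarray(frames)[:, y0:y0 + n, x0:x0 + m]  # rows index y, columns index x
    return patch.mean(axis=(1, 2)), patch.min(axis=(1, 2))
```

Calling it once for the nasal ROI and once for the oral ROI yields the four signals used for selecting the respiration signal. Exhaled air warms the nostrils or lips, so the coolest pixel in the ROI and the ROI average both oscillate at the breathing frequency.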

Second, the respiration signal is selected from the nasal and oral temperature traces using the four extracted signals, that is, the mean and minimum temperature traces of the nasal and oral areas. The selection is based on a nasal SQI and an oral SQI, which measure the agreement among the frequencies estimated by power spectral density (PSD), autocorrelation (ACR) and cross-power spectral density (CPSD). The frequency of PSD using was estimated from the peak of the power spectrum within 0.1–0.75 Hz, which corresponds to the RR measurement range. The frequency of ACR using was estimated from the average peak interval. The frequency of CPSD using the mean and minimum temperature traces of the ROI was estimated from the peak of the cross-power spectrum within 0.1–0.75 Hz. If the temperature change in the nasal or oral area contains a dominant respiration frequency, the CPSD reinforces this frequency, which is common to both traces of the ROI. The following two rules are applied sequentially:

Rule 1 (nasal SQI): If the ratio of to and that of to obtained from the nasal area both lie between 0.85 and 1.15, we select the nasal temperature change as the respiration signal. (This index indicates that the nasal area contains the respiration signal, because a ratio close to 1 means that the respiration frequency is dominant.)

Rule 2 (oral SQI): If the ratio of to and that of to obtained from the oral area both lie between 0.85 and 1.15, we select the oral temperature change as the respiration signal. (This index indicates that the oral area contains the respiration signal, because a ratio close to 1 means that the respiration frequency is dominant.)

If neither rule is satisfied, we select the nasal temperature change as the respiration signal.
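The two rules can be sketched in NumPy as below, with T_mean and T_min denoting the mean and minimum temperature traces of an ROI. Several details are assumptions because they are not specified above: the PSD is computed on T_mean, the ACR on T_min, the two SQI ratios are PSD/CPSD and ACR/CPSD, the spectra are plain periodograms, and only autocorrelation peaks at lags from 1/0.75 s up to half the record length are used. The returned trace (T_mean of the selected area) is likewise a hypothetical choice.

```python
import numpy as np

RR_BAND = (0.1, 0.75)  # Hz, i.e., 6-45 breaths per minute


def _band_peak(freqs, spectrum, band=RR_BAND):
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return freqs[mask][np.argmax(spectrum[mask])]


def psd_freq(x, fs):
    """Peak frequency of the periodogram within the RR band."""
    x = x - x.mean()
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return _band_peak(freqs, np.abs(np.fft.rfft(x)) ** 2)


def cpsd_freq(x, y, fs):
    """Peak frequency of the cross-power spectrum |X(f) Y*(f)| within the RR band."""
    X = np.fft.rfft(x - x.mean())
    Y = np.fft.rfft(y - y.mean())
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return _band_peak(freqs, np.abs(X * np.conj(Y)))


def acr_freq(x, fs, band=RR_BAND):
    """Frequency from the average interval between autocorrelation peaks."""
    x = x - x.mean()
    n = len(x)
    ac = np.correlate(x, x, mode="full")[n - 1:][: n // 2]  # lags 0 .. n/2 - 1
    mid = ac[1:-1]
    peaks = np.where((mid > ac[:-2]) & (mid > ac[2:]) & (mid > 0))[0] + 1
    peaks = peaks[peaks >= fs / band[1]]  # skip lags shorter than the fastest breath
    if peaks.size == 0:
        return float("nan")
    return fs / np.mean(np.diff(np.concatenate(([0], peaks))))


def sqi_ok(t_mean, t_min, fs, lo=0.85, hi=1.15):
    """ASSUMED SQI: PSD on T_mean and ACR on T_min, both compared with the CPSD."""
    t_mean = np.asarray(t_mean, dtype=float)
    t_min = np.asarray(t_min, dtype=float)
    f_cpsd = cpsd_freq(t_mean, t_min, fs)
    r1 = psd_freq(t_mean, fs) / f_cpsd
    r2 = acr_freq(t_min, fs) / f_cpsd
    return bool(lo <= r1 <= hi and lo <= r2 <= hi)  # NaN ratios fail the test


def select_respiration(nasal, oral, fs):
    """nasal, oral: (T_mean, T_min) tuples. Rule 1, then Rule 2, else nasal."""
    if sqi_ok(*nasal, fs):
        return "nasal", nasal[0]
    if sqi_ok(*oral, fs):
        return "oral", oral[0]
    return "nasal", nasal[0]
```

Requiring three differently computed frequency estimates to agree is a cheap consistency check: a trace dominated by noise rarely produces matching PSD, autocorrelation and cross-spectral peaks.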

For RR, the MUSIC method is applied to the selected respiration signal, and the peak of the spectrum within 0.1–0.75 Hz (6–45 bpm) was taken as the RR. Body temperature was determined as the maximum temperature within the facial ROI detected using the sensor fusion technique.

2.6. SVM Discriminant Analysis to Predict Patients with Seasonal Influenza based on the Three Vital Signs Measured

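To screen patients with infection using HR, RR and body temperature as features, we proposed a classification model based on SVM. An SVM finds the separating hyperplane that maximizes the margin between two classes, which gives it high generalization capability. The SVM is obtained by solving

$$
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \; \frac{1}{2}\lVert \mathbf{w} \rVert^{2} + C \sum_{i=1}^{N} \xi_{i} \quad \text{subject to} \quad y_{i} f(\mathbf{x}_{i}) \ge 1 - \xi_{i}, \;\; \xi_{i} \ge 0, \;\; i = 1, \ldots, N
$$

where $\mathbf{w}$ is the vector of SVM coefficients corresponding to HR, RR and temperature; $\mathbf{x}_{i}$ is the feature vector (HR, RR, temperature) of subject $i$ and $N$ is the number of subjects; $y_{i} \in \{-1, +1\}$ is the category (healthy or infected); $C$ is the penalty parameter; $\xi_{i}$ is the slack variable; and $f(\mathbf{x}) = \mathbf{w}^{T}\mathbf{x} + b$, with bias $b$, is the linear discriminant function. The SVM was computed using MATLAB.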
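Our model was computed in MATLAB; purely to illustrate the soft-margin objective above, the following NumPy sketch minimizes it by full-batch subgradient descent on the equivalent hinge-loss form with standardized features. The step-size schedule, epoch count and function names are assumptions, and a dedicated quadratic-programming solver would normally be used instead.

```python
import numpy as np


def train_linear_svm(X, y, C=1.0, lr=0.01, epochs=2000):
    """Soft-margin linear SVM by subgradient descent.

    Minimizes 0.5 * ||w||^2 + C * sum_i max(0, 1 - y_i * (w @ x_i + b)),
    which equals the constrained problem with slack variables xi_i.
    X : (N, 3) features (HR, RR, temperature); y : labels in {-1, +1}.
    Returns (w, b, mu, sigma), where mu and sigma standardize the features.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    mu, sigma = X.mean(axis=0), X.std(axis=0) + 1e-12
    Z = (X - mu) / sigma  # features have different units and scales
    w, b = np.zeros(Z.shape[1]), 0.0
    for epoch in range(epochs):
        step = lr / np.sqrt(epoch + 1.0)  # decaying step size
        viol = y * (Z @ w + b) < 1         # samples with positive slack
        grad_w = w - C * (y[viol, None] * Z[viol]).sum(axis=0)
        grad_b = -C * y[viol].sum()
        w -= step * grad_w
        b -= step * grad_b
    return w, b, mu, sigma


def predict(model, X):
    """Sign of f(x) = w^T x + b evaluated on standardized features."""
    w, b, mu, sigma = model
    Z = (np.asarray(X, dtype=float) - mu) / sigma
    return np.where(Z @ w + b >= 0, 1, -1)
```

Standardization matters here because HR (tens of bpm) and temperature (tenths of a degree) would otherwise contribute to the margin on very different scales.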

2.7. Evaluation of the System in Laboratory and Clinical Settings

Laboratory and clinical testing of the system was conducted in 2019. Twenty-two healthy control subjects with no fever symptoms (average age 23.4 years) participated in the laboratory test at the University of Electro-Communications. A total of 41 patients (average age 45.0 years) with influenza-like symptoms who visited Takasaka Clinic, Fukushima, Japan, were included. Their RR, HR and body temperature were measured using the contactless system; reference measurements were simultaneously obtained using a contact-type electrocardiogram (ECG) (LRR-03, GMS Co. Ltd., Tokyo, Japan) or a pulse oximeter (SAT-2200 Oxypal mini, NIHONKOHDEN Co., Tokyo, Japan), a clinical thermometer (TERUMO electric thermometer C230, TERUMO Co., Tokyo, Japan) and a respiration effort belt (DL-231, S&ME Inc., Tokyo, Japan). It should be noted that some patients may have shown an increased HR due to the white-coat effect. This study was approved by the Committee on Human Research of the Faculty of System Design, Tokyo Metropolitan University and the University of Electro-Communications. All subjects gave written informed consent.

2.8. Statistical Analysis

Bland–Altman plots and scatter plots were used to assess, statistically and graphically, the agreement between the proposed method and the reference method [26]. The reference vital signs were measured by ECG or a pulse oximeter for HR, a respiration effort belt for RR and an electronic thermometer for axillary temperature. The outputs of the SVM classification model were used to calculate the sensitivity, specificity, negative predictive value (NPV) and positive predictive value (PPV). Leave-one-out cross-validation was performed to avoid overly optimistic performance estimates due to overfitting.
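The evaluation steps named above can be sketched in NumPy as follows. The Bland–Altman function uses the common convention of bias ± 1.96 times the sample standard deviation of the differences; label 1 denotes infection in the screening metrics; and the leave-one-out helper accepts any user-supplied `fit`/`predict` pair (for example, a linear SVM). All function names are hypothetical.

```python
import numpy as np


def bland_altman_limits(estimate, reference):
    """Bias and 95% limits of agreement (bias +/- 1.96 SD of the differences)."""
    diff = np.asarray(estimate, dtype=float) - np.asarray(reference, dtype=float)
    bias = diff.mean()
    sd = diff.std(ddof=1)  # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def _ratio(num, den):
    return num / den if den else float("nan")


def screening_metrics(y_true, y_pred):
    """Sensitivity, specificity, PPV and NPV; label 1 = infected, 0 = healthy."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))
    return {
        "sensitivity": _ratio(tp, tp + fn),
        "specificity": _ratio(tn, tn + fp),
        "ppv": _ratio(tp, tp + fp),
        "npv": _ratio(tn, tn + fn),
    }


def leave_one_out_predictions(X, y, fit, predict):
    """Predict each subject with a model trained on all other subjects.

    fit(X_train, y_train) -> model and predict(model, X_test) -> labels
    are user-supplied callables.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    preds = np.empty_like(y)
    for i in range(len(y)):
        keep = np.arange(len(y)) != i
        model = fit(X[keep], y[keep])
        preds[i] = predict(model, X[i:i + 1])[0]
    return preds
```

Passing the leave-one-out predictions to `screening_metrics` gives performance figures for subjects that the model never saw during training.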

4. Discussion and Conclusions

The outbreak of 2019-nCoV was first reported in Wuhan, China, in December 2019 and was confirmed to have spread to more than 110 countries as of March 2020. When a novel virus emerges, enhanced public health quarantine and isolation are essential. For this purpose, we developed a multiple vital sign measurement system for the mass screening of infected individuals at mass gatherings. In this study, we focused on making the measurement of the three vital signs automatic, stable and fast for practical use in real-world settings. From a technical perspective, we proposed specific signal and image processing methods for highly reliable vital sign measurement and compared them with conventional methods (Table 1 and Table 2). A tapered window, RGB signal reconstruction and MUSIC were applied to HR measurement, whereas automatic ROI tracking using sensor fusion, SQI-based nasal or oral breathing selection and MUSIC were applied to RR measurement. The proposed method showed agreement with the reference devices (HR: [−10.4, 12.6] bpm, RR: [−2.97, 3.67] bpm, temperature: [−0.449, 2.56] °C). The reliability and stability of our system's vital sign measurement were significantly improved.

Moreover, we tested multiple vital sign-based screening in a laboratory and a clinic. The sensitivity and specificity of the proposed method (85.7% and 90.1%) were higher than those of fever-based screening (60.7% and 86.4%). The distributions of the three vital signs measured in healthy control subjects and influenza patients are shown in Figure 12. The median facial skin temperature, HR and RR were 37.3 °C, 99.3 bpm and 18.9 bpm, respectively, in influenza patients, compared with 35.5 °C, 76.4 bpm and 14.0 bpm in healthy control subjects. All three vital signs were elevated in patients with influenza, which contributed to the improved SVM classification based on the three vital signs.

However, the proposed method has some limitations. ROI detection by sensor fusion may fail when the background is similar in color to skin or hair. In the SVM-based classification test, the facial skin temperature may be influenced by the ambient environment. The measurement environment in a laboratory differs from that in a clinic, even at the same ambient temperature, which can cause differences in facial skin temperature unrelated to seasonal influenza. Therefore, we need to develop environment-invariant temperature estimation using an IRT.

In conclusion, we proposed automatic, stable and rapid measurement of HR, RR and body temperature using RGB-thermal image sensors and applied it to the screening of infectious diseases. The method introduces (1) a sensor fusion approach for detecting detailed facial landmarks in a thermal image, (2) HR estimation incorporating a tapered window, signal reconstruction and MUSIC, and (3) RR estimation incorporating SQI-based nasal or oral breathing selection and MUSIC. Moreover, we demonstrated an SVM-based classification model using data from healthy control subjects and patients with seasonal influenza. The results indicate that the proposed methods contribute substantially to high-performance contactless multiple vital sign measurement for infection screening.