Emotion Recognition Using Eye-Tracking: Taxonomy, Review and Current Challenges

Abstract

1. Introduction

2. Background

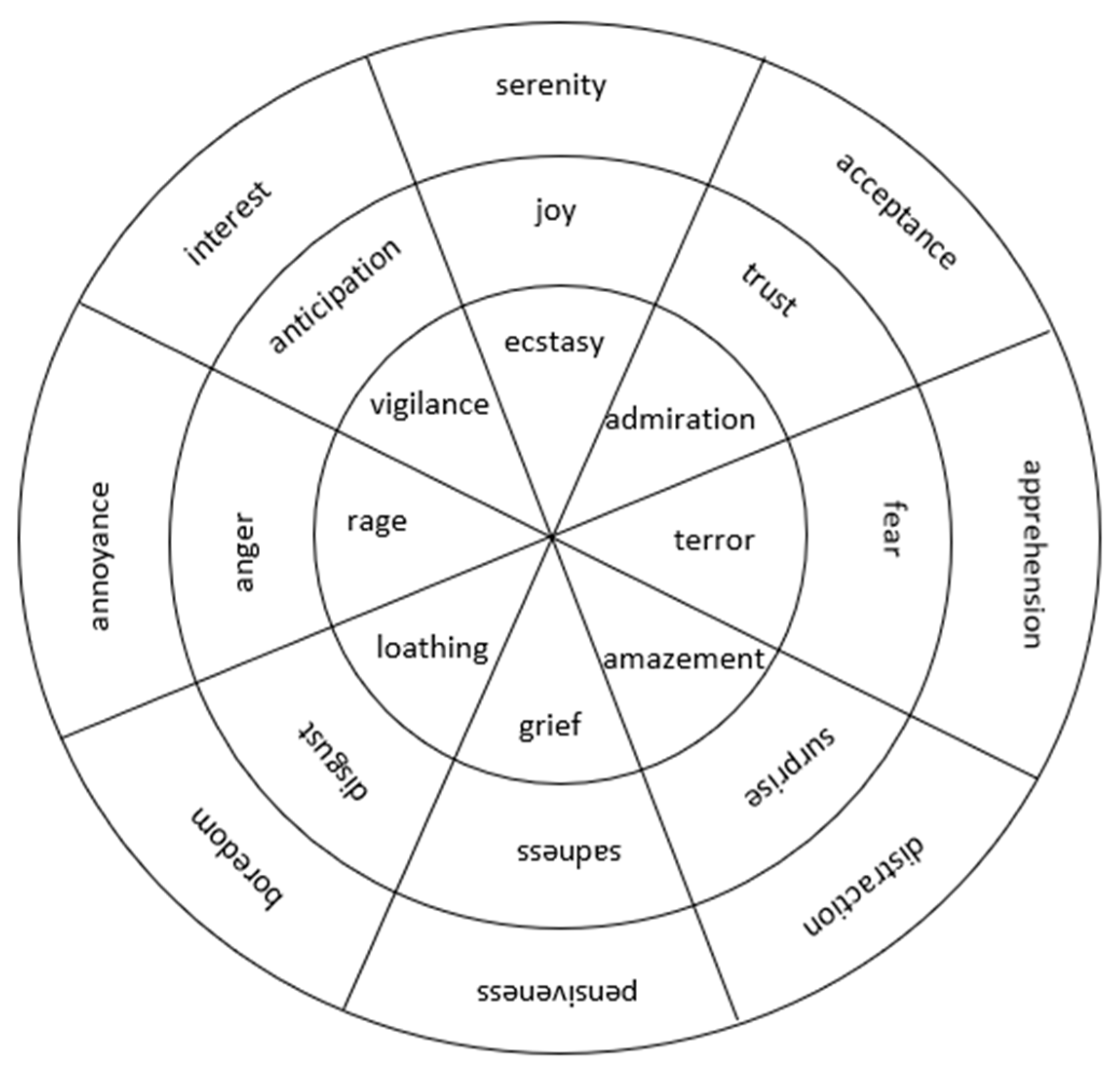

2.1. Human Emotions

2.2. Emotion Stimulation Tools

2.3. Eye-Tracking

2.3.1. Desktop Eye-Tracking

2.3.2. Mobile Eye-Tracking

2.3.3. Eye-Tracking in Virtual Reality

3. Emotion-Relevant Features from Eye-Tracking

3.1. Pupil Diameter

3.2. Electrooculography (EOG)

3.3. Pupil Position

3.4. Fixation Duration

3.5. Distance Between Sclera and Iris

3.6. Eye Motion Speed

3.7. Pupillary Responses

4. Summary

5. Directions

5.1. Stimulus of the Experiment

5.2. Recognition of Complex Emotions

5.3. The Most Relevant Eye Features for Classification of Emotions

5.4. The Usage of Classifier

5.5. Multimodal Emotion Detection Using the Combination of Eye-Tracking Data with Other Physiological Signals

5.6. Subjects Used in the Experiment

5.7. Significant Difference of Accuracy Between Emotion Classes

5.8. Inter-Subject and Intra-Subject Variability

5.9. Devices and Applications

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lim, J.Z.; Mountstephens, J.; Teo, J. Emotion Recognition Using Eye-Tracking: Taxonomy, Review and Current Challenges. Sensors 2020, 20, 2384. https://doi.org/10.3390/s20082384
