Analyzing the Effectiveness of the Brain–Computer Interface for Task Discerning Based on Machine Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Building the Dataset

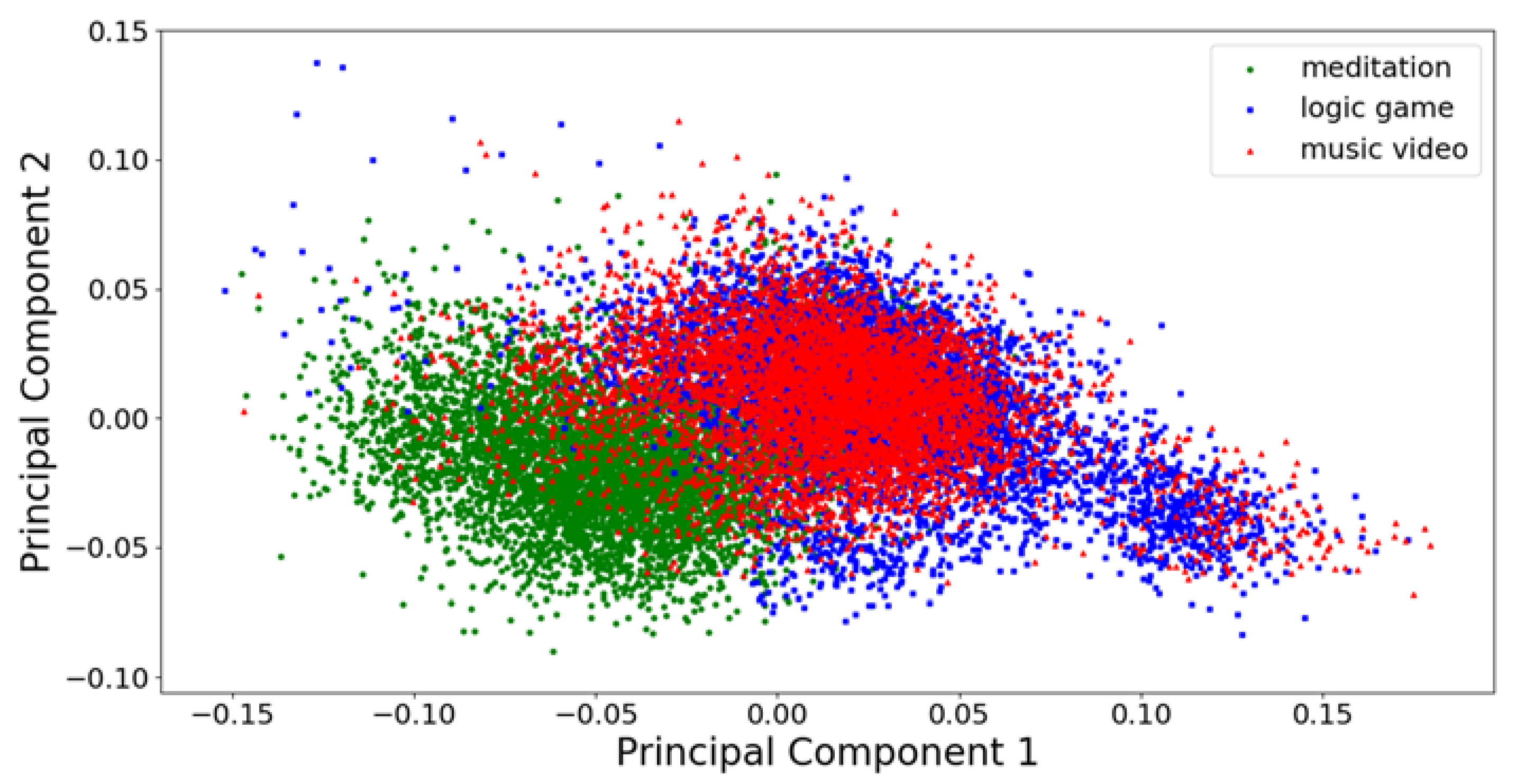

2.2. Data Preprocessing

- ar16: for each channel of every frame, a 16th-order autoregressive model was computed with the Burg algorithm, using the arburg function from the spectrum library. Only the real parts of the model coefficients were used (the imaginary parts were all equal to 0). Concatenating the model coefficients from all channels with the previously computed mean values and variances gave final feature vectors of 252 elements. The code used for these calculations is given in Appendix A.

- ar24: like ar16, but with autoregressive models of the 24th order. The final feature vectors contained 364 elements.

- welch16: for every channel in every frame, an estimate of the power spectral density (PSD) was computed using Welch's method, with the welch function from the scipy library. Samples from each channel in every frame were divided into eight nonoverlapping subframes of 16 samples each, which yielded nine coefficients per channel. The final feature vectors (including the pre-computed mean values and variances) contained 154 elements. The code used for these calculations is shown in Appendix A.

- welch32: like welch16, but frames were divided into four nonoverlapping subframes of 32 samples each. The final feature vectors consisted of 266 elements.

- welch64: like welch16 and welch32, but frames were divided into two nonoverlapping subframes of 64 samples each per channel. The final feature vectors consisted of 490 elements.

- dwt: each channel of every frame was decomposed with a four-level discrete wavelet transform with the db4 wavelet, using the wavedec function from the pywt library. The resulting vectors contained 14, 14, 22, 37, and 67 coefficients, respectively. Concatenating the coefficient vectors with the pre-computed mean values and variances gave final feature vectors of 2184 elements. The corresponding code is listed in Appendix A.

- dwt_stat: each channel of every frame was decomposed with the discrete wavelet transform as in the dwt scheme. Subsequently, for each of the five wavelet coefficient vectors, the following descriptive parameters were computed: mean value, mean of absolute values, variance, skewness, kurtosis, zero-crossing rate, and the sum of squares (see Appendix A for the code used).
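The feature-vector lengths quoted in this list can be cross-checked with a few lines of arithmetic. The sketch below assumes 14 channels (matching `n_components=14` in Appendix A) and 128-sample frames (eight subframes of 16 samples), and it uses the standard output-length rule for a DWT with symmetric signal extension, floor((n + filter_len - 1) / 2), with an 8-tap db4 filter. All function and constant names are illustrative and not part of the original code.

```python
N_CHANNELS = 14          # assumed: matches n_components=14 in Appendix A
FRAME_LEN = 128          # eight subframes of 16 samples each
STATS = 2 * N_CHANNELS   # one mean and one variance per channel


def ar_length(order):
    """ar* vector length: `order` model coefficients per channel plus stats."""
    return N_CHANNELS * order + STATS


def welch_length(nperseg):
    """welch* vector length: nperseg // 2 + 1 one-sided PSD bins per channel."""
    return N_CHANNELS * (nperseg // 2 + 1) + STATS


def dwt_coeff_lengths(n, level, filter_len=8):
    """Coefficient counts [cA_L, cD_L, ..., cD_1], assuming symmetric extension."""
    details = []
    for _ in range(level):
        n = (n + filter_len - 1) // 2
        details.append(n)
    # the approximation at the deepest level has the same length as cD_L
    return [details[-1]] + details[::-1]


def dwt_length(level=4):
    """dwt vector length: all wavelet coefficients per channel plus stats."""
    return N_CHANNELS * sum(dwt_coeff_lengths(FRAME_LEN, level)) + STATS


lengths = {
    "ar16": ar_length(16),
    "ar24": ar_length(24),
    "welch16": welch_length(16),
    "welch32": welch_length(32),
    "welch64": welch_length(64),
    "dwt": dwt_length(),
}
print(dwt_coeff_lengths(FRAME_LEN, 4))
print(lengths)
```

Under these assumptions the computed values agree with the lengths stated for each scheme, which suggests that every scheme appends the same 28 mean and variance values.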

3. Experiments, Results, and Discussion

- ar16: 38 in both cases,

- ar24: 61 in both cases,

- welch16: 61 in both cases,

- welch32: 110 in both cases,

- welch64: 204 in both cases,

- dwt: 1019 for k-NN and SVM, 1016 for neural networks, and

- dwt_stat: 136 in both cases.

3.1. Experiment 1: k-Nearest Neighbors

Experiment 1

3.2. Experiment 2: Support Vector Machines with a Linear Kernel

3.3. Experiment 3: Support Vector Machines with Radial Basis Function Kernel

3.4. Experiment 4: Neural Networks

Summary

- adding more hidden layers,

- using parametric ReLU activation function,

- using adaptive optimization methods like Adam,

- adding batch normalization or dropout layers,

- adding L1 or L2 weight decay,

- adding further features: skewness, kurtosis, and energy computed for every channel from raw, unprocessed frames.

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

    # independent component analysis with whitening,
    # performed for a single frame
    from sklearn.decomposition import FastICA

    ica = FastICA(n_components=14, whiten=True)
    new_dataframe = ica.fit_transform(dataframe)

    # computing autoregressive models for a single frame
    import spectrum

    new_dataframe = []
    for channel in dataframe:
        # [0] selects the AR coefficients from the values returned by arburg
        model = spectrum.arburg(channel, order=16, criteria=None)[0]
        # keep only the real parts of the coefficients
        model = [item.real for item in model]
        new_dataframe.append(model)

    # computing power spectral density with Welch's method for a single frame
    import scipy.signal

    # welch returns (frequencies, PSD); [1] keeps the PSD estimate
    psd = scipy.signal.welch(dataframe, nperseg=16, axis=0)[1]
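Section 2.2 describes the subframes as nonoverlapping, whereas `scipy.signal.welch` falls back to `noverlap = nperseg // 2` when that argument is omitted. A minimal sketch of the welch16 features with the overlap disabled explicitly is given below. The frame layout (128 samples by 14 channels), the random stand-in data, and the order in which the parts are concatenated are assumptions made for illustration only.

```python
import numpy as np
import scipy.signal

rng = np.random.default_rng(0)
frame = rng.standard_normal((128, 14))  # stand-in for one frame: samples x channels

# noverlap=0 yields eight nonoverlapping 16-sample segments per channel
freqs, psd = scipy.signal.welch(frame, nperseg=16, noverlap=0, axis=0)

features = np.concatenate([
    psd.ravel(order="F"),   # nine PSD bins per channel, channel after channel
    frame.mean(axis=0),     # per-channel mean
    frame.var(axis=0),      # per-channel variance
])
print(psd.shape, features.shape)
```

With these assumptions the PSD array has nine rows per channel and the assembled vector has 154 elements, in line with the welch16 description.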

    # computing discrete wavelet transform
    # for a single frame
    import numpy as np
    import pywt

    new_dataframe = []
    for channel in dataframe:
        # wavedec returns [cA4, cD4, cD3, cD2, cD1]
        dwt = pywt.wavedec(channel, wavelet="db4", level=4, axis=0)
        # join all coefficient arrays into one vector
        vector = np.concatenate(dwt)
        new_dataframe.append(vector)

    # computing descriptive parameters from wavelet coefficients
    # for a single frame (shown for one channel)
    import numpy as np
    import pywt
    import scipy.stats

    def zero_crossings(data):
        # number of sign changes between consecutive coefficients
        return ((data[:-1] * data[1:]) < 0).sum()

    dwt = pywt.wavedec(channel, wavelet="db4", level=4, axis=0)
    vector = []
    for item in dwt:
        vector.append(np.mean(item))
        vector.append(np.mean(np.abs(item)))
        vector.append(np.var(item))
        vector.append(scipy.stats.skew(item))
        vector.append(scipy.stats.kurtosis(item))
        vector.append(zero_crossings(item))
        vector.append(np.sum(np.power(item, 2)))
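The `zero_crossings` helper counts sign changes between neighbouring coefficients by testing whether their product is negative. A dependency-free sketch of the same rule (the function name and sample values are illustrative) makes one consequence visible: a coefficient that is exactly zero gives a product of zero, so a transition that passes through an exact zero is not counted.

```python
def zero_crossings_plain(data):
    """Count adjacent pairs whose product is negative (a strict sign change)."""
    return sum(1 for a, b in zip(data[:-1], data[1:]) if a * b < 0)


alternating = zero_crossings_plain([1.0, -2.0, 3.0, -0.5])   # three sign changes
through_zero = zero_crossings_plain([1.0, 0.0, -1.0])        # exact zero in between
print(alternating, through_zero)  # 3 0
```

The listing returns a count rather than a rate. Dividing by `len(data) - 1` would turn it into a rate, should a value that is comparable across coefficient vectors of different lengths be preferred.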

    # dimensionality reduction via principal component analysis
    from sklearn.decomposition import PCA

    # a fractional n_components keeps enough components for 95% of the variance
    pca = PCA(n_components=0.95)
    # the projection is fitted on the training data only
    pca.fit(train_data)
    reduced_train_data = pca.transform(train_data)
    reduced_val_data = pca.transform(val_data)
    reduced_test_data = pca.transform(test_data)
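Passing a fraction to `PCA(n_components=...)` asks scikit-learn to keep as many leading components as are needed for the cumulative explained variance to exceed that fraction. The selection rule can be sketched without the library. The variance ratios below are made-up values chosen for illustration, not results from the study, and the helper name is hypothetical.

```python
from itertools import accumulate


def components_for_variance(explained_variance_ratio, threshold=0.95):
    """Smallest number of leading components whose cumulative ratio exceeds threshold."""
    for k, total in enumerate(accumulate(explained_variance_ratio), start=1):
        if total > threshold:
            return k
    return len(explained_variance_ratio)


ratios = [0.5, 0.25, 0.125, 0.0625, 0.03125, 0.03125]  # hypothetical, sums to 1
n_kept = components_for_variance(ratios)
print(n_kept)  # 5
```

Fitting the projection on the training split only, as the listing does, keeps the validation and test frames from influencing which components are retained.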

    # dividing dataset into training, validation and test data
    import random

    data_train, data_val, data_test = {}, {}, {}
    a = int(0.7 * 8265)
    b = a + int(0.1 * 8265)
    for class_name in eeg.keys():
        # eeg.keys() are ['meditation', 'music_video', 'logic_game']
        indices = list(range(8265))
        random.shuffle(indices)
        data_train[class_name] = [eeg[class_name][i] for i in indices[:a]]
        data_val[class_name] = [eeg[class_name][i] for i in indices[a:b]]
        data_test[class_name] = [eeg[class_name][i] for i in indices[b:]]
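With 8265 frames per class, the 0.7 and 0.1 fractions in the listing translate into 5785 training, 826 validation, and 1654 test frames per class. The sketch below reproduces the index arithmetic on plain lists. The seed and the helper name are assumptions added to make the example repeatable and are not part of the original code.

```python
import random


def split_indices(n_items, train_frac=0.7, val_frac=0.1, seed=0):
    """Shuffle indices 0..n_items-1 and cut them into train/val/test parts."""
    a = int(train_frac * n_items)
    b = a + int(val_frac * n_items)
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)  # seeded only so the sketch is repeatable
    return indices[:a], indices[a:b], indices[b:]


train_idx, val_idx, test_idx = split_indices(8265)
print(len(train_idx), len(val_idx), len(test_idx))  # 5785 826 1654
```

Because both boundaries are truncated with `int`, the test part absorbs the rounding remainder and is slightly larger than 20% of the frames.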

    # encoding classes via one-hot encoding
    import numpy as np

    classes = {}
    classes['meditation'] = np.array([1, 0, 0])
    classes['music_video'] = np.array([0, 1, 0])
    classes['logic_game'] = np.array([0, 0, 1])
    for i in range(len(train_data_classes)):
        train_data_classes[i] = classes[train_data_classes[i]]
    for i in range(len(test_data_classes)):
        test_data_classes[i] = classes[test_data_classes[i]]
    for i in range(len(val_data_classes)):
        val_data_classes[i] = classes[val_data_classes[i]]

    # training k-NN classifiers, test data classification,
    # computing accuracy, confusion matrix, precision, recall and F1
    from sklearn.neighbors import KNeighborsClassifier
    from sklearn.metrics import accuracy_score, confusion_matrix, classification_report

    # k is the number of neighbors under test
    knn = KNeighborsClassifier(n_neighbors=k)
    knn.fit(reduced_train_data, train_data_classes)
    results = knn.predict(reduced_test_data)
    score = accuracy_score(test_data_classes, results)
    conf_matrix = confusion_matrix(test_data_classes, results)
    report = classification_report(test_data_classes, results)
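The per-class precision, recall, and F1 values printed by `classification_report` follow directly from the confusion matrix, whose rows in scikit-learn correspond to true classes and whose columns correspond to predicted classes. The sketch below recomputes them by hand. The counts are invented for illustration and are not results from the experiments, and the helper name is hypothetical.

```python
def per_class_metrics(conf_matrix):
    """Return (precision, recall, f1) per class; rows = true, columns = predicted."""
    n = len(conf_matrix)
    metrics = []
    for c in range(n):
        tp = conf_matrix[c][c]
        predicted = sum(conf_matrix[r][c] for r in range(n))  # column sum
        actual = sum(conf_matrix[c])                          # row sum
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics.append((precision, recall, f1))
    return metrics


# hypothetical counts, ordered meditation, music_video, logic_game
cm = [[80, 10, 10],
      [30, 50, 20],
      [10, 40, 50]]
names = ["meditation", "music_video", "logic_game"]
results = per_class_metrics(cm)
for name, (p, r, f) in zip(names, results):
    print(f"{name}: precision={p:.3f} recall={r:.3f} f1={f:.3f}")
```

Dividing each row of the matrix by its row sum gives per-class recall on the diagonal, which is a convenient way to compare classifiers when all classes have the same number of test frames.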

    # training SVM classifiers with linear kernel
    # and test data classification
    from sklearn.svm import SVC

    # C is the regularization parameter under test
    svm = SVC(C=C, kernel='linear')
    svm.fit(reduced_train_data, train_data_classes)
    results = svm.predict(reduced_test_data)

    # training SVM classifier with radial basis function kernel
    # and test data classification
    from sklearn.svm import SVC

    # C and gamma are the hyperparameters under test
    svm = SVC(C=C, kernel='rbf', gamma=gamma)
    svm.fit(reduced_train_data, train_data_classes)
    results = svm.predict(reduced_test_data)

    # training neural networks with a single hidden layer
    # and test data classification
    from keras.layers import Dense, Activation
    from keras.models import Sequential
    from keras.optimizers import SGD
    from keras.callbacks import EarlyStopping

    n_inputs = reduced_train_data.shape[1]
    model = Sequential()
    # hidden layer with as many units as there are input features
    model.add(Dense(n_inputs, activation='relu', input_dim=n_inputs,
                    kernel_initializer='he_uniform', bias_initializer='zeros'))
    # output layer: one softmax unit per class
    model.add(Dense(3, activation='softmax',
                    kernel_initializer='he_uniform', bias_initializer='zeros'))
    # lr is the argument name of older Keras releases (learning_rate in newer ones)
    sgd = SGD(lr=0.01, decay=1e-6, momentum=0.9, nesterov=True)
    model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])
    es = EarlyStopping(monitor='val_loss', mode='min', patience=50,
                       restore_best_weights=True)
    history = model.fit(reduced_train_data, train_data_classes, batch_size=64,
                        validation_data=(reduced_val_data, val_data_classes),
                        callbacks=[es], epochs=2000)
    predictions = model.predict(reduced_test_data, batch_size=128)
    score = model.evaluate(reduced_test_data, test_data_classes, batch_size=128)
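`model.predict` returns one row of three softmax probabilities per test frame, so a class label is obtained by taking the index of the largest entry, which mirrors the one-hot layout defined earlier in this appendix. A dependency-free sketch with made-up probabilities follows. The values and helper names are illustrative and are not outputs of the trained networks.

```python
CLASS_NAMES = ["meditation", "music_video", "logic_game"]  # order of the one-hot encoding


def decode(rows):
    """Map each probability (or one-hot) row to the name of its largest entry."""
    return [CLASS_NAMES[max(range(len(row)), key=row.__getitem__)] for row in rows]


predictions = [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6], [0.4, 0.5, 0.1]]  # hypothetical softmax outputs
targets = [[1, 0, 0], [0, 0, 1], [1, 0, 0]]                         # hypothetical one-hot labels

predicted_labels = decode(predictions)
true_labels = decode(targets)
accuracy = sum(p == t for p, t in zip(predicted_labels, true_labels)) / len(true_labels)
print(predicted_labels, accuracy)
```

Decoded label lists of this kind can be passed to metric functions that expect one label per sample rather than one-hot rows.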

References

- Jiang, X.; Bian, G.B.; Tian, Z. Removal of Artifacts from EEG Signals: A Review. Sensors 2019, 19, 987.

- Cabañero-Gómez, L.; Hervas, R.; Bravo, J.; Rodriguez-Benitez, L. Computational EEG Analysis Techniques When Playing Video Games: A Systematic Review. Proceedings 2018, 2, 483.

- Machado, S.; Araujo, F.; Paes, F.; Velasques, B.; Cunha, M.; Budde, H.; Basile, L.F.; Anghinah, R.; Arias-Carrión, O.; Cagy, M.; et al. EEG-based Brain–computer Interfaces: An Overview of Basic Concepts and Clinical Applications in Neurorehabilitation. Rev. Neurosci. 2010, 21, 451–468.

- Kaplan, P.; Sutter, R. Electroencephalographic patterns in coma: When things slow down. Epileptologie 2012, 29, 201–209.

- Kübler, A. Brain–computer interfacing: Science fiction has come true. Brain 2013, 136, 2001–2004.

- Choubey, H.; Pandey, A. A new feature extraction and classification mechanisms for EEG signal processing. Multidim. Syst. Sign. Process. 2018, 30.

- Gao, Z.; Wang, X.; Yang, Y.; Mu, C.; Cai, Q.; Dang, W.; Zuo, S. EEG-based spatio-temporal convolutional neural network for driver fatigue evaluation. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2755–2763.

- Acharya, U.R.; Faust, O.; Kannathal, N.; Chua, T.J.; Laxminarayan, S. Dynamical analysis of EEG signals at various sleep stages. Comput. Methods Programs Biomed. 2005, 80, 37–45.

- Kannathal, N.; Acharya, U.R.; Fadilah, A.; Tibelong, T.; Sadasivan, P.K. Nonlinear analysis of EEG signals at different mental states. Biomed. Eng. Online 2004, 3.

- Nicolas-Alonso, L.F.; Gomez-Gil, J. Brain computer interfaces, a review. Sensors 2012, 12, 1211–1279.

- He, B.; Gao, S.; Yuan, H.; Wolpaw, J.R. Brain–computer interfaces. In Neural Engineering; He, B., Ed.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 87–151.

- Yuan, H.; He, B. Brain–computer interfaces using sensorimotor rhythms: Current state and future perspectives. IEEE Trans. Biomed. Eng. 2014, 61, 1425–1435.

- Han, J.; Zhao, Y.; Sun, H.; Chen, J.; Ke, A.; Xu, G.; Zhang, H.; Zhou, J.; Wang, C. A Fast, Open EEG Classification Framework Based on Feature Compression and Channel Ranking. Front. Neurosci. 2018, 12.

- Charles, W.A.; James, N.K.; O’Connor, T.; Michael, J.K.; Artem, S. Geometric subspace methods and time-delay embedding for EEG artifact removal and classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2006, 14, 142–146.

- Mannan, M.M.N.; Kamran, M.A.; Kang, S.; Jeong, M.Y. Effect of EOG Signal Filtering on the Removal of Ocular Artifacts and EEG-Based Brain–computer Interface: A Comprehensive Study. Complexity 2018, 18–36.

- Subasi, A. EEG signal classification using wavelet feature extraction and a mixture of expert model. Expert Syst. Appl. 2007, 32, 1084–1093.

- Calderon, H.; Sahonero-Alvarez, G. A Comparison of SOBI, FastICA, JADE and Infomax Algorithms. In Proceedings of the 8th International Multi-Conference on Complexity, Informatics and Cybernetics (IMCIC 2017), Orlando, FL, USA, 21–24 March 2017.

- Himberg, J.; Hyvärinen, A. Icasso: Software for investigating the reliability of ICA estimates by clustering and visualization. In Proceedings of the IEEE 13th Workshop on Neural Networks for Signal Processing (NNSP’03), Toulouse, France, 17–19 September 2003; pp. 259–268.

- Hyvärinen, A.; Karhunen, J.; Oja, E. Independent Component Analysis; Wiley: Hoboken, NJ, USA, 2001.

- Parsopoulos, K.E.; Vrahatis, M.N. Recent approaches to global optimization problems through particle swarm optimization. Nat. Comput. 2002, 116, 235–306.

- Vigário, R.; Särelä, J.; Jousmäki, V.; Hämäläinen, M.; Oja, E. Independent component approach to the analysis of EEG and MEG recordings. IEEE Trans. Biomed. Eng. 2000, 47, 589–593.

- James, C.J.; Gibson, O.J. Temporally constrained ICA: An application to artifact rejection in electromagnetic brain signal analysis. IEEE Trans. Biomed. Eng. 2003, 50, 1108–1116.

- Langlois, D.; Chartier, S.; Gosselin, D. An Introduction to Independent Component Analysis: InfoMax and FastICA algorithms. Tutor. Quant. Methods Psychol. 2010, 6, 31–38.

- Palmer, J.A.; Kreutz-Delgado, K.; Makeig, S. AMICA: An Adaptive Mixture of Independent Component Analyzers with Shared Components; University of California: San Diego, CA, USA, 2011.

- Iriarte, J.; Urrestarazu, E.; Valencia, M.; Alegre, M.; Malanda, A.; Viteri, C.; Artieda, J. Independent component analysis as a tool to eliminate artifacts in EEG: A quantitative study. J. Clin. Neurophysiol. 2003, 20, 249–257.

- Delorme, A.; Makeig, S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 2004, 134, 9–21.

- Chang, K.-M.; Lo, P.F. Meditation EEG interpretation based on novel fuzzy-merging strategies and wavelet features. Biomed. Eng. Appl. Basis Commun. 2005, 17, 167–175.

- Jahankhani, P.; Kodogiannis, V.; Revett, K. EEG Signal Classification Using Wavelet Feature Extraction and Neural Networks. In Proceedings of the IEEE John Vincent Atanasoff 2006 International Symposium on Modern Computing (JVA’06), Sofia, Bulgaria, 3–6 October 2006; pp. 120–124.

- Suk, H.; Lee, S. A Novel Bayesian Framework for Discriminative Feature Extraction in Brain–computer Interfaces. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 286–299.

- Lotte, F. A Tutorial on EEG Signal Processing Techniques for Mental State Recognition in Brain–computer Interfaces. In Guide to Brain–computer Music Interfacing; Miranda, E.R., Castet, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2014.

- Wen, T.; Zhang, Z. Deep Convolution Neural Network and Autoencoders-Based Unsupervised Feature Learning of EEG Signals. IEEE Access 2018, 6, 25399–25410.

- Zhang, X.; Yao, L.; Yuan, F. Adversarial Variational Embedding for Robust Semi-supervised Learning. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 139–147.

- Wu, Q.; Zhang, Y.; Liu, J.; Sun, J.; Cichocki, A.; Gao, F. Regularized Group Sparse Discriminant Analysis for P300-Based Brain–Computer Interface. Int. J. Neural Syst. 2019, 29, 6.

- Edla, D.R.; Mangalorekar, K.; Dhavalikar, G.; Dodia, S. Classification of EEG data for human mental state analysis using Random Forest Classifier. Procedia Comput. Sci. 2018, 132, 1523–1532.

- Zhang, X.; Yao, L.; Wang, X.; Monaghan, J.; McAlpine, D.; Zhang, Y. A Survey on Deep Learning based Brain–computer Interface: Recent Advances and New Frontiers. arXiv 2019, arXiv:1905.04149.

- Kurowski, A.; Mrozik, K.; Kostek, B.; Czyżewski, A. Comparison of the effectiveness of automatic EEG signal class separation algorithms. J. Intel. Fuzzy Sys. 2019, 10, 1–7.

- Bashivan, P.; Rish, I.; Yeasin, M.; Codella, N. Learning Representations from EEG with Deep Recurrent-Convolutional Neural Networks. arXiv 2015, arXiv:1511.06448v3.

- Gonfalonieri, A. Deep Learning Algorithms and Brain–Computer Interfaces. Available online: https://towardsdatascience.com/deep-learning-algorithms-and-brain–computer-interfaces-7608d0a6f01 (accessed on 10 April 2020).

- Kurowski, A.; Mrozik, K.; Kostek, B.; Czyżewski, A. Method for Clustering of Brain Activity Data Derived from EEG Signals. Fundam. Inform. 2019, 168, 249–268.

- Schirrmeister, R.T.; Springenberg, J.T.; Fiederer, L.D.J.; Glasstetter, M.; Eggensperger, K.; Tangermann, M.; Hutter, F.; Burgard, W.; Ball, T. Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 2017, 38, 5391–5420.

- Kotsiantis, S.B.; Zaharakis, I.D.; Pintelas, P.E. Machine learning: A review of classification and combining techniques. Artif. Intell. Rev. 2006, 26, 159–190.

- Salzberg, S.L. On Comparing Classifiers: Pitfalls to Avoid and a Recommended Approach. Data Min. Knowl. Discov. 1997, 1, 317–328.

- Zheng, W.; Lu, B.L. Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Ment. Dev. 2015, 7, 162–175.

- Gu, X.; Cao, Z.; Jolfaei, A.; Xu, P.; Wu, D.; Jung, T.-P.; Lin, C.-T. EEG-based Brain–computer Interfaces (BCIs): A Survey of Recent Studies on Signal Sensing Technologies and Computational Intelligence Approaches and Their Applications. arXiv 2020, arXiv:2001.11337.

- Subha, D.P.; Joseph, P.K.; Acharya, U.R.; Min, L.C. EEG Signal Analysis: A Survey. J. Med. Syst. 2010, 34, 195–212.

- Zhang, Y.; Zhou, G.; Jin, J.; Zhao, Q.; Wang, X.; Cichocki, A. Sparse Bayesian Classification of EEG for Brain–Computer Interface. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 2256–2267.

- Jebelli, H.; Khalili, M.M.; Lee, S. Mobile EEG-based workers stress recognition by applying deep neural network. In Advances in Informatics and Computing in Civil and Construction Engineering; Springer: Berlin/Heidelberg, Germany, 2019; pp. 173–180.

- Moon, S.-E.; Jang, S.; Lee, J.-S. Convolutional neural network approach for EEG-based emotion recognition using brain connectivity and its spatial information. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 2556–2560.

- DEAP Dataset. Available online: https://www.eecs.qmul.ac.uk/mmv/datasets/deap/ (accessed on 11 April 2020).

- Song, T.; Zheng, W.; Song, P.; Cui, Z. EEG emotion recognition using dynamical graph convolutional neural networks. IEEE Trans. Affect. Comput. 2019, 1.

- SEED Dataset. BCMI Resources. Available online: http://bcmi.sjtu.edu.cn/resource.html (accessed on 11 April 2020).

- Attia, M.; Hettiarachchi, I.; Hossny, M.; Nahavandi, S. A time domain classification of steady-state visual evoked potentials using deep recurrent-convolutional neural networks. In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; pp. 766–769.

- Katsigiannis, S.; Ramzan, N. DREAMER: A Database for Emotion Recognition Through EEG and ECG Signals from Wireless Low-cost Off-the-Shelf Devices. IEEE J. Biomed. Heal. Inform. 2018, 22, 98–107.

- Mousavi, Z.; Rezaii, T.Y.; Sheykhivand, S.; Farzamnia, A.; Razavi, S. Deep convolutional neural network for classification of sleep stages from single-channel EEG signals. J. Neurosci. Methods 2019, 324, 108312.

- Moinnereau, M.-A.; Brienne, T.; Brodeur, S.; Rouat, J.; Whittingstall, K.; Plourde, E. Classification of auditory stimuli from EEG signals with a regulated recurrent neural network reservoir. arXiv 2018, arXiv:1804.10322.

- Spampinato, C.; Palazzo, S.; Kavasidis, I.; Giordano, D.; Souly, N.; Shah, M. Deep learning human mind for automated visual classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6809–6817.

- Dose, H.; Møller, J.S.; Iversen, H.K.; Puthusserypady, S. An end-to-end deep learning approach to MI-EEG signal classification for BCIs. Expert Syst. Appl. 2018, 114, 532–542.

- Talathi, S.S. Deep recurrent neural networks for seizure detection and early seizure detection systems. arXiv 2017, arXiv:1706.03283.

- Kannathal, N.; Choo, M.; Acharya, U.R.; Sadasivan, P. Entropies for detection of epilepsy in EEG. Comput. Methods Programs Biomed. 2005, 80, 187–194.

- Golmohammadi, M.; Ziyabari, S.; Shah, V.; Lopez de Diego, S.; Obeid, I.; Picone, J. Deep Architectures for Automated Seizure Detection in Scalp EEGs. arXiv 2017, arXiv:1712.09776.

- Harati, A.; Lopez, S.; Obeid, I.; Jacobson, M.; Tobochnik, S.; Picone, J. THE TUH EEG CORPUS: A Big Data Resource for Automated EEG Interpretation. In Proceedings of the IEEE Signal Processing in Medicine and Biology Symposium, Philadelphia, PA, USA, 13 December 2014.

- Ruffini, G.; Ibanez, D.; Castellano, M.; Dunne, S.; Soria-Frisch, A. EEG-driven RNN classification for prognosis of neurodegeneration in at-risk patients. In Proceedings of the International Conference on Artificial Neural Networks, Barcelona, Spain, 6–9 September 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 306–313.

- Morabito, F.C.; Campolo, M.; Ieracitano, C.; Ebadi, J.M.; Bonanno, L.; Bramanti, A.; Desalvo, S.; Mammone, N.; Bramanti, P. Deep convolutional neural networks for classification of mild cognitive impaired and Alzheimer’s disease patients from scalp EEG recordings. In Proceedings of the 2016 IEEE 2nd International Forum on Research and Technologies for Society and Industry Leveraging a better tomorrow (RTSI), Bologna, Italy, 7–9 September 2016; pp. 1–6.

- Acharya, U.R.; Oh, S.L.; Hagiwara, Y.; Tan, J.H.; Adeli, H.; Subha, D.P. Automated EEG-based screening of depression using deep convolutional neural network. Comput. Methods Programs Biomed. 2018, 161, 103–113.

- Sheikhani, A.; Behnam, H.; Mohammadi, M.R.; Noorozian, M. Analysis of EEG background activity in Autism disease patients with bispectrum and STFT measure. In Proceedings of the 11th WSEAS International Conference on Communications, Madrid, Spain, 22–23 August 2007; pp. 318–322.

- Jin, Z.; Zhou, G.; Gao, D.; Zhang, Y.L. EEG classification using sparse Bayesian extreme learning machine for brain–computer interface. Neural Comput. Appl. 2018, 1–9.

- ADNI Data and Samples. Available online: http://adni.loni.usc.edu/data-samples/access-data/ (accessed on 11 April 2020).

- AMIGOS Dataset. Available online: http://www.eecs.qmul.ac.uk/mmv/datasets/amigos/readme.html (accessed on 11 April 2020).

- BCI Competitions. Available online: http://www.bbci.de/competition/ (accessed on 11 April 2020).

- BCI2000 Wiki. Available online: https://www.bci2000.org/mediawiki/index.php/Main_Page (accessed on 11 April 2020).

- CHB-MIT Scalp EEG Database. Available online: http://archive.physionet.org/pn6/chbmit/ (accessed on 11 April 2020).

- EEG Resources. Available online: https://www.isip.piconepress.com/projects/tuh_eeg/ (accessed on 11 April 2020).

- MICCAI BraTS 2018 Data. Available online: http://www.med.upenn.edu/sbia/brats2018/data.html (accessed on 11 April 2020).

- Montreal Archive of Sleep Studies. Available online: http://massdb.herokuapp.com/en/ (accessed on 11 April 2020).

- OpenMIIR Dataset. Available online: https://owenlab.uwo.ca/research/the_openmiir_dataset.html (accessed on 11 April 2020).

- SHHS Polysomnography Database. Available online: http://archive.physionet.org/pn3/shhpsgdb/ (accessed on 11 April 2020).

- Szczuko, P.; Lech, M.; Czyżewski, A. Comparison of Methods for Real and Imaginary Motion Classification from EEG Signals. In Intelligent Methods and Big Data in Industrial Applications; Bembenik, R., Skonieczny, Ł., Protaziuk, G., Krzyszkiewicz, M., Rybinski, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2018; pp. 247–257.

- Szczuko, P.; Lech, M.; Czyżewski, A. Comparison of Classification Methods for EEG Signals of Real and Imaginary Motion. In Advances in Feature Selection for Data and Pattern Recognition; Stanczyk, U., Zielosko, B., Jain, L.C., Eds.; Springer: Berlin/Heidelberg, Germany, 2018; pp. 227–239.

- Emotiv EPOC+ Technical Specifications. Available online: https://emotiv.gitbook.io/epoc-user-manual/introduction-1/technical_specifications (accessed on 12 March 2020).

- Jasper, H.H. The Ten-Twenty Electrode System of the International Federation. Electroencephalogr. Clin. Neurophysiol. 1958, 10, 371–375.

- Nuwer, M.R.; Comi, G.; Emerson, R.; Fuglsang-Frederiksen, A.; Guérit, J.M.; Hinrichs, H.; Ikeda, A.; Luccas, F.J.; Rappelsburger, P. IFCN standards for digital recording of clinical EEG. Electroencephalogr. Clin. Neurophysiol. 1999, 106, 259–261.

- Gwizdka, J.; Hosseini, R.; Cole, M.; Wang, S. Temporal dynamics of eye-tracking and EEG during reading and relevance decisions. J. Assoc. Inf. Sci. Technol. 2017, 68.

- Joseph, P.; Kannathal, N.; Acharya, U.R. Complex Encephalogram Dynamics during Meditation. J. Chin. Clin. Med. 2007, 2, 220–230.

- Pizarro, J.; Guerrero, E.; Galindo, P.L. Multiple comparison procedures applied to model selection. Neurocomputing 2002, 48, 155–173.

- Oliphant, T.E. Python for scientific computing. Comput. Sci. Eng. 2007, 9, 10–20.

- Keras Documentation. Available online: https://keras.io/ (accessed on 12 March 2020).

- scikit-Learn Documentation. Available online: https://scikit-learn.org/stable/documentation.html (accessed on 12 March 2020).

- TensorFlow Guide. Available online: https://www.tensorflow.org/guide (accessed on 12 March 2020).

- Beyer, K.; Goldstein, J.; Ramakrishnan, R.; Shaft, U. When Is Nearest Neighbor Meaningful? In Proceedings of the 7th International Conference on Database Theory (ICDT), Jerusalem, Israel, 10–12 January 1999; pp. 217–235, ISBN 3-540-65452-6.

- Pestov, V. Is the k-NN classifier in high dimensions affected by the curse of dimensionality? arXiv 2012, arXiv:1110.4347.

- Galecki, A.; Burzykowski, T. Linear Mixed-Effects Models Using R: A Step-by-Step Approach. In Springer Texts in Statistics; Casella, G., Fienberg, S.E., Olkin, I., Eds.; Springer: Berlin/Heidelberg, Germany, 2013.

- Online Documentation for the Statsmodels Method Used for Calculation of MLM-Based Statistical Tests. Available online: https://www.statsmodels.org/devel/mixed_glm.html (accessed on 12 March 2020).

- Signorell, A.; Aho, K.; Alfons, A.; Anderegg, N.; Aragon, T.; Arppe, A. DescTools: Tools for Descriptive Statistics. R Package Version 0.99.34. 2020. Available online: https://cran.r-project.org/package=DescTools (accessed on 9 April 2020).

- Bengio, Y.; Glorot, X.; Bordes, A. Deep Sparse Rectifier Neural Networks. In Proceedings of the 14th International Conference on Artificial Intelligence and Statistics (AISTATS), Fort Lauderdale, FL, USA, 11–13 April 2011.

- Hinton, G.E.; Nair, V. Rectified Linear Units Improve Restricted Boltzmann Machines. In Proceedings of the 27th International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010; pp. 807–814.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV 2015), Santiago, Chile, 7–13 December 2015.

- Sutskever, I.; Martens, J.; Dahl, G.; Hinton, G. On the importance of initialization and momentum in deep learning. In Proceedings of the 30th International Conference on Machine Learning (ICML), Atlanta, GA, USA, 17–19 June 2013; pp. 1139–1147.

- Demšar, J. Statistical Comparisons of Classifiers over Multiple Data Sets. J. Mach. Learn. Res. 2006, 7, 1–30.

- Garcia, S.; Herrera, F. An Extension on “Statistical Comparisons of Classifiers over Multiple Data Sets” for all Pairwise Comparisons. J. Mach. Learn. Res. 2008, 9, 2677–2694.

- Haselsteiner, E.; Pfurtscheller, G. Using time-dependent neural networks for EEG classification. IEEE Trans. Rehabil. Eng. 2000, 8, 457–463.

- Ziyabari, S.; Shah, V.; Golmohammadi, M.; Obeid, I.; Picone, J. Objective evaluation metrics for automatic classification of EEG events. arXiv 2017, arXiv:1712.10107.

- Lu, H.; Wang, M.; Yu, H. EEG Model and Location in Brain when Enjoying Music. In Proceedings of the 27th Annual IEEE Engineering in Medicine and Biology Conference, Shanghai, China, 1–4 September 2005; pp. 2695–2698.

- Han, J.; Kamber, M.; Pei, J. Data Mining: Concepts and Techniques, Morgan Kaufmann; Elsevier: Amsterdam, The Netherlands, 2012.

| EEG-Related Task | Literature Source | Algorithm | Dataset | Classification Effectiveness |
|---|---|---|---|---|
| event-related potential | [46] | SVM, SWLDA, BLDA, SBL, SBLaplace | two experimental datasets | best approach: up to approximately 100% |
| fatigue | [7] | spatial-temporal convolutional neural network (ESTCNN) | experimental, local dataset | 97.3% |
| stress | [47] | DNN and deep CNN | experimental, local dataset | 86.62% |
| emotion | [48] | CNN | DEAP [49] | 99.72% |
| emotion | [50] | dynamical graph CNN (DGCNN) | SEED [51] | 90.4% |
| emotion | [52] | RNN with LSTM (Recurrent Neural Networks/Long Short-Term Memory) | SSVEP (steady-state visually evoked potentials) | 93.0% |
| temporal analysis | [50] | dynamical graph CNN (DGCNN) | DREAMER [53] | 86.23% |
| sleep disturbance detection | [54] | CNN (no feature extraction) | [54] | 93.55% to 98.10% depending on the number of classes |
| auditory stimulus classification | [55] | RNN | experimental, local dataset | 83.2% |
| automated visual object categorization | [56] | RNN, CNN-based regressor | experimental, local dataset | 83% |
| MI (Motor Imagery) EEG | [57] | CNN, transfer learning | [57] | two classes: 86.49%, three classes: 79.25%, four classes: 68.51% |
| epileptic seizure detection | [58] | Gated Recurrent Unit RNN | BUD [58] | 98% |
| epileptic seizure detection | [59] | Neuro-fuzzy | Local (EEG database, Bonn University) [59] | ~90% |
| epileptic seizure detection | [60] | CNNs/LSTM | TUH EEG Seizure Corpus [61]/Duke University Seizure Corpus | sensitivity: 0.3083; specificity: 0.9686 |
| REM Behavioral Disorder (RBD) | [62] | Echo State Networks (ESNs) | experimental, local dataset (118 subjects) | 85% |
| Alzheimer disease detection | [63] | multiple convolutional-subsampling | experimental, local dataset | 80% |
| depression screening | [64] | CNN | experimental, local dataset (patients with Mild Cognitive Impairment and healthy control group) | left hemisphere: 93.5%; right hemisphere: 96% |
| autism | [65] | bispectrum transform, ST Fourier Transform (STFT)/STFT at a bandwidth of total spectrum (STFT-BW) | experimental, local dataset (10 autism patients and 7 control subjects) | 82.4% |

| Feature Extraction Scheme | ||||||||

|---|---|---|---|---|---|---|---|---|

| k | ar16 | ar24 | dwt | dwt stat | welch16 | welch32 | welch64 | mean |

| 5 | 0.4742 | 0.4605 | 0.3529 | 0.4192 | 0.5999 | 0.6304 | 0.6052 | 0.5060 |

| 7 | 0.4891 | 0.4756 | 0.3559 | 0.4327 | 0.6084 | 0.6338 | 0.6145 | 0.5010 |

| 11 | 0.4941 | 0.4875 | 0.3535 | 0.4403 | 0.6163 | 0.6386 | 0.6245 | 0.5157 |

| 14 | 0.5030 | 0.4927 | 0.3533 | 0.4456 | 0.6141 | 0.6370 | 0.6358 | 0.5259 |

| 17 | 0.5066 | 0.4998 | 0.3563 | 0.4569 | 0.6129 | 0.6362 | 0.6322 | 0.5287 |

| mean | 0.4934 | 0.4832 | 0.3544 | 0.4389 | 0.6103 | 0.6352 | 0.6224 | |

| | Coeff. | Std. Err. | z | P > \|z\| | Left C.I. Boundary | Right C.I. Boundary |
|---|---|---|---|---|---|---|
| Intercept (welch32-based influence) | 0.635 | 0.012 | 53.682 | 0.000 | 0.612 | 0.658 |
| ar16 | −0.142 | 0.017 | −8.474 | 0.000 | −0.175 | −0.109 |
| ar24 | −0.152 | 0.017 | −9.082 | 0.000 | −0.185 | −0.119 |
| dwt | −0.281 | 0.017 | −16.782 | 0.000 | −0.314 | −0.248 |
| dwt_stat | −0.196 | 0.017 | −11.728 | 0.000 | −0.229 | −0.163 |
| welch16 | −0.025 | 0.017 | −1.487 | 0.137 | −0.058 | 0.008 |
| welch64 | −0.013 | 0.017 | −0.763 | 0.446 | −0.046 | 0.020 |
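
The z statistics and interval boundaries in the table above look consistent with a normal (Wald-type) approximation, in which z is the coefficient divided by its standard error and the interval is the coefficient plus or minus roughly 1.96 standard errors. The sketch below assumes that convention, which this section does not state explicitly; the helper name `wald_summary` and the 95% level are illustrative assumptions. Because the tabulated inputs are rounded to three decimals, recomputed values agree with the table only approximately.

```python
# Sketch: recompute z and a 95% Wald interval from rounded table entries.
# Assumption: intervals are coeff +/- z_crit * std_err (not stated in the source).
Z_95 = 1.959964  # two-sided 95% quantile of the standard normal distribution


def wald_summary(coeff, std_err, z_crit=Z_95):
    """Return (z, lower, upper) for a single regression coefficient."""
    z = coeff / std_err
    half_width = z_crit * std_err
    return z, coeff - half_width, coeff + half_width


# (coefficient, standard error) pairs copied from the table above.
rows = {
    "ar16": (-0.142, 0.017),
    "dwt": (-0.281, 0.017),
    "welch16": (-0.025, 0.017),
}

for name, (coeff, std_err) in rows.items():
    z, lower, upper = wald_summary(coeff, std_err)
    # An interval that contains 0 means the difference from the welch32
    # baseline is not significant at the assumed 5% level.
    differs = not (lower <= 0.0 <= upper)
    print(f"{name:8s} z={z:8.3f} CI=({lower:.3f}, {upper:.3f}) differs={differs}")
```

Under these assumptions, the interval for welch16 contains zero while the intervals for ar16 and dwt do not, which matches the pattern of p-values reported in the table.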

| Confusion Matrix for 11-NN welch32 | Meditation | Music Video | Logic Game | Confusion Matrix for 17-NN ar16 | Meditation | Music Video | Logic Game |
|---|---|---|---|---|---|---|---|
| meditation | 0.82 | 0.09 | 0.08 | meditation | 0.72 | 0.19 | 0.10 |
| music video | 0.11 | 0.47 | 0.42 | music video | 0.43 | 0.32 | 0.25 |
| logic game | 0.04 | 0.34 | 0.62 | logic game | 0.27 | 0.25 | 0.48 |

| Confusion Matrix for 17-NN dwt | Meditation | Music Video | Logic Game | Confusion Matrix for 17-NN dwt_stat | Meditation | Music Video | Logic Game |
|---|---|---|---|---|---|---|---|
| meditation | 0.09 | 0.26 | 0.64 | meditation | 0.73 | 0.14 | 0.13 |
| music video | 0.08 | 0.26 | 0.67 | music video | 0.43 | 0.29 | 0.28 |
| logic game | 0.05 | 0.23 | 0.72 | logic game | 0.38 | 0.28 | 0.35 |

| Scenario | Class | Precision | Recall | F1 |
|---|---|---|---|---|
| 11-NN welch32 | meditation | 0.8497 | 0.8234 | 0.8363 |
| | logic game | 0.5521 | 0.6215 | 0.5847 |
| | music video | 0.5204 | 0.4710 | 0.4944 |
| 17-NN ar16 | meditation | 0.5049 | 0.7177 | 0.5928 |
| | logic game | 0.5824 | 0.4807 | 0.5267 |
| | music video | 0.4270 | 0.3216 | 0.3669 |
| 17-NN dwt | meditation | 0.4274 | 0.0925 | 0.1521 |
| | logic game | 0.3545 | 0.7201 | 0.4751 |
| | music video | 0.3408 | 0.2563 | 0.2926 |
| 17-NN dwt stat | meditation | 0.4772 | 0.7334 | 0.5782 |
| | logic game | 0.4593 | 0.3476 | 0.3957 |
| | music video | 0.4101 | 0.2896 | 0.3395 |
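
The confusion matrices reported above appear to be row-normalized, since the diagonal of the 11-NN welch32 matrix matches the recall column of the metrics table. As a minimal sketch of how the two tables relate, the code below recovers recall directly from the diagonal and approximates precision and F1 from the column sums. This approximation assumes equally sized classes, which this section does not state; the function name `metrics_from_row_normalized` is hypothetical. The results agree with the tabulated values only up to the two-decimal rounding of the matrix.

```python
import numpy as np

# Row-normalized confusion matrix for 11-NN welch32, copied from above.
# Rows are true classes, columns are predicted classes, both in the order
# meditation, music video, logic game.
CLASSES = ["meditation", "music video", "logic game"]
cm = np.array([
    [0.82, 0.09, 0.08],
    [0.11, 0.47, 0.42],
    [0.04, 0.34, 0.62],
])


def metrics_from_row_normalized(matrix):
    """Per-class precision, recall and F1, assuming balanced classes."""
    diagonal = np.diag(matrix)
    recall = diagonal                         # rows already sum to about 1
    precision = diagonal / matrix.sum(axis=0)  # exact only for equal class sizes
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


precision, recall, f1 = metrics_from_row_normalized(cm)
for name, p, r, f in zip(CLASSES, precision, recall, f1):
    print(f"{name:12s} precision={p:.3f} recall={r:.3f} F1={f:.3f}")
```

The matrix also makes the main failure mode visible: most errors are confusions between the music video and logic game classes, while meditation is separated comparatively well.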

| Scenario | Class | Precision | Recall | F1 |
|---|---|---|---|---|
| 11-NN welch32 | meditation | 0.8621 | 0.8300 | 0.8458 |
| | logic game | 0.5794 | 0.5621 | 0.5706 |
| | music video | 0.5018 | 0.5354 | 0.5180 |
| 17-NN dwt | meditation | 0.4402 | 0.0957 | 0.1572 |
| | logic game | 0.3587 | 0.6098 | 0.4517 |
| | music video | 0.3370 | 0.3649 | 0.3504 |

| Feature Extraction Scheme | ||||||||

|---|---|---|---|---|---|---|---|---|

| C | ar16 | ar24 | dwt | dwt stat | welch16 | welch32 | welch64 | mean |

| 0.01 | 0.5072 | 0.5397 | 0.3353 | 0.5149 | 0.5653 | 0.6122 | 0.6290 | 0.5291 |

| 0.1 | 0.5083 | 0.5397 | 0.3351 | 0.5129 | 0.6070 | 0.6378 | 0.6528 | 0.5491 |

| 1 | 0.5085 | 0.5393 | 0.3287 | 0.5131 | 0.6249 | 0.6671 | 0.6612 | 0.5490 |

| 10 | 0.5085 | 0.5395 | - | 0.5145 | 0.6550 | 0.6628 | 0.6598 | 0.5900 |

| 100 | 0.5085 | 0.5397 | - | - | 0.6548 | 0.6638 | 0.6548 | 0.6043 |

| mean | 0.5082 | 0.5396 | 0.3330 | 0.5138 | 0.6214 | 0.6487 | 0.6515 | |

| | Coeff. | Std. Err. | z | P > \|z\| | Left C.I. Boundary | Right C.I. Boundary |
|---|---|---|---|---|---|---|
| Intercept (welch64-based influence) | 0.652 | 0.012 | 53.764 | 0.000 | 0.628 | 0.675 |
| ar16 | −0.143 | 0.017 | −8.291 | 0.000 | −0.177 | −0.109 |
| ar24 | −0.112 | 0.019 | −5.965 | 0.000 | −0.149 | −0.075 |
| dwt | −0.318 | 0.022 | −14.386 | 0.000 | −0.362 | −0.275 |
| dwt_stat | −0.138 | 0.018 | −7.680 | 0.000 | −0.173 | −0.103 |
| welch16 | −0.030 | 0.026 | −1.144 | 0.253 | −0.082 | 0.021 |
| welch32 | −0.003 | 0.024 | −0.118 | 0.906 | −0.049 | 0.043 |

| Feature Extraction Scheme | ||

|---|---|---|

| C | dwt | welch32 |

| 0.01 | 0.3330 | - |

| 1 | - | 0.6595 |

| | Meditation | Music Video | Logic Game | | Meditation | Music Video | Logic Game |
|---|---|---|---|---|---|---|---|
| meditation | 0.85 | 0.12 | 0.03 | meditation | 0.73 | 0.15 | 0.12 |
| music video | 0.13 | 0.50 | 0.37 | music video | 0.40 | 0.28 | 0.31 |
| logic game | 0.04 | 0.31 | 0.66 | logic game | 0.27 | 0.22 | 0.51 |

| | Meditation | Music Video | Logic Game | | Meditation | Music Video | Logic Game |
|---|---|---|---|---|---|---|---|
| meditation | 0.30 | 0.35 | 0.35 | meditation | 0.68 | 0.20 | 0.13 |
| music video | 0.30 | 0.35 | 0.35 | music video | 0.25 | 0.37 | 0.38 |
| logic game | 0.31 | 0.34 | 0.35 | logic game | 0.18 | 0.32 | 0.50 |

| Variant | Class | Precision | Recall | F1 |
|---|---|---|---|---|
| welch32 C = 1 | meditation | 0.8369 | 0.8501 | 0.8434 |
| | logic game | 0.6179 | 0.6560 | 0.6364 |
| | music video | 0.5367 | 0.4952 | 0.5151 |
| ar16 C = 1 | meditation | 0.5209 | 0.7310 | 0.6083 |
| | logic game | 0.5400 | 0.5103 | 0.5247 |
| | music video | 0.4360 | 0.2842 | 0.3441 |
| dwt C = 0.01 | meditation | 0.3324 | 0.3017 | 0.3163 |
| | logic game | 0.3345 | 0.3525 | 0.3432 |
| | music video | 0.3388 | 0.3519 | 0.3452 |
| dwt stat C = 0.01 | meditation | 0.6134 | 0.6753 | 0.6429 |
| | logic game | 0.4946 | 0.5000 | 0.4973 |
| | music video | 0.4159 | 0.3694 | 0.3913 |

| Variant | Class | Precision | Recall | F1 |
|---|---|---|---|---|
| welch32 C = 1 | meditation | 0.8472 | 0.8594 | 0.8533 |
| | logic game | 0.6052 | 0.6246 | 0.6147 |
| | music video | 0.5187 | 0.4946 | 0.5063 |
| dwt C = 0.01 | meditation | 0.3288 | 0.3134 | 0.3209 |
| | logic game | 0.3344 | 0.3394 | 0.3369 |
| | music video | 0.3356 | 0.3463 | 0.3408 |

| Feature Extraction Scheme | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| C | γ | ar16 | ar24 | dwt | dwt stat | welch16 | welch32 | welch64 | mean |
| 0.01 | 0.1 | 0.3769 | 0.3392 | 0.4097 | 0.3976 | 0.5651 | 0.6133 | 0.6231 | 0.4750 |
| | 1 | 0.3333 | 0.3333 | 0.3414 | 0.3523 | 0.5689 | 0.6193 | 0.6332 | 0.4545 |
| | 10 | 0.4252 | 0.4180 | 0.3557 | 0.3333 | 0.6072 | 0.6378 | 0.6380 | 0.4879 |
| 0.1 | 0.1 | 0.4821 | 0.3734 | 0.4097 | 0.3976 | 0.5651 | 0.6169 | 0.6310 | 0.4965 |
| | 1 | 0.3333 | 0.3333 | 0.3392 | 0.3529 | 0.6161 | 0.6453 | 0.6578 | 0.4683 |
| | 10 | 0.4252 | 0.4178 | 0.3557 | 0.3333 | 0.6334 | 0.6683 | 0.6713 | 0.5007 |
| 1 | 0.1 | 0.5286 | 0.5107 | 0.4222 | 0.3333 | 0.6157 | 0.6455 | 0.6604 | 0.5309 |
| | 1 | 0.3597 | 0.3333 | 0.4319 | 0.3535 | 0.6338 | 0.6655 | 0.6709 | 0.4927 |
| | 10 | 0.3333 | 0.4178 | 0.3557 | 0.3333 | 0.6578 | 0.6846 | 0.6866 | 0.4956 |
| 10 | 0.1 | 0.4998 | 0.5070 | - | 0.3535 | 0.6334 | 0.6650 | 0.6626 | 0.5535 |
| | 1 | 0.3636 | 0.3366 | - | 0.3333 | 0.6578 | 0.6681 | 0.6765 | 0.5060 |
| | 10 | 0.3333 | 0.4210 | - | - | 0.6632 | 0.6933 | 0.6644 | 0.5550 |
| 100 | 0.1 | 0.5000 | - | - | 0.3327 | 0.6133 | 0.6632 | 0.6626 | 0.5544 |
| | 1 | 0.3636 | - | - | 0.3535 | 0.6548 | 0.6820 | 0.6725 | 0.5453 |
| | 10 | 0.3333 | - | - | 0.3333 | 0.6683 | 0.6701 | 0.6606 | 0.5331 |
| mean | | 0.3994 | 0.3951 | 0.3801 | 0.3495 | 0.6236 | 0.6559 | 0.6581 | |

| | Coeff. | Std. Err. | z | P > \|z\| | Left C.I. Boundary | Right C.I. Boundary |
|---|---|---|---|---|---|---|
| Intercept (welch64-based influence) | 0.658 | 0.042 | 15.639 | 0.000 | 0.576 | 0.741 |
| ar16 | −0.259 | 0.051 | −5.049 | 0.000 | −0.359 | −0.158 |
| ar24 | −0.263 | 0.053 | −4.977 | 0.000 | −0.367 | −0.159 |
| dwt | −0.278 | 0.062 | −4.480 | 0.000 | −0.400 | −0.156 |
| dwt_stat | −0.309 | 0.062 | −4.946 | 0.000 | −0.431 | −0.186 |
| welch16 | −0.035 | 0.064 | −0.542 | 0.588 | −0.159 | 0.090 |
| welch32 | −0.002 | 0.063 | −0.035 | 0.972 | −0.125 | 0.121 |

| Feature Extraction Scheme | |||

|---|---|---|---|

| C | γ | dwt_stat | welch32 |

| 0.01 | 0.1 | 0.3229 | - |

| 10 | 10 | - | 0.6905 |

| | Meditation | Music Video | Logic Game | | Meditation | Music Video | Logic Game |
|---|---|---|---|---|---|---|---|
| meditation | 0.86 | 0.11 | 0.03 | meditation | 0.62 | 0.24 | 0.14 |
| music video | 0.10 | 0.53 | 0.37 | music video | 0.28 | 0.37 | 0.35 |
| logic game | 0.01 | 0.30 | 0.69 | logic game | 0.18 | 0.22 | 0.59 |

| | Meditation | Music Video | Logic Game | | Meditation | Music Video | Logic Game |
|---|---|---|---|---|---|---|---|
| meditation | 0.70 | 0.16 | 0.14 | meditation | 0.52 | 0.26 | 0.22 |
| music video | 0.51 | 0.23 | 0.26 | music video | 0.37 | 0.34 | 0.29 |
| logic game | 0.40 | 0.23 | 0.37 | logic game | 0.35 | 0.32 | 0.33 |

| Variant | Class | Precision | Recall | F1 |
|---|---|---|---|---|
| welch32, C = 10, γ = 10 | meditation | 0.8876 | 0.8597 | 0.8735 |
| | logic game | 0.6287 | 0.6898 | 0.6578 |
| | music video | 0.5676 | 0.5302 | 0.5483 |
| ar16, C = 1, γ = 0.1 | meditation | 0.5716 | 0.6203 | 0.5950 |
| | logic game | 0.5496 | 0.5931 | 0.5705 |
| | music video | 0.4457 | 0.3724 | 0.4058 |
| dwt, C = 1, γ = 1 | meditation | 0.4344 | 0.6983 | 0.5356 |
| | logic game | 0.4791 | 0.3664 | 0.4152 |
| | music video | 0.3680 | 0.2310 | 0.2838 |
| dwt_stat, C = 0.01, γ = 0.1 | meditation | 0.4178 | 0.5193 | 0.4631 |
| | logic game | 0.3997 | 0.3337 | 0.3638 |
| | music video | 0.3685 | 0.3398 | 0.3536 |

| Variant | Class | Precision | Recall | F1 |
|---|---|---|---|---|
| welch32, C = 10, γ = 10 | meditation | 0.8848 | 0.8722 | 0.8785 |
| | logic game | 0.6269 | 0.6665 | 0.6461 |
| | music video | 0.5601 | 0.5326 | 0.5460 |
| dwt_stat, C = 0.01, γ = 0.1 | meditation | 0.3271 | 0.3925 | 0.3568 |
| | logic game | 0.3211 | 0.3854 | 0.3503 |
| | music video | 0.3182 | 0.1909 | 0.2387 |

| ar16 | ar24 | dwt | dwt stat | welch16 | welch32 | welch64 |

|---|---|---|---|---|---|---|

| 0.5149 | 0.5296 | 0.3428 | 0.4911 | 0.6705 | 0.7031 | 0.6894 |

| 0.5191 | 0.5339 | 0.3313 | 0.4986 | 0.6721 | 0.7048 | 0.6961 |

| 0.5131 | 0.5266 | 0.3386 | 0.4962 | 0.6713 | 0.7046 | 0.6941 |

| 0.5163 | 0.5240 | 0.3400 | 0.4847 | 0.6763 | 0.6963 | 0.6913 |

| 0.5266 | 0.5347 | 0.3424 | 0.4974 | 0.6653 | 0.7058 | 0.6894 |

| 0.5208 | 0.5341 | 0.3424 | 0.4736 | 0.6755 | 0.7003 | 0.6955 |

| 0.5155 | 0.5236 | 0.3434 | 0.4942 | 0.6717 | 0.6997 | 0.6904 |

| 0.5169 | 0.5353 | 0.3428 | 0.4923 | 0.6626 | 0.7035 | 0.6870 |
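
As a minimal sketch of how the accuracies above can be summarized, the code below computes the mean and sample standard deviation for each feature extraction scheme. It treats every row as one independent result. What distinguishes the rows is not stated in this section, so that reading is an assumption, as is the variable name `runs`.

```python
import numpy as np

SCHEMES = ["ar16", "ar24", "dwt", "dwt stat", "welch16", "welch32", "welch64"]

# Accuracies copied from the table above; one row per reported result.
runs = np.array([
    [0.5149, 0.5296, 0.3428, 0.4911, 0.6705, 0.7031, 0.6894],
    [0.5191, 0.5339, 0.3313, 0.4986, 0.6721, 0.7048, 0.6961],
    [0.5131, 0.5266, 0.3386, 0.4962, 0.6713, 0.7046, 0.6941],
    [0.5163, 0.5240, 0.3400, 0.4847, 0.6763, 0.6963, 0.6913],
    [0.5266, 0.5347, 0.3424, 0.4974, 0.6653, 0.7058, 0.6894],
    [0.5208, 0.5341, 0.3424, 0.4736, 0.6755, 0.7003, 0.6955],
    [0.5155, 0.5236, 0.3434, 0.4942, 0.6717, 0.6997, 0.6904],
    [0.5169, 0.5353, 0.3428, 0.4923, 0.6626, 0.7035, 0.6870],
])

means = runs.mean(axis=0)
stds = runs.std(axis=0, ddof=1)  # sample standard deviation across rows

for name, mean, std in zip(SCHEMES, means, stds):
    print(f"{name:9s} mean={mean:.4f} std={std:.4f}")

best = SCHEMES[int(np.argmax(means))]
worst = SCHEMES[int(np.argmin(means))]
print(f"highest mean accuracy: {best}; lowest: {worst}")
```

The spread within each column is small compared with the gaps between the Welch-based schemes and the remaining ones, so the ranking of schemes does not depend on which row is inspected.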

| | ar16 | ar24 | dwt | welch16 | welch32 | welch64 | dwt_stat |
|---|---|---|---|---|---|---|---|
| ar16 | | 0.307 | 0.025 | 0.031 | <10⁻³ | 0.001 | 0.255 |
| ar24 | 0.307 | | 0.001 | 0.255 | <10⁻³ | 0.025 | 0.0301 |
| dwt | 0.025 | 0.001 | | <10⁻³ | <10⁻³ | <10⁻³ | 0.272 |
| welch16 | 0.031 | 0.255 | <10⁻³ | | 0.028 | 0.272 | <10⁻³ |
| welch32 | <10⁻³ | <10⁻³ | <10⁻³ | 0.028 | | 0.272 | <10⁻³ |
| welch64 | 0.001 | 0.025 | <10⁻³ | 0.272 | 0.272 | | <10⁻³ |
| dwt_stat | 0.255 | 0.031 | 0.272 | <10⁻³ | <10⁻³ | <10⁻³ | |

| Hidden Layers | Activation Function | SGD Parameters | Patience | Max Epochs | Accuracy |
|---|---|---|---|---|---|
| 3 | LReLU (a = 0.2) | lr = 0.01, decay = 10⁻⁶, momentum = 0.9 | 50 | 2000 | 0.7477 |
| 4 | tanh + LReLU (a = 0.2) | lr = 0.005, decay = 10⁻⁶, momentum = 0.9 | 250 | 3000 | 0.7469 |
| 6 | ReLU | lr = 0.01, decay = 10⁻⁶, momentum = 0.9 | 70 | 2000 | 0.7467 |
| 3 | tanh | lr = 0.01, decay = 10⁻⁶, momentum = 0.9 | 250 | 3000 | 0.7446 |
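
To make the hyperparameters in the table above more concrete, the following sketch illustrates three of them in plain NumPy: the leaky ReLU activation with slope a = 0.2, a decayed learning rate, and early stopping governed by a patience value. It is not the authors' training code. The time-based decay formula is an assumption, since the table only gives a decay value, and all function names are hypothetical.

```python
import numpy as np


def leaky_relu(x, a=0.2):
    """LReLU: identity for non-negative inputs, slope `a` for negative ones."""
    x = np.asarray(x, dtype=float)
    return np.where(x >= 0, x, a * x)


def decayed_lr(lr0, decay, step):
    """Learning rate after `step` updates.

    Assumed rule: lr0 / (1 + decay * step). The source lists only the
    decay value, not the schedule that uses it.
    """
    return lr0 / (1.0 + decay * step)


def stopping_epoch(val_losses, patience, max_epochs):
    """Return the 1-based epoch at which early stopping ends training.

    Training stops once the validation loss has failed to improve for
    `patience` consecutive epochs, or when `max_epochs` is reached.
    """
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best:
            best, waited = loss, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return min(len(val_losses), max_epochs)


print(leaky_relu([-1.0, 0.0, 2.0]))
print(decayed_lr(0.01, 1e-6, step=100_000))
print(stopping_epoch([1.0, 0.9, 0.95, 0.96, 0.97], patience=2, max_epochs=2000))
```

With a decay as small as 10⁻⁶, the assumed schedule changes the learning rate only slowly, so in this reading the patience and maximum epoch settings would mostly determine how long each configuration trains.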

| Training/Validation/Test | Meditation | Music Video | Logic Game | 10-Fold Cross-Validation | Meditation | Music Video | Logic Game |

|---|---|---|---|---|---|---|---|

| meditation | 0.87 | 0.11 | 0.02 | meditation | 0.90 | 0.08 | 0.02 |

| music video | 0.06 | 0.69 | 0.26 | music video | 0.07 | 0.63 | 0.29 |

| logic game | 0.02 | 0.30 | 0.68 | logic game | 0.02 | 0.29 | 0.69 |

| Class | Precision | Recall | F1 Score |

|---|---|---|---|

| meditation | 0.9203 | 0.8724 | 0.8957 |

| logic game | 0.7116 | 0.6832 | 0.6971 |

| music video | 0.6296 | 0.6874 | 0.6572 |

| Class | Precision | Recall | F1 Score |

|---|---|---|---|

| meditation | 0.9079 | 0.8973 | 0.9026 |

| logic game | 0.6840 | 0.6933 | 0.6886 |

| music video | 0.6343 | 0.6332 | 0.6337 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Browarczyk, J.; Kurowski, A.; Kostek, B. Analyzing the Effectiveness of the Brain–Computer Interface for Task Discerning Based on Machine Learning. Sensors 2020, 20, 2403. https://doi.org/10.3390/s20082403
