1. Introduction

As technology becomes more ubiquitous and pervasive, people interact with an increasing number of devices, such as the range of intelligent appliances integrated into their home or office space. The widespread development of such Intelligent Systems (IS) used by individuals requires not only intelligent methods for problem solving but, more importantly, intelligent methods for adapting these systems. Furthermore, there is a persistent need to design user interfaces that are not only functional, but also accessible and user-friendly. The concept of natural user interfaces was proposed over two decades ago to describe interfaces that learn how the user engages with them and adapt to the user’s needs. Because people tend to anthropomorphize everything that they interact with, e.g., “we verbally praise them when they do a good job for us or blame them when they refuse to perform as we had wished” [1], there is a need to incorporate information regarding user emotions into the adaptation, including the adaptation of the interface. This is particularly important in mobile and ambient systems, because they assist us during many activities. In general, the incorporation of emotion processing into intelligent systems can make them more natural and humanized in operation and interaction [2]. The development of such systems lies in the area of affective computing (AfC), an interdisciplinary field of study that provides a general framework for the development of methods, models, and tools related to the processing and use of data on human emotions in computer systems [3,4].

In our work, we aim to develop practical technology for everyday use based on mobile and wearable devices. This assumption led to the choice of context-aware systems (CAS) as the basis. Context is understood here as “any information that can be used to characterize the situation of a subject” [5], e.g., the current stream of Facebook posts, geospatial location, movement speed, or calendar events. Most modern CAS solutions are developed for mobile and wearable devices (e.g., smartphones or Internet of Things devices), where the use of many independent sensors is facilitated. These include various types of biomedical devices that can collect emotion-related physiological signals, like wristbands, and external DIY hardware platforms, such as Arduino, Raspberry Pi, or BITalino (for a comparison of mobile electrocardiogram (ECG) and galvanic skin response (GSR) sensors, see [6]). The contextual data in mobile CAS are usually fused from multiple sources, like mobile device sensors (such as GPS), third-party services (e.g., weather forecasts), and the users themselves (via questionnaires or a feedback loop). In such a setting, the system needs to be able to cope with heterogeneous, vague, or missing data. We previously defined four requirements for building such a system [7].

We have developed a framework that allows us to meet the described requirements. Furthermore, we extended this framework to support affect-aware systems, leading to the creation of a general architecture of a mobile platform for emotion recognition and processing that we call the “Affective Computing with Context Awareness for Ambient Intelligence (AfCAI)” framework (for a summary of the history of our approach, see [8,9]). In our framework, we follow the James–Lange non-cognitive view of emotions, in which they are understood as perceptions of bodily changes [10]. This theory was extended by Prinz [11] with a second element, specifically a relationship between the subject and the environment. From the point of view of CAS, we can treat this information as context. For example, in a specific situation, a faster heart rate (a bodily change) and the perception of a danger, e.g., a predator (a context), build up fear. The importance of context in predicting emotions must be emphasized, e.g., the same smile may appear in many different situations and does not always mean joy. We restrict ourselves to the analysis of a limited set of bodily signals regarding heart activity and skin conductance, as we aim to use cheap and affordable technology. In the general framework, these bodily changes can be complemented by any context. In addition, the current affective state can be considered a part of the context for the whole system.

The incorporation of affective information into IS can take various forms. On the one hand, there are studies in which models are trained on the whole data set and general associations are examined, e.g., “what are the differences in the ECG between low and high emotion intensity in the whole collected data sample?”. An attempt to draw inferences about the entire population in the study takes the form of a “classical” psychological methodology used, e.g., in personality science. However, modern affective technology is developing in the opposite direction. Researching how people, in general, react to a given stimulus is not the main focus of this paper. Instead, in our approach, we emphasize the need for the personalization of affective systems in order to meet the expectations and needs of individual users (for a review of current trends at the intersection of personality science and personalized systems, see [2]). Therefore, the computational models of emotions need to be adaptable in order to reflect individual differences.

Deciding on an affective adaptation mechanism requires a choice between a generic solution, which is easier to prepare, but less accurate from the user’s point of view, and a fully personalized model that is difficult to implement due to many issues, such as the need to collect large amounts of data and develop appropriate individual theories of mind. As a compromise between these two extremes, we propose using personality assessment as an aggregation mechanism, which allows for differentiating the behavior of the system, depending on different types of personality. Our hypothesis is that this psychologically-grounded mechanism will divide users into groups that differ in their reactions to complex emotional stimuli, which will allow for personality-based affective adaptation.

We use two kinds of proof-of-concept demonstrations of our approach in order to verify our assumptions. Firstly, we conducted “classical” experiments, in which we presented stimuli to the subjects and collected their responses, both with questionnaires and through the measurement of physiological signals. Secondly, as a testbed, we developed simple computer games as a specific context, since they provide a controllable environment in which experiments can be easily carried out. Within this context, it is easy to manipulate incoming stimuli and collect information about the subject’s behaviors.

The rest of the paper is organized as follows. We begin with a short methodological introduction to selected methods for measuring emotions and their links with personality in Section 2. Subsequently, in Section 3, we discuss our experimental setup. The analysis of results starts with the validation of our own widgets for the subjective assessment of emotions in Section 4. We then move on to the analysis of relationships between personality and affective content: simple audio-visual stimuli are discussed in Section 5, while complex environments (games) are addressed in Section 6. Section 7 concludes the paper.

3. Materials and Methods

We conducted an experiment consisting of two main parts in order to verify our hypothesis about the usefulness of personality profiles as a grouping mechanism for the effective adaptation of intelligent systems (see Section 3.1). In order to address the shortcomings of existing datasets (see Section 2.1), the presented study was carried out on more than 200 subjects to provide a bigger dataset to the AfC community. Equally important, our dataset combines emotion-related data with contextual information. Finally, in our opinion, the presentation of longer stimuli, such as the movie clips that were used in two personality-related datasets (51–150 s in ASCERTAIN, 51–128 s in AMIGOS; see Section 2.2), makes later analysis difficult, as emotions may change many times during such an interval. Therefore, in our study, we do not merely combine psychophysiological signals with information about the complex stimuli presented to the subjects. Our final dataset contains the results of the experimental phase with short stimuli, which can be used, e.g., for system calibration. This is complemented by logs from games (complex stimuli), which can be divided into detailed series of events, making their analysis simpler than that of movie clips.

The data that were collected in the experiment have been processed, i.e., the physiological signals have been filtered, the images have been analyzed using MS API to recognize facial emotions, and the “Big Five” factors were calculated. The final version of the collected dataset, called BIRAFFE: Bio-Reactions and Faces for Emotion-based Personalization, is publicly available at Zenodo under the CC BY-NC-ND 4.0 license (http://doi.org/10.5281/zenodo.3442143) [41]. In the remainder of this section, key elements of the study design are outlined. For a detailed technical description of the dataset itself, see [42].

3.1. Study Design

The study was carried out on 206 participants (31% female) aged between 19 and 33 (M = 22.02, SD = 1.96; the statistics were calculated for 183 subjects for whom information about age and sex is included in the final dataset). Information regarding recruitment was made available to students of the Artificial Intelligence Basics course at AGH University of Science and Technology, Kraków, Poland. Participation was not an obligatory part of the course, although one could get bonus points for participating personally or for inviting friends.

In one part of the study, simple sound and visual stimuli from standardized affective stimulus databases were presented (see Section 3.3). Subjects evaluated the emotions evoked by them using our two proof-of-concept widgets (see Section 3.4). In the second part of the experiment, the subjects played two affective games that exposed them to complex emotional stimuli (see Section 3.5). During the whole experiment, the ECG and GSR signals were collected with the BITalino (r)evolution kit platform (https://bitalino.com) and photos were taken with the Creative Live! Cam Sync HD 720p camera. The whole experiment was controlled with the Sony PlayStation DualShock 4 gamepad. In addition to the computer-based part, to measure the “Big Five” personality traits, the subjects filled in the paper-and-pen Polish adaptation [43] of the NEO Five Factor Inventory (NEO-FFI) [29].

The study was carried out in a designated room at the university. During the experiment, there were three people present in the room: the researcher and two participants. The subjects sat in front of computer stands that were arranged at opposite walls, i.e., they sat with their backs turned to each other. The instructions and explanations were presented to both subjects at the same time. During the procedure, the researcher sat at a separate desk with his or her back to the subjects in order to limit the Hawthorne effect.

3.2. Ethics Statement

The Research Ethics Committee of the Faculty of Philosophy of the Jagiellonian University reviewed the described study and it received a favourable opinion. Informed written consent was obtained from all of the participants.

3.3. Stimuli Selection

Standardized emotionally-evocative images and sounds from the IAPS [44] and IADS [45] sets were used as stimuli. Both data sets are provided together with information on the emotional characteristics of each stimulus, expressed as coordinates in the Valence–Arousal space.

The analysis of the valence and arousal scores of the IADS sounds led us to the observation that there is a clear trend in this set: the arousal increases as the valence of a sound becomes more extreme (positive or negative). Figure 1 depicts this observation. In the IAPS set, the Valence–Arousal space is better covered and we do not observe any trends. This is probably because the IAPS collection is much larger (167 sounds in IADS, 1194 pictures in IAPS).

For the purpose of the experiment, we divided the stimuli into three groups according to their arousal and valence index: + (positive valence and high arousal), 0 (neutral valence and medium arousal), and – (negative valence and high arousal). Afterwards, sounds and pictures were paired in two ways. The first condition involved consistent types of pairs: a + picture was paired with a + sound (p+s+), a 0 picture was paired with a 0 sound (p0s0), and a – picture was paired with a – sound (p–s–). The second condition was inconsistent and was composed of two types: a + picture matched with a – sound (p+s–) and a – picture matched with a + sound (p–s+).

Because we aimed to present an equal number of pairs in each condition, and because the conditions contain different numbers of pair types (three in the consistent condition, two in the inconsistent one), the numbers of pairs were as follows: 20 for each type in the consistent condition and 30 for each type in the inconsistent condition, resulting in 120 stimuli for the whole experiment.
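The arithmetic behind this design can be checked with a short sketch. It assumes that each condition receives the same share of 60 pairs (a value implied by, but not stated in, the text), uses the label p0s0 for the neutral pair type as an assumption, and writes the minus sign as an ASCII hyphen.

```python
# Pair types: p = picture, s = sound; "+", "0", "-" = stimulus group.
# "p0s0" is an assumed label for the neutral picture / neutral sound pair.
CONSISTENT = ["p+s+", "p0s0", "p-s-"]
INCONSISTENT = ["p+s-", "p-s+"]

# Assumption: both conditions get the same number of pairs (60 each).
PAIRS_PER_CONDITION = 60


def pairs_per_type(types, per_condition=PAIRS_PER_CONDITION):
    """Split the pairs of one condition evenly across its pair types."""
    if per_condition % len(types) != 0:
        raise ValueError("pairs cannot be split evenly across pair types")
    return {pair_type: per_condition // len(types) for pair_type in types}


design = {**pairs_per_type(CONSISTENT), **pairs_per_type(INCONSISTENT)}
total = sum(design.values())

for pair_type, count in design.items():
    print(f"{pair_type}: {count}")
print("total:", total)
```

With three consistent types and two inconsistent types, an equal split per condition gives 20 and 30 pairs per type, respectively.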

3.4. Emotion Evaluation Widgets

The most widespread method of emotional assessment is the Self-Assessment Manikin (SAM) [22], which evaluates the human emotional response to stimuli on three separate dimensions: arousal, valence, and dominance. Yet, it was reported [46,47] that, nowadays, SAM pictures lack clarity, especially due to technological development. More fluent emotional assessment tools are better understood by users and can more efficiently capture nuances of rating [47]. At the same time, modern affective rating tools that were developed for the purpose of continuous reaction assessment [48,49,50] did not meet the needs of our paradigm.

In order to maximize the amount of information that we could obtain and to fit within the time constraints assumed in the experiment (a nine-second rating window), we decided to develop our own assessment tools. We also decided to exclude the dominance dimension present in SAM, as it was reported to be the hardest to comprehend [51]. Finally, we proposed two widgets: “Valence-arousal faces” and “5-faces”. Both were controlled with the left joystick of the gamepad that was used by the participants in the experiment.

3.4.1. Valence-Arousal Faces Widget

This widget is a composition of two state-of-the-art methods for emotion rating: the Valence–Arousal space [52] and AffectButton [53]. The former makes it possible to select a point in a two-dimensional space, where one dimension ranges from negative to positive (valence) and the other from low to high arousal. These dimensions are abstract and difficult to use, as participants in our previous experiments indicated (see, e.g., [54]). The latter, AffectButton, makes it easier to understand and reason about the options provided, since it uses emoticons as points of reference. That being said, its manipulation is not intuitive, and long training is required in order to know how to navigate towards the selected emotion.

In our valence-arousal faces widget, there is a simple two-dimensional space with ratings translated to the [−1, 1] range, but, as a hint, we also placed eight emoticons from the AffectButton. These emoticons are placed at the most characteristic points of the space, i.e., −1 (the lowest score), 0 (neutral score), and 1 (the highest score). What is more, we used a simplified EmojiButton in response to the overly complex emoticons in the original AffectButton [55]. Figure 2 presents the final widget. It should be noted that our widget has both axis names and emoticons, which makes it different from EmojiGrid, where the authors replaced text with emoticons [47].

3.4.2. 5-Faces Widget

This widget was introduced in order to provide a simple emotion evaluation tool. It consists of five emoticons (see Figure 3) that reflect the trend that was observed in IADS, i.e., the arousal is higher when the valence is more extreme (as depicted in Figure 1). Our intuition is that such a simple widget can give us enough information to address the hypotheses, while being easier for the participants to use.

3.5. Games

Two affective games were used in the study. They were both designed and developed by our team. Both are fully controllable by a gamepad.

3.5.1. Affective SpaceShooter 2

The game is a variation of the classic Asteroids game, in which the player controls a spaceship and has to shoot down or avoid floating obstacles. In the version used in the experiment, as in our previous prototype [9,56], the player’s ship is always at the bottom of the screen, and asteroids come from the top. The game uses two types of asteroids: grey (neutral) and colored (affective). Shooting down the latter causes the presentation of sound and visual stimuli (see Figure 4), according to the random assignment of each color to one of the conditions: p+s+, p–s+, p+s–, p–s– (as described in Section 3.3). In the second half of the game, the stimuli are presented randomly (regardless of the color of the asteroid) in order to check the player’s reaction to the Inconsistent Reality Logic design pattern.

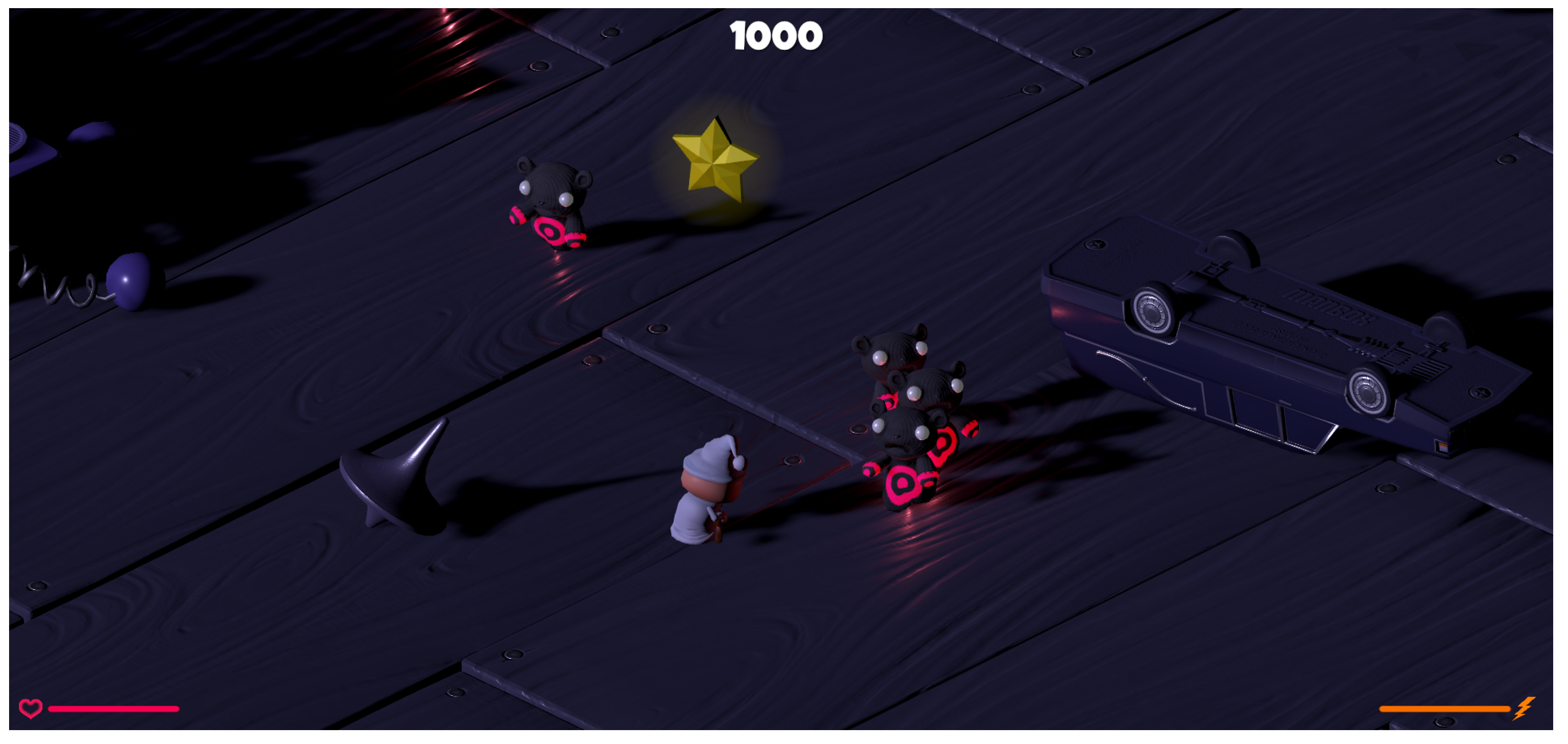

3.5.2. Freud Me Out 2

In this isometric-view game, which is a modification of our previous prototype [9,56], the player’s task is to defeat enemies (nightmares) and/or collect stars (see Figure 5). Players can freely combine these two types of activities, so long as they obtain the score that is required to complete each level. One can use both a regular handgun and a “SuperPower” to fight. The latter allows for attacking several creatures in a certain area around the protagonist at once. During the experiment, after the second of the five weeks of the study, the maximum number of opponents was reduced from 30 to 12, because the initial number of creatures prevented the users from choosing a strategy of only collecting stars.

3.6. Analyses Overview

Over 100 variables related to all of the aforementioned elements of the procedure were collected in the study (stimuli presented, widgets’ responses, games’ logs, psychophysiological signals, and face emotions). For their detailed list and operationalization, see [42].

The remaining part of the paper provides the results of three investigations. Firstly, Section 4 provides the validation of the two proposed widgets for emotion assessment. The next two sections refer directly to the main concern of this paper, i.e., the verification of the usefulness of personality as a basis for an IS adaptation mechanism. In order to assess this, the relationships between emotions and personality were checked, revealing the potential for grouping individuals with similar characteristics of emotional responses (both self-assessment and physiological) using personality (see Section 5). Next, the relationship between personality and actions taken in a rich (game) environment was investigated. The obtained relationships indicate the possibility of adapting this environment (and, ultimately, the IS) to different personality-based groups (see Section 6).

Statistical analysis was performed using the R environment (version 3.5.3) [57] with the lme4 library (version 1.1-23) [58] and the MASS library (version 7.3-51.1) [59]. Models with the outcomes being numbers of occurrences of emoscale ratings clusters (A–P; defined in Section 4.1), as well as models with emospace ratings, were fitted using Generalised Linear Mixed Models [60] with the Poisson distribution. Models with emospace responses (valence and arousal axes) as outcomes, as well as all of the models with biosignal responses as outcomes, were fitted using Linear Mixed Models [60] with the normal distribution. For all mixed models that are presented in the manuscript (in this and the following sections), residual plots were visually inspected and the other assumptions of fit were checked, which revealed no manifest deviations. The models were fitted by maximum likelihood using the Laplace approximation [58]. Deviance was obtained and compared for all models, each time by juxtaposing the full model, i.e., the one including the fixed effect in question, with the model without the fixed effect in question, in order to establish the final structure of the random effects [61,62]:

For the emoscale ratings that were clustered (A–P) as outcomes, multinomial logistic regression models were fitted with the multinom function from the nnet library (version 7.3-14) [59]. Deviance was obtained and compared for all models, each time by juxtaposing the full model, i.e., the one including the fixed effect in question, with the model without the fixed effect in question.
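The deviance comparison described above can be illustrated with a minimal Python sketch of a likelihood-ratio test between two nested models. This is not the R code used in the study: the function names `chi2_sf` and `deviance_test` are hypothetical, the deviances would come from models fitted elsewhere, and the sketch relies on the usual chi-square approximation for the difference in deviance.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable (integer df >= 1).

    Uses the closed forms for df = 1 and df = 2 and the recurrence
    Q(x | df + 2) = Q(x | df) + (x/2)^(df/2) * exp(-x/2) / Gamma(df/2 + 1).
    """
    if df < 1 or int(df) != df:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2:
        q, k = math.erfc(math.sqrt(half)), 1
    else:
        q, k = math.exp(-half), 2
    while k < df:
        q += half ** (k / 2.0) * math.exp(-half) / math.gamma(k / 2.0 + 1.0)
        k += 2
    return q


def deviance_test(deviance_reduced, deviance_full, df_diff):
    """Compare a model without the effect in question against the full model.

    Returns the drop in deviance and its approximate p-value.
    """
    statistic = deviance_reduced - deviance_full
    return statistic, chi2_sf(statistic, df_diff)


# Hypothetical deviances, for illustration only.
statistic, p_value = deviance_test(1250.4, 1243.1, df_diff=1)
print(f"chi2(1) = {statistic:.2f}, p = {p_value:.4f}")
```

A small p-value indicates that adding the effect in question reduces the deviance by more than would be expected by chance, so the full model is preferred.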

4. Widgets Validation

In this study, two new widgets were proposed to assess the emotions of the subjects. The use of both the “Valence-arousal faces” and “5-faces” widgets (hereafter referred to as “emospace” and “emoscale”, respectively) was analysed in order to establish whether they are suitable for this purpose.

4.1. Co-Validation of Both Widgets

Visual inspection of emospace results (see Figure 6) led to the following observations:

As expected, more extreme emotions appeared less frequently and the ones that were closer to neutrality occurred more often.

The respondents often chose the coordinates where emoticons are located, which may suggest that the widget was not fully understood by everyone.

In order to compare responses from the emospace widget with the ratings from emoscale, we arbitrarily divided the two-dimensional space into 16 separate clusters (A–P), each covering one-fourth of the available arousal and valence ranges (see Figure 6). Next, the central area of the data was defined as an emergent “0” cluster (see Figure 6), covering one-fourth of the available valence and arousal scales.
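A possible way to express this clustering in code is sketched below. Several details are assumptions rather than facts from the study: the letters are assigned row by row starting from the high-arousal, negative-valence corner, the central cluster is taken to be the square from −0.25 to 0.25 on both axes, and it takes precedence over the lettered cells. The actual layout is the one shown in Figure 6.

```python
import string

CELL = 0.5           # each cell spans one-fourth of the [-1, 1] range
CENTER_HALF = 0.25   # assumed half-width of the central "0" cluster


def emospace_cluster(valence, arousal):
    """Map an emospace rating in [-1, 1] x [-1, 1] to a cluster label."""
    if not (-1.0 <= valence <= 1.0 and -1.0 <= arousal <= 1.0):
        raise ValueError("ratings must lie in the [-1, 1] range")
    # Assumption: the central cluster overrides the lettered grid.
    if abs(valence) <= CENTER_HALF and abs(arousal) <= CENTER_HALF:
        return "0"
    # Columns run from negative to positive valence, rows from high to low
    # arousal; min() keeps the boundary value 1.0 (or -1.0) in the last cell.
    col = min(int((valence + 1.0) / CELL), 3)
    row = min(int((1.0 - arousal) / CELL), 3)
    return string.ascii_uppercase[row * 4 + col]


print(emospace_cluster(0.0, 0.0))
print(emospace_cluster(-1.0, 1.0))
print(emospace_cluster(1.0, -1.0))
```

Under these assumptions, a neutral rating falls into the “0” cluster, while the two extreme corners shown map to the first and the last letter.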

Based on the trend we observed when comparing both widgets, we assumed that the emoscale levels should be assigned to the emospace clusters as presented in the “assumed” column in Table 1. We counted the number of assignments of the specific stimuli pairs for each of the five levels of emoscale and for each of the emospace clusters (A–P). Subsequently, for each pair, the most frequently assigned level and cluster were selected. The frequency of co-assigning every cluster with every rating revealed the pattern presented in the “actual” column in Table 1 (for the full frequency assignment, see Table A1 in Appendix A).
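The counting procedure described above can be sketched as follows. The input format (tuples of stimulus pair identifier, widget name, and response) is hypothetical and does not reflect the structure of the published dataset; ties between equally frequent responses are resolved in favor of the response seen first, which is also an assumption.

```python
from collections import Counter, defaultdict


def modal(values):
    """Return the most frequent value (first seen wins in case of a tie)."""
    return Counter(values).most_common(1)[0][0]


def co_assignment(ratings):
    """Count how often each emoscale level co-occurs with each cluster.

    ratings: iterable of (pair_id, widget, response), where widget is
    "emoscale" (response: level 1-5) or "emospace" (response: cluster label).
    """
    by_pair = defaultdict(lambda: {"emoscale": [], "emospace": []})
    for pair_id, widget, response in ratings:
        by_pair[pair_id][widget].append(response)

    table = Counter()
    for responses in by_pair.values():
        # Only pairs rated with both widgets can be co-assigned.
        if responses["emoscale"] and responses["emospace"]:
            level = modal(responses["emoscale"])
            cluster = modal(responses["emospace"])
            table[(level, cluster)] += 1
    return table


# Hypothetical ratings, for illustration only.
example = [
    ("pair1", "emoscale", 1), ("pair1", "emoscale", 1), ("pair1", "emoscale", 2),
    ("pair1", "emospace", "A"), ("pair1", "emospace", "A"),
    ("pair2", "emoscale", 5), ("pair2", "emospace", "P"),
]
print(co_assignment(example))
```

Each key of the resulting table is a (level, cluster) combination, and its value is the number of stimulus pairs for which that combination was the most frequent one.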

4.2. Validation of both Widgets Using IADS and IAPS Sets

Two analyses were carried out in order to investigate whether the widget responses to the IAPS and IADS stimuli were reasonable. The first one considered the binary condition (consistent or inconsistent), while the second one considered the specific condition (p+s+, p0s0, p–s–, p+s–, and p–s+).

Regarding the binary approach, the following model corresponds to the hypothesis tested:

The emoscale widget response showed a significant effect (

,

Figure 7) when compared to the null model (

,

Table A2 in the

Appendix B). Additionally, in the emospace widget responses, we observed the above-mentioned significant dependence (

,

Figure 8) as compared to the null model (

) and the model without interaction (

,

Table A3 in the

Appendix B).

When considering responses separately on two vectors, valence (

,

Figure 7) and arousal (

,

Figure 7) both performed better when comparing to the null models (

,

Table A4 in the

Appendix B and

,

Table A5 in the

Appendix B, respectively).

In the specific approach, the following model corresponds to the hypothesis tested:

Emoscale widget response showed significant effect (

,

Figure 9) as compared to the null model (

,

Table A6 in the

Appendix B). Additionally, in the emospace widget responses, we observed the above mentioned significant dependence and the interaction effect (

,

Figure 10), compared to the null model (

) and the model without interaction (

,

Table A7 in the

Appendix B).

When separately considering responses on two vectors, in both valence and arousal, we observed significant effects. Valence (

,

Figure 9) performed better when comparing to the null model (

,

Table A8 in the

Appendix B). Regarding the arousal model (

,

Figure 9), it also performed better when comparing to the null model (

,

Table A9 in the

Appendix B).

4.3. Validation of both Widgets Using Psychophysiological Reactions

In this analysis, we wanted to search for connections between bodily responses and subjective widget answers. The following model corresponds to the hypothesis tested:

We observed significant effects when only considering the arousal vector of the emospace widget response. Not only the mean RR interval (

), but also GSR response latency (

), outperform their null counterparts (

,

Table A10 and

,

Table A11 in the

Appendix C, respectively). Both bodily responses increase with increasing arousal, as rated with the emospace widget.

4.4. Discussion on Widgets Validation

Analyses of the use of both widgets indicate that they are good means of interaction with users. Emospace requires further minor improvements, such as moving the emoticons out of the selection space to remove the bias that is associated with selecting emoticon coordinates (compare Figure 2 and Figure 6).

For binary and specific conditions, data analysis indicates that the consistency of the stimuli pairs on the affective dimension is well recognized. The emospace widget response captures the difference best in the clusters lying on the ascending diagonal of the valence-arousal space. Nevertheless, regarding the comparison with psychophysiological reactions, only the arousal dimension in the emospace effectively expresses the bio-markers of emotions.

Because of the completely different nature of both widgets, further analysis will be carried out on emotions that are considered in three different ways:

emoscale widget responses,

emospace widget responses (clustered), and

emospace vector widget responses (arousal and valence vectors, separately).

Interestingly, as indicated by the specific approach analysis, the picture of negative valence seems to overpower the positive sound that comes with it (p–s+ condition), yielding results comparable to those of the stimuli pair of consistent negative valence (p–s– condition). Additionally, the positive valence of the picture cancels out the negative character of the sound in the pair (p+s– condition), which brings the results down to the level of the neutral stimuli pair (p0s0 condition). The presented conclusions are particularly interesting from a cognitive perspective. They could potentially be of use in the process of designing affective interfaces which serve to induce the appropriate emotional state or modulate the affective scores of existing stimuli.

6. Personality vs. Complex Stimuli (Games)

As significant relationships between personality and responses to simple stimuli were found, the analysis of personality relationships was extended to more complex stimuli, which here took the form of a controlled game environment. In such an environment, the whole context was stored in logs, which were the basis of the described analyses.

6.1. Analysis

A series of MANOVAs was conducted with five personality traits (each on one of three levels: high, medium, low), as measured by the NEO-FFI, as independent variables, and with several game-related statistics gathered from game logs as dependent variables.

For Affective SpaceShooter 2 the following values were calculated:

total number of shots fired (),

total number of all asteroids destroyed (),

total number of affective asteroids in positive picture/positive sound condition destroyed to total number of asteroids destroyed ratio (),

total number of affective asteroids in negative picture/negative sound condition destroyed to total number of asteroids destroyed ratio (),

total number of affective asteroids in positive picture/negative sound condition destroyed to total number of asteroids destroyed ratio (), and

total number of affective asteroids in negative picture/positive sound condition destroyed to total number of asteroids destroyed ratio ().
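The statistics listed above could be derived from a game log along the lines of the following sketch. The event format (dictionaries with a `type` field and an optional `condition` field) is hypothetical and does not reflect the actual structure of the BIRAFFE logs; the function name `spaceshooter_stats` is likewise illustrative, and the minus sign in condition labels is written as an ASCII hyphen.

```python
from collections import Counter

CONDITIONS = ("p+s+", "p-s-", "p+s-", "p-s+")


def spaceshooter_stats(events):
    """Compute per-player statistics from a list of game log events.

    Assumed event format: {"type": "shot"} for a shot fired, or
    {"type": "destroyed", "condition": <label or None>} for a destroyed
    asteroid, with None for grey (neutral) asteroids.
    """
    events = list(events)
    shots = sum(1 for event in events if event["type"] == "shot")
    destroyed = [event for event in events if event["type"] == "destroyed"]
    per_condition = Counter(event.get("condition") for event in destroyed)
    total = len(destroyed)
    # Ratio of affective asteroids destroyed in each condition to all
    # asteroids destroyed; undefined (NaN) if nothing was destroyed.
    ratios = {
        condition: per_condition[condition] / total if total else float("nan")
        for condition in CONDITIONS
    }
    return {"shots": shots, "destroyed": total, "ratios": ratios}


# Hypothetical log, for illustration only.
log = [
    {"type": "shot"}, {"type": "shot"}, {"type": "shot"},
    {"type": "destroyed", "condition": "p+s+"},
    {"type": "destroyed", "condition": None},
    {"type": "shot"},
    {"type": "destroyed", "condition": "p-s+"},
    {"type": "destroyed", "condition": "p+s+"},
]
print(spaceshooter_stats(log))
```

Normalizing by the total number of asteroids destroyed makes the per-condition values comparable between players with different overall activity.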

Two variables used for Freud me out 2:

There were also other values calculated, e.g., total number of player’s deaths or total number of enemies killed, although we obtained no significant results for them.

The results are presented in

Table 2 and

Table 3 for Affective SpaceShooter 2 and Freud me out 2, respectively. In order to shorten the tables, only

results were reported in them. Significant associations were examined further by post hoc testing with Tukey’s HSD (see

Table A19,

Table A20 and

Table A21 in the

Appendix F).

6.2. Discussion on Personality vs. Complex Stimuli (Games)

Several significant associations between game activities and personality traits are a promising result. They indicate that personality can be useful in assessing emotions, not only with simple stimuli, such as movies [33], but also in a more complex experimental environment, like games. The most interesting trait seems to be extraversion, which is generally connected to increased activity in the game (i.e., number of shots, number of killed enemies). At the same time, it is now clear to us that a better understanding of these relations calls for a better game design, one that would allow us to capture more nuanced effects. In future work, we plan to log the game more densely and increase our control over the aims and goals of the player.

7. Conclusions

The aim of this paper was to investigate the possibility of using personality assessment as an affective adaptation mechanism in intelligent systems. We were particularly interested in the use of emotion measurement through affordable wearable sensors. We used two affective games of our own as an experimental environment, which allowed us to present complex stimuli with complete control over the whole context.

We reported on the results of a large experiment that we conducted to collect the affective data set called BIRAFFE [42]. Unlike two existing datasets, ASCERTAIN [31] and AMIGOS [32], which also collect affective data along with the personality assessment, BIRAFFE provides details of changes in stimuli over time in the form of game logs, which facilitates the analysis of subjects’ emotions in reaction to the complex affective stimuli presented. In addition, this study has several more features that distinguish it from existing affective data sets [17,26], such as: a large sample (206 individuals), the inclusion of conflicting stimuli (p+s– and p–s+), the use of affective games as an experimental environment, and the introduction of new widgets for the self-assessment of emotions by the subjects.

We discovered several significant links between different personality traits, as measured by the NEO-FFI questionnaire, and the characteristics of psychophysiological reactions (ECG, GSR). These are in line with the existing research [33]. Furthermore, we discovered links between personality traits and reactions to a complex stimulus environment (games). The above-mentioned results are promising, and they indicate the potential for the adaptation of intelligent systems with the use of the wearable sensors that we used in our experiments. We believe that the further investigation of those relations will allow us to introduce personality profiles as an effective tool for the pre-usage adaptation of games and, ultimately, intelligent systems in general.

Determining the type of personality at the beginning of the device usage, e.g., with the use of a personality stories methodology [39], will allow for the prediction of expected user reactions and the proper adjustment of the user interface. This will be made possible by the analysis of game logs, as there are correlations between personality and user behavior. The calibration phase is still required for personalization for a particular person. However, thanks to the pre-coded knowledge regarding different types of personality, it will be shorter and more accurate, as the system will be initially adapted to the specific personality profile. One of the Automatic Personality Recognition methods can also be used if it is feasible to collect relevant data [40]. Consequently, the user will start using the device more quickly. This can also be used in the opposite direction. Users can take a short session with the device, during which psychophysiological signals and usage logs will be collected. Analysis of the data will potentially allow for a rough estimate of personality type without the need for filling out the personality questionnaire. We do not assume that the questionnaire can be replaced by a physiological measurement. However, it is not possible to carry out such a reliable personality measurement when working with a mobile system. Physiological measurement may be a sufficient approximation for such applications.

Moreover, to be able to gather data regarding subjects’ self-assessment more efficiently, we made an attempt to improve the available subjective rating tools by introducing and evaluating our own widgets. The emospace widget turned out to be successful in rating the simple audio-visual stimuli. Personality itself, being our main object of focus, showed promising relations to the gathered subjective ratings. As a side outcome, we also presented an analysis that indicates that the image valence dominates over the sound valence. This conclusion can be potentially useful in the design of affective human–computer interfaces.

This study has potential limitations. The sample was taken from a very narrow demographic range: most of the subjects were students of a technical university. This should not be a big drawback, because physiological reactions and personality are fairly universal. There have also been various technical problems with data recording, so we do not provide complete data for every participant. However, the data set contains a summary of information that is available for each subject [42]. Additionally, our methodology uses games as an example of context-aware intelligent systems. No attempts to use this methodology on real IS, e.g., voice assistants, have been made yet. Finally, the study used the BITalino platform, which can be uncomfortable as a wearable. However, we assume that the rapid development of the technology will lead to superior devices with which the proposed methodology can then be used.

In the future, we will continue to work on our approach to collecting different types of user context, including the emotional one, for system adaptation and personalization. We are working on developing different efficient context providers. As a part of the context analysis, we are also considering the analysis of non-atomic emotional states, i.e., the situation where the person can be in more than one unique emotional state. Regarding the application in games, we are planning to log the game more densely and increase our control over the aims and goals of the player. We will also prepare a catalog of dependencies between the user and the game environment, which can be formalized using decision rules. The model will then be used to support the user’s interaction with the system in the “affective” feedback loop.