Figure 1.

Histogram of the label distribution in the training and validation sets.

Figure 2.

Histogram of the label distribution in the challenge test set.

Figure 3.

Comparison of a raw sample (blue line) with its augmented version (orange line).

Figure 4.

The architecture of the model. Each sensor modality has its own input, and the intermediate features are fused in the concatenation layer along the second dimension.
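
The fusion step can be illustrated with a minimal sketch in plain Python. It assumes that each modality branch yields a batch of feature vectors and that "second dimension" refers to the feature axis of a (batch, features) layout. The modality names, shapes, and the helper `concat_features` are hypothetical and are not taken from the original model.

```python
# Hypothetical per-modality feature batches: 2 samples each, with
# 3, 2 and 1 features respectively (shapes chosen only for illustration).
acceleration = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
gyroscope = [[1.0, 1.1], [1.2, 1.3]]
pressure = [[9.0], [9.1]]


def concat_features(*modalities):
    """Concatenate per-sample feature vectors along the feature axis."""
    batch_sizes = {len(m) for m in modalities}
    if len(batch_sizes) != 1:
        raise ValueError("all modalities must share the same batch size")
    # zip(*modalities) yields, for every sample, one row per modality;
    # the rows are flattened into a single fused feature vector.
    return [[x for row in rows for x in row] for rows in zip(*modalities)]


fused = concat_features(acceleration, gyroscope, pressure)
print(len(fused), len(fused[0]))  # batch size, fused feature count
```

The batch size stays unchanged while the feature counts of the individual branches add up, which is the behavior a concatenation layer along the feature axis would show.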

Figure 5.

A detailed view of the three different blocks of layers used in our architecture.

Figure 6.

The autoencoder architecture used for dimensionality reduction. The first neuron of the latent space is the x-value and the second neuron of the latent space is the y-value.

Figure 7.

Progression of the score over the 100 epochs of the final training run. The curve plateaus after about 40 epochs.

Figure 8.

Progression of the loss over the 100 epochs of the final training run. The curve plateaus after about 40 epochs, mirroring the progression of the score.

Figure 9.

The 2D encoded representation of the last activations of the LSTM layer for the acceleration sensor. Color codes: blue (still), orange (walk), green (run), red (bike), violet (car), brown (bus), pink (train), grey (subway).

Figure 10.

The 2D encoded representation of the last activations of the LSTM layer for the gravity software sensor. Color codes: blue (still), orange (walk), green (run), red (bike), violet (car), brown (bus), pink (train), grey (subway).

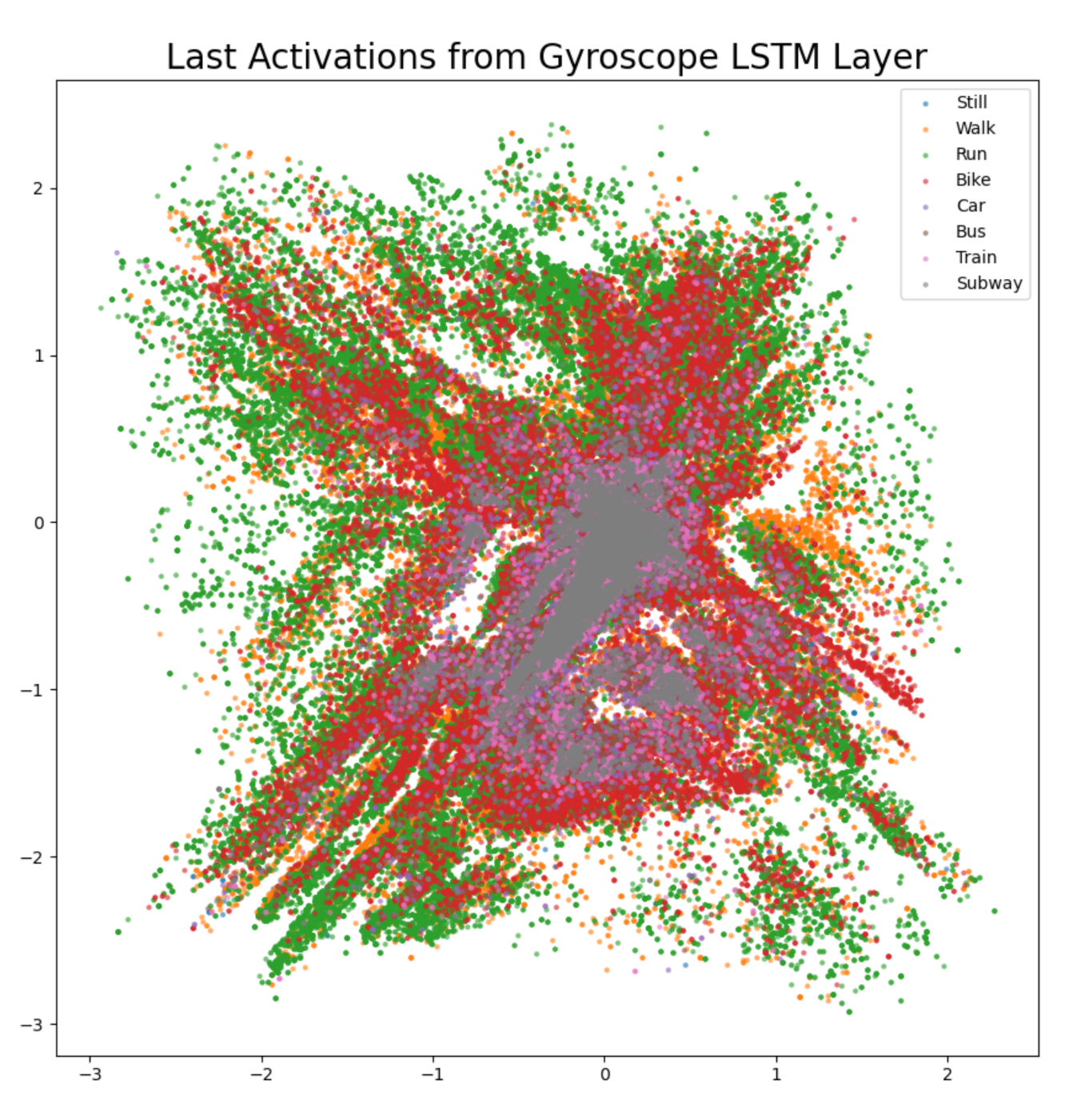

Figure 11.

The 2D encoded representation of the last activations of the LSTM layer for the gyroscope. Color codes: blue (still), orange (walk), green (run), red (bike), violet (car), brown (bus), pink (train), grey (subway).

Figure 12.

The 2D encoded representation of the last activations of the LSTM layer for the linear acceleration software sensor. Color codes: blue (still), orange (walk), green (run), red (bike), violet (car), brown (bus), pink (train), grey (subway).

Figure 13.

The 2D encoded representation of the last activations of the LSTM layer for the magnetometer. Color codes: blue (still), orange (walk), green (run), red (bike), violet (car), brown (bus), pink (train), grey (subway).

Figure 14.

The 2D encoded representation of the last activations of the LSTM layer for the orientation software sensor. Color codes: blue (still), orange (walk), green (run), red (bike), violet (car), brown (bus), pink (train), grey (subway).

Figure 15.

The 2D encoded representation of the last activations of the LSTM layer for the pressure sensor. Color codes: blue (still), orange (walk), green (run), red (bike), violet (car), brown (bus), pink (train), grey (subway).

Table 1.

An overview of the scores and best epochs of the networks trained with one sensor modality left out. For comparison, the first row shows the baseline values of the network trained with all sensor modalities.

| Left-Out Sensor | Best Epoch | Validation Score | Private Test Score | Challenge Score |
|---|---|---|---|---|
| None | 77 | 98.93% | 98.96% | 52.80% |
| Acceleration | 50 | 98.73% | 98.77% | 52.83% |
| Gravity | 50 | 98.58% | 98.65% | 56.49% |
| Gyroscope | 50 | 98.21% | 98.22% | 52.51% |
| Linear Acceleration | 49 | 98.66% | 98.68% | 60.12% |
| Magnetometer | 50 | 98.76% | 98.80% | 55.95% |
| Orientation | 50 | 98.42% | 98.45% | 51.79% |
| Pressure | 50 | 98.16% | 98.11% | 57.68% |
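
To make the comparison against the baseline row explicit, the following sketch computes the change in challenge score, in percentage points, for each left-out sensor. The values are copied from Table 1; the variable names are illustrative only.

```python
# Challenge scores in percent, copied from Table 1.
baseline_challenge = 52.80  # network trained with all sensor modalities
challenge_scores = {
    "Acceleration": 52.83,
    "Gravity": 56.49,
    "Gyroscope": 52.51,
    "Linear Acceleration": 60.12,
    "Magnetometer": 55.95,
    "Orientation": 51.79,
    "Pressure": 57.68,
}

# Difference to the baseline in percentage points, rounded to two decimals
# to suppress floating-point noise.
deltas = {
    sensor: round(score - baseline_challenge, 2)
    for sensor, score in challenge_scores.items()
}

# Left-out sensor with the largest gain on the challenge set.
best = max(deltas, key=deltas.get)

for sensor, delta in sorted(deltas.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{sensor:<20} {delta:+.2f}")
```

The same pattern applies to the validation and private test columns by swapping in the corresponding values.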

Table 2.

An overview of the change in the scores for each class and left-out sensor. The smallest drop in accuracy is marked in green and the largest drop in red.

| Left-Out Sensor | Still | Walk | Run | Bike | Car | Bus | Train | Subway | Sum |
|---|---|---|---|---|---|---|---|---|---|
| Acceleration | −14 | −15 | −6 | −10 | −23 | 12 | −98 | −25 | −179 |
| Gravity | −4 | −9 | −6 | 1 | −27 | −8 | −86 | −19 | −158 |
| Gyroscope | −24 | −33 | −11 | −17 | −37 | −17 | −104 | −52 | −295 |
| Linear Acceleration | −42 | −16 | −3 | 0 | −45 | −21 | −101 | −44 | −272 |
| Magnetometer | −205 | −31 | −7 | −24 | −66 | −108 | −142 | −124 | −707 |
| Orientation | −48 | −61 | −3 | −19 | −53 | −61 | −150 | −101 | −496 |
| Pressure | −123 | −62 | −13 | −33 | −67 | −93 | −191 | −247 | −829 |
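
The Sum column is the row total of the per-class changes. As a consistency check, this short sketch recomputes the totals from the values in Table 2 and ranks the sensors by total change. The variable names are illustrative.

```python
CLASSES = ["Still", "Walk", "Run", "Bike", "Car", "Bus", "Train", "Subway"]

# Per-class changes copied from Table 2, in the order given by CLASSES.
changes = {
    "Acceleration": [-14, -15, -6, -10, -23, 12, -98, -25],
    "Gravity": [-4, -9, -6, 1, -27, -8, -86, -19],
    "Gyroscope": [-24, -33, -11, -17, -37, -17, -104, -52],
    "Linear Acceleration": [-42, -16, -3, 0, -45, -21, -101, -44],
    "Magnetometer": [-205, -31, -7, -24, -66, -108, -142, -124],
    "Orientation": [-48, -61, -3, -19, -53, -61, -150, -101],
    "Pressure": [-123, -62, -13, -33, -67, -93, -191, -247],
}

# Sum column as printed in Table 2.
reported_sums = {
    "Acceleration": -179,
    "Gravity": -158,
    "Gyroscope": -295,
    "Linear Acceleration": -272,
    "Magnetometer": -707,
    "Orientation": -496,
    "Pressure": -829,
}

computed_sums = {sensor: sum(row) for sensor, row in changes.items()}
sums_match = computed_sums == reported_sums

# Sensors ordered from the largest total drop to the smallest.
ranking = sorted(computed_sums, key=computed_sums.get)
print(sums_match, ranking)
```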

Table 3.

The confusion matrix for the private test set. The classes with the most false classifications are still and subway. The classes with the best true classifications are run and walk. t = true label, p = predicted label.

| t\p | Still | Walk | Run | Bike | Car | Bus | Train | Subway |
|---|---|---|---|---|---|---|---|---|
| still | 17,619 | 75 | 0 | 28 | 15 | 75 | 117 | 71 |
| walk | 95 | 17,825 | 9 | 15 | 4 | 10 | 17 | 25 |
| run | 6 | 7 | 17,981 | 1 | 0 | 2 | 3 | 0 |
| bike | 30 | 39 | 5 | 17,900 | 3 | 9 | 10 | 4 |
| car | 11 | 6 | 1 | 4 | 17,857 | 59 | 44 | 18 |
| bus | 56 | 31 | 0 | 11 | 31 | 17,711 | 103 | 57 |
| train | 49 | 39 | 1 | 10 | 19 | 52 | 17,685 | 145 |
| subway | 52 | 35 | 0 | 2 | 21 | 42 | 274 | 17,574 |
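
Per-class figures can be derived directly from the confusion matrix. The sketch below computes the recall of each class (diagonal entry divided by the row total) and the plain overall accuracy from the values in Table 3. Whether this accuracy coincides with the score metric reported in Table 1 is not stated in this section, so the code makes no claim about that.

```python
CLASSES = ["still", "walk", "run", "bike", "car", "bus", "train", "subway"]

# Rows are true labels, columns are predicted labels (values from Table 3).
confusion = [
    [17619, 75, 0, 28, 15, 75, 117, 71],
    [95, 17825, 9, 15, 4, 10, 17, 25],
    [6, 7, 17981, 1, 0, 2, 3, 0],
    [30, 39, 5, 17900, 3, 9, 10, 4],
    [11, 6, 1, 4, 17857, 59, 44, 18],
    [56, 31, 0, 11, 31, 17711, 103, 57],
    [49, 39, 1, 10, 19, 52, 17685, 145],
    [52, 35, 0, 2, 21, 42, 274, 17574],
]

# Number of test samples per true class.
row_totals = [sum(row) for row in confusion]

# Recall: fraction of samples of a class that were predicted correctly.
recall = {
    name: confusion[i][i] / row_totals[i] for i, name in enumerate(CLASSES)
}

# Overall accuracy: correctly classified samples over all samples.
correct = sum(confusion[i][i] for i in range(len(CLASSES)))
accuracy = correct / sum(row_totals)

for name, value in recall.items():
    print(f"{name:<7} {value:.4f}")
print(f"accuracy {accuracy:.4f}")
```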

Table 4.

The difference confusion matrix of the network trained without the acceleration sensor. The largest loss in classification occurred in the class bus and the smallest in class run. t = true label, p = predicted label.

| t\p | Still | Walk | Run | Bike | Car | Bus | Train | Subway |
|---|---|---|---|---|---|---|---|---|
| still | −14 | 6 | 2 | 8 | 2 | 2 | −16 | 10 |
| walk | −1 | −15 | 0 | 0 | 1 | 5 | 5 | 5 |
| run | 4 | 2 | −6 | 1 | 0 | −3 | −1 | 3 |
| bike | 8 | 7 | 0 | −10 | −4 | 2 | 1 | −4 |
| car | 0 | 5 | 0 | 2 | −23 | 20 | −2 | −2 |
| bus | 4 | 7 | 0 | 0 | 3 | 12 | −32 | 6 |
| train | 17 | −4 | 1 | 0 | 1 | 28 | −98 | 55 |
| subway | 18 | 6 | 0 | 2 | −1 | 18 | −18 | −25 |
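
A difference confusion matrix can be read with two simple checks, sketched below for the values of Table 4. The sketch assumes that each entry is the count of the network trained without the sensor minus the count of the baseline network, evaluated on the same test set. Under that assumption every row must sum to zero, because the number of samples per true class does not change. The diagonal then reproduces the Acceleration row of Table 2. The helper `top_destination` is hypothetical.

```python
CLASSES = ["still", "walk", "run", "bike", "car", "bus", "train", "subway"]

# Difference confusion matrix from Table 4 (rows: true, columns: predicted).
# Assumed sign convention: ablated network minus baseline network.
diff = [
    [-14, 6, 2, 8, 2, 2, -16, 10],
    [-1, -15, 0, 0, 1, 5, 5, 5],
    [4, 2, -6, 1, 0, -3, -1, 3],
    [8, 7, 0, -10, -4, 2, 1, -4],
    [0, 5, 0, 2, -23, 20, -2, -2],
    [4, 7, 0, 0, 3, 12, -32, 6],
    [17, -4, 1, 0, 1, 28, -98, 55],
    [18, 6, 0, 2, -1, 18, -18, -25],
]

# Check 1: rows sum to zero when both networks see the same test samples.
row_sums = [sum(row) for row in diff]

# Check 2: the diagonal holds the per-class change in correct predictions.
diagonal = [diff[i][i] for i in range(len(CLASSES))]


def top_destination(true_class):
    """Return the wrong class that gained the most samples of true_class."""
    i = CLASSES.index(true_class)
    off_diagonal = {
        CLASSES[j]: diff[i][j] for j in range(len(CLASSES)) if j != i
    }
    return max(off_diagonal, key=off_diagonal.get)


print(row_sums)
print(diagonal, sum(diagonal))
print(top_destination("train"))
```

The same checks apply unchanged to Tables 5 to 10 by replacing the matrix values.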

Table 5.

The difference confusion matrix of the network trained without the gravity software sensor. The largest loss in classification occurred in the class subway and the smallest in class run. t = true label, p = predicted label.

| t\p | Still | Walk | Run | Bike | Car | Bus | Train | Subway |
|---|---|---|---|---|---|---|---|---|
| still | −4 | 10 | 0 | 1 | 7 | 2 | −21 | 5 |
| walk | −1 | −9 | 0 | −4 | 1 | 3 | 12 | −2 |
| run | −1 | 5 | −6 | 2 | 0 | −2 | 1 | 1 |
| bike | −1 | 5 | 4 | 1 | −1 | −5 | 1 | −4 |
| car | 0 | 2 | 0 | 2 | −27 | 19 | −2 | 6 |
| bus | 9 | 6 | 0 | −1 | 8 | −8 | −16 | 2 |
| train | 13 | 4 | 0 | 1 | 12 | 9 | −86 | 47 |
| subway | 11 | 2 | 0 | 1 | 5 | 9 | −9 | −19 |

Table 6.

The difference confusion matrix of the network trained without the gyroscope. The largest loss in classification occurred in the class subway and the smallest in class run. t = true label, p = predicted label.

| t\p | Still | Walk | Run | Bike | Car | Bus | Train | Subway |
|---|---|---|---|---|---|---|---|---|
| still | −24 | 4 | 1 | 2 | 12 | 1 | −15 | 19 |
| walk | −1 | −33 | 2 | 0 | 2 | 12 | 5 | 13 |
| run | −3 | 8 | −11 | 1 | 5 | −1 | 1 | 0 |
| bike | 9 | 4 | 2 | −17 | −1 | 8 | −1 | −4 |
| car | 7 | 4 | 1 | 1 | −37 | 22 | 0 | 2 |
| bus | 5 | 3 | 0 | 3 | 21 | −17 | −13 | −2 |
| train | 15 | 1 | 0 | −1 | 12 | 11 | −104 | 66 |
| subway | 10 | 6 | 0 | 0 | 1 | 21 | 14 | −52 |

Table 7.

The difference confusion matrix of the network trained without the linear acceleration software sensor. The largest loss in classification occurred in the class subway and the smallest in class run. t = true label, p = predicted label.

| t\p | Still | Walk | Run | Bike | Car | Bus | Train | Subway |
|---|---|---|---|---|---|---|---|---|
| still | −42 | 7 | 1 | 9 | 9 | 22 | −20 | 14 |
| walk | −5 | −16 | 4 | −3 | 1 | 4 | −2 | 17 |
| run | −1 | 5 | −3 | 3 | 0 | −3 | −1 | 0 |
| bike | −2 | 1 | 0 | 0 | 0 | 6 | −3 | −2 |
| car | −2 | 4 | 0 | 2 | −45 | 35 | −4 | 10 |
| bus | 18 | 3 | 0 | 3 | 12 | −21 | −14 | −1 |
| train | 7 | 1 | 0 | −2 | 17 | 19 | −101 | 59 |
| subway | 18 | −1 | 0 | 2 | 1 | 24 | 0 | −44 |

Table 8.

The difference confusion matrix of the network trained without the magnetometer. The largest loss in classification occurred in the class still and the smallest in class run. t = true label, p = predicted label.

| t\p | Still | Walk | Run | Bike | Car | Bus | Train | Subway |
|---|---|---|---|---|---|---|---|---|
| still | −205 | 17 | 0 | 0 | 20 | 27 | 49 | 92 |
| walk | 16 | −31 | 2 | 4 | 3 | −1 | 2 | 5 |
| run | −2 | 5 | −7 | 0 | 0 | 1 | 2 | 1 |
| bike | 0 | 7 | 1 | −24 | −1 | 7 | 10 | 0 |
| car | 7 | 3 | 0 | 12 | −66 | 46 | −6 | 4 |
| bus | 12 | 0 | 0 | 1 | 28 | −108 | 14 | 53 |
| train | 50 | 9 | 0 | 4 | 12 | 30 | −142 | 37 |
| subway | 63 | 9 | 0 | 6 | 5 | 51 | −10 | −124 |

Table 9.

The difference confusion matrix of the network trained without the orientation software sensor. The largest loss in classification occurred in the class subway and the smallest in class run. t = true label, p = predicted label.

| t\p | Still | Walk | Run | Bike | Car | Bus | Train | Subway |
|---|---|---|---|---|---|---|---|---|
| still | −48 | 22 | 0 | 4 | 13 | 4 | −15 | 20 |
| walk | 24 | −61 | 3 | −3 | 3 | 8 | 6 | 20 |
| run | −2 | 5 | −3 | 1 | 1 | −1 | −1 | 0 |
| bike | 18 | 8 | 0 | −19 | −1 | −2 | −1 | −3 |
| car | 6 | 5 | 0 | 1 | −53 | 45 | −6 | 2 |
| bus | 18 | 7 | 0 | 2 | 11 | −61 | 4 | 19 |
| train | 4 | 1 | 0 | 4 | 4 | 29 | −150 | 108 |
| subway | 35 | 3 | 0 | 3 | 2 | 20 | 38 | −101 |

Table 10.

The difference confusion matrix of the network trained without the pressure sensor. The largest loss in classification occurred in the class subway and the smallest in class run. t = true label, p = predicted label.

| t\p | Still | Walk | Run | Bike | Car | Bus | Train | Subway |
|---|---|---|---|---|---|---|---|---|
| still | −123 | 34 | 0 | 7 | 4 | 20 | 20 | 38 |
| walk | 23 | −62 | 5 | 6 | 1 | 8 | 4 | 15 |
| run | 4 | 12 | −13 | 0 | 0 | −2 | −1 | 0 |
| bike | 17 | 17 | 0 | −33 | 5 | −3 | 3 | −6 |
| car | 3 | 4 | 0 | 1 | −67 | 40 | 5 | 14 |
| bus | 17 | 18 | 0 | 3 | 19 | −93 | 7 | 29 |
| train | 32 | 4 | 0 | 1 | 10 | 29 | −191 | 115 |
| subway | 54 | 5 | 0 | 2 | −3 | 22 | 167 | −247 |