1. Introduction

Three-dimensional (3D) models of indoor spaces are widely used in virtual reality (VR), augmented reality (AR), indoor localization, and indoor navigation. Methods for modeling indoor structures are an important topic of study in various fields, such as computer vision, computer graphics, civil engineering, and robotics. Nevertheless, reconstructing indoor structures that include inter-room and inter-floor connections remains a challenge. A recent survey and tutorial indicated that modeling approaches that include inter-room and inter-floor connections are reserved for future work. Pintore et al. [1] presented the modeling of an entire building; however, modeling the connections between rooms and levels is still a complex problem. Hence, a global solution is required to reconstruct complex environments [2].

Previous works address this problem with divide-and-conquer techniques. Floor segmentation and room segmentation are traditional approaches that convert the difficult problem of indoor space modeling into simpler individual sub-problems. The floor segmentation approach divides multi-level buildings into several single-level spaces, whereas the room segmentation approach divides single-level multi-room spaces into several rooms. A single-level indoor space is then modeled as a combination of individual room models, and a multi-level building as a combination of such single-level models.

Oesau et al. [

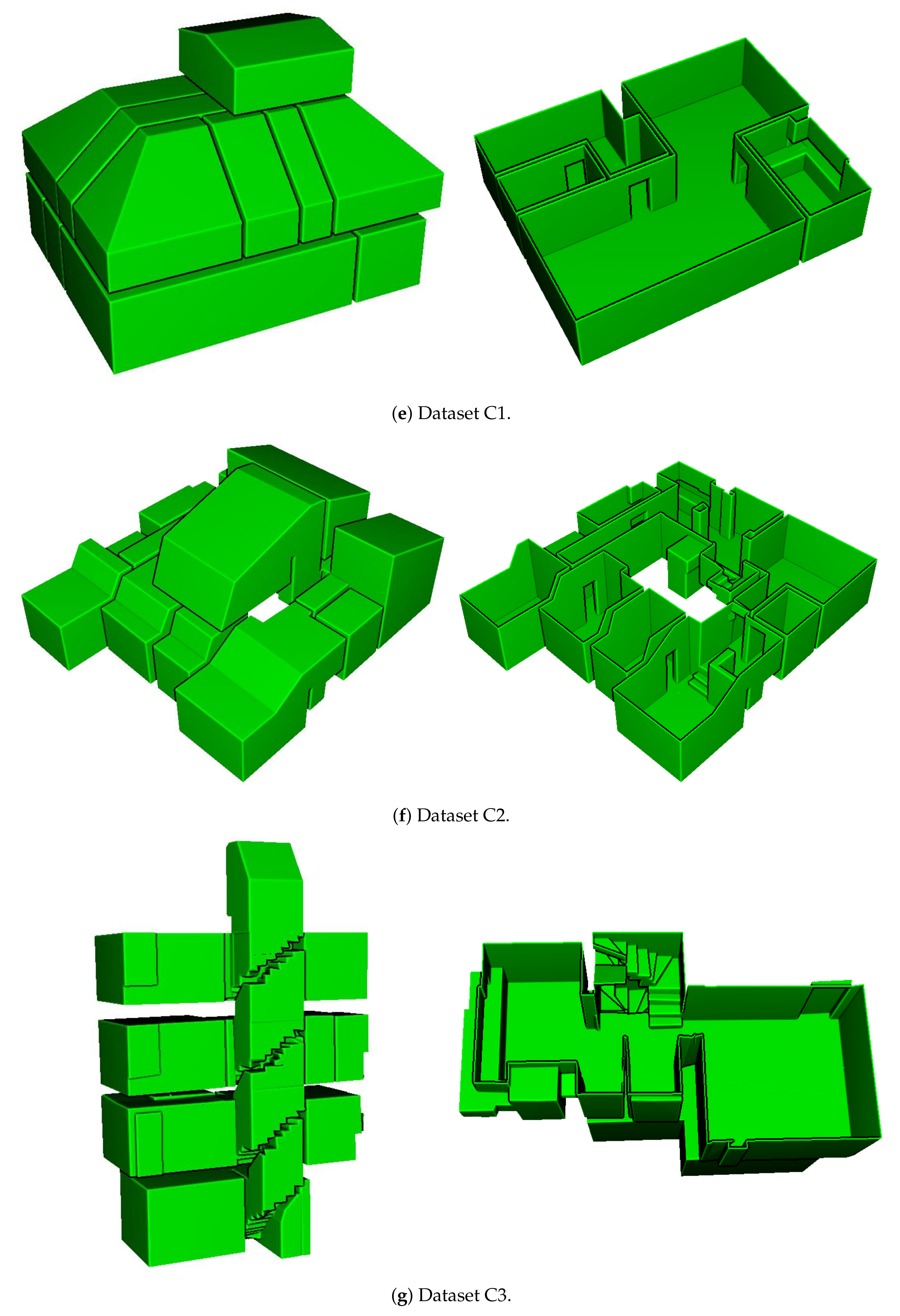

3] introduced a reconstruction method for separating multi-level buildings into individual single-level spaces using horizontal slicing approach. The horizontal slicing algorithm was used to cut the target space using peaks in vertical histogram of point cloud distributions. However, this approach cannot be used to separate each level of complex multi-level buildings depicted in

Figure 1, because the indoor space, which is C2 in our datasets, comprises multiple levels of varying height.
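
The horizontal slicing idea described above can be illustrated with a minimal sketch. It is not the implementation of Oesau et al. [3]; the function names, the bin size, and the peak threshold (`height_histogram`, `slicing_heights`, `bin_size`, `min_fraction`) are hypothetical choices made for illustration, and the input is synthetic.

```python
def height_histogram(z_values, bin_size):
    """Count points per height bin; returns (lowest z, list of counts)."""
    z_min = min(z_values)
    n_bins = int((max(z_values) - z_min) / bin_size) + 1
    counts = [0] * n_bins
    for z in z_values:
        counts[int((z - z_min) / bin_size)] += 1
    return z_min, counts


def slicing_heights(z_values, bin_size=0.25, min_fraction=0.5):
    """Return the bin-center heights of dominant peaks in the histogram.

    A bin is a peak if it holds at least `min_fraction` of the largest
    bin count and is a local maximum (both values are assumptions).
    """
    z_min, counts = height_histogram(z_values, bin_size)
    threshold = min_fraction * max(counts)
    peaks = []
    for k, count in enumerate(counts):
        left = counts[k - 1] if k > 0 else 0
        right = counts[k + 1] if k + 1 < len(counts) else 0
        if count >= threshold and count >= left and count > right:
            peaks.append(z_min + (k + 0.5) * bin_size)
    return peaks


# Synthetic two-storey building: dense horizontal slabs at 0 m, 3 m, and 6 m,
# plus sparse wall points spread over the heights in between.
z_values = []
for slab_height in (0.0, 3.0, 6.0):
    z_values += [slab_height] * 500
for k in range(1, 24):
    z_values += [0.25 * k] * 20

peaks = slicing_heights(z_values)
print(peaks)
```

In this synthetic case each slab produces one clear peak, so the building can be cut between consecutive peaks. When floors within the same building lie at varying heights, as in dataset C2, no single set of horizontal cuts separates the levels, which is the limitation noted above.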

Some studies conducted room segmentation using Markov clustering [4,5,6] or space over-segmentation and merging [7,8,9]. These approaches may be suitable for modeling multi-room environments but not for room-less environments.

Furthermore, although certain existing approaches still use room segmentation to model multi-room environments, they do not address how inter-room connections are modeled [10,11]. The inter-room and inter-floor connections can be modeled as doors (or openings) and stairs, respectively, as depicted in Figure 2. The figure illustrates the model of dataset C2 reconstructed using the proposed method.

Previtali et al. [12] detected inter-room connections using a ray-tracing algorithm, whereas Wang et al. [13] and Yang et al. [14] used specific parameters, such as the upper and lower bounds of doors or the width of doors. Oesau et al. [3] dealt with the modeling of multi-level buildings but did not model inter-floor connections; their approach generated multi-level building models by stacking multiple single-level spaces. Nikoohemat et al. [15] divided the target multi-level building into floor and stair parts and constructed the multi-level building model as a combination of multiple single-level spaces and stair models.

In this paper, we propose an automatic reconstruction method for multi-level indoor spaces with complex environments. The proposed method generates indoor space models that include inter-room and inter-floor connections from a point cloud and a trajectory. The continuous trajectory through multiple rooms and multiple floors within a building is used to reconstruct the indoor space as a unique model. Furthermore, the proposed method does not segment structural points into specific components such as walls, floors, ceilings, doors, or stairs, and does not segment indoor spaces into sub-spaces such as individual rooms or single-level floors. Instead, it provides a general approach to indoor space modeling that reconstructs indoor structures as piece-wise planar segments and builds the target indoor space as a unique model, even if the space consists of multiple rooms or multiple floors. In addition, the proposed method conducts energy minimization using graph cut, which enables automatic reconstruction of indoor space models. We validated the performance of the proposed approach by evaluating the distance error between the point cloud and the generated mesh for seven datasets. Because these datasets cover various environments, the evaluation also demonstrates the wide applicability of the proposed method to multi-room, room-less, and multi-level building environments.

The proposed method improves on our previous work [16]. Our previous work used random sample consensus (RANSAC) based plane extraction and constructed an adjacency graph to reconstruct indoor spaces, but it required manual work when generating the adjacency graph. In contrast, the method proposed in this paper uses region-growing based plane extraction to detect small plane patches such as stairs, doors, or openings, and automatically reconstructs indoor space models with 3D space decomposition and energy minimization using graph cut. Furthermore, the proposed method uses both the point cloud and the trajectory to build the indoor space of a multi-level building as a unique model that includes inter-room and inter-floor connections. In particular, the data term of the energy function is expressed as a difference in visibility between each decomposed space and the trajectory.

The remainder of this paper is organized as follows. Section 2 presents an overview of the existing literature. Section 3 describes the proposed method and discusses the construction of indoor space models, including inter-room and inter-floor connections, from point cloud and trajectory. Section 4 presents the experimental results and performance evaluation of the proposed method for seven datasets. Section 5 presents the conclusion.

2. Related Work

Methods for modeling indoor spaces are still actively studied in various fields, such as computer vision, computer graphics, civil engineering, and robotics. Existing methods build indoor space models using geometric information, images, or a combination of both. Modeling indoor spaces from a point cloud is the most traditional approach. In existing point-cloud-based methods, however, using a trajectory (i.e., the positions from which the raw LiDAR scans were acquired) is optional: some studies use only the point cloud [5,6], whereas others use both the point cloud and scan position information [4,17].

Many existing approaches use geometric assumptions to model 3D indoor spaces, such as the Manhattan world assumption or two-and-a-half-dimensional (2.5D) approaches. Ikehata et al. [18], Murali et al. [19], and Xie et al. [20] built models under the Manhattan world assumption. Mura et al. [21], Ochmann et al. [17], and Wang et al. [13] introduced methods using 2.5D approaches that construct the models by vertical extension of two-dimensional (2D) floor plans. In 2.5D environments, the walls are orthogonal to a single floor and ceiling. These previous works with 2.5D approaches first detected a floor and a ceiling, then projected wall points onto the floor to generate floor plans in 2D.

Recently, 3D approaches have been introduced because methods that rely on geometric assumptions (i.e., the Manhattan world assumption and 2.5D approaches) cannot reconstruct indoor spaces with complex environments. Several studies address fully 3D modeling approaches that can model more complex environments [4,6,10]. These approaches conduct 3D space decomposition using 3D planar segments extracted from the point cloud. Mura et al. [4] used binary space partitioning (BSP) to decompose 3D spaces. Ochmann et al. [6] and Nikoohemat et al. [10] reconstructed 3D models with volumetric walls.

Structural components must be extracted to model real-world indoor spaces, which are cluttered environments. The geometric information (i.e., point cloud) of the target indoor space can be segmented into structural and object (non-structural) parts. The structural parts are architectural components of the indoor spaces, such as floors, ceilings, walls, and stairs. However, previous methods either extracted structural parts without explicitly segmenting the point cloud into structural and non-structural parts or relied on assumptions to extract them. Previtali et al. [12] detected wall components under the Manhattan world assumption. Macher et al. [22] assumed that wall points are located on the boundary of the rooms to detect walls. Mura et al. [4] detected the permanent components using structural patterns. They assumed that the permanent components are rectangles so that the holes caused by occlusion by objects can be neglected. Nikoohemat et al. [10] presented an adjacency-graph-based method for detecting permanent structures. Lim et al. [16] not only segmented structural and object points but also constructed architectural points by filling in holes, projecting object points onto the structural surfaces. Coudron et al. [23] used deep learning to extract permanent structures.

Structural primitives (e.g., 2D lines or 3D piece-wise planar segments) can be extracted from the structural components using various plane detection algorithms. Xiong et al. [24] detected structural patches using a region-growing algorithm based on plane detection with total least squares. Xiao et al. [25] used the Hough transform to detect wall components in 2D. Turner et al. [26] extracted planar segments using principal component analysis. Tran et al. [11] and Ochmann et al. [6] used the RANSAC-based plane-fitting algorithm [27] for extracting structural surfaces.
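
As a rough illustration of RANSAC-based plane fitting, the following sketch fits a single dominant plane to a small synthetic point set. It is a basic textbook variant, not the specific algorithm of [27] or the pipeline of any cited work; the function names, the inlier threshold, the iteration count, and the fixed seed are assumptions chosen for the example.

```python
import random


def plane_from_points(p, q, r):
    """Return (a, b, c, d) with unit normal for the plane through p, q, r,
    or None if the three points are (nearly) collinear."""
    ux, uy, uz = q[0] - p[0], q[1] - p[1], q[2] - p[2]
    vx, vy, vz = r[0] - p[0], r[1] - p[1], r[2] - p[2]
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    norm = (nx * nx + ny * ny + nz * nz) ** 0.5
    if norm < 1e-12:
        return None
    nx, ny, nz = nx / norm, ny / norm, nz / norm
    return nx, ny, nz, -(nx * p[0] + ny * p[1] + nz * p[2])


def ransac_plane(points, threshold=0.02, iterations=200, seed=0):
    """Keep the candidate plane supported by the most inliers."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        inliers = [pt for pt in points
                   if abs(a * pt[0] + b * pt[1] + c * pt[2] + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


# Synthetic data: a 10 x 10 grid of floor points at z = 0 plus ten
# clutter points floating above the floor.
floor = [(float(x), float(y), 0.0) for x in range(10) for y in range(10)]
clutter = [(0.5 * i, 9.0 - 0.5 * i, 1.0 + 0.1 * i) for i in range(10)]
plane, inliers = ransac_plane(floor + clutter)
print(plane, len(inliers))
```

A full structural-surface extraction would repeat this step, removing the inliers of each detected plane before searching for the next one.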

In indoor spaces with multi-room environments, room segmentation approaches divide the modeling problem into several simpler sub-problems and are conducted using the Markov clustering algorithm [4,5,6] or a space over-segmentation and merging approach [7,8,9]. The model of an indoor space is then represented as a combination of the individual rooms obtained by room segmentation. Yang et al. [14] and Mura et al. [4] conducted room segmentation in 2D and 3D models, respectively. Mura et al. [4] allocated separate rooms using the Markov clustering algorithm and built models through a multi-label energy minimization approach [28], but did not describe how inter-room connections were modeled. Yang et al. [14] detected inter-room connections using specific parameters, such as the upper and lower bounds of doors. Previtali et al. [12] and Wang et al. [13] introduced modeling methods based on a two-label (interior and exterior) energy minimization approach under the Manhattan world assumption and the 2.5D approach, respectively. These approaches detected the inter-room connections using ray-tracing or reasonable parameters (i.e., the width of doors).
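
To make the two-label (interior and exterior) energy minimization concrete, the sketch below labels a handful of cells by solving a minimum s-t cut with a small Edmonds-Karp max-flow routine. It is a generic illustration under stated assumptions: the per-cell data costs and the pairwise smoothness weights are made-up numbers, the names `min_cut_labels` and `energy` are hypothetical, and none of it reproduces the energy terms of the cited works or of the proposed method.

```python
from collections import deque


def energy(labels, data_cost, edges):
    """Sum of per-cell data costs plus a penalty for each adjacent
    pair of cells that receives different labels."""
    total = sum(c_in if lab == "interior" else c_out
                for lab, (c_in, c_out) in zip(labels, data_cost))
    return total + sum(w for i, j, w in edges if labels[i] != labels[j])


def min_cut_labels(data_cost, edges):
    """data_cost[i] = (cost if interior, cost if exterior); edges = (i, j, w).
    Cells left on the source side of the minimum cut are labeled interior."""
    n = len(data_cost)
    s, t = n, n + 1
    cap = [dict() for _ in range(n + 2)]  # residual capacities

    def add_edge(u, v, c):
        cap[u][v] = cap[u].get(v, 0) + c
        cap[v].setdefault(u, 0)

    for i, (c_in, c_out) in enumerate(data_cost):
        add_edge(s, i, c_out)  # cut when cell i is labeled exterior
        add_edge(i, t, c_in)   # cut when cell i is labeled interior
    for i, j, w in edges:
        add_edge(i, j, w)
        add_edge(j, i, w)

    while True:
        # Breadth-first search for an augmenting path in the residual graph.
        parent = {s: None}
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            break  # no augmenting path left: `parent` holds the source side
        path, v = [], t
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        flow = min(cap[u][v] for u, v in path)
        for u, v in path:
            cap[u][v] -= flow
            cap[v][u] += flow
    return ["interior" if i in parent else "exterior" for i in range(n)]


# Four cells in a row; integer costs are illustrative only.
data_cost = [(0, 5), (1, 4), (3, 2), (5, 0)]
edges = [(0, 1, 2), (1, 2, 2), (2, 3, 2)]
labels = min_cut_labels(data_cost, edges)
print(labels, energy(labels, data_cost, edges))
```

Because the pairwise term only penalizes label changes between neighbors, the minimum cut gives a globally optimal labeling for this kind of two-label energy.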

Oesau et al. [3] introduced the horizontal slicing approach, which separates multi-level buildings at the peaks of the vertical (z-axis) histogram of the point cloud distribution. The models of multi-level buildings were then represented as a combination of single-level space models. Additionally, this approach can reconstruct room-less environments by implementing the 2.5D approach. However, it cannot model complex multi-level environments such as the one depicted in Figure 1. Nikoohemat et al. [15] used a trajectory to segment the point cloud of a multi-level building. The horizontal and sloped segments of the trajectory divided the indoor space into floor and stair parts, and the generated models were represented as a combination of floor and stair models.
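
The idea of splitting a trajectory into horizontal and sloped segments can be sketched as follows. This is only an illustration of the general principle; the slope threshold and the names `classify_trajectory` and `max_floor_slope_deg` are assumptions and do not come from Nikoohemat et al. [15].

```python
import math


def classify_trajectory(trajectory, max_floor_slope_deg=10.0):
    """Label each consecutive pair of trajectory points as 'floor' or 'stair'
    according to the slope of the segment between them."""
    labels = []
    for (x0, y0, z0), (x1, y1, z1) in zip(trajectory, trajectory[1:]):
        horizontal = math.hypot(x1 - x0, y1 - y0)
        slope = math.degrees(math.atan2(abs(z1 - z0), horizontal))
        labels.append("stair" if slope > max_floor_slope_deg else "floor")
    return labels


# Hypothetical trajectory (x, y, z in meters): a flat walk, a short climb,
# then a flat walk on the upper level.
trajectory = [(0, 0, 0), (1, 0, 0), (2, 0, 0),
              (2.5, 0, 0.3), (3, 0, 0.6), (4, 0, 0.6)]
segment_labels = classify_trajectory(trajectory)
print(segment_labels)
```

Points of the cloud could then be assigned to floor or stair parts according to the nearest trajectory segment, although a real trajectory would need smoothing before such a threshold is reliable.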

In this paper, we propose a modeling method that neither uses geometric assumptions (i.e., the Manhattan world assumption and the 2.5D approach) nor converts difficult modeling problems into several simpler sub-problems (i.e., horizontal slicing and room segmentation). Furthermore, the proposed method uses a trajectory through multiple rooms and multiple floors in a building to reconstruct an indoor space model that includes inter-room and inter-floor connections.

5. Conclusions and Future Work

We proposed a method for the automatic reconstruction of indoor structures comprising inter-room and inter-floor connections in multi-level buildings from a point cloud and a trajectory. The proposed method allows the modeling of multi-room environments with inter-room connections, room-less environments, and multi-level buildings with inter-floor connections. We first constructed structural points from the registered point cloud. Then, piece-wise planar segments were extracted to decompose the indoor spaces. Finally, water-tight meshes of the indoor spaces were generated through energy minimization using the graph cut algorithm. The trajectory through multiple rooms and multiple floors within the buildings was used to determine the difference in visibility in the data term of the energy function. Experimental results for seven datasets demonstrated that the proposed method is applicable to a wide range of indoor spaces with complex environments (such as multi-room, room-less, and multi-level buildings) in a single framework. The proposed method reconstructs the target indoor space as a unique model without segmenting it into several sub-spaces. Since both the point cloud and the trajectory are used, entire indoor space models, including inter-room and inter-floor connections, can be built for multi-level buildings. However, since the reconstruction results rely on the precision of structural point extraction, manual work (e.g., noise removal) can improve the performance of the proposed method.

Future work involves the improvement of the proposed method for large-scale and room-less environments as depicted in Figure 13. Additionally, experiments in indoor spaces with curved surfaces and cylindrical pillars will be conducted.