Cyber-Physical System for Environmental Monitoring Based on Deep Learning

Abstract

1. Introduction

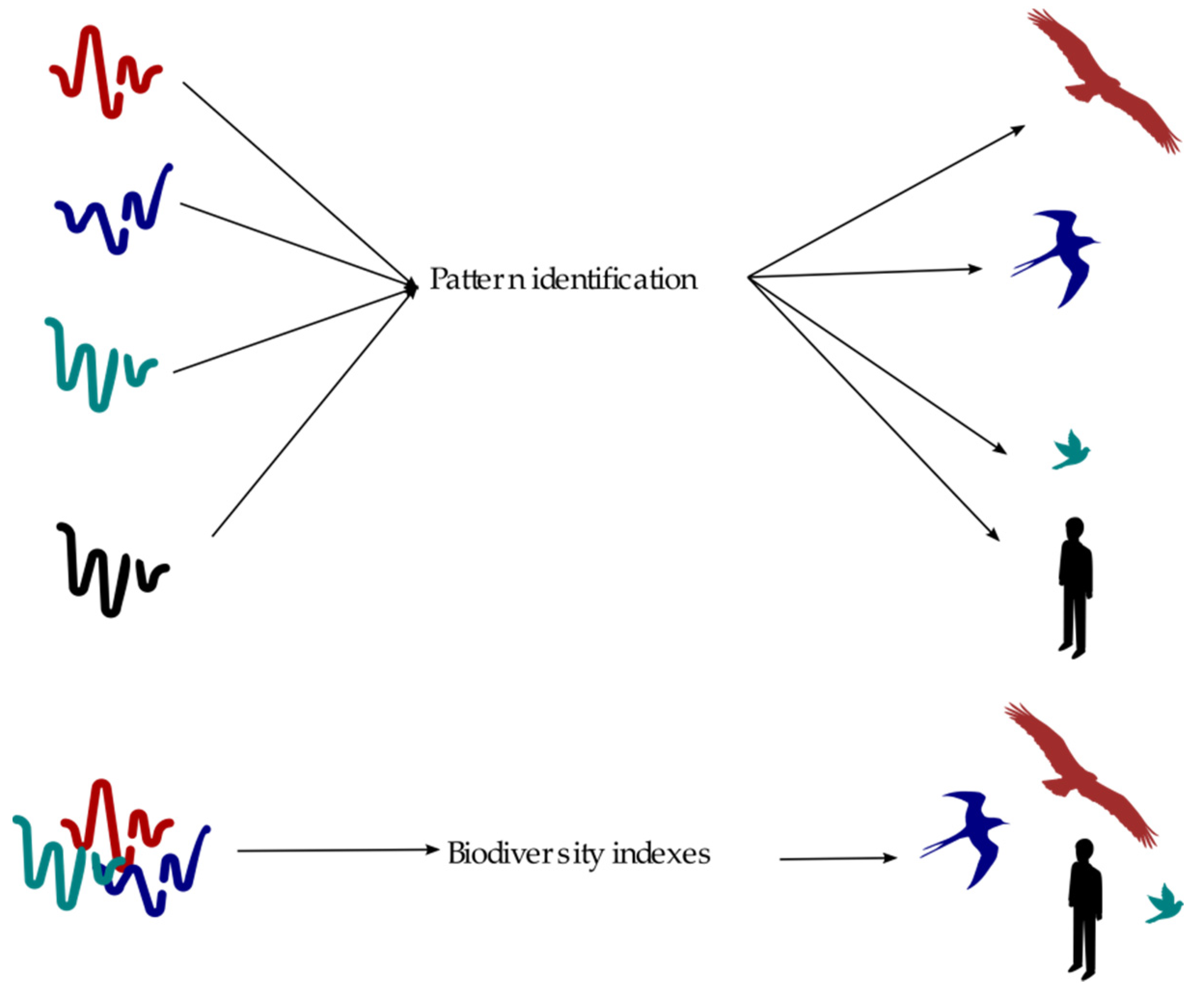

1.1. Environmental Sound Classification

1.2. Convolutional Neural Networks in Audio Classification

1.3. Previous Work

1.4. Research Objectives

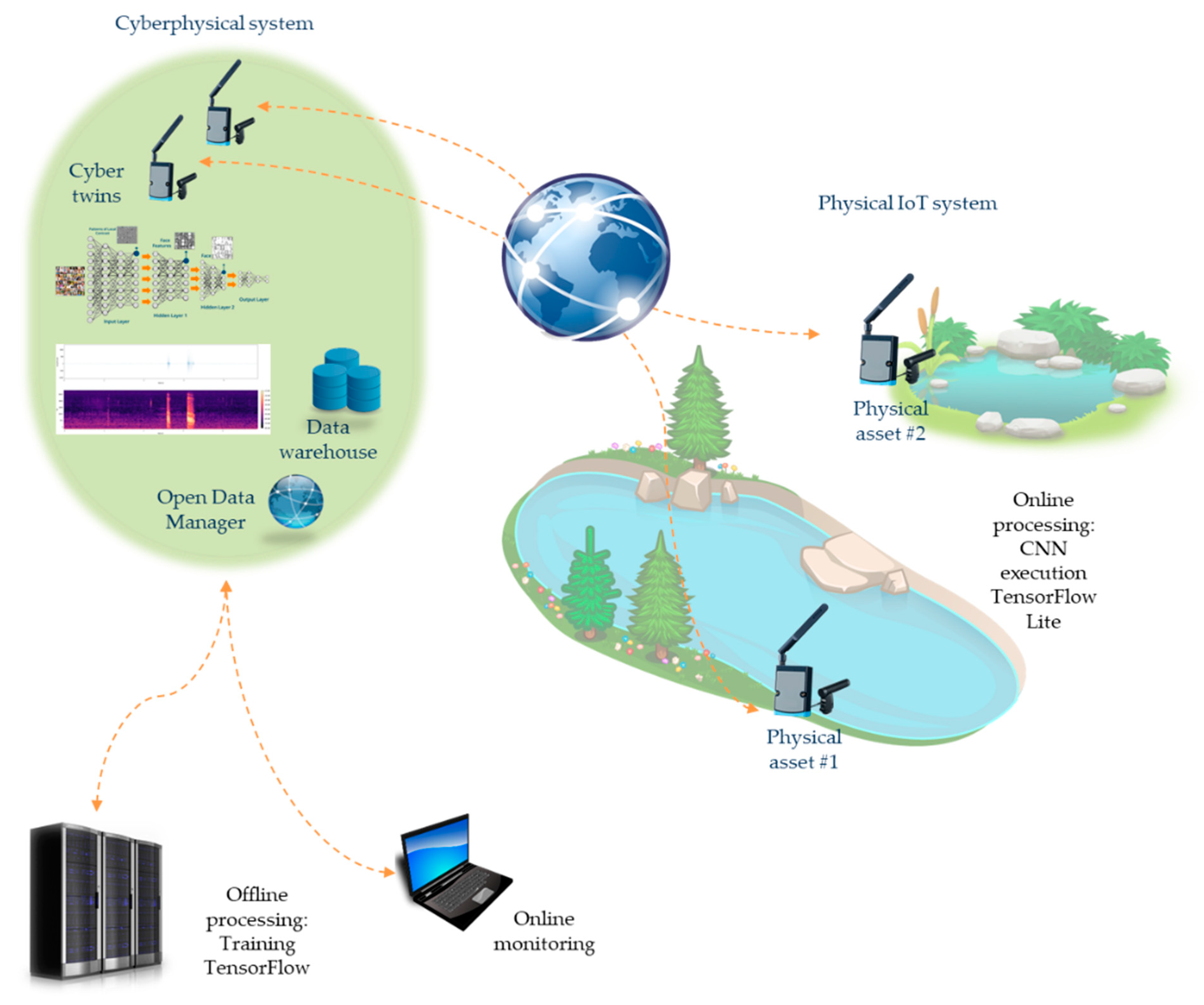

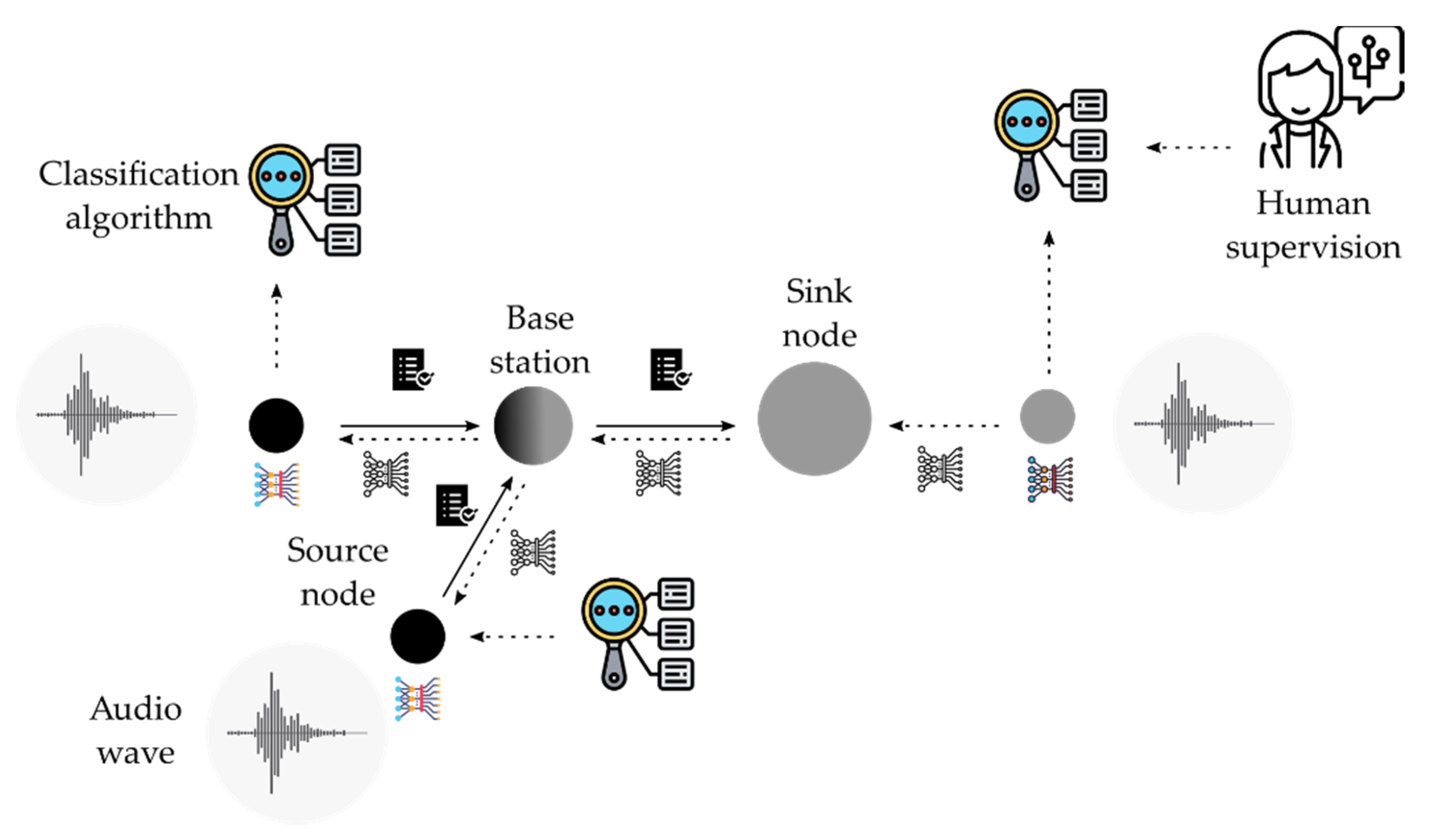

- The implementation of a cyber-physical system to optimize the execution of CNN algorithms: Audio wave processing based on CNNs [10] has been frequently proposed in the literature, as shown in Section 1.2. However, an optimal execution model must be considered in order to maximize the flexibility and adaptability of the algorithms and to minimize the execution costs (power consumption and response time). Thus, the first research objective is to propose the implementation of a cyber-physical system (CPS) that reaches these goals. Figure 3 represents the main components of the proposed CPS. The physical system is composed of IoT nodes that form an ad hoc network. These nodes are equipped with microphones adapted to natural environments, are powered by solar cells, and have a wireless interface. Every node captures the soundscape of its surroundings. The audio signal is converted to a mel-spectrogram [12], which is processed using a set of trained CNNs. This data fusion procedure reduces the amount of information to be sent through the network. The physical system is modeled in a set of servers following the digital twin paradigm [25]. This constitutes the cyber-physical part of the system. Every physical node has a model (digital twin) that can interact with other models, creating a cooperative network. At this level, the CNNs are trained with the audio data previously recorded by the physical nodes. There are as many CNNs as species or biological acoustic targets being studied. Once a CNN is trained, its structure, topology, and weights are sent to the corresponding physical node, where it is run. Each CNN execution produces identification events for a given species. Scientists can monitor the entire system through a user-friendly interface.

- The design of a CNN classification system for anuran sounds: For this second research objective, the different types of anuran vocalizations that our research group has worked on in the past (see Section 1.3) are used as the training set. Good results would demonstrate the feasibility of a CNN-based design for classifying species sounds and, more generally, biological acoustic targets. In addition, our previous work on classifying this type of sound allows us to compare the CNN results with those of other techniques. Although the results of the most recent of the previous works [23] were satisfactory, two areas needed improvement. The first was to create a system that performs a finer classification of anuran sounds, both in accuracy and in the number of sound classes distinguished. The second was to remove the manual preprocessing of the audio signals carried out in [23], which would speed up the classification process and reduce costs. The stages of the designed classification process are shown in Figure 4.
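The interaction between digital twins and physical nodes described in the first objective can be illustrated with a minimal sketch. It assumes one classifier per acoustic target, shipped from the twin to its node, and it replaces the trained CNNs with dummy callables. All class names, the decision threshold, and the list-of-lists spectrogram representation are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Sequence

# Hypothetical representation: a spectrogram as rows of floats.
Spectrogram = Sequence[Sequence[float]]


@dataclass
class ModelPackage:
    """What a digital twin ships to its node: one classifier per target."""
    target: str                              # species or acoustic target
    predict: Callable[[Spectrogram], float]  # stand-in for a trained CNN, score in [0, 1]
    threshold: float = 0.5                   # assumed decision threshold


@dataclass
class IdentificationEvent:
    node_id: str
    target: str
    score: float


@dataclass
class PhysicalNode:
    node_id: str
    models: Dict[str, ModelPackage] = field(default_factory=dict)

    def deploy(self, package: ModelPackage) -> None:
        """Install or replace the classifier for one target."""
        self.models[package.target] = package

    def process(self, spectrogram: Spectrogram) -> List[IdentificationEvent]:
        """Run every deployed classifier and keep only positive detections."""
        events = []
        for package in self.models.values():
            score = package.predict(spectrogram)
            if score >= package.threshold:
                events.append(IdentificationEvent(self.node_id, package.target, score))
        return events


@dataclass
class DigitalTwin:
    node: PhysicalNode

    def push_model(self, package: ModelPackage) -> None:
        # Training happens on the server side and is out of scope for this sketch.
        self.node.deploy(package)


# Usage with dummy classifiers standing in for trained CNNs.
node = PhysicalNode("node-01")
twin = DigitalTwin(node)
twin.push_model(ModelPackage("Epidalea calamita", predict=lambda s: 0.93))
twin.push_model(ModelPackage("Alytes obstetricans", predict=lambda s: 0.12))

events = node.process([[0.0, 0.1], [0.2, 0.3]])
for event in events:
    print(event.node_id, event.target, event.score)
```

In this sketch only the identification events leave the node, which is the property that reduces network traffic.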

2. Material and Methods

2.1. Cyber-Physical System

- Sensors acquire data and send them to a base station, from where they are forwarded to a central processing entity. In this scenario, data are sent raw or with only basic processing, and they may be aggregated to ease the management of the communications. The main drawback of this approach is the large volume of data traffic that the communication infrastructure must carry, which is especially limiting in low-bandwidth systems.

- Sensors acquire data and process them locally. In this scenario, a data fusion algorithm is executed locally in every remote node or in a cluster of nodes that cooperate among themselves. Only relevant information is sent to sink nodes, which deliver it to supervising and control entities. This sort of architecture reduces the data traffic and, consequently, the power consumption. The bandwidth required by these nodes can be minimized, which allows the use of low-power wireless sensor network paradigms such as IEEE 802.15.4™ [26], LoRa® (LoRa Alliance, Fremont, CA, USA, https://lora-alliance.org, accessed on 19 May 2021) [27], etc. The main drawback of these systems is the need to adapt the data fusion algorithm to nodes with processing and power consumption constraints.
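The difference in data traffic between the two scenarios can be made concrete with some rough arithmetic. The sketch below compares the size of one raw audio window with the size of one identification event. The window length, sampling rate, sample width, and event size are all assumed values chosen for illustration and do not come from the paper.

```python
def raw_audio_bytes(duration_s, sample_rate_hz, bytes_per_sample, channels=1):
    """Size in bytes of an uncompressed PCM audio window."""
    return int(duration_s * sample_rate_hz * bytes_per_sample * channels)


# Assumed values (not from the paper).
WINDOW_S = 3.0            # length of one analysis window
SAMPLE_RATE_HZ = 44_100   # CD-quality sampling rate
BYTES_PER_SAMPLE = 2      # 16-bit mono PCM
EVENT_BYTES = 16          # hypothetical event: node id, timestamp, class id, score

raw = raw_audio_bytes(WINDOW_S, SAMPLE_RATE_HZ, BYTES_PER_SAMPLE)
ratio = raw / EVENT_BYTES
raw_bitrate_bps = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * 8

print(f"raw window: {raw} bytes, event: {EVENT_BYTES} bytes, ratio: {ratio:.1f}x")
print(f"continuous raw stream: {raw_bitrate_bps / 1000:.1f} kbit/s")
```

Under these assumptions, a continuous raw stream needs about 705.6 kbit/s, which is above the 250 kbit/s nominal rate of the IEEE 802.15.4 2.4 GHz physical layer. A short event message per window fits comfortably within it.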

2.2. CNN Classification System

2.2.1. Data Augmentation

- 2 files with two types of white noise added to the audio.

- 4 files with signal time shifts (1, 1.25, 1.75, and 2 seconds, respectively).

- 4 files with modifications in the amplitude of the signal: 2 with amplifications of 20% and 40%, and 2 with attenuations of 20% and 40%. Specifically, dynamic compression [31] was used for this processing. It reduces the volume of loud sounds and amplifies the quiet ones by a given percentage. The aim was to generate new signals in which the background sound is enhanced and the sound of the anuran is reduced.
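The three kinds of augmentation listed above can be sketched as follows, producing 10 variants per recording. This is a simplified illustration and not the authors' procedure. The two noise levels are assumed, because the text does not specify the "two types" of white noise. The time shift is assumed to be circular. The amplitude changes use plain gain scaling in place of the dynamic compression [31] used in the paper.

```python
import math
import random


def add_white_noise(signal, noise_std, rng):
    """Add zero-mean Gaussian noise with the given standard deviation."""
    return [x + rng.gauss(0.0, noise_std) for x in signal]


def time_shift(signal, shift_s, sample_rate_hz):
    """Circular right shift (assumption: edge handling is not described in the text)."""
    k = int(round(shift_s * sample_rate_hz)) % len(signal)
    return signal[-k:] + signal[:-k] if k else list(signal)


def scale_amplitude(signal, gain):
    """Plain gain scaling, used here in place of dynamic compression."""
    return [x * gain for x in signal]


def augment(signal, sample_rate_hz, seed=0):
    """Return the 10 augmented variants of one recording."""
    rng = random.Random(seed)
    variants = []
    for noise_std in (0.005, 0.01):            # assumed noise levels
        variants.append(add_white_noise(signal, noise_std, rng))
    for shift_s in (1.0, 1.25, 1.75, 2.0):     # shifts listed in the text
        variants.append(time_shift(signal, shift_s, sample_rate_hz))
    for gain in (1.2, 1.4, 0.8, 0.6):          # +20%, +40%, -20%, -40%
        variants.append(scale_amplitude(signal, gain))
    return variants


# Usage on a synthetic 3 s, 440 Hz tone (stand-in for a real recording).
sr = 8000
signal = [0.5 * math.sin(2 * math.pi * 440 * t / sr) for t in range(3 * sr)]
variants = augment(signal, sr)
print(len(variants), "variants,", len(variants) + 1, "files per recording")
```

Each recording therefore yields 11 files (the original plus 10 variants), which is consistent with the reported sample counts, for example 293 × 11 = 3223.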

2.2.2. Mel-Spectrogram Generation

2.2.3. Design and Training

3. Results

3.1. CPS Performance

3.2. CNN Models

4. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- United Nations. The Millennium Development Goals Report 2014; Department of Economic and Social Affairs: New York, NY, USA, 2014.

- Bradfer-Lawrence, T.; Gardner, N.; Bunnefeld, L.; Bunnefeld, N.; Willis, S.G.; Dent, D.H. Guidelines for the use of acoustic indices in environmental research. Methods Ecol. Evol. 2019, 10, 1796–1807.

- Wildlife Acoustics. Available online: http://www.wildlifeacoustics.com (accessed on 23 February 2021).

- Hill, A.P.; Prince, P.; Covarrubias, E.P.; Doncaster, C.P.; Snaddon, J.L.; Rogers, A. AudioMoth: Evaluation of a smart open acoustic device for monitoring biodiversity and the environment. Methods Ecol. Evol. 2018, 9, 1199–1211.

- Campos-Cerqueira, M.; Aide, T.M. Improving distribution data of threatened species by combining acoustic monitoring and occupancy modelling. Methods Ecol. Evol. 2016, 7, 1340–1348.

- Goeau, H.; Glotin, H.; Vellinga, W.P.; Planque, R.; Joly, A. LifeCLEF Bird Identification Task 2016: The arrival of deep learning, CLEF 2016 Work. Notes; Balog, K., Cappellato, L., Eds.; The CLEF Initiative: Évora, Portugal, 5–8 September 2016; pp. 440–449. Available online: http://ceur-ws.org/Vol-1609/ (accessed on 19 May 2021).

- Farina, A.; James, P. The acoustic communities: Definition, description and ecological role. Biosystems 2016, 147, 11–20.

- Mac Aodha, O.; Gibb, R.; Barlow, K.E.; Browning, E.; Firman, M.; Freeman, R.; Harder, B.; Kinsey, L.; Mead, G.R.; Newson, S.E.; et al. Bat detective—Deep learning tools for bat acoustic signal detection. PLoS Comput. Biol. 2018, 14, e1005995.

- Karpištšenko, A. The Marinexplore and Cornell University Whale Detection Challenge. 2013. Available online: https://www.kaggle.com/c/whale-detection-challenge/discussion/4472 (accessed on 19 May 2021).

- Khan, A.; Sohail, A.; Zahoora, U.; Qureshi, A.S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 2020, 53, 5455–5516.

- Dhillon, A.; Verma, G.K. Convolutional neural network: A review of models, methodologies and applications to object detection. Prog. Artif. Intell. 2019, 9, 85–112.

- Dorfler, M.; Bammer, R.; Grill, T. Inside the spectrogram: Convolutional Neural Networks in audio processing. In Proceedings of the 2017 International Conference on Sampling Theory and Applications (SampTA), Tallinn, Estonia, 3–7 July 2017; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2017; pp. 152–155.

- Nanni, L.; Rigo, A.; Lumini, A.; Brahnam, S. Spectrogram Classification Using Dissimilarity Space. Appl. Sci. 2020, 10, 4176.

- Bento, N.; Belo, D.; Gamboa, H. ECG Biometrics Using Spectrograms and Deep Neural Networks. Int. J. Mach. Learn. Comput. 2020, 10, 259–264.

- Mushtaq, Z.; Su, S.-F. Environmental sound classification using a regularized deep convolutional neural network with data augmentation. Appl. Acoust. 2020, 167, 107389.

- Xie, J.; Hu, K.; Zhu, M.; Yu, J.; Zhu, Q. Investigation of Different CNN-Based Models for Improved Bird Sound Classification. IEEE Access 2019, 7, 175353–175361.

- Chi, Z.; Li, Y.; Chen, C. Deep Convolutional Neural Network Combined with Concatenated Spectrogram for Environmental Sound Classification. In Proceedings of the 2019 IEEE 7th International Conference on Computer Science and Network Technology (ICCSNT), Dalian, China, 19–20 October 2019; Institute of Electrical and Electronics Engineers (IEEE): New York, NY, USA, 2019; pp. 251–254.

- Colonna, J.; Peet, T.; Ferreira, C.A.; Jorge, A.M.; Gomes, E.F.; Gama, J. Automatic Classification of Anuran Sounds Using Convolutional Neural Networks. In Proceedings of the Ninth International C* Conference on Computer Science & Software Engineering—C3S2E, Porto, Portugal, 20–22 July 2016; Desai, E., Ed.; Association for Computing Machinery: New York, NY, USA, 2016; pp. 73–78.

- Strout, J.; Rogan, B.; Seyednezhad, S.M.; Smart, K.; Bush, M.; Ribeiro, E. Anuran call classification with deep learning. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; IEEE: New York, NY, USA, 2017; pp. 2662–2665.

- Luque, J.; Larios, D.F.; Personal, E.; Barbancho, J.; León, C. Evaluation of MPEG-7-Based Audio Descriptors for Animal Voice Recognition over Wireless Acoustic Sensor Networks. Sensors 2016, 16, 717.

- Luque, A.; Romero-Lemos, J.; Carrasco, A.; Barbancho, J. Non-sequential automatic classification of anuran sounds for the estimation of climate-change indicators. Expert Syst. Appl. 2018, 95, 248–260.

- Luque, A.; Gómez-Bellido, J.; Carrasco, A.; Barbancho, J. Optimal Representation of Anuran Call Spectrum in Environmental Monitoring Systems Using Wireless Sensor Networks. Sensors 2018, 18, 1803.

- Luque, A.; Romero-Lemos, J.; Carrasco, A.; Barbancho, J. Improving Classification Algorithms by Considering Score Series in Wireless Acoustic Sensor Networks. Sensors 2018, 18, 2465.

- Fonozoo. Available online: www.fonozoo.com (accessed on 23 February 2021).

- Haag, S.; Anderl, R. Digital twin—Proof of concept. Manuf. Lett. 2018, 15, 64–66.

- Howitt, I.; Gutierrez, J. IEEE 802.15.4 low rate—Wireless personal area network coexistence issues. In Proceedings of the 2003 IEEE Wireless Communications and Networking, 2003. WCNC 2003; Institute of Electrical and Electronics Engineers (IEEE): New Orleans, LA, USA, 2004.

- Augustin, A.; Yi, J.; Clausen, T.; Townsley, W.M. A Study of LoRa: Long Range & Low Power Networks for the Internet of Things. Sensors 2016, 16, 1466.

- Byrd, R.H.; Chin, G.M.; Nocedal, J.; Wu, Y. Sample size selection in optimization methods for machine learning. Math. Program. 2012, 134, 127–155.

- Petäjäjärvi, J.; Mikhaylov, K.; Pettissalo, M.; Janhunen, J.; Iinatti, J.H. Performance of a low-power wide-area network based on LoRa technology: Doppler robustness, scalability, and coverage. Int. J. Distrib. Sens. Netw. 2017, 13.

- Chollet, F. Keras. 2015. Available online: https://github.com/fchollet/keras (accessed on 19 May 2021).

- Wilmering, T.; Moffat, D.; Milo, A.; Sandler, M.B. A History of Audio Effects. Appl. Sci. 2020, 10, 791.

- Agarap, A.F. Deep learning using rectified linear units (relu). arXiv 2018, arXiv:1803.08375.

- Patterson, J.; Gibson, A. Understanding Learning Rates. Deep Learning: A Practitioner’s Approach, 1st ed.; Mike Loukides, Tim McGovern, O’Reilly: Sebastopol, CA, USA, 2017; Chapter 6; pp. 415–530.

- Zhong, H.; Chen, Z.; Qin, C.; Huang, Z.; Zheng, V.W.; Xu, T.; Chen, E. Adam revisited: A weighted past gradients perspective. Front. Comput. Sci. 2020, 14, 1–16.

| Species | Vocalization | Number of Original Samples | Number of Augmented Samples |
|---|---|---|---|
| Epidalea calamita | standard | 293 | 3223 |
| Epidalea calamita | chorus | 74 | 814 |
| Epidalea calamita | amplexus | 63 | 693 |
| Alytes obstetricans | standard | 419 | 4609 |
| Alytes obstetricans | distress call | 16 | 176 |

| Actual (rows) / Predicted (columns) | Ep. cal. st&ch | Ep. cal. amplexus | Al. obs. standard | Al. obs. distress |
|---|---|---|---|---|
| Ep. cal. st&ch | 96.51% (775) | 3.49% (28) | 0 | 0 |
| Ep. cal. amplexus | 4.9% (7) | 95.10% (136) | 0 | 0 |
| Al. obs. standard | 1.21% (11) | 0 | 98.79% (902) | 0 |
| Al. obs. distress | 2.28% (1) | 0 | 0 | 97.72% (43) |

| Actual (rows) / Predicted (columns) | Ep. cal. standard | Ep. cal. chorus | Ep. cal. amplexus | Al. obs. standard | Al. obs. distress |
|---|---|---|---|---|---|
| Ep. cal. standard | 94.27% (560) | 1.01% (6) | 4.71% (28) | 0 | 0 |
| Ep. cal. chorus | 4.78% (10) | 95.22% (199) | 0 | 0 | 0 |
| Ep. cal. amplexus | 9.09% (13) | 0 | 90.91% (130) | 0 | 0 |
| Al. obs. standard | 1.2% (11) | 0 | 0 | 98.8% (902) | 0 |
| Al. obs. distress | 2.28% (1) | 0 | 0 | 0 | 97.72% (43) |
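The per-row percentages in the confusion matrices above are per-class recalls. As a worked example, the sketch below recomputes recall, precision, and overall accuracy from the sample counts given in parentheses in the two matrices. The function names are illustrative, and the overall accuracy figures are derived here from those counts and are not quoted from the paper.

```python
# Counts copied from the two confusion matrices (rows: actual, columns: predicted).
LABELS_5 = ["Ep. cal. standard", "Ep. cal. chorus", "Ep. cal. amplexus",
            "Al. obs. standard", "Al. obs. distress"]
CM_5 = [
    [560,   6,  28,   0,  0],
    [ 10, 199,   0,   0,  0],
    [ 13,   0, 130,   0,  0],
    [ 11,   0,   0, 902,  0],
    [  1,   0,   0,   0, 43],
]
CM_4 = [
    [775,  28,   0,  0],
    [  7, 136,   0,  0],
    [ 11,   0, 902,  0],
    [  1,   0,   0, 43],
]


def accuracy(cm):
    """Fraction of all samples that lie on the diagonal."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def recall_per_class(cm):
    """Diagonal count divided by the row total (actual class size)."""
    return [cm[i][i] / sum(cm[i]) for i in range(len(cm))]


def precision_per_class(cm):
    """Diagonal count divided by the column total (predicted class size)."""
    n = len(cm)
    col_sums = [sum(cm[r][c] for r in range(n)) for c in range(n)]
    return [cm[c][c] / col_sums[c] if col_sums[c] else 0.0 for c in range(n)]


acc_4 = accuracy(CM_4)
acc_5 = accuracy(CM_5)
recall_5 = recall_per_class(CM_5)
precision_5 = precision_per_class(CM_5)

print(f"overall accuracy: 4 classes {acc_4:.4f}, 5 classes {acc_5:.4f}")
for label, r, p in zip(LABELS_5, recall_5, precision_5):
    print(f"{label:20s} recall {r:.4f}  precision {p:.4f}")
```

Both matrices cover the same 1903 test samples. Splitting the standard and chorus calls of Epidalea calamita into separate classes lowers the computed overall accuracy slightly, from about 97.5% to about 96.4%.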

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Monedero, Í.; Barbancho, J.; Márquez, R.; Beltrán, J.F. Cyber-Physical System for Environmental Monitoring Based on Deep Learning. Sensors 2021, 21, 3655. https://doi.org/10.3390/s21113655