Fault-Adaptive Autonomy in Systems with Learning-Enabled Components

Abstract

1. Introduction

- The novel system architecture, which makes mission execution robust to faults and anomalies;

- The use of an assurance monitor that complements the predictions of the fault detection and isolation (FDI) LEC with credibility and confidence metrics;

- The design of an assurance evaluator that decides whether a particular classification can be trusted; this decision is based on requirements on the acceptable risk of each decision and on the desired frequency of accepted classifications;

- The evaluation of the fault-adaptive system on an AUV example in ROS/Gazebo-based simulations, with more than 400 executions in various hazardous or faulty environments.

2. Background

2.1. Autonomous Vehicles

2.2. LECs in Autonomous Vehicles

2.3. Behavior Trees for Autonomy

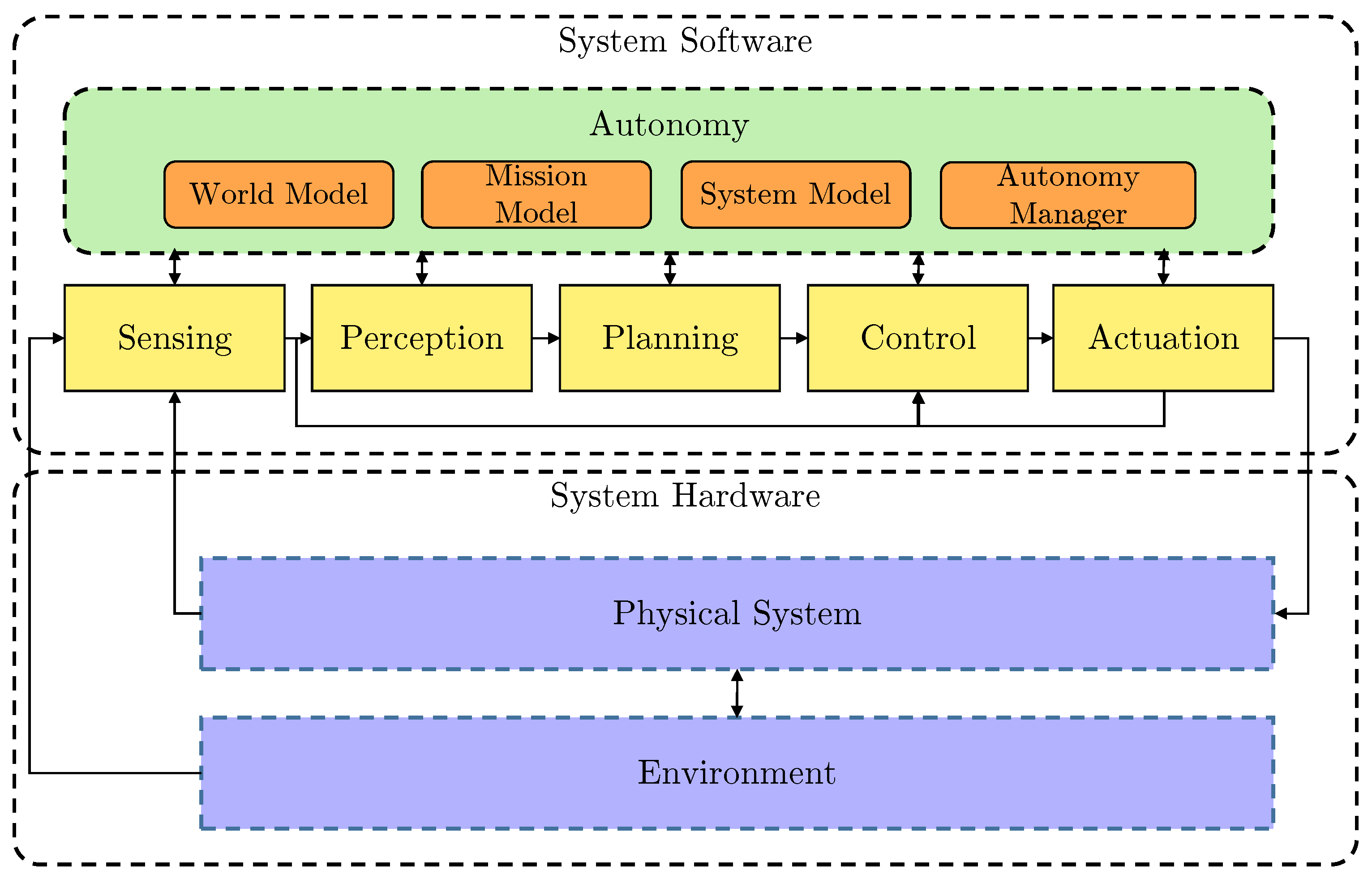

3. System Architecture

3.1. Fault-Adaptive Autonomy

3.2. Evaluation Metrics

- FDI LEC recall and accuracy (ground truth vs. LEC output)

- FDI LEC recall and accuracy with the assurance monitor (AM) and assurance evaluator (AE) (ground truth vs. LEC + AM + AE output)

- Mission execution time (s)

- Average cross-track error during mission (m)
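
For the cross-track error metric, a minimal sketch, assuming a 2D mission path made of straight waypoint segments and position samples tagged with their active segment. The function names are hypothetical, and the exact computation used in the simulations may differ.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def cross_track_error(p: Point, a: Point, b: Point) -> float:
    """Perpendicular distance (m) from position p to the line through waypoints a and b."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0.0:
        # Degenerate segment: fall back to the distance to the waypoint itself.
        return math.hypot(px - ax, py - ay)
    # |2D cross product| / segment length = distance to the infinite line.
    return abs(dx * (py - ay) - dy * (px - ax)) / length


def average_cross_track_error(samples: Sequence[Tuple[Point, Point, Point]]) -> float:
    """Mean error over (position, segment start, segment end) samples of one mission."""
    return sum(cross_track_error(p, a, b) for p, a, b in samples) / len(samples)
```

Averaging the unsigned distance, rather than the signed one, keeps deviations to the left and right of the path from cancelling out.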

4. Approach

4.1. Vehicle Details

4.2. Implementation

4.2.1. Autonomy Manager

- BATTERY_LOW, when the battery level is equal to or less than the AUV failsafe low-battery threshold. Selected action: surface the AUV.

- SENSOR_FAILURE, when a sensor failure occurs (e.g., of the RPM sensor). Selected action: surface the AUV.

- OBSTACLE_STANDOFF, when a detected obstacle is closer than the given mission-level threshold.

- RTH (return to home), when the battery level reaches the boundary point relative to the distance from home, i.e., the last chance to return home with the current battery level. Selected action: RTH, if the function is enabled.

- GEOFENCE, when the AUV reaches the maximum distance from home. Selected action: RTH.

- PIPE_LOST, when the pipe has been lost for longer than the failsafe tracking-lost threshold. This threshold is 120 s by default, which is long enough to avoid false positives caused by buried sections of pipe. If the pipe is lost during pipe tracking, the AUV enters loiter mode and returns to pipe tracking mode once the pipe is detected again.
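
The failsafe conditions above can be sketched as one check over the vehicle state. Only the 120 s pipe-lost default comes from the text; every other field name and threshold, and the linear battery-per-meter model used for the RTH boundary, is a hypothetical placeholder. The sketch reports every triggered failsafe, since the priority among simultaneous failsafes is not specified here.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class FailsafeConfig:
    # Hypothetical defaults, except pipe_lost_s (120 s is the default given in the text).
    battery_low_pct: float = 20.0
    obstacle_standoff_m: float = 5.0
    max_home_distance_m: float = 500.0
    pct_per_meter_home: float = 0.02  # assumed battery % needed per meter of return
    rth_enabled: bool = True
    pipe_lost_s: float = 120.0


@dataclass
class VehicleState:
    battery_pct: float
    sensor_failed: bool
    obstacle_distance_m: Optional[float]  # None when no obstacle is detected
    home_distance_m: float
    seconds_since_pipe_seen: float


def check_failsafes(s: VehicleState, c: FailsafeConfig) -> List[Tuple[str, Optional[str]]]:
    """Return (failsafe, selected action) for every triggered condition.

    An action of None means the text names no action here, or RTH is disabled."""
    triggered: List[Tuple[str, Optional[str]]] = []
    if s.battery_pct <= c.battery_low_pct:
        triggered.append(("BATTERY_LOW", "SURFACE"))
    if s.sensor_failed:
        triggered.append(("SENSOR_FAILURE", "SURFACE"))
    if s.obstacle_distance_m is not None and s.obstacle_distance_m < c.obstacle_standoff_m:
        triggered.append(("OBSTACLE_STANDOFF", None))
    # Last chance to get home: remaining charge no longer exceeds the assumed return cost.
    if s.battery_pct <= s.home_distance_m * c.pct_per_meter_home:
        triggered.append(("RTH", "RTH" if c.rth_enabled else None))
    if s.home_distance_m >= c.max_home_distance_m:
        triggered.append(("GEOFENCE", "RTH"))
    if s.seconds_since_pipe_seen > c.pipe_lost_s:
        triggered.append(("PIPE_LOST", None))
    return triggered
```

For example, a vehicle with 50% battery, 100 m from home, that has not seen the pipe for 130 s triggers only PIPE_LOST.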

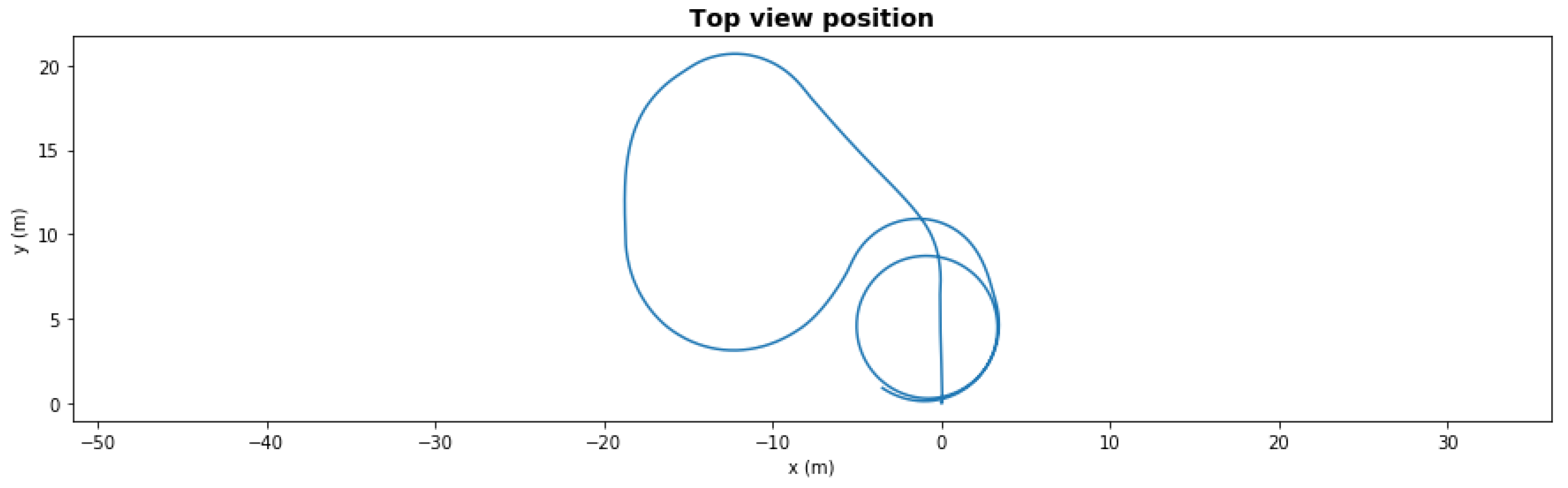

4.2.2. Mission Execution

4.3. LEC Assurance Monitoring and Decision Making

4.3.1. Learning-Enabled Component for Fault Detection and Isolation

4.3.2. Assurance Monitoring

4.3.3. Assurance Evaluator

4.4. Decision Making

4.5. Assurance Monitor Design and Execution

| Algorithm 1 Design time |

| Input: proper training data , calibration data , offline test data . |

| 1: Train the classification LEC f with as training set and as validation set. |

| 2: Train the Siamese network with as training set and as validation set. |

| 3: // Compute the nonconformity scores for using Equation (1). |

| 4: . |

| 5: Compute the p-values for all the classes of the data in using Equation (2). |

| 6: Compute the credibility and confidence for the data in using Equations (3) and (4). |

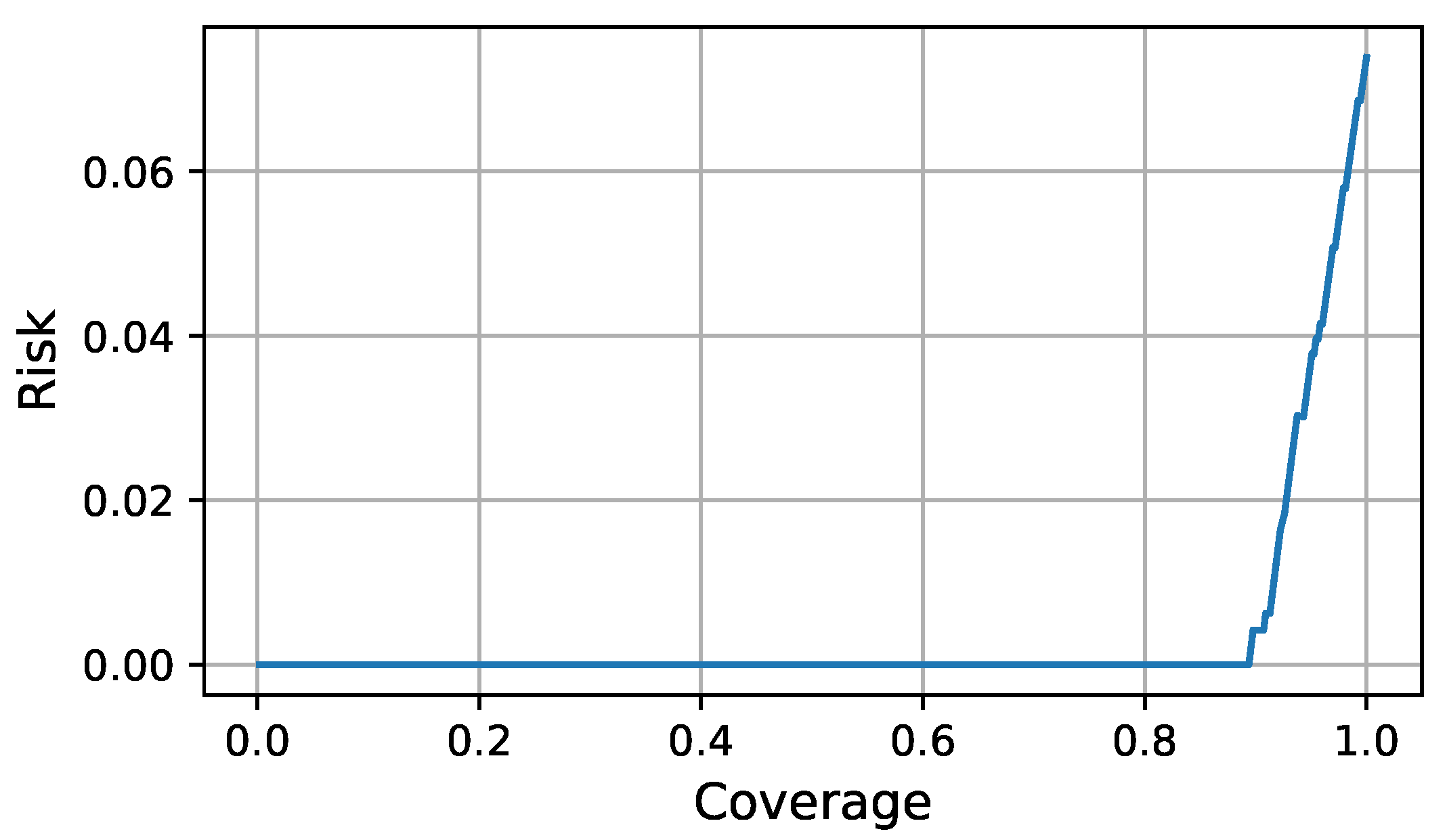

| 7: Perform a grid search to compute the coefficients to define the evaluator function k shown in Equation (9) to minimize the AURC shown in Equation (8). |

| 8: Construct the set . |

| 9: Using every value in as a threshold for the selective function g, plot the Risk-Coverage curve according to Equations (6) and (7). This is used to select an operation point (threshold) according to the application requirements. |
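
Steps 7 to 9 rely on selective-classification quantities whose definitions (Equations (6) to (9)) are not reproduced in this section. The sketch below uses common forms, all of which are assumptions: coverage as the fraction of accepted inputs, selective risk as the error rate among accepted inputs, AURC as the mean selective risk over all coverage levels, and a hypothetical linear evaluator k = a·cred + (1 − a)·conf for the grid search.

```python
from typing import List, Sequence, Tuple


def risk_coverage(scores: Sequence[float], correct: Sequence[bool], threshold: float) -> Tuple[float, float]:
    """Coverage and selective risk when inputs with evaluator score >= threshold are accepted."""
    accepted = [ok for s, ok in zip(scores, correct) if s >= threshold]
    coverage = len(accepted) / len(scores)
    risk = 1.0 - sum(accepted) / len(accepted) if accepted else 0.0
    return coverage, risk


def risk_coverage_curve(scores: Sequence[float], correct: Sequence[bool]) -> List[Tuple[float, float]]:
    """One (coverage, risk) point per candidate threshold, i.e. per observed score."""
    return [risk_coverage(scores, correct, t) for t in sorted(set(scores))]


def aurc(scores: Sequence[float], correct: Sequence[bool]) -> float:
    """Mean selective risk over coverages 1/n, 2/n, ..., 1, accepting the most trusted first."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    errors, total = 0, 0.0
    for rank, i in enumerate(order, start=1):
        errors += 0 if correct[i] else 1
        total += errors / rank
    return total / len(order)


def grid_search_linear_evaluator(
    cred: Sequence[float], conf: Sequence[float], correct: Sequence[bool], steps: int = 11
) -> Tuple[float, float]:
    """Hypothetical evaluator k = a*cred + (1 - a)*conf; return (lowest AURC, best a)."""
    best = (float("inf"), 0.0)
    for i in range(steps):
        a = i / (steps - 1)
        scores = [a * c1 + (1.0 - a) * c2 for c1, c2 in zip(cred, conf)]
        value = aurc(scores, correct)
        if value < best[0]:
            best = (value, a)
    return best
```

Because this AURC depends only on how the evaluator ranks inputs, rescaling k does not change it; the threshold for g is then chosen afterwards from the risk-coverage curve.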

| Algorithm 2 Execution time |
|---|
| Input: Classification LEC f, Siamese network , nonconformity scores A, evaluator function k, threshold for the selective function g, test input . |
| 1: Compute the classification . |
| 2: Compute the embedding representation . |
| 3: for each possible class j do |
| 4: Compute the nonconformity score using Equation (1). |
| 5: . |
| 6: end for |
| 7: Compute the credibility and confidence for according to Equations (3) and (4). |
| 8: if then |
| 9: return . |
| 10: else |
| 11: return No decision. |
| 12: end if |
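
A compact sketch of Algorithm 2. Equation (1) is not reproduced here, so the nonconformity function is an assumption: a k-nearest-neighbor score in embedding space (summed distances to same-label neighbors over summed distances to other-label neighbors), a common choice with Siamese embeddings. The `embed` callable stands in for the Siamese network, p-values use the plain fraction-of-calibration-scores form, and all names are hypothetical.

```python
import math
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def knn_nonconformity(z: Vector, label: int, ref: List[Tuple[Vector, int]], k: int = 3) -> float:
    """Sum of distances to the k nearest reference embeddings labeled `label`,
    divided by the sum of distances to the k nearest with any other label."""
    same = sorted(math.dist(z, r) for r, y in ref if y == label)[:k]
    other = sorted(math.dist(z, r) for r, y in ref if y != label)[:k]
    return sum(same) / (sum(other) + 1e-12)  # epsilon avoids division by zero


def classify_with_assurance(
    x: Vector,
    f: Callable[[Vector], int],                  # classification LEC
    embed: Callable[[Vector], Vector],           # stands in for the Siamese network
    ref: List[Tuple[Vector, int]],               # embedded training data with labels
    calib_scores: Sequence[float],               # nonconformity scores A (calibration data)
    classes: Sequence[int],
    evaluator: Callable[[float, float], float],  # k(credibility, confidence)
    threshold: float,                            # operating point of the selective function g
    k: int = 3,
) -> Optional[int]:
    y_hat = f(x)
    z = embed(x)
    p: Dict[int, float] = {}
    for j in classes:
        alpha = knn_nonconformity(z, j, ref, k)
        p[j] = sum(1 for a in calib_scores if a >= alpha) / len(calib_scores)
    credibility = p[y_hat]
    confidence = 1.0 - max(v for j, v in p.items() if j != y_hat)
    if evaluator(credibility, confidence) >= threshold:
        return y_hat
    return None  # "No decision"
```

In this sketch the calibration scores A are computed once at design time, e.g. `[knn_nonconformity(embed(x), y, ref) for x, y in calibration]`, so each run-time call only touches the embedding of the new input.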

4.6. Decision-Making Execution: AUV Example

| Algorithm 3 Execution-time Steps for BlueROV example |
|---|
| Input: Classification LEC f, Siamese network , nonconformity scores A, evaluator function k, threshold for the selective function g, real-time input . |
| 1: Compute the classification . |
| 2: Compute the embedding representation . |
| 3: for each possible class j do |
| 4: Compute the nonconformity score using Equation (1). |
| 5: . |
| 6: end for |
| 7: Compute the credibility and confidence for according to Equations (3) and (4). |
| 8: if then |
| 9: Get degraded thruster id and efficiency from class |
| 10: if then |
| 11: return Nominal State. |
| 12: else |
| 13: if is in then |
| 14: Show ‘Z axis degradation warning’ |
| 15: return Degraded State - no control reconfiguration required |
| 16: else |
| 17: if then |
| 18: Show ‘Severe XY axis degradation warning’ |
| 19: Get thruster pair from definition |
| 20: Turn off and |
| 21: else |
| 22: Show ‘Mild XY axis degradation warning’ |
| 23: Get thruster pair from definition |
| 24: Perform control reallocation - set to to balance torque loss |
| 25: end if |
| 26: return Degraded State - control reconfiguration complete |
| 27: end if |
| 28: end if |
| 29: else |
| 30: return Nominal State - LEC output not trustworthy. |
| 31: end if |
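
The branching in steps 9 to 31 can be sketched as follows. The class decoding follows the fault-injection tables in this article (class = 5 × thruster ID + efficiency-level index, for classes 0 to 19); treating every other class as "no degradation", the set of Z-axis thrusters, the thruster pairing, the severe/mild boundary, and the exact reallocation rule in step 24 are all hypothetical assumptions, not the authors' implementation.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Set, Tuple

# Efficiency levels (%) per thruster, in class order, as in the fault-injection tables.
EFFICIENCY_LEVELS = [41.0, 56.5, 66.5, 76.5, 86.5]


def decode_class(c: int) -> Optional[Tuple[int, float]]:
    """Classes 0-19 -> (thruster id, efficiency %); any other class is assumed nominal."""
    if 0 <= c < 5 * 4:
        return c // 5, EFFICIENCY_LEVELS[c % 5]
    return None


@dataclass
class ReconfigPolicy:
    z_thrusters: Set[int]           # hypothetical: vertical (Z axis) thrusters
    pairs: Dict[int, int]           # hypothetical: XY thruster -> paired thruster
    severe_below_pct: float = 50.0  # hypothetical severe/mild boundary


def handle_fdi(c: Optional[int], policy: ReconfigPolicy, thrust_limit: Dict[int, float]) -> str:
    """c is the class accepted by the assurance evaluator, or None if it was rejected.
    thrust_limit (thruster id -> % of full output) is updated in place."""
    if c is None:
        return "Nominal State - LEC output not trustworthy"
    decoded = decode_class(c)
    if decoded is None:
        return "Nominal State"
    tid, eff = decoded
    if tid in policy.z_thrusters:
        print("Z axis degradation warning")
        return "Degraded State - no control reconfiguration required"
    pair = policy.pairs[tid]
    if eff < policy.severe_below_pct:
        print("Severe XY axis degradation warning")
        thrust_limit[tid] = 0.0   # turn off the degraded thruster
        thrust_limit[pair] = 0.0  # and its pair
    else:
        print("Mild XY axis degradation warning")
        thrust_limit[pair] = eff  # assumed: limit the pair to the degraded efficiency
    return "Degraded State - control reconfiguration complete"
```

Keeping the thrust limits in a mutable map mirrors the idea that reconfiguration changes the control allocation rather than the mission plan.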

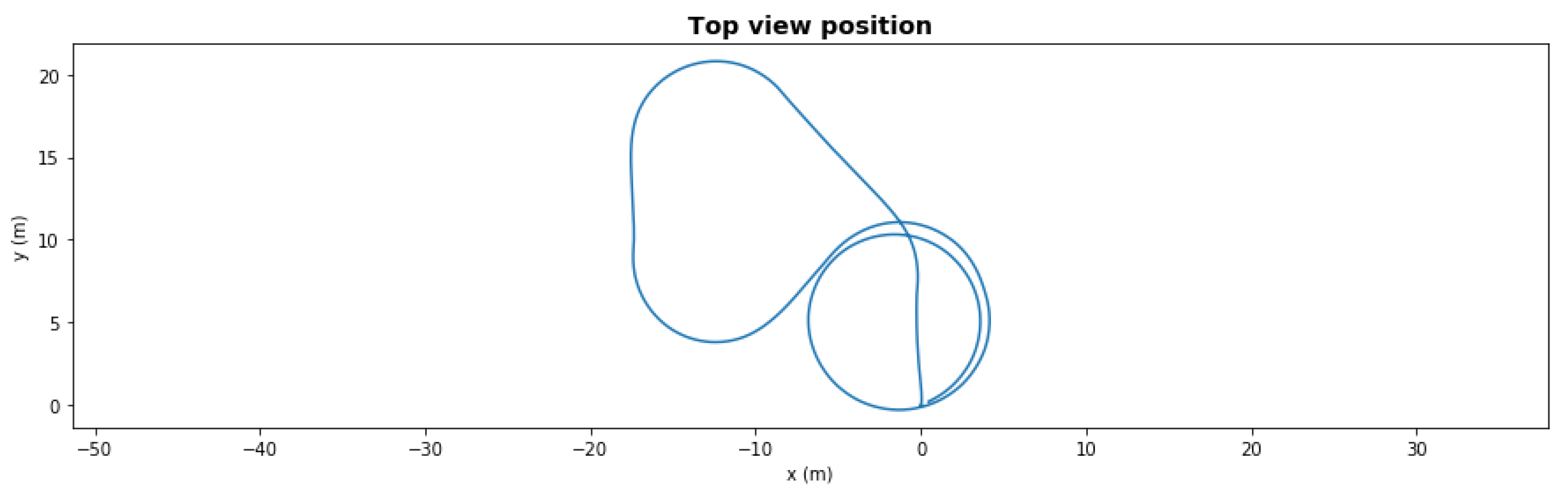

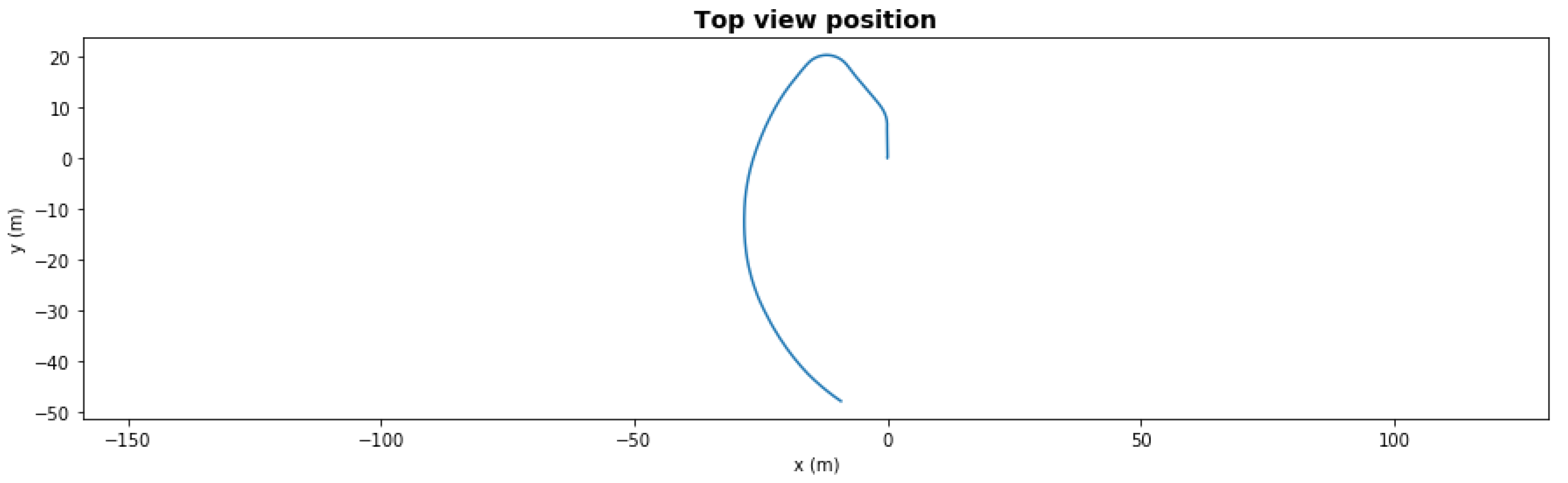

5. Results

6. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| # | Layer | Layer Parameters |
|---|---|---|
| 1 | Input | 13 units |
| 2 | Dense | 256 units, ReLU |
| 3 | Dense | 32 units, ReLU |
| 4 | Dense | 16 units, ReLU |
| 5 | Output | 22 units |
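
The layer table describes a small fully connected network. A dependency-free forward-pass sketch with the same layer widths, assuming a softmax output (suggested by the softmax-threshold baseline in the results) and He-initialized random weights in place of trained ones; the function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

# Layer widths from the table: 13 inputs -> 256 -> 32 -> 16 -> 22 outputs.
LAYER_SIZES = [13, 256, 32, 16, 22]
Layer = Tuple[List[List[float]], List[float]]


def init_layers(sizes: Sequence[int], seed: int = 0) -> List[Layer]:
    """Random weights (He initialization, suited to ReLU) and zero biases."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        scale = math.sqrt(2.0 / n_in)
        w = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((w, [0.0] * n_out))
    return layers


def dense(x: Sequence[float], w: List[List[float]], b: List[float]) -> List[float]:
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def softmax(z: Sequence[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


def forward(x: Sequence[float], layers: List[Layer]) -> List[float]:
    h = list(x)
    for i, (w, b) in enumerate(layers):
        h = dense(h, w, b)
        if i < len(layers) - 1:
            h = [max(0.0, v) for v in h]  # ReLU on the hidden layers only
    return softmax(h)  # assumed: class probabilities over the 22 outputs
```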

| Credibility | Confidence | Description |
|---|---|---|
| High | High | The preferred situation, which usually leads to accepting the FDI LEC classification: the p-value of the predicted class is high and much higher than the p-values of the other classes. |
| High | Low | The p-value of the predicted class is high, but other classes also have high p-values, so choosing a single credible class may not be possible. |
| Low | High | None of the p-values is high enough for a credible decision. |
| Low | Low | A label different from the predicted one could be more credible. |
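
The four cases in the table follow from how credibility and confidence are derived from the per-class p-values. A minimal sketch, assuming the standard inductive conformal prediction forms (p-value as the fraction of calibration scores at least as large as the test score; credibility as the p-value of the predicted class; confidence as one minus the largest p-value among the other classes), which may differ in detail from Equations (2) to (4):

```python
from typing import Dict, Sequence, Tuple


def p_value(alpha: float, calib_scores: Sequence[float]) -> float:
    """Fraction of calibration nonconformity scores at least as large as alpha."""
    return sum(1 for a in calib_scores if a >= alpha) / len(calib_scores)


def credibility_confidence(
    alphas: Dict[int, float], calib_scores: Sequence[float], predicted: int
) -> Tuple[float, float, Dict[int, float]]:
    """alphas maps each candidate class j to the test input's nonconformity score."""
    p = {j: p_value(a, calib_scores) for j, a in alphas.items()}
    credibility = p[predicted]  # p-value of the class predicted by the FDI LEC
    confidence = 1.0 - max(v for j, v in p.items() if j != predicted)
    return credibility, confidence, p
```

With p-values of 0.9, 0.2 and 0.1, predicting the first class gives credibility 0.9 and confidence 0.8 (the High/High row), while predicting the second gives credibility 0.2 and confidence 0.1 (the Low/Low row, where another label is more credible).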

| | LEC + AM + AE | LEC + AM, Credibility Threshold | Raw LEC, Softmax Threshold | Raw LEC, No AM |
|---|---|---|---|---|
| Applied Threshold | −0.1 | 0.6 | 0.99 | - |
| Recall | 98.37% | 91.64% | 21.45% | 84.05% |
| Accuracy | 93.85% | 92.37% | 33.24% | 84.05% |
| Rejected | 12.54% | 29.50% | 81.24% | 0.00% |

| GT Degradation Thruster ID | GT Degradation Efficiency (%) | GT LEC Class | Cross-Track Error (m) | Time to Complete (s) |
|---|---|---|---|---|
| 0 | 41 | 0 | 5.54 | −1.00 |
| 0 | 56.5 | 1 | 2.05 | 93.00 |
| 0 | 66.5 | 2 | 1.85 | 90.33 |
| 0 | 76.5 | 3 | 1.74 | 89.33 |
| 0 | 86.5 | 4 | 1.75 | 90.67 |
| 1 | 41 | 5 | 5.37 | 86.00 |
| 1 | 56.5 | 6 | 1.91 | 93.67 |
| 1 | 66.5 | 7 | 1.81 | 91.33 |
| 1 | 76.5 | 8 | 1.53 | 88.00 |
| 1 | 86.5 | 9 | 1.60 | 88.00 |
| 2 | 41 | 10 | 11.94 | −1.00 |
| 2 | 56.5 | 11 | 16.27 | −1.00 |
| 2 | 66.5 | 12 | 12.81 | −1.00 |
| 2 | 76.5 | 13 | 3.00 | 88.50 |
| 2 | 86.5 | 14 | 1.74 | 91.00 |
| 3 | 41 | 15 | 12.94 | −1.00 |
| 3 | 56.5 | 16 | 13.74 | −1.00 |
| 3 | 66.5 | 17 | 9.74 | −1.00 |
| 3 | 76.5 | 18 | 5.62 | −1.00 |
| 3 | 86.5 | 19 | 2.10 | 93.00 |

| GT Thruster ID | GT Efficiency (%) | FDI LEC Thruster ID | FDI LEC Class | Cross-Track Error (m) | Time to Complete (s) | Reallocation Time (s) |
|---|---|---|---|---|---|---|
| 0 | 41 | 0 | 0 | 1.37 | 87.00 | 1.41 |
| 0 | 56.5 | 0 | 1 | 1.63 | 87.00 | 2.42 |
| 0 | 66.5 | 0 | 2 | 1.53 | 85.00 | 1.38 |
| 0 | 76.5 | 0 | 3 | 1.68 | 88.33 | 2.12 |
| 0 | 86.5 | 0 | 4 | 1.78 | 92.00 | 4.44 |
| 1 | 41 | 1 | 5 | 1.36 | 86.00 | 1.41 |
| 1 | 56.5 | 1 | 6 | 1.60 | 87.33 | 1.36 |
| 1 | 66.5 | 1 | 7 | 1.64 | 88.50 | 1.40 |
| 1 | 76.5 | 1 | 8 | 1.81 | 91.00 | 2.37 |
| 1 | 86.5 | 1 | 9 | 1.66 | 88.00 | 2.04 |
| 2 | 41 | 2 | 10 | 5.73 | −1.00 | 1.36 |
| 2 | 56.5 | 2 | 11 | 1.64 | 88.00 | 1.71 |
| 2 | 66.5 | 2 | 12 | 1.44 | 86.00 | 3.39 |
| 2 | 76.5 | 2 | 13 | 1.61 | 88.00 | 2.02 |
| 2 | 86.5 | 2 | 14 | 1.79 | 89.67 | 8.37 |
| 3 | 41 | 3 | 15 | 4.62 | −1.00 | 2.05 |
| 3 | 56.5 | 3 | 16 | 1.90 | 91.00 | 2.40 |
| 3 | 66.5 | 3 | 17 | 1.75 | 90.33 | 1.71 |
| 3 | 76.5 | 3 | 18 | 1.63 | 88.67 | 1.36 |
| 3 | 86.5 | 3 | 19 | 1.60 | 86.00 | 3.37 |

| | Time to Complete (s) | Cross-Track Error (m) |
|---|---|---|
| Nominal | 88.21 | 1.63 |
| Degraded | 90.55 | 5.75 |
| Degraded with FDI | 88.66 | 1.69 |
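
The summary table supports a quick arithmetic check of how much of the degradation the fault-adaptive loop recovers. A minimal sketch that uses only the three rows of the table:

```python
# Values copied from the summary table: (time to complete in s, cross-track error in m).
summary = {
    "Nominal": (88.21, 1.63),
    "Degraded": (90.55, 5.75),
    "Degraded with FDI": (88.66, 1.69),
}


def pct_change(new: float, ref: float) -> float:
    """Relative change of `new` with respect to `ref`, in percent."""
    return 100.0 * (new - ref) / ref


t_nom, x_nom = summary["Nominal"]
t_deg, x_deg = summary["Degraded"]
t_fdi, x_fdi = summary["Degraded with FDI"]
print(f"Cross-track error, FDI vs degraded: {pct_change(x_fdi, x_deg):+.1f}%")
print(f"Cross-track error, FDI vs nominal:  {pct_change(x_fdi, x_nom):+.1f}%")
print(f"Time to complete, FDI vs nominal:   {pct_change(t_fdi, t_nom):+.1f}%")
```

Run as-is, it prints -70.6%, +3.7% and +0.5%: with FDI, the average cross-track error is about 71% lower than in the degraded runs and within about 4% of the nominal value, while the completion time stays within about 0.5% of nominal.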


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Stojcsics, D.; Boursinos, D.; Mahadevan, N.; Koutsoukos, X.; Karsai, G. Fault-Adaptive Autonomy in Systems with Learning-Enabled Components. Sensors 2021, 21, 6089. https://doi.org/10.3390/s21186089