BBNet: A Novel Convolutional Neural Network Structure in Edge-Cloud Collaborative Inference

Abstract

1. Introduction

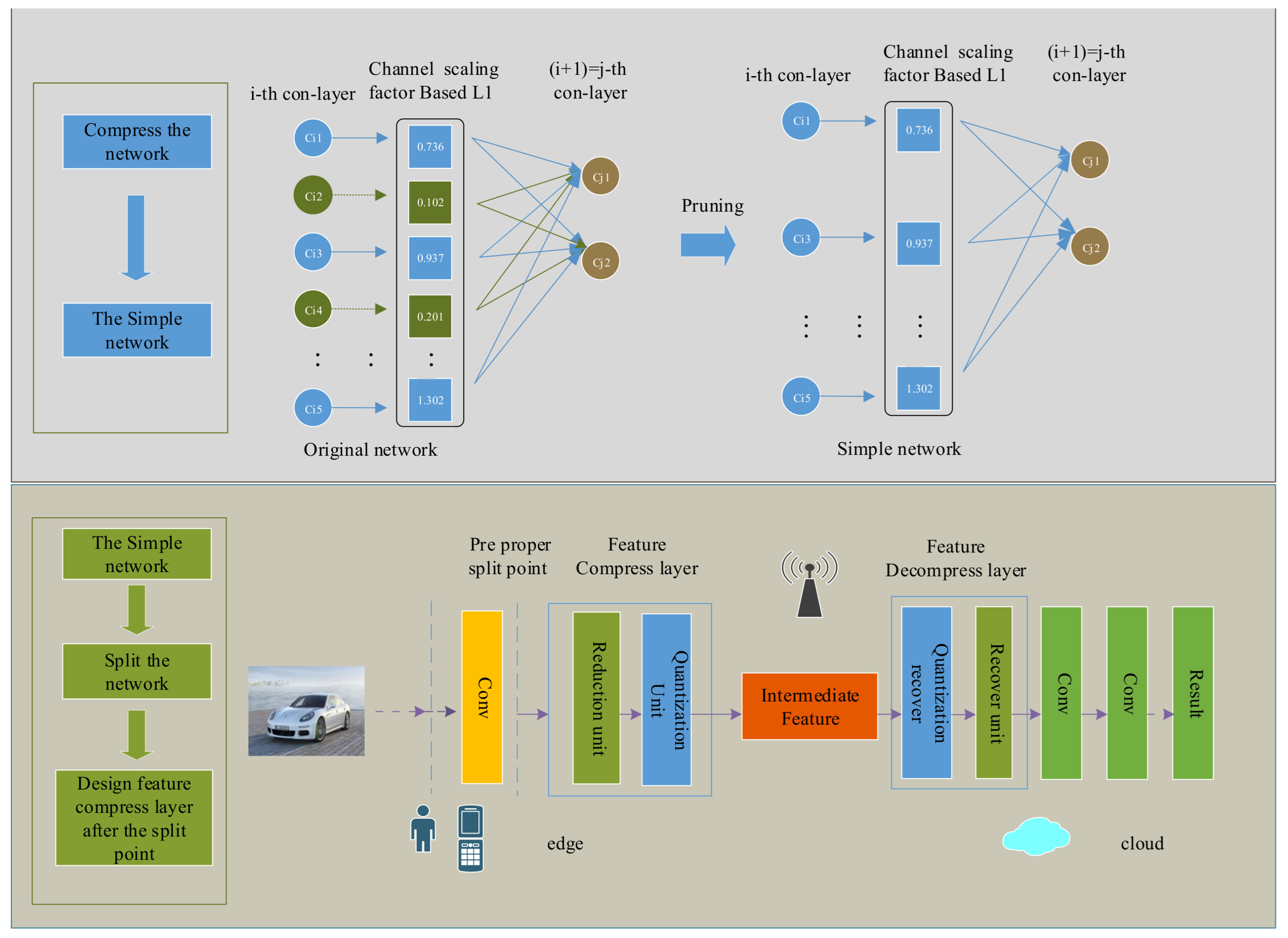

- We propose a novel convolutional neural network structure for edge-cloud collaborative inference that reduces end-to-end latency by accelerating inference from two directions.

- We improve model compression, reducing the model's computation and parameter count with only a small loss in accuracy.

- We design a feature compression layer that achieves the highest bit-compression rate within a defined accuracy-loss range.

- Finally, we implement a BBNet prototype on an NVIDIA Jetson Nano and a server. Evaluation results under different static network bandwidths demonstrate the effectiveness of the proposed BBNet framework.

2. Related Work

2.1. Model Compression

2.2. DNN Model Partition

2.3. Feature Compression

3. Proposed Method

3.1. Channel-Pruning

3.1.1. Scaling Factor and Sparsity

3.1.2. The Scaling Factor in BN Layer

3.1.3. Channel-Pruning and Global Fine-Tuning
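The subsection titles above describe channel pruning driven by the scaling factors of batch-normalization (BN) layers, followed by global fine-tuning. The selection step of that general idea can be sketched as below. This is an illustration under stated assumptions, not the authors' implementation: the function name `select_channels` is hypothetical, a single global threshold over all BN scaling factors is assumed, channels whose magnitude ties with the threshold are pruned as well, and each layer is assumed to keep at least one channel. The 40% ratio in the usage lines mirrors the pruning ratio reported in the evaluation; the scaling-factor values are made up.

```python
def select_channels(gammas_per_layer, prune_ratio):
    """Return one keep-mask (list of bools) per layer.

    gammas_per_layer: list of lists holding the BN scaling factors of each layer.
    prune_ratio: fraction of all channels to drop, judged by a global threshold.
    """
    # Pool the magnitudes of every scaling factor in the network.
    magnitudes = sorted(abs(g) for layer in gammas_per_layer for g in layer)
    num_prune = int(len(magnitudes) * prune_ratio)
    if num_prune == 0:
        return [[True] * len(layer) for layer in gammas_per_layer]

    # The threshold is the largest magnitude among the channels to drop.
    threshold = magnitudes[num_prune - 1]

    masks = []
    for layer in gammas_per_layer:
        mask = [abs(g) > threshold for g in layer]
        if not any(mask):
            # Assumed guard: never remove every channel of a layer.
            strongest = max(range(len(layer)), key=lambda i: abs(layer[i]))
            mask[strongest] = True
        masks.append(mask)
    return masks


# Made-up scaling factors for two small layers, pruned at a 40% ratio.
gammas = [[0.9, 0.01, 0.5, 0.02, 0.7], [0.03, 0.8, 0.6, 0.04, 0.3]]
masks = select_channels(gammas, prune_ratio=0.4)
kept_per_layer = [sum(mask) for mask in masks]
```

After selection, the kept channels would be copied into a narrower network and the whole model fine-tuned to recover accuracy.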

3.2. Feature Compression Layer

3.2.1. Reduction Unit and Recover Unit

3.2.2. Quantization Unit

3.2.3. Accuracy-Aware Training

3.3. Formulation of Partition Point

Algorithm 1. The partition algorithm.

Input:

- N: the number of layers in the DNN
- L: the number of partitioning points in the DNN
- the current load level of the mobile device
- the current load level of the cloud
- the latency in mobile with j partitioning point and load level
- the latency in mobile with j partitioning point and load level
- the maximum spatial scaling factor
- the maximum channel number
- the compact model after channel pruning
- the bandwidth of the wireless network
- the accuracy of the trained model

Output: the best partition mode

Variables: the compressed data size at each of the M splitting points

//train phase
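The inputs listed for Algorithm 1 (per-point latencies on the mobile and cloud sides, compressed data size, bandwidth, and accuracy) suggest a selection step of the following shape. The sketch below is a simplified reading, not the published algorithm: the names `Candidate`, `total_latency`, and `best_partition` are hypothetical, total latency is assumed to be edge time plus transmission time plus cloud time, load levels are ignored, and every number in the example is invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """One candidate partition point with its profiled costs (all hypothetical)."""
    name: str
    edge_latency_s: float
    cloud_latency_s: float
    payload_bytes: int
    accuracy: float


def total_latency(c, bandwidth_bps):
    # Assumed cost model: edge compute + upload of the compressed feature + cloud compute.
    transmit_s = c.payload_bytes * 8 / bandwidth_bps
    return c.edge_latency_s + transmit_s + c.cloud_latency_s


def best_partition(candidates, bandwidth_bps, min_accuracy):
    """Pick the lowest-latency candidate that meets the accuracy floor, or None."""
    feasible = [c for c in candidates if c.accuracy >= min_accuracy]
    if not feasible:
        return None
    return min(feasible, key=lambda c: total_latency(c, bandwidth_bps))


# Invented profile: later splits send less data but do more work on the edge.
candidates = [
    Candidate("early", 0.10, 0.20, 100_000, 0.94),
    Candidate("middle", 0.30, 0.30, 10_000, 0.93),
    Candidate("late", 0.60, 0.05, 1_000, 0.90),
]
slow_link_choice = best_partition(candidates, bandwidth_bps=100_000, min_accuracy=0.92)
fast_link_choice = best_partition(candidates, bandwidth_bps=10_000_000, min_accuracy=0.92)
```

With these invented numbers the slow link favors the split that uploads less data, while the fast link favors the split that leaves more work to the cloud.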

4. Evaluation

4.1. Experiment Setup

4.1.1. Edge and Cloud Settings

4.1.2. Model Selection and Pre-Partition Point Selection

4.1.3. Dataset

4.2. Reduce Model Size

4.3. Bit-Compression Rate of Different Split Points

4.4. End-to-End Latency Improvement

5. Summary and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DNN | Deep Neural Network |
| CNN | Convolutional Neural Network |
| ILP | Integer Linear Program |
| PNG | Portable Network Graphics |
| RB | Residual Block |


| Component | Specification |
|---|---|
| System | Jetson Nano Developer Kit |
| GPU | NVIDIA Maxwell w/128 NVIDIA CUDA cores |
| CPU | Quad-core ARM Cortex-A57 64-bit |
| Memory | 4 GB LPDDR4 |

| Component | Specification |
|---|---|
| System | Windows 10.0 |
| GPU | NVIDIA GeForce GTX 1060 w/1280 NVIDIA CUDA cores |
| CPU | Intel(R) Core(TM) i7-8750H CPU @2.20 GHz |
| Memory | 16 GB DDR4 |

| | Baseline | Reduce Channel | Pruned (40%) | Fine-Tuned | Rate |
|---|---|---|---|---|---|
| Top-1 accuracy (%) | 94.27 | 93.59 | 13.2 | 93.74 | – |
| Parameters | 11.17 | 2.79 | 0.97 | 0.97 | 11.5× |
| FLOPs | 27.28 | 6.86 | 4.81 | 4.81 | 5.6× |
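The Rate column in the table above can be checked directly from the Baseline and Fine-Tuned columns. The short calculation below is only a worked check of the tabulated values; the variable names are illustrative, and the units are whatever the table uses.

```python
# Values copied from the model-size table (Baseline vs. Fine-Tuned columns).
baseline = {"parameters": 11.17, "flops": 27.28}
fine_tuned = {"parameters": 0.97, "flops": 4.81}

# Reduction rate = baseline cost divided by cost after pruning and fine-tuning.
rates = {key: baseline[key] / fine_tuned[key] for key in baseline}

# Top-1 accuracy change between the baseline and the fine-tuned model.
accuracy_drop = 94.27 - 93.74

for key, rate in rates.items():
    print(f"{key}: {rate:.2f}x smaller")
print(f"accuracy drop: {accuracy_drop:.2f} percentage points")
```

The parameter ratio comes out near 11.5, matching the table. The FLOPs ratio is about 5.67, which the table lists as 5.6×. The accuracy cost of the fine-tuned model is roughly half a percentage point.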

| Method | Bandwidth (kbps) | Time (s) | Acc (%) | Offloaded Data (B) |
|---|---|---|---|---|
| nano-only | – | 4.7436 | 94.27 | – |
| cloud-only | 100 | 11.378 | 94.27 | 307,200 |
| cloud-only | 300 | 4.233 | 94.27 | 307,200 |
| cloud-only | 500 | 2.802 | 94.27 | 307,200 |
| cloud-only | 700 | 2.188 | 94.27 | 307,200 |
| cloud-only | 900 | 1.850 | 94.27 | 307,200 |
| cloud-only | 1100 | 1.635 | 94.27 | 307,200 |
| cloud-only | 1300 | 1.486 | 94.27 | 307,200 |
| cloud-only | 1500 | 1.376 | 94.27 | 307,200 |
| cloud-only | 2500 | 1.093 | 94.27 | 307,200 |
| cloud-only | 3500 | 0.970 | 94.27 | 307,200 |
| BBNet | 100 | 1.188 | 93.58 | 1600 |
| BBNet | 300 | 1.164 | 93.58 | 1600 |
| BBNet | 500 | 1.122 | 91.88 | 12,800 |
| BBNet | 700 | 1.097 | 91.88 | 12,800 |
| BBNet | 900 | 1.083 | 91.88 | 12,800 |
| BBNet | 1100 | 1.074 | 91.88 | 12,800 |
| BBNet | 1300 | 1.069 | 91.88 | 12,800 |
| BBNet | 1500 | 1.064 | 91.88 | 12,800 |
| BBNet | 2500 | 0.993 | 92.10 | 140,800 |
| BBNet | 3500 | 0.934 | 92.10 | 140,800 |
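To make the latency table easier to read, the speedups it implies can be computed from its own rows. The snippet below uses only the lowest and highest bandwidth settings; the dictionary layout and the helper name `summarize` are illustrative choices, not part of the original evaluation.

```python
NANO_ONLY_S = 4.7436  # nano-only latency from the table, in seconds

# Rows copied from the table for the 100 kbps and 3500 kbps settings.
rows = {
    100: {"cloud_s": 11.378, "bbnet_s": 1.188, "cloud_bytes": 307_200, "bbnet_bytes": 1_600},
    3500: {"cloud_s": 0.970, "bbnet_s": 0.934, "cloud_bytes": 307_200, "bbnet_bytes": 140_800},
}


def summarize(row):
    """Speedup of BBNet over both baselines, plus the reduction in uploaded bytes."""
    return {
        "vs_cloud": row["cloud_s"] / row["bbnet_s"],
        "vs_nano": NANO_ONLY_S / row["bbnet_s"],
        "data_reduction": row["cloud_bytes"] / row["bbnet_bytes"],
    }


summary = {bandwidth: summarize(row) for bandwidth, row in rows.items()}

for bandwidth, s in summary.items():
    print(
        f"{bandwidth} kbps: {s['vs_cloud']:.2f}x vs cloud-only, "
        f"{s['vs_nano']:.2f}x vs nano-only, {s['data_reduction']:.1f}x less data"
    )
```

At 100 kbps BBNet is roughly 9.6 times faster than cloud-only inference while uploading 192 times less data. At 3500 kbps the gap over cloud-only narrows to about 4%, and the advantage over nano-only remains about fivefold.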

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, H.; Zhang, W.; Wang, C.; Ma, X.; Yu, H. BBNet: A Novel Convolutional Neural Network Structure in Edge-Cloud Collaborative Inference. Sensors 2021, 21, 4494. https://doi.org/10.3390/s21134494