Abstract

Population-based optimization algorithms are among the most widely used methods for solving optimization problems. In this paper, a new population-based optimization algorithm called the Teamwork Optimization Algorithm (TOA) is presented to solve various optimization problems. The main idea in designing the TOA is to simulate the teamwork behaviors of the members of a team in order to achieve their desired goal. The TOA is mathematically modeled for use in solving optimization problems. The capability of the TOA in solving optimization problems is evaluated on a set of twenty-three standard objective functions. Additionally, the performance of the proposed TOA is compared with eight well-known optimization algorithms in providing a suitable quasi-optimal solution. The optimization results indicate the ability of the TOA to solve various optimization problems. Analysis and comparison of the simulation results show that the proposed TOA is superior to, and far more competitive than, the eight compared algorithms.

1. Introduction

Optimization is the setting of inputs or specifications of a device, a mathematical function, or an experiment in which the output (result) is maximized or minimized [1,2]. In optimization problems, the input contains a number of parameters (decision variables), the process or function is called the cost function or the fitness function, and the output is called the cost or fitness [3]. An optimization problem with only one variable is a one-dimensional optimization problem; otherwise, it is a multidimensional optimization problem. As the dimensionality of the optimization problem increases, optimization becomes more difficult [4].

In general, optimization problem-solving methods are classified into two classes of deterministic and stochastic methods [5]. Deterministic methods include two groups of gradient-based methods and non-gradient based methods. Methods that use derivative information or gradients of objective functions and constraint functions are called gradient-based methods. These methods are divided into two categories of first-order and second-order gradient methods. The first-order gradient methods use only the objective function gradient and constraint functions, while the second-order gradient methods use Hessian information of the objective function or constraint functions in addition to the gradient [6]. One inherent drawback of mathematical programming methods is a high probability of stagnation in local optima during the search of non-linear space. Methods that do not use gradients or Hessian information in any way (either analytically or numerically) are called non-gradient-based or zero-order methods. One of the drawbacks of non-gradient-based deterministic methods is that their implementation is not easy and requires high mathematical preparation to understand and use them.

Population-based optimization algorithms (PBOAs) are stochastic methods that are able to provide appropriate solutions to optimization problems without the need for derivative information and gradients and are based on random scanning of the problem search space [7]. PBOAs are methods that first offer a number of random solutions to an optimization problem. Then, in an iterative process based on the steps of the algorithm, these proposed solutions are improved. After completing the iterations of the algorithm and at the end of implementing the algorithm on the optimization problem, the algorithm presents the best proposed solution for the optimization problem.

Each optimization problem has a basic solution called a global optimal solution. In some complex optimization problems, achieving the global optimal solution requires a lot of time and computation. The advantage of PBOAs is that they provide suitable solutions with less time and computation. The important issue about solutions provided by PBOAs is that these solutions are not necessarily global optimal solutions. For this reason, the solution provided by a PBOA is called a quasi-optimal solution. The quasi-optimal solution is at best the same as the global optimal; otherwise, it should be as close to the global optimal as possible. Therefore, in comparing the performance of several PBOAs in solving an optimization problem, the algorithm that presents the quasi-optimal solution closer to the global optimal is the better algorithm. Additionally, an optimization algorithm may perform well on one optimization problem but fail to provide a suitable quasi-optimal solution on another. For these reasons, various PBOAs have been proposed to solve optimization problems and provide more appropriate quasi-optimal solutions.

PBOAs are designed by modeling various processes and phenomena such as behaviors of animals, plants, and other living organisms in nature, laws of physics, rules of games, genetics and reproductive processes, and any other phenomenon or process that has the character of an evolutionary process or progress. For example, simulation of natural ant behaviors has been used in the design of Ant Colony Optimization (ACO) [8]. Simulation of Hooke’s law has been used to design the Spring Search Algorithm (SSA) [9]. The rules of the game of throwing a ring and simulating the behavior of the players have been used in the design of Ring Toss Game-Based Optimization (RTGBO) [1]. Immune system simulation is used in the design of the Artificial Immune System algorithm as an evolutionary algorithm [10]. Additionally, by drawing ideas from different phenomena, it is possible to design different systems and algorithms for problem solving. For example, in the Blackboard system, which is an artificial intelligence approach based on the Blackboard architecture model, a common knowledge base, the “blackboard”, is repeatedly updated by a diverse group of specialized knowledge resources [11].

The Genetic Algorithm (GA) is one of the most widely used and oldest evolutionary-based optimization methods which has been developed based on simulation of reproductive processes and Darwin’s theory of evolution. In the GA, changes in chromosomes occur during the reproductive process. Parents’ chromosomes are first identified by a selection operator, then randomly exchanged through a special process called crossover. Thus, children inherit some of the traits or characteristics of the father and some of the traits or characteristics of the mother. A rare process called mutation also causes changes in the characteristics of living things. Finally, these new children are considered as parents in the next generation and the process of the algorithm is repeated until the end of the implementation of the algorithm [12]. The concepts of the GA are simple and understandable, but having control parameters and high computation are the main disadvantages of the GA.

Particle Swarm Optimization (PSO) is another old and widely used method of optimization that is inspired by the natural behaviors of birds and the relationships between them. In PSO, the position of each member of the population is updated at each iteration under the influence of three components. The first component is the velocity of the population member in the previous iteration, the second component is the personal experience of the population member gained up to that iteration, and the third component is the experience that the entire population has gained up to that iteration [13]. One of the advantages of PSO is the simplicity of its update relationship and its implementation. However, poor convergence and entrapment in local optimum areas are the main disadvantages of PSO.
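The three components described above correspond to the commonly used PSO velocity update. The following is a minimal sketch for a single particle, assuming illustrative values for the inertia weight `w` and the acceleration coefficients `c1` and `c2` (these names and values are assumptions, not settings taken from this paper):

```python
import random

def pso_step(x, v, pbest, gbest, w=0.7, c1=1.5, c2=1.5, rng=random):
    """One PSO update for a single particle; all vectors are lists of floats."""
    new_x, new_v = [], []
    for x_d, v_d, p_d, g_d in zip(x, v, pbest, gbest):
        r1, r2 = rng.random(), rng.random()
        v_new = (w * v_d                    # component 1: previous velocity
                 + c1 * r1 * (p_d - x_d)    # component 2: personal experience
                 + c2 * r2 * (g_d - x_d))   # component 3: population experience
        new_v.append(v_new)
        new_x.append(x_d + v_new)
    return new_x, new_v
```

When a particle already sits on both its personal best and the population best, only the previous velocity moves it, which is one way to see why the first component matters for continued search.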

The Gravitational Search Algorithm (GSA) is a physics-based optimization algorithm based on modeling the gravitational force between objects. In the GSA, members of the population are objects that are in the problem-solving space at different distances from each other. Objects that have a more optimal position in the search space attract other objects based on the gravitational force [14]. High computation, a time-consuming process, having several control parameters, and poor convergence in complex objective functions are the most important disadvantages of the GSA.

Teaching–Learning-Based Optimization (TLBO) is an intelligent optimization algorithm inspired by the learning and teaching process between a teacher and students in the classroom. In the TLBO algorithm, a mathematical model for teaching and learning is considered, which is finally implemented in two stages and can lead to optimization:

- (i) Teaching stage: In this stage, the best member of the population is selected as a teacher and directs the average of the population towards itself. This is similar to what a teacher really does in the real world.

- (ii) Learning stage: In this stage, people in the population (who are considered classmates) develop their knowledge by cooperating with each other. This is similar to what really happens in relationships between friends and classmates [15].

The main disadvantage of TLBO is the convergence rate, and it gets even worse when dealing with high-dimensional problems.
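The two stages of TLBO can be sketched as follows. This is a minimal illustration based on the commonly cited TLBO update rules (a teaching factor of 1 or 2 and greedy acceptance of improved candidates); the function names and the `sphere` test objective are assumptions introduced here for illustration:

```python
import random

def sphere(x):
    """Illustrative objective: sum of squares."""
    return sum(v * v for v in x)

def tlbo_iteration(pop, f, rng=random):
    """One TLBO iteration (teaching stage, then learning stage), minimizing f."""
    n, dim = len(pop), len(pop[0])
    # Teaching stage: the best member pulls the population mean towards itself.
    teacher = min(pop, key=f)
    mean = [sum(p[d] for p in pop) / n for d in range(dim)]
    for i in range(n):
        tf = rng.randint(1, 2)  # teaching factor, 1 or 2
        cand = [pop[i][d] + rng.random() * (teacher[d] - tf * mean[d])
                for d in range(dim)]
        if f(cand) < f(pop[i]):  # keep the candidate only if it improves
            pop[i] = cand
    # Learning stage: each member interacts with a random classmate.
    for i in range(n):
        j = rng.choice([k for k in range(n) if k != i])
        sign = 1.0 if f(pop[i]) < f(pop[j]) else -1.0
        cand = [pop[i][d] + sign * rng.random() * (pop[i][d] - pop[j][d])
                for d in range(dim)]
        if f(cand) < f(pop[i]):
            pop[i] = cand
    return pop
```

Because candidates are accepted only when they improve, no member's objective value gets worse within an iteration.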

The Grey Wolf Optimizer (GWO) is a swarm-based algorithm which models the hierarchical structure and social behavior of gray wolves while hunting. In the GWO, four types of gray wolves, alpha, beta, delta, and omega, are used to simulate the leadership hierarchy. In addition, the process of hunting is mathematically modeled in three main stages of searching prey, encircling prey, and attacking prey. In the GWO, alpha is the best solution, and beta and delta are the second and third best solutions. Other members of the population are considered as omega wolves. The hunting process is led by the alpha, beta, and delta wolves, while omega wolves follow these three types of wolves [16]. The main disadvantages of the GWO include low convergence speed, poor local search, and low accuracy in solving complex problems.
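The way omega wolves follow the three leading wolves can be sketched with the commonly cited GWO position update. This is a minimal illustration, assuming the usual coefficient definitions (`A = 2·a·r1 − a`, `C = 2·r2`) and a control parameter `a` that is typically decreased from 2 to 0 over the iterations; the function name is hypothetical:

```python
import random

def gwo_update(x, alpha, beta, delta, a, rng=random):
    """Move one wolf x using the positions of the alpha, beta, and delta wolves."""
    new_x = []
    for d in range(len(x)):
        estimates = []
        for leader in (alpha, beta, delta):
            A = 2 * a * rng.random() - a        # controls exploration vs. attack
            C = 2 * rng.random()                # random weighting of the leader
            D = abs(C * leader[d] - x[d])       # distance to the (weighted) leader
            estimates.append(leader[d] - A * D)
        new_x.append(sum(estimates) / 3)        # average of the three estimates
    return new_x
```

With `a = 0` the coefficient `A` vanishes, so the wolf moves to the average of the three leaders, which corresponds to the attacking stage.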

The Whale Optimization Algorithm (WOA) is a nature-inspired meta-heuristic optimization algorithm which mimics the social behavior of humpback whales. In the WOA, the bubble-net hunting strategy is simulated in three stages of encircling prey, spiral bubble-net feeding maneuver, and search for prey [17]. Low accuracy, slow convergence, and easily falling into local optimum are the main disadvantages of the WOA. Moreover, the WOA cannot perform well enough in solving high-dimensional optimization problems.

The Marine Predators Algorithm (MPA) is a swarm-based algorithm inspired by the movement strategies that marine predators use when trapping their prey in the ocean. The optimization process in the MPA is divided into three main stages according to the relative speeds of the hunter and the prey (see [18]):

- Phase 1: When the prey moves faster than the hunter.

- Phase 2: When the prey and the hunter move at almost the same speed.

- Phase 3: When the hunter is moving faster than the prey.

High computation, a time-consuming process, and having two control parameters whose adjustment is an important challenge in the quality of performance of the MPA are the most important disadvantages of the MPA.

The Tunicate Swarm Algorithm (TSA) is a swarm-based approach based on the simulation of the swarm behaviors and jet propulsion of tunicates during the navigation and foraging process. Although a tunicate has no prior idea about food sources, it is able to find them. In the TSA, jet propulsion behavior is modeled based on avoiding collisions between population members, moving toward the best member, and staying close to it. Swarm behavior has also been used to update members of the population [19].

Poor convergence and falling to local optimal solutions in solving high-dimensional multimodal optimization problems are the main disadvantages of the TSA. In addition, the TSA has several control parameters, and assigning appropriate values for these parameters is a challenging process.

The innovation and contribution of this paper is in presenting a new population-based optimization algorithm called the Teamwork Optimization Algorithm (TOA) to solve various optimization problems. The main idea in designing the proposed TOA is to simulate the activities, behaviors, and interactions of team members in performing teamwork in order to achieve the goal of the team. The theory and various stages of the TOA are explained and then, to implement it in optimization problems, its mathematical modeling is also presented. The capability of the TOA in optimizing and presenting suitable quasi-optimal solutions on a standard set of different objective functions is evaluated. In order to analyze and evaluate the quality of the obtained optimization results, the performance of the TOA is compared with eight well-known algorithms.

The rest of this paper is organized as follows. The proposed TOA is presented in Section 2. Simulation studies and performance analysis of the TOA are presented in Section 3. A discussion of the performance of the TOA is presented in Section 4. Finally, conclusions and several suggestions for future studies are presented in Section 5.

2. Teamwork Optimization Algorithm

In this section, the theory of the proposed algorithm is stated and its mathematical model is presented in order to implement it in solving optimization problems.

The Teamwork Optimization Algorithm (TOA) is a PBOA which is designed based on simulation of relationships and behaviors of team members in performing their duties and achieving the desired goal of the team. Therefore, in the TOA, the search agents are team members, the relationships between team members are a tool for transmitting information, and the goal of the team is actually the solution to the optimization problem.

In a team that uses teamwork to achieve a common goal, the relationships and behaviors of team members can be considered as follows:

Supervisor: A member of the team who is responsible for leading and guiding the team. The supervisor is the member of the team who has the best performance compared to the other team members.

Team members: Other members who perform more weakly than the supervisor.

Influence of supervisor on team members: Each team member strives to improve their performance in accordance with the supervisor’s guidelines and instructions.

Influence of better members on weak team members: Each team member tries to improve her/his performance by using the experiences of other team members who perform better than themselves.

Individual activities: Each team member, based on personal efforts and activities, tries to contribute more to the achievement of the whole team.

Modeling of the mentioned concepts is applied in the design of the proposed TOA.

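In the proposed TOA, each member of the population represents a proposed solution to the optimization problem. In fact, each member of the population proposes values for the problem variables. This means that each member of the population can be mathematically modeled as a vector whose number of components is equal to the number of problem variables. Therefore, the population of the algorithm can be represented by a matrix whose number of rows is equal to the number of members of the population and whose number of columns is equal to the number of problem variables, as in Equation (1).

$$
X = \begin{bmatrix} X_1 \\ \vdots \\ X_i \\ \vdots \\ X_N \end{bmatrix}_{N \times m}
= \begin{bmatrix}
x_{1,1} & \cdots & x_{1,d} & \cdots & x_{1,m} \\
\vdots & & \vdots & & \vdots \\
x_{i,1} & \cdots & x_{i,d} & \cdots & x_{i,m} \\
\vdots & & \vdots & & \vdots \\
x_{N,1} & \cdots & x_{N,d} & \cdots & x_{N,m}
\end{bmatrix}_{N \times m}
\tag{1}
$$

where $X$ is the population matrix of the TOA, $X_i$ is the $i$th team member, $x_{i,d}$ is the value for the $d$th problem variable suggested by the $i$th team member, $N$ is the number of team members, and $m$ is the number of problem variables.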

As mentioned, each member of the algorithm population proposes values for the problem variables, and by placing these proposed values in the variables of the objective function, a value for the objective function is obtained. Therefore, each member of the population yields one value of the objective function. The vector of the objective function values is given by Equation (2):

$$
F = \begin{bmatrix} F_1 & \cdots & F_i & \cdots & F_N \end{bmatrix}^{T}
\tag{2}
$$

where $F$ is the vector of the objective function and $F_i$ is the objective function value of the $i$th team member.
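The population matrix and the objective function vector can be illustrated with a small sketch. The three team members, the two problem variables, and the `sphere` objective below are hypothetical values chosen only for illustration:

```python
def sphere(x):
    """Illustrative objective: sum of squares (minimum 0 at the origin)."""
    return sum(v * v for v in x)

def evaluate(population, objective):
    """Return the vector F, where F[i] is the objective value of team member i."""
    return [objective(member) for member in population]

# Population matrix: 3 team members (rows), 2 problem variables (columns).
X = [
    [1.0, 2.0],
    [0.5, -0.5],
    [-3.0, 0.0],
]
F = evaluate(X, sphere)
print(F)  # [5.0, 0.5, 9.0]
```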

In each iteration of the algorithm, based on the comparison of the values of the objective function, the member who has provided the best performance among the team members is selected as the supervisor. The task of the supervisor in teamwork is to lead the team and guide the team members in order to achieve the goal of that team. The selection of the supervisor in the proposed TOA is modeled using Equation (3).

$$
S = X_s, \qquad s = \arg\min_{i \in \{1, \dots, N\}} F_i
\tag{3}
$$

where $S$ is the supervisor of the team and $s$ is the row number of the team member with the minimum value in the vector $F$.
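Supervisor selection amounts to finding the row with the smallest objective function value. A minimal sketch, assuming a minimization problem and using hypothetical data (note that Python row numbers start at 0):

```python
def select_supervisor(population, fitness):
    """Return (s, S): the row number of the minimum of F and the member in that row."""
    s = min(range(len(fitness)), key=fitness.__getitem__)
    return s, population[s]

X = [[1.0, 2.0], [0.5, -0.5], [-3.0, 0.0]]
F = [5.0, 0.5, 9.0]
s, S = select_supervisor(X, F)
print(s, S)  # 1 [0.5, -0.5]
```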

The algorithm population is updated in the TOA in three stages.

Stage 1: Supervisor guidance

In the first stage, team members are updated based on the supervisor’s instructions. At this stage, the supervisor shares her/his information and reports to other team members and guides them towards achieving the goal. This step of the update is simulated in the TOA using Equations (4)–(6).

These equations involve the new status for the ith team member based on supervisor guidance, the objective function value of that new status, the new value for each problem variable suggested by the ith team member after supervisor guidance, the update index, and a random number drawn from a fixed interval.
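The first stage can be sketched as follows. The exact form of Equations (4)–(6) is not assumed to be known here: the move towards the supervisor, the update index taking the values 1 or 2, the random number drawn from [0, 1), and the greedy acceptance rule are all assumptions made for illustration, and the function names are hypothetical:

```python
import random

def sphere(x):
    """Illustrative objective: sum of squares."""
    return sum(v * v for v in x)

def stage1_supervisor_guidance(member, fitness, supervisor, objective, rng=random):
    """Assumed stage-1 update: move a member towards the supervisor, keep it if better."""
    candidate = []
    for x_d, s_d in zip(member, supervisor):
        r = rng.random()                    # random number in [0, 1) (assumed interval)
        index = round(1 + rng.random())     # update index, 1 or 2 (assumed form)
        candidate.append(x_d + r * (s_d - index * x_d))
    candidate_fitness = objective(candidate)
    if candidate_fitness < fitness:         # greedy acceptance (assumed)
        return candidate, candidate_fitness
    return member, fitness
```

Under this assumed acceptance rule, a member's objective value can only stay the same or decrease in this stage.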

Stage 2: Information sharing

In the second stage, each team member tries to use the information of other team members who have performed better than themselves in order to improve the performance. This stage of updating team members in the proposed TOA is modeled using Equations (7)–(9).

These equations involve the average of the team members that perform better than the ith team member and the objective function value of that average, the number of team members with better performance than the ith team member, the value of each problem variable suggested by each of those better team members, the new status for the ith team member based on the second stage, and the objective function value of that new status.
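The second stage can be sketched in the same spirit. The averaging over the better team members follows the description above; the specific move towards that average and the greedy acceptance rule are assumptions for illustration and may differ from Equations (7)–(9). The function names are hypothetical:

```python
import random

def sphere(x):
    """Illustrative objective: sum of squares."""
    return sum(v * v for v in x)

def stage2_information_sharing(i, population, fitness, objective, rng=random):
    """Assumed stage-2 update: move member i towards the mean of the better members."""
    better = [population[j] for j in range(len(population)) if fitness[j] < fitness[i]]
    if not better:                          # the best member has no better peers
        return population[i], fitness[i]
    dim = len(population[i])
    # Average of the better team members, variable by variable.
    mean_better = [sum(b[d] for b in better) / len(better) for d in range(dim)]
    candidate = []
    for d in range(dim):
        r = rng.random()                    # random number in [0, 1) (assumed)
        index = round(1 + rng.random())     # update index, 1 or 2 (assumed)
        candidate.append(population[i][d] + r * (mean_better[d] - index * population[i][d]))
    candidate_fitness = objective(candidate)
    if candidate_fitness < fitness[i]:      # greedy acceptance (assumed)
        return candidate, candidate_fitness
    return population[i], fitness[i]
```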

Stage 3: Individual activity

At this stage, each team member tries to improve her/his performance based on her/his current situation. This stage of updating team members is modeled using Equations (10) and (11).

These equations involve the new status for the ith team member based on the third stage and the objective function value of that new status.
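The third stage can be sketched as a small local search around the member's current position. The perturbation size (up to 1% of each variable) and the greedy acceptance rule below are assumptions for illustration and are not taken from Equations (10) and (11); the function names are hypothetical:

```python
import random

def sphere(x):
    """Illustrative objective: sum of squares."""
    return sum(v * v for v in x)

def stage3_individual_activity(member, fitness, objective, rng=random):
    """Assumed stage-3 update: perturb each variable by at most 1% and keep improvements."""
    candidate = [x_d + (-0.01 + 0.02 * rng.random()) * x_d for x_d in member]
    candidate_fitness = objective(candidate)
    if candidate_fitness < fitness:         # greedy acceptance (assumed)
        return candidate, candidate_fitness
    return member, fitness
```

Because the step is proportional to the current value, this assumed form refines a solution locally rather than exploring new regions.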

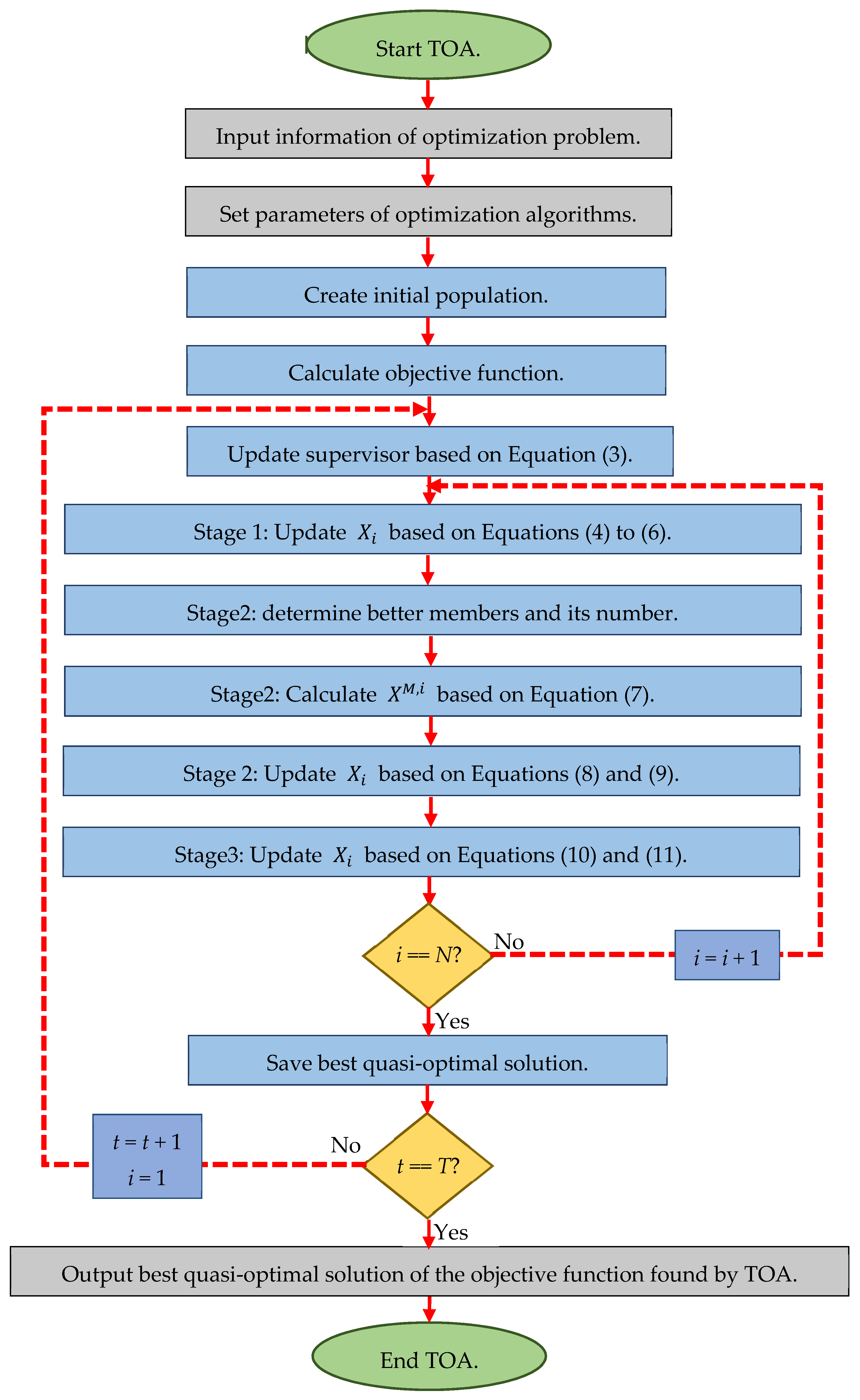

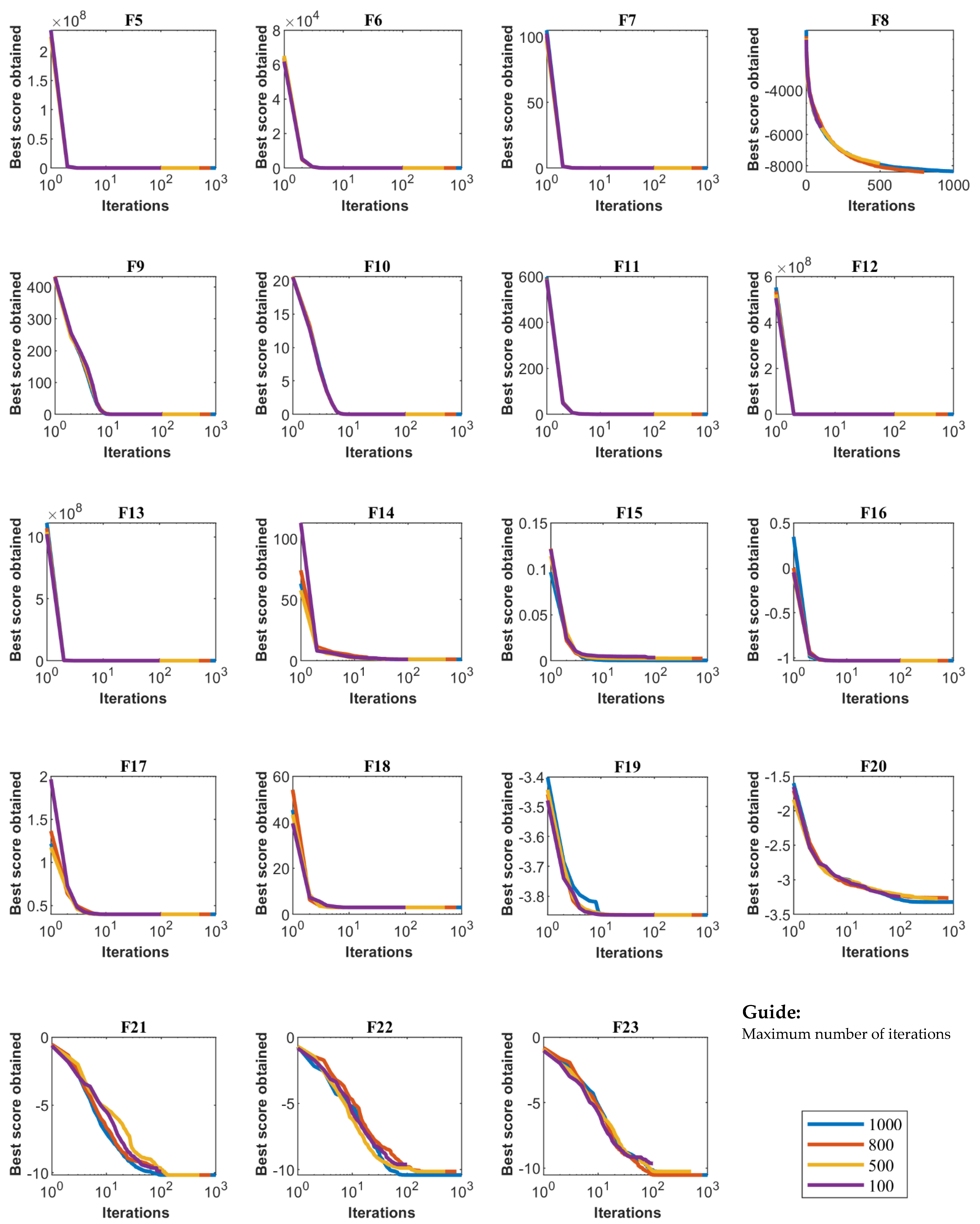

In each iteration of the algorithm, the members of the population are updated in three stages according to Equations (4)–(11). The update process is repeated until the algorithm reaches the condition of stopping. Finally, after the full implementation of the algorithm, the TOA proposes the best quasi-optimal solution obtained for the optimization problem. The pseudocode of the proposed TOA is presented in Algorithm 1 and its flowchart in Figure 1.

Algorithm 1. Pseudocode of TOA.

| Step | Operation |
| --- | --- |
| | Start TOA. |
| 1. | Input problem information: variables, objective function, and constraints. |
| 2. | Set number of team members (N) and iterations (T). |
| 3. | Generate an initial population matrix at random. |
| 4. | Evaluate the objective function. |
| 5. | For t = 1:T |
| 6. | Update supervisor based on Equation (3). |
| 7. | For i = 1:N |
| 8. | Stage 1: supervisor guidance |
| 9. | Update the ith team member based on first stage using Equations (4) and (6). |
| 10. | Stage 2: information sharing |
| 11. | Determine the better team members and their number for the ith team member. |
| 12. | Calculate the average of the better team members based on Equation (7). |
| 13. | Update the ith team member based on second stage using Equations (8) and (9). |
| 14. | Stage 3: individual activity |
| 15. | Update the ith team member based on third stage using Equations (10) and (11). |
| 16. | End For i = 1:N |
| 17. | Save best quasi-optimal solution obtained with the TOA so far. |
| 18. | End For t = 1:T |
| 19. | Output best quasi-optimal solution obtained with the TOA. |
| | End TOA. |

Figure 1. Flowchart of the proposed TOA.

Step-by-Step Example

In this subsection, the steps of the TOA are implemented and described on an objective function. For this purpose, the sphere function with 2 variables and 10 population members has been used.

Sphere function: $F(x_1, x_2) = x_1^2 + x_2^2$

Step 1:

In the first step, members of the population are randomly created. The values proposed for the problem variables must be within an acceptable range. The following general formula can be used to create initial and feasible random solutions:

X1:

Step 2:

In the second step, the objective function is evaluated based on the values proposed by each member of the population for the variables.

Step 3:

In the third step, based on the comparison of the values of the objective function, the team supervisor is determined. The supervisor is the member of the population that yields the smallest objective function value.

Step 4:

In the fourth step, the members of the population are updated according to the supervisor’s instructions. This step is calculated using Equations (4)–(6).

Step 5:

In the fifth step, each member of the population is updated based on the guidance of better qualified team members. This step is calculated using Equations (7)–(9).

Step 6:

In the sixth step, each member of the population improves her/his condition with individual activities. This step is calculated using Equations (10) and (11).

Step 7:

The third to sixth steps are repeated until the stop condition is reached. Additionally, after the algorithm is fully implemented, the best solution is available.

The various steps of the proposed TOA for the first iteration are presented in Table 1. Additionally, the final solution for the “sphere function” is presented after 50 repetitions.
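The seven steps above can be sketched end to end for the two-variable sphere function with 10 population members and 50 iterations. This is a minimal illustration rather than the authors' implementation: the search bounds of [-100, 100], the random seed, the clamping of candidates to the bounds, and the specific update rules used in the three stages are all assumptions, and the function names are hypothetical.

```python
import random

def sphere(x):
    """Sphere function: sum of squares."""
    return sum(v * v for v in x)

def toa(objective, dim=2, n_members=10, iterations=50,
        lower=-100.0, upper=100.0, seed=0):
    rng = random.Random(seed)
    # Step 1: random feasible initial population, x = lower + r * (upper - lower).
    X = [[lower + rng.random() * (upper - lower) for _ in range(dim)]
         for _ in range(n_members)]
    # Step 2: evaluate the objective function for every member.
    F = [objective(x) for x in X]
    history = []

    def try_accept(i, candidate):
        """Clamp to the bounds and keep the candidate only if it improves member i."""
        candidate = [min(max(c, lower), upper) for c in candidate]
        f_new = objective(candidate)
        if f_new < F[i]:
            X[i], F[i] = candidate, f_new

    for _ in range(iterations):
        # Step 3: the supervisor is the member with the smallest objective value.
        s = min(range(n_members), key=F.__getitem__)
        supervisor = list(X[s])
        for i in range(n_members):
            # Step 4: supervisor guidance (assumed update form).
            try_accept(i, [x + rng.random() * (sd - round(1 + rng.random()) * x)
                           for x, sd in zip(X[i], supervisor)])
            # Step 5: information sharing with better members (assumed update form).
            better = [X[j] for j in range(n_members) if F[j] < F[i]]
            if better:
                mean = [sum(b[d] for b in better) / len(better) for d in range(dim)]
                try_accept(i, [x + rng.random() * (md - round(1 + rng.random()) * x)
                               for x, md in zip(X[i], mean)])
            # Step 6: individual activity, a small local perturbation (assumed form).
            try_accept(i, [x + (-0.01 + 0.02 * rng.random()) * x for x in X[i]])
        # Step 7: record the best value so far and repeat.
        history.append(min(F))
    best = min(range(n_members), key=F.__getitem__)
    return X[best], F[best], history

best_x, best_f, history = toa(sphere)
```

Since every stage accepts a candidate only when it improves the member, the recorded best value in `history` never increases from one iteration to the next.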

Table 1. The various steps of the proposed TOA for the first iteration in sphere function solving.

3. Simulation Studies

In this section, simulation studies on the performance of the proposed TOA in solving optimization problems and its ability to provide quasi-optimal solutions are presented. To this end, the TOA is implemented and analyzed on twenty-three standard objective functions of different unimodal and multimodal types. Complete information on these objective functions and their details are provided in Appendix A (Table A1, Table A2, and Table A3) [20].

In order to analyze the quality of the results obtained from the proposed algorithm, these results have been compared with the performance of eight other well-known algorithms: Particle Swarm Optimization (PSO) [13], the Genetic Algorithm (GA) [12], Teaching–Learning-Based Optimization (TLBO) [15], the Gravitational Search Algorithm (GSA) [14], the Whale Optimization Algorithm (WOA) [17], the Grey Wolf Optimizer (GWO) [16], the Tunicate Swarm Algorithm (TSA) [19], and the Marine Predators Algorithm (MPA) [18]. The simulation results of the performance and implementation of the TOA and compared optimization algorithms on the mentioned twenty-three objective functions are shown using two indicators of the average of the obtained best solutions (ave.) and the standard deviation of the obtained best solutions (std.).
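The two indicators can be computed from the best objective values obtained over a set of independent runs. A minimal sketch, assuming the sample standard deviation is used (the text does not state whether the sample or population form is intended) and using hypothetical run results:

```python
import statistics

def summarize_runs(best_values):
    """Return (ave., std.) of the best solutions obtained over independent runs."""
    return statistics.mean(best_values), statistics.stdev(best_values)

# Hypothetical best objective values from four independent runs.
ave, std = summarize_runs([1.0, 2.0, 3.0, 4.0])
```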

The values of the parameters are selected based on the values used in similar studies and those proposed by the main authors of the algorithms. In general, having control parameters is a negative point for optimization algorithms. In fact, setting the appropriate values for the control parameters of optimization algorithms is a major challenge that has a significant impact on the performance of optimization algorithms. However, standard values for control parameters are usually suggested by algorithm designers. The values used for the main controlling parameters of the comparative algorithms are specified in Table 2.

Table 2. Parameter values for the comparative algorithms.

3.1. Evaluation of Unimodal Objective Functions

The set of objective functions to has been selected from the unimodal objective functions. The proposed TOA and eight compared optimization algorithms are applied to optimize these objective functions. The optimization results of these objective functions are presented in Table 3. Based on the results of this table, the TOA is able to provide the global optimal solutions for , , , , and . The proposed TOA is also the best optimizer for objective functions and and has been able to provide better quasi-optimal solutions than the compared algorithms. The optimization results show that the TOA has provided a suitable and effective performance in solving this type of objective function and is much more competitive than the eight compared optimization algorithms.

Table 3. Optimization results of TOA and compared algorithms on unimodal test function.

3.2. Evaluation of High-Dimensional Multimodal Objective Functions

The objective functions from to are high-dimensional multimodal functions. The results of the implementation of the TOA and eight comparative optimization algorithms on this type of optimization problem are presented in Table 4. According to this table, it is clear that for the functions and , the proposed TOA provides the global optimal solutions. Analysis of the simulation results indicates the optimal ability of the proposed TOA to solve high-dimensional multimodal optimization problems.

Table 4. Optimization results of TOA and compared algorithms on high-dimensional test function.

3.3. Evaluation of Fixed-Dimensional Multimodal Objective Functions

The set of objective functions from to has been selected from the fixed-dimensional multimodal objective functions to analyze the optimization algorithms. Table 5 presents the optimization results of this type of objective function using the TOA and eight compared optimization algorithms. Based on this table, the TOA is able to provide the global optimal for . Although in other objective functions the index “ave.” obtained from the TOA is similar to some of the compared optimization algorithms, the TOA has a more efficient ability to solve this type of objective function by providing better performance in index “std.”. The optimization results show that the proposed TOA has a more efficient performance in solving this type of objective function.

Table 5. Optimization results of TOA and compared algorithms on fixed-dimensional test function.

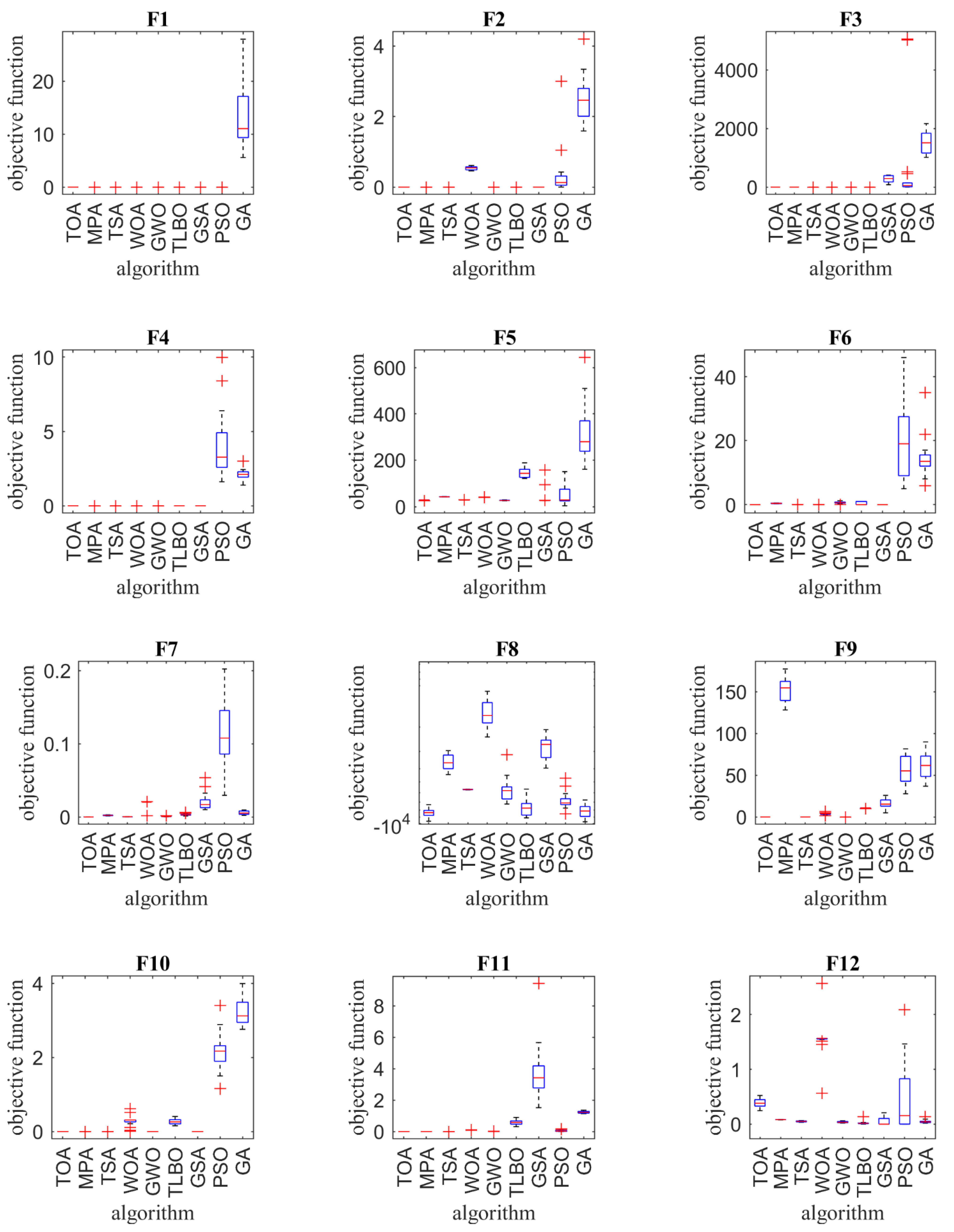

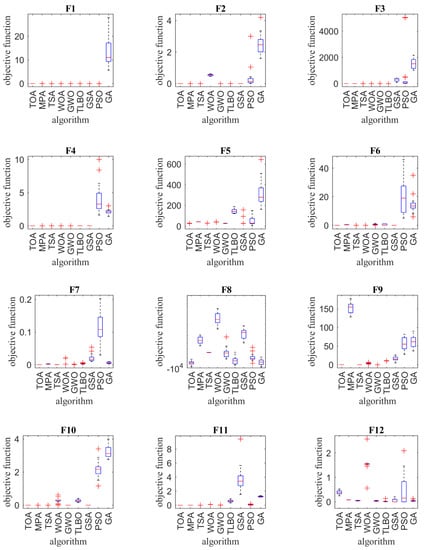

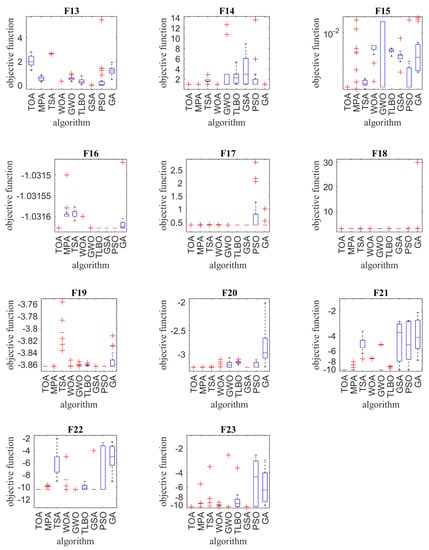

In order to compare the performance of optimization algorithms in solving optimization problems, the optimization results of twenty-three objective functions using the proposed TOA as well as eight compared optimization algorithms are presented in the form of the boxplot in Figure 2.

Figure 2. Boxplot of composition objective function results for different optimization algorithms.

3.4. Statistical Analysis

The results of the optimization of objective functions using the two indexes, the average of the best obtained quasi-optimal solutions and their standard deviation, provide important and valuable information about the ability and effectiveness of optimization algorithms. However, even if the probability is very low, the superiority of one optimization algorithm over the others after twenty independent runs may be due to chance. Therefore, a statistical test is used to check whether the superiority of the proposed TOA over the eight compared optimization algorithms is significant. In this regard, the non-parametric Wilcoxon rank sum test is applied. The Wilcoxon rank sum test compares two independent samples on a ranking scale.

A p-value specifies whether the difference between the given algorithm and the TOA is statistically significant. If the p-value for a compared optimization algorithm is less than 0.05, the difference is considered statistically significant. The result of the analysis using the Wilcoxon rank sum test for the objective functions is shown in Table 6. It can be observed from Table 6 that the TOA is significantly superior to the eight compared optimization algorithms based on the p-values, which are less than 0.05.
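The test can be sketched in a few lines. The following is a minimal illustration of the two-sided Wilcoxon rank sum test using the normal approximation without tie or continuity corrections; this variant is an assumption, since the text does not state how the p-values were computed, and the two samples below are hypothetical:

```python
import math

def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank sum test (normal approximation). Returns (z, p)."""
    combined = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    ranks = [0.0] * len(combined)
    i = 0
    while i < len(combined):
        j = i
        while j + 1 < len(combined) and combined[j + 1][0] == combined[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1      # tied values share their average rank
        i = j + 1
    n1, n2 = len(a), len(b)
    w = sum(r for r, (_, group) in zip(ranks, combined) if group == 0)
    mean_w = n1 * (n1 + n2 + 1) / 2         # expected rank sum of the first sample
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))    # two-sided p-value
    return z, p

# Hypothetical best values from twenty runs of two algorithms.
runs_a = [float(v) for v in range(1, 21)]
runs_b = [float(v) for v in range(21, 41)]
z, p = rank_sum_test(runs_a, runs_b)
significant = p < 0.05
```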

Table 6. Obtained results from the Wilcoxon test (p ≥ 0.05).

In addition, in order to further analyze the results and performance of the optimization algorithms, another test, called the Friedman rank test, is used. The results of this test are presented in Table 7. Based on the results of the Friedman test, the proposed TOA ranks first in optimizing all three types of objective functions (unimodal, high-dimensional multimodal, and fixed-dimensional multimodal) compared to the GA, PSO, GSA, TLBO, GWO, WOA, TSA, and MPA.
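The ranking step that underlies the Friedman rank test can be sketched as follows: on each objective function the algorithms are ranked by their average result (lower is better, ties share the average rank), and the ranks are then averaged over the functions. This sketch computes only the mean ranks, not the Friedman test statistic, and the algorithm names and values are hypothetical:

```python
def mean_ranks(results):
    """results[name] is a list of average objective values, one per function."""
    names = list(results)
    n_functions = len(results[names[0]])
    totals = {name: 0.0 for name in names}
    for k in range(n_functions):
        values = sorted((results[name][k], name) for name in names)
        i = 0
        while i < len(values):
            j = i
            while j + 1 < len(values) and values[j + 1][0] == values[i][0]:
                j += 1
            for _, name in values[i:j + 1]:
                totals[name] += (i + j) / 2 + 1   # average rank for tied values
            i = j + 1
    return {name: totals[name] / n_functions for name in names}

# Hypothetical results of three algorithms on three functions.
ranks = mean_ranks({"A": [1.0, 2.0, 3.0], "B": [2.0, 1.0, 3.0], "C": [3.0, 3.0, 4.0]})
print(ranks)  # {'A': 1.5, 'B': 1.5, 'C': 3.0}
```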

Table 7. Results of the Friedman rank test.

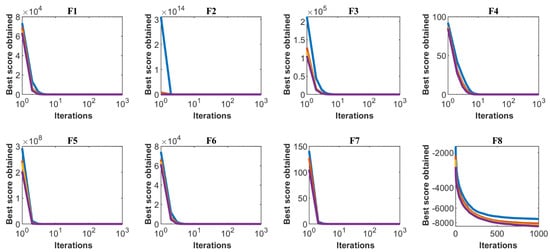

3.5. Sensitivity Analysis

In this section, the effects of two important parameters, the number of members of the algorithm population and the maximum number of iterations, on the performance of the proposed TOA in optimizing the objective functions are analyzed.

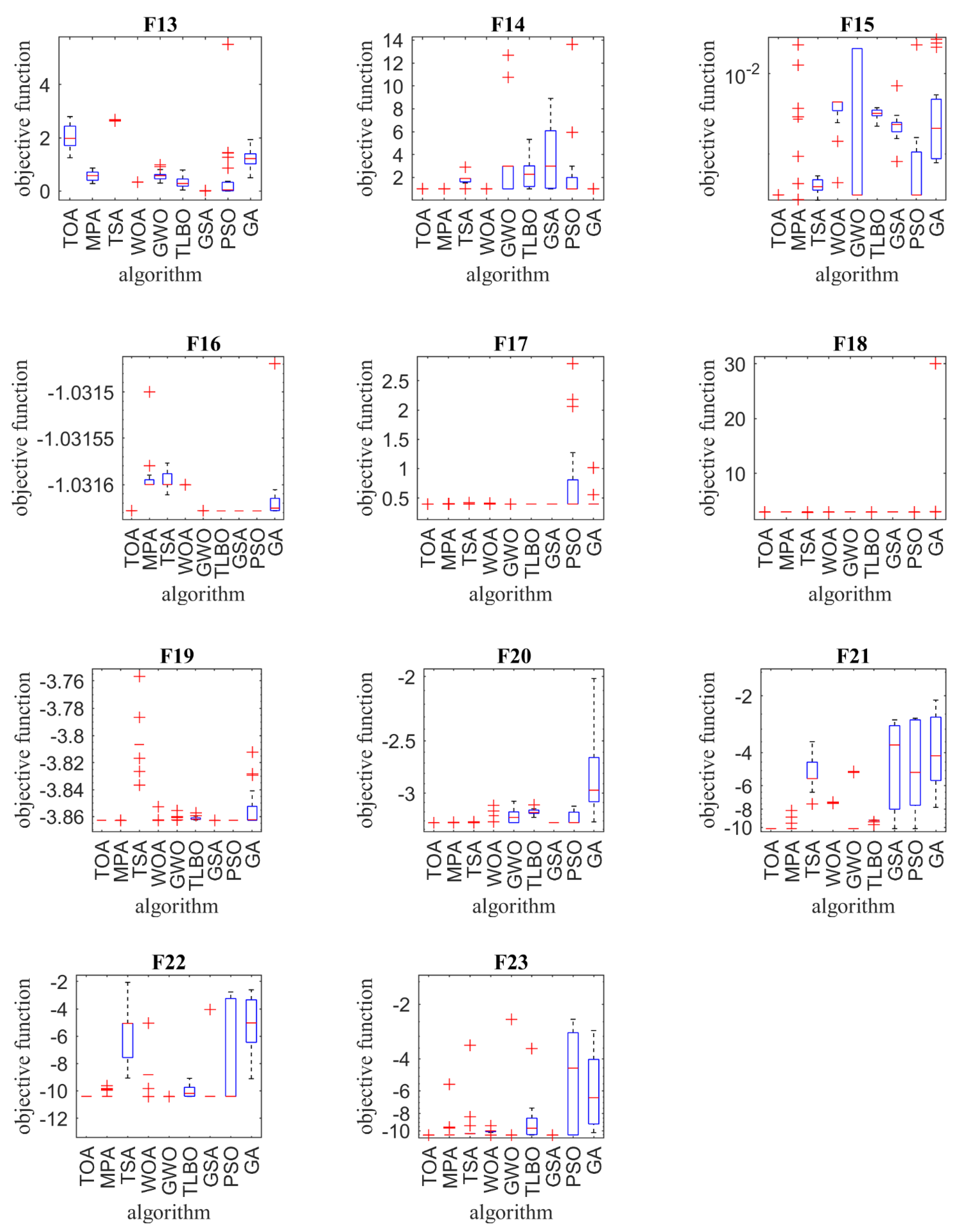

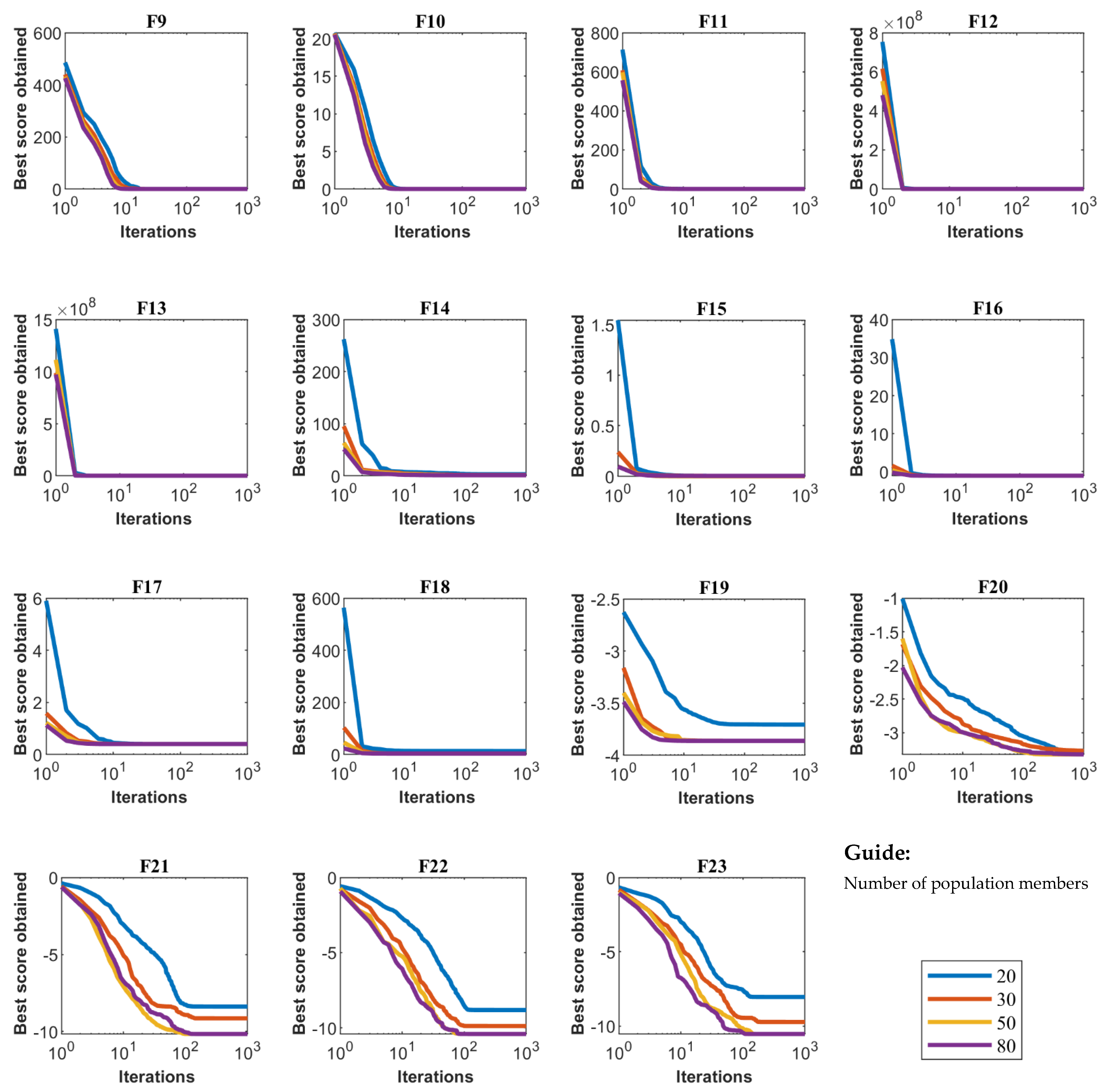

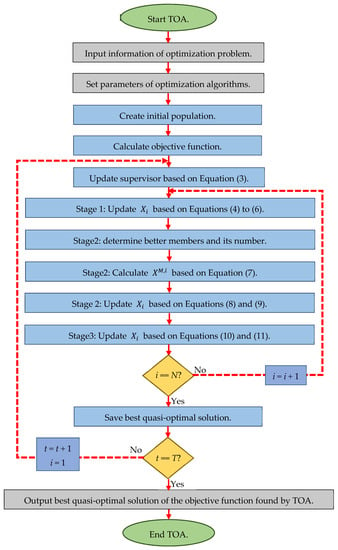

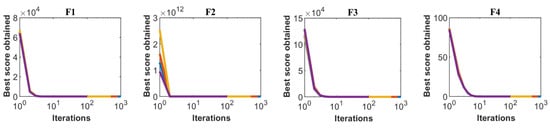

Sensitivity analysis of the proposed TOA to the number of population members is carried out for all twenty-three standard objective functions with populations of 20, 30, 50, and 80 members. The results of this analysis are presented in Table 8. These results show that increasing the number of population members decreases the value of the objective function. Additionally, the effect of the number of population members on the behavior of the convergence curves is presented in Figure 3.

Table 8. Results of the algorithm sensitivity analysis of the number of population members.

Figure 3. Sensitivity analysis of TOA for number of population members.

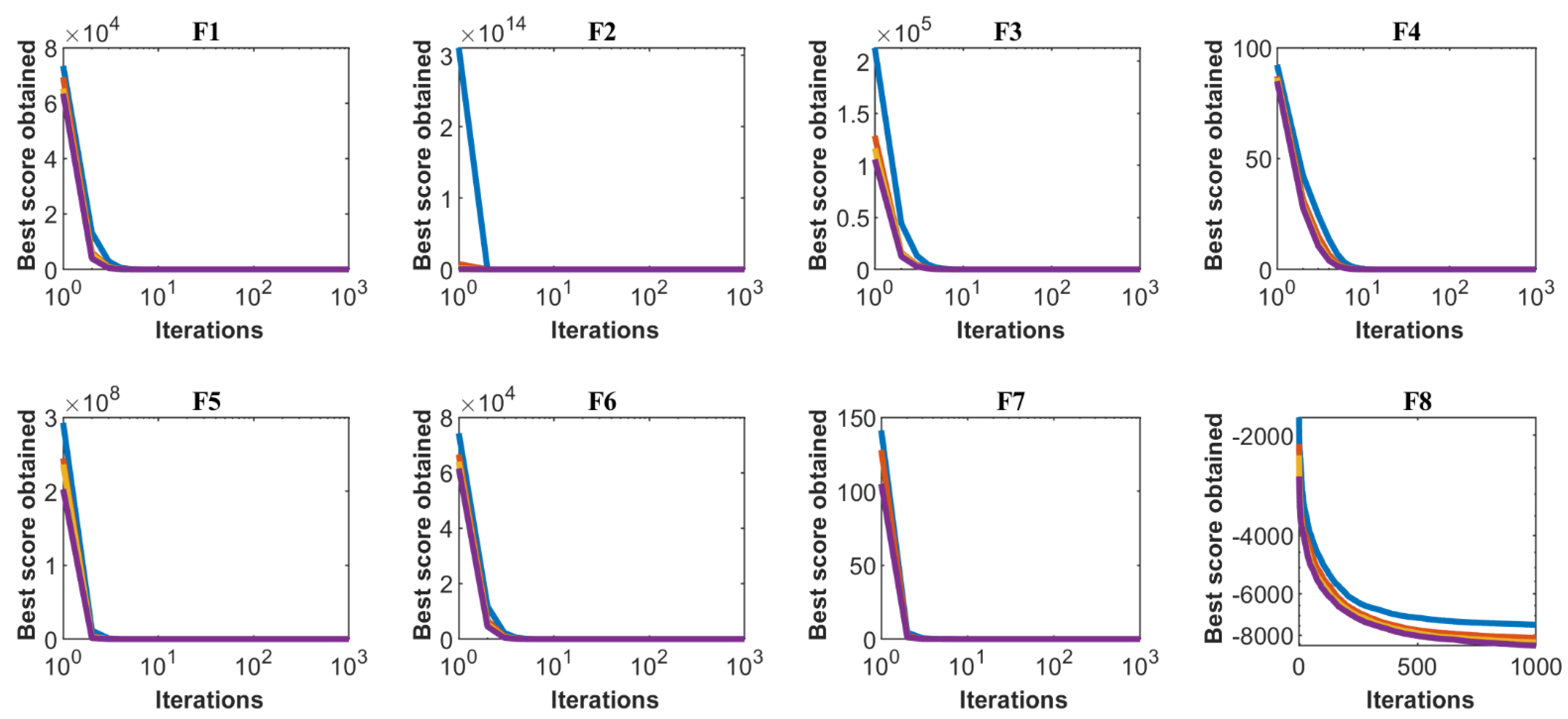

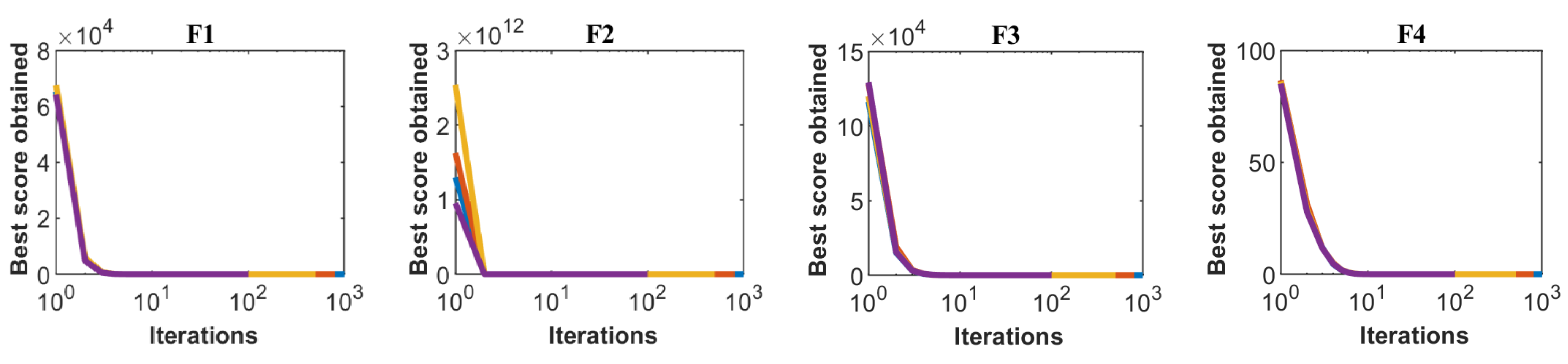

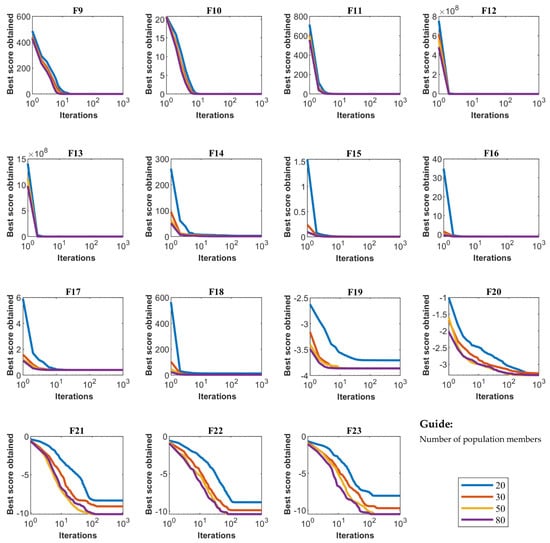

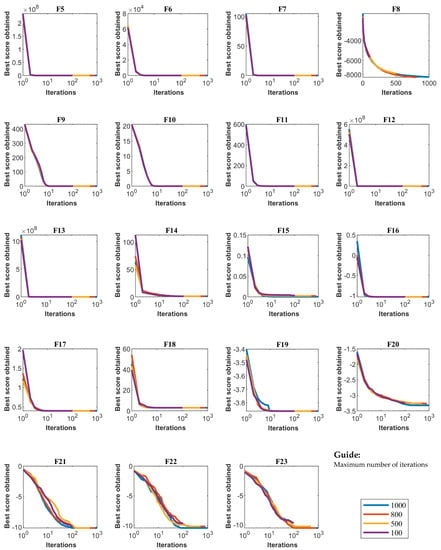

Sensitivity analysis of the TOA to the maximum number of iterations is carried out for all twenty-three standard objective functions with 100, 500, 800, and 1000 iterations. The simulation results obtained from this analysis for all objective functions and for the different numbers of iterations are presented in Table 9. This analysis shows that increasing the maximum number of iterations reduces the values of the objective functions and yields more suitable quasi-optimal solutions. The convergence curves of the objective functions under the different maximum numbers of iterations are plotted in Figure 4.

Table 9. Results of the algorithm sensitivity analysis of the maximum number of iterations.

Figure 4. Sensitivity analysis of TOA for maximum number of iterations.

4. Discussion

Exploitation and exploration indicators are two important and effective criteria in evaluating and analyzing the performance of optimization algorithms and comparing them with each other.

The exploitation index is an important criterion that indicates the ability of the algorithm to achieve a suitable quasi-optimal solution. In fact, according to the concept of exploitation, an optimization algorithm must be able to provide a quasi-optimal solution after a complete implementation of an optimization problem. Therefore, in comparing the exploitation index between several different algorithms, an algorithm has a higher ability in the exploitation index which can provide a more suitable quasi-optimal solution. The objective functions of the unimodal type, including to , have only one basic optimal solution. For this reason, these types of functions are desirable in order to evaluate the exploitation index. Based on the optimization results of these objective functions, as shown in Table 3, the proposed TOA has been able to achieve the global optimal solutions in the objective functions of , , , , and . Additionally, the TOA in and functions has provided a more suitable quasi-optimal solution than similar algorithms. These results indicate the high ability of the TOA in the exploitation index and the presentation of quasi-optimal solutions.

The exploration index is another criterion for evaluating optimization algorithms; it shows the ability of an algorithm to search the problem-solving space thoroughly without becoming trapped in local optimal solutions. According to the concept of exploration, an optimization algorithm should search the various regions of the problem-solving space over successive iterations and provide a suitable quasi-optimal solution that is close to the global optimum. The high-dimensional multimodal objective functions, F8 to F13, and the fixed-dimensional multimodal objective functions, F14 to F23, have several local optimal solutions, so reaching their global optimal solution is a challenge. These objective functions are therefore suitable for evaluating the exploration index. Based on the optimization results for these functions, presented in Table 4 and Table 5, the TOA reached the global optimum for three of them. The TOA also performed acceptably on the other objective functions, providing appropriate quasi-optimal solutions by carefully searching the problem-solving space. The results on the set of multimodal objective functions show that the proposed TOA has a high exploration ability and searches the problem-solving space accurately.
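The difference between the two indices can be illustrated with a small experiment. This is a sketch under stated assumptions, not part of the reported simulations: the Rastrigin function is used as a representative multimodal function, and `hill_climb` and `hill_climb_with_restarts` are hypothetical helpers written for this example. A purely exploitative local search stays in the basin where it starts, while adding random restarts (a crude form of exploration) lets the search sample other regions.

```python
import math
import random


def rastrigin(x):
    """Multimodal test function; global minimum 0 at the origin."""
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)


def hill_climb(objective, start, step, iters, rng):
    """Pure exploitation: accept a small random move only if it improves."""
    x, fx = list(start), objective(start)
    for _ in range(iters):
        cand = [v + rng.uniform(-step, step) for v in x]
        fc = objective(cand)
        if fc < fx:
            x, fx = cand, fc
    return x, fx


def hill_climb_with_restarts(objective, dim, bounds, restarts, step, iters, rng):
    """Exploration added: repeat the local search from random start points."""
    best_x, best_f = None, float("inf")
    for _ in range(restarts):
        start = [rng.uniform(*bounds) for _ in range(dim)]
        x, fx = hill_climb(objective, start, step, iters, rng)
        if fx < best_f:
            best_x, best_f = x, fx
    return best_x, best_f


# Start next to the local minimum near (4, 4); steps are too small to leave it.
_, local_f = hill_climb(rastrigin, [4.0, 4.0], 0.05, 2000, random.Random(1))
_, restart_f = hill_climb_with_restarts(
    rastrigin, 2, (-5.12, 5.12), 30, 0.05, 2000, random.Random(2)
)
print(f"local search only: {local_f:.3f}")
print(f"with restarts:     {restart_f:.3f}")
```

The step size, number of restarts, and starting point are arbitrary illustrative values. Population-based algorithms balance the two behaviours within a single run rather than through explicit restarts.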

Because optimization algorithms are stochastic methods, it cannot be claimed that any particular algorithm provides the best performance on all optimization problems. It is also possible to improve the performance of an algorithm by modifying it. In this study, the proposed TOA is compared with the standard versions of the GA, PSO, GSA, TLBO, GWO, WOA, TSA, and MPA. Even so, the results obtained by the proposed TOA are far more competitive than those of the eight compared algorithms, and the TOA provided the global optimal solution for eight of the objective functions. Therefore, one advantage of the proposed TOA is that it offers much more competitive solutions than similar algorithms. On the other hand, a limitation shared by every optimization algorithm is that new algorithms may be designed in the future that provide quasi-optimal solutions closer to the global optimum.

A comparative review of the TOA and compared algorithms, contrasting the characteristics of the heuristics, is presented in Table 10.

Table 10.

Comparative review of TOA and compared algorithms.

Comparison with Blackboard System

A theoretical comparison of the TOA and the Blackboard system shows that the two are very difficult to compare under the same conditions. The main idea of the Blackboard system is based on the metaphor of a group of experts standing next to a large blackboard to solve a problem. The blackboard is the place where a solution to the problem is developed. Every specialist waits for a suitable time to use the blackboard and improve the solution [11]. The main idea of the proposed TOA, by contrast, is the hierarchical structure of management in teamwork, consisting of a supervisor and other team members.

In the structure of the proposed TOA, the supervisor leads the team members toward a specific goal. Team members update their proposed solutions under the influence of the supervisor’s information and of other, better-performing members of the population. In the Blackboard structure, however, the population of members is replaced by a set of knowledge modules called Knowledge Sources (KSs), and a KS does not use any other KS to improve its contribution to problem solving over time.

In the TOA, the number of population members stays constant during execution, whereas in the Blackboard system additional KSs can be added, inappropriate KSs can be removed, and poorly performing KSs can be enhanced, all without changing the other KSs.

From the analysis of the above concepts, it can be deduced that although there are some similarities in the idea of the proposed TOA and the Blackboard system, they differ completely in structure, updating, and implementation.

5. Conclusions and Future Works

Many optimization problems in different disciplines of science must be solved using appropriate methods. Population-based optimization algorithms are among the most efficient and widely used methods for solving such problems. In this paper, a new optimization algorithm called the Teamwork Optimization Algorithm (TOA) has been described. The main idea in designing the proposed TOA was to model the relationships and teamwork behaviors of the members of a team working to achieve the goal of that team. In teamwork, the supervisor is the member who performs best and is responsible for leading the team. The other members carry out their activities under the supervisor’s guidance. Each team member tries to improve their performance by following the supervisor’s instructions as well as the example of other team members who perform better than themselves. Additionally, each team member tries to improve their own situation through individual activities in order to have a greater share in the team’s achievement of the goal. The theory of the TOA was described and then mathematically modeled for implementation in solving optimization problems. The quality of the TOA in solving optimization problems was tested on twenty-three standard objective functions of unimodal, high-dimensional multimodal, and fixed-dimensional multimodal types. The results showed that the proposed TOA has a good ability to provide quasi-optimal solutions. Additionally, the performance of the TOA was compared with that of eight well-known optimization algorithms in providing quasi-optimal solutions. Analysis and evaluation of the results showed that the proposed TOA performed better in optimizing the objective functions and is much more competitive than the eight compared optimization algorithms.
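The three behaviors summarized above (guidance by the supervisor, learning from better members, and individual activity) can be sketched as one iteration of a population update. This is a loose illustration only: the update rules in `teamwork_style_step` are simplified assumptions written for this example and are not the equations of the mathematical model; the greedy acceptance rule, the step sizes, and the sphere test function are likewise assumptions.

```python
import random


def sphere(x):
    """Unimodal test function: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def teamwork_style_step(pop, fit, objective, bounds, rng):
    """One iteration with three assumed stages; updates pop and fit in place."""
    lo, hi = bounds
    n = len(pop)

    def try_move(i, cand):
        # Clamp to the search range and keep the move only if it improves.
        cand = [min(hi, max(lo, v)) for v in cand]
        f = objective(cand)
        if f < fit[i]:
            pop[i], fit[i] = cand, f

    # The supervisor is the best-performing member at the start of the step.
    supervisor = list(pop[min(range(n), key=fit.__getitem__)])
    for i in range(n):
        # Stage 1 (assumed form): move toward the supervisor.
        r = rng.random()
        try_move(i, [x + r * (s - x) for x, s in zip(pop[i], supervisor)])
        # Stage 2 (assumed form): move toward the mean of better members.
        better = [pop[j] for j in range(n) if fit[j] < fit[i]]
        if better:
            mean = [sum(col) / len(better) for col in zip(*better)]
            r = rng.random()
            try_move(i, [x + r * (m - x) for x, m in zip(pop[i], mean)])
        # Stage 3 (assumed form): small individual perturbation.
        try_move(i, [x + rng.uniform(-0.01, 0.01) * (hi - lo) for x in pop[i]])


def run(objective, dim, bounds, pop_size=20, iters=200, seed=0):
    """Return the best objective value before and after the iterations."""
    rng = random.Random(seed)
    pop = [[rng.uniform(*bounds) for _ in range(dim)] for _ in range(pop_size)]
    fit = [objective(p) for p in pop]
    initial_best = min(fit)
    for _ in range(iters):
        teamwork_style_step(pop, fit, objective, bounds, rng)
    return initial_best, min(fit)


initial_best, final_best = run(sphere, dim=5, bounds=(-100.0, 100.0))
print(f"initial best: {initial_best:.3e}, final best: {final_best:.3e}")
```

Because each stage accepts a candidate only when it improves the member, the best value in the population can never get worse from one iteration to the next.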

The authors suggest some ideas and perspectives for future studies. The design of the binary version as well as the multiobjective version of the TOA is an interesting possibility for future investigations. Apart from this, implementing the TOA on various optimization problems and real-world optimization problems can be considered as significant contributions, as well. The use of a collaborative learning phase as a corrective phase in improving the performance of other algorithms is also a suggestion for further studies.

Author Contributions

Conceptualization, M.D. and P.T.; methodology, M.D.; software, P.T.; validation, M.D. and P.T.; formal analysis, M.D.; investigation, M.D.; resources, P.T.; data curation, M.D.; writing—original draft preparation, M.D.; writing—review and editing, P.T.; visualization, P.T.; supervision, M.D.; project administration, M.D.; funding acquisition, P.T. All authors have read and agreed to the published version of the manuscript.

Funding

The research was supported by the Excellence Project PřF UHK No. 2208/2021–2022.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the author M.D.

Acknowledgments

The authors would like to thank anonymous referees for their careful corrections and their comments that helped to improve the quality of the paper.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Information on the twenty-three objective functions is provided in Table A1, Table A2 and Table A3; these functions were introduced and studied by Yao et al. [20]. Unimodal functions have only one optimal point: the function decreases monotonically up to that point (the mode) and increases monotonically beyond it. Multimodal functions have several local solutions in addition to the main (global) solution, and the main challenge in optimizing them is to avoid getting stuck in a local solution and to approach the global one.
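The distinction can be checked numerically in one dimension. The sketch below is illustrative only: `count_local_minima` is a hypothetical helper, and the one-dimensional sphere and Rastrigin functions are assumed here as simple representatives of the unimodal and multimodal classes. It samples each function on a grid and counts the interior points that are lower than both neighbors.

```python
import math


def count_local_minima(f, lo, hi, samples=2001):
    """Count strict local minima of f on an evenly spaced grid over [lo, hi]."""
    xs = [lo + (hi - lo) * k / (samples - 1) for k in range(samples)]
    ys = [f(x) for x in xs]
    return sum(
        1
        for k in range(1, samples - 1)
        if ys[k] < ys[k - 1] and ys[k] < ys[k + 1]
    )


def sphere_1d(x):
    """Unimodal: decreases up to x = 0, then increases."""
    return x * x


def rastrigin_1d(x):
    """Multimodal: a cosine ripple adds a local minimum near every integer."""
    return 10 + x * x - 10 * math.cos(2 * math.pi * x)


print("sphere local minima:   ", count_local_minima(sphere_1d, -5.12, 5.12))
print("rastrigin local minima:", count_local_minima(rastrigin_1d, -5.12, 5.12))
```

A grid count like this is only a rough diagnostic: it depends on the sampling resolution and does not scale to the 30-dimensional functions listed in the tables.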

Table A1.

Unimodal test functions.

| Objective Function | Range | Dimensions | Fmin |
|---|---|---|---|
| F1 | | 30 | 0 |
| F2 | | 30 | 0 |
| F3 | | 30 | 0 |
| F4 | | 30 | 0 |
| F5 | [−30, 30] | 30 | 0 |
| F6 | | 30 | 0 |
| F7 | [−1.28, 1.28] | 30 | 0 |

Table A2.

High-dimensional multimodal test functions.

| Objective Function | Range | Dimensions | Fmin |
|---|---|---|---|
| F8 | | 30 | −12,569 |
| F9 | [−5.12, 5.12] | 30 | 0 |
| F10 | | 30 | 0 |
| F11 | | 30 | 0 |
| F12 | | 30 | 0 |
| F13 | | 30 | 0 |

Table A3.

Fixed-dimensional multimodal test functions.

| Objective Function | Range | Dimensions | Fmin |
|---|---|---|---|
| F14 | | 2 | 0.998 |
| F15 | | 4 | 0.00030 |
| F16 | | 2 | −1.0316 |
| F17 | [−5, 10] × [0, 15] | 2 | 0.398 |
| F18 | | 2 | 3 |
| F19 | | 3 | −3.86 |
| F20 | | 6 | −3.22 |
| F21 | | 4 | −10.1532 |
| F22 | | 4 | −10.4029 |
| F23 | [0, 10] | 4 | −10.5364 |

References

1. Doumari, S.A.; Givi, H.; Dehghani, M.; Malik, O.P. Ring Toss Game-Based Optimization Algorithm for Solving Various Optimization Problems. Int. J. Intell. Eng. Syst. 2021, 14, 545–554.

2. Dhiman, G. SSC: A hybrid nature-inspired meta-heuristic optimization algorithm for engineering applications. Knowl.-Based Syst. 2021, 222, 106926.

3. Dehghani, M.; Montazeri, Z.; Dehghani, A.; Samet, H.; Sotelo, C.; Sotelo, D.; Ehsanifar, A.; Malik, O.P.; Guerrero, J.M.; Dhiman, G. DM: Dehghani Method for modifying optimization algorithms. Appl. Sci. 2020, 10, 7683.

4. Dhiman, G. ESA: A hybrid bio-inspired metaheuristic optimization approach for engineering problems. Eng. Comput. 2019, 37, 323–353.

5. Doumari, S.A.; Givi, H.; Dehghani, M.; Montazeri, Z.; Leiva, V.; Guerrero, J.M. A New Two-Stage Algorithm for Solving Optimization Problems. Entropy 2021, 23, 491.

6. Dehghani, M.; Montazeri, Z.; Dehghani, A.; Ramirez-Mendoza, R.A.; Samet, H.; Guerrero, J.M.; Dhiman, G. MLO: Multi leader optimizer. Int. J. Intell. Eng. Syst. 2020, 13, 364–373.

7. Sadeghi, A.; Doumari, S.A.; Dehghani, M.; Montazeri, Z.; Trojovský, P.; Ashtiani, H.J. A New “Good and Bad Groups-Based Optimizer” for Solving Various Optimization Problems. Appl. Sci. 2021, 11, 4382.

8. Dorigo, M.; Maniezzo, V.; Colorni, A. Ant system: Optimization by a colony of cooperating agents. IEEE Trans. Syst. Man Cybern. Part B (Cybernetics) 1996, 26, 29–41.

9. Dehghani, M.; Montazeri, Z.; Dehghani, A.; Malik, O.P.; Morales-Menendez, R.; Dhiman, G.; Nouri, N.; Ehsanifar, A.; Guerrero, J.M.; Ramirez-Mendoza, R.A. Binary spring search algorithm for solving various optimization problems. Appl. Sci. 2021, 11, 1286.

10. Hofmeyr, S.A.; Forrest, S. Architecture for an artificial immune system. Evol. Comput. 2000, 8, 443–473.

11. Craig, I.D. Blackboard systems. Artif. Intell. Rev. 1988, 2, 103–118.

12. Bose, A.; Biswas, T.; Kuila, P. A novel genetic algorithm based scheduling for multi-core systems. In Smart Innovations in Communication and Computational Sciences; Springer: Singapore, 2019; pp. 45–54.

13. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the IEEE International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; pp. 1942–1948.

14. Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

15. Rao, R.V.; Savsani, V.J.; Vakharia, D. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput. Aided Des. 2011, 43, 303–315.

16. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

17. Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

18. Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

19. Kaur, S.; Awasthi, L.K.; Sangal, A.; Dhiman, G. Tunicate swarm algorithm: A new bio-inspired based metaheuristic paradigm for global optimization. Eng. Appl. Artif. Intell. 2020, 90, 103541.

20. Yao, X.; Liu, Y.; Lin, G. Evolutionary programming made faster. IEEE Trans. Evol. Comput. 1999, 3, 82–102.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).