Abstract

This paper proposes a topology-based stereo matching method for three-dimensional (3D) measurement using a single pattern of coded spot-array structured light. The spot-array pattern is designed with a central reference ring spot, and each spot in the pattern can be uniquely coded with row and column indexes according to a predefined topological search path. A method using rectangle templates is proposed to find the encoded spots in the captured images when some coding spots are missing, and an interpolation method is proposed for rebuilding the missing spots. Experimental results demonstrate that the proposed technique can decode each spot exactly and uniquely and establish the stereo matching relation successfully, which enables single-shot 3D reconstruction.

1. Introduction

Non-contact optical measurement technologies are currently very popular and are extensively used in industrial inspection tasks due to their advantages of high efficiency, moderate accuracy and large measurement range compared with contact measurement technology. There exist many non-contact optical measurement technologies, such as triangulation, time of flight (TOF), interferometry and so on. Generally, the non-contact optical measurement technologies based on triangulation can be divided into two categories: stereo vision and structured light. Stereo vision realizes the 3D reconstruction of a point by “seeing” the point with two cameras at different perspectives. However, for the surfaces with no texture or little texture variation, the stereo matching process can be very difficult. Structured light technology can solve this problem by replacing one camera by a projector, which can generate artificial texture in order to ease the stereo matching process.

To obtain better stereo matching results, many structured light projection patterns have been developed, and several review papers on this topic have been published [1,2,3,4,5]. In reference [4], Geng classified structured light techniques into multiple-shot and single-shot categories and further divided single-shot techniques into three broad categories. Compared with the multiple-shot technique, the single-shot technique can perform a snapshot 3D measurement, which makes it well suited to 3D reconstruction of moving targets. The single-shot structured light technique remains an active research area [6,7,8,9,10]. Xu proposed a real-time 3D shape measurement approach based on one-shot structured light [6]. The method can satisfy the requirements of online inspection on automotive production lines. To decode the correspondence of structured light, Zhang proposed a discontinuity-preserving matching method to improve the decoding of one-shot shape acquisition using regularized color [7]. Sagawa presented a grid-pattern based 3D reconstruction method, which can obtain a dense one-shot reconstruction by calculating dense phase information from a set of periodically encoded parallel lines [8]. However, the computational cost of this method is relatively high, which renders it unsuitable for real-time applications. Li designed a single striped pattern to measure dense and accurate depth maps of moving 3D objects [9]. In this method, the De Bruijn sequence is used to encode the ID of each stripe in the pattern, and the epipolar segment is employed to eliminate periodic ambiguity. Garcia-Isais proposed a method that uses a single composite fringe pattern containing three different frequencies to solve the problem of periodic ambiguity caused by surface discontinuities [10]. In this manner, the shape of objects that have discontinuities or are spatially isolated can be reconstructed by projecting a single pattern. Since the Fourier method is employed, however, some information at the object edges or in low modulation zones might be lost.

Although single-shot structured light techniques are very efficient, obtaining accurate sensor calibration results remains a problem, because a simple and accurate calibration of a general projector is still more challenging than camera calibration [11]. To overcome this disadvantage of structured light and simultaneously facilitate the stereo correspondence of stereovision, some researchers have combined the two methods [11,12,13,14,15,16,17,18,19,20]. Sun proposed a system that combined binocular stereo vision and multi-line structured light [12]. In this system, the time-multiplexing coding method is used and a sequence of patterns is projected. Pinto developed a system using stereo vision and fringe projection to measure large surfaces [13]. The system projects a sequence of sinusoidal fringes, in combination with a binary Gray code, onto the measurement surface. The absolute phase values are used for determining the correct 3D points of the measurement surface. Han proposed a method that combined stereovision and phase shifting techniques [14]. This method can eliminate errors caused by inaccurate phase measurement. Three fringe patterns with a phase shift of π/3 are projected horizontally and then vertically. However, projecting many patterns takes a longer period of time, during which any movement of the object or the sensor would cause errors in the 3D measurement. This prevents the method from measuring some workpieces, such as a hull plate, in a workshop where environmental factors such as vibration and movement often play a negative role.

Reducing the number of projection patterns is a very efficient way to improve measurement speed. Han used a visibility-modulated fringe pattern to further eliminate the need for the second fringe pattern [15]. With this new pattern, only three fringe patterns are required. To reduce the number of Gray code images in the projection sequence, Burchardt proposed a method that used the restriction of the valid measuring volume and epipolar constraints to reduce the valid area for proper point correspondences [16]. Lohry also adopted three fringe patterns, which were modified to encode the quality map for efficient and accurate stereo matching [11]. In order to avoid global phase unwrapping, a random pattern is used to roughly match corresponding phase points between two images. After determining the coarse disparity map, a refined disparity map is further obtained from the local wrapped phase. Generally, in order to obtain the highest speed, the desired number of projection patterns is one. Wang used a single pattern of coded stripes to measure the diameter of a large hot forging [17]. A spatial coding strategy is employed to characterize each stripe with a unique index. Thus, the stereo correspondence is established according to the stripe index and the corresponding epipolar line. Additionally, random illumination of structured light has been introduced into stereovision [18,19,20]. The random illumination-based methods can obtain a single-shot dense 3D reconstruction. In reference [19], a feature-based approach is introduced into stereo correspondence in order to preserve a low error rate. In reference [20], a method of disparity updating based on temporal consistency was further proposed in order to improve the speed of 3D measurement. In the above-mentioned methods [17,18,19,20], the epipolar constraint is employed in order to find corresponding points between the two cameras. However, the stereo correspondence might suffer from errors caused by epipolar lines.

A dense 3D reconstruction is not always necessary for some special objects, such as a large hull plate. Generally, discrete sampling points can be used to represent a free-form surface. Some methods measure a free-form surface by reconstructing sample points on the surface [13,21,22]. In reference [13], the density of sample points is freely definable. However, this method requires projecting multiple patterns for a single measurement. In references [21,22], however, only a few sample points, such as five or nine spots, are reconstructed for a single measurement.

In our previous work [23], an onsite inspection sensor based on active binocular stereo vision was proposed. This sensor has two cameras composing a binocular stereo vision system and a projector to project structured light. The measurement principles, system development and system calibration are described in detail there. A pattern with a circular spot array is used to generate feature points on the surface to be measured. Additionally, each spot is encoded with a Gray code by projecting a sequence of Gray code patterns onto the surface. With the extra projection of Gray code patterns, the effects of occlusion, discontinuities and depth steps on spot stereo matching can be well eliminated. However, it is not very efficient since several extra Gray code patterns are projected during one measurement, which renders this method unsuitable for onsite measurement of formed hull plates, especially in a workshop environment with random vibration. In this paper, based on our previous work, a method that combines only one single-shot pattern of spot-array structured light with stereo vision is used to measure continuous free-form surfaces. A pattern of a circular spot array with a marking reference ring spot is designed. The circular spots in the pattern are row and column aligned. With the help of a digital projector, the spot density can also be adjusted according to the shape of the measured surface. Additionally, an algorithm for coding each spot with a unique 2D index is developed. With the unique 2D index, the circular spots in the left and right cameras can be matched directly without using the epipolar constraint. Then, the 3D reconstruction of each spot is calculated by triangulation. As only a single pattern is used, the proposed system is expected to be immune to the vibration and disturbance problems encountered in an onsite inspection environment. It is very suitable for the onsite measurement of hull plates, because there are many vibrations in the forming workshop of hull plates. Moreover, the proposed method is very cost effective and easy to implement.

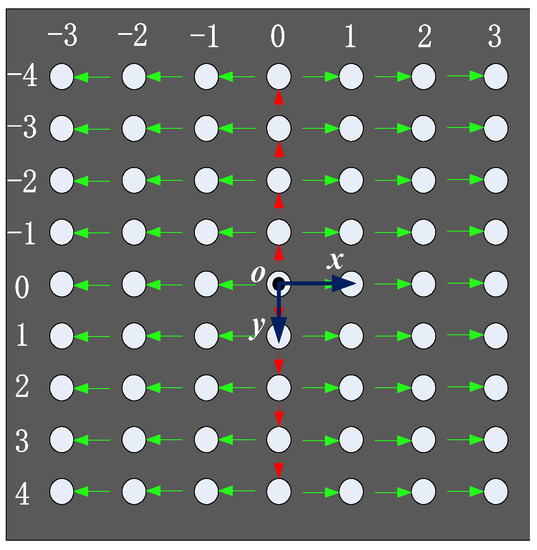

2. Coding Principle

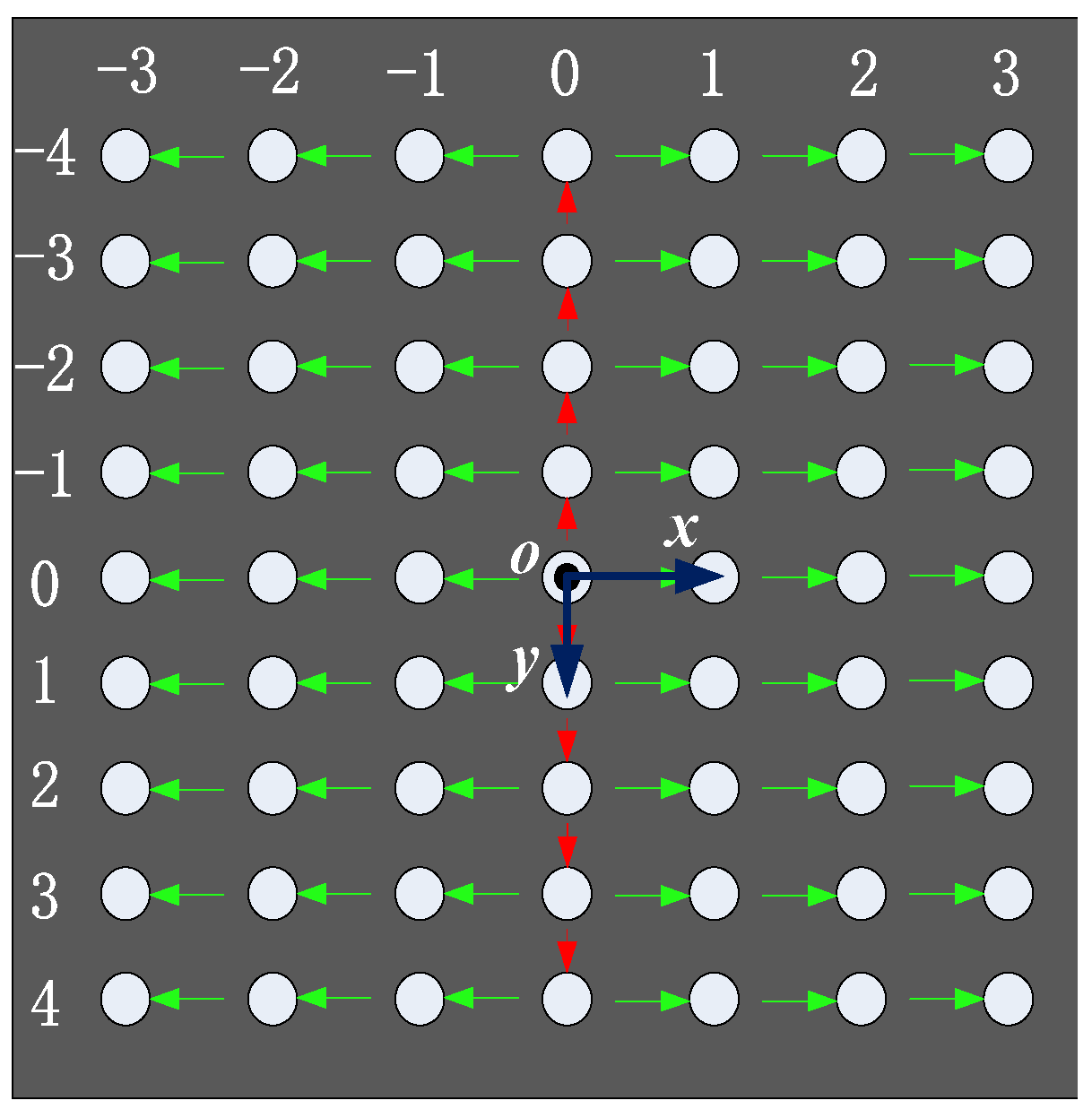

In order to illustrate the coding principle, a circular spot array of 9 rows × 7 columns is generated as an example shown in Figure 1. In the array, a special ring is used as reference center. According to the reference ring, a coordinate system can be built as shown in Figure 1, where the origin is the center of the reference ring, and the x and y axes are parallel to the image row and column, respectively. Then, the row and column indexes are used to code each spot in this coordinate system. In order to code each spot accurately, a coding process based on a topological relationship is defined in this paper. The coding process is divided into two steps, as shown in Figure 1. The first step is to code the spot located at origin and then to code the others along the positive and negative directions of y axis, respectively, which are denoted by red arrows. The second step is to code the spots in each row, and the coding sequences are denoted by green arrows.

Figure 1. Coding process of a spot array.
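The two-step coding order described above can be sketched for an ideal, complete array. This is only an illustration: the function name `coding_order`, the assumption that the reference ring sits exactly at the centre of an odd-sized array, and the sign convention of the indexes are assumptions made here, not details taken from the paper.

```python
def coding_order(n_rows, n_cols):
    """Return (row, col) indexes in a two-step order like the one in Figure 1.

    Assumes odd n_rows and n_cols with the reference ring at the centre,
    so indexes run from -half to +half in each direction (assumed convention).
    """
    half_r, half_c = n_rows // 2, n_cols // 2

    # Step 1: code the origin, then the spots along +y and -y
    # (the column of spots passing through the reference ring).
    spine = [(0, 0)]
    spine += [(r, 0) for r in range(1, half_r + 1)]
    spine += [(-r, 0) for r in range(1, half_r + 1)]

    # Step 2: starting from each spot of that column, code the rest of its row
    # in both directions.
    order = list(spine)
    for r, _ in spine:
        order += [(r, c) for c in range(1, half_c + 1)]
        order += [(r, -c) for c in range(1, half_c + 1)]
    return order


order = coding_order(9, 7)  # the 9 x 7 example array
```

Every spot receives exactly one index, and the reference ring is always coded first with (0, 0).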

2.1. Extracting Center Points of Spots

Before coding a spot array, the spots must first be detected and their centers extracted. The shape of a spot is generally a circle. For a circle, we can obtain the following:

$$C^{2} = 4\pi S \tag{1}$$

where $S$ is the area, and $C$ is the perimeter. According to Equation (1), roundness can be deduced as follows.

$$e = \frac{4\pi S}{C^{2}} \tag{2}$$

For an ideal circle, $e$ should be 1. Therefore, two roundness thresholds of $e_{\min}$ and $e_{\max}$ can be used to exclude some outliers:

$$e_{\min} \le e \le e_{\max} \tag{3}$$

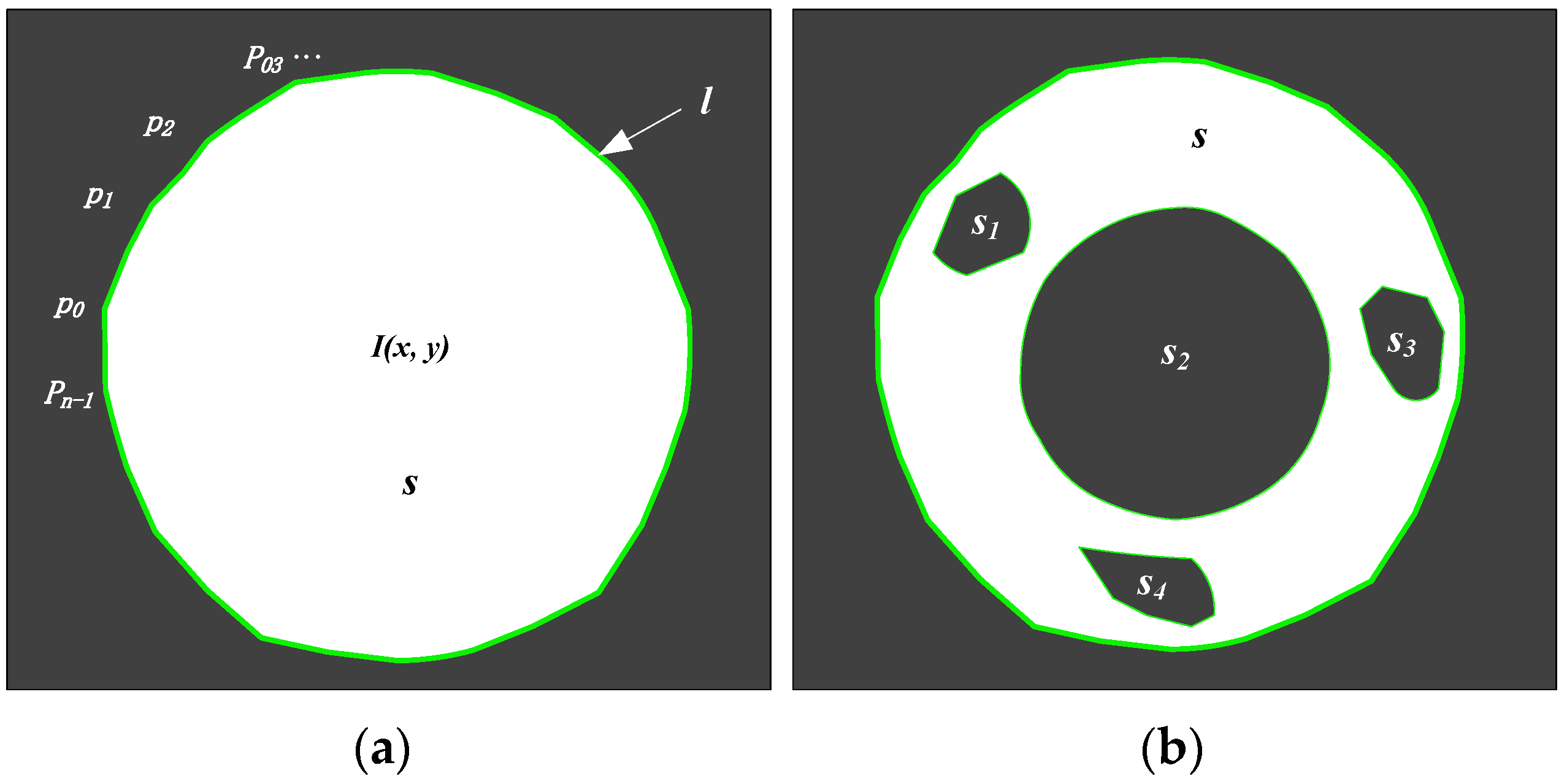

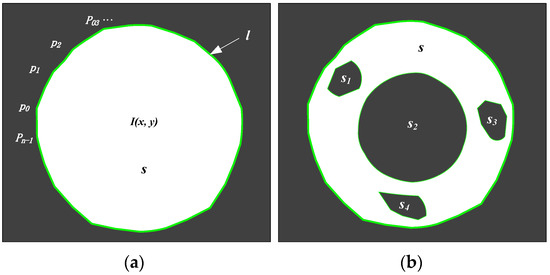

where $e_{\min}$ and $e_{\max}$ are the minimum and maximum roundness, respectively. In order to calculate the roundness of a spot, the area and the perimeter should be known according to Equation (2). For a circular spot in an image shown in Figure 2a, the area $S$ and the external contour perimeter $C$ can be obtained by using the following equations:

$$S = \sum_{x}\sum_{y} f(x, y) \tag{4}$$

$$C = \sum_{i=1}^{N} d(p_{i}, p_{i+1}) \tag{5}$$

where x and y are the pixel coordinates; $f(x, y)$ is the value after image binarization, which equals 1 inside the spot area and equals 0 outside the spot area; $N$ is the number of pixels on the external contour; $p_{i}$ depicts the ith pixel on the external contour, with $p_{N+1} = p_{1}$ closing the contour; and $d(p_{i}, p_{i+1})$ represents the distance between the two adjacent pixels $p_{i}$ and $p_{i+1}$ on the spot area contour. Additionally, if the area threshold is considered, the outliers can be further excluded by using the following inequality:

$$S_{\min} \le S \le S_{\max} \tag{6}$$

where $S_{\min}$ and $S_{\max}$ are the minimum and maximum area. In summary, if a spot fails inequality (3) or inequality (6), it can be considered as an outlier.
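The outlier test can be illustrated with a minimal sketch. It assumes the common roundness definition 4π·area/perimeter², which equals 1 for an ideal circle; the function names and all threshold values below are hypothetical placeholders, not values from the paper.

```python
import math


def roundness(area, perimeter):
    """Roundness 4*pi*S / C^2 (assumed definition); equals 1 for an ideal circle."""
    return 4.0 * math.pi * area / perimeter ** 2


def is_valid_spot(area, perimeter,
                  e_min=0.8, e_max=1.2,      # hypothetical roundness thresholds
                  s_min=50.0, s_max=5000.0):  # hypothetical area thresholds (pixels)
    """Keep a connected component only if both roundness and area are in range."""
    e = roundness(area, perimeter)
    return e_min <= e <= e_max and s_min <= area <= s_max


# An ideal circle of radius 10 passes; a 10 x 10 square (roundness pi/4) does not.
circle_ok = is_valid_spot(math.pi * 10 ** 2, 2 * math.pi * 10)
square_ok = is_valid_spot(100.0, 40.0)
```

In a real image the perimeter is summed over discrete contour pixels, so the measured roundness of a true circle deviates slightly from 1, which is why a tolerance band rather than an exact test is used.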

Figure 2. Connected components in an image: (a) a circle; (b) a ring.

From the above analysis, it is known that the reference ring plays a very important role in coding a spot array. However, it might not satisfy the inequalities (3) and (6). In order to avoid excluding the reference ring from the spot array, some improvements are made to the spot detection algorithm. Without loss of generality, a ring with some noise blobs is considered, as shown in Figure 2b. The area surrounded by the external contour can be calculated by:

$$S_{e} = S_{r} + \sum_{i} S_{i} \tag{7}$$

where $S_{e}$ is the area surrounded by the external contour; $S_{r}$ is the area of the ring’s effective connected component; and $S_{i}$ is the area surrounded by the ith internal contour. Furthermore, the roundness of the ring’s external contour can be expressed as:

$$e_{e} = \frac{4\pi S_{e}}{C_{e}^{2}} \tag{8}$$

where $C_{e}$ is the perimeter of the ring’s external contour. For the reference ring, $e_{e}$ and $S_{e}$ should satisfy the inequalities (3) and (6), respectively, because the ring’s external contour is the same as that of the other circles. Then, the ring should be distinguished from the other circles. A ring is in general composed of two concentric circles, a large one and a small one. The area ratio of the two circles for the reference ring is set to 0.6 when the spot-array pattern is designed. Therefore, we can detect the small circle to find the reference ring. The reference ring should have an internal circle satisfying the following expression:

where $S_{i}$ is the area surrounded by the ith internal contour and $e_{i}$ is the roundness of the ith internal contour. Therefore, if a spot has some internal contours and only one of them satisfies inequality (9), it can be taken as the reference ring.
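A rough sketch of the reference-ring test follows. The exact form of the internal-circle condition is not reproduced here, so the check below is an assumed stand-in: an internal contour qualifies if its roundness is within thresholds and its area divided by the area enclosed by the external contour is close to the designed ratio of 0.6. The function name, the tolerance `ratio_tol` and the roundness thresholds are hypothetical.

```python
import math


def is_reference_ring(outer_area, inner_contours,
                      ratio=0.6, ratio_tol=0.15,  # tolerance is an assumption
                      e_min=0.8, e_max=1.2):      # hypothetical roundness thresholds
    """Return True if exactly one internal contour looks like the ring's inner circle.

    outer_area     -- area enclosed by the external contour
    inner_contours -- list of (area, perimeter) pairs, one per internal contour
    """
    matches = 0
    for area, perimeter in inner_contours:
        if perimeter <= 0:
            continue
        e = 4.0 * math.pi * area / perimeter ** 2  # roundness of the internal contour
        if abs(area / outer_area - ratio) <= ratio_tol and e_min <= e <= e_max:
            matches += 1
    # Noise blobs produce extra internal contours, but only one may qualify.
    return matches == 1


outer = math.pi * 10 ** 2
inner_circle = (0.6 * outer, 2 * math.pi * math.sqrt(60.0))  # designed inner circle
noise_blob = (2.0, 5.0)                                       # small hole caused by noise
ring_found = is_reference_ring(outer, [inner_circle, noise_blob])
```

A plain circular spot has no qualifying internal contour and is therefore never mistaken for the ring under this test.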

After the detection of the spot array, the centers of the spots should be extracted. The method proposed in our previous work [24] is employed to extract the centers of the spots in this paper.

2.2. Coding Spots with Row and Column Indexes

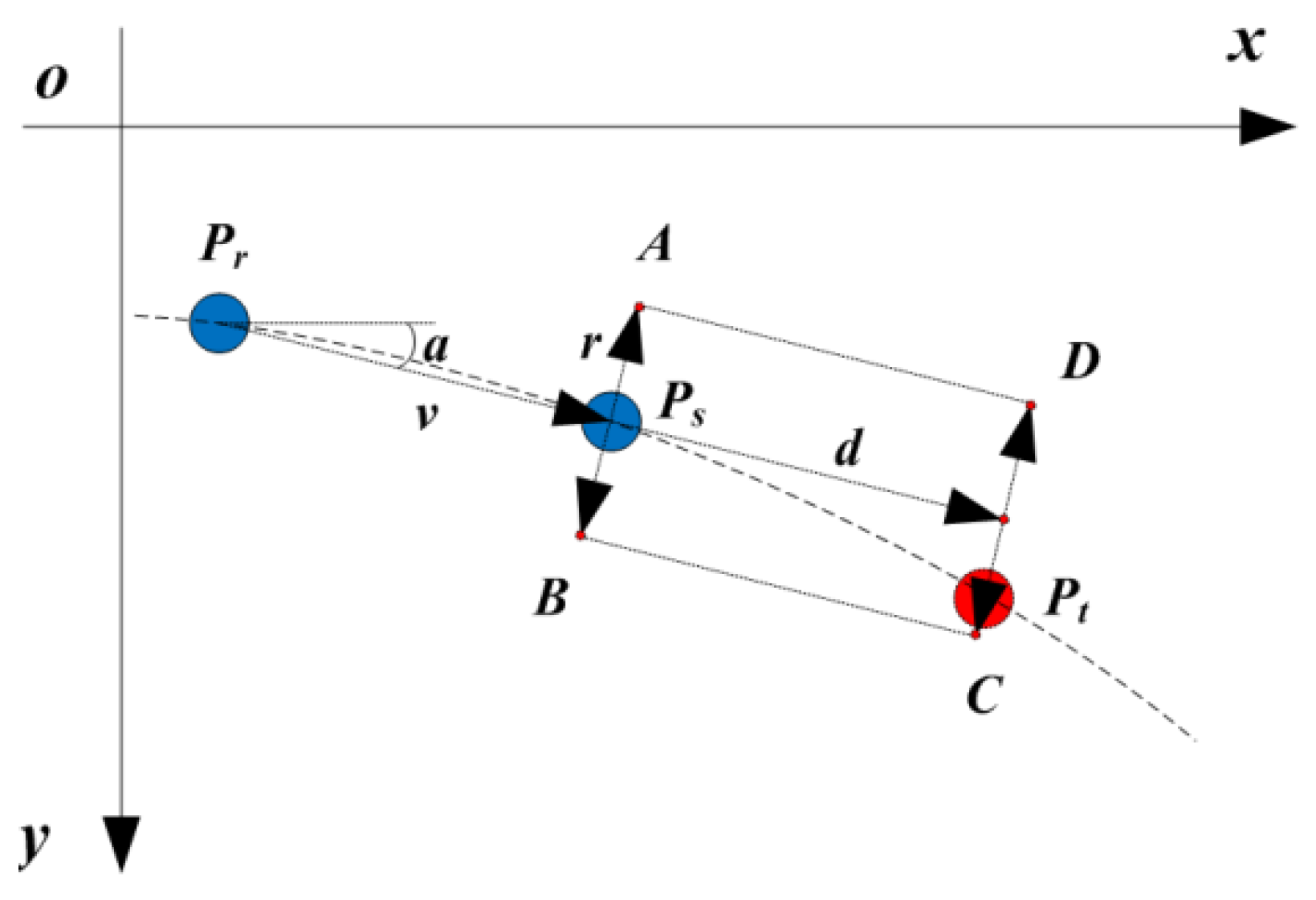

From Figure 1, it is known that the reference ring should first be coded with row and column indexes of (0, 0). Then, each spot can be coded one by one along a fixed search path. Therefore, for a coded spot in a search path, it is crucial to accurately find the next uncoded spot in the same search path. In this paper, a method that uses rectangular templates to search for spots along a search path is proposed. Generally, in the design pattern, the spots are arranged in straight lines parallel to the image rows or columns. In this case, rectangular templates can be extended along the straight line, which keeps the search process simple. However, the spots are arranged in curves when the pattern is projected onto a free-form surface. In this case, the extension direction of the rectangular templates should be adjusted to the tangents of the curves. To illustrate the proposed method in detail, the coding processes for spots arranged in a straight line and in a curve are described below.

2.2.1. Spots Arranged in a Straight Line

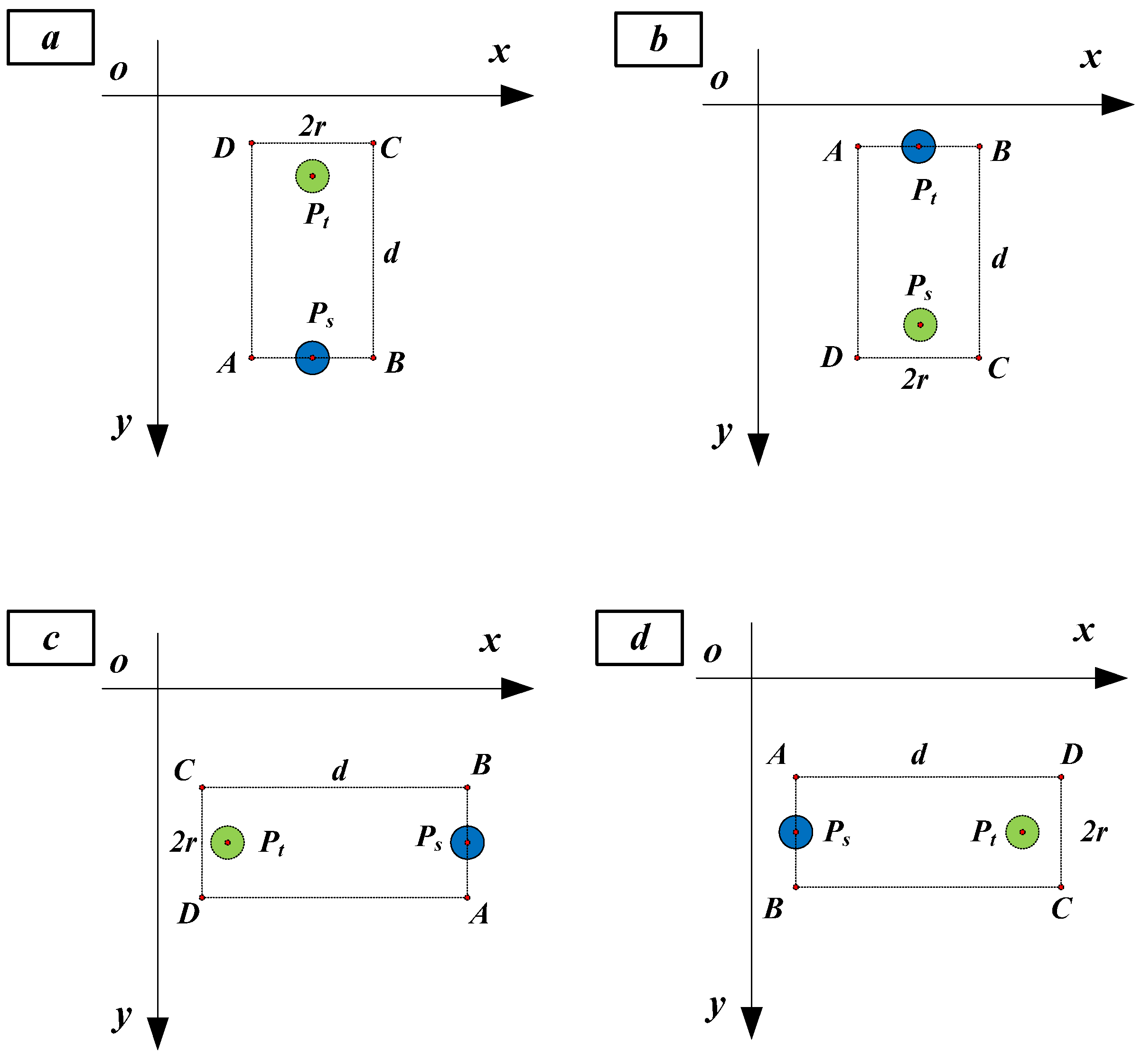

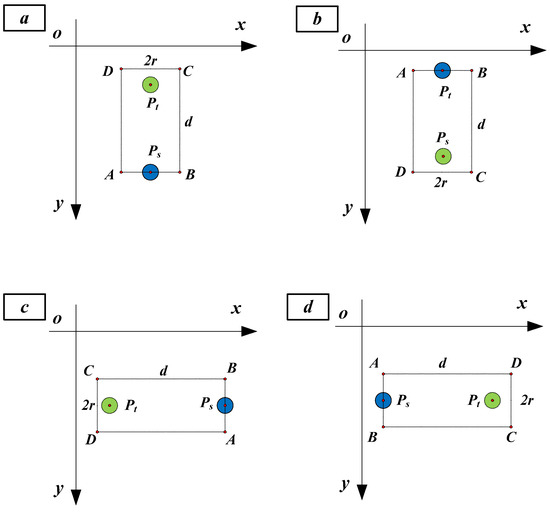

In a design pattern, for a given coded spot, there are four directions in which to search for an uncoded spot, as shown in Figure 3. The four search directions are up, down, left and right, which are illustrated in Figure 3a–d, respectively.

Figure 3. Four search directions: (a) up, (b) down, (c) left and (d) right.

Then, the four rectangle templates are generated as follows. First, the longer side of the rectangle is extended along the search direction. Second, the shorter side of the rectangle is perpendicular to the search direction, and one of the short sides is centered on the coded spot. Third, as the search direction is parallel to the image row or column, the coordinates of the four corners of the rectangle, denoted by A, B, C and D, can be easily determined, as shown in Table 1. Using the criteria in Table 1, it can be judged whether the uncoded spot lies within the rectangle ABCD. If it lies outside ABCD, the side lengths of the rectangle are enlarged until ABCD contains it. Given the row and column indexes of the coded spot and an uncoded spot within ABCD, the row and column indexes of the uncoded spot can then be coded, as shown in Table 1, according to the search direction and the number of intervals between the two spots.
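The axis-aligned template search can be sketched as follows. This is an illustration under stated assumptions: image y is taken to grow downward, the growth factor and retry limit are arbitrary, the nearest hit is returned when several spots fall inside the template, and the names `axis_aligned_template` and `find_in_template` are hypothetical.

```python
import math


def axis_aligned_template(start, direction, length, width):
    """Return (x_min, x_max, y_min, y_max) of a template whose short side is centred on start.

    Assumes image coordinates with y growing downward, so "up" means smaller y.
    """
    x, y = start
    half = width / 2.0
    if direction == "right":
        return (x, x + length, y - half, y + half)
    if direction == "left":
        return (x - length, x, y - half, y + half)
    if direction == "down":
        return (x - half, x + half, y, y + length)
    if direction == "up":
        return (x - half, x + half, y - length, y)
    raise ValueError("unknown direction: " + direction)


def find_in_template(start, direction, candidates, length, width,
                     grow=1.5, max_tries=5):
    """Enlarge the template until it contains a spot; return the nearest hit or None."""
    for _ in range(max_tries):
        x0, x1, y0, y1 = axis_aligned_template(start, direction, length, width)
        hits = [p for p in candidates
                if p != start and x0 <= p[0] <= x1 and y0 <= p[1] <= y1]
        if hits:
            return min(hits, key=lambda p: math.dist(p, start))
        length *= grow  # enlarge both sides and try again
        width *= grow
    return None


spots = [(100.0, 100.0), (130.0, 101.0), (160.0, 99.0), (100.0, 60.0)]
right_hit = find_in_template((100.0, 100.0), "right", spots, length=40, width=10)
```

Bounding the number of enlargements keeps the search from running across the whole image when the path has reached the edge of the array.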

Table 1. Coding spots arranged in a straight line.

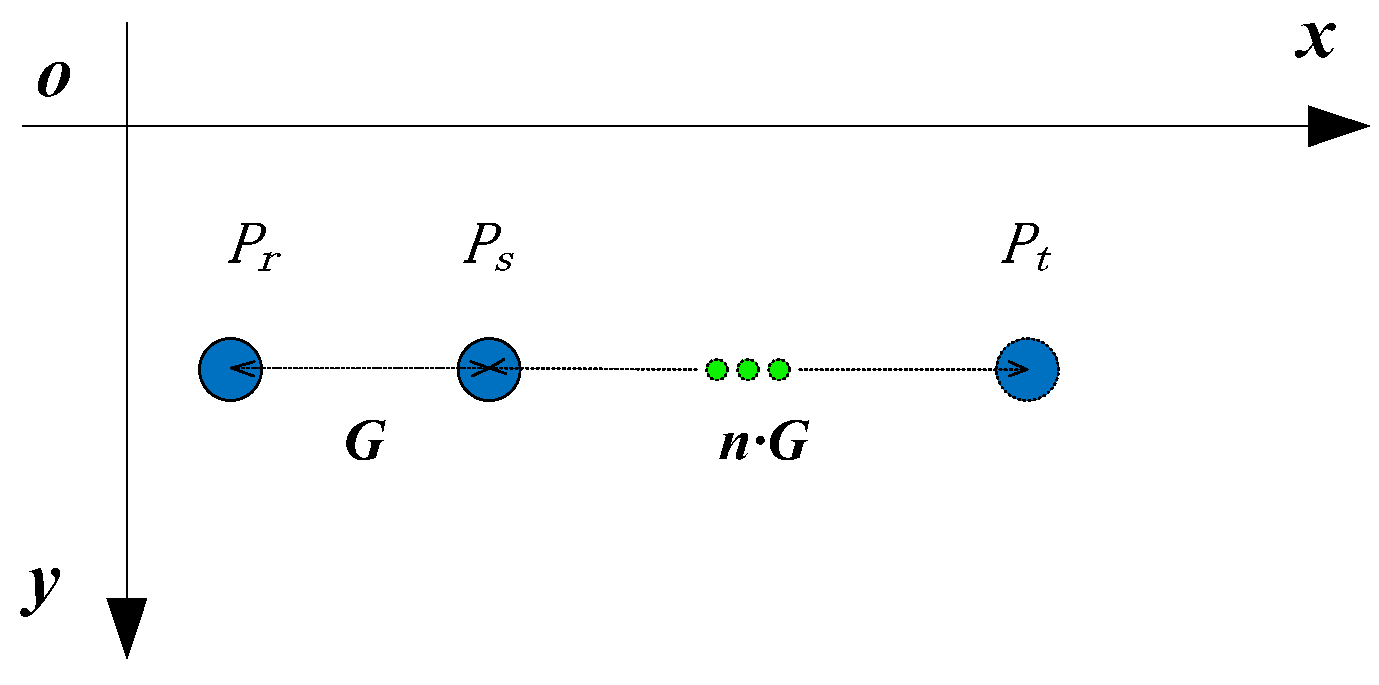

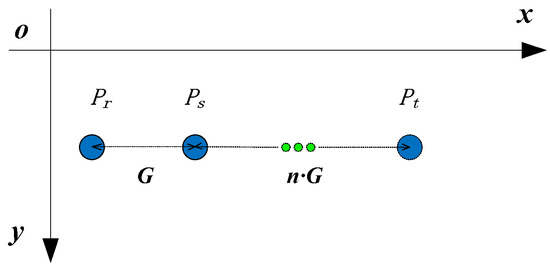

For a design pattern, the number of intervals between adjacent spots is one. In practice, however, disturbances such as ambient illumination and non-uniform reflectivity of the measurement surface mean that some spots in the captured image of the projected spot array cannot be detected. Therefore, in order to obtain accurate row and column indexes, the number of intervals needs to be calculated when spots are missing in a search path. To illustrate this issue, three spots arranged in a straight line from left to right are shown in Figure 4. Two of them are actually adjacent, while some spots are missing between the other neighboring pair. If the distance between the adjacent pair is called the unit distance, then the distance between the pair separated by missing spots should in theory be the unit distance multiplied by the number of intervals between them. In practice, as the unit distance might change in different areas of a spot array projected on a measured surface, it is calculated as follows. First, find the four neighboring spots of the reference ring (up, down, left and right) by the method shown in Figure 3. Second, calculate the distance between each of the four neighboring spots and the reference ring, compare the four distances, and choose the middle distance as the initial value of the unit distance. Third, update the unit distance with the distance between two locally adjacent spots in a search path.

Figure 4. Missing spots arranged in a straight line.

Suppose that the row and column indexes of , and are (, ), (, ) and (, ), respectively. For and , the following can be deduced.

For and , the following can be deduced.

Then, it can be observed that Equation (10) is in fact a special case of Equation (11) when the number of intervals is one. Therefore, for spots arranged in a straight line, the number of intervals between the coded and uncoded points and, in turn, the row and column indexes of the uncoded point can be determined by Equation (11), provided that the unit distance and the distance between the coded and uncoded points are obtained. Finally, for spots arranged in a straight line, the row and column indexes are coded as shown in Table 1.
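The interval count and index update for a straight search path might be sketched as below. Several choices here are assumptions rather than details from the paper: the "middle" of the four ring-neighbour distances is taken as the statistical median, the interval count is obtained by rounding the distance ratio, and the sign convention in `STEP` (row index growing downward, column index growing to the right) is hypothetical.

```python
import math
import statistics


def initial_unit_distance(ring, neighbours):
    """Median of the distances from the reference ring to its four neighbours (assumed)."""
    return statistics.median(math.dist(ring, p) for p in neighbours)


def interval_count(coded, found, unit):
    """Number of grid intervals between two spots, by rounding distance / unit distance."""
    return max(1, round(math.dist(coded, found) / unit))


# Assumed sign convention for (row, col) index changes per search direction.
STEP = {"right": (0, 1), "left": (0, -1), "up": (-1, 0), "down": (1, 0)}


def next_index(index, direction, n):
    """Index of the spot found n intervals away from `index` along `direction`."""
    dr, dc = STEP[direction]
    return (index[0] + n * dr, index[1] + n * dc)


unit = initial_unit_distance((0.0, 0.0),
                             [(0.0, 10.0), (0.0, -12.0), (11.0, 0.0), (-9.0, 0.0)])
n = interval_count((0.0, 0.0), (31.0, 0.0), unit)  # two spots are missing in between
idx = next_index((0, 0), "right", n)
```

With one interval the update reduces to the adjacent-spot case, which matches the observation that the adjacent case is a special case of the general one.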

2.2.2. Spots Arranged in a Curve

For spots arranged in a curve, the method proposed for coding spots arranged in a straight line is unsuitable, because a curved search path might have a different tangent at each spot, unlike a straight search path. Therefore, in order to find the uncoded spot accurately and quickly, the search direction should follow the tangent at each spot of the search path. Suppose three spots are arranged in a curve from left to right, as shown in Figure 5. Two of them are coded points with known row and column indexes, while the third is an uncoded point.

Figure 5. The search process of an uncoded spot in a curve.

To find , the following steps are performed. Firstly, is chosen as the search starting point. Secondly, the vector is calculated. Then, a normalized vector can be determined: , where is the angle between the and axis. Thirdly, take the vector as the search direction, and a search rectangle template ABCD with length of and width of can be generated as shown in Figure 5. If the pixel coordinates of is (, ), then the pixel coordinates of the four corners of the rectangle template can be deduced, respectively, as in the following.

Generally, the search direction used for generating the rectangle ABCD is not parallel to the x or y axis for a curved search path. Therefore, the criteria listed in Table 1 are not applicable for judging whether a point (x, y) is within ABCD in this case. To overcome this shortcoming, a more general criterion for a point lying within ABCD is given as follows.

Once the uncoded spot is found by the rectangle template, its row and column indexes can be determined from Table 1 according to the search direction.
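The oriented template and a general point-in-rectangle test can be sketched as follows. This is one possible formulation, not necessarily the criterion used in the paper: the point is projected onto the unit search direction and onto its normal, and both projections are compared with the template size. The corner ordering A, B, C, D and all function names are assumptions.

```python
import math


def _unit_direction(p_prev, p_start):
    """Unit vector (cos θ, sin θ) pointing from the previous coded spot to the start spot."""
    dx, dy = p_start[0] - p_prev[0], p_start[1] - p_prev[1]
    norm = math.hypot(dx, dy)
    if norm == 0:
        raise ValueError("the two coded spots coincide")
    return dx / norm, dy / norm


def oriented_template(p_prev, p_start, length, width):
    """Corners A, B, C, D of a template extending from p_start along the local tangent."""
    ux, uy = _unit_direction(p_prev, p_start)
    nx, ny = -uy, ux  # unit normal to the search direction
    h = width / 2.0
    x, y = p_start
    a = (x + h * nx, y + h * ny)
    b = (x - h * nx, y - h * ny)
    c = (b[0] + length * ux, b[1] + length * uy)
    d = (a[0] + length * ux, a[1] + length * uy)
    return a, b, c, d


def inside_template(q, p_prev, p_start, length, width):
    """True if q lies inside the oriented template (projection test)."""
    ux, uy = _unit_direction(p_prev, p_start)
    qx, qy = q[0] - p_start[0], q[1] - p_start[1]
    along = qx * ux + qy * uy     # distance along the search direction
    across = -qx * uy + qy * ux   # signed offset perpendicular to it
    return 0.0 <= along <= length and abs(across) <= width / 2.0


hit = inside_template((20.0, 21.0), (0.0, 0.0), (10.0, 10.0), length=20, width=6)
```

The same test also covers the four axis-aligned directions, since those are simply the cases where the unit direction is parallel to an image axis.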

2.2.3. Image Coordinates Reconstruction of Missing Spots

For missing spots, the image coordinates should be reconstructed before the spots are coded with row and column indexes. As the true image coordinates of missing spots generally cannot be detected in a captured image, estimation methods for missing spots arranged in a straight line and in a curve are proposed in this paper. For missing spots arranged in a straight line, the case shown in Figure 4 is used to illustrate the estimation method. The number of missing spots between the two detected spots is one less than the number of intervals between them. Their image coordinates can be calculated by linear interpolation from the image coordinates and the row and column indexes of the two detected spots, and the image coordinates of the ith missing spot are reconstructed as follows.

The row and column indexes of can be determined by the following.

From the above, it is known that the search direction is right in Figure 4. For the other three search directions, we can still use Equation (17) to calculate the image coordinates of missing spots. However, Equation (18) is only suitable for the right search direction and cannot be used to calculate the row and column indexes of missing spots for the other three directions. In fact, for the ith missing spot, we can determine its row and column indexes according to Table 1 if the search direction is given.
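Linear interpolation of missing spots on a straight path can be sketched as below. The sketch assumes the missing spots are evenly spaced between the two detected spots; the function names and the convention that the column index grows to the right are assumptions for illustration.

```python
def interpolate_missing_linear(p1, p2, n_missing):
    """Image coordinates of n_missing evenly spaced spots between p1 and p2."""
    steps = n_missing + 1  # number of intervals between the two detected spots
    return [(p1[0] + i * (p2[0] - p1[0]) / steps,
             p1[1] + i * (p2[1] - p1[1]) / steps)
            for i in range(1, steps)]


def missing_indexes_right(index1, n_missing):
    """Row and column indexes of the missing spots for a rightward search (assumed convention)."""
    row, col = index1
    return [(row, col + i) for i in range(1, n_missing + 1)]


coords = interpolate_missing_linear((0.0, 0.0), (30.0, 0.0), 2)
indexes = missing_indexes_right((0, 1), 2)
```

For the other three search directions the coordinates are interpolated in the same way and only the index update changes.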

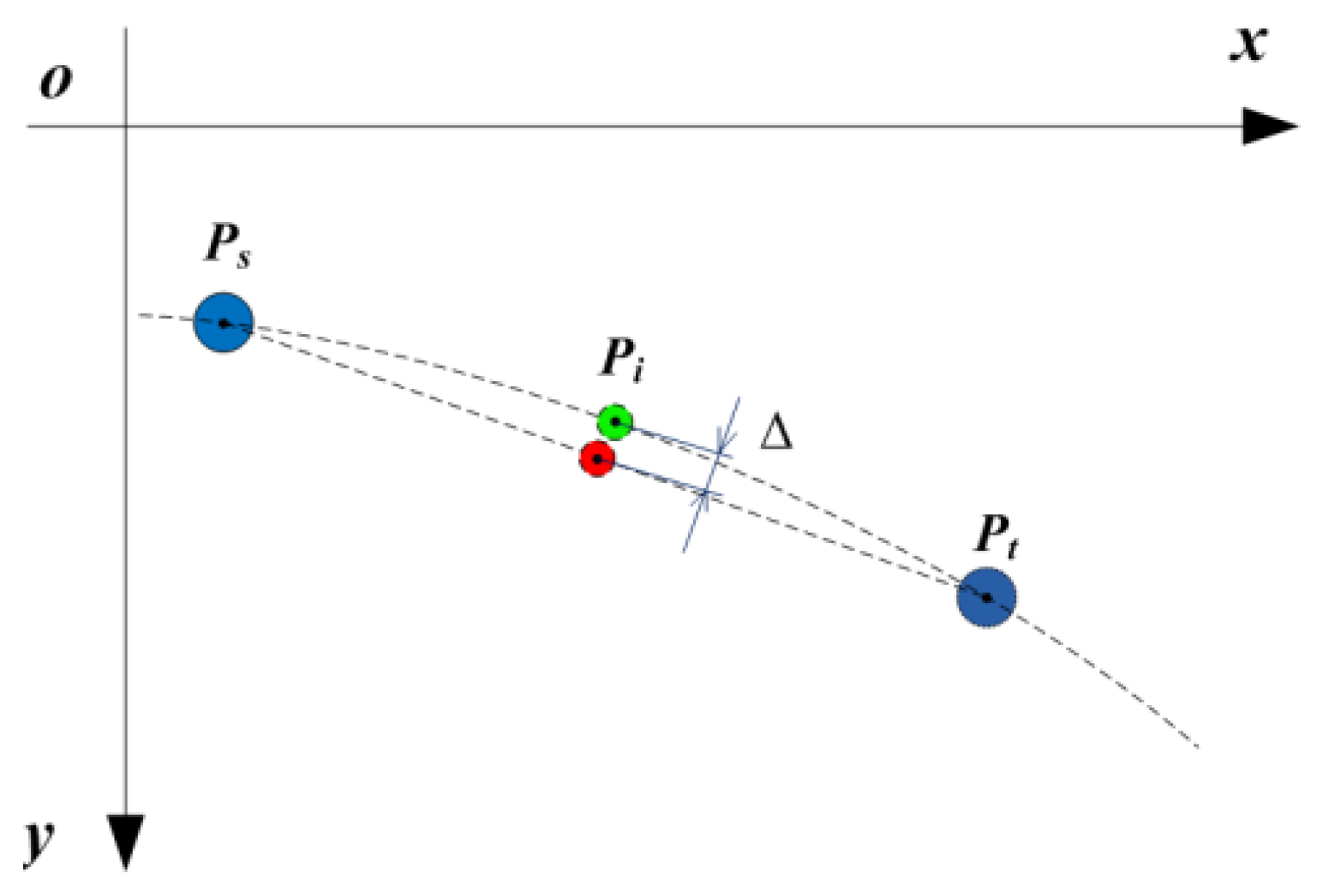

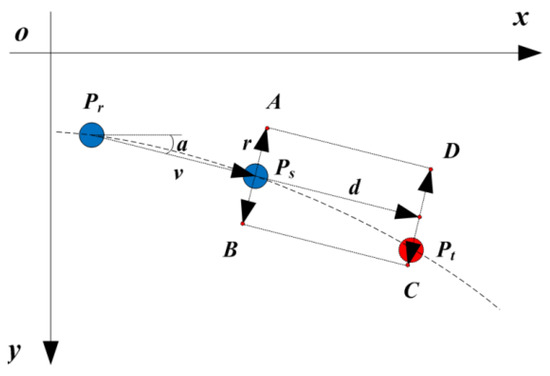

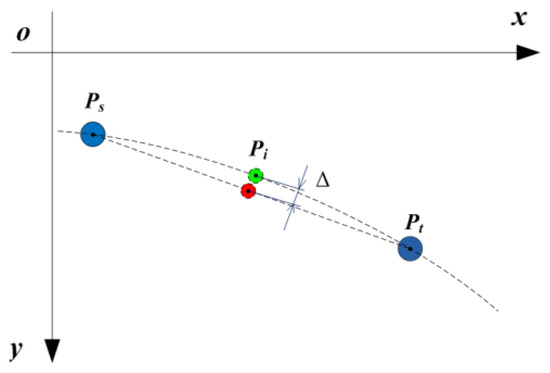

Compared with the straight-line case, the case in which missing spots lie on a curve is more general. As the spots are not arranged in a straight line, linear interpolation is not suitable for calculating the image coordinates of these missing spots, and it can result in a calculation error, as shown in Figure 6. In Figure 6, two coded spots with known row and column indexes bracket a missing spot. The green spot marks the theoretical image coordinates of the missing spot, and the red spot marks the position calculated by the linear interpolation method. It is clearly observed that there is a deviation between these two spots.

Figure 6. The calculation error of missing spots in a curve by linear interpolation.

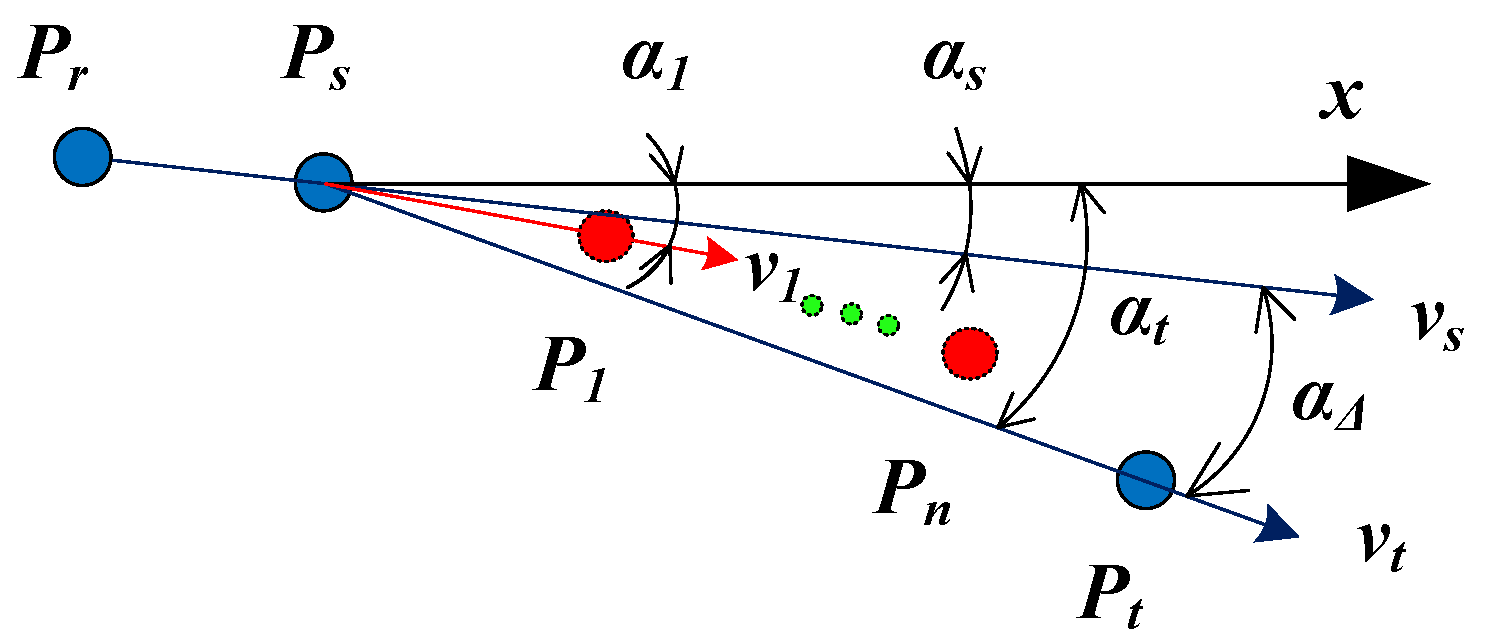

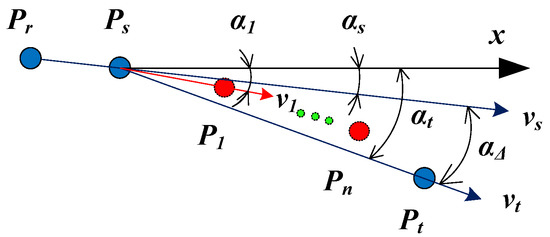

In order to reduce the calculation error of the linear interpolation method, a new interpolation method for missing spots in a curve is presented. The method is more accurate than the linear interpolation method. Moreover, it is efficient and simple to execute. Suppose that several spots are missing between two coded spots, as shown in Figure 7.

Figure 7. The interpolation of missing spots in a curve.

In Figure 7, ; ; is the angle between and axes; is the angle between and axes; and is the angle between and . First of all, the missing spot is interpolated as an example to explain the interpolation method. If the pixel coordinates of are (, ), then can be obtained. Supposing denotes the angle between and axes, we can approximately calculate it by using the following equations.

Moreover, if the ambiguity of arccosine in the range of (0, 2π) is considered, the angles between the two vectors and the axis can be calculated as follows.

Equations (21) and (22) determine these two angles, and the angle between the two vectors then follows from Equation (20). However, considering the physical meaning of the angle between two vectors, it should lie in the range (−π, π). By employing the period of 2π, Equation (20) can be corrected as follows.

Therefore, can be calculated according to Equations (19) and (21)–(23), and then the pixel coordinates of can be calculated according to the following.

Then, the reconstructed spot can be used as a new starting point, and the pixel coordinates of the next missing spot can be obtained by repeating the above process. The process is repeated until the last missing spot is determined.
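Two angle-handling steps mentioned in this derivation, resolving the arccosine ambiguity and wrapping an angle difference back into (−π, π], can be sketched as follows. This covers only those two steps, not the full interpolation; the function names are hypothetical, and the angle is assumed to be measured from the x axis.

```python
import math


def direction_angle(vx, vy):
    """Angle of (vx, vy) from the x axis in [0, 2π), computed via arccosine.

    arccos alone returns values in [0, π]; the sign of vy resolves the ambiguity.
    """
    norm = math.hypot(vx, vy)
    theta = math.acos(max(-1.0, min(1.0, vx / norm)))  # clamp against rounding error
    return theta if vy >= 0 else (2.0 * math.pi - theta) % (2.0 * math.pi)


def wrap_to_pi(delta):
    """Map an angle difference into (-π, π] using the 2π period."""
    while delta > math.pi:
        delta -= 2.0 * math.pi
    while delta <= -math.pi:
        delta += 2.0 * math.pi
    return delta


# Two nearly parallel vectors on either side of the x axis: the raw difference of
# their axis angles is close to 2π, while the wrapped difference is small.
raw = direction_angle(1.0, -0.1) - direction_angle(1.0, 0.1)
turn = wrap_to_pi(raw)
```

Without the wrapping step, a small change of direction across the positive x axis would be mistaken for an almost full rotation.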

3. Experiments

In order to verify the performance of the proposed coding method, computer-generated spot-array patterns are tested first. Then, the proposed coding method is used for stereo matching, and two objects with continuous surfaces are measured by a purpose-built active stereovision system. The details of the experiments are presented as follows.

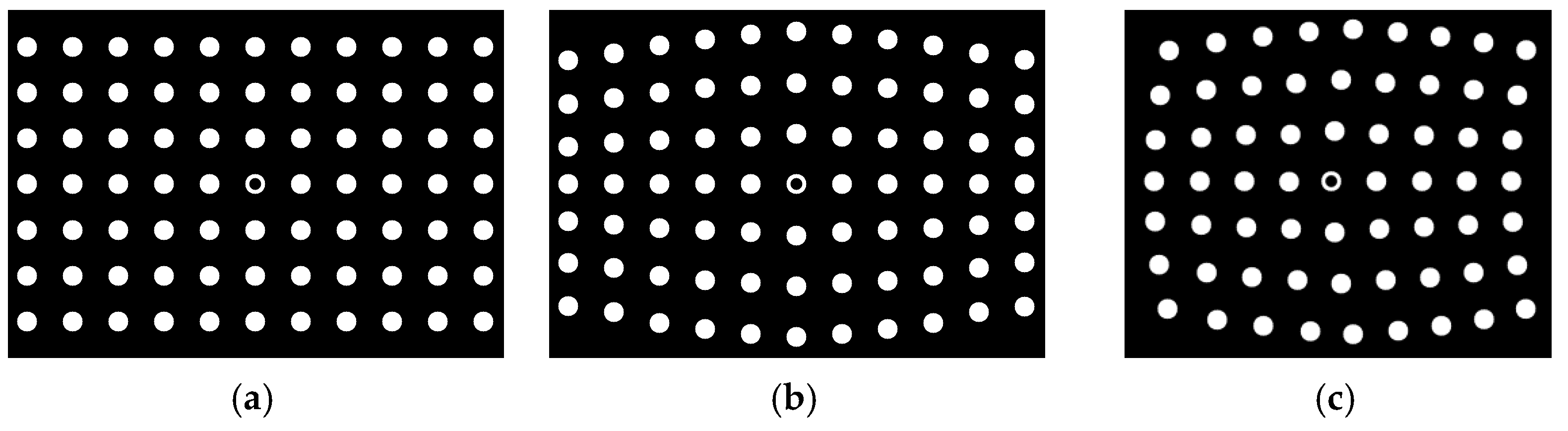

3.1. Coding Simulations

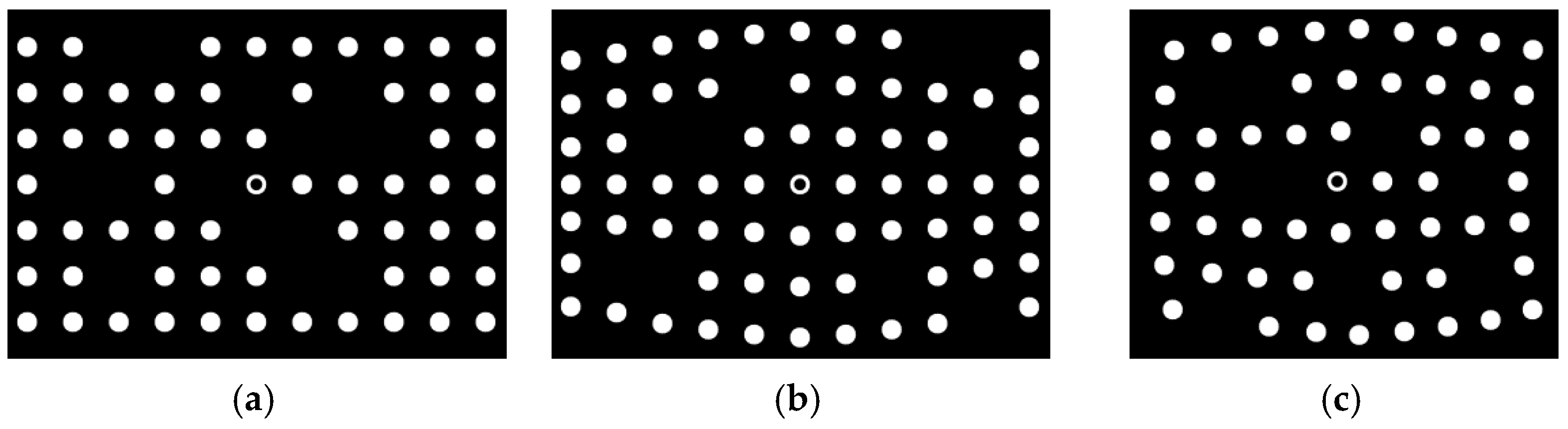

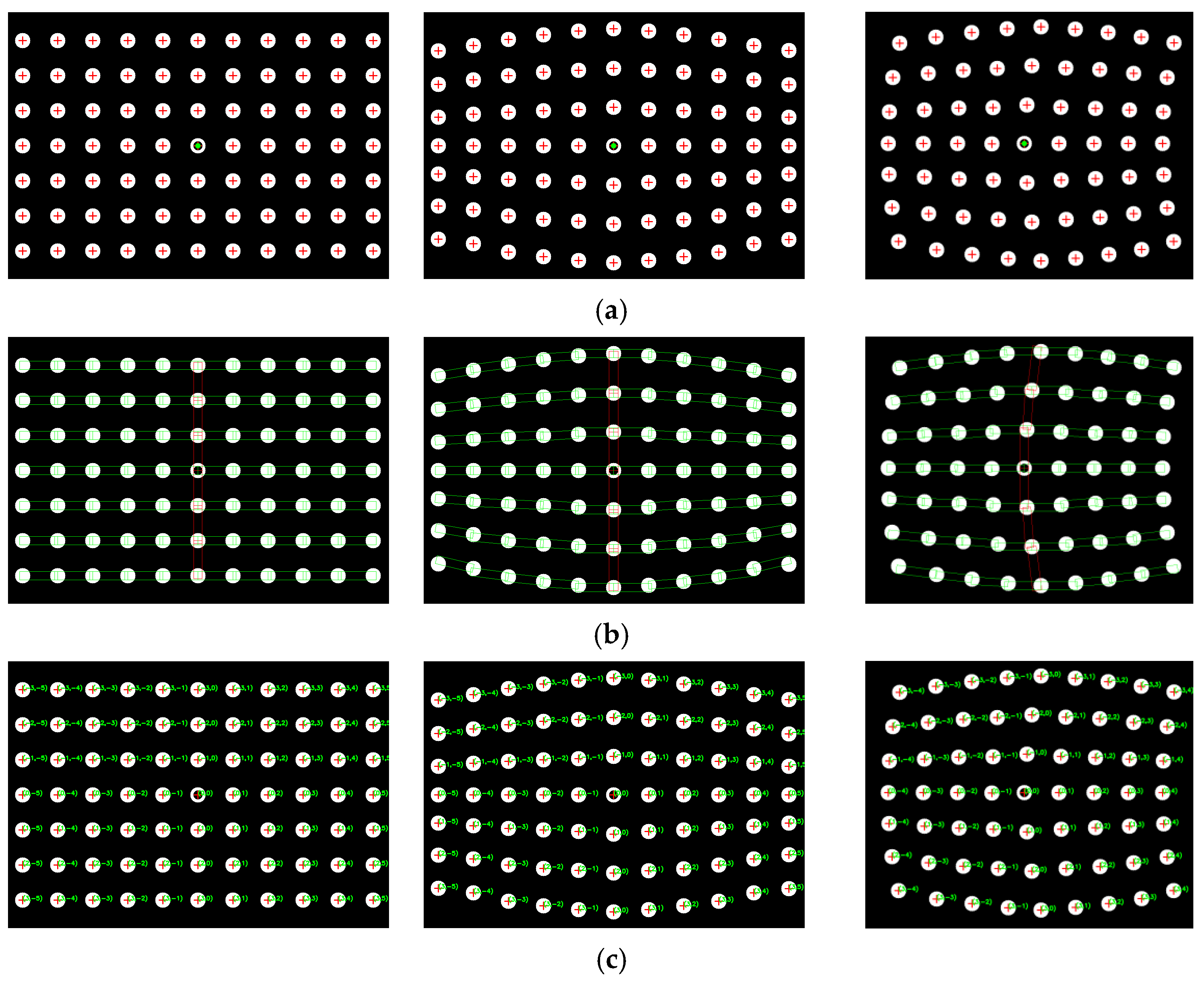

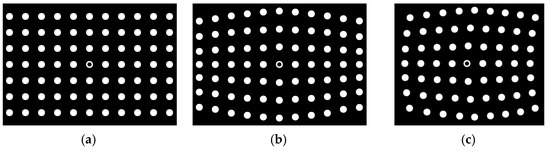

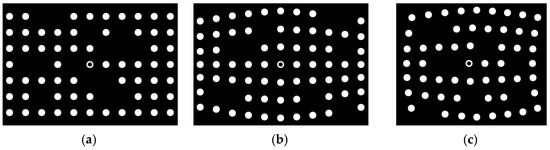

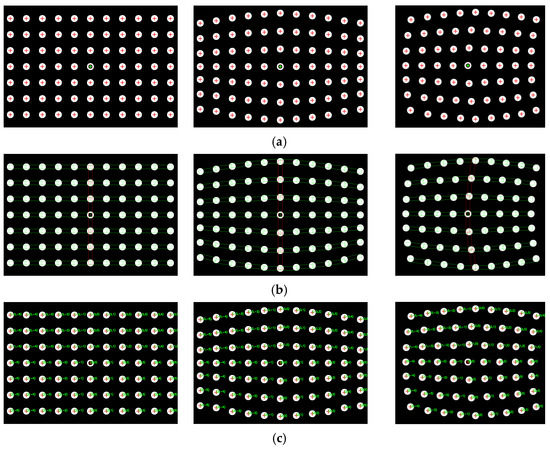

Firstly, two sets of spot-array patterns are generated, as shown in Figure 8 and Figure 9. Compared with Figure 8, the spot-array patterns in Figure 9 are not complete, and there are some missing spots in these patterns. All of these patterns are coded by the algorithm, and the results are shown in Figure 10 and Figure 11, respectively.

Figure 8. Complete spot-array patterns: (a) non-bend arrangement, (b) row-bend arrangement and (c) bidirection-bend arrangement.

Figure 9. Incomplete spot-array patterns: (a) non-bend arrangement, (b) row-bend arrangement and (c) bidirection-bend arrangement.

Figure 10. The coding results of complete spot-array patterns: (a) centers detection, (b) the display of rectangle templates and (c) the display of coding indexes.

Figure 11. The coding results of incomplete spot-array patterns: (a) the display of rectangle templates and (b) the display of coding indexes.

Figure 10a shows that the proposed method can extract the centers of all spots. The reference ring, whose center is marked by a small green spot, is successfully distinguished from the circular spots. Figure 10b demonstrates that the rectangle templates can accurately find spots along both straight and curved search paths. Finally, the spots in each pattern are exactly coded with row and column indexes, as shown in Figure 10c. Furthermore, when some spots are missing in the search path, the proposed algorithm can not only reconstruct the missing spots but also code them correctly; the reconstructed spots are denoted by red spots in Figure 11a,b.

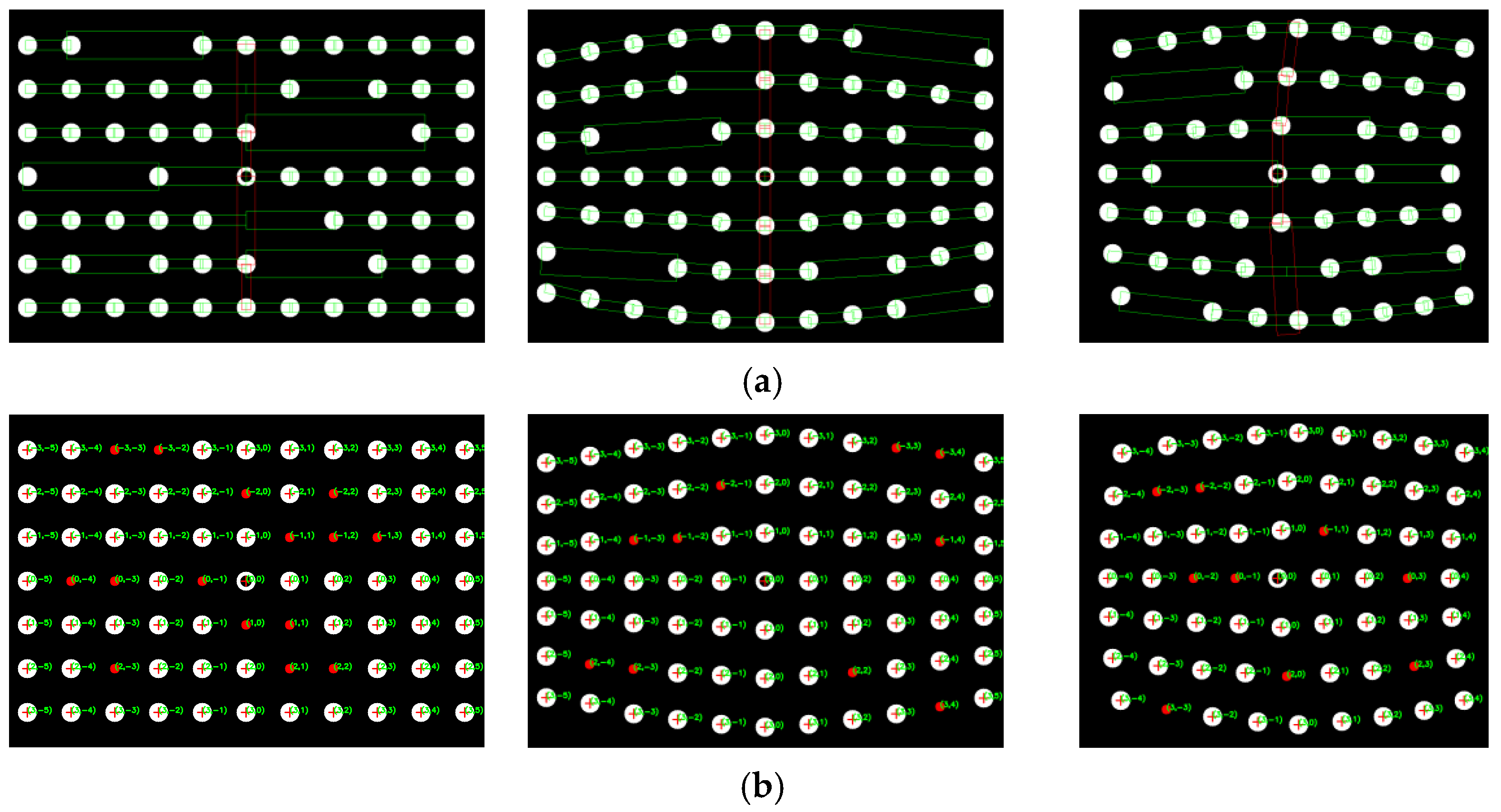

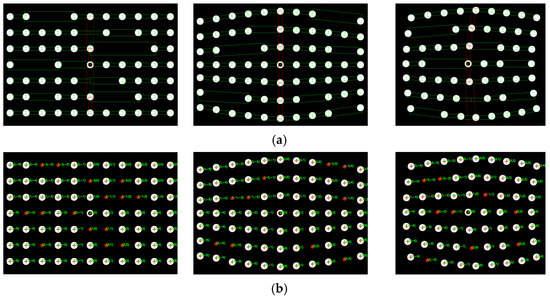

3.2. 3D Measurements

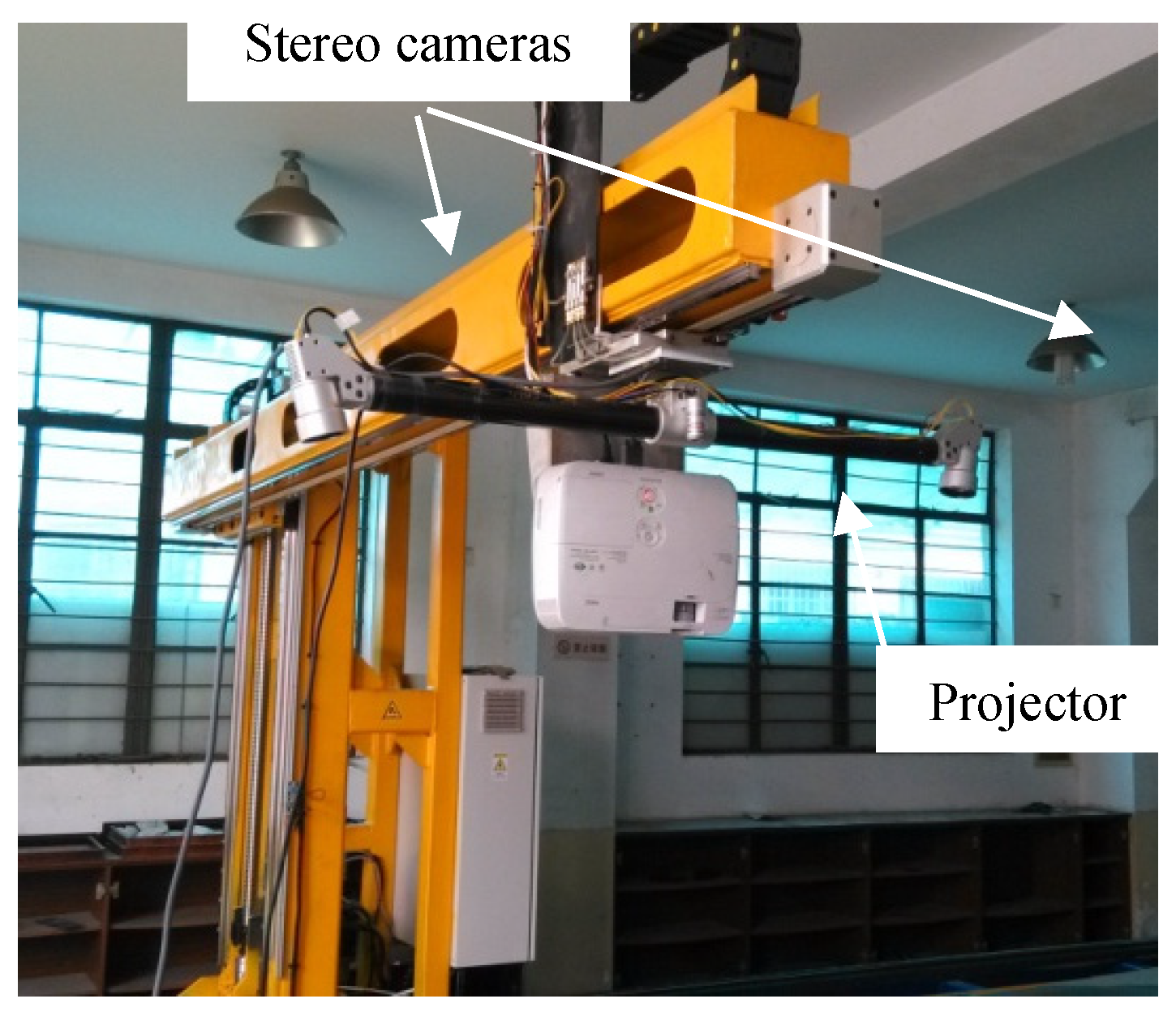

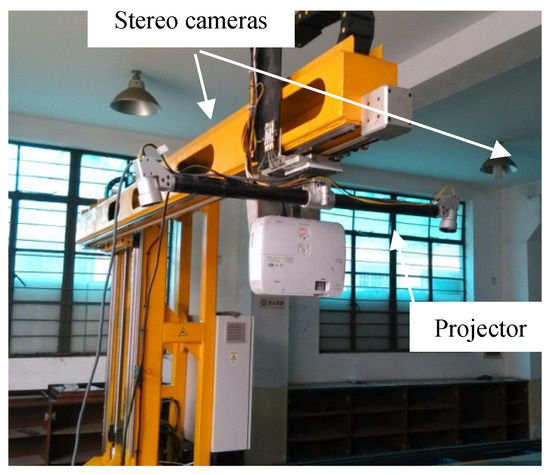

In order to verify the proposed topology-based stereo matching method for real 3D measurement, an experimental environment has been constructed, as shown in Figure 12. The constructed system includes two identical cameras and one projector. The camera resolution is 2048 × 1080 pixels, and the lens focal length is 8 mm. The projector resolution is 1024 × 768 pixels. The baseline length is about 1000 mm, and the working distance is about 1500 mm. The intrinsic and structure parameters of these two cameras are calibrated by the method proposed in Reference [25]. The projector does not need to be calibrated, because it is not involved in the triangulation process. The projector projects spot-array patterns onto the target surface, and the cameras capture pairs of images. Based on the proposed matching method, the corresponding spot centers can be established, which can be further 3D triangulated to reconstruct the surface shape.
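Once the spots in both images carry row and column indexes, stereo matching reduces to pairing spots with equal indexes. The following minimal sketch shows that pairing step only; the dictionary layout and the function name `match_by_index` are assumptions, and triangulation of the matched centers is left to the calibrated camera model.

```python
def match_by_index(left, right):
    """Pair spot centres that share the same (row, col) index in both images.

    left, right -- dicts mapping (row, col) -> (x, y) spot centre in each image
    Returns a list of (index, left_xy, right_xy), sorted by index.
    """
    common = sorted(left.keys() & right.keys())  # indexes decoded in both images
    return [(idx, left[idx], right[idx]) for idx in common]


left_spots = {(0, 0): (512.0, 400.0), (0, 1): (540.5, 401.2), (1, 0): (511.3, 428.9)}
right_spots = {(0, 0): (498.1, 402.3), (0, 1): (527.0, 403.0)}
pairs = match_by_index(left_spots, right_spots)
```

Spots decoded in only one image are simply left unmatched, so a missed detection in one camera does not corrupt the other correspondences.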

Figure 12. The experiment system of active binocular stereovision.

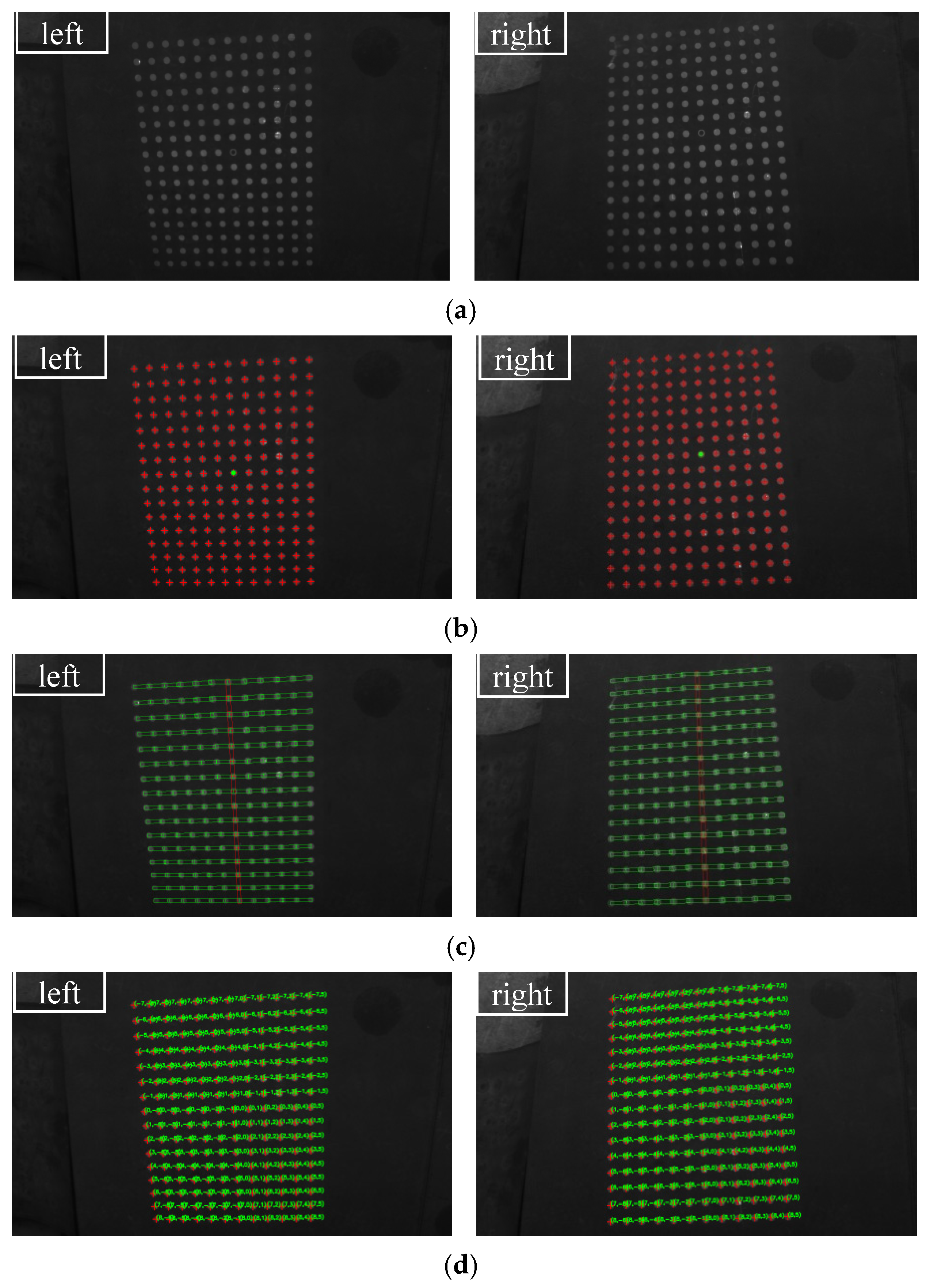

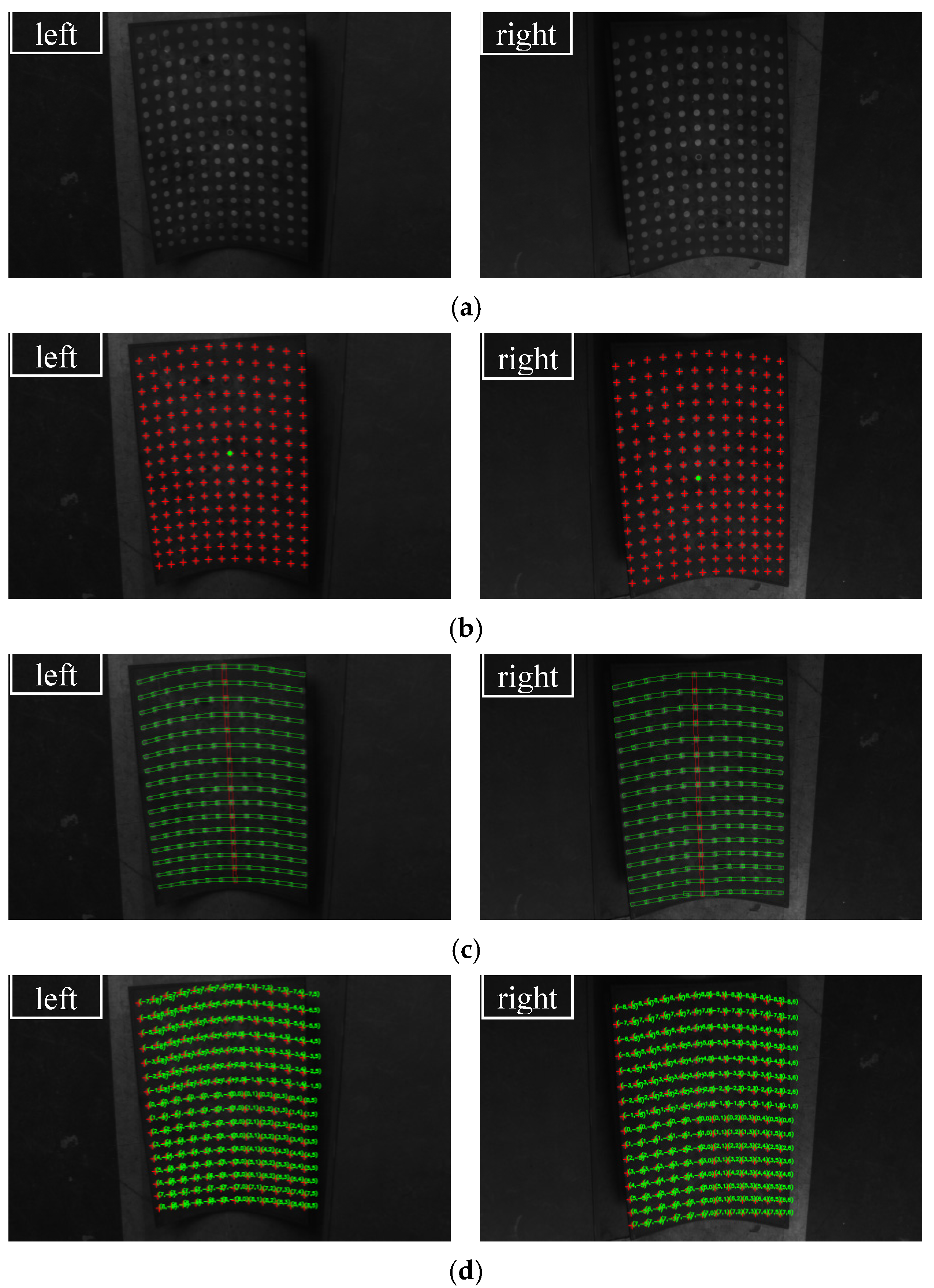

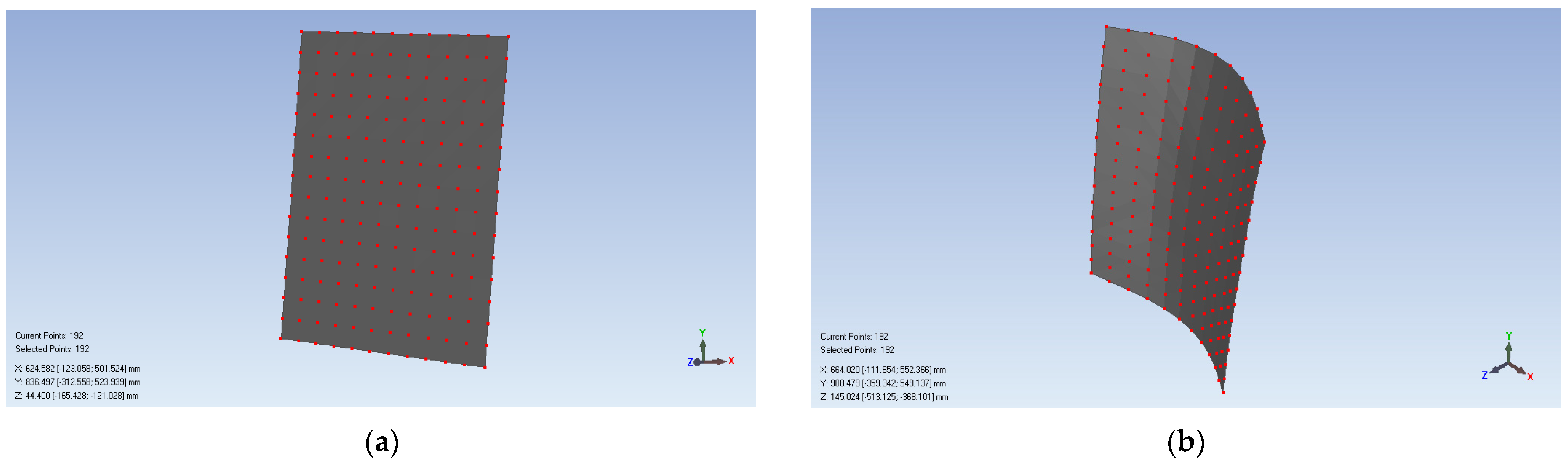

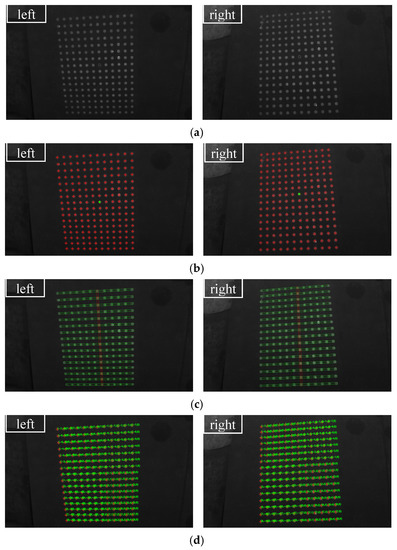

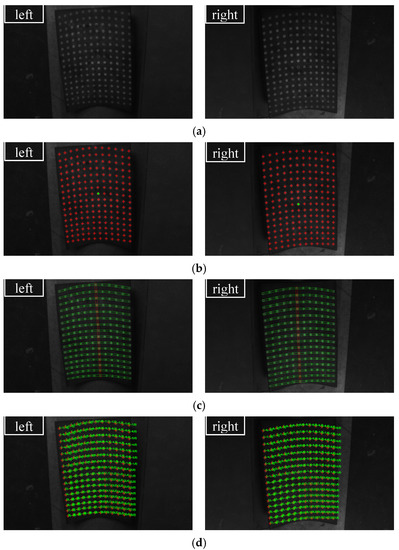

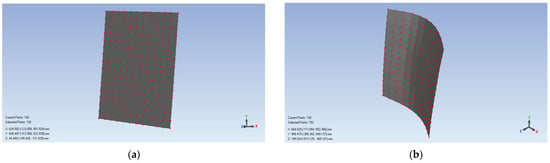

We use this system to measure a flat steel plate and a saddle-shaped hull plate to verify the proposed topology-based stereo matching method. The flat steel plate has a size of 650 mm × 700 mm, and the saddle-shaped hull plate is a real part from a shipbuilding factory. The measurement processes and results are shown in Figure 13 and Figure 14, respectively. Figure 13a and Figure 14a show the original captured scenes where the spot-array pattern is projected onto the plates. Figure 13b and Figure 14b show that the proposed method can successfully distinguish the reference ring spot from the circular spots and extract the circular spot centers. Figure 13c and Figure 14c demonstrate that the rectangle templates can accurately find spots along both straight and curved search paths. Then, these spots are exactly coded with row and column indexes, as shown in Figure 13d and Figure 14d. Finally, with the obtained coded indexes, the corresponding spots in the left and right camera images can be matched, and the matched spot centers can then be reconstructed in 3D. The 3D reconstruction results of the flat steel plate and the saddle-shaped hull plate are provided in Figure 15.

Figure 13. The coding process of the flat steel plate captured in the left and right cameras: (a) projection of a spot array on the surface, (b) centers detection, (c) rectangle templates display and (d) coding indexes display.

Figure 14. The coding process of the saddle-shaped plate captured in the left and right cameras: (a) projection of a spot array on the surface, (b) centers detection, (c) rectangle templates display and (d) coding indexes display.

Figure 15. The 3D reconstruction results: (a) the flat steel plate and (b) the saddle-shaped hull plate.

4. Conclusions

In order to avoid projector calibration and to improve measurement efficiency, a topology-based method of active stereo matching using a single spot-array pattern is proposed in this paper. The spot-array pattern is designed with a reference ring spot. Each spot in the pattern can be exactly and uniquely coded with row and column indexes according to the topological search path. Coding of spots arranged in both a straight line and a curve is studied, and the issue of missing spots in a search path is also analyzed. To solve these problems effectively, a method using rectangle templates to find uncoded spots is proposed. Moreover, an interpolation method that can rebuild missing spots is developed. Compared with our previous work [23], the proposed method does not need to project extra Gray code patterns, which makes the measurement much faster and suitable for measuring a formed hull plate even in a workshop environment that normally has vibrations. Finally, computer simulations and tests on real data show that the proposed method performs well. In addition to the shipbuilding industry, the proposed topology-based stereo matching method for one-shot 3D measurement may contribute to real-time measurement applications in the automotive industry or other fields.

Author Contributions

J.M. and X.C. performed the experiments and wrote the manuscript, X.Y. performed the data analyses, Z.W. wrote the initial report, and J.X. contributed to the conception of this study. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (5217050991), the Defense Industrial Technology Development Program (JCKY2020203B039), the Science and Technology Commission of Shanghai Municipality (Project No. 19511106302, 21511102602), and the High-Tech Ship Scientific Research Project of MIIT (MC-201906-Z01).

Acknowledgments

The authors would like to thank Honghui Zhang for his technical support in this research.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wang, Z. Review of Real-time Three-dimensional Shape Measurement Techniques. Measurement 2020, 156, 107624.

- Salvi, J.; Fernandez, S.; Pribanic, T.; Llado, X. A state of the art in structured light patterns for surface profilometry. Pattern Recognit. 2010, 43, 2666–2680.

- Zhang, Z. Review of single-shot 3D shape measurement by phase calculation-based fringe projection techniques. Opt. Lasers Eng. 2012, 50, 1097–1106.

- Geng, J. Structured-light 3D surface imaging: A tutorial. Adv. Opt. Photon. 2011, 3, 128–160.

- Yang, L.; Liu, Y.; Peng, J. Advances techniques of the structured light sensing in intelligent welding robots: A review. Int. J. Adv. Manuf. Technol. 2020, 110, 1027–1046.

- Xu, J.; Xi, N.; Zhang, C.; Shi, Q.A.; Gregory, J. Real-time 3D shape inspection system of automotive parts based on structured light pattern. Opt. Laser Technol. 2011, 43, 1–8.

- Zhang, X.; Li, Y.F.; Zhu, L.M. Discontinuity-preserving decoding of one-shot shape acquisition using regularized color. Opt. Lasers Eng. 2012, 50, 1416–1422.

- Sagawa, R.; Kawasaki, H.; Kiyota, S.; Furukawa, R. Dense one-shot 3D reconstruction by detecting continuous regions with parallel line projection. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1911–1918.

- Li, Q.; Li, F.; Shi, G.M.; Qi, F.; Shi, Y.X.; Gao, S. Dense Depth Acquisition via One-shot Stripe Structured Light. In Proceedings of the 2013 IEEE International Conference on Visual Communications and Image Processing (IEEE VCIP 2013), Kuching, Malaysia, 17–20 November 2013.

- García-Isáis, C.; Ochoa, N.A. One shot profilometry using a composite fringe pattern. Opt. Lasers Eng. 2014, 53, 25–30.

- Lohry, W.; Chen, V.; Zhang, S. Absolute three-dimensional shape measurement using coded fringe patterns without phase unwrapping or projector calibration. Opt. Express 2014, 22, 1287–1301.

- Sun, J.; Zhang, G.; Wei, Z.; Zhou, F. Large 3D free surface measurement using a mobile coded light-based stereo vision system. Sensors Actuators A Phys. 2006, 132, 460–471.

- Pinto, T.; Kohler, C.; Albertazzi, A. Regular mesh measurement of large free form surfaces using stereo vision and fringe projection. Opt. Lasers Eng. 2012, 50, 910–916.

- Han, X.; Huang, P. Combined stereovision and phase shifting method: A new approach for 3D shape measurement. SPIE Eur. Opt. Metrol. 2009, 7389, 73893C.

- Han, X.; Huang, P. Combined stereovision and phase shifting method: Use of a visibility-modulated fringe pattern. SPIE Eur. Opt. Metrol. 2009, 7389, 73893H.

- Bräuer-Burchardt, C.; Munkelt, C.; Heinze, M.; Kühmstedt, P.; Notni, G. Fringe code reduction for 3D measurement systems using epipolar geometry. Proc. PCVIA ISPRS 2010, 38, 192–197.

- Wang, B.; Liu, W.; Jia, Z.; Lu, X.; Sun, Y. Dimensional measurement of hot, large forgings with stereo vision structured light system. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 2011, 225, 901–908.

- Zhang, H.; Zhang, L.Y.; Wang, H.T.; Chen, J.F. Surface Measurement Based on Instantaneous Random Illumination. Chin. J. Aeronaut. 2009, 22, 316–324.

- Fernandez, S.; Forest, J.; Salvi, J. Active stereo-matching for one-shot dense reconstruction. In Proceedings of the 1st International Conference on Pattern Recognition Applications and Methods, ICPRAM 2012, Algarve, Portugal, 6–8 February 2012; pp. 541–545.

- Jiang, J.; Cheng, J.; Zhao, H. Stereo Matching Based on Random Speckle Projection for Dynamic 3D Sensing. In Proceedings of the 2012 Eleventh International Conference on Machine Learning and Applications (ICMLA), Boca Raton, FL, USA, 12–15 December 2012; pp. 191–196.

- Lee, R.-T.; Shiou, F.-J. Calculation of the unit normal vector using the cross-curve moving mask method for probe radius compensation of a freeform surface measurement. Measurement 2010, 43, 469–478.

- Lee, R.-T.; Shiou, F.-J. Multi-beam laser probe for measuring position and orientation of freeform surface. Measurement 2011, 44, 1–10.

- Wang, Z.; Wu, Z.; Zhen, X.; Yang, R. An onsite inspection sensor for the formation of hull plates based on active binocular stereo-vision. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 2014, 230, 887–896.

- Wang, Z.; Wu, Z.; Zhen, X.; Yang, R.; Xi, J. Iteration-based direct ellipse-specific algebraic fitting method of incomplete spots for onsite three-dimensional measurement. Opt. Eng. 2015, 54, 13109.

- Wang, Z.; Wu, Z.; Zhen, X.; Yang, R.; Xi, J.; Chen, X. A two-step calibration method of a large FOV binocular stereovision sensor for onsite measurement. Measurement 2015, 62, 15–24.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).