CMOS Image Sensors in Surveillance System Applications

Abstract

1. Introduction

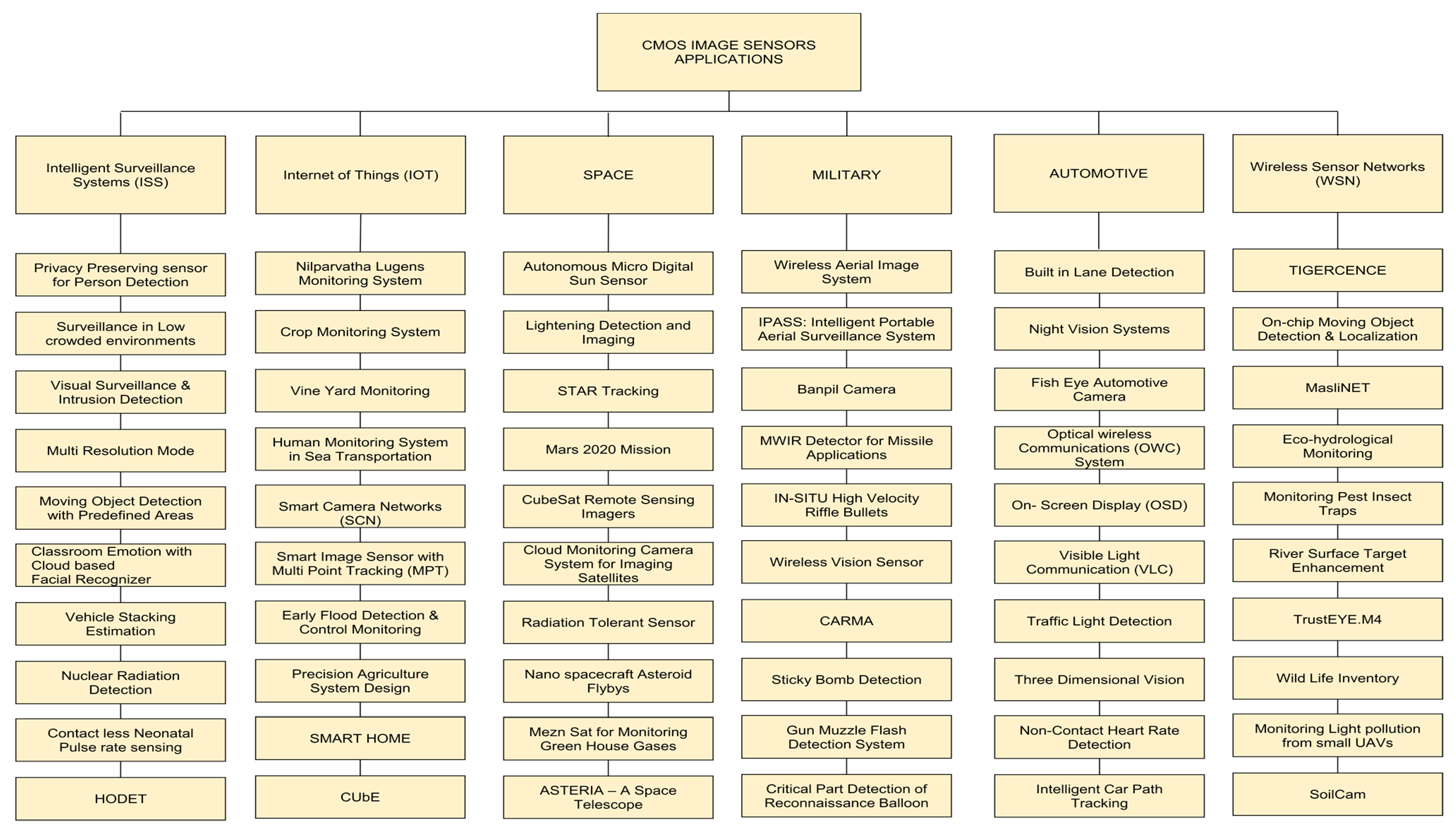

- We present a comprehensive survey of CMOS image sensors (CIS) from an applications perspective across the predominant fields of use, which earlier surveys have not done.

- We introduce a novel taxonomy that classifies the surveyed work by CIS model, application, and design characteristics, as shown in Figure 1 and in Table A1 of Appendix A.

- We note the limitations of the surveyed work, outline future directions, and highlight related work.

2. Taxonomy and Related Work

2.1. CMOS Image Sensors and Their Types

2.1.1. CMOS Image Sensor

2.1.2. Pixel Structures

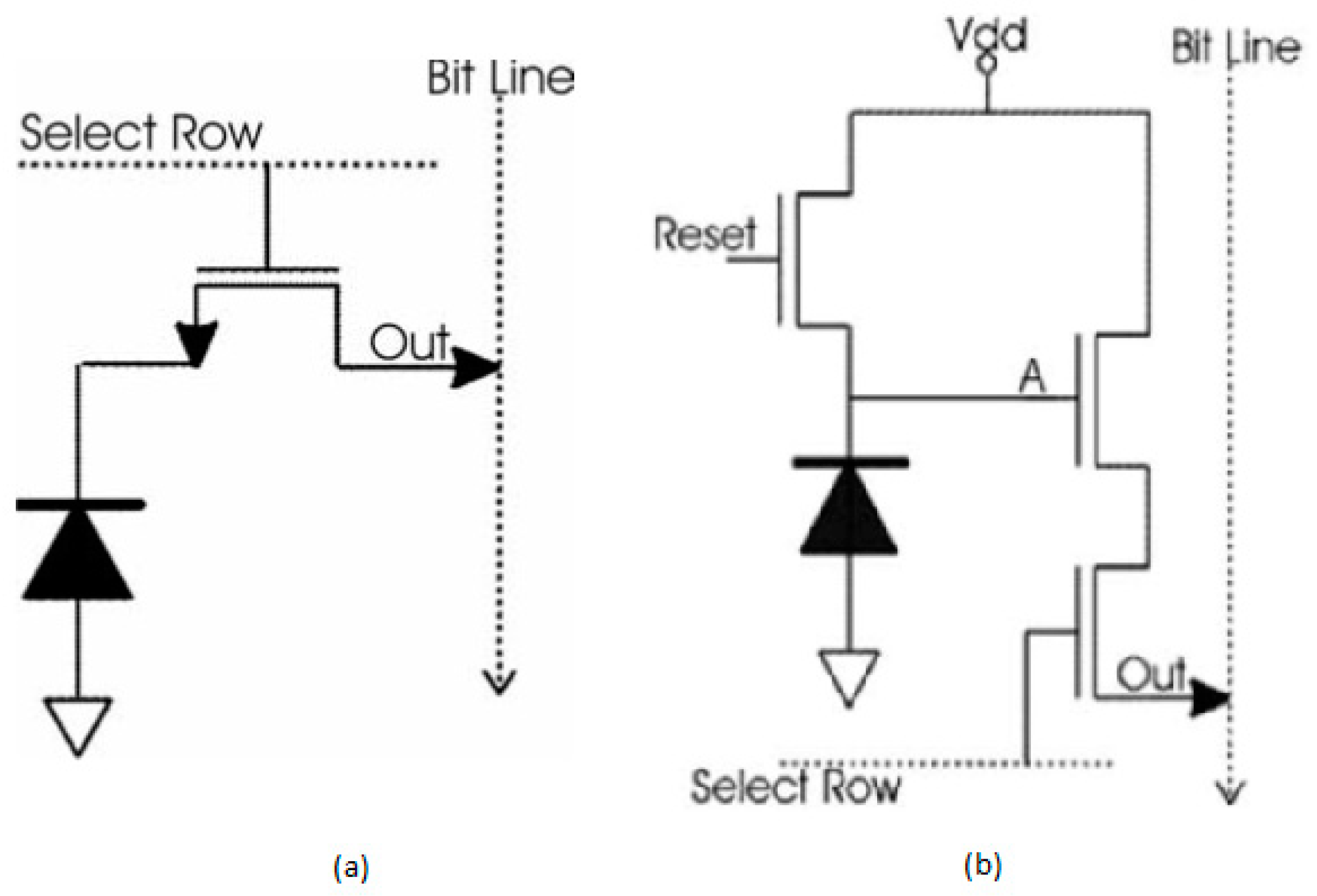

Passive Pixel Sensors

Active Pixel Sensors

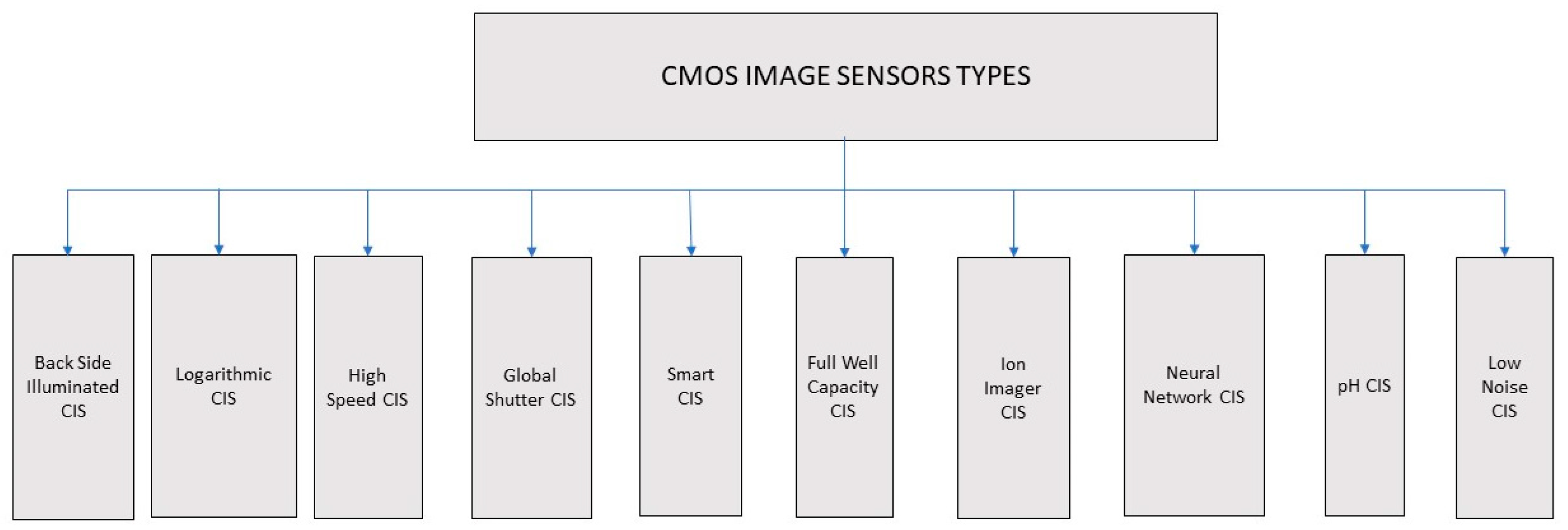

2.1.3. CIS Types

2.1.4. Advantages of CMOS Image Sensors over Charge Coupled Devices

- CCD sensors generally require a dedicated and expensive fabrication process. In contrast, CMOS image sensors are made in standard manufacturing facilities and can be produced at very low cost.

- Active pixel sensor (APS) architectures usually consume significantly less power than CCD sensors, by as much as a factor of one hundred. This makes CMOS image sensors well suited to compact, battery-powered applications such as cell phones and laptops. CCD systems consume far more power because their capacitive devices require larger clock swings and external control signals, and they also need additional power supplies with voltage regulators to operate.

- Because of their faster frame rates, CMOS APS architectures have become the imager of choice in machine vision and motion estimation applications, ahead of PPS CMOS sensors and CCDs.

- CMOS also offers a high level of on-chip integration. Digital signal processing functions such as image stabilization, image compression, multi-resolution imaging, wireless control, and color encoding can share the chip with the sensor, which is why such devices are called smart CIS.
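The power argument in the list above can be made concrete with a back-of-the-envelope battery estimate. The sketch below is illustrative only: the 1000 mAh, 3.7 V cell is an assumed value, the 60 mW draw is the OV7670 figure listed in the second table of Appendix A, the CCD draw simply applies the hundred-fold ratio quoted above at face value, and `runtime_hours` is a hypothetical helper that ignores every other load in the system (processor, radio, regulator losses).

```python
def runtime_hours(battery_mwh: float, load_mw: float) -> float:
    """Return hours of continuous operation for a constant load (hypothetical helper)."""
    if battery_mwh <= 0 or load_mw <= 0:
        raise ValueError("battery energy and load must be positive")
    return battery_mwh / load_mw


# Assumed battery: 1000 mAh at 3.7 V nominal, i.e. 3700 mWh of stored energy.
BATTERY_MWH = 1000 * 3.7

# 60 mW is the OV7670 power figure from the appendix; the CCD value is not
# measured data, it only applies the "hundred times" ratio from the text.
CMOS_MW = 60.0
CCD_MW = CMOS_MW * 100

cmos_hours = runtime_hours(BATTERY_MWH, CMOS_MW)
ccd_hours = runtime_hours(BATTERY_MWH, CCD_MW)

print(f"CMOS sensor alone: {cmos_hours:.1f} h")
print(f"CCD at 100x power: {ccd_hours:.2f} h")
```

Under these assumptions the sensor-only runtime scales inversely with the power draw, so a hundred-fold power gap becomes a hundred-fold runtime gap; in a real device the other loads would narrow that difference.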

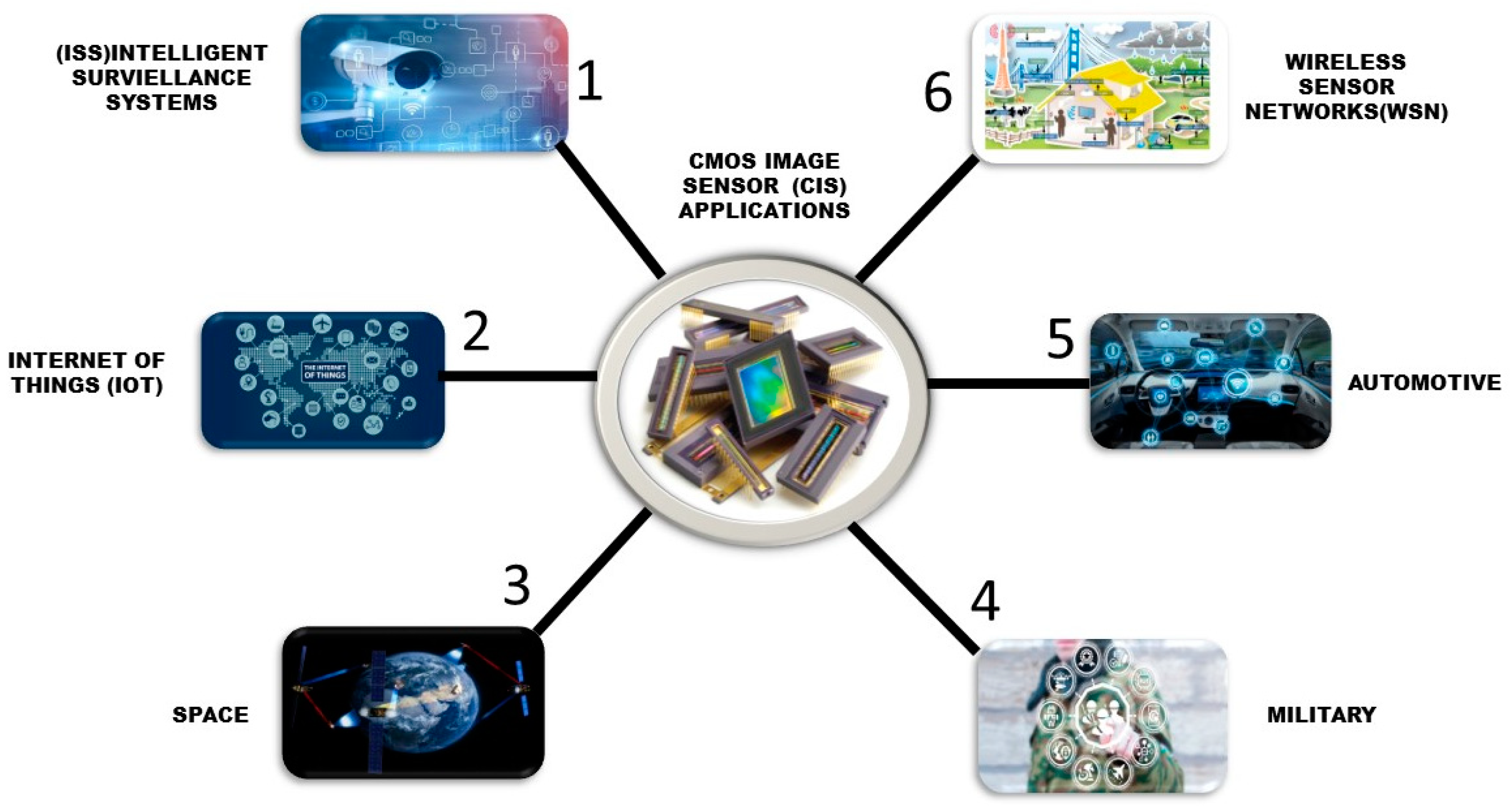

3. CMOS Image Sensor Applications

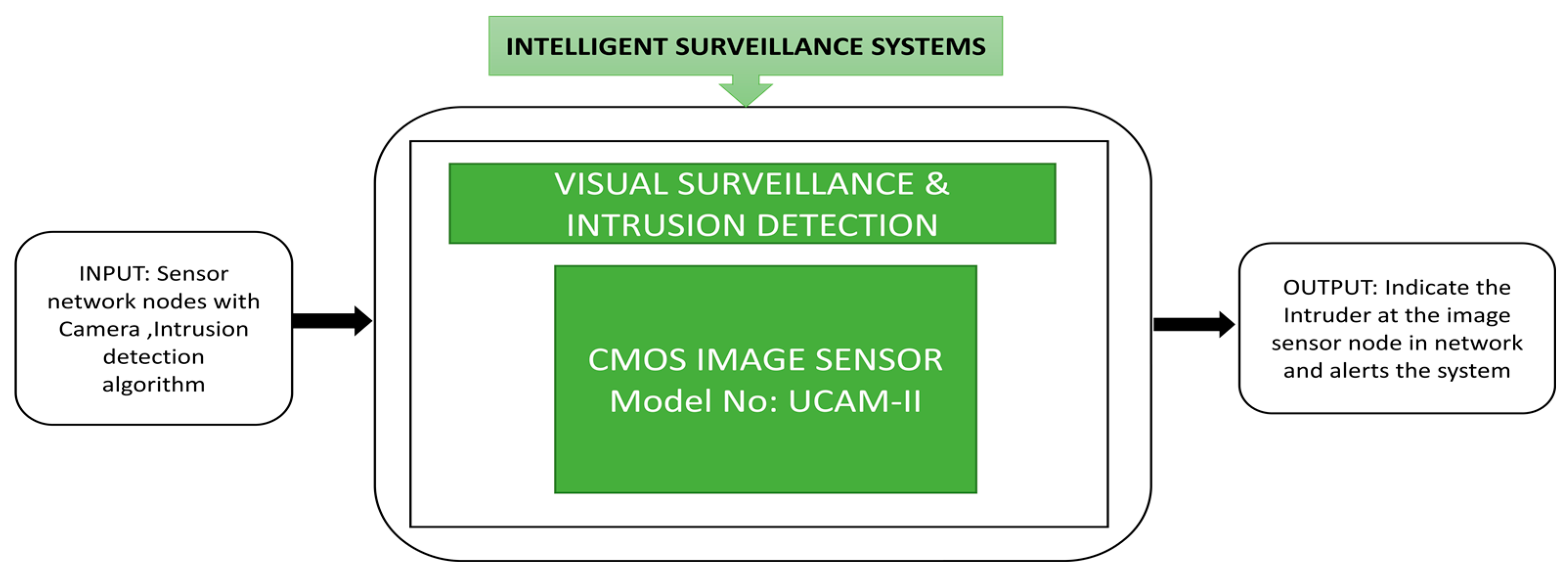

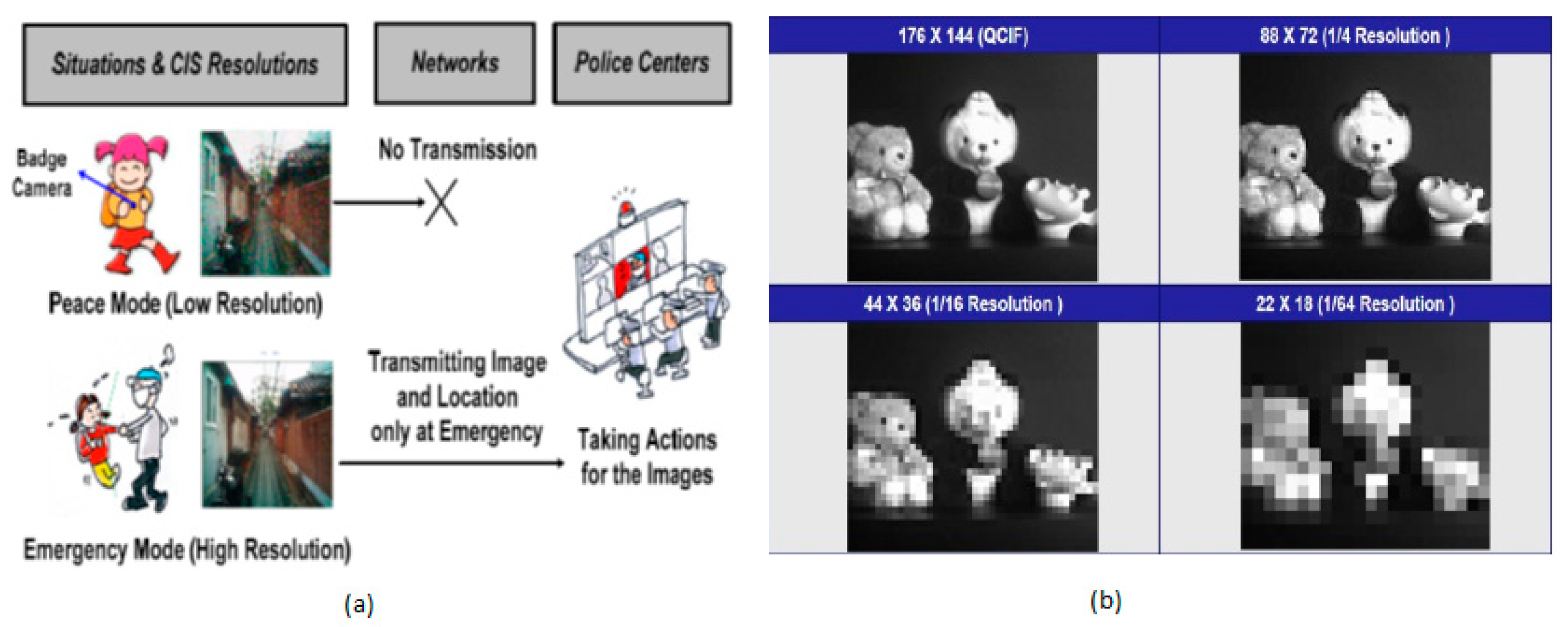

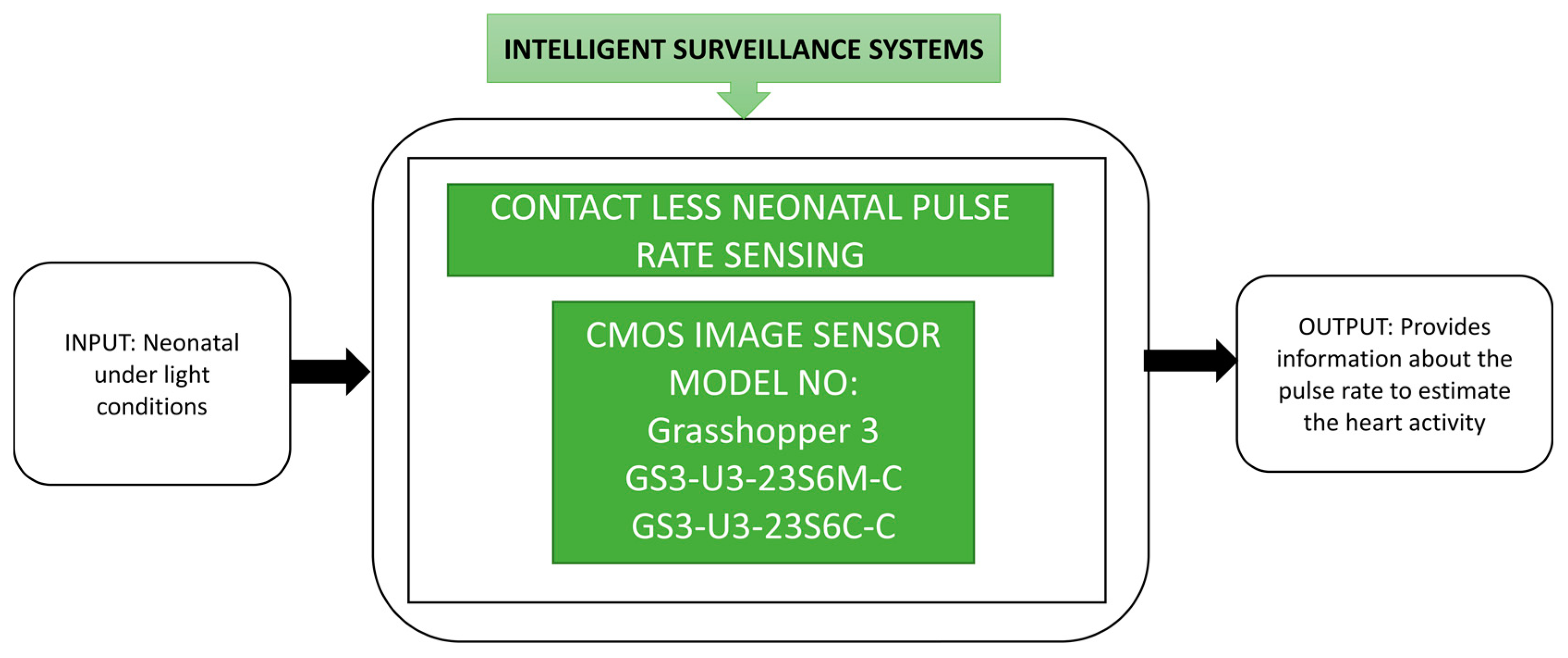

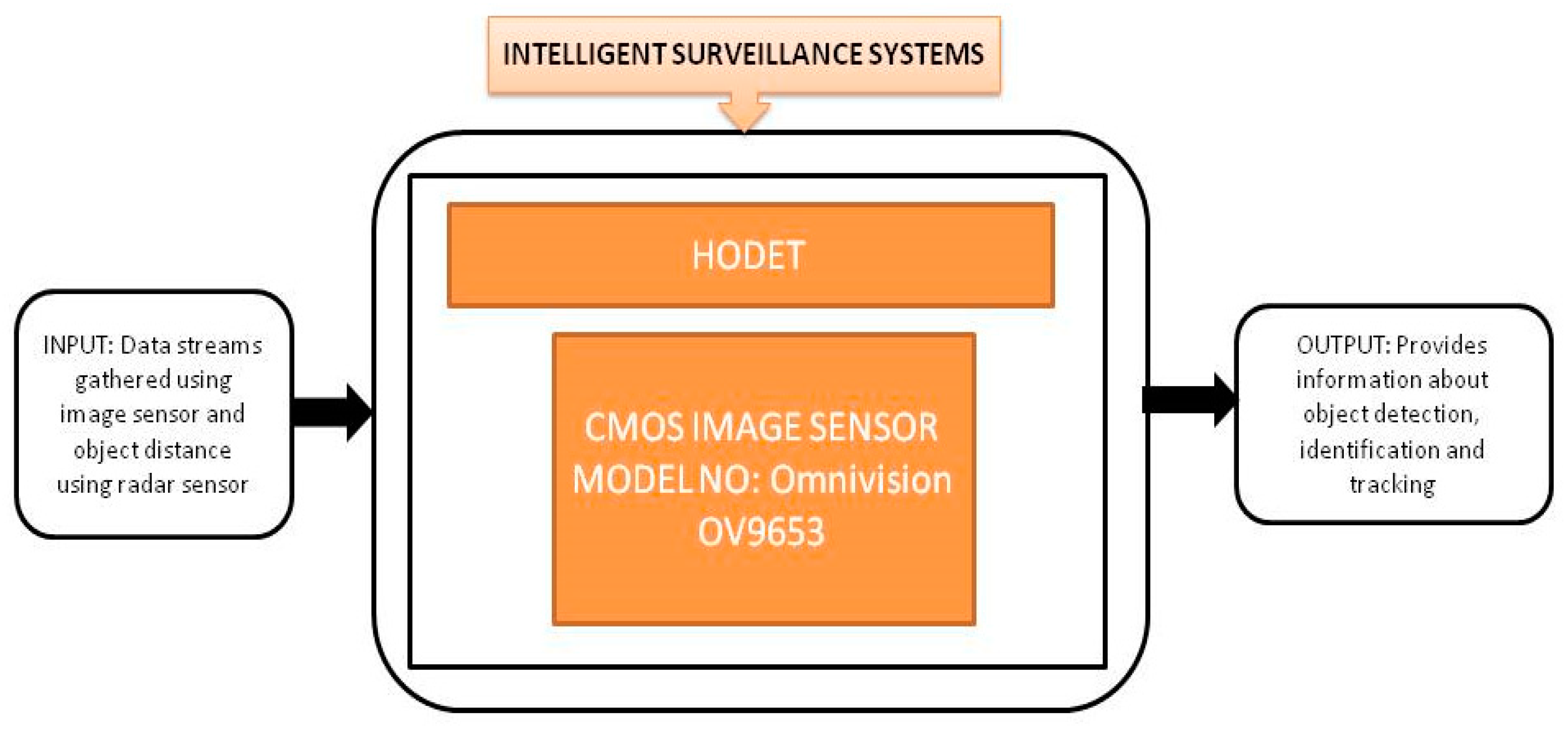

3.1. ISS (Intelligent Surveillance Systems) CIS Applications

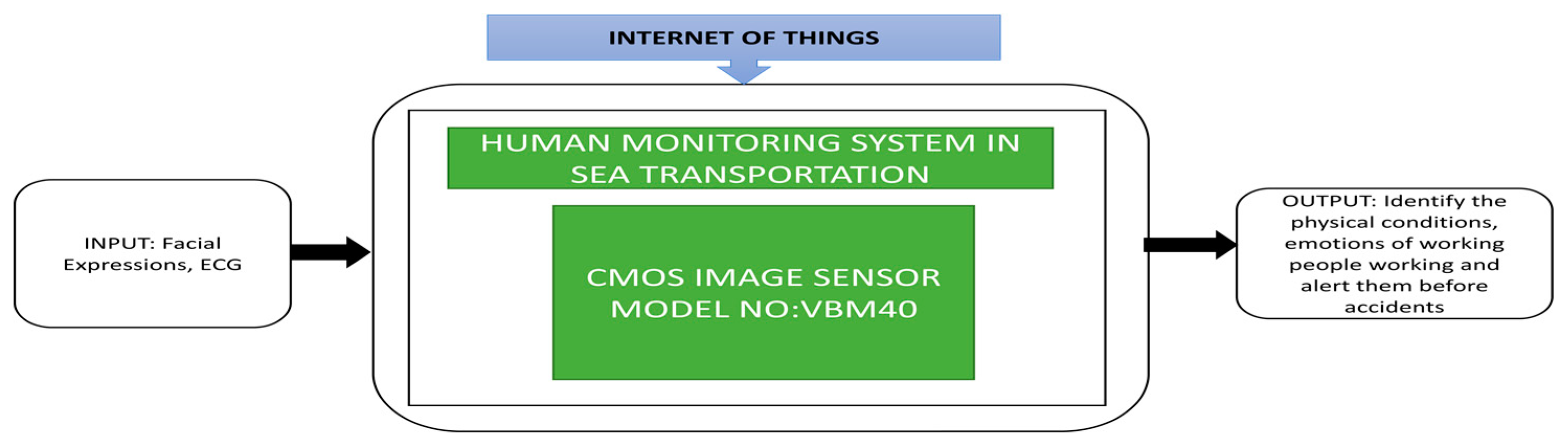

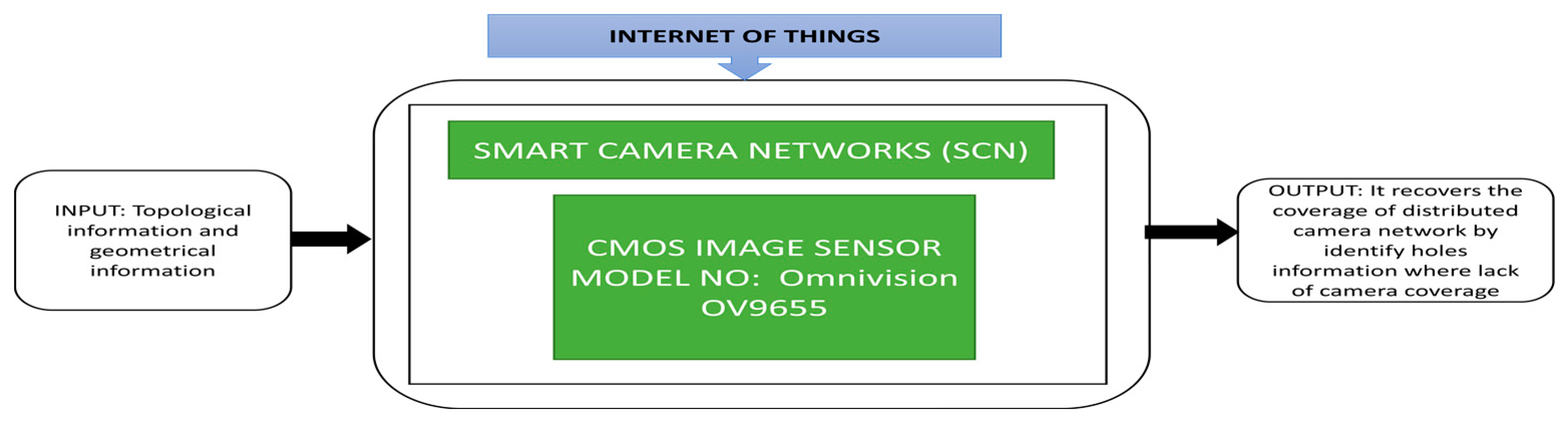

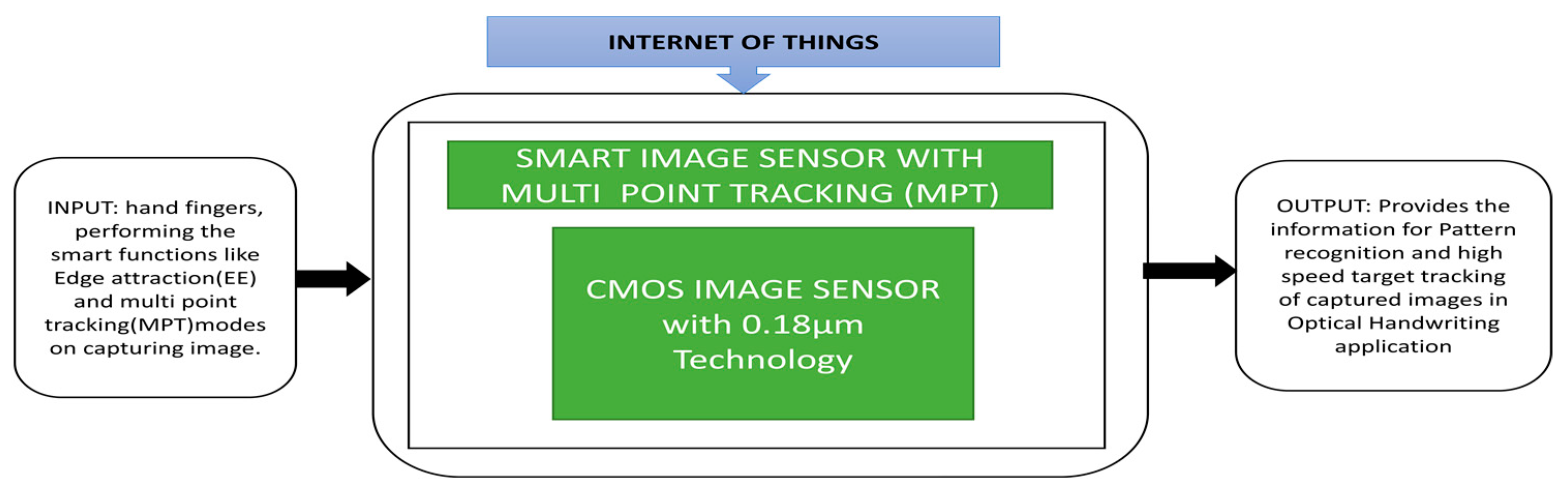

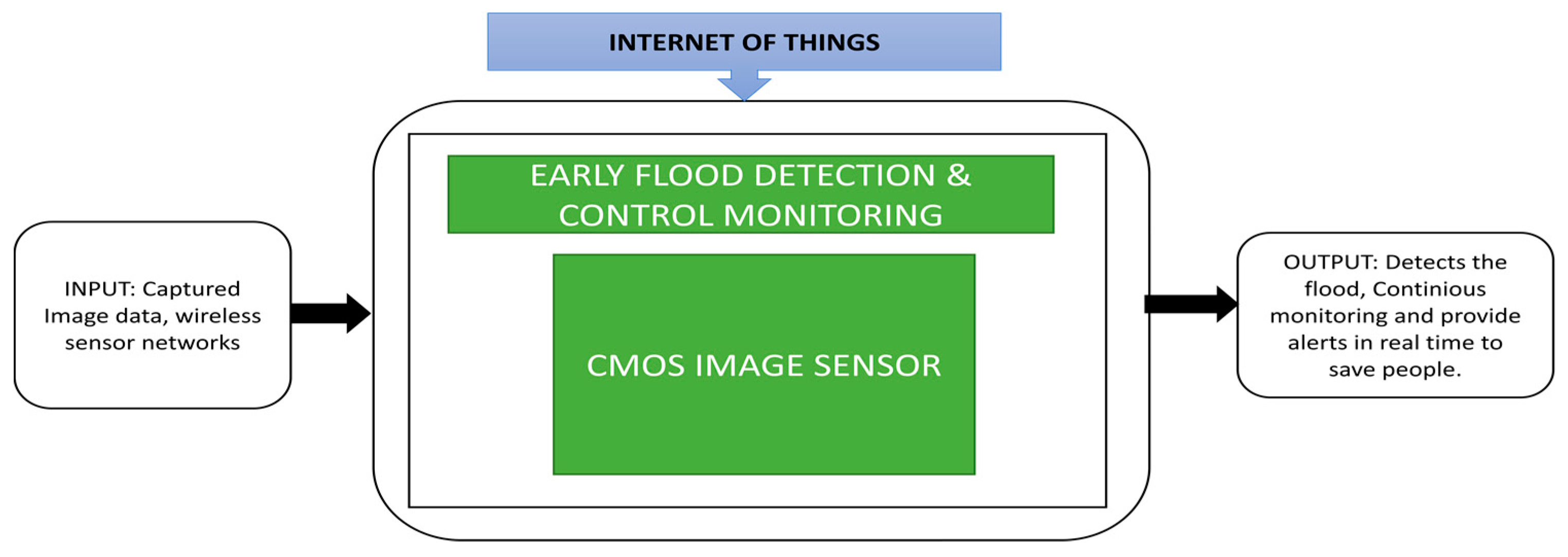

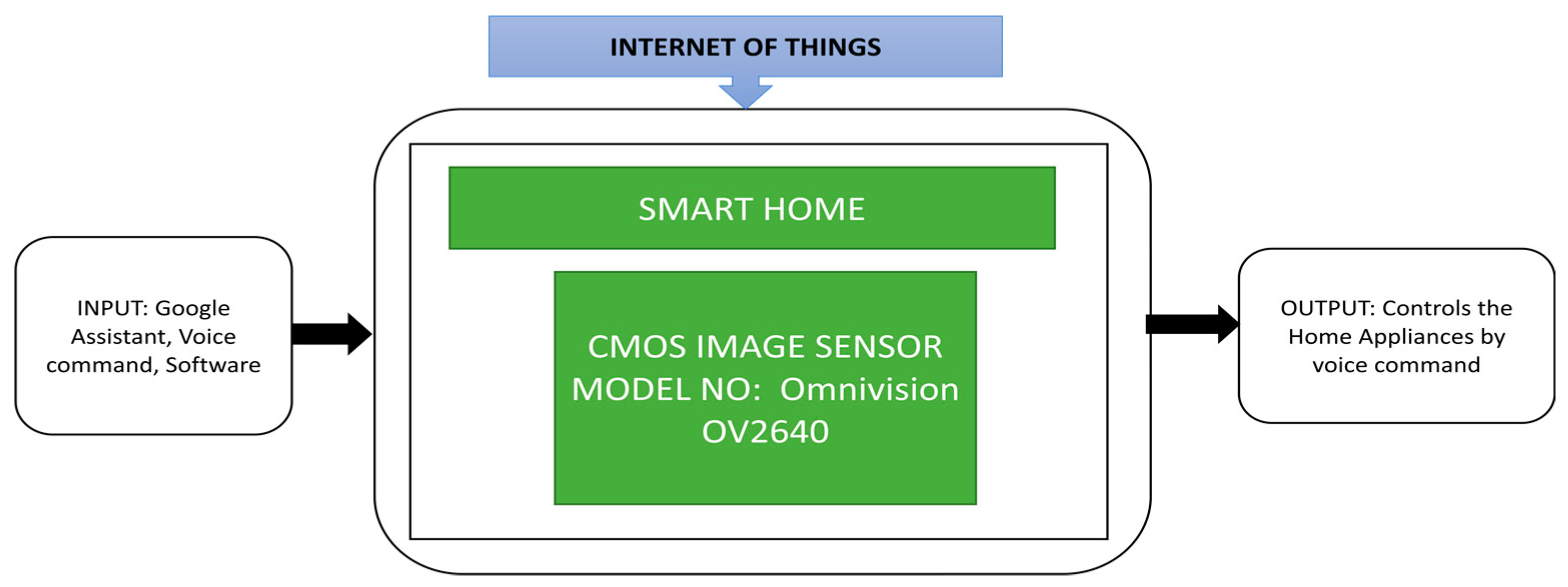

3.2. Internet of Things (IoT) CIS Applications

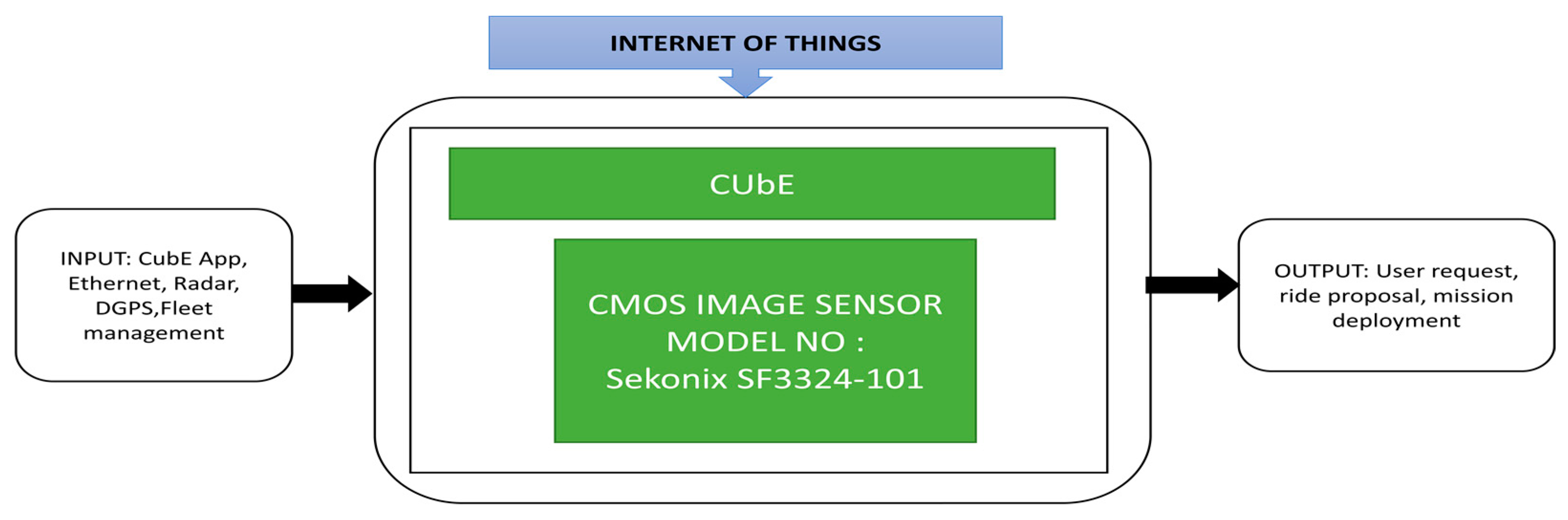

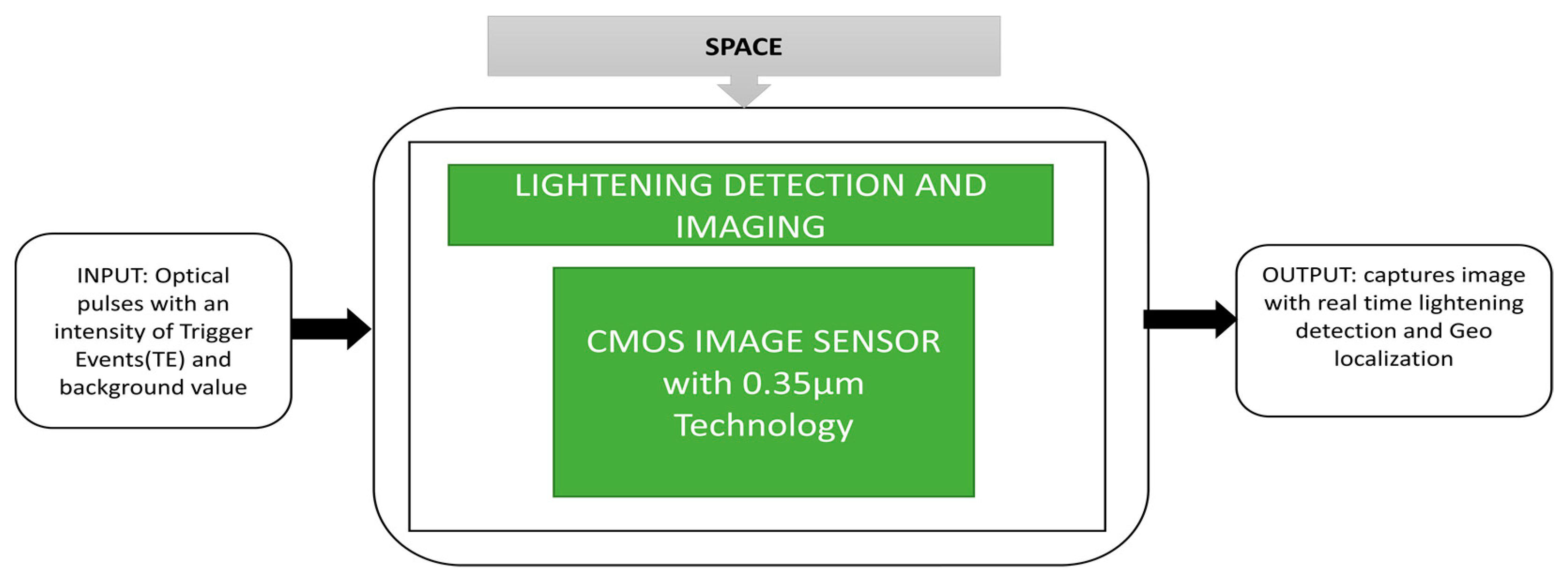

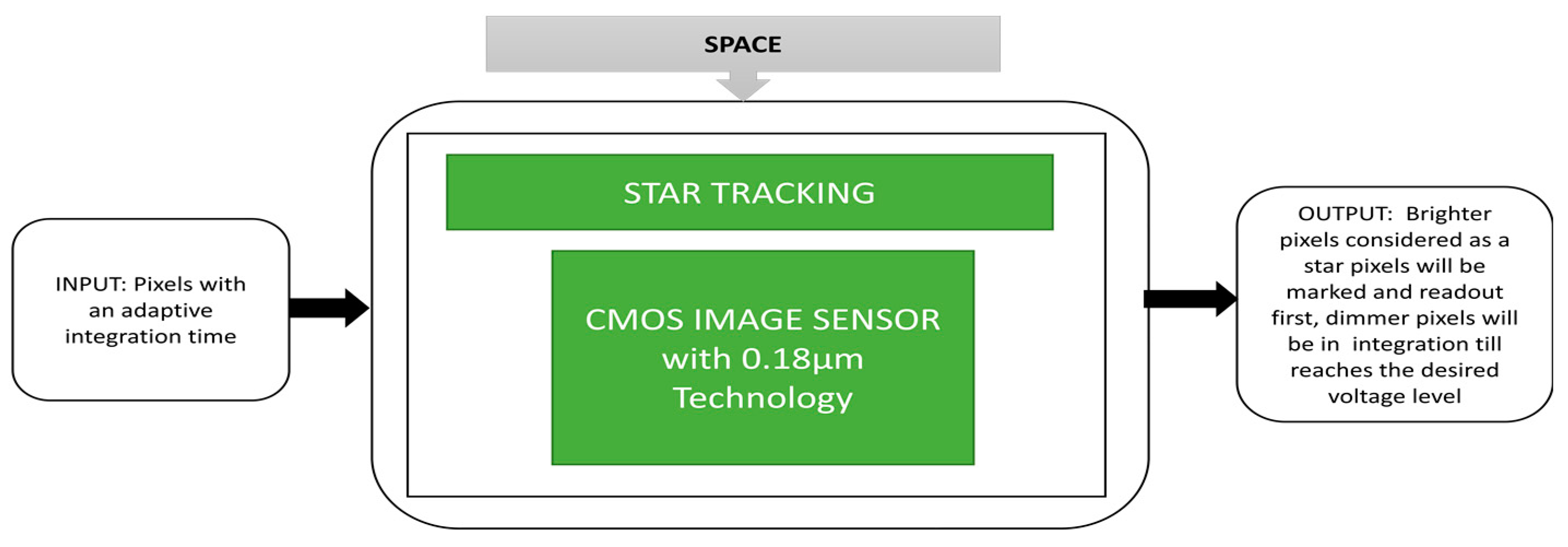

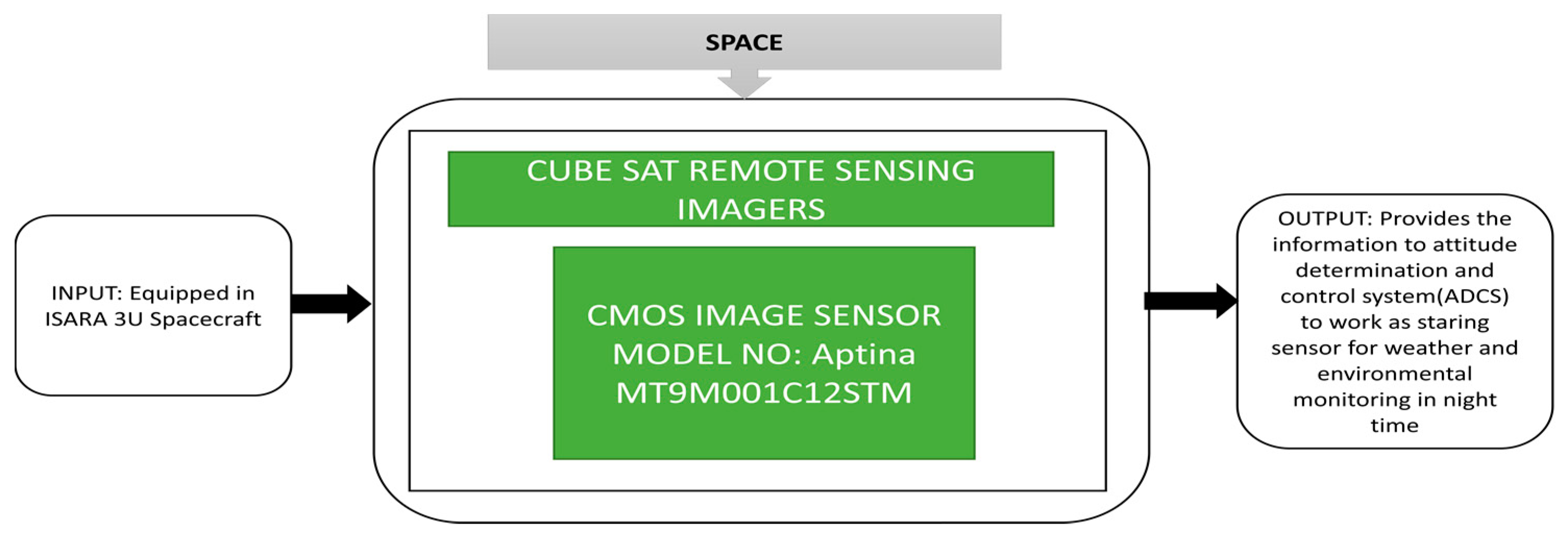

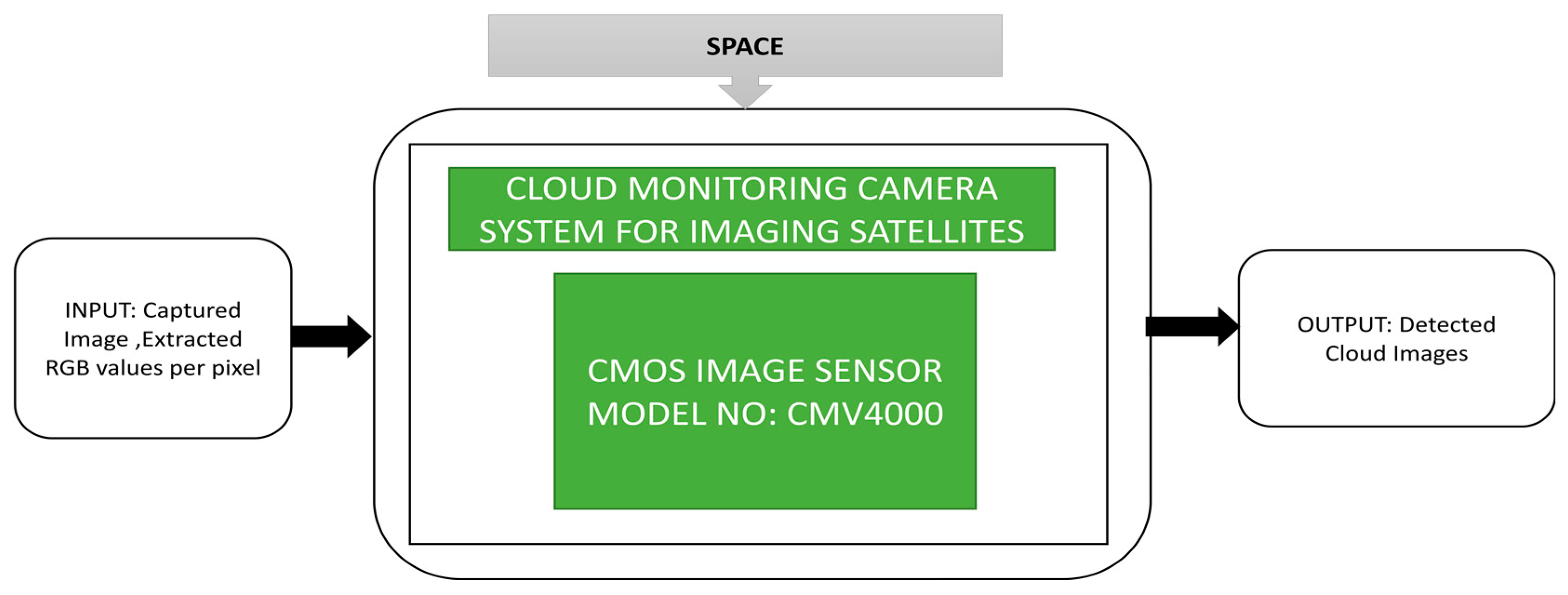

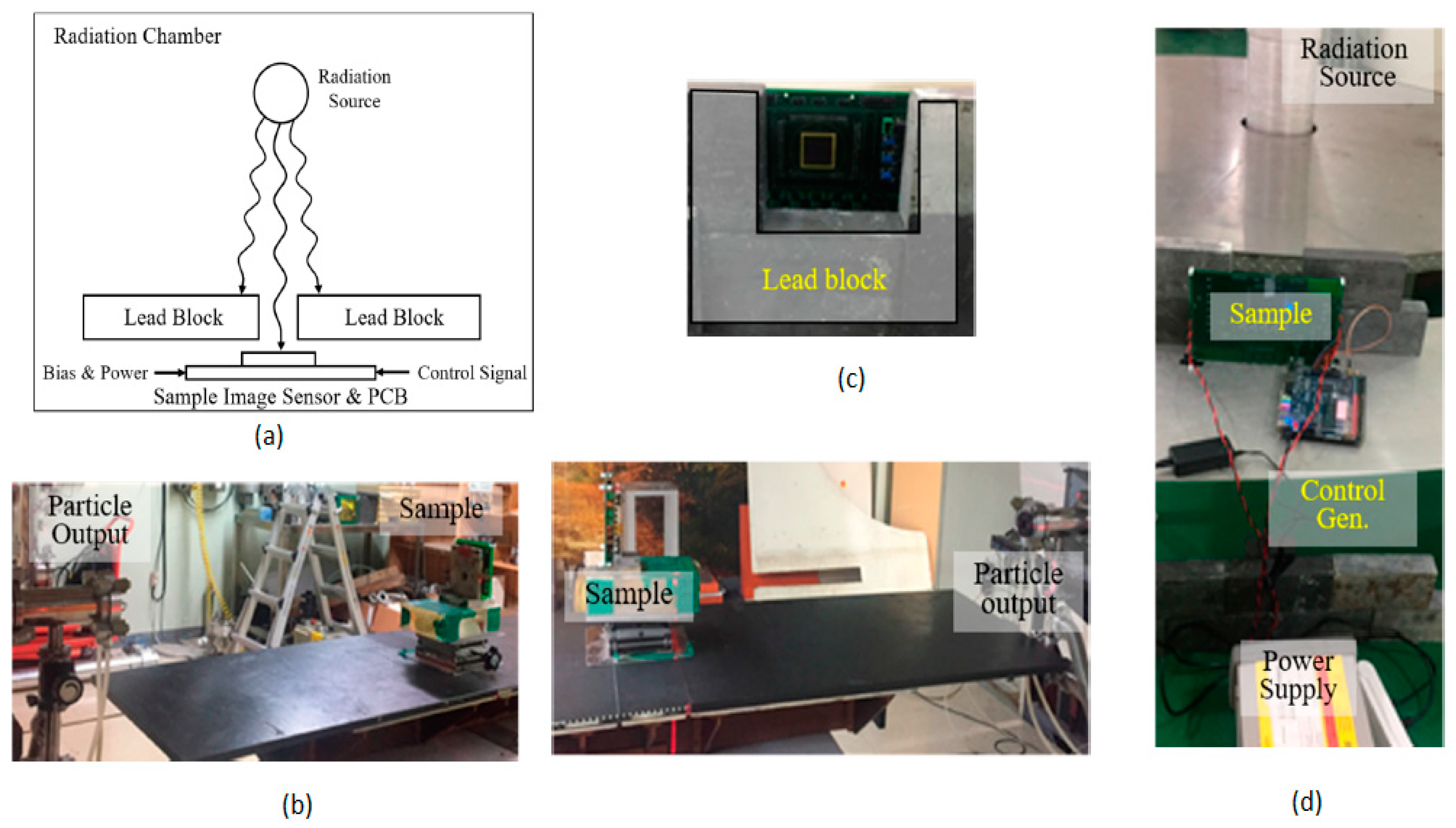

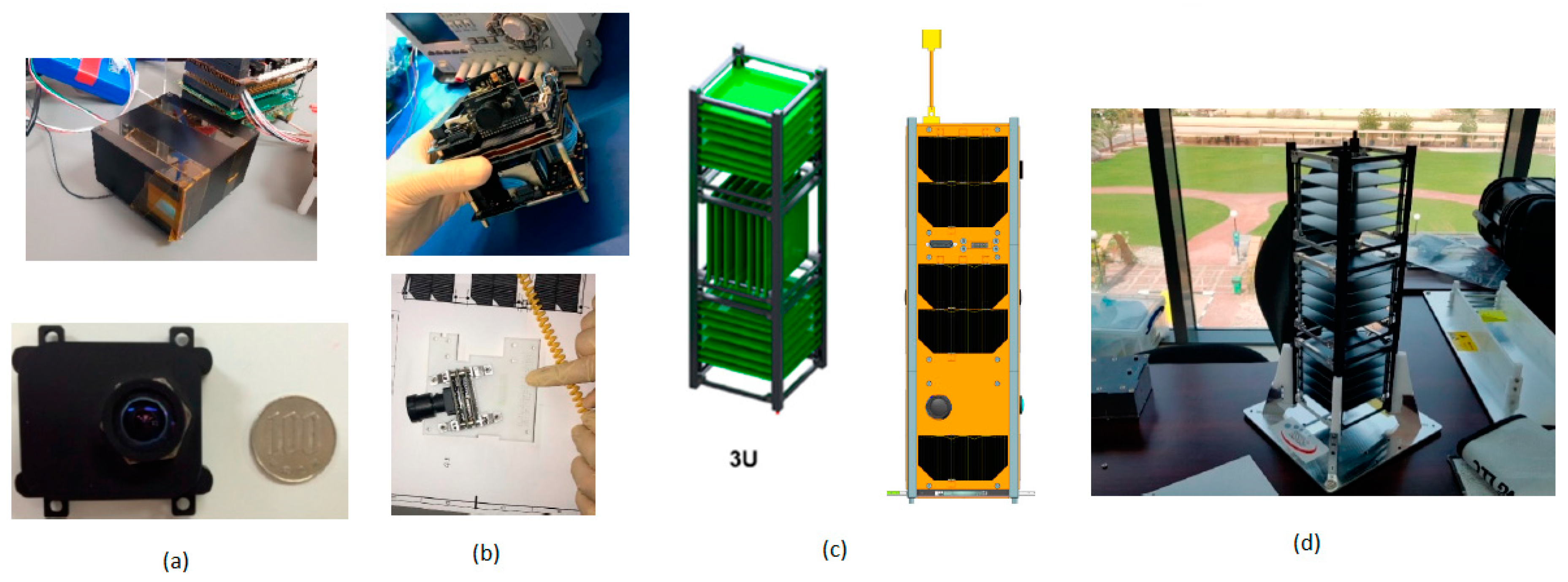

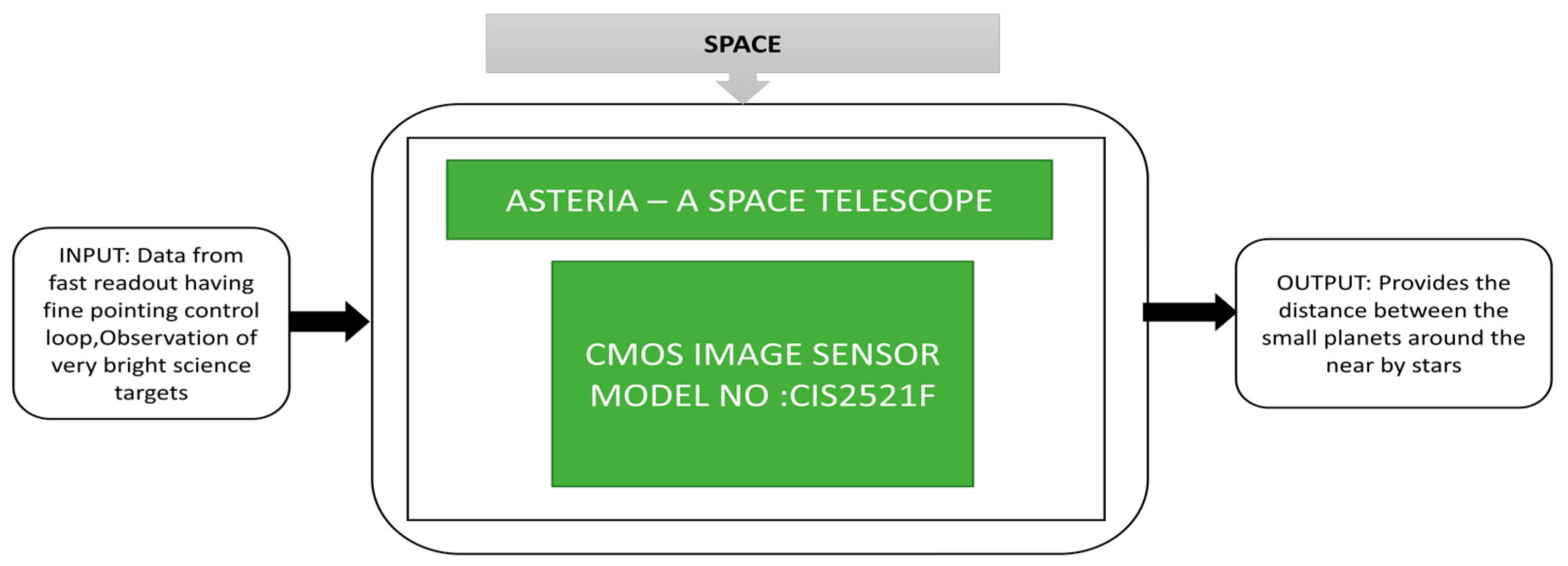

3.3. Space CIS Applications

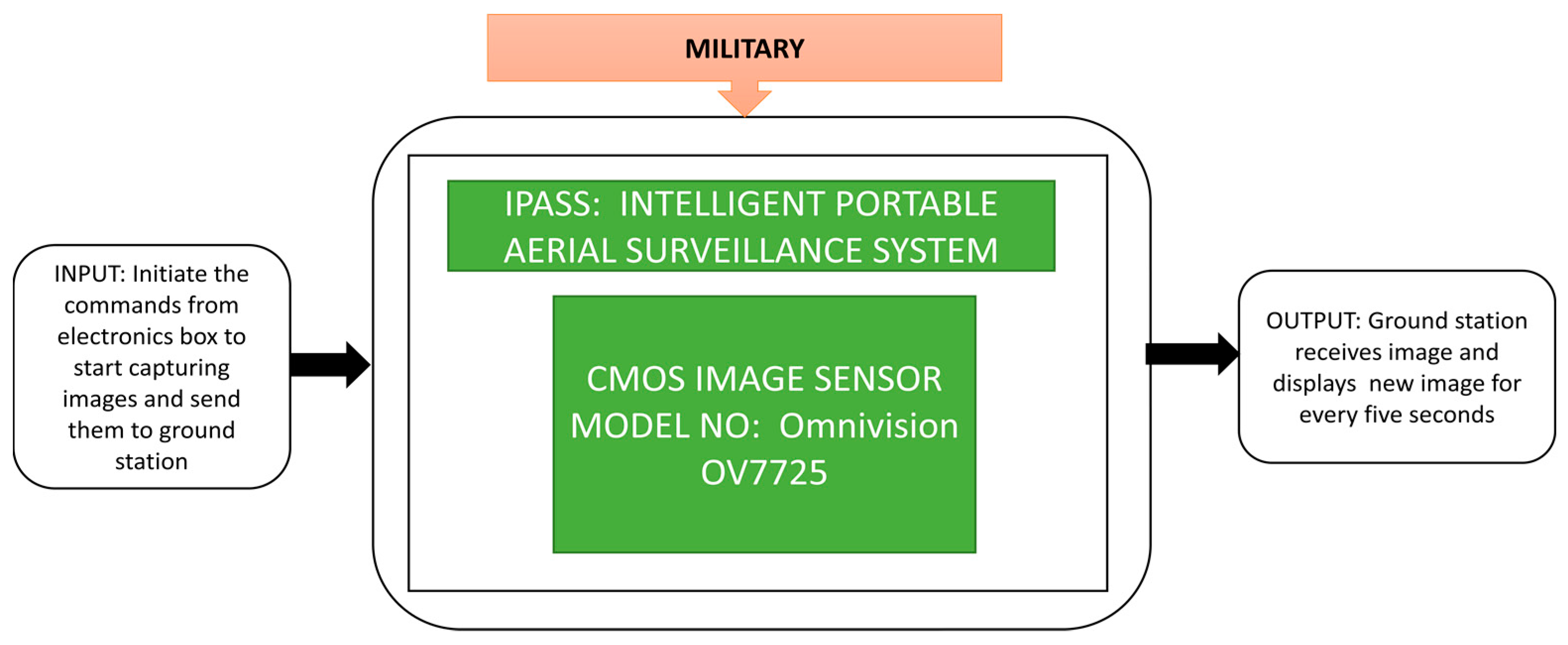

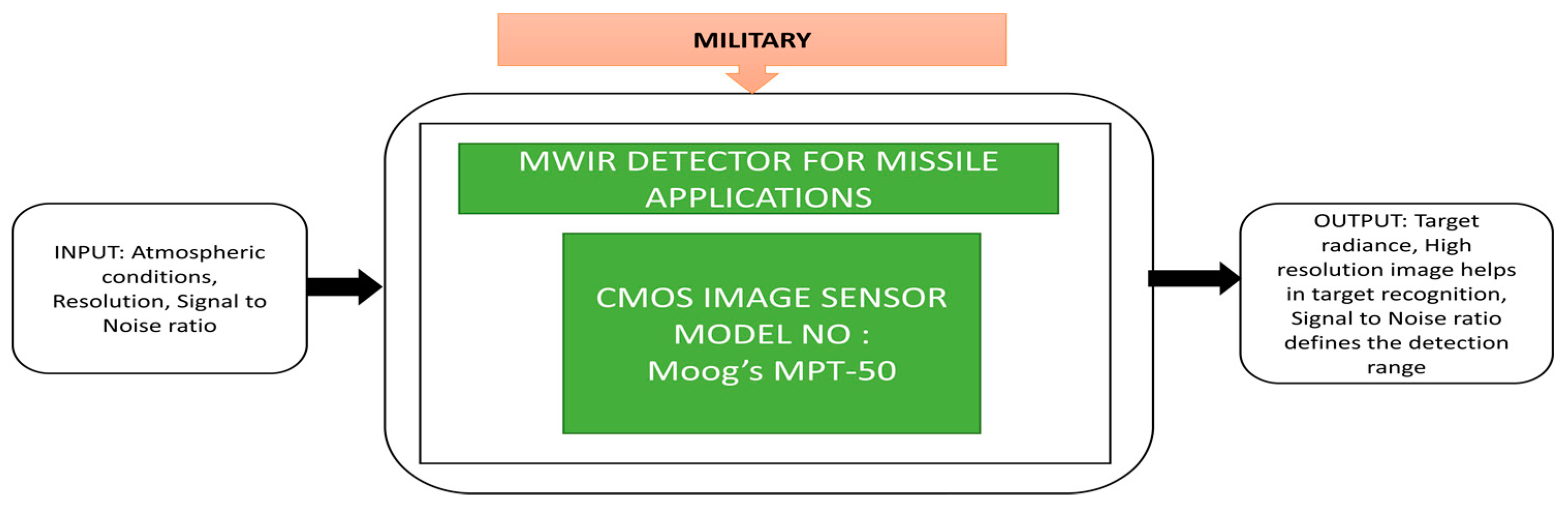

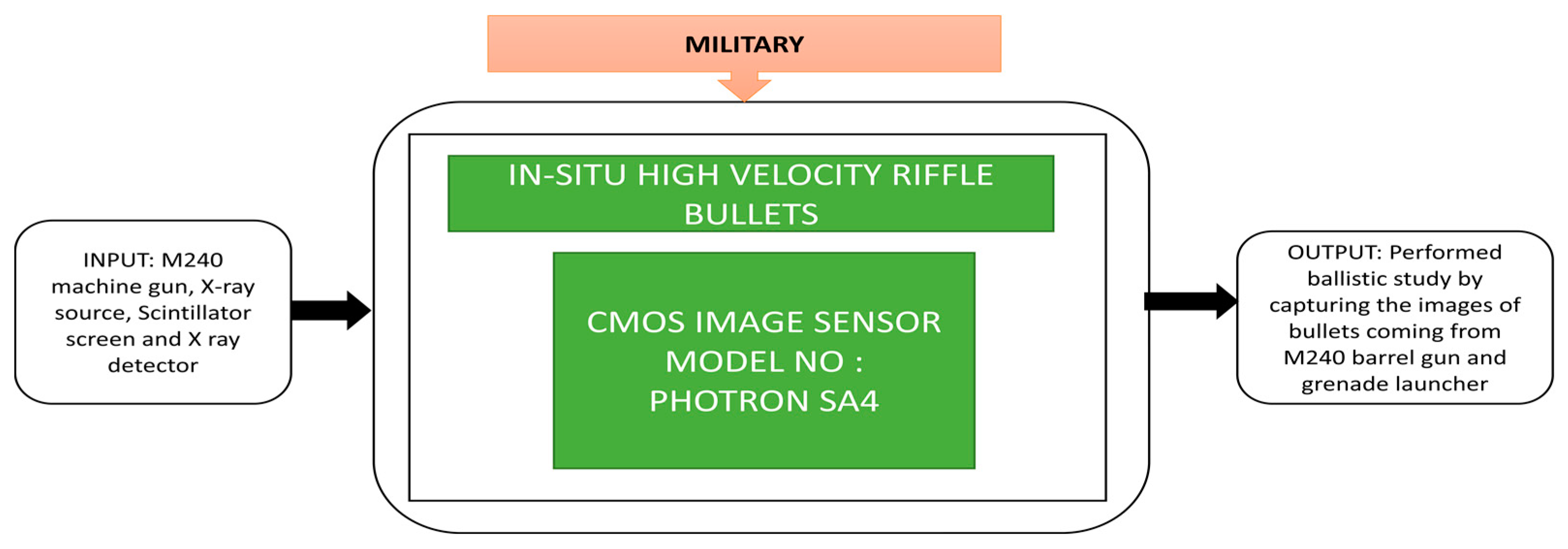

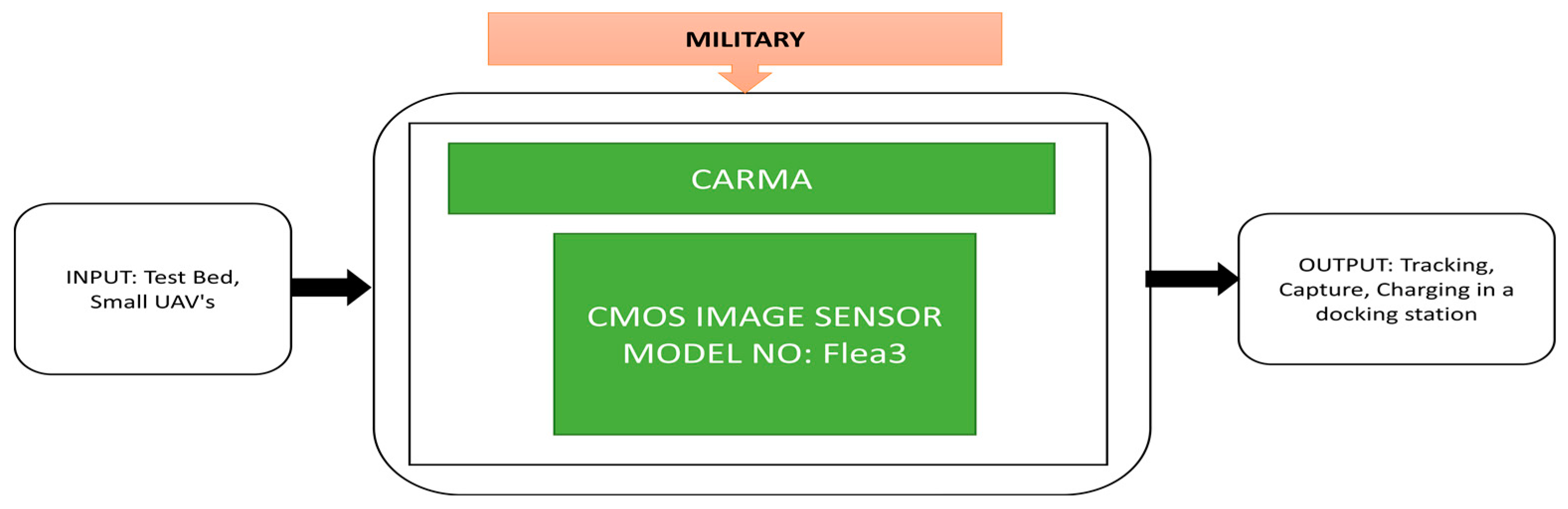

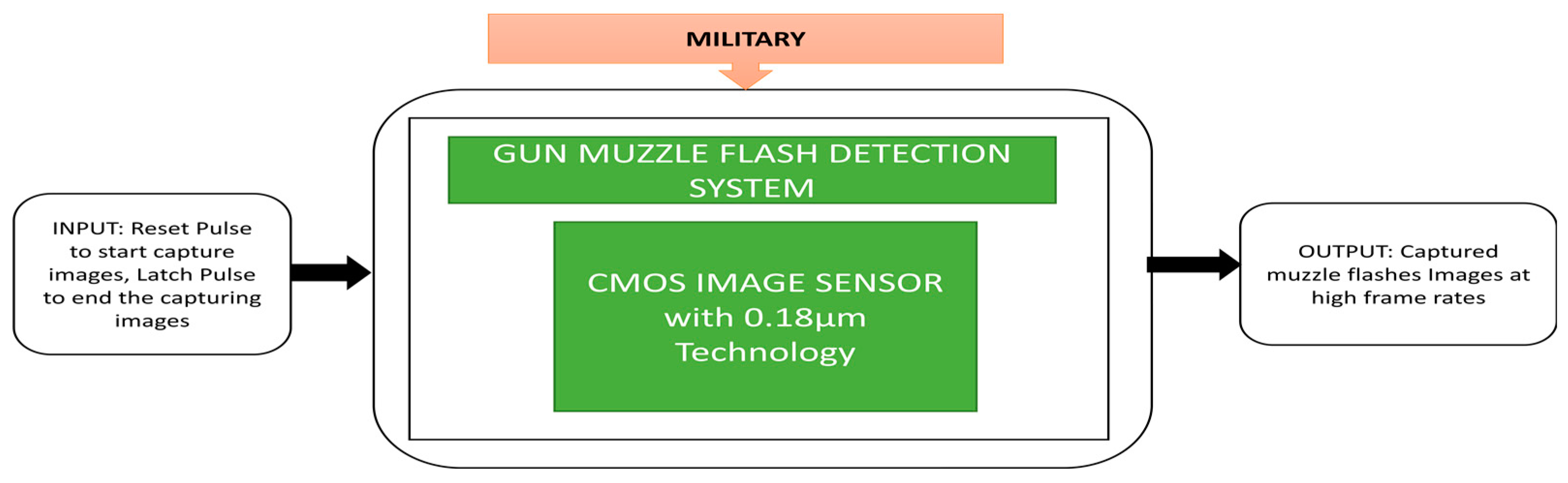

3.4. Military CIS Applications

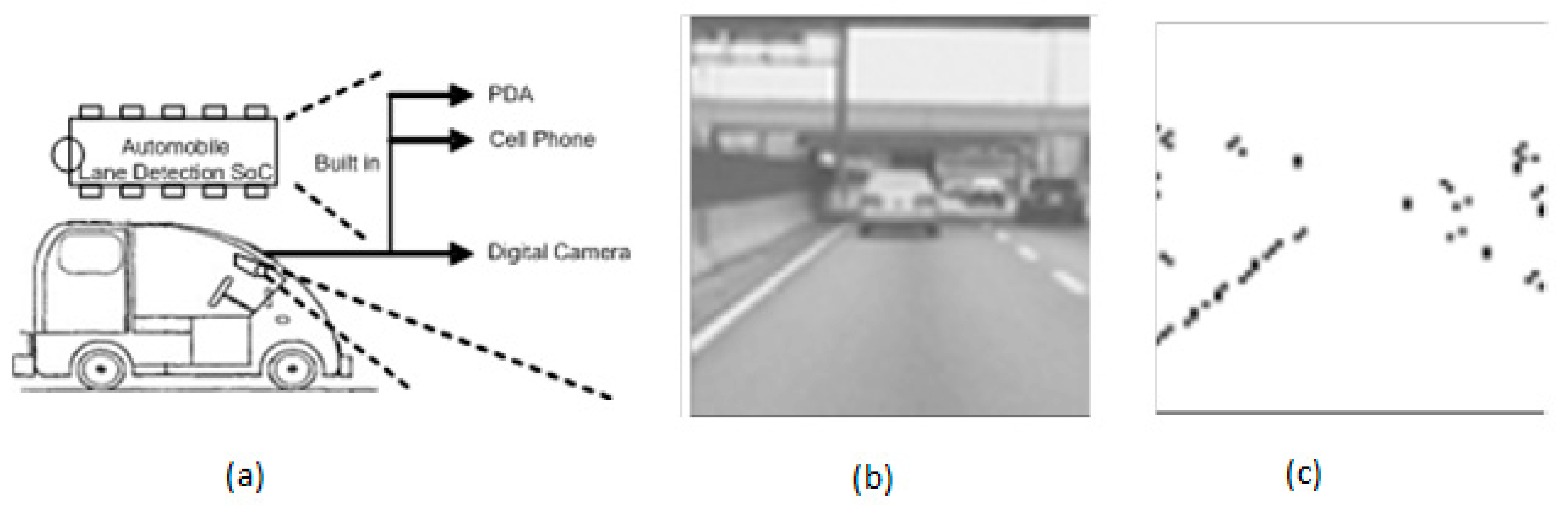

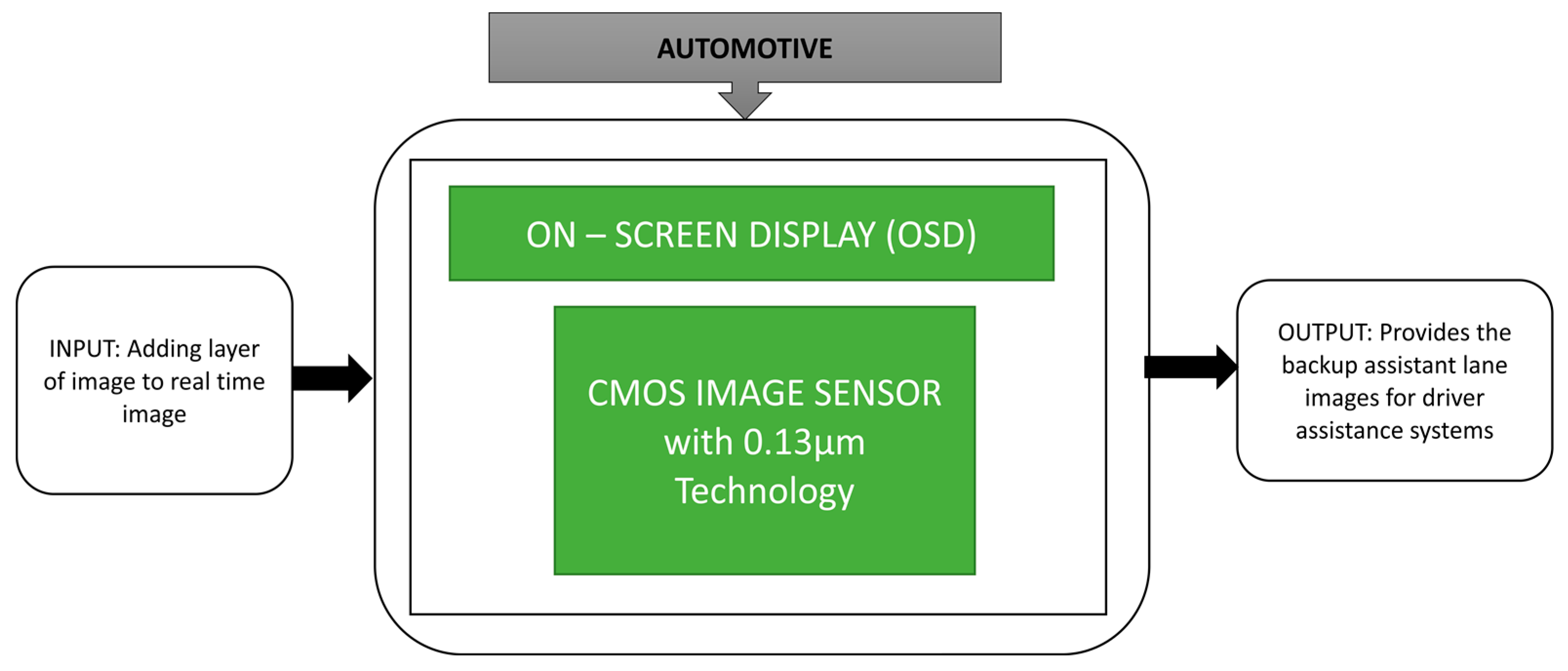

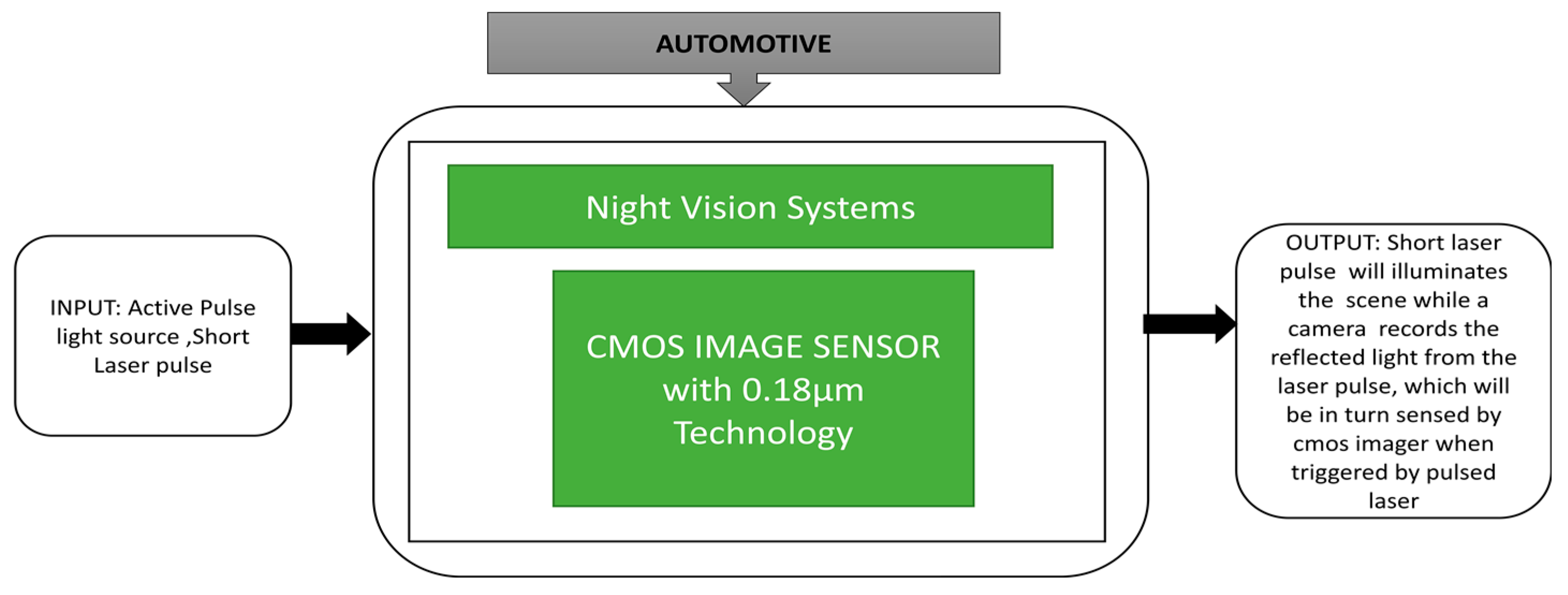

3.5. Automotive CIS Applications

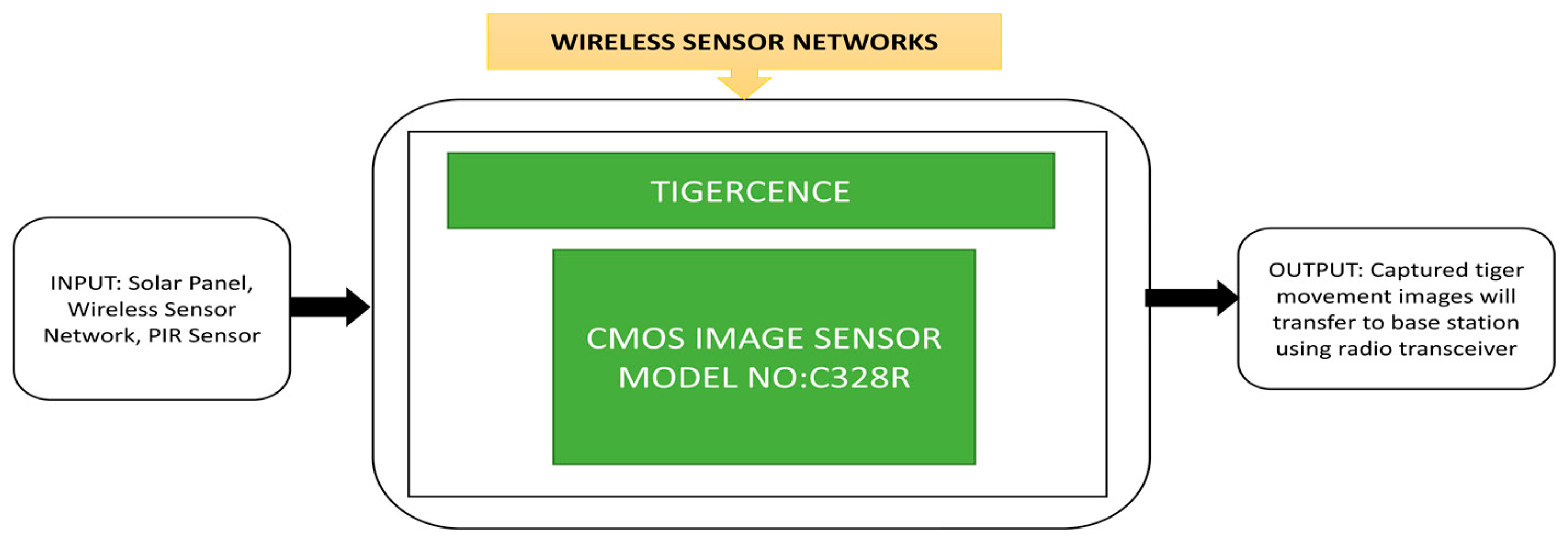

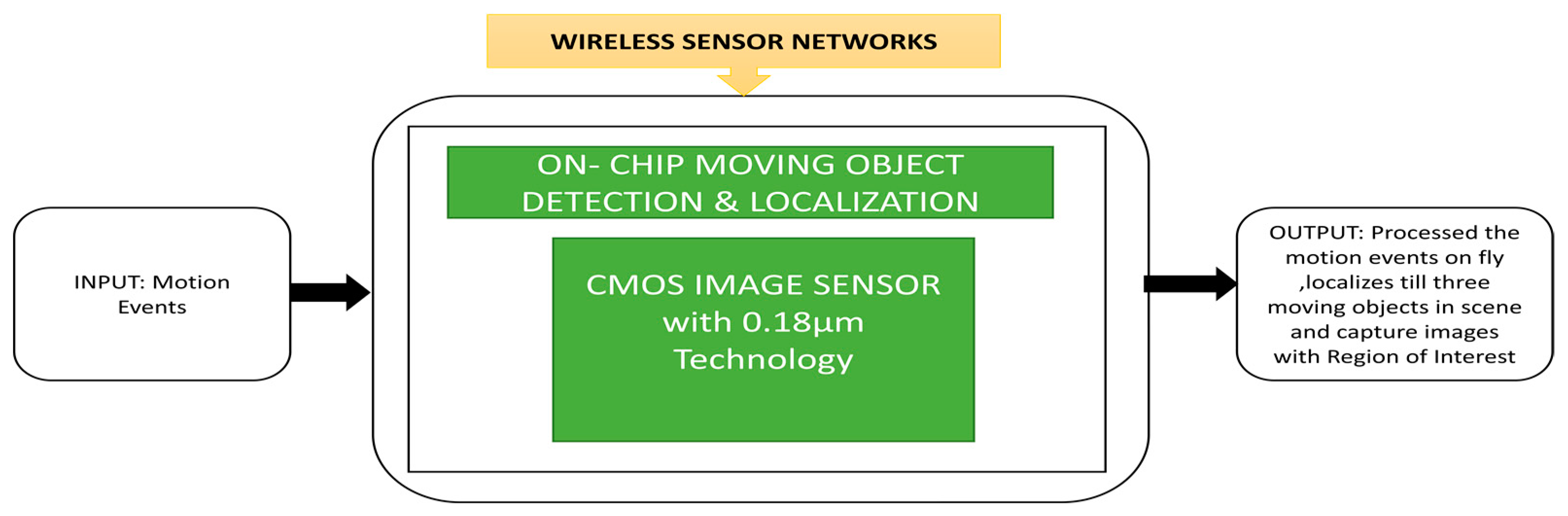

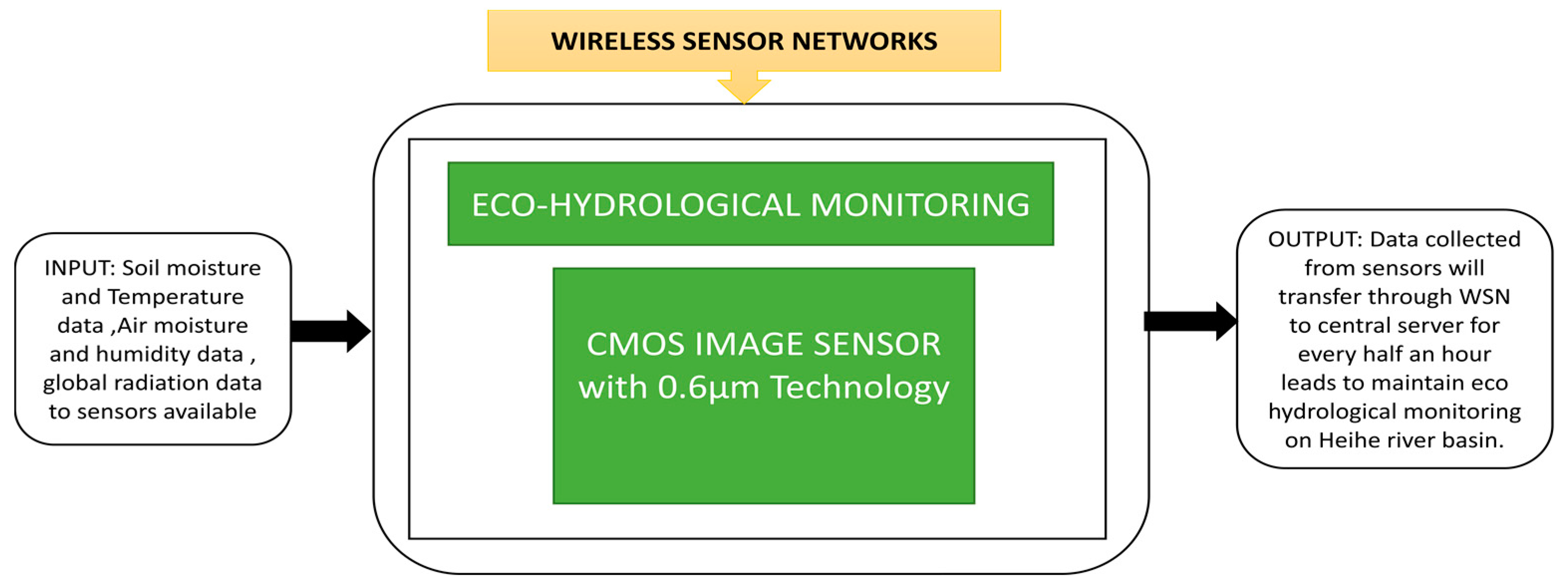

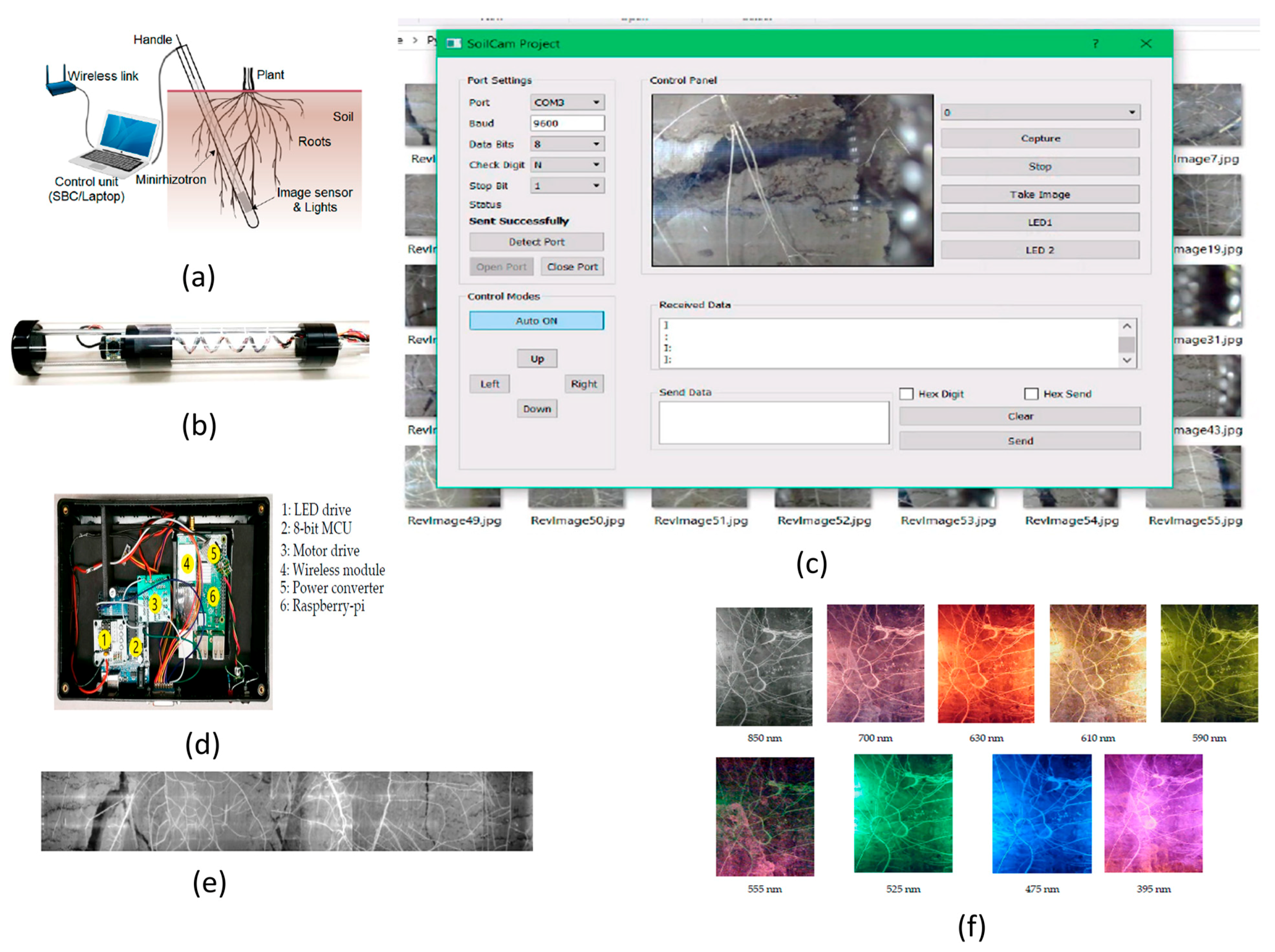

3.6. WSN (Wireless Sensor Networks) CIS Applications

4. Design Characteristics

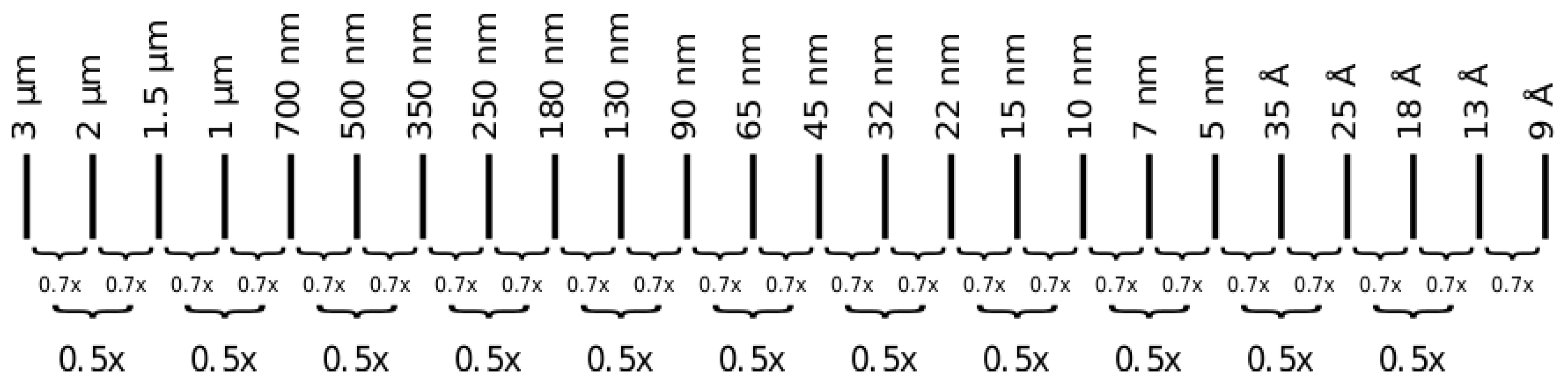

4.1. CMOS Technology

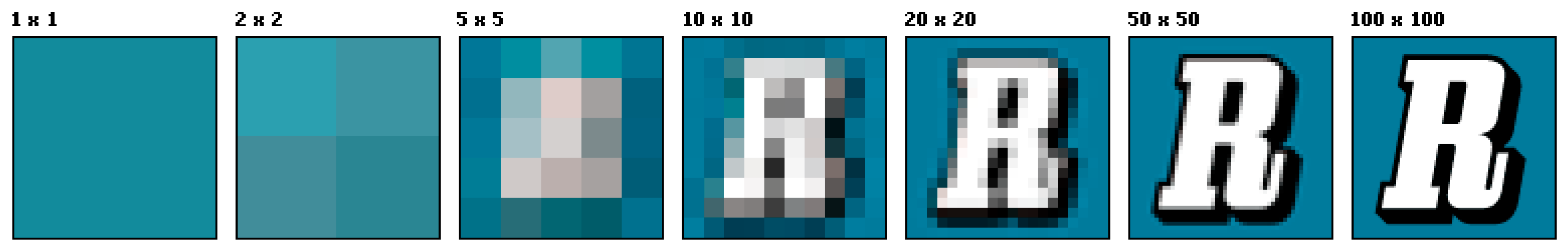

4.2. Resolution

4.3. Dynamic Range

4.4. Frame Rate

4.5. Signal to Noise Ratio (SNR)

5. Discussion

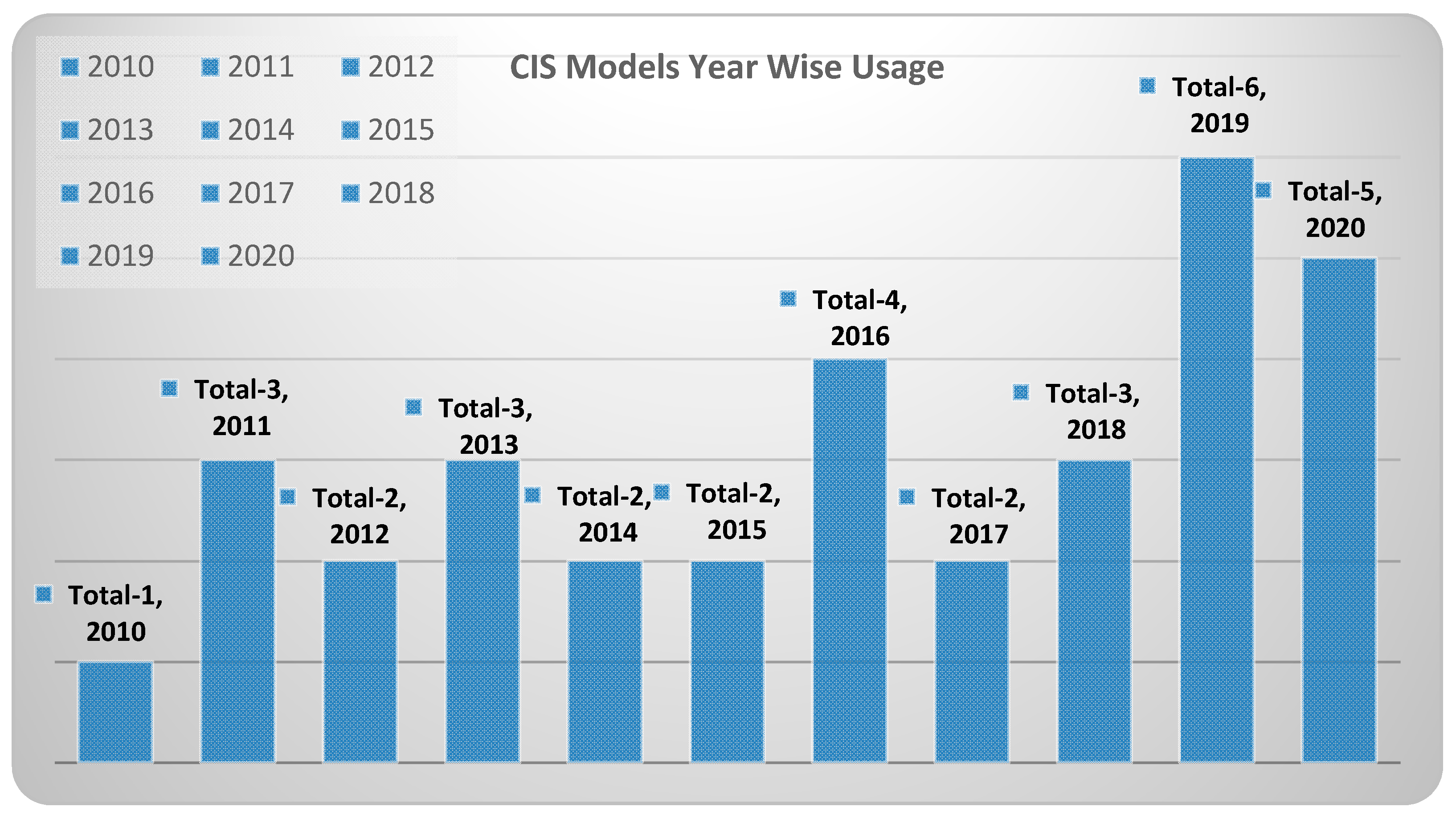

5.1. Camera Models

5.2. Future Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| CIS | CMOS Image Sensor |

| DR | Dynamic Range |

| SNR | Signal to Noise Ratio |

| FPS | Frames Per Second |

| dB | Decibel |

| mm | Millimeter |

| µm | Micrometer |

| V/lux·s | Volts per lux-second |

| CCD | Charge Coupled Devices |

| CMOS | Complementary Metal Oxide Semiconductor |

| IoT | Internet of Things |

| ISS | Intelligent Surveillance Systems |

| WSN | Wireless Sensor Networks |

| BSI | Back Side Illumination |

| WDR | Wide Dynamic Range |

Appendix A

| S. No. | Year | Camera Module | Technology | Resolution | SNR | Frame Rate | Dynamic Range | Application Name/Target | Field | Reference |
|---|---|---|---|---|---|---|---|---|---|---|

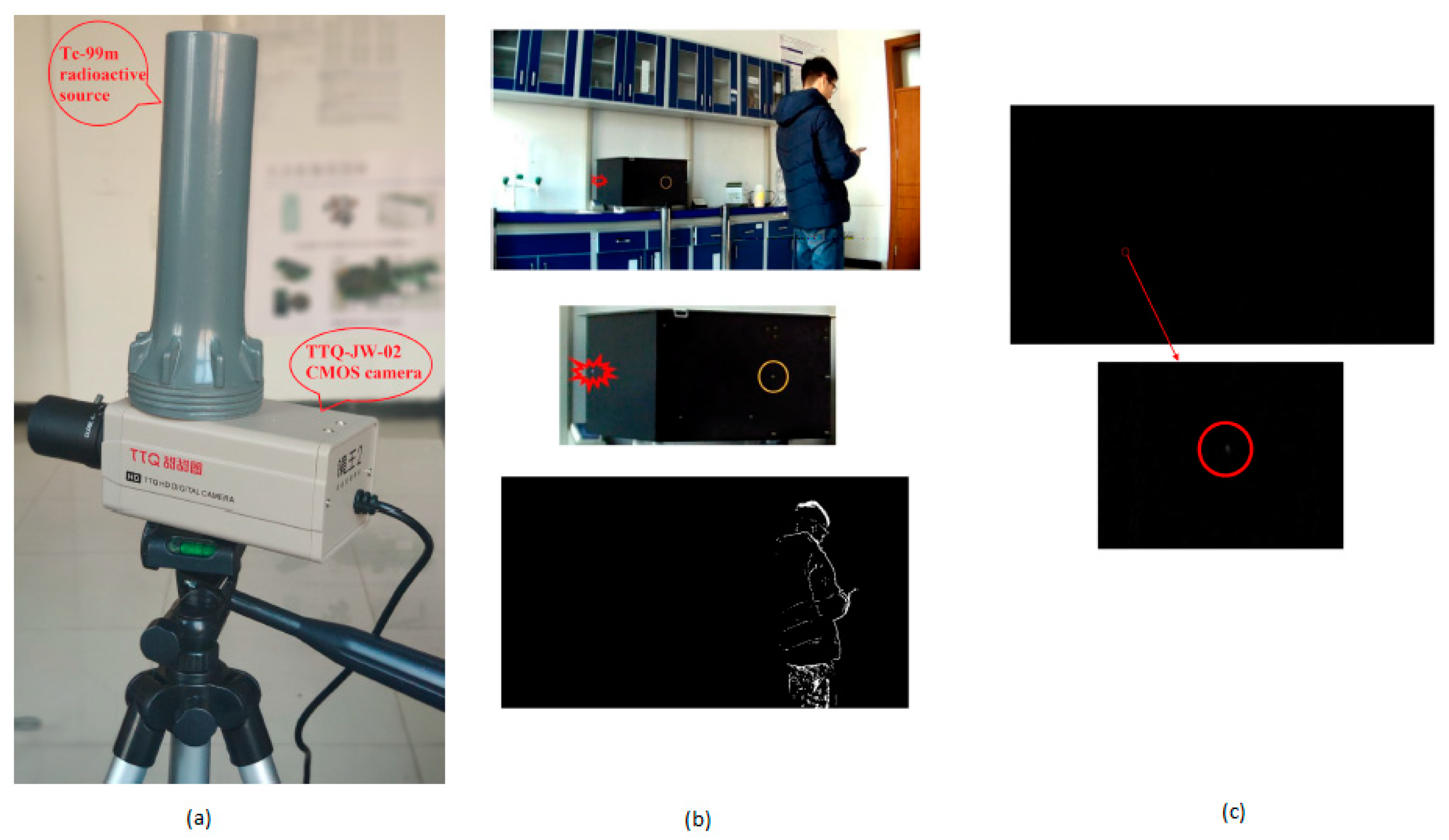

| 1 | 2020 | OV2710-1 E | -- | YES | YES | YES | YES | Nuclear Radiation Detection | ISS | Zhangfa Yan et al. [25] |

| 2 | 2020 | GS3-U3-23 S6 C-C | -- | YES | No | YES | No | Contactless Neonatal Pulse Rate Sensing | ISS | M. Paul et al. [26] |

| 3 | 2020 | OV9653 | -- | YES | YES | YES | YES | HODET | ISS | Joseph St. Cyr et al. [1] |

| 4 | 2020 | CIS2521 F | -- | YES | No | YES | YES | ASTERIA-A Space Telescope | Space | Mary Knapp et al. [45] |

| 5 | 2020 | MC1362 | -- | YES | No | YES | YES | Critical Part Detection of Reconnaissance Balloon | Military | Hanyu Hong et al. [57] |

| 6 | 2019 | OV2640 | -- | YES | YES | YES | YES | SMART HOME | IoT | Vivek Raj et al. [34] |

| 7 | 2019 | Sekonix SF3324-101 | -- | YES | No | No | No | CUbE | IoT | A. Hartmannsgruber et al. [35] |

| 8 | 2019 | ZTE Nubia UINX511 J | -- | YES | No | YES | No | Classroom Emotion with Cloud-Based Facial Recognizer | ISS | C. Boonroungrut et al. [23] |

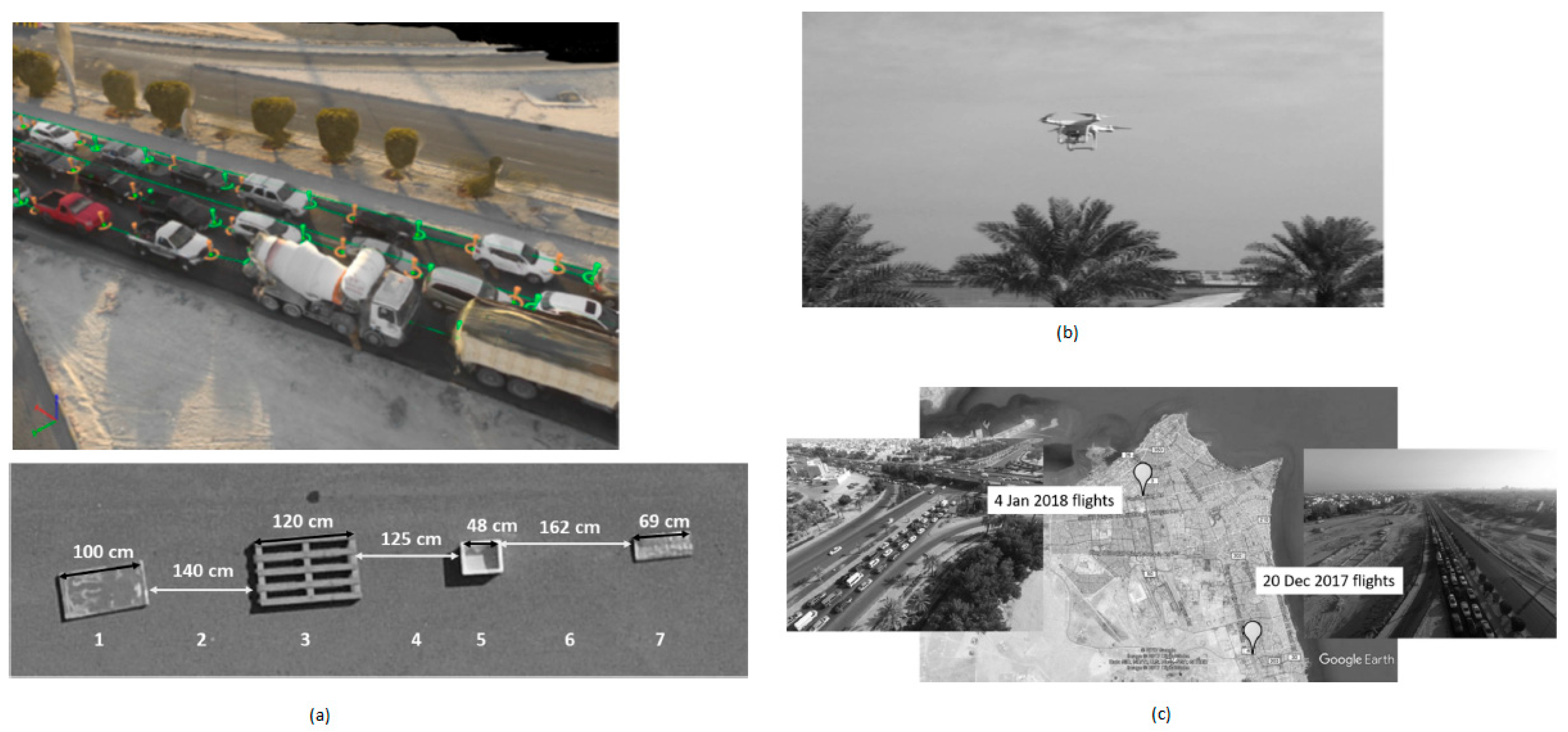

| 9 | 2019 | DJI PHANTOM 3 PRO | -- | YES | No | No | No | Vehicle Stacking Estimation | ISS | Brian S. Freeman et al. [24] |

| 10 | 2019 | -- | 0.11 µm | YES | YES | No | YES | Radiation Tolerant Sensor | Space | Woo-Tae Kim et al. [42] |

| 11 | 2019 | IMX 264 | -- | YES | No | YES | No | Nanospacecraft Asteroid Flybys | Space | Mihkel Pajusalu et al. [43] |

| 12 | 2019 | OV9630 | -- | YES | YES | YES | YES | Mezn Sat for monitoring Green House Gases | Space | Halim Jallad et al. [4] |

| 13 | 2019 | -- | 0.18 µm | YES | No | YES | No | Gun Muzzle Flash Detection System | Military | Alex Katz et al. [56] |

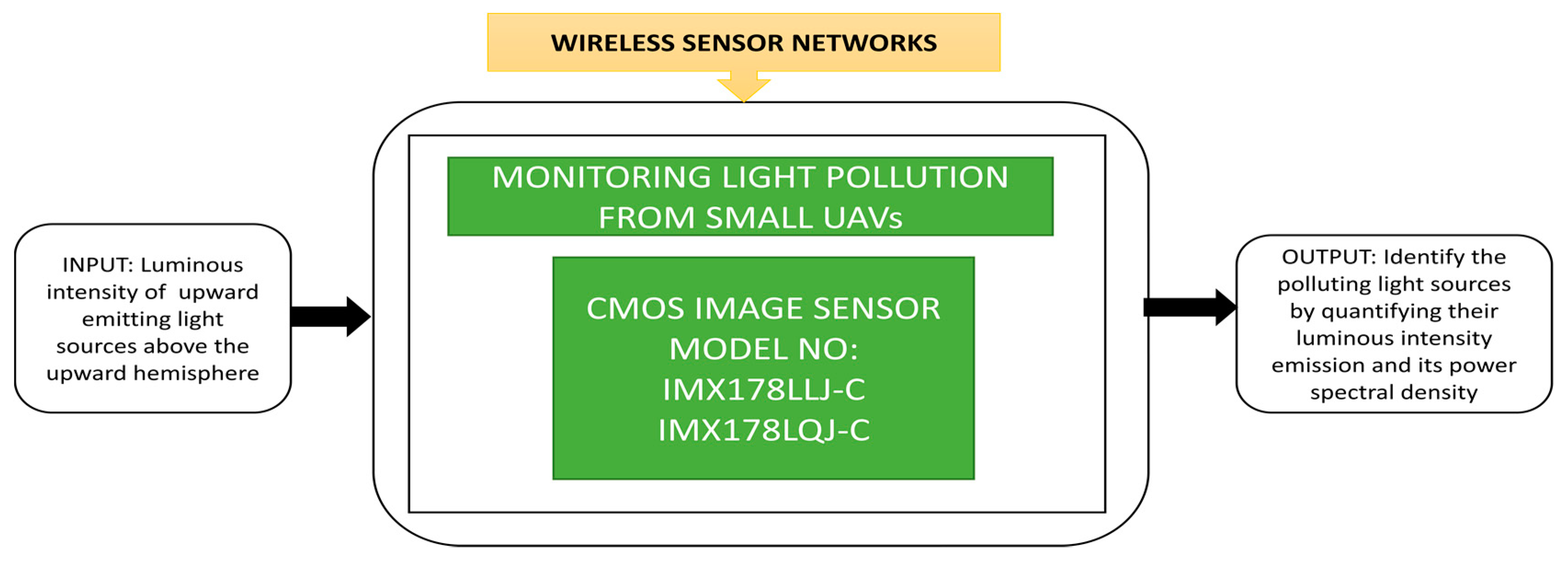

| 14 | 2019 | IMX178 LLJ,QJ-C | -- | YES | No | YES | No | Monitoring light pollution from small UAVs | WSN | Fiorentin et al. [76] |

| 15 | 2019 | ELP-USBFHD04 H-L170 | -- | YES | YES | YES | YES | SoilCam | WSN | Gazi Rahman et al. [77] |

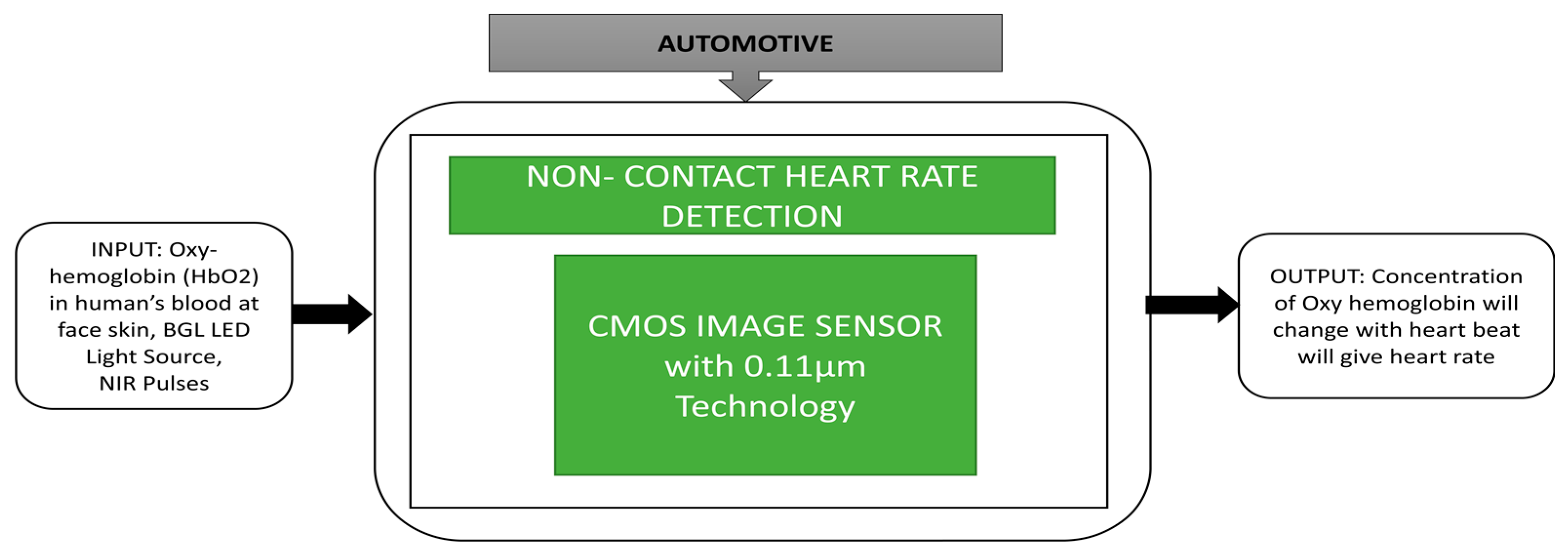

| 16 | 2018 | -- | 0.11 µm | YES | No | YES | No | Non Contact Heart rate Detection | Automotive | Chen Cao et al. [60] |

| 17 | 2018 | OV7725 | -- | YES | YES | YES | YES | Intelligent Car Path Tracking | Automotive | Zixin Mu et al. [68] |

| 18 | 2018 | OV7670 | -- | YES | YES | YES | YES | Precision Agriculture System Design | IoT | Arun M. Patokar et al. [33] |

| 19 | 2018 | -- | 0.09 µm | YES | No | YES | YES | Moving Object Detection With Pre-defined Areas | ISS | Oichi Kumagai et al. [22] |

| 20 | 2018 | CMV4000 | -- | YES | No | YES | YES | Cloud Monitoring Camera (CMC) System for Imaging Satellites | Space | Alpesh Vala et al. [41] |

| 21 | 2018 | ESN-0510 | -- | YES | No | YES | No | Sticky Bomb Detection | Military | Raed Majeed et al. [55] |

| 22 | 2017 | -- | -- | No | No | No | No | Early Flood Detection & Control Monitoring | IoT | T M Thekkil et al. [32] |

| 23 | 2017 | -- | 0.18 µm | YES | YES | YES | YES | Multi Resolution Mode | ISS | Daehyeok Kim et al. [21] |

| 24 | 2017 | MT9 M001 C12 STM | -- | YES | YES | YES | YES | Cube SAT Remote Sensing Imagers | Space | Dee W. Pack et al. [40] |

| 25 | 2017 | Flea3 | -- | YES | No | YES | YES | CARMA | Military | Shannon Johnson et al. [53] |

| 26 | 2016 | -- | 0.18 µm | YES | No | No | YES | Smart Image Sensor with Multi Point Tracking (MPT) | IoT | Chin Yin et al. [31] |

| 27 | 2016 | CMV20000 | -- | YES | YES | YES | YES | MARS 2020 Mission: EECAM | Space | McKinney et al. [39] |

| 28 | 2016 | MPT 50 | -- | YES | No | No | No | MWIR Detector for Missile Applications | Military | Ulas Kurum et al. [50] |

| 29 | 2016 | PHOTRON SA4 | -- | YES | YES | YES | No | IN-SITU High velocity Rifle Bullets | Military | J.D’Aries et al. [51] |

| 30 | 2016 | OV7670 | -- | YES | YES | YES | YES | Wireless Vision sensor | Military | Parivesh Pandey et al. [52] |

| 31 | 2015 | GUPPY-F036 C | -- | YES | No | YES | No | Traffic light Detection | Automotive | Moises Diaz et al. [67] |

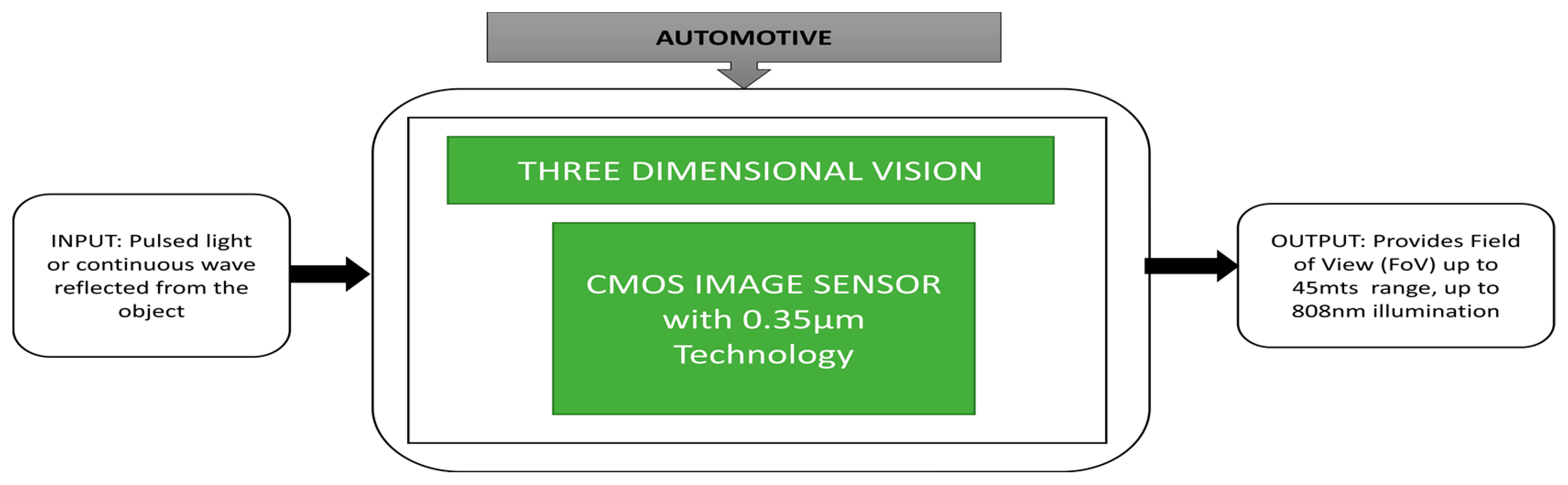

| 32 | 2015 | -- | 0.35 µm | YES | No | YES | YES | Three Dimensional Vision | Automotive | Danilo Bronzi et al. [64] |

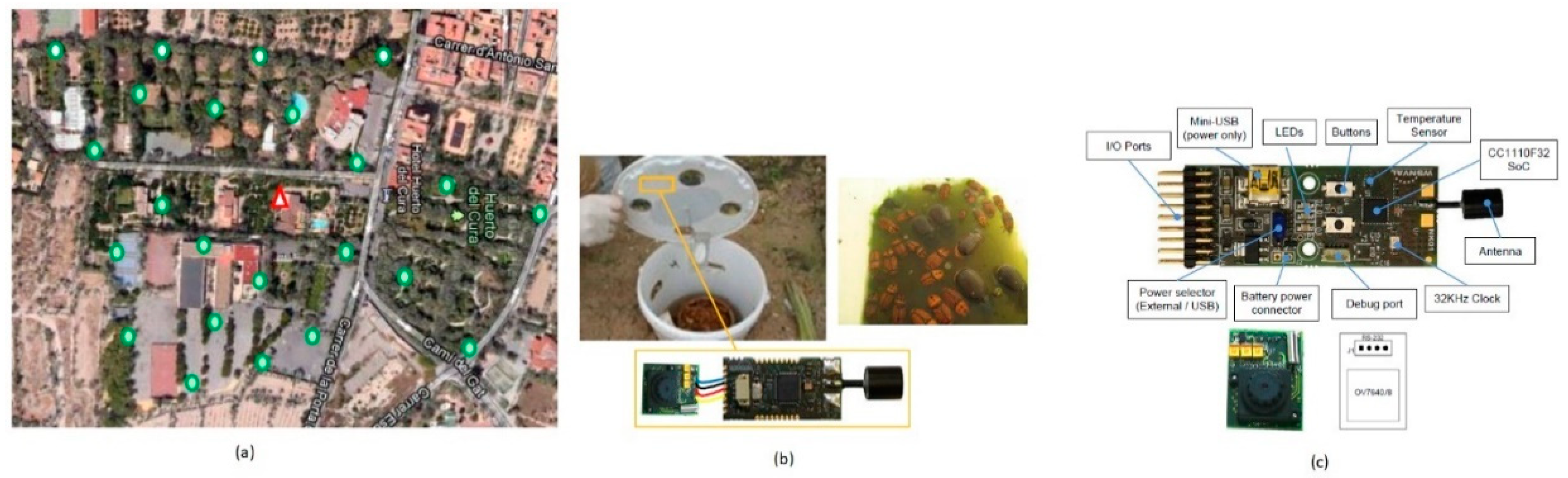

| 33 | 2015 | ucam-II | -- | YES | YES | No | YES | Visual surveillance and intrusion detection | ISS | Congduc Pham et al. [12] |

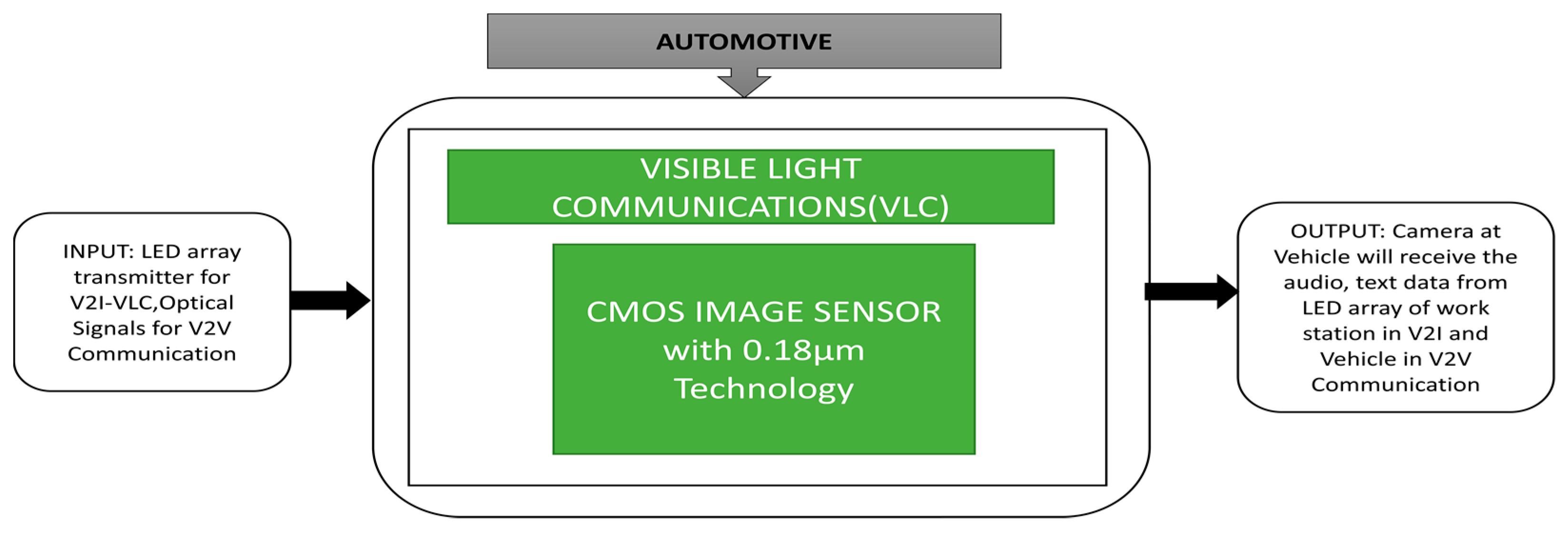

| 34 | 2014 | -- | 0.18 µm | YES | No | YES | No | Visible Light Communication | Automotive | Takaya Yamazato et al. [62] |

| 35 | 2014 | -- | -- | YES | No | No | No | Banpil Camera | Military | Patrick Oduor et al. [49] |

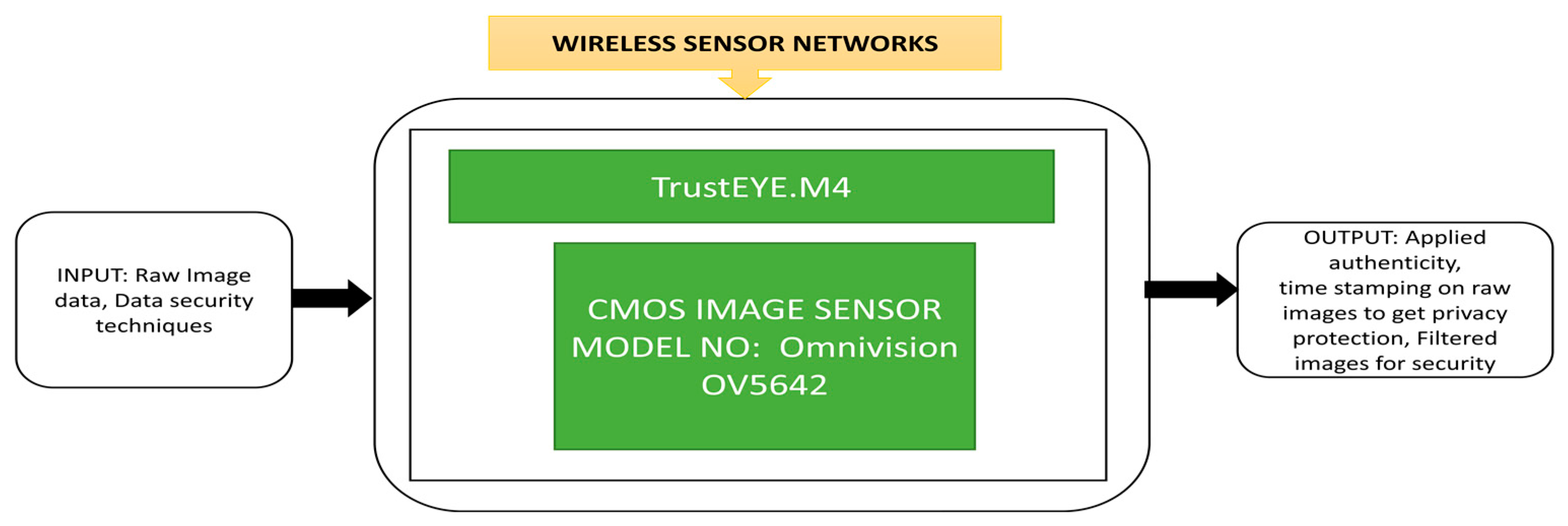

| 36 | 2014 | OV5642 | -- | YES | YES | YES | YES | TrustEYE.M4 | WSN | Thomas Winkler et al. [74] |

| 37 | 2014 | OV7725 | -- | YES | YES | YES | YES | Wild life Inventory | WSN | Luis Camacho et al. [75] |

| 38 | 2013 | -- | 0.18 µm | YES | No | YES | No | Optical Wireless Communication System | Automotive | Isamu Takai et al. [63] |

| 39 | 2013 | -- | 0.13 µm | YES | YES | No | YES | On-Screen-Display (OSD) | Automotive | Sheng Zhang et al. [59] |

| 40 | 2013 | VBM40 | -- | YES | No | YES | No | Human Monitoring System in Sea Transportation | IoT | Masakazu Arima et al. [3] |

| 41 | 2013 | OV9655 | -- | YES | No | YES | No | Smart Camera Networks (SCN) | IoT | Phoebus Chen et al. [30] |

| 42 | 2013 | -- | 0.35 µm | YES | No | No | No | Lightning Detection and Imaging | Space | Sebastien Rolando et al. [37] |

| 43 | 2013 | -- | 0.18 µm | YES | No | No | YES | STAR Tracking | Space | Xinyuan Qian et al. [38] |

| 44 | 2013 | OV7725 | -- | YES | YES | YES | YES | IPASS | Military | Adam Blumenau et al. [5] |

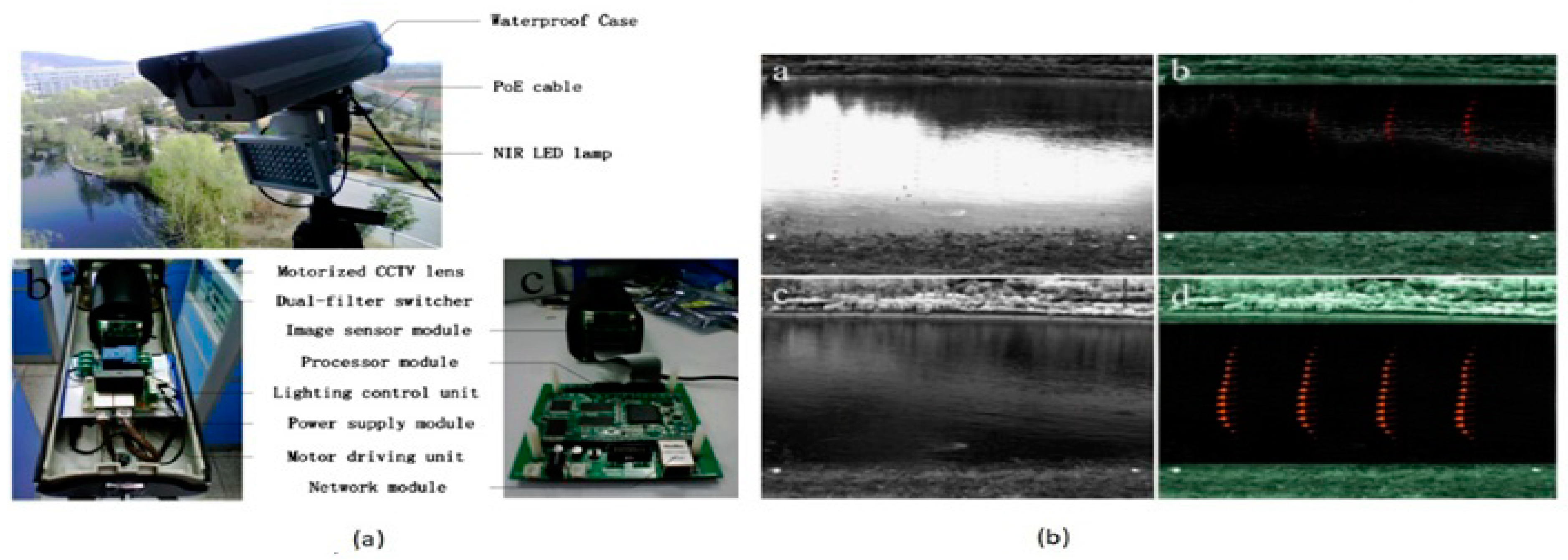

| 45 | 2013 | MT9 M001 | -- | YES | YES | YES | YES | River Surface Target Enhancement | WSN | Zhen Zhang et al. [73] |

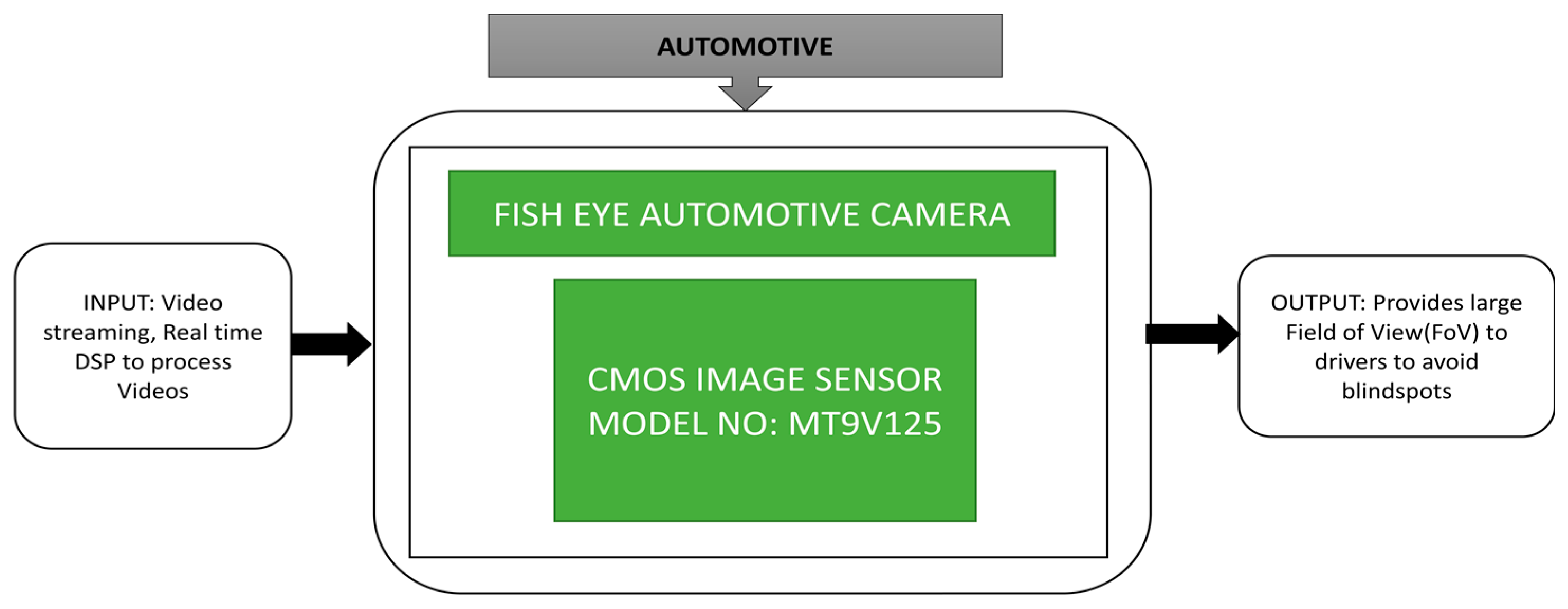

| 46 | 2012 | MT9 V125 | -- | YES | YES | YES | YES | Fish-Eye Automotive Camera | Automotive | Mauro Turturici et al. [2] |

| 47 | 2012 | -- | 0.18 µm | YES | No | YES | YES | Autonomous Micro Digital Sun Sensor | Space | Ning Xie et al. [36] |

| 48 | 2012 | -- | 0.6 µm | YES | No | No | No | Eco-Hydrological Monitoring | WSN | L. Luo et al. [71] |

| 49 | 2012 | C328-7640 | -- | YES | YES | YES | YES | Monitoring Pest Insect Traps | WSN | Otoniel Lopez et al. [72] |

| 50 | 2011 | -- | 0.18 µm | YES | YES | YES | YES | Night Vision Systems | Automotive | Arthur Spivak et al. [66] |

| 51 | 2011 | OV6620 | -- | YES | YES | YES | YES | Nilaparvata Lugens Monitoring System | IoT | Ken Cai et al. [27] |

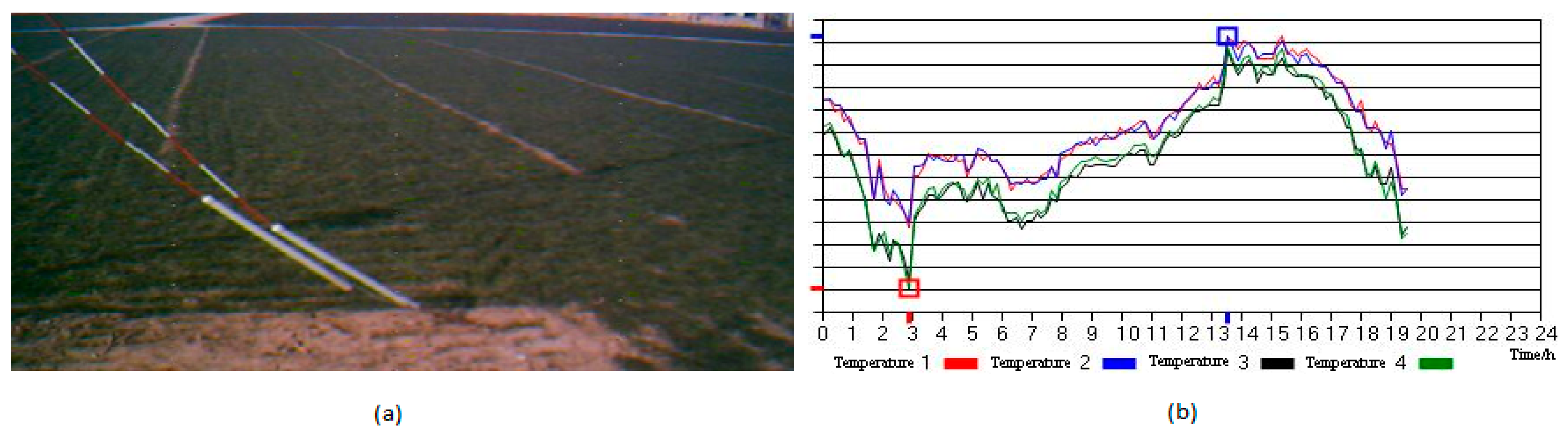

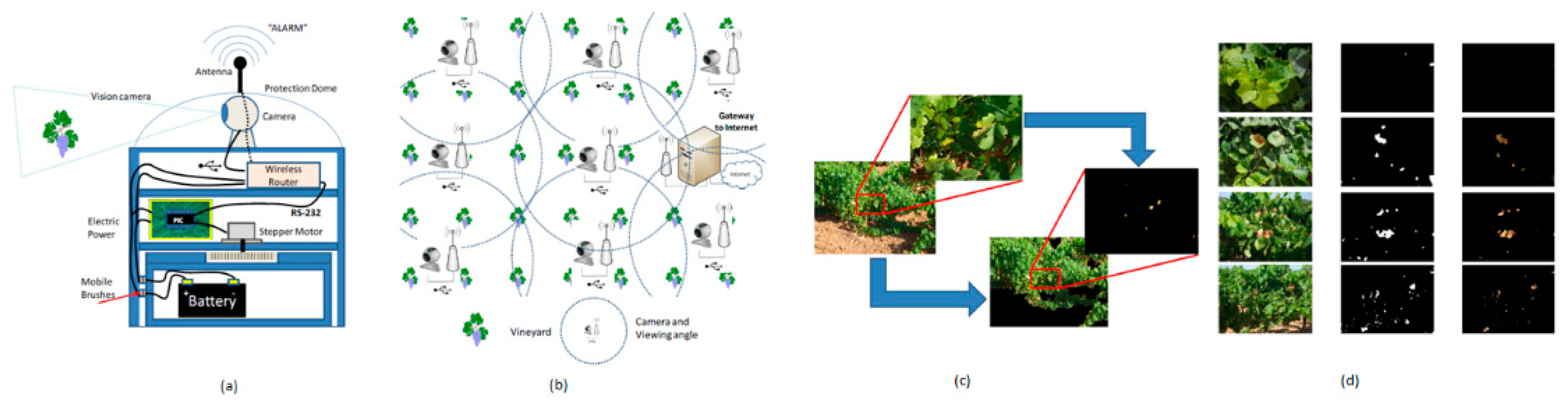

| 52 | 2011 | OV7640 | -- | YES | YES | YES | YES | Crop Monitoring System | IoT | Zhao Liqiang et al. [28] |

| 53 | 2011 | Hercules Webcam | -- | YES | No | YES | No | Vine Yard Monitoring | IoT | Jaime Lloret et al. [29] |

| 54 | 2011 | -- | 0.18 µm | YES | No | YES | No | On Chip Moving object Detection & Localization | WSN | Bo Zhao et al. [69] |

| 55 | 2011 | MT9 D131 | -- | YES | YES | YES | YES | MasliNET | WSN | Vana Jelicic et al. [70] |

| 56 | 2010 | -- | 0.18 µm | YES | No | YES | No | Surveillance in low crowded environments | ISS | Mehdi Habibi et al. [11] |

| 57 | 2010 | OV9653 | -- | YES | YES | YES | YES | Wireless Aerial Image System | Military | Li Zhang et al. [48] |

| 58 | 2010 | C328 R | -- | YES | No | No | No | Tigercense | WSN | Ravi Bagree et al. [6] |

| 59 | 2009 | -- | 0.35 µm | YES | No | YES | No | Built in lane Detection | Automotive | Pei-yung Hsiao et al. [58] |

| 60 | 2009 | Logitech Pro 9000 | -- | YES | No | YES | No | Privacy preserving sensor for Person Detection | ISS | Shota Nakashima et al. [10] |

| Year | Technology | Camera Module | Pixel Size (µm) | Resolution | Pixel Pitch (µm) | Area | Power (W/mW) | Dark Current (mV/s) | SNR (dB) | Conversion Gain (µV/e-) | Sensitivity (V/lux-s) | Frame Rate (fps) | Dynamic Range (dB) | Field | Application Name/Target |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2009 | N/A | Logitech Quickcam Pro 9000 | N/A | 1600 × 1200 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | 30 fps | N/A | ISS | Privacy preserving sensor for Person Detection |

| 2010 | 0.18 µm | N/A | 7.5 × 7.5 | 64 × 64 | 7.5 | 1 mm × 1 mm | 0.5 mw | N/A | N/A | N/A | N/A | 30 fps | N/A | ISS | Surveillance in low crowded areas |

| 2015 | N/A | ucam-II | 5.5 × 5.5 | 128 × 128 | 5.5 | 27.5 mm × 32.5 mm | N/A | 25.2 | 44.2 | N/A | 2.93 V/lux-s | N/A | 51 | ISS | Visual surveillance and intrusion detection |

| 2017 | 0.18 µm | N/A | 4.4 × 4.4 | 176 × 144 | 4.4 | 2.35 mm × 2.35 mm | 10 mw-HDR | N/A | 47 dB-HDR | N/A | -- | 14 fps | 61.8 | ISS | Multi Resolution Mode |

| 2018 | 0.09 µm | N/A | 1.5 × 1.5 | 2560 × 1536 | 1.5 | 4.48 mm × 4.48 mm | 95 mw | N/A | N/A | 55.8 | 8033 e-/lux-s | 60 fps | 67 | ISS | Moving Object Detection With Pre-defined Areas |

| 2019 | N/A | ZTE Nubia UINX511 J | N/A | 5344 × 3000 | N/A | 6.828 mm × 6.828 mm | N/A | N/A | N/A | N/A | N/A | 120 fps | N/A | ISS | Classroom Emotion with Cloud-Based Facial Recognizer |

| 2019 | N/A | DJI PHANTOM 3 PRO | N/A | 4000 × 3000 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | ISS | Vehicle Stacking Estimation |

| 2020 | N/A | OV2710-1 E | 3 × 3 | 1920 × 1080 | 3 | 5886 µm × 3276 µm | 350 mW | 20 | 40 | N/A | 3.7 | 30 fps | 69 | ISS | Nuclear Radiation Detection |

| 2020 | N/A | GS3-U3-23 S6 C-C | 5.86 × 5.86 | 1920 × 1200 | 5.86 | N/A | N/A | N/A | N/A | N/A | N/A | 162 fps | N/A | ISS | Contact less Neonatal Pulse Rate Sensing |

| 2020 | N/A | OV9653 | 3.18 × 3.18 | 1300 × 1028 | 3.18 | 4.13 mm × 3.28 mm | 50 mw | 30 | 40 | N/A | 0.9 | 15–120 fps | 62 | ISS | HODET |

| 2011 | N/A | OV6620 | 9.0 × 8.2 | 356 × 292 | 9.0 | 3.1 mm × 2.5 mm | <80 mw | <0.2 nA /cm2 | >48 | N/A | N/A | 60 fps | >72 | IoT | Nilaparvata Lugens Monitoring System |

| 2011 | N/A | OV7640 | 5.6 × 5.6 | 640 × 480 | 5.6 | 3.6 mm × 2.7 mm | 40 mw | 30 | 46 | N/A | 3.0-Black /Color-1.12 | 30 fps | 62 | IoT | Crop Monitoring System |

| 2011 | N/A | Hercules Webcam | N/A | 1280 × 960 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | 30 fps | N/A | IoT | Vine Yard Monitoring |

| 2013 | N/A | VBM40 | N/A | 1280 × 960 | N/A | 132 mm × 152 mm | N/A | N/A | N/A | N/A | 0.6 V/lux | 30 fps | N/A | IoT | Human Monitoring System in Sea Transportation |

| 2013 | N/A | OV9655 | 3.18 × 3.18 | 1280 × 1024 | 3.18 | 5145 µm × 6145 µm | 90 mw | N/A | N/A | N/A | N/A | 15 fps | N/A | IoT | Smart Camera Networks (SCN) |

| 2016 | 0.18 µm | N/A | 20 × 20 | 64 × 64 | 20 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | 96.7 | IoT | Smart Image Sensor with Multi Point Tracking (MPT) |

| 2017 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | IoT | Early Flood Detection & Control Monitoring |

| 2018 | N/A | OV7670 | 3.6 × 3.6 | 640 × 480 | 3.6 | 2.36 mm × 1.76 mm | N/A | 12 mv/s | 40 | N/A | 1.1 V/lux−sec | 30 fps | 52 | IoT | Precision Agriculture System Design |

| 2019 | N/A | OV2640 | 2.2 × 2.2 | 1600 × 1200 | 2.2 | 3590 µm × 2684 µm | 125 mW | 15 | 40 | N/A | 0.6 | 15 fps | 50 | IoT | SMART HOME |

| 2019 | N/A | Sekonix SF3324-101 | 3 × 3 | 1928 × 1208 | 3 | 26 mm × 26 mm | N/A | N/A | N/A | N/A | N/A | N/A | N/A | IoT | CUbE |

| 2012 | 0.18 µm | N/A | N/A | 368 × 368 | N/A | 5 mm × 5 mm | 21.34 mW @ Acquisition, 21.39 mW @ Tracking | N/A | N/A | N/A | N/A | 10 fps | 49.2 dB | Space | Autonomous Micro Digital Sun Sensor |

| 2013 | 0.35 µm | N/A | 60 × 60 | 256 × 256 | 60 | 17.8 mm × 17.8 mm | N/A | N/A | N/A | 5.7 | N/A | N/A | N/A | Space | Lightning Detection and Imaging |

| 2013 | 0.18 µm | N/A | 5 × 5 | 320 × 128 | 5 | 2.5 mm × 2.5 mm | 247 mW | 1537 fA | N/A | N/A | 0.25 | N/A | 126 dB | Space | STAR Tracking |

| 2016 | N/A | CMV20000 | 6.4 × 6.4 | 5120 × 3840 | 6.4 | 32.77 mm × 24.58 mm | <3 W | 125 e-/s | 41.8 dB | 0.25 | N/A | 0.45 fps | 66 dB | Space | MARS 2020 Mission: EECAM |

| 2017 | N/A | MT9 M001 C12 STM | 5.2 × 5.2 | 1280 × 1024 | 5.2 | 6.6 mm × 5.32 mm | 363 mW | N/A | 45 dB | N/A | 2.1 | 30 fps | 68.2 dB | Space | Cube SAT Remote Sensing Imagers |

| 2018 | N/A | CMV4000 | 5.5 × 5.5 | 2048 × 2048 | 5.5 | N/A | 650 mw | 125 e-/s | N/A | 0.075 LSB/e- | 5.56 | 180 fps | 60 dB | Space | Cloud Monitoring Camera (CMC) System for Imaging Satellites |

| 2019 | 0.11 µm | N/A | 6.5 × 6.5 | 3000 × 3000 | 6.5 | 22 mm × 22 mm | N/A | N/A | 45 dB | 8.55 | N/A | N/A | 72.4 dB | Space | Radiation Tolerant Sensor |

| 2019 | N/A | IMX 264 | 3.45 × 3.45 | 2464 × 2056 | 3.45 | N/A | N/A | N/A | N/A | N/A | 0.915 | 60 fps | N/A | Space | Nanospacecraft Asteroid Flybys |

| 2019 | N/A | OV9630 | 4.2 × 4.2 | 1280 × 1024 | 4.2 | 5.4 mm × 4.3 mm | 150 mW | 28 mv | 54 dB | N/A | 1 | 15 fps | 60 dB | Space | Mezn Sat for monitoring Green House Gases |

| 2020 | N/A | CIS2521 F | 6.5 × 6.5 | 2560 × 2160 | 6.5 | N/A | N/A | 35 e-/s | N/A | N/A | N/A | 100 fps | >86 dB | Space | ASTERIA-A Space Telescope |

| 2010 | N/A | OV9653 | 3.18 × 3.18 | 1300 × 1028 | 3.18 | 4.13 mm × 3.28 mm | 50 mw | 30 | 40 dB | N/A | 0.9 | 30 fps | 62 dB | Military | Wireless Aerial Image System |

| 2013 | N/A | OV7725 | 6.0 × 6.0 | 640 × 480 | 6 | 3984 µm × 2952 µm | 120 mW | 40 mV/s | 50 dB | N/A | 3 | 60 fps | 60 dB | Military | IPASS |

| 2014 | N/A | N/A | N/A | 640 × 512 | N/A | 9.6 mm × 7.7 mm | N/A | N/A | N/A | N/A | N/A | N/A | N/A | Military | Banpil Camera |

| 2016 | N/A | MPT 50 | 15 × 15 | 640 × 512 | 15 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | Military | MWIR Detector for Missile Applications |

| 2016 | N/A | PHOTRON SA4 | 20 × 20 | 1024 × 1024 | 20 | 160 mm × 153 mm | N/A | N/A | 80 dB | N/A | N/A | 3600 fps | N/A | Military | IN-SITU High velocity Rifle Bullets |

| 2016 | N/A | OV7670 | 3.6 × 3.6 | 640 × 480 | 3.6 | 2.36 mm × 1.76 mm | 60 mW | 12 | 46 dB | N/A | 1.3 | 15 fps | 52 dB | Military | Wireless Vision sensor |

| 2017 | N/A | Flea3 | 1.55 × 1.55 | 4000 × 3000 | 1.55 | 29 mm × 29 mm | N/A | N/A | N/A | N/A | N/A | 15 fps | 66.46 dB | Military | CARMA |

| 2018 | N/A | ESN-0510 | N/A | 640 × 480 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | 30 fps | N/A | Military | Sticky Bomb Detection |

| 2019 | 0.18 µm | N/A | 54 × 54 | 64 × 64 | 54 | 5 mm × 5.5 mm | N/A | N/A | N/A | N/A | N/A | 200 kfps | N/A | Military | Gun Muzzle Flash Detection System |

| 2020 | N/A | MC1362 | 14 × 14 | 1280 × 1024 | 14 | 17.92 mm × 14.34 mm | N/A | 0.2 | N/A | N/A | 25 | 200 Hz | 90 dB | Military | Critical Part Detection of Reconnaissance Balloon |

| 2009 | 0.35 µm | N/A | 13.1 × 22.05 | 64 × 64 | N/A | 2194.4 µm × 2389.8 µm | 159.4 mw | N/A | N/A | N/A | N/A | 10 fps | N/A | Automotive | Built in lane Detection |

| 2011 | 0.18 µm | N/A | 13.75 × 13.75 | 128 × 256 | 8.5 | N/A | 10 mW | 0.1 fA | 51 dB | 72 | N/A | 60 fps | 98 dB | Automotive | Night Vision Systems |

| 2012 | N/A | MT9 V125 | 5.6 × 5.6 | 720 × 480 | 5.6 | 3.63 mm × 2.78 mm | 320 mw | N/A | 39 dB | N/A | N/A | 30 fps | 70 dB | Automotive | Fish-Eye Automotive Camera |

| 2013 | 0.18 µm | N/A | 7.5 × 7.5 | 642 × 480 | 7.5 | 7.5 mm × 8.0 mm | N/A | N/A | N/A | N/A | N/A | 30 fps | N/A | Automotive | Optical Wireless Communication System |

| 2013 | 0.13 µm | N/A | 6.0 × 6.0 | 768 × 576 | 6 | N/A | N/A | N/A | 45 dB | 95 | 4.8 V/lux-sec | N/A | 70 dB | Automotive | On-Screen-Display (OSD) |

| 2014 | 0.18 µm | N/A | 7.5 × 7.5 | 642 × 480 | 7.5 | 7.5 mm × 8.0 mm | N/A | N/A | N/A | N/A | N/A | 60 fps | N/A | Automotive | Visible Light Communication |

| 2015 | N/A | GUPPY-F036 C | 6.0 × 6.0 | 752 × 480 | 6 | N/A | N/A | N/A | N/A | N/A | N/A | 64 fps | N/A | Automotive | Traffic light Detection |

| 2015 | 0.35 µm | N/A | 150 × 150 | 64 × 32 | 150 | N/A | 4 W | N/A | N/A | N/A | N/A | 100 fps | 110 dB | Automotive | Three-Dimensional Vision |

| 2018 | 0.11 µm | N/A | 7.1 × 7.1 | 1280 × 1024 | 7.1 | 12.6 mm × 14.8 mm | N/A | N/A | N/A | 99.2 | 134.8 ke-/lux-s | 30 fps | N/A | Automotive | Non-Contact Heart Rate Detection |

| 2018 | N/A | OV7725 | 6.0 × 6.0 | 640 × 480 | 6 | 3984 µm × 2952 µm | 120 mW | 40 mv/s | 50 dB | N/A | 3.8 V/lux-sec | 60 fps | 60 dB | Automotive | Intelligent Car Path Tracking |

| 2010 | N/A | C328 R | N/A | 640 × 480 | 2 | 20 mm × 28 mm | N/A | N/A | N/A | N/A | N/A | N/A | N/A | WSN | Tigercense |

| 2011 | 0.18 µm | N/A | 14 × 14 | 64 × 64 | 14 | 1.5 mm × 1.5 mm | 0.4 mw | 6.7 fA | N/A | N/A | 0.11 V/lux-s | 100 fps | N/A | WSN | On Chip Moving object Detection & localization |

| 2011 | N/A | MT9 D131 | 2.8 × 2.8 | 1600 × 1200 | 2.8 | 4.73 mm × 3.52 mm | 348 mw | N/A | 42.3 dB | N/A | 1.0 V/lux-sec | 15 fps | 71 dB | WSN | MasliNET |

| 2012 | 0.6 µm | N/A | 24 × 24 | 384 × 288 | 24 | 11.5 mm × 7.7 mm | 150 mW | N/A | N/A | N/A | N/A | N/A | N/A | WSN | Eco-Hydrological Monitoring |

| 2012 | N/A | C328-7640 | 5.6 × 5.6 | 640 × 480 | 5.6 | 3.6 mm × 2.7 mm | 40 mw | 30 mv/s | 46 dB | N/A | 3.0 V/lux-s --B&W, 1.12 V/Lux-S --Colour | 30 fps | 62 dB | WSN | Monitoring Pest Insect Traps |

| 2013 | N/A | MT9 M001 | 5.2 × 5.2 | 1280 × 1024 | 5.2 | N/A | 325 mw | 20–30 e-s | >45 dB | N/A | 1.8 v/lux-sec | 30 fps | >62 dB | WSN | River Surface Target Enhancement |

| 2014 | N/A | OV5642 | 1.4 × 1.4 | 2592 × 1944 | 1.4 | 3673.6 µm × 2738.4 µm | N/A | N/A | 50 dB | N/A | 0.6 V/lux-sec | 15 fps | 40 dB | WSN | TrustEYE.M4 |

| 2014 | N/A | OV7725 | 6 × 6 | 640 × 480 | 6 | 3984 µm × 2952 µm | 120 mW | 40 mv/s | 50 dB | N/A | 3.8 V/lux-sec | 60 fps | 60 dB | WSN | Wild life Inventory |

| 2019 | N/A | IMX178 LLJ-C IMX178 LQJ-C | 2.4 × 2.4 | 3088 × 2064 | 2.4 | 8.92 mm × 8.92 mm | N/A | N/A | N/A | N/A | 0.38, 0.425 | 60 fps | N/A | WSN | Monitoring light pollution from small UAVs |

| 2019 | N/A | ELP-USBFHD04 H-L170 | 2.2 × 2.2 | 1920 × 1080 | 2.2 | 32 mm × 32 mm | N/A | N/A | 39 dB | N/A | 1.9 v/lux-sec | 30 fps | 72.4 dB | WSN | SoilCam |
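The decibel and resolution columns above are easier to compare once converted to linear ratios and pixel counts. The following sketch assumes that the dynamic range and SNR figures use the 20·log10 convention that is common for image sensors (the table itself does not state this), and the helper names `db_to_ratio`, `ratio_to_db`, and `megapixels` are illustrative. The three sample rows are copied from the table.

```python
import math


def db_to_ratio(db: float) -> float:
    """Convert a decibel figure to a linear ratio, assuming 20*log10 scaling."""
    return 10 ** (db / 20)


def ratio_to_db(ratio: float) -> float:
    """Inverse of db_to_ratio; the ratio must be positive."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return 20 * math.log10(ratio)


def megapixels(width: int, height: int) -> float:
    """Total pixel count in millions for a width x height array."""
    return width * height / 1e6


# (camera module, width, height, dynamic range in dB), taken from the table.
rows = [
    ("OV7725", 640, 480, 60.0),
    ("OV2710-1 E", 1920, 1080, 69.0),
    ("CMV20000", 5120, 3840, 66.0),
]

for name, width, height, dr_db in rows:
    print(
        f"{name}: {megapixels(width, height):.2f} MP, "
        f"dynamic range about {db_to_ratio(dr_db):.0f}:1"
    )
```

Because the scale is logarithmic, each additional 20 dB of dynamic range corresponds to a tenfold increase in the ratio between the largest and smallest resolvable signal.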

References

- Cyr, J.S.; Vanderpool, J.; Chen, Y.; Li, X. HODET: Hybrid object detection and tracking using mmWave radar and visual sensors. In Sensors and Systems for Space Applications XIII; International Society for Optics and Photonics: Bellingham, WA, USA, 2020; p. 114220I.

- Turturici, M.; Saponara, S.; Fanucci, L.; Franchi, E. Low-power embedded system for real-time correction of fish-eye automotive cameras. In Proceedings of the 2012 Design, Automation & Test in Europe Conference & Exhibition (DATE), Dresden, Germany, 12–16 March 2012; pp. 340–341.

- Arima, M.; Kii, S. Development of an Autonomous Human Monitoring System for Preventative Safety in Sea Transportation. In Proceedings of the International Conference on Offshore Mechanics and Arctic Engineering, Nantes, France, 9–14 June 2013; p. V02AT02A040.

- Jallad, A.-H.; Marpu, P.; Abdul Aziz, Z.; Al Marar, A.; Awad, M. MeznSat—A 3U CubeSat for Monitoring Greenhouse Gases Using Short Wave Infra-Red Spectrometry: Mission Concept and Analysis. Aerospace 2019, 6, 118.

- Blumenau, A.; Ishak, A.; Limone, B.; Mintz, Z.; Russell, C.; Sudol, A.; Linton, R.; Lai, L.; Padir, T.; Van Hook, R. Design and implementation of an intelligent portable aerial surveillance system (ipass). In Proceedings of the 2013 IEEE Conference on Technologies for Practical Robot Applications (TePRA), Woburn, MA, USA, 22–23 April 2013; pp. 1–6.

- Bagree, R.; Jain, V.R.; Kumar, A.; Ranjan, P. Tigercense: Wireless image sensor network to monitor tiger movement. In Proceedings of the International Workshop on Real-world Wireless Sensor Networks, Colombo, Sri Lanka, 16–17 December 2010; pp. 13–24.

- Ohta, J. Smart CMOS Image Sensors and Applications; CRC Press: Boca Raton, FL, USA, 2020.

- Bigas, M.; Cabruja, E.; Forest, J.; Salvi, J. Review of CMOS image sensors. Microelectron. J. 2006, 37, 433–451.

- El Gamal, A.; Eltoukhy, H. CMOS image sensors. IEEE Circuits Devices Mag. 2005, 21, 6–20.

- Nakashima, S.; Kitazono, Y.; Zhang, L.; Serikawa, S. Development of privacy-preserving sensor for person detection. Procedia-Soc. Behav. Sci. 2010, 2, 213–217.

- Habibi, M. A low power smart CMOS image sensor for surveillance applications. In Proceedings of the 2010 6th Iranian Conference on Machine Vision and Image Processing, Isfahan, Iran, 27–28 October 2010; pp. 1–4.

- Pham, C. Low cost wireless image sensor networks for visual surveillance and intrusion detection applications. In Proceedings of the 2015 IEEE 12th International Conference on Networking, Sensing and Control, Taipei, Taiwan, 9–11 April 2015; pp. 376–381.

- Rahimi, M.; Baer, R.; Iroezi, O.I.; Garcia, J.C.; Warrior, J.; Estrin, D.; Srivastava, M. Cyclops: In situ image sensing and interpretation in wireless sensor networks. In Proceedings of the 3rd International Conference on Embedded Networked Sensor Systems, SenSys05, San Diego, CA, USA, 2–5 November 2005; pp. 192–204.

- Chen, P.; Ahammad, P.; Boyer, C.; Huang, S.-I.; Lin, L.; Lobaton, E.; Meingast, M.; Oh, S.; Wang, S.; Yan, P. CITRIC: A low-bandwidth wireless camera network platform. In Proceedings of the 2008 Second ACM/IEEE International Conference on Distributed Smart Cameras, Stanford, CA, USA, 7–11 September 2008; pp. 1–10.

- Evidence Embedding Technology, Seed-Eye Board, a Multimedia Wsn Device. Available online: http://rtn.sssup.it/index.php/hardware/seed-eye (accessed on 20 December 2013).

- Feng, W.-C.; Kaiser, E.; Feng, W.C.; Baillif, M.L. Panoptes: Scalable low-power video sensor networking technologies. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2005, 1, 151–167.

- Paniga, S.; Borsani, L.; Redondi, A.; Tagliasacchi, M.; Cesana, M. Experimental evaluation of a video streaming system for wireless multimedia sensor networks. In Proceedings of the 2011 The 10th IFIP Annual Mediterranean Ad Hoc Networking Workshop, Sicily, Italy, 12–15 June 2011; pp. 165–170.

- Rowe, A.; Goel, D.; Rajkumar, R. Firefly mosaic: A vision-enabled wireless sensor networking system. In Proceedings of the 28th IEEE International Real-Time Systems Symposium (RTSS 2007), Tucson, AZ, USA, 3–6 December 2007; pp. 459–468.

- Rodríguez-Vázquez, Á.; Domínguez-Castro, R.; Jiménez-Garrido, F.; Morillas, S.; Listán, J.; Alba, L.; Utrera, C.; Espejo, S.; Romay, R. The Eye-RIS CMOS vision system. In Analog Circuit Design; Springer: Berlin/Heidelberg, Germany, 2008; pp. 15–32.

- Kleihorst, R.; Abbo, A.; Schueler, B.; Danilin, A. Camera mote with a high-performance parallel processor for real-time frame-based video processing. In Proceedings of the 2007 First ACM/IEEE International Conference on Distributed Smart Cameras, Vienna, Austria, 25–28 September 2007; pp. 109–116.

- Kim, D.; Song, M.; Choe, B.; Kim, S.Y. A multi-resolution mode CMOS image sensor with a novel two-step single-slope ADC for intelligent surveillance systems. Sensors 2017, 17, 1497.

- Kumagai, O.; Niwa, A.; Hanzawa, K.; Kato, H.; Futami, S.; Ohyama, T.; Imoto, T.; Nakamizo, M.; Murakami, H.; Nishino, T. A 1/4-inch 3.9 Mpixel low-power event-driven back-illuminated stacked CMOS image sensor. In Proceedings of the 2018 IEEE International Solid-State Circuits Conference-(ISSCC), San Francisco, CA, USA, 11–15 February 2018; pp. 86–88.

- Boonroungrut, C.; Oo, T.T. Exploring Classroom Emotion with Cloud-Based Facial Recognizer in the Chinese Beginning Class: A Preliminary Study. Int. J. Instr. 2019, 12, 947–958.

- Freeman, B.S.; Al Matawah, J.A.; Al Najjar, M.; Gharabaghi, B.; Thé, J. Vehicle stacking estimation at signalized intersections with unmanned aerial systems. Int. J. Transp. Sci. Technol. 2019, 8, 231–249.

- Yan, Z.; Wei, Q.; Huang, G.; Hu, Y.; Zhang, Z.; Dai, T. Nuclear radiation detection based on uncovered CMOS camera under dynamic scene. Nucl. Instrum. Methods Phys. Res. Sect. A Accel. Spectrometers Detect. Assoc. Equip. 2020, 956, 163383.

- Paul, M.; Karthik, S.; Joseph, J.; Sivaprakasam, M.; Kumutha, J.; Leonhardt, S.; Antink, C.H. Non-contact sensing of neonatal pulse rate using camera-based imaging: A clinical feasibility study. Physiol. Meas. 2020, 41, 024001.

- Cai, K.; Wu, X.; Liang, X.; Wang, K. Hardware Design of Sensor Nodes in the Nilaparvata Lugens Monitoring System Based on the Internet of Things. In Advanced Electrical and Electronics Engineering; Springer: Berlin/Heidelberg, Germany, 2011; pp. 571–578.

- Liqiang, Z.; Shouyi, Y.; Leibo, L.; Zhen, Z.; Shaojun, W. A crop monitoring system based on wireless sensor network. Procedia Environ. Sci. 2011, 11, 558–565.

- Lloret, J.; Bosch, I.; Sendra, S.; Serrano, A. A wireless sensor network for vineyard monitoring that uses image processing. Sensors 2011, 11, 6165–6196.

- Chen, P.; Hong, K.; Naikal, N.; Sastry, S.S.; Tygar, D.; Yan, P.; Yang, A.Y.; Chang, L.-C.; Lin, L.; Wang, S. A low-bandwidth camera sensor platform with applications in smart camera networks. ACM Trans. Sens. Netw. 2013, 9, 1–23.

- Yin, C.; Chiu, C.-F.; Hsieh, C.-C. A 0.5 V, 14.28-kframes/s, 96.7-dB smart image sensor with array-level image signal processing for IoT applications. IEEE Trans. Electron Devices 2016, 63, 1134–1140.

- Thekkil, T.M.; Prabakaran, N. Real-time WSN based early flood detection and control monitoring system. In Proceedings of the 2017 International Conference on Intelligent Computing, Instrumentation and Control Technologies (ICICICT), Kannur, India, 6–7 July 2017; pp. 1709–1713.

- Patokar, A.M.; Gohokar, V.V. Precision agriculture system design using wireless sensor network. In Information and Communication Technology; Springer: Berlin/Heidelberg, Germany, 2018; pp. 169–177.

- Raj, V.; Chandran, A.; RS, A. IoT Based Smart Home Using Multiple Language Voice Commands. In Proceedings of the 2019 2nd International Conference on Intelligent Computing, Instrumentation and Control Technologies (ICICICT), Kerala, India, 5–6 July 2019; pp. 1595–1599.

- Hartmannsgruber, A.; Seitz, J.; Schreier, M.; Strauss, M.; Balbierer, N.; Hohm, A. CUbE: A Research Platform for Shared Mobility and Autonomous Driving in Urban Environments. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 2315–2322.

- Xie, N.; Theuwissen, A.J. An autonomous microdigital sun sensor by a cmos imager in space application. IEEE Trans. Electron Devices 2012, 59, 3405–3410.

- Rolando, S.; Goiffon, V.; Magnan, P.; Corbière, F.; Molina, R.; Tulet, M.; Bréart-de-Boisanger, M.; Saint-Pé, O.; Guiry, S.; Larnaudie, F. Smart CMOS image sensor for lightning detection and imaging. Appl. Opt. 2013, 52, C16–C23.

- Qian, X.; Yu, H.; Chen, S.; Low, K.S. An adaptive integration time CMOS image sensor with multiple readout channels. IEEE Sens. J. 2013, 13, 4931–4939.

- Maki, J.; McKinney, C.; Sellar, R.; Copley-Woods, D.; Gruel, D.; Nuding, D. Enhanced Engineering Cameras (EECAMs) for the Mars 2020 Rover. In Proceedings of the 3rd International Workshop on Instrumentation for Planetary Mission, Pasadena, CA, USA, 24–27 October 2016; Volume 1980, p. 4132. Available online: http://adsabs.harvard.edu/abs/2016LPICo1980M (accessed on 20 September 2020).

- Pack, D.; Ardila, D.; Herman, E.; Rowen, D.; Welle, R.; Wiktorowicz, S.; Hattersley, B. Two Aerospace Corporation CubeSat remote Sensing Imagers: CUMULOS and R3. Available online: https://digitalcommons.usu.edu/smallsat/2017/all2017/82/ (accessed on 20 September 2020).

- Vala, A.; Patel, A.; Gosai, R.; Chaudharia, J.; Mewada, H.; Mahant, K. A low-cost and efficient cloud monitoring camera system design for imaging satellites. Int. J. Remote Sens. 2019, 40, 2739–2758. [Google Scholar]

- Kim, W.-T.; Park, C.; Lee, H.; Lee, I.; Lee, B.-G. A high full well capacity CMOS image sensor for space applications. Sensors 2019, 19, 1505. [Google Scholar]

- Pajusalu, M.; Slavinskis, A. Characterization of Asteroids Using Nanospacecraft Flybys and Simultaneous Localization and Mapping. In Proceedings of the 2019 IEEE Aerospace Conference, Big Sky, MT, USA, 2–9 March 2019; pp. 1–9. [Google Scholar]

- The CubeSat Program. CubeSat Design Specification Rev. 13. California Polytechnic State University. 2014. Available online: http://www.cubesat.org/s/cds_rev13_final2.pdf (accessed on 16 May 2019).

- Knapp, M.; Seager, S.; Demory, B.-O.; Krishnamurthy, A.; Smith, M.W.; Pong, C.M.; Bailey, V.P.; Donner, A.; Di Pasquale, P.; Campuzano, B. Demonstrating high-precision photometry with a CubeSat: ASTERIA observations of 55 Cancrie. Astron. J. 2020, 160, 23. [Google Scholar]

- Ricker, G.; Winn, J. Transiting Exoplanet Survey Satellite. J. Astron. Telesc. Instrum. Syst. 2014, 1, 014003. [Google Scholar] [CrossRef]

- Catala, C.; Appourchaux, T.; Consortium, P.M. PLATO: PLAnetary Transits and Oscillations of stars. In Proceedings of the Journal of Physics, Conference Series, Aix-en-Provence, France, 27 June–2 July 2010; p. 012084. [Google Scholar]

- Zhang, L.; Liu, C.; Qian, G. The portable wireless aerial image transmission system based on DSP. In Proceedings of the 2010 International Conference on Microwave and Millimeter Wave Technology, Chengdu, China, 8–11 May 2010; pp. 1591–1594.

- Oduor, P.; Mizuno, G.; Olah, R.; Dutta, A.K. Development of low-cost high-performance multispectral camera system at Banpil. In Proceedings of the Image Sensing Technologies, Materials, Devices, Systems, and Applications, Baltimore, MD, USA, 11 June 2014; p. 910006.

- Kürüm, U. Scenario-based analysis of binning in MWIR detectors for missile applications. In Proceedings of the Infrared Imaging Systems, Design, Analysis, Modeling, and Testing XXVII, Baltimore, MD, USA, 3 May 2016; p. 98200O.

- Lawrence, J.; Miller, S.R.; Robertson, R.; Singh, B.; Nagarkar, V.V. High frame-rate real-time x-ray imaging of in situ high-velocity rifle bullets. In Proceedings of the Anomaly Detection and Imaging with X-Rays (ADIX), Baltimore, MD, USA, 12 May 2016; p. 98470G.

- Pandey, P.; Laxmi, V. Design of low cost and power efficient Wireless vision Sensor for surveillance and monitoring. In Proceedings of the 2016 International Conference on Computation of Power, Energy Information and Communication (ICCPEIC), Chennai, India, 20–21 April 2016; pp. 113–117.

- Johnson, S.; Stroup, R.; Gainer, J.J.; De Vries, L.D.; Kutzer, M.D. Design of a Robotic Catch and Release Manipulation Architecture (CARMA). In Proceedings of the ASME International Mechanical Engineering Congress and Exposition, Tampa, FL, USA, 3–9 November 2017; p. V04BT05A010.

- Valenti, M.; Bethke, B.; Dale, D.; Frank, A.; McGrew, J.; Ahrens, S.; How, J.P.; Vian, J. The MIT indoor multi-vehicle flight testbed. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Roma, Italy, 10–14 April 2007; pp. 2758–2759.

- Majeed, R.; Hatem, H.; Mohammed, M. Automatic Detection System to the Sticky Bomb. Comput. Sci. Eng. 2018, 8, 17–22.

- Katz, A.; Shoham, A.; Vainstein, C.; Birk, Y.; Leitner, T.; Fenigstein, A.; Nemirovsky, Y. Passive CMOS Single Photon Avalanche Diode Imager for a Gun Muzzle Flash Detection System. IEEE Sens. J. 2019, 19, 5851–5858.

- Hong, H.; Shi, J.; Liu, Z.; Zhang, Y.; Wu, J. A real-time critical part detection for the blurred image of infrared reconnaissance balloon with boundary curvature feature analysis. J. Real-Time Image Process. 2020, 1–16.

- Hsiao, P.-Y.; Cheng, H.-C.; Huang, S.-S.; Fu, L.-C. CMOS image sensor with a built-in lane detector. Sensors 2009, 9, 1722–1737.

- Zhang, S.; Zhang, H.; Chen, B.; Shao, D.; Xu, C. On-Screen-display (OSD) and SPI interface on CMOS image sensor for automobile application. In Proceedings of the 2013 Fifth International Conference on Computational Intelligence, Communication Systems and Networks, Madrid, Spain, 5–7 June 2013; pp. 405–408.

- Cao, C.; Shirakawa, Y.; Tan, L.; Seo, M.-W.; Kagawa, K.; Yasutomi, K.; Kosugi, T.; Aoyama, S.; Teranishi, N.; Tsumura, N. A two-tap NIR lock-in pixel CMOS image sensor with background light cancelling capability for non-contact heart rate detection. In Proceedings of the 2018 IEEE Symposium on VLSI Circuits, Honolulu, HI, USA, 18–22 June 2018; pp. 75–76.

- Friel, M.; Hughes, C.; Denny, P.; Jones, E.; Glavin, M. Automatic calibration of fish-eye cameras from automotive video sequences. IET Intell. Transp. Syst. 2010, 4, 136–148.

- Yamazato, T.; Takai, I.; Okada, H.; Fujii, T.; Yendo, T.; Arai, S.; Andoh, M.; Harada, T.; Yasutomi, K.; Kagawa, K. Image-sensor-based visible light communication for automotive applications. IEEE Commun. Mag. 2014, 52, 88–97.

- Takai, I.; Ito, S.; Yasutomi, K.; Kagawa, K.; Andoh, M.; Kawahito, S. LED and CMOS image sensor based optical wireless communication system for automotive applications. IEEE Photonics J. 2013, 5, 6801418.

- Bronzi, D.; Zou, Y.; Villa, F.; Tisa, S.; Tosi, A.; Zappa, F. Automotive three-dimensional vision through a single-photon counting SPAD camera. IEEE Trans. Intell. Transp. Syst. 2015, 17, 782–795.

- Kwon, D.; Park, S.; Baek, S.; Malaiya, R.K.; Yoon, G.; Ryu, J.-T. A study on development of the blind spot detection system for the IoT-based smart connected car. In Proceedings of the 2018 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 12–14 June 2018; pp. 1–4.

- Spivak, A.; Belenky, A.; Fish, A.; Yadid-Pecht, O. A wide-dynamic-range CMOS image sensor with gating for night vision systems. IEEE Trans. Circuits Syst. II Express Briefs 2011, 58, 85–89.

- Diaz-Cabrera, M.; Cerri, P.; Medici, P. Robust real-time traffic light detection and distance estimation using a single camera. Expert Syst. Appl. 2015, 42, 3911–3923.

- Mu, Z.; Li, Z. Intelligent tracking car path planning based on Hough transform and improved PID algorithm. In Proceedings of the 2018 5th International Conference on Systems and Informatics (ICSAI), Nanjing, China, 10–12 November 2018; pp. 24–28.

- Zhao, B.; Zhang, X.; Chen, S.; Low, K.-S.; Zhuang, H. A 64 × 64 CMOS Image Sensor With On-Chip Moving Object Detection and Localization. IEEE Trans. Circuits Syst. Video Technol. 2011, 22, 581–588.

- Jeličić, V.; Ražov, T.; Oletić, D.; Kuri, M.; Bilas, V. MasliNET: A Wireless Sensor Network based environmental monitoring system. In Proceedings of the 2011 34th International Convention MIPRO, Opatija, Croatia, 23–27 May 2011; pp. 150–155.

- Luo, L.; Zhang, Y.; Zhu, W. E-Science application of wireless sensor networks in eco-hydrological monitoring in the Heihe River basin, China. IET Sci. Meas. Technol. 2012, 6, 432–439.

- López, O.; Rach, M.M.; Migallon, H.; Malumbres, M.P.; Bonastre, A.; Serrano, J.J. Monitoring pest insect traps by means of low-power image sensor technologies. Sensors 2012, 12, 15801–15819.

- Zhang, Z.; Wang, X.; Fan, T.; Xu, L. River surface target enhancement and background suppression for unseeded LSPIV. Flow Meas. Instrum. 2013, 30, 99–111.

- Winkler, T.; Erdélyi, A.; Rinner, B. TrustEYE.M4: Protecting the sensor—Not the camera. In Proceedings of the 2014 11th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Seoul, Korea, 26–29 August 2014; pp. 159–164.

- Camacho, L.; Baquerizo, R.; Palomino, J.; Zarzosa, M. Deployment of a set of camera trap networks for wildlife inventory in western amazon rainforest. IEEE Sens. J. 2017, 17, 8000–8007.

- Fiorentin, P.; Bettanini, C.; Bogoni, D.; Aboudan, A.; Colombatti, G. Calibration of an imaging system for monitoring light pollution from small UAVs. In Proceedings of the 2019 IEEE 5th International Workshop on Metrology for AeroSpace (MetroAeroSpace), Torino, Italy, 19–21 June 2019; pp. 267–271.

- Rahman, G.; Sohag, H.; Chowdhury, R.; Wahid, K.A.; Dinh, A.; Arcand, M.; Vail, S. SoilCam: A Fully Automated Minirhizotron using Multispectral Imaging for Root Activity Monitoring. Sensors 2020, 20, 787.

- Semiconductor and Computer Engineering. Available online: https://en.wikichip.org/wiki/technology_node (accessed on 20 September 2020).

- Image Resolution. Available online: https://en.wikipedia.org/wiki/Image_resolution (accessed on 20 September 2020).

- Dupuis, Y.; Savatier, X.; Vasseur, P. Feature subset selection applied to model-free gait recognition. Image Vis. Comput. 2013, 31, 580–591.

- Rida, I.; Jiang, X.; Marcialis, G.L. Human body part selection by group lasso of motion for model-free gait recognition. IEEE Signal Process. Lett. 2015, 23, 154–158.

- Rida, I.; Almaadeed, N.; Almaadeed, S. Robust gait recognition: A comprehensive survey. IET Biom. 2018, 8, 14–28.

- Wan, C.; Wang, L.; Phoha, V.V. A survey on gait recognition. ACM Comput. Surv. (CSUR) 2018, 51, 1–35.

| S. No. | Year | Technology | Camera Module | Resolution | SNR (dB) | Frame Rate (fps) | Dynamic Range (dB) | Application Name/Target | Field |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 2009 | 0.35 µm | N/A | 64 × 64 | N/A | 10 | N/A | Built-in Lane Detection | Automotive |
| 2 | 2011 | 0.18 µm | N/A | 128 × 256 | 51 | 60 | 98 | Night Vision Systems | Automotive |
| 3 | 2012 | N/A | MT9V125 | 720 × 480 | 39 | 30 | 70 | Fish-Eye Automotive Camera | Automotive |
| 4 | 2013 | 0.18 µm | N/A | 642 × 480 | N/A | 30 | N/A | Optical Wireless Communication System | Automotive |
| 5 | 2013 | 0.13 µm | N/A | 768 × 576 | 45 | N/A | 70 | On-Screen-Display (OSD) | Automotive |
| 6 | 2014 | 0.18 µm | N/A | 642 × 480 | N/A | 60 | N/A | Visible Light Communication | Automotive |
| 7 | 2015 | N/A | GUPPY-F036C | 752 × 480 | N/A | 64 | N/A | Traffic Light Detection | Automotive |
| 8 | 2015 | 0.35 µm | N/A | 64 × 32 | N/A | 100 | 110 | Three-Dimensional Vision | Automotive |
| 9 | 2018 | 0.11 µm | N/A | 1280 × 1024 | N/A | 30 | N/A | Non-Contact Heart Rate Detection | Automotive |
| 10 | 2018 | N/A | OV7725 | 640 × 480 | 50 | 60 | 60 | Intelligent Car Path Tracking | Automotive |
| 11 | 2011 | N/A | OV6620 | 356 × 292 | >48 | 60 | >72 | Nilaparvata Lugens Monitoring System | IoT |
| 12 | 2011 | N/A | OV7640 | 640 × 480 | 46 | 30 | 62 | Crop Monitoring System | IoT |
| 13 | 2011 | N/A | Hercules Webcam | 1280 × 960 | N/A | 30 | N/A | Vineyard Monitoring | IoT |
| 14 | 2013 | N/A | VBM40 | 1280 × 960 | N/A | 30 | N/A | Human Monitoring System in Sea Transportation | IoT |
| 15 | 2013 | N/A | OV9655 | 1280 × 1024 | N/A | 15 | N/A | Smart Camera Networks (SCN) | IoT |
| 16 | 2016 | 0.18 µm | N/A | 64 × 64 | N/A | N/A | 96.7 | Smart Image Sensor with Multi Point Tracking (MPT) | IoT |
| 17 | 2017 | N/A | N/A | N/A | N/A | N/A | N/A | Early Flood Detection & Control Monitoring | IoT |
| 18 | 2018 | N/A | OV7670 | 640 × 480 | 40 | 30 | 52 | Precision Agriculture System Design | IoT |
| 19 | 2019 | N/A | OV2640 | 1600 × 1200 | 40 | 15 | 50 | Smart Home | IoT |
| 20 | 2019 | N/A | SF3324-101 | 1928 × 1208 | N/A | N/A | N/A | CUbE | IoT |
| 21 | 2009 | N/A | Quickcam Pro 9000 | 1600 × 1200 | N/A | 30 | N/A | Privacy-Preserving Sensor for Person Detection | ISS |
| 22 | 2010 | 0.18 µm | N/A | 64 × 64 | N/A | 30 | N/A | Surveillance in Low Crowded Environments | ISS |
| 23 | 2015 | N/A | ucam-II | 128 × 128 | 44.2 | N/A | 51 | Visual Surveillance and Intrusion Detection | ISS |
| 24 | 2017 | 0.18 µm | N/A | 176 × 144 | 47 | 14 | 61.8 | Multi Resolution Mode | ISS |
| 25 | 2018 | 0.09 µm | N/A | 2560 × 1536 | N/A | 60 | 67 | Moving Object Detection With Pre-defined Areas | ISS |
| 26 | 2019 | N/A | ZTE Nubia UINX511J | 5344 × 3000 | N/A | 120 | N/A | Classroom Emotion with Cloud-Based Facial Recognizer | ISS |
| 27 | 2019 | N/A | DJI PHANTOM 3 PRO | 4000 × 3000 | N/A | N/A | N/A | Vehicle Stacking Estimation | ISS |
| 28 | 2020 | N/A | OV2710-1E | 1920 × 1080 | 40 | 30 | 69 | Nuclear Radiation Detection | ISS |
| 29 | 2020 | N/A | GS3-U3-23S6C-C | 1920 × 1200 | N/A | 162 | N/A | Contactless Neonatal Pulse Rate Sensing | ISS |
| 30 | 2020 | N/A | OV9653 | 1300 × 1028 | 40 | 15 to 120 | 62 | HODET | ISS |
| 31 | 2012 | 0.18 µm | N/A | 368 × 368 | N/A | 10 | 49.2 | Autonomous Micro Digital Sun Sensor | Space |
| 32 | 2013 | 0.35 µm | N/A | 256 × 256 | N/A | N/A | N/A | Lightning Detection and Imaging | Space |
| 33 | 2013 | 0.18 µm | N/A | 320 × 128 | N/A | N/A | 126 | Star Tracking | Space |
| 34 | 2016 | N/A | CMV20000 | 5120 × 3840 | 41.8 | 0.45 | 66 | Mars 2020 Mission: EECAM | Space |
| 35 | 2017 | N/A | MT9M001C12STM | 1280 × 1024 | 45 | 30 | 68.2 | CubeSat Remote Sensing Imagers | Space |
| 36 | 2018 | N/A | CMV4000 | 2048 × 2048 | N/A | 180 | 60 | Cloud Monitoring Camera (CMC) System for Imaging Satellites | Space |
| 37 | 2019 | 0.11 µm | N/A | 3000 × 3000 | 45 | N/A | 72.4 | Radiation Tolerant Sensor | Space |
| 38 | 2019 | N/A | IMX264 | 2464 × 2056 | N/A | 60 | N/A | Nanospacecraft Asteroid Flybys | Space |
| 39 | 2019 | N/A | OV9630 | 1280 × 1024 | 54 | 15 | 60 | MeznSat for Monitoring Greenhouse Gases | Space |
| 40 | 2020 | N/A | CIS2521F | 2560 × 2160 | N/A | 100 | >86 | ASTERIA-A Space Telescope | Space |
| 41 | 2010 | N/A | OV9653 | 1300 × 1028 | 40 | 30 | 62 | Wireless Aerial Image System | Military |
| 42 | 2013 | N/A | OV7725 | 640 × 480 | 50 | 60 | 60 | IPASS | Military |
| 43 | 2014 | N/A | N/A | 640 × 512 | N/A | N/A | N/A | Banpil Camera | Military |
| 44 | 2016 | N/A | MPT 50 | 640 × 512 | N/A | N/A | N/A | MWIR Detector for Missile Applications | Military |
| 45 | 2016 | N/A | PHOTRON SA4 | 1024 × 1024 | 80 | 3600 | N/A | In Situ High-Velocity Rifle Bullets | Military |
| 46 | 2016 | N/A | OV7670 | 640 × 480 | 46 | 15 | 52 | Wireless Vision Sensor | Military |
| 47 | 2017 | N/A | Flea3 | 4000 × 3000 | N/A | 15 | 66.46 | CARMA | Military |
| 48 | 2018 | N/A | ESN-0510 | 640 × 480 | N/A | 30 | N/A | Sticky Bomb Detection | Military |
| 49 | 2019 | 0.18 µm | N/A | 64 × 64 | N/A | 200 k | N/A | Gun Muzzle Flash Detection System | Military |
| 50 | 2020 | N/A | MC1362 | 1280 × 1024 | N/A | 200 Hz | 90 | Critical Part Detection of Reconnaissance Balloon | Military |
| 51 | 2010 | N/A | C328R | 640 × 480 | N/A | N/A | N/A | TigerCENSE | WSN |
| 52 | 2011 | 0.18 µm | N/A | 64 × 64 | N/A | 100 | N/A | On-Chip Moving Object Detection & Localization | WSN |
| 53 | 2011 | N/A | MT9D131 | 1600 × 1200 | 42.3 | 15 | 71 | MasliNET | WSN |
| 54 | 2012 | 0.6 µm | N/A | 384 × 288 | N/A | N/A | N/A | Eco-Hydrological Monitoring | WSN |
| 55 | 2012 | N/A | C328-7640 | 640 × 480 | 46 | 30 | 62 | Monitoring Pest Insect Traps | WSN |
| 56 | 2013 | N/A | MT9M001 | 1280 × 1024 | >45 | 30 | >62 | River Surface Target Enhancement | WSN |
| 57 | 2014 | N/A | OV5642 | 2592 × 1944 | 50 | 15 | 40 | TrustEYE.M4 | WSN |
| 58 | 2014 | N/A | OV7725 | 640 × 480 | 50 | 60 | 60 | Wildlife Inventory | WSN |
| 59 | 2019 | N/A | IMX178LLJ-C | 3088 × 2064 | N/A | 60 | N/A | Monitoring Light Pollution from Small UAVs | WSN |
| 60 | 2019 | N/A | ELP-USBFHD04H-L170 | 1920 × 1080 | 39 | 30 | 72.4 | SoilCam | WSN |

| CMOS Image Sensor Model | Application(s) | Automotive | IoT | ISS | Military | Space | WSN |
|---|---|---|---|---|---|---|---|
| Aptina MT9M001C12STM | CubeSat Remote Sensing Imagers | | | | | 1 | |
| C328-7640 | Monitoring Pest Insect Traps | | | | | | 1 |
| CIS2521F | ASTERIA-A Space Telescope | | | | | 1 | |
| CMV20000 | Mars 2020 Mission: EECAM | | | | | 1 | |
| CMV4000 | Cloud Monitoring Camera (CMC) System for Imaging Satellites | | | | | 1 | |
| ELP-USBFHD04H-L170 | SoilCam | | | | | | 1 |
| Flea3 | CARMA | | | | 1 | | |
| Grasshopper 3 GS3-U3-23S6C-C | Contactless Neonatal Pulse Rate Sensing | | | 1 | | | |
| GUPPY-F036C | Traffic Light Detection | 1 | | | | | |
| IMX264 | Nanospacecraft Asteroid Flybys | | | | | 1 | |
| IMX178LLJ-C / IMX178LQJ-C | Monitoring Light Pollution from Small UAVs | | | | | | 1 |
| MC1362 | Critical Part Detection of Reconnaissance Balloon | | | | 1 | | |
| MPT 50 | MWIR Detector for Missile Applications | | | | 1 | | |
| MT9D131 | MasliNET | | | | | | 1 |
| MT9M001 | River Surface Target Enhancement | | | | | | 1 |
| MT9V125 | Fish-Eye Automotive Camera | 1 | | | | | |
| OV2640 | Smart Home | | 1 | | | | |
| OV2710-1E | Nuclear Radiation Detection | | | 1 | | | |
| OV5642 | TrustEYE.M4 | | | | | | 1 |
| OV6620 | Nilaparvata Lugens Monitoring System | | 1 | | | | |
| OV7640 | Crop Monitoring System | | 1 | | | | |
| OV7670 | Precision Agriculture (IoT); Wireless Vision Sensor (Military) | | 1 | | 1 | | |
| OV7725 | Intelligent Car Path Tracking (Automotive); IPASS (Military); Wildlife Inventory (WSN) | 1 | | | 1 | | 1 |
| OV9630 | MeznSat for Monitoring Greenhouse Gases | | | | | 1 | |
| OV9653 | HODET (ISS); Wireless Aerial Image System (Military) | | | 1 | 1 | | |
| OV9655 | Smart Camera Networks (SCN) | | 1 | | | | |
| PHOTRON SA4 | In Situ High-Velocity Rifle Bullets | | | | 1 | | |
| Sekonix Camera SF3324-101 | CUbE | | 1 | | | | |
| ucam-II | Visual Surveillance and Intrusion Detection | | | 1 | | | |
| Grand Total (33) | | 3 | 6 | 4 | 7 | 6 | 7 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sukhavasi, S.B.; Sukhavasi, S.B.; Elleithy, K.; Abuzneid, S.; Elleithy, A. CMOS Image Sensors in Surveillance System Applications. Sensors 2021, 21, 488. https://doi.org/10.3390/s21020488
