Spectral Characteristics of EEG during Active Emotional Musical Performance

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

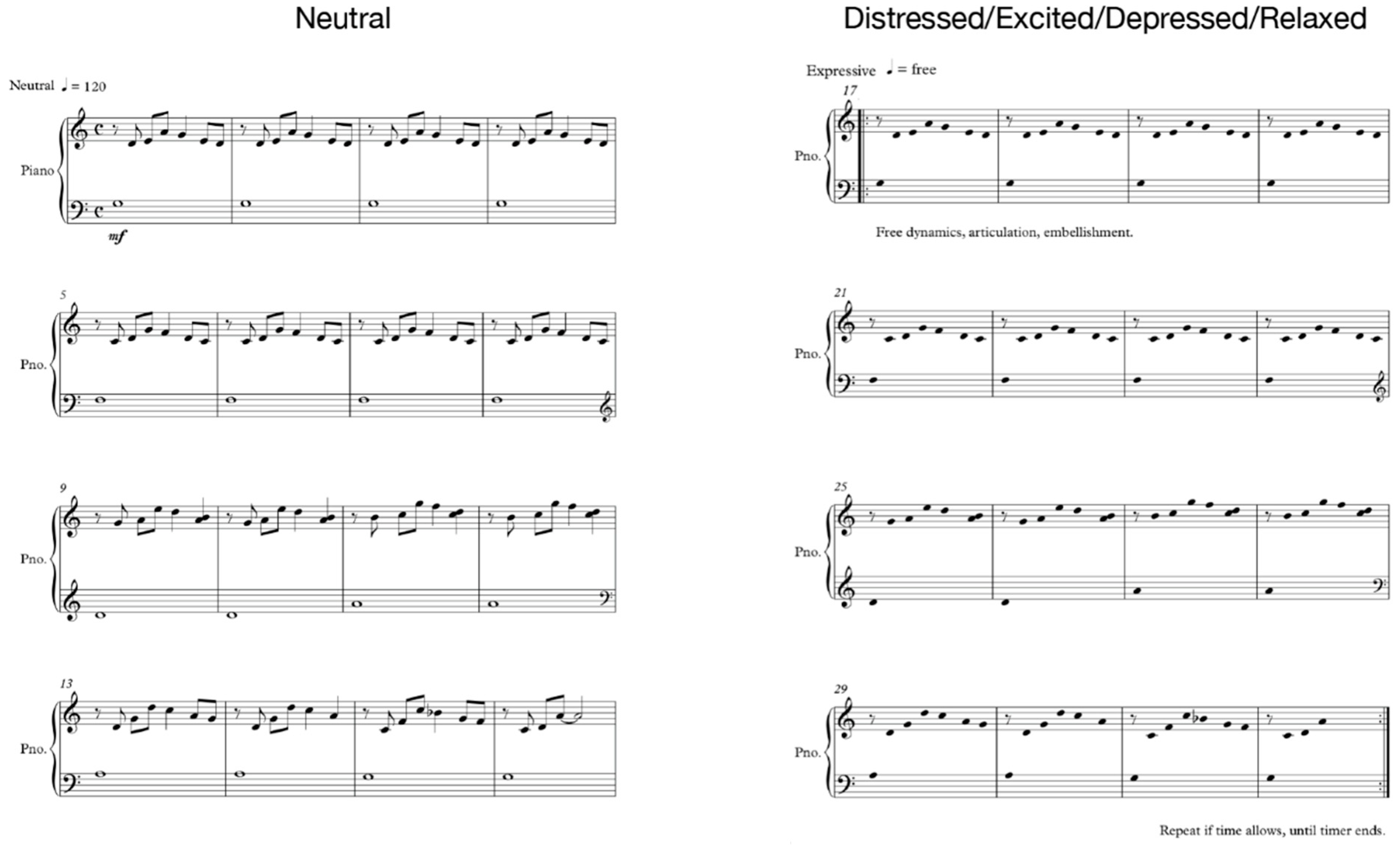

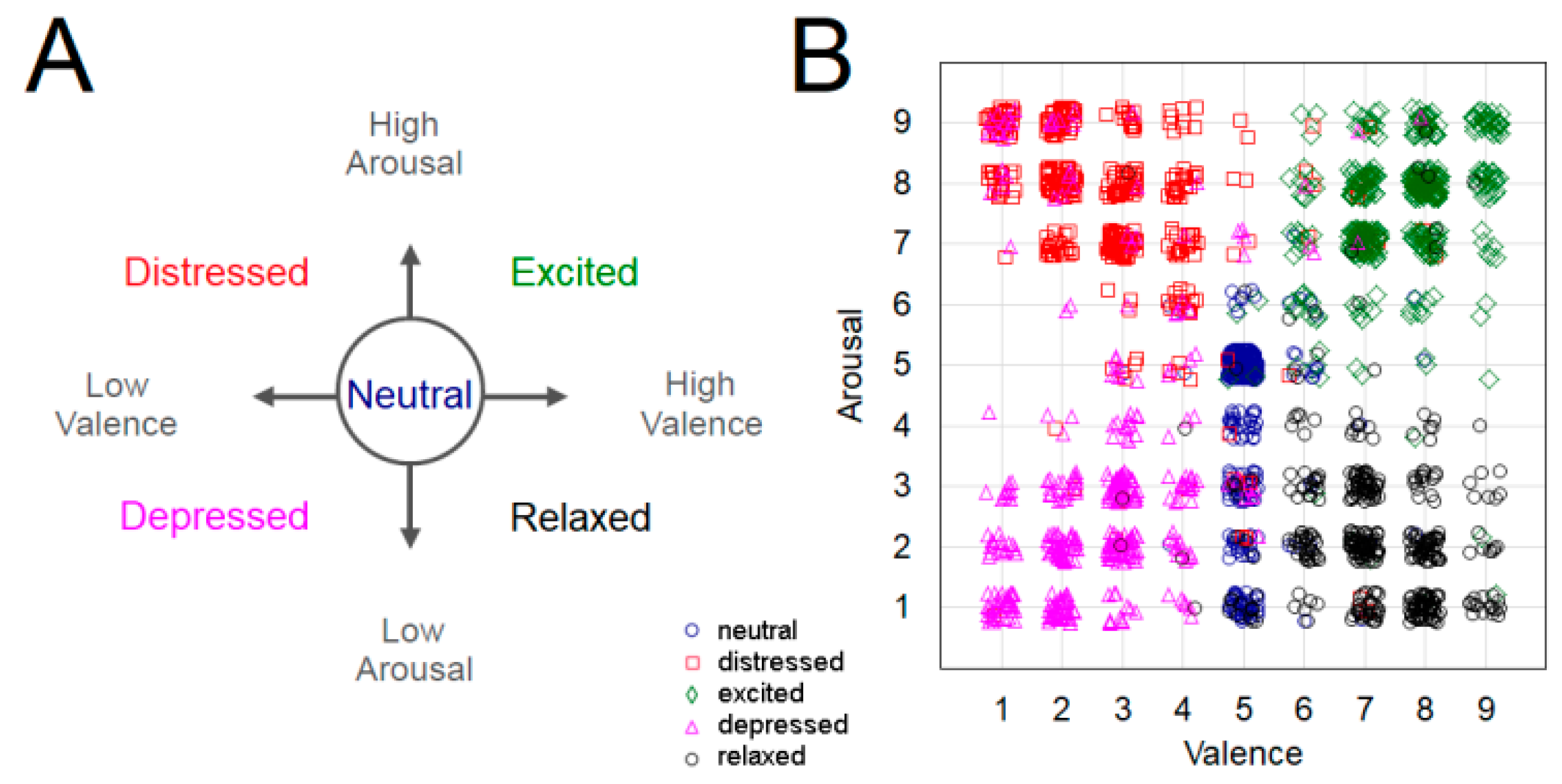

2.2. Experimental Design and Procedure

2.3. EEG Acquisition

2.4. EEG Preprocessing

2.5. EEG Analysis

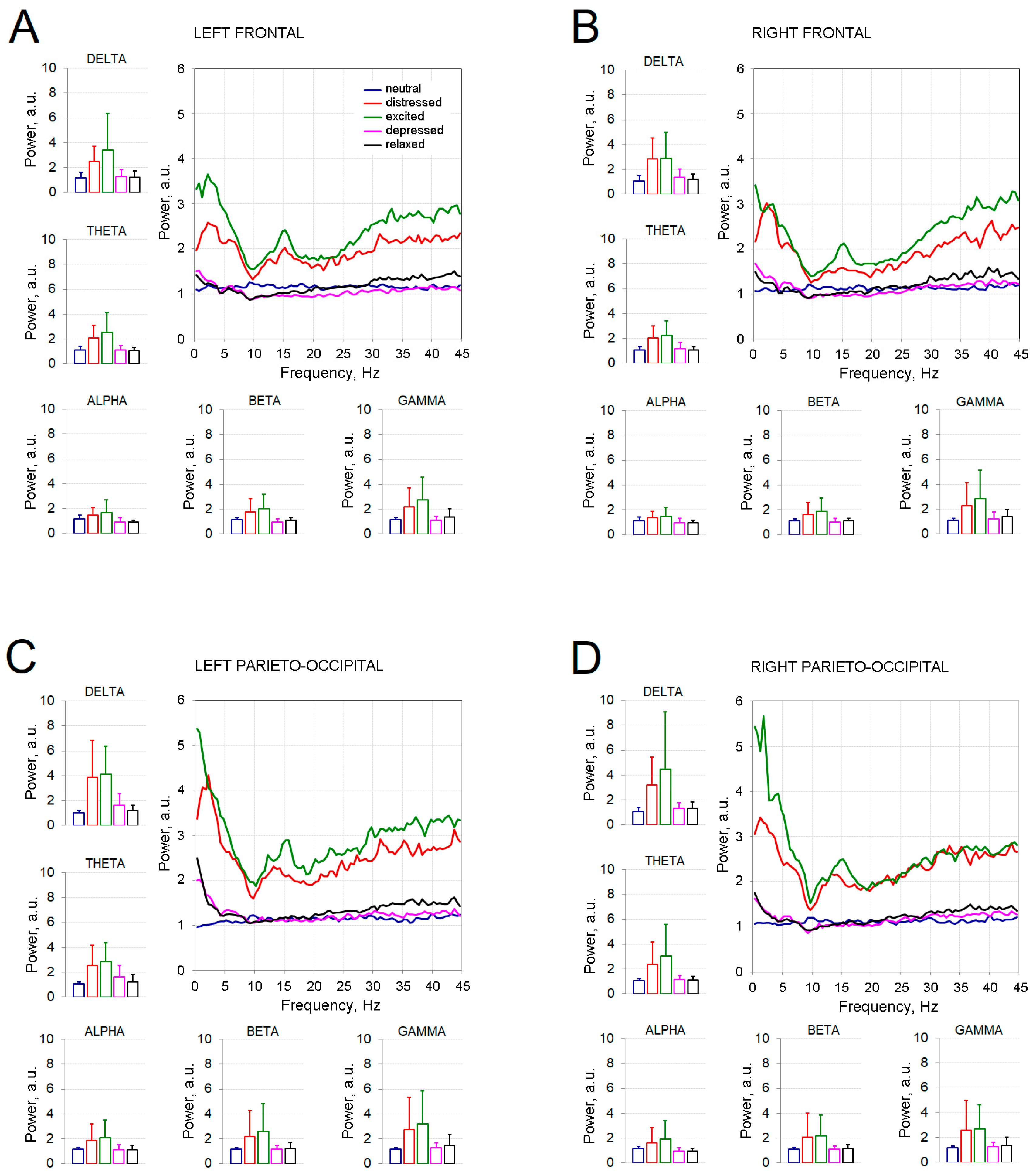

3. Results

4. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pousson, J.E.; Voicikas, A.; Bernhofs, V.; Pipinis, E.; Burmistrova, L.; Lin, Y.-P.; Griškova-Bulanova, I. Spectral Characteristics of EEG during Active Emotional Musical Performance. Sensors 2021, 21, 7466. https://doi.org/10.3390/s21227466