Development and Testing of a UAV Laser Scanner and Multispectral Camera System for Eco-Geomorphic Applications

Abstract

1. Introduction

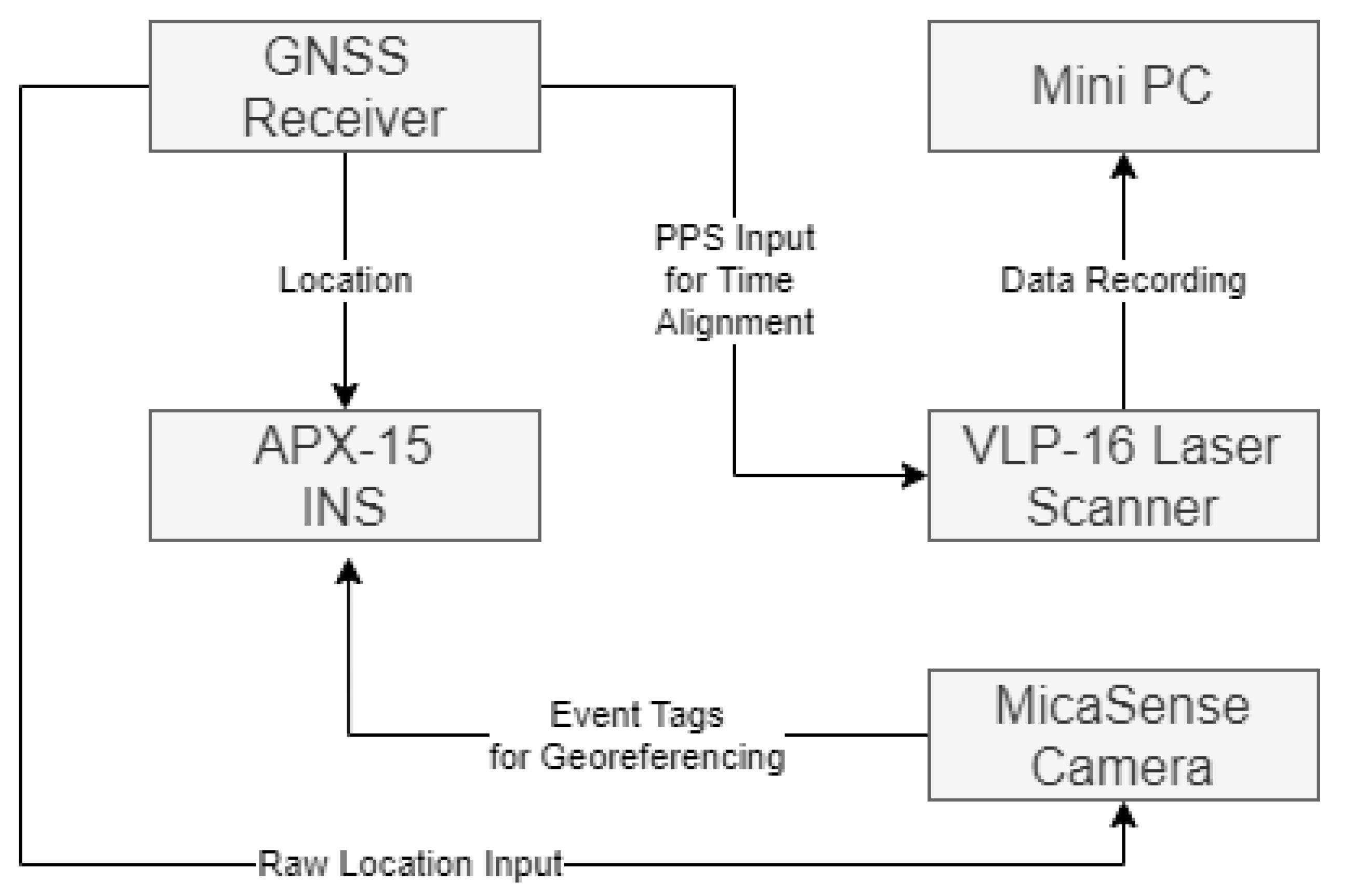

2. Development of a UAV Laser Scanner and Multispectral Camera Sensor System

2.1. Components of the System

2.1.1. Applanix APX-15 Inertial Navigation System (INS)

2.1.2. Velodyne VLP-16 Laser Scanner

2.1.3. MicaSense RedEdge-MX Multispectral Camera

2.1.4. Associated Hardware

2.2. Assembly of the System

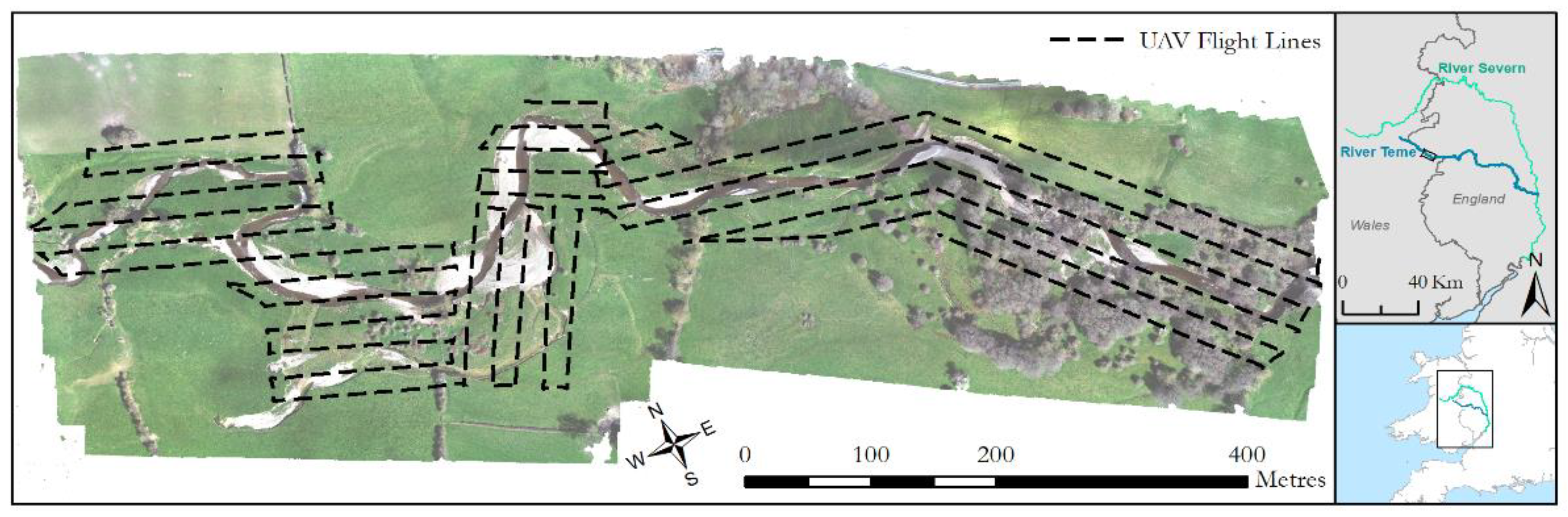

3. Field Deployment of the System

4. Data Processing Workflow

4.1. UAV Laser Scanner and Multispectral Camera Processing

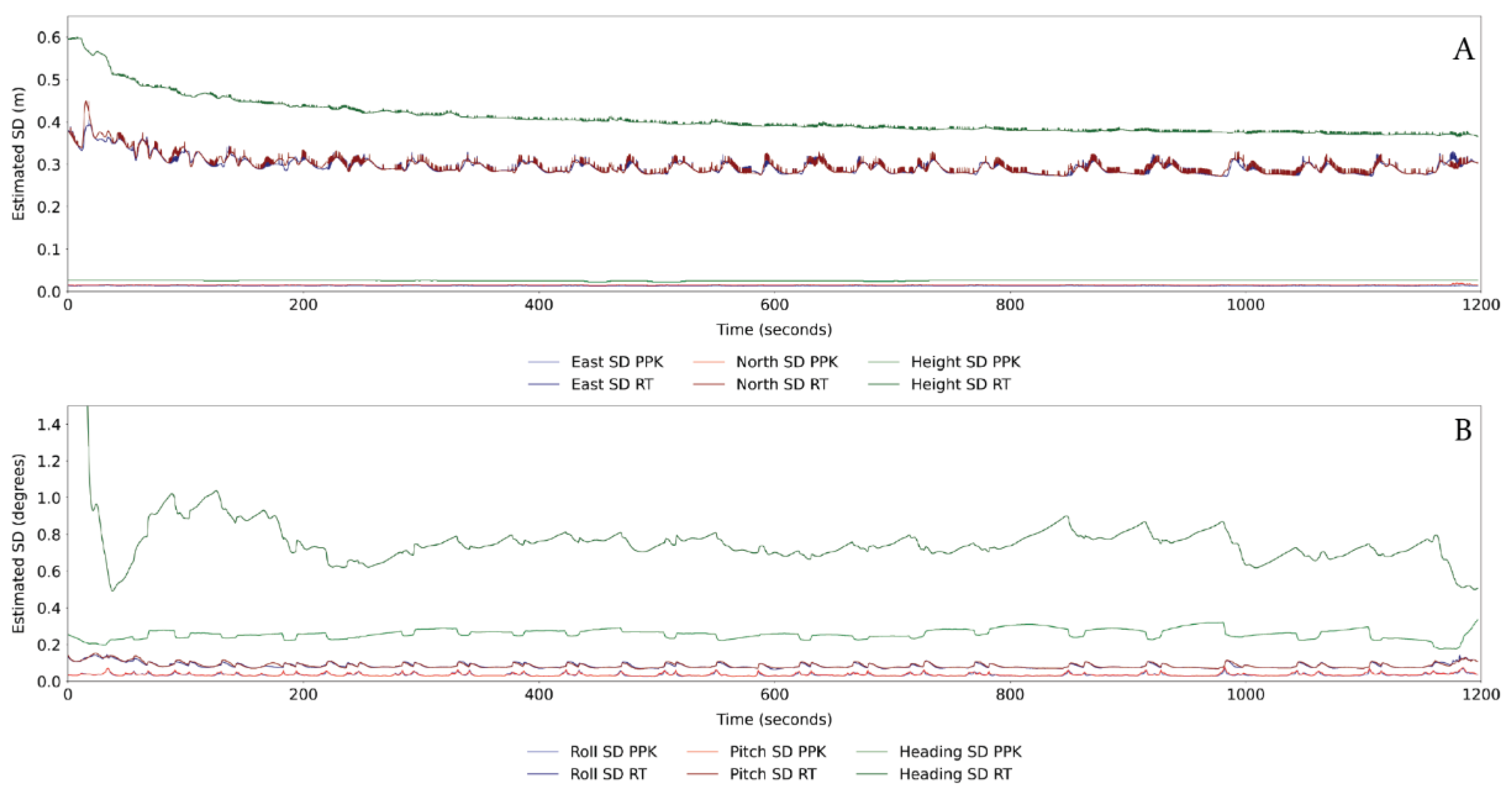

4.1.1. Inertial Navigation System Processing

4.1.2. UAV Laser Scanner Raw Data Processing

4.1.3. Combining Laser Scanner and Positional Data

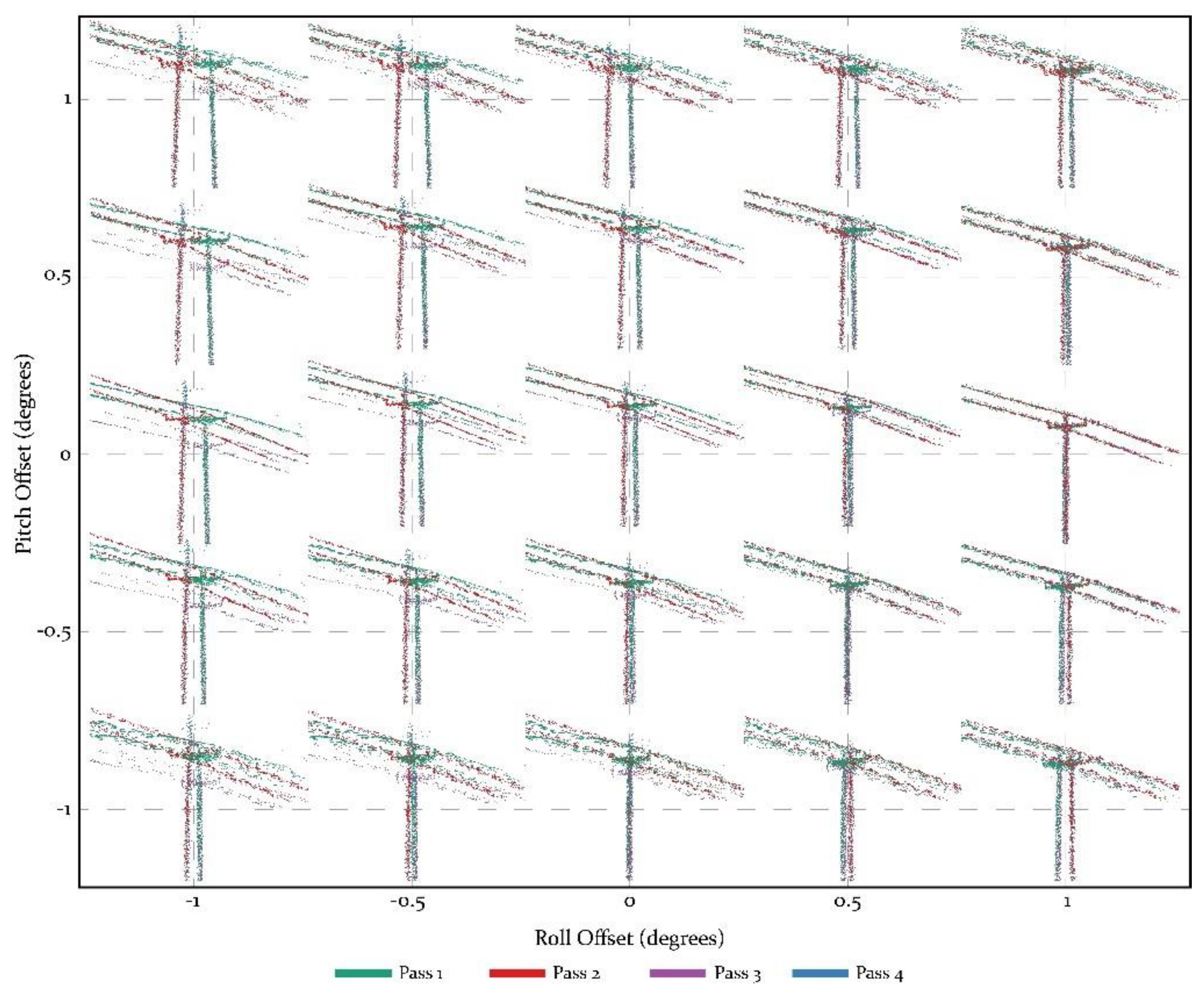

4.1.4. Offset and Boresight Angles

4.1.5. MicaSense Multispectral Imagery SfM Workflow

4.2. Data Processing for Comparison and Error Analysis

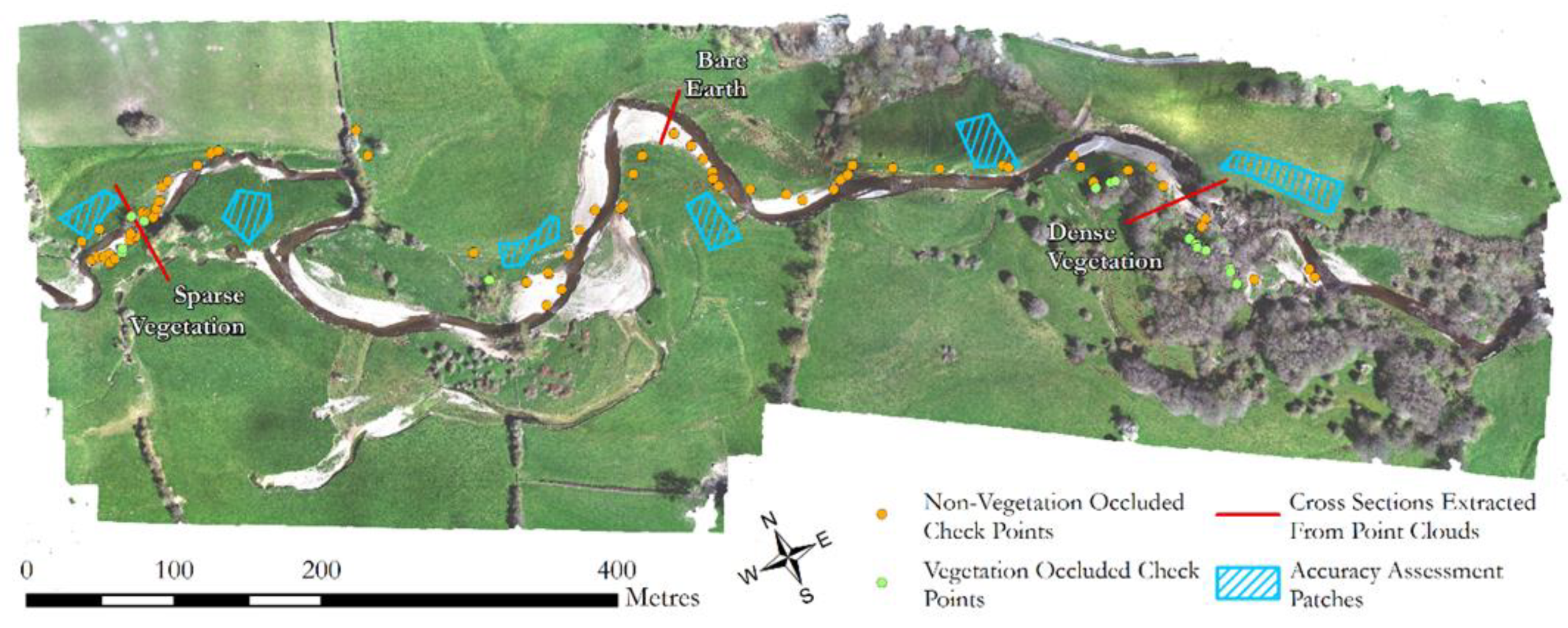

4.2.1. Absolute Accuracy Assessment

4.2.2. Relative Accuracy Assessment—Repeatability

5. Results

5.1. Absolute Accuracy of UAV-LS and UAV-MS (SfM)

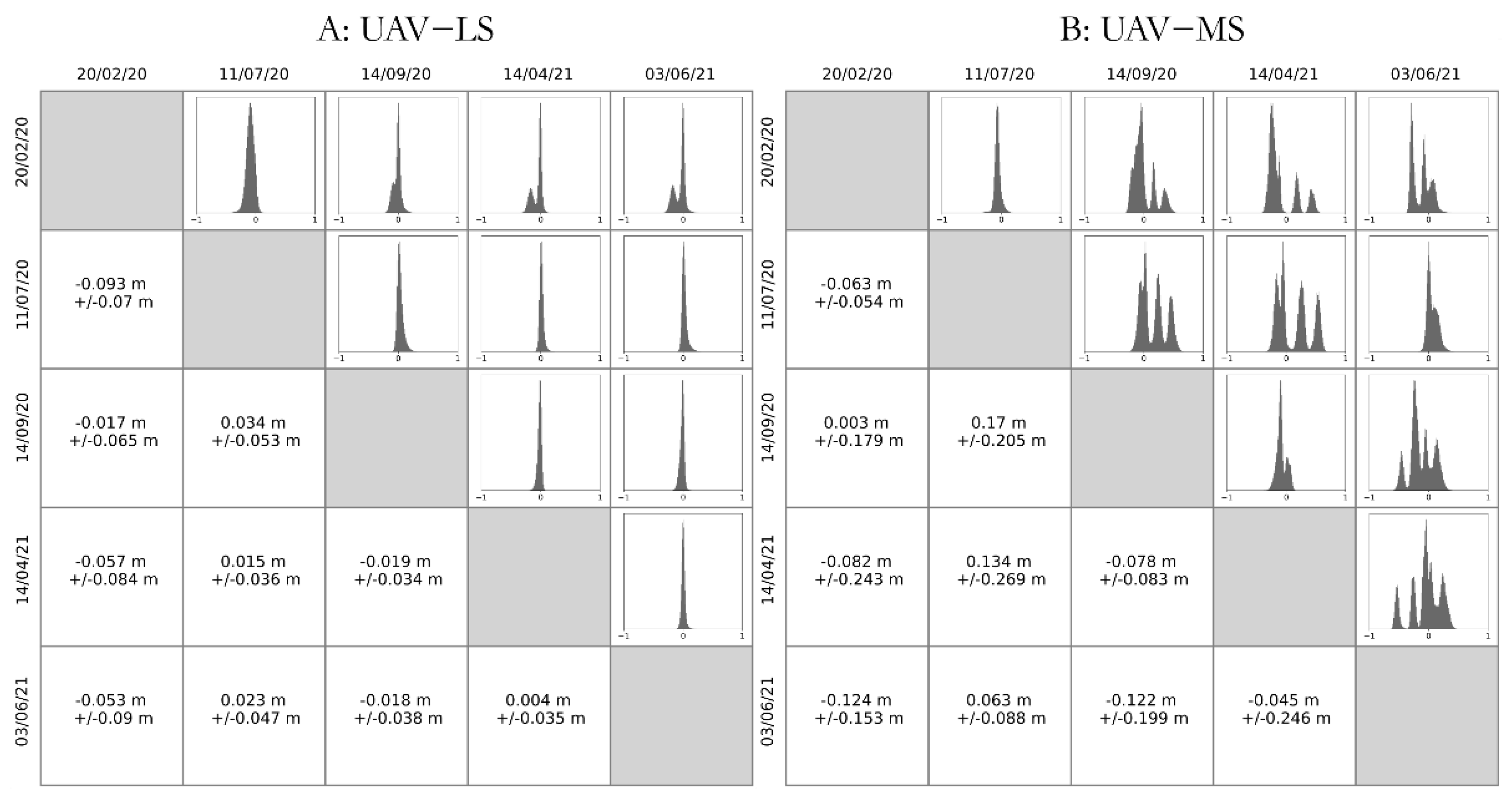

5.2. Relative Accuracy of Surveys: Repeatability

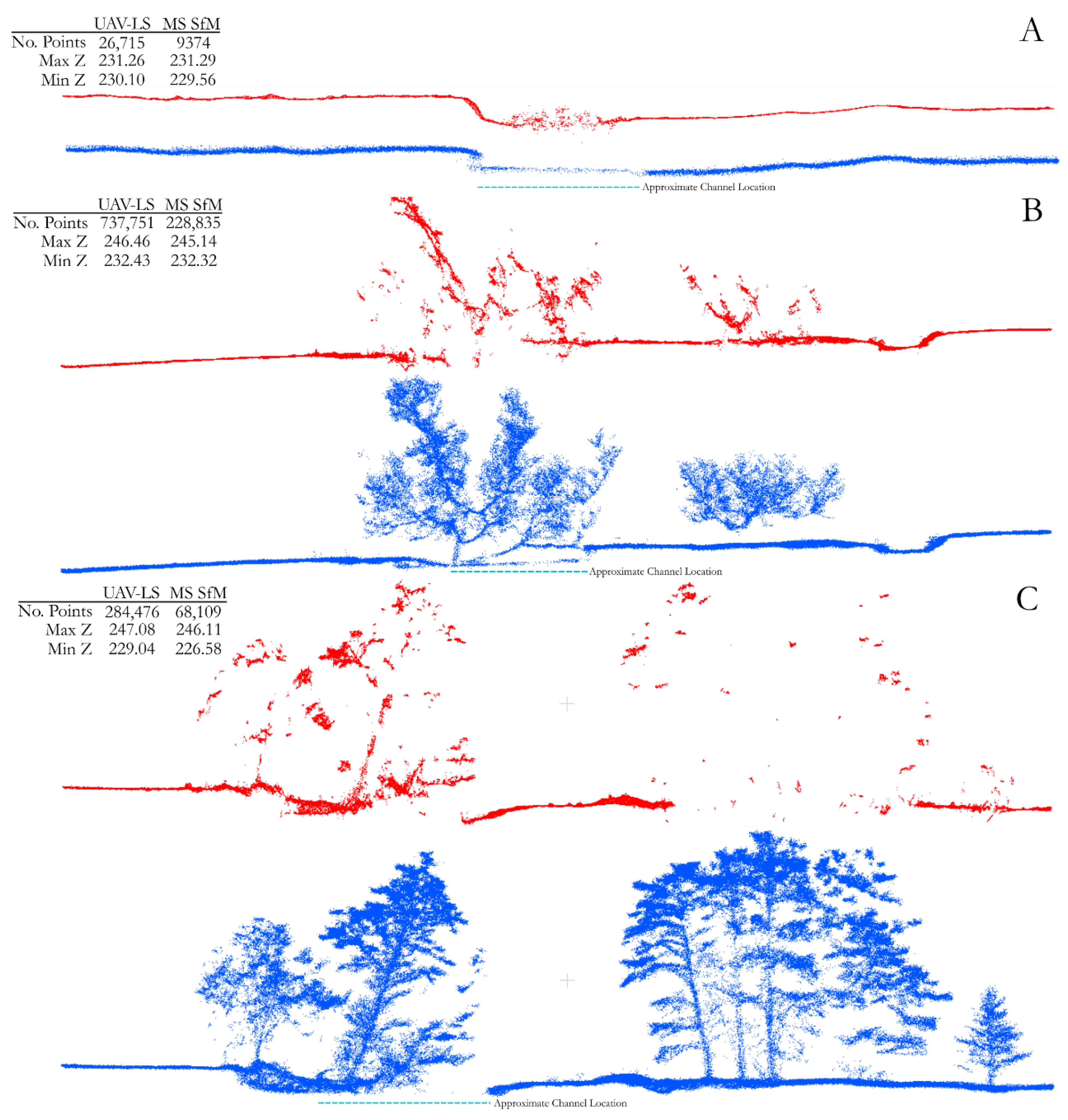

5.2.1. Comparison of UAV-LS and UAV-MS Derived SfM for the Same Dates

5.2.2. Repeatability of Survey Methods: Comparisons across Dates

6. Discussion

6.1. UAV Laser Scanner and Multispectral System

6.2. Eco-Geomorphic Applications

7. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


Spectral bands of the MicaSense RedEdge-MX multispectral camera:

| Band | Center Wavelength (nm) | Bandwidth (nm) |
|---|---|---|
| Blue | 475 | 32 |
| Green | 560 | 27 |
| Red | 668 | 14 |
| Red-Edge | 717 | 12 |
| Near-Infrared | 842 | 57 |

Summary statistics of vertical (Z) errors for the UAV-LS and UAV-MS (SfM) surveys, by surface category:

| Sensor | Category | Mean Error Z (m) | Standard Deviation Z (m) | Min (m) | Max (m) | Range (m) |
|---|---|---|---|---|---|---|
| UAV-LS | Terrestrial | −0.182 | 0.140 | −0.366 | 0.424 | 0.790 |
| UAV-LS | Vegetated | −0.116 | 0.181 | −0.285 | 0.299 | 0.584 |
| UAV-MS | Terrestrial | −0.469 | 0.381 | −1.023 | 1.085 | 2.108 |
| UAV-MS | Vegetated | −0.181 | 0.572 | −0.915 | 1.085 | 2.000 |
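The columns of the summary statistics table can be reproduced from paired check-point elevations with a few lines of code. The following is a minimal sketch for illustration, not the authors' processing chain. It assumes that error is defined as surveyed minus reference elevation, that the standard deviation is the sample (n − 1) form, and that Range is Max minus Min. The tabulated values satisfy this last relation, for example 0.424 − (−0.366) = 0.790 m for the UAV-LS terrestrial points. The function name `summarise_z_errors` and the example elevations are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class ZErrorSummary:
    """Summary statistics matching the table columns (all in metres)."""
    mean_error: float
    std_dev: float
    minimum: float
    maximum: float
    value_range: float


def summarise_z_errors(surveyed_z, reference_z):
    """Summarise vertical errors at check points (hypothetical helper).

    Assumes error = surveyed elevation - reference elevation, so a
    negative mean indicates the survey sits below the reference surface.
    Uses the sample standard deviation (n - 1); this is an assumption.
    """
    if len(surveyed_z) != len(reference_z) or len(surveyed_z) < 2:
        raise ValueError("need at least two paired elevations")
    # Per-point vertical error.
    errors = [s - r for s, r in zip(surveyed_z, reference_z)]
    lo, hi = min(errors), max(errors)
    return ZErrorSummary(
        mean_error=mean(errors),
        std_dev=stdev(errors),
        minimum=lo,
        maximum=hi,
        value_range=hi - lo,  # Range column = Max - Min
    )


if __name__ == "__main__":
    # Hypothetical check-point elevations (m), not data from the study.
    survey = [10.12, 9.87, 10.45, 9.60]
    reference = [10.30, 10.05, 10.10, 9.95]
    s = summarise_z_errors(survey, reference)
    print(f"mean={s.mean_error:.3f} sd={s.std_dev:.3f} "
          f"min={s.minimum:.3f} max={s.maximum:.3f} range={s.value_range:.3f}")
```

To produce one table row per group, group the check points by sensor and category (Terrestrial or Vegetated) and call the function once for each group.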


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tomsett, C.; Leyland, J. Development and Testing of a UAV Laser Scanner and Multispectral Camera System for Eco-Geomorphic Applications. Sensors 2021, 21, 7719. https://doi.org/10.3390/s21227719
