A DNN-Based UVI Calculation Method Using Representative Color Information of Sun Object Images

Abstract

1. Introduction

2. UVI Calculation Based on Representative Color Information of Sun Object Images

2.1. Collection of Sky Images and UVI Data

2.2. Extraction of Sun Object Images and Representative Colors

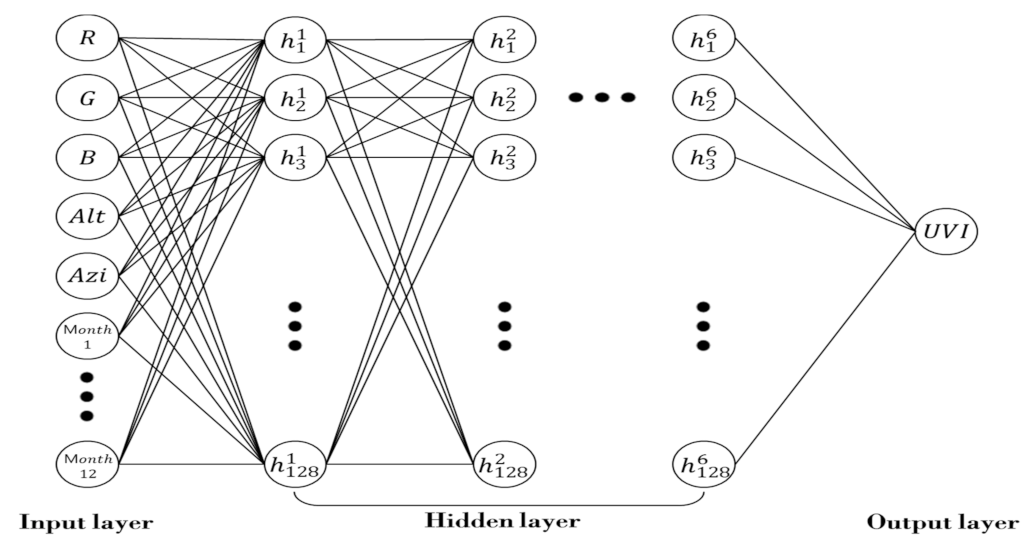

2.3. DNN Model for UVI Calculation

3. Experiments and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Conflicts of Interest


| Time | UVI |
|---|---|
| 5 April 2020 08:00 | 1.32316 |
| 5 April 2020 09:00 | 2.83357 |
| 5 April 2020 10:00 | 4.60154 |
| 5 April 2020 11:00 | 6.26907 |
| 5 April 2020 12:00 | 7.45116 |
| 5 April 2020 13:00 | 7.17109 |
| 5 April 2020 14:00 | 6.84514 |
| 5 April 2020 15:00 | 5.43987 |
| 5 April 2020 16:00 | 3.73438 |
| 5 April 2020 17:00 | 2.10725 |

| No. | R | G | B | Altitude | Azimuth | Month (one-hot) | UVI |
|---|---|---|---|---|---|---|---|
| 1 | 0.99215 | 0.99607 | 0.98823 | 0.35106 | 0.30414 | [1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] | 2.73878 |
| 2 | 0.64705 | 0.71764 | 0.78039 | 0.29084 | −0.27222 | [1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] | 1.15636 |
| N-1 | 0.98823 | 0.98039 | 0.93725 | 0.12618 | −0.41437 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1] | 0.70520 |
| N | 0.80784 | 0.85882 | 0.94509 | 0.51351 | −0.10385 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1] | 7.25948 |
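The R, G and B entries in the table above are consistent with 8-bit channel values divided by 255 (for example, 253/255 ≈ 0.99216, shown truncated as 0.99215 in row 1), and the month is a 12-element one-hot vector. The sketch below assembles one input row under those assumptions. The function names are hypothetical, and altitude and azimuth are passed through unchanged because their scaling is not stated alongside the table.

```python
from typing import List, Sequence


def normalize_rgb(rgb: Sequence[int]) -> List[float]:
    """Scale 8-bit R, G, B values (0-255) to the range [0, 1]."""
    return [channel / 255.0 for channel in rgb]


def one_hot_month(month: int) -> List[int]:
    """Encode a calendar month (1 = January ... 12 = December) as a 12-element one-hot list."""
    if not 1 <= month <= 12:
        raise ValueError("month must be in the range 1..12")
    vec = [0] * 12
    vec[month - 1] = 1
    return vec


def build_features(rgb: Sequence[int], altitude: float, azimuth: float, month: int) -> List[float]:
    """Concatenate color, solar position and month into one 17-value input row.

    Assumption: altitude and azimuth are already scaled as in the table,
    so they are passed through unchanged.
    """
    month_vec = [float(x) for x in one_hot_month(month)]  # floats for a uniform row type
    return normalize_rgb(rgb) + [altitude, azimuth] + month_vec


# Hypothetical 8-bit color consistent with row 1 of the table (January sample).
row = build_features((253, 254, 252), 0.35106, 0.30414, 1)
print(len(row), [round(v, 5) for v in row[:3]])
```

Under these assumptions each sample carries 3 + 2 + 12 = 17 input values, which would fix the width of the DNN's input layer.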

| No. | R | G | B | Altitude | Azimuth | Month | MAE |
|---|---|---|---|---|---|---|---|
| 1 | O | X | X | X | X | X | 1.97 |
| 2 | X | O | X | X | X | X | 1.96 |
| 3 | X | X | O | X | X | X | 1.68 |
| 4 | O | O | O | X | X | X | 1.52 |
| 5 | O | O | O | O | X | X | 0.74 |
| 6 | O | O | O | X | O | X | 1.12 |
| 7 | O | O | O | O | O | X | 0.59 |
| 8 | O | O | O | O | O | O | 0.53 |

O = feature used as model input; X = feature not used.
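The MAE column presumably reports the mean absolute error between measured and calculated UVI, MAE = (1/n) * sum of |y_i - yhat_i|, so lower is better. A minimal sketch of the metric follows; the predicted values in the example are made up for illustration and are not results from this study.

```python
from typing import Sequence


def mean_absolute_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Return (1/n) * sum(|y_true[i] - y_pred[i]|), in the same units as the inputs (UVI)."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Measured UVI for 08:00-10:00 on 5 April 2020 (from the first table)
# compared against hypothetical model outputs.
measured = [1.32316, 2.83357, 4.60154]
predicted = [1.0, 3.0, 4.5]  # hypothetical values, for illustration only
print(round(mean_absolute_error(measured, predicted), 5))  # prints 0.19704
```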

| Image | Representative Color (R, G, B) |
|---|---|
| (a) | 116, 116, 167 |
| (b) | 142, 183, 216 |
| (c) | 30, 78, 145 |
| (d) | 165, 183, 201 |
| (e) | 144, 163, 183 |
| (f) | 246, 249, 248 |
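The representative colors above are integer RGB triples obtained by K-means clustering of the pre-processed sun object images. The sketch below shows one way to do this with plain Lloyd's K-means and a deterministic farthest-point initialization. Two choices are assumptions, not taken from the paper: the number of clusters `k`, and using the rounded centroid of the most populous cluster as the representative color. The helper names are hypothetical; scikit-learn's `KMeans` could replace the hand-written loop.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def _dist2(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two RGB points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(pixels: List[RGB], k: int, max_iters: int = 50):
    """Lloyd's K-means with farthest-point initialization; returns (centroids, labels)."""
    # Deterministic init: start from the first pixel, then repeatedly add the
    # pixel that is farthest from every centroid chosen so far.
    centroids = [[float(v) for v in pixels[0]]]
    while len(centroids) < k:
        far = max(pixels, key=lambda p: min(_dist2(p, c) for c in centroids))
        centroids.append([float(v) for v in far])

    labels: List[int] = []
    for _ in range(max_iters):
        # Assignment step: each pixel joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: _dist2(p, centroids[c])) for p in pixels]
        if new_labels == labels:
            break  # converged: assignments no longer change
        labels = new_labels
        # Update step: move each centroid to the mean of its member pixels.
        for c in range(k):
            members = [p for p, lab in zip(pixels, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(ch) / len(members) for ch in zip(*members)]
    return centroids, labels


def representative_color(pixels: List[RGB], k: int = 3) -> RGB:
    """Hypothetical rule: the rounded centroid of the largest cluster."""
    centroids, labels = kmeans(pixels, k)
    sizes = [labels.count(c) for c in range(k)]
    largest = max(range(k), key=lambda c: sizes[c])
    r, g, b = (round(v) for v in centroids[largest])
    return (r, g, b)


# Toy "sun object" pixels: a bright region plus a few sky-blue pixels.
pixels = [(240, 240, 240), (244, 244, 244)] * 5 + [(30, 78, 145)] * 3
print(representative_color(pixels, k=2))
```

Farthest-point seeding keeps the example deterministic; k-means++ seeding with several restarts (as `KMeans` does through its `n_init` parameter) is a common alternative.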

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ga, D.-H.; Oh, S.-T.; Lim, J.-H. A DNN-Based UVI Calculation Method Using Representative Color Information of Sun Object Images. Sensors 2021, 21, 7766. https://doi.org/10.3390/s21227766