Rapid Dynamic Naturalistic Monitoring of Bradykinesia in Parkinson’s Disease Using a Wrist-Worn Accelerometer

Abstract

1. Introduction

2. Material and Methods

2.1. Study Sample

2.2. Data Pre-Processing and Feature Extraction

2.3. Descriptive Statistics and Analysis of Variance

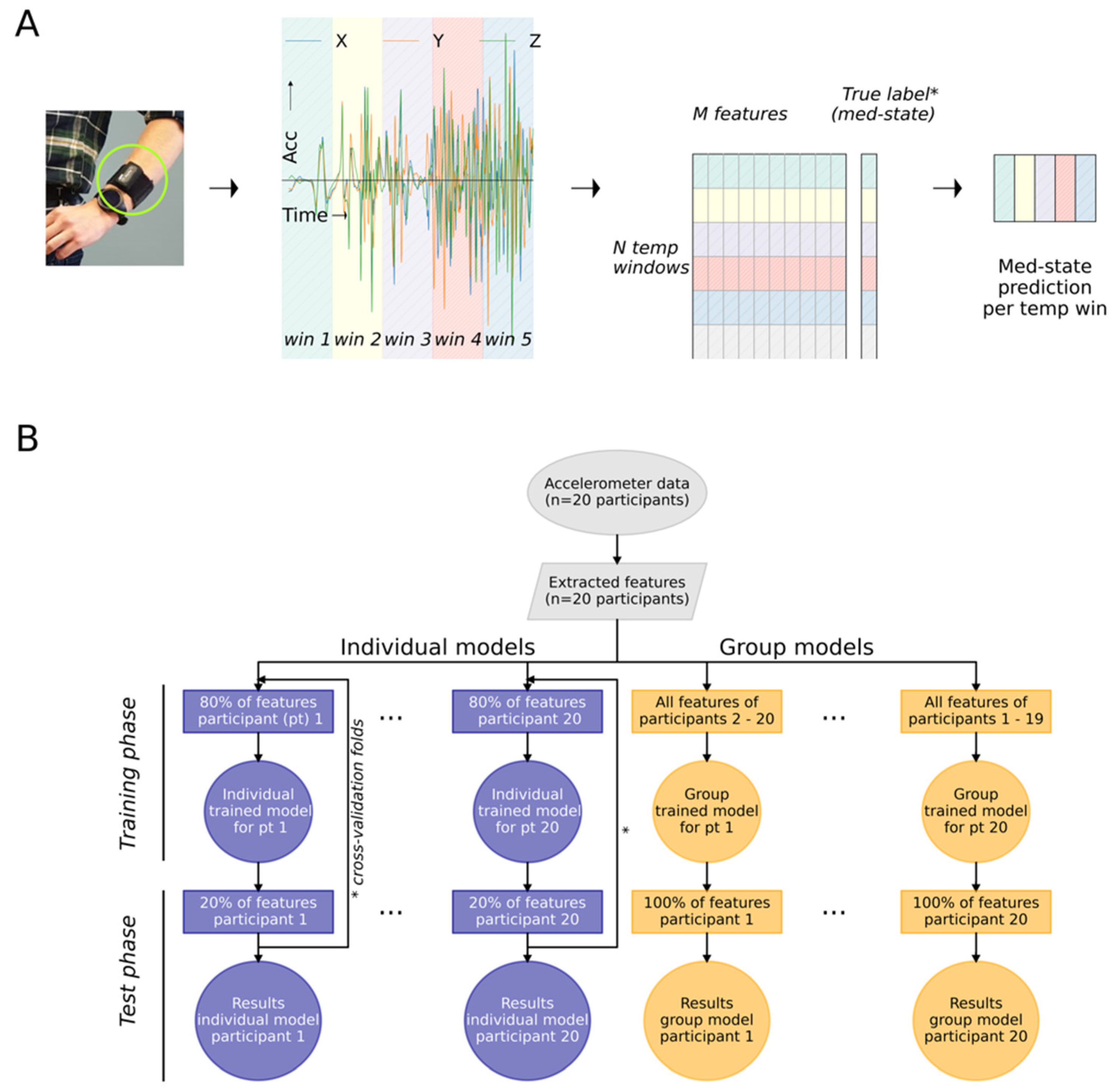

2.4. Classification of Medication States

2.4.1. Individually Trained and Group Trained Models

2.4.2. The Influence of Training Data Size and Feature Window Lengths

2.4.3. Comparing Two Models’ Predictive Performance

2.4.4. Predictive Performance and Clinically Assessed Symptom Fluctuations

2.4.5. Software

2.4.6. Code and Data Availability

3. Results

3.1. Study Population and Recorded Data

3.2. Group-Level Statistical Analysis of Cardinal Motion Features across Medication States

3.3. Machine Learning Classification of Short Window Data Epochs

3.4. Classification of Bradykinesia-Centred Motor Fluctuations Versus Co-Occurring Symptoms

3.5. Influence of Training Data Size and Feature Window Length

4. Discussion

4.1. Clinical Relevance and Methodological Challenges of Naturalistic and Rapid PD Motor Monitoring

4.2. Future Scientific Opportunities to Improve Naturalistic PD Monitoring Development

4.3. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Characteristics | Value |
|---|---|
| Participants, n (% female) | 20 (60%) |
| Age (years, mean (sd)) | 63.4 (6.4) |
| Accelerometer data per medication state (minutes, mean (sd)) | 59.5 (14.3) |
| Accelerometer data per medication state, after activity filtering (minutes, mean (sd)) | 44.5 (13.9) |
| PD duration (years, mean (sd)) | 8.1 (3.5) |
| Levodopa equivalent daily dosage (milligrams, mean (sd)) | 959 (314) |
| MDS-UPDRS III pre-medication (points, mean (sd)) | 43.8 (11.6) |
| MDS-UPDRS III post-medication (points, mean (sd)) | 27.1 (9.6) |
| AIMS pre-medication (points, mean (sd)) | 0.5 (1.8) |
| AIMS post-medication (points, mean (sd)) | 3.7 (4.2) |
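The group means in the table can be turned into a few derived figures that make the size of the medication effect easier to read. This is a minimal sketch for orientation, not an analysis reported by the study: it works on group means only (so it gives ratios of means, not averages of per-patient changes), ignores the standard deviations, and the helper name `percent_change` is hypothetical.

```python
# Hypothetical helper: derive summary figures from the table's group means.
# Standard deviations are ignored; results are ratios of group means,
# not averages of per-patient changes.

def percent_change(before: float, after: float) -> float:
    """Signed change of a group mean, as a percentage of the 'before' value."""
    return 100.0 * (after - before) / before


# Group means copied from the table above.
UPDRS_III_PRE, UPDRS_III_POST = 43.8, 27.1   # MDS-UPDRS III, points
AIMS_PRE, AIMS_POST = 0.5, 3.7               # AIMS, points
MINUTES_RAW, MINUTES_ACTIVE = 59.5, 44.5     # accelerometer minutes per medication state

# Absolute and relative change in the motor score after medication intake.
updrs_drop = UPDRS_III_PRE - UPDRS_III_POST
updrs_change_pct = percent_change(UPDRS_III_PRE, UPDRS_III_POST)

# Absolute change in the involuntary-movement (AIMS) score after medication intake.
aims_rise = AIMS_POST - AIMS_PRE

# Share of recorded accelerometer minutes that remained after activity filtering.
retained_pct = 100.0 * MINUTES_ACTIVE / MINUTES_RAW

if __name__ == "__main__":
    print(f"MDS-UPDRS III: -{updrs_drop:.1f} points ({updrs_change_pct:.1f}%)")
    print(f"AIMS: +{aims_rise:.1f} points")
    print(f"Accelerometer minutes retained after filtering: {retained_pct:.1f}%")
```

Run as a script, it reports a mean MDS-UPDRS III reduction of about 16.7 points (roughly 38% of the pre-medication mean), a mean AIMS increase of 3.2 points, and about 75% of recorded accelerometer minutes retained after activity filtering. Because these are computed from group means, the mean per-patient change may differ.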


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Habets, J.G.V.; Herff, C.; Kubben, P.L.; Kuijf, M.L.; Temel, Y.; Evers, L.J.W.; Bloem, B.R.; Starr, P.A.; Gilron, R.; Little, S. Rapid Dynamic Naturalistic Monitoring of Bradykinesia in Parkinson’s Disease Using a Wrist-Worn Accelerometer. Sensors 2021, 21, 7876. https://doi.org/10.3390/s21237876
