1. Introduction

Human activity recognition (HAR) is an important research field [1]. It has a broad range of application scenarios in industrial automation [2], sports [3], medicine [4], security [5], smart cities [6], and smart homes [7]. HAR systems also play an essential role in human-centered applications such as health detection [8], driver behavior monitoring [9], gait detection [10], fall detection [11], and other personalized services. However, a HAR system trained on a generalized data set often does not reach the desired accuracy, especially when applied to new users [12]. Improving the accuracy of HAR systems in increasingly complex application scenarios, by enabling the model to adapt to specific users and enhancing its personalization, is therefore of great significance. A HAR system recognizes human activity in a real environment by learning useful information from raw sensor data or from images containing human activity [13]. Accordingly, HAR falls into two categories: sensor-based HAR [14] and vision-based HAR [15,16]. Considering users’ privacy and the real-time performance of measurement, this study focuses on sensor-based HAR. With the recent development of wearable sensor technology, sensors are becoming smaller and more portable. As a result, HAR systems based on wearable sensors have attracted the attention of many researchers [10].

The perception system of a wearable sensor usually includes an accelerometer module, a gyroscope module, and a magnetic module [17]. Compared with vision-based perception systems for HAR, such as RGB cameras [18], depth cameras [19], and laser sensors [20], wearable sensors not only offer low cost, high efficiency, and easy portability but also avoid invading users’ privacy and the spatial limitations of vision systems. Electromyogram (EMG) signals have been increasingly used in wearable devices for activity recognition in recent years [21,22]. EMG is the most commonly used method to detect muscle activity, and its signals are usually collected by needle electrodes or patch electrodes, both of which are perception devices close to the skin [23]. However, both acquisition methods are affected not only by electrical noise but also by sweat. Notably, a change in muscle strength is usually accompanied by muscle deformation. Therefore, detecting muscle deformation with an external airbag and an air pressure sensor can provide muscle movement information for posture recognition [24,25]. Moreover, systems based on air pressure are safe and flexible and are widely used in human interaction systems [26,27]. Yang et al. [28] showed that the accuracy of a HAR system improves when muscle motion data are added to motion information such as attitude angle and acceleration. In our study, we developed a compact wearable system that incorporates an inertial measurement unit (IMU) module and an air pressure module. Owing to its integrated design, this system is more comfortable to wear and is insensitive to the wearing position. It provides more data dimensions without increasing the number of sensor nodes and provides a good data basis for transfer learning in the HAR system.

HAR is performed with conventional machine learning methods or deep learning methods after the sensors collect the raw data [29,30]. Conventional machine learning recognizes activity with a shallow learning algorithm containing one or two nonlinear mapping layers. A HAR system based on such algorithms usually requires data preprocessing, including segmentation, feature extraction, and feature selection. The preprocessed data are then used to train a classifier based on a conventional machine learning algorithm [31]. The classification accuracy largely depends on the quality of feature extraction and selection [32]. He et al. [33] proposed a high-precision HAR system based on the discrete cosine transform (DCT), principal component analysis (PCA), and a support vector machine (SVM). Cheng et al. [34] used an SVM model, a hidden Markov model (HMM), and an artificial neural network (ANN) to train classifiers and showed that all three methods achieved acceptable performance. Gao et al. [35] proposed a Naive Bayes (NB) classifier based on multi-sensor fusion for activity recognition. Tao et al. [36] used rank-preserving discriminant analysis to reduce the dimensionality of the acceleration data and used the K-Nearest Neighbor (KNN) model for action classification. Liu et al. [37] proposed an SVM model trained on multi-sensor fusion data for HAR. However, all of these methods assume that the training and test data follow the same distribution. Because people differ from one another, this assumption can hardly be guaranteed in real HAR applications. If the training data (source domain) and the test data (target domain) come from different feature distributions (different people), these conventional methods cannot deliver satisfactory HAR accuracy.

With the rapid development of deep learning, more and more researchers have used deep learning and reinforcement learning methods to solve sensor-based HAR problems and have achieved good performance [38,39]. Compared with conventional machine learning methods, deep learning is an end-to-end approach based on a multi-layered network that goes directly from the raw data to activity recognition without manual feature extraction [40]. Deep learning can also find complex structures and is adept at processing high-dimensional data [41]. Although deep learning has advantages over conventional HAR methods, its performance is still unsatisfactory when only a small amount of data is available to solve HAR problems.

Conventional machine learning and deep learning both assume that the training data and test data follow the same distribution, and both need enough labeled data to train the model. However, the sensor data distribution varies significantly between users because of individual differences and differences in where the sensors are worn. For example, when different people perform the same activity, their action angle and speed differ because of physical differences [42]. If a user-specific HAR model is trained for every user, a large amount of labeled data must be collected from each user. Obtaining these labeled data and training dedicated models is time-consuming and expensive. An ideal HAR system would use the classification capability learned from the generalized data set to identify a new user’s activities; that is, it would generalize well even when newly arriving samples follow a different distribution from the training data. Conventional machine learning and deep learning methods can hardly achieve such a system, whereas transfer learning can. Therefore, to obtain a high-precision recognition model from only a small amount of user data, this paper applies transfer learning to establish an accurate and generalized HAR model.

Transfer learning can effectively avoid the abovementioned disadvantages of conventional machine learning and deep learning. In transfer learning, the training data and test data may follow different distributions, and the model can be obtained without sufficient data annotation. This provides a basis for establishing a model with good generalization capabilities. Transfer learning is widely used in image classification [42], emotion recognition [43], and brain–computer interfaces [44,45]. In HAR, we define the generalized data set as the source domain and the new users’ data sets as the target domain. In this situation, the distributions of the source domain and the target domain are different, but the learning task of the two domains is the same. This setting belongs to domain adaptation, which is a subcategory of transfer learning.

In domain adaptation, researchers use various methods to align the data distributions of two different domains so that the discrepancy between the source domain distribution and the target domain distribution is minimized in the feature space [46,47]. The classifier trained on the source domain, which has a large number of labeled data, then adapts to the target domain, which has limited or no labels, and can thereby classify the target domain. According to Yang’s study, domain adaptation is mainly divided into three categories: feature-based domain adaptation, sample-based domain adaptation, and model-based domain adaptation [46]. The most popular among them is feature-based domain adaptation, which minimizes the distribution difference between the source and target domains and aligns the two distributions to learn shared features. Maximum mean discrepancy (MMD) is a commonly used measure of distribution difference [45]; distribution matching is performed by minimizing the MMD distance between the source domain and the target domain. Long et al. [48] extended MMD to multi-kernel MMD, aligning the joint distributions of multiple domains. Sun et al. [49] proposed the CORAL method to align the mean and covariance of the source and target domains. Zhang et al. [50] proposed a discriminative joint probability adaptation algorithm based on the discriminative joint probability MMD method, which improved transferability and discriminability in the process of feature transformation.
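As an illustration of the distribution measures discussed above, the following minimal sketch computes a linear-kernel MMD (the squared distance between feature means) and a simple covariance gap in the spirit of CORAL. The function names `linear_mmd` and `covariance_gap` and the synthetic data are assumptions made for this example; the cited methods typically use nonlinear kernels and additional normalization, so this is not their implementation.

```python
import numpy as np

def linear_mmd(source, target):
    """Squared MMD with a linear kernel: squared distance between feature means.

    source, target: arrays of shape (n_samples, n_features).
    """
    diff = source.mean(axis=0) - target.mean(axis=0)
    return float(diff @ diff)

def covariance_gap(source, target):
    """Squared Frobenius distance between the two feature covariance matrices."""
    cov_s = np.cov(source, rowvar=False)
    cov_t = np.cov(target, rowvar=False)
    return float(np.sum((cov_s - cov_t) ** 2))

# Synthetic example (illustrative only): the "target" is the source shifted by 2.
rng = np.random.default_rng(0)
source = rng.normal(0.0, 1.0, size=(200, 3))
target = source + 2.0

print(linear_mmd(source, target))      # large: the means differ
print(covariance_gap(source, target))  # close to zero: a shift leaves covariance unchanged
```

A pure shift changes the first-order statistic (mean) but not the second-order statistic (covariance), which is why the two measures disagree on this toy data.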

Obtaining new users’ labeled data is time-consuming, and an ideal HAR system does not require new users to provide labeled data. Domain adaptation can be divided into supervised domain adaptation and unsupervised domain adaptation according to whether the target domain has labeled data [46]. In unsupervised domain adaptation, pseudo-labels are usually used to compensate for the missing labeled samples in the target domain. However, inaccurate pseudo-labels can accumulate errors in transfer learning and even lead to negative transfer [51]. Therefore, this paper proposes a joint probability domain adaptation method with improved pseudo-labels (IPL-JPDA). By combining an improved pseudo-labeling strategy with the discriminative joint probability MMD method [50], this method avoids the accuracy loss caused by inaccurate pseudo-labels.

In this study, unsupervised domain adaptation is applied to the HAR system based on wearable sensors. This system does not require new users’ labeled data and directly transfers the HAR model trained on the generalized data set. The main contributions of this study are described as follows:

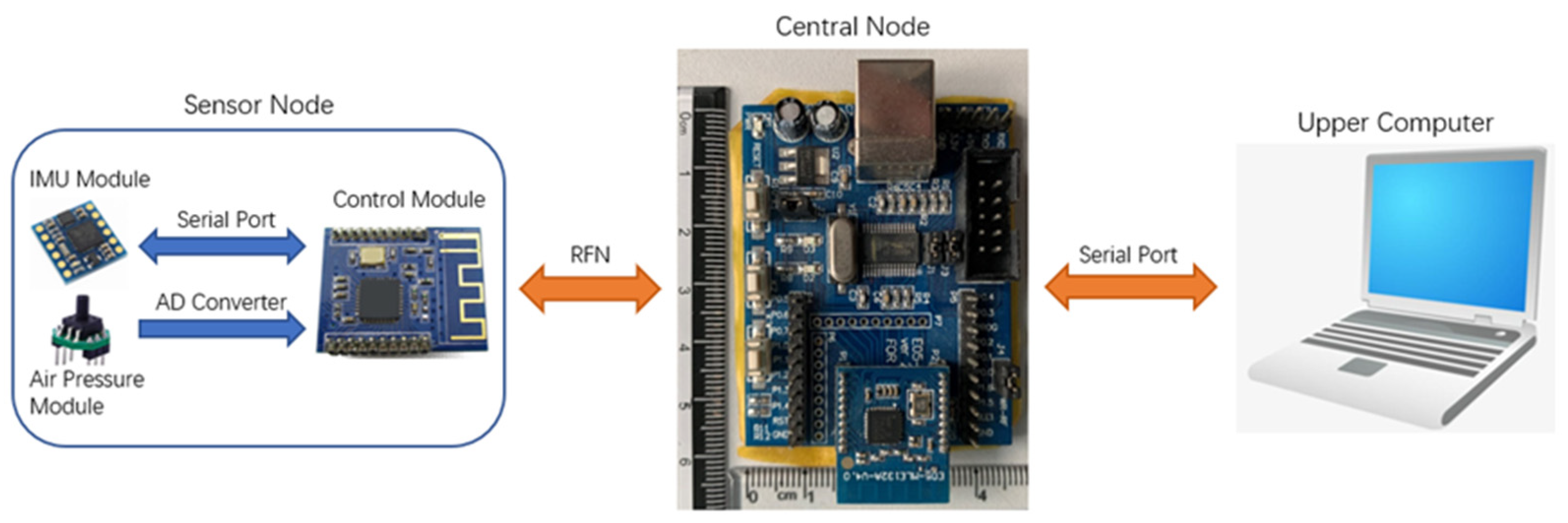

In this paper, a compact wireless wearable sensor node is designed, which combines an IMU module and an air pressure module.

This study proposes a new domain adaptation method called IPL-JPDA, which combines an improved pseudo-labeling strategy with the discriminative joint probability MMD method. This model avoids the accuracy loss caused by inaccurate initial pseudo-labels.

This study uses the newly designed sensor node to collect activity data from seven users. These data are used to train HAR systems based on transfer learning and HAR systems based on conventional machine learning. Finally, the performance of the different HAR systems is compared.

The rest of this paper is organized as follows: Section 2 introduces the structure of the wearable sensors. Section 3 introduces the IPL-JPDA algorithm. Section 4 describes the experimental setup and the collection of the sensor data. In Section 5, the results of the experiment are presented and analyzed. Finally, the conclusions are drawn in Section 6.

3. The Method of IPL-JPDA

In the HAR system based on transfer learning, activity recognition knowledge is learned from the source domain dataset, which carries activity labels. The learned knowledge is transferred to the target domain dataset, which has no activity labels, so that the activities of the target domain can be recognized. We therefore assume that the source domain and the target domain share the same feature space and label space. There are $n_s$ labeled samples in the source domain $D_s$, recorded as $\{(x_{s,i}, y_{s,i})\}_{i=1}^{n_s}$. There are $n_t$ unlabeled samples in the target domain $D_t$, recorded as $\{x_{t,j}\}_{j=1}^{n_t}$. $x \in \mathbb{R}^{d}$ is the feature vector, and $y \in \{1, \ldots, C\}$ is its label in the $C$-class classification problem. The domain adaptation (DA) method attempts to find a mapping $h$ that maps the source domain and the target domain to the same subspace, so that the classifier trained on $h(x_s)$ achieves a good classification effect on $h(x_t)$. An example is a linear map $h(x) = A^{T}x$ for the source and the target domains, where $A \in \mathbb{R}^{d \times p}$. In this study, all the source domain and target domain data are collected by the compact sensor node.

3.1. Improved Pseudo-Labels

The improved pseudo-labels method also belongs to unsupervised domain adaptation. It uses supervised locality preserving projection (SLPP) [54] to learn the projection matrix $P$. The source domain and the target domain are mapped to the same subspace, so that samples of the same class are projected close to each other regardless of whether they originally came from the source domain or the target domain.

To generate the improved pseudo-labels, we first use only the source domain to obtain an initial projection matrix $P_0$ and then assign pseudo-labels to the target domain. We then update the projection matrix $P$ with the labeled source domain and the pseudo-labeled target domain, and the improved pseudo-labels (IPL) are generated from the projection matrix $P$.

To assign the pseudo-labels, we use nearest class prototype (NCP) [55] and structured prediction (SP) [56] to label the target domain. In the following sections, we present and analyze each component of the proposed method.

3.1.1. Dimensionality Reduction and Alignment

The dimension reduction method learns the transformed features by minimizing the reconstruction error of the input data. For simplicity and generality, we choose principal component analysis (PCA) for data reconstruction [44].

represents the input data matrix, and

is after normalization, where

.

and

is the dimensionality of the feature space after applying PCA. In this study,

and we set

. PCA is to reduce the high dimensional data by linear feature transformation. Each feature vector in

is

.
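The PCA step described above can be sketched as follows. This is a minimal illustration that assumes rows are samples and columns are features (the text may use the transposed layout); the function name `pca_reduce` and the parameter `k` for the reduced dimensionality are chosen for this example only.

```python
import numpy as np

def pca_reduce(X, k):
    """Project X of shape (n_samples, d) onto its first k principal directions.

    Returns the reduced data of shape (n_samples, k) and the basis of shape (d, k).
    """
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by decreasing variance.
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    W = Vt[:k].T
    return X_centered @ W, W

# Illustrative data: six features with decreasing spread, reduced to three.
rng = np.random.default_rng(1)
X = rng.normal(size=(50, 6)) * np.array([5.0, 4.0, 3.0, 2.0, 1.0, 0.5])
Z, W = pca_reduce(X, k=3)
print(Z.shape, W.shape)
```

The basis `W` has orthonormal columns, so the projection is a linear feature transformation that keeps the directions of largest variance.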

PCA yields the lower-dimensional feature space. We use SLPP to learn a domain-invariant yet discriminative subspace from it. To promote the class alignment of the two domains, we use SLPP to achieve domain alignment [54]. The goal of SLPP is to learn a projection matrix $P$ by minimizing the following cost function:

$$\min_{P} \sum_{i,j} \left\| P^{T} x_i - P^{T} x_j \right\|_2^2 M_{ij} \quad (1)$$

where $P \in \mathbb{R}^{k \times m}$ and $m$ is the dimensionality of the learned space. Since we have already used PCA to reduce the dimension, we set $m = k$ to avoid further information loss. $x_i$ is the $i$-th column of the labeled data matrix $X$. $M_{ij}$, which is an element of the similarity matrix $M$, is determined as follows:

$$M_{ij} = \begin{cases} 1, & y_i = y_j \\ 0, & \text{otherwise} \end{cases} \quad (2)$$

Samples of the same class are thus projected close to each other in the subspace, regardless of whether they originally came from the source domain or the target domain. The similarity matrix $M$ is a simplification of MMD metrics [57,58]. In this way, domain invariance is improved while the discriminative information is retained. The objective function can be rewritten as [54,57]:

$$\max_{P} \frac{\operatorname{tr}\left(P^{T} X D X^{T} P\right)}{\operatorname{tr}\left(P^{T} \left(X L X^{T} + I\right) P\right)} \quad (3)$$

where $L = D - M$ is the Laplacian matrix and $D$ is a diagonal matrix with $D_{ii} = \sum_{j} M_{ij}$. $X$ is a collection of $n_s$ labeled source data and $n_t$ pseudo-labeled target data. $\operatorname{tr}(P^{T} P)$ is a regularization term. The maximization problem (3) is equivalent to the following generalized eigenvalue problem:

$$X D X^{T} p = \lambda \left(X L X^{T} + I\right) p \quad (4)$$

Solving this problem gives the optimal solution $P = [p_1, \ldots, p_m]$, where $p_1, \ldots, p_m$ are the eigenvectors corresponding to the $m$ largest eigenvalues.
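The SLPP step, with a same-class similarity matrix, a graph Laplacian, and a regularized generalized eigenvalue problem, can be sketched as follows. This is an illustration under stated assumptions: rows are samples, the regularization weight `reg` defaults to 1, and the generalized problem is reduced to a standard symmetric one with a Cholesky factor. The function name `slpp` is hypothetical and is not taken from the cited work.

```python
import numpy as np

def slpp(X, y, m, reg=1.0):
    """Supervised locality preserving projection (sketch).

    X: (n, k) data with rows as samples, y: (n,) integer labels.
    Returns P of shape (k, m) and the m largest generalized eigenvalues.
    """
    M = (y[:, None] == y[None, :]).astype(float)   # similarity: 1 for same class
    D = np.diag(M.sum(axis=1))                     # degree matrix
    L = D - M                                      # graph Laplacian
    A = X.T @ D @ X
    B = X.T @ L @ X + reg * np.eye(X.shape[1])     # regularized, positive definite
    # Reduce A p = w B p to a standard symmetric problem using B = C C^T.
    C_inv = np.linalg.inv(np.linalg.cholesky(B))
    S = C_inv @ A @ C_inv.T
    w, V = np.linalg.eigh((S + S.T) / 2)           # eigenvalues in ascending order
    order = np.argsort(w)[::-1][:m]                # keep the m largest
    return C_inv.T @ V[:, order], w[order]

# Illustrative two-class data.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 1, (20, 4)), rng.normal(3, 1, (20, 4))])
y = np.array([0] * 20 + [1] * 20)
P, eigvals = slpp(X, y, m=2)
print(P.shape, eigvals)
```

In practice a library routine for generalized symmetric eigenproblems could replace the manual Cholesky reduction; it is written out here only to keep the sketch dependent on NumPy alone.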

3.1.2. The Generation of Pseudo Label

Two methods are used to label the target domain in the subspace: nearest class prototypes (NCP) [55] and structured prediction (SP) [56]. Unlabeled target samples can be labeled in the learned subspace, where the projections of source and target samples are computed by:

$$z_{s} = P^{T} x_{s} \quad (5)$$

$$z_{t} = P^{T} x_{t} \quad (6)$$

In the NCP method, the centroid of each class in the subspace is calculated; these centroids are called source class prototypes [55]. The class prototype for class $c$ is defined as the mean vector of the projected source samples with label $c$, which can be computed by:

$$\bar{z}_{s,c} = \frac{\sum_{i=1}^{n_s} z_{s,i}\, \delta(c, y_{s,i})}{\sum_{i=1}^{n_s} \delta(c, y_{s,i})} \quad (7)$$

where $\delta(c, y_{s,i}) = 1$ if $c = y_{s,i}$ and 0 otherwise. Therefore, the probability that the target domain sample $x_t$ belongs to category $c$ is

The second method is structured prediction (SP). The target domain samples are clustered into $C$ classes by K-means [56]. The cluster centers are initialized as the source domain prototypes calculated by (7). The cluster center of category $c$ is $\bar{z}_{t,c}$. In this method, the probability that sample $x_t$ belongs to category $c$ is as follows:

Thus, the pseudo label can be given by the following formula:
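The two labeling routes described above can be sketched as follows. The source class prototypes and the K-means initialization follow the text; the softmax over negative Euclidean distances used as the class probability, and the element-wise maximum used to combine the NCP and SP probabilities, are assumptions of this sketch and are not confirmed by the text. All function names are hypothetical.

```python
import numpy as np

def class_prototypes(Zs, ys, n_classes):
    """Mean projected source sample per class (assumes every class is present)."""
    return np.stack([Zs[ys == c].mean(axis=0) for c in range(n_classes)])

def distance_probabilities(Z, centers):
    """Assumed form: softmax over negative Euclidean distances to the centers."""
    dist = np.linalg.norm(Z[:, None, :] - centers[None, :, :], axis=2)
    logits = -dist
    logits -= logits.max(axis=1, keepdims=True)    # numerical stability
    e = np.exp(logits)
    return e / e.sum(axis=1, keepdims=True)

def kmeans_from(Zt, init_centers, n_iter=20):
    """K-means on target samples, initialized at the source prototypes."""
    centers = init_centers.copy()
    for _ in range(n_iter):
        dist = np.linalg.norm(Zt[:, None, :] - centers[None, :, :], axis=2)
        assign = dist.argmin(axis=1)
        for c in range(len(centers)):
            if np.any(assign == c):                # keep the old center if a cluster is empty
                centers[c] = Zt[assign == c].mean(axis=0)
    return centers

def pseudo_labels(Zs, ys, Zt, n_classes):
    protos = class_prototypes(Zs, ys, n_classes)
    p_ncp = distance_probabilities(Zt, protos)                   # nearest class prototype
    p_sp = distance_probabilities(Zt, kmeans_from(Zt, protos))   # structured prediction
    p = np.maximum(p_ncp, p_sp)                    # assumption: combine by element-wise max
    return p.argmax(axis=1), p.max(axis=1)

# Tiny illustrative example with two classes in a 2-D subspace.
Zs = np.array([[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]])
ys = np.array([0, 0, 1, 1])
Zt = np.array([[0.5, 0.5], [5.5, 5.5], [0.0, 0.2]])
labels, confidence = pseudo_labels(Zs, ys, Zt, n_classes=2)
print(labels)
```

The second return value is a per-sample confidence, which could be used to decide which pseudo-labels to trust.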

3.2. Joint Probability Domain Adaptation

Because the source domain and the target domain differ, it is generally assumed that their probability distributions are not equal. The TCA, JDA, and BDA algorithms are derived from the inequality of the marginal probabilities $P(X_s) \neq P(X_t)$ or of the conditional probabilities $P(Y_s \mid X_s) \neq P(Y_t \mid X_t)$. The JPDA algorithm, in contrast, is derived from the inequality of the joint probabilities $P(X_s, Y_s) \neq P(X_t, Y_t)$. Because JPDA directly considers the difference between the joint probability distributions, it performs better than the traditional DA methods and can improve both between-domain transferability and between-class discrimination. The JPDA algorithm is only briefly introduced here; for details, please refer to [50].

Let the source domain one-hot coding label matrix be

, and the predicted target domain one-hot coding label matrix be

. Where

and

. Define

where

denotes the

-th column of

,

repeats

.

times to form a matrix in

, and

is formed by the 1st to the

-th (except the 1st) columns of

. Clearly,

and

.

is fixed, and

is constructed from the pseudo labels, which are updated iteratively.
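The two label-derived matrices described above, one that repeats each one-hot column and one that collects all columns except a given class, can be built as in the following sketch. The helper names `one_hot`, `repeat_columns`, and `other_columns` are hypothetical, and zero-based class indices are an assumption of this example.

```python
import numpy as np

def one_hot(labels, n_classes):
    """One-hot coding label matrix of shape (n_samples, n_classes)."""
    return np.eye(n_classes)[labels]

def repeat_columns(Ys):
    """Repeat each column of Ys (C - 1) times; result has C * (C - 1) columns."""
    C = Ys.shape[1]
    return np.repeat(Ys, C - 1, axis=1)

def other_columns(Yt):
    """For each class c, take every column of Yt except column c, then concatenate."""
    C = Yt.shape[1]
    return np.hstack([np.delete(Yt, c, axis=1) for c in range(C)])

Ys = one_hot([0, 2, 1], n_classes=3)   # fixed source labels
Yt = one_hot([1], n_classes=3)         # pseudo labels, updated at every iteration
print(repeat_columns(Ys).shape)        # (3, 6)
print(other_columns(Yt))               # [[1. 0. 0. 0. 0. 1.]]
```

Pairing column block `c` of the first matrix with block `c` of the second compares class `c` in the source with every other class in the target, which is what a between-class discrepancy term needs.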

Therefore, the objective function of JPDA can be written as follows:

where

is a trade-off parameter and

is a regularization parameter. We simply set

and

by cross-validation.

,

,

and

are defined as:

where

is the centering matrix, in which

and

is a matrix with all elements being

.

Let

, then we reach the Lagrange function of Equation (14)

where

has dimensionality

, which does not change with the number of classes. By setting the derivative

, (17) becomes a generalized eigen-decomposition problem:

$A$ is then formed by the $p$ trailing eigenvectors. A classifier can then be trained on $A^{T} X_s$ and applied to $A^{T} X_t$.

3.3. The Proposed Method IPL-JPDA

In this part, we combine JPDA with improved pseudo-labels based on SP and NCP to construct an improved algorithm IPL-JPDA. Before starting the JPDA loop, the selective pseudo-labeling is used to provide the optimized pseudo-labels to avoid JPDA’s cumulative error. The pseudocode of IPL-JPDA for classification is summarized in Algorithm 1.

| Algorithm 1: Joint Probability Distribution Adaptation with improved pseudo-labels (IPL-JPDA) |

| Input: |

| Xs and Xt, source and target domain feature matrices; |

| Ys, source domain one-hot coding label matrix; |

| p, subspace dimensionality in JPDA; |

| μ, trade-off parameter; |

| λ, regularization parameter; |

| T, number of iterations; |

| k, dimension of PCA; |

| m, dimension of SLPP subspace; |

| Output: |

| Ŷt, estimated target domain labels. |

| for n = 1, …, T do |

| if n == 1 |

| Dimensionality reduction by PCA. |

| Learn the projection P0 using only source data Ds. |

| Assign pseudo labels for all target data using (11). |

| Learn P using Ds and the pseudo-labeled target data. |

| Assign and update pseudo labels for all target data using (11). |

| else |

| Construct the joint probability matrices Rmin and Rmax by (18) and (19). |

| Solve the generalized eigen-decomposition problem in (20) and select the p trailing eigenvectors to construct the projection matrix A. |

| Train a classifier f on A^T Xs and apply it to A^T Xt to obtain Ŷt. |

| end |

5. Experimental Results

This research includes an air pressure verification experiment (Experiment A) and a comparison of HAR models (Experiment B). This section analyzes the results of Experiment A and Experiment B in turn.

5.1. Experiment A—Air Pressure Verification Experiment

In Experiment A, the classifiers are trained on features with and without air pressure data. A model whose training samples contain air pressure data is named Model-CP, and a model whose training samples exclude air pressure data is named Model-DP. The four participants in the target group are called Subject A, Subject B, Subject C, and Subject D.

Table 4 shows the mean activity recognition accuracy of the four subjects for nine different HAR models.

In Table 4, bold numbers mark the maximum value of each evaluation indicator. In terms of mean accuracy, the HAR models trained with air pressure data clearly perform better than the models trained without it. For the conventional machine learning classifiers, the mean recognition accuracy of the HAR model using air pressure data is at least 1.78% higher than that of the model without air pressure data. For the transfer learning algorithms, the mean recognition accuracy is at least 5.36% higher when the HAR model uses air pressure data. Therefore, we can conclude that air pressure data improve the recognition accuracy of the HAR model.

The results of the four participants in the target group were similar in Experiment A. Hence, we take Subject A as a sample for result analysis. Figure 10 shows the evaluation indicators of Subject A for the different HAR models. The other subjects’ data can be found in Appendix A (Figure A1, Figure A2 and Figure A3).

Figure 10 shows the classification results of 18 different HAR models. All four evaluation indicators improve when the HAR model uses air pressure data. The air pressure data have a particularly large impact on the HAR models based on transfer learning: without air pressure data, such a model loses 10% on Subject A’s accuracy index, and the other evaluation indicators also decrease significantly. This is because the air pressure data provide additional data dimensions for the source domain and the target domain. The two domains can then be better aligned, and the HAR model can better identify the target domain’s activities.

On the other hand, the classifiers based on conventional machine learning are not sensitive to the lack of air pressure data. Take KNN as an example: as a lazy learning classifier, it mainly relies on a limited number of nearby samples to determine the category of a sample. Therefore, the lack of air pressure data in the training samples has only a small impact on the KNN model, although recognition performance still drops slightly.

The F-measure is the harmonic mean of precision and recall. The HAR models based on transfer learning achieve a higher F-measure than the HAR models based on conventional machine learning, which shows that the former are of higher quality. In addition, for Subject A the precision of the HAR models based on transfer learning is greater than their recall. This indicates that this type of model is more conservative and only makes positive predictions for samples about which it is very confident. For the remaining subjects, the precision of the HAR models based on transfer learning is also greater than the recall in almost all cases, while the HAR models based on conventional machine learning show no such pattern.
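The relationship between precision, recall, and the F-measure can be made concrete with a short sketch. The function name and the counts below are illustrative only and are not taken from the experiments.

```python
def precision_recall_f_measure(tp, fp, fn):
    """Precision, recall, and their harmonic mean from raw counts.

    tp: true positives, fp: false positives, fn: false negatives.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f_measure = 2 * precision * recall / (precision + recall)
    return precision, recall, f_measure

# A conservative model: few false positives, many missed positives.
precision, recall, f_measure = precision_recall_f_measure(tp=8, fp=2, fn=8)
print(precision, recall, round(f_measure, 4))  # 0.8 0.5 0.6154
```

Because the harmonic mean is dominated by the smaller value, a model with high precision but low recall still receives a modest F-measure.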

5.2. Experiment B—The Comparison of HAR Models

Experiment A demonstrates the necessity and significance of air pressure data for the HAR model. Therefore, Experiment B only compares models trained with air pressure data.

Table 5 shows the mean values of the recognition evaluation indicators of the four subjects for nine different HAR models.

In Table 5, bold numbers mark the maximum value of each evaluation indicator. The mean values of all four evaluation indicators across the four subjects exceed 90% for the DT-SS, BDA, and IPL-JPDA models. The IPL-JPDA model has the best mean recognition accuracy, reaching 93.21%. The mean recognition accuracy of the IPL-JPDA algorithm is 1.42% higher than that of DT-OS, which has the best performance among the traditional classifiers in this study.

Among the traditional classifiers, KNN and SVM perform similarly on the four average evaluation indicators, and DT performs best. Compared with the HAR models based on SVM and KNN, DT deals better with irrelevant feature data and captures the inherent meaning of the data. For all three traditional classifiers, Classifier-SS performs better than Classifier-OS, with an average recognition accuracy that is 10% higher. This is because Classifier-SS is trained on the target subject’s own data. However, Classifier-SS has a fatal disadvantage: it relies on supervised machine learning. Training the Classifier-SS model requires labeled data, and collecting these labeled data is time-consuming and expensive. Moreover, because the target group’s dataset is small, the training samples of Classifier-SS are insufficient, and the average standard deviation of the Classifier-SS models is much higher than that of the Classifier-OS models.

The IPL-JPDA model has the best performance among the HAR models based on transfer learning. The mean recognition accuracy of IPL-JPDA is 6.2% higher than that of JDA and 1.78% higher than that of BDA. IPL-JPDA is based on the discriminative joint probability MMD metric, which improves the traditional MMD metric by minimizing the difference between the joint probability distributions of the same category in different domains and maximizing the difference between different categories. In contrast, both JDA and BDA are based on the MMD of the marginal and conditional distributions. In addition, IPL-JPDA improves the initial pseudo-labels and thus avoids the negative transfer caused by the accumulation of errors from inaccurate initial pseudo-labels.

In Appendix B, we also compare the convergence steps of the different transfer learning algorithms.

Figure 11 presents four indicators of six unsupervised HAR models for the subjects in the target group. The HAR models based on IPL-JPDA and BDA exceed 90% on all four subjects’ evaluation indicators, and almost all of their indicators are better than those of KNN-OS and SVM-OS. JDA performs slightly worse than these two transfer learning algorithms but better than KNN-OS and SVM-OS in most cases. The recognition accuracy of the three transfer learning algorithms is also stable across subjects. The KNN-OS and SVM-OS models have poor recognition performance, with a recognition accuracy below 85% for all subjects in the target group. DT-OS is the best traditional classifier, and its performance on both Subject B and Subject C exceeds 90%. For Subject B, DT-OS has the best recognition accuracy, which is 2.86% higher than that of IPL-JPDA. However, the recognition accuracy of DT-OS for Subject D is only 74.29%, which is 17.14% lower than that of JDA. This shows that DT-OS has weak generalization ability.

Considering both the stability and the accuracy of recognition, we can conclude that the HAR models based on transfer learning perform better than those based on the traditional classifiers. The HAR models based on transfer learning have a strong generalization capability, and their recognition accuracy does not degrade on particular samples. However, because of negative transfer, the classical BDA and JDA algorithms recognize activities less accurately than the DT-OS model for some subjects.

Table 6 and Table 7 are the confusion matrices of the subjects in the target group. Among the traditional classifiers, the three unsupervised HAR models perform similarly, so KNN-OS is used as a sample for comparative analysis with the IPL-JPDA algorithm. For the static activities (SIT, STAND, LIE), the IPL-JPDA transfer learning algorithm has 100% recognition accuracy. The generalization ability of KNN-OS is low: when the KNN model trained on the source group is used to recognize the target group, some LIE samples are wrongly recognized as STAND. Among the dynamic activities (WALK, RUN, UP, DOWN), both models recognize RUN well. However, their ability to recognize WALK, UP, and DOWN is weaker. The results show that the recognition accuracy of the IPL-JPDA model is more than 75% for these three activities, whereas that of the KNN-OS model is only more than 45%. Therefore, it can be concluded that, compared with the traditional classifier, the HAR algorithm based on transfer learning better identifies actions that are easily confused and maintains an accurate recognition rate on actions that are easy to distinguish.
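Per-activity recognition rates such as those discussed above can be read from a confusion matrix by dividing each diagonal entry by its row sum. The following sketch assumes that rows hold the true activity and columns hold the predicted activity; the counts are made up for illustration and are not the values in Table 6 or Table 7.

```python
def per_class_recognition_rate(confusion, labels):
    """Fraction of each true class that was predicted correctly.

    confusion[i][j]: number of samples of true class i predicted as class j.
    """
    rates = {}
    for i, name in enumerate(labels):
        total = sum(confusion[i])
        rates[name] = confusion[i][i] / total if total else 0.0
    return rates

# Illustrative counts only: two LIE samples are misread as STAND.
labels = ["LIE", "STAND"]
confusion = [
    [8, 2],
    [0, 10],
]
print(per_class_recognition_rate(confusion, labels))  # {'LIE': 0.8, 'STAND': 1.0}
```

Off-diagonal entries in a row show which activities a given activity is confused with, which is how the LIE and STAND confusion would appear in such a table.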